Ijraset Journal For Research in Applied Science and Engineering Technology

A Comparative Study of Generative AI Models for Interior Design

Authors: Revati Anil Patil, Prof. Vrushali Wankhede, Bhagyashree Patil

DOI Link: https://doi.org/10.22214/ijraset.2024.64652

Abstract

The integration of Generative AI in interior design has transformed traditional methods, allowing designers to explore new concepts with impressive efficiency. This paper presents a comparative study of leading generative models—StyleGAN, Variational Autoencoders (VAEs), Pix2Pix, and Reinforcement Learning (RL)—evaluating their effectiveness in turning sketches into photorealistic renderings, generating diverse room layouts, and optimizing spaces. By analyzing the results of these models, we show their ability to create unique design solutions that meet functional requirements while enhancing aesthetic appeal. The study highlights substantial enhancements in design precision, emphasizing the potential of generative AI models to elevate the design process and create more tailored interior solutions. This survey examines the methods and performance of each model and looks at future possibilities for using Generative AI to advance the field of interior design.

I. INTRODUCTION

Generative AI is revolutionizing the way interior design is approached, offering advanced models that allow designers to automate the creation of realistic, varied, and functional spaces. Models like StyleGAN, Variational Autoencoders (VAEs), Pix2Pix, and Reinforcement Learning (RL) have shown significant promise in generating lifelike room designs, optimizing space usage, and introducing diverse stylistic themes. These models can effortlessly turn simple sketches into detailed visualizations, suggesting furniture placements and layouts that maximize both aesthetics and functionality. However, challenges remain in ensuring these models meet real-world constraints, such as staying within budget, maintaining spatial accuracy, and aligning with the client’s vision. Furthermore, the computational resources required for many generative models limit their accessibility. Another key issue is integrating creativity with practicality: models often excel at producing visually impressive designs but struggle with adapting to specific requirements like room functionality or modular configurations. This study evaluates the capabilities of these AI models, offering insights into how they can advance the design process while addressing these inherent challenges.

The rise of generative AI is changing how interior design is approached, offering fresh possibilities for creativity and efficiency. Designers are increasingly using AI models to generate unique design solutions that reflect individual tastes and requirements. Through the use of advanced algorithms, these models can sift through extensive collections of design elements, enabling the creation of customized interior spaces that address varied client preferences. This technology automates routine tasks, allowing designers to concentrate on more meaningful creative choices. With the capability to quickly visualize different styles and arrangements, generative AI is influencing the way designers plan and implement their concepts, leading to designs that are more aligned with current trends and client desires.

(a) Office space interior design. (b) Interior design of Pizza Place. (c) Living Room interior design.

Fig. 1: Various interior designs generated using GenAI.

II. OBJECTIVES

- Evaluate Generative Models: Conduct a comprehensive analysis of StyleGAN, Variational Autoencoders (VAEs), Pix2Pix, and Reinforcement Learning (RL) in the context of interior design.

- Assess Performance Metrics: Compare the models based on key performance metrics, including realism, personalization, diversity, and optimization.

- Explore Practical Applications: Investigate the practical applications of each model for generating photorealistic images and optimizing layouts.

III. LITERATURE SURVEY

StyleGAN, introduced by Karras et al. in “A Style-Based Generator Architecture for Generative Adversarial Networks” (2019), employs a unique architecture that decouples high-level attributes from stochastic variations, allowing for intuitive manipulation of generated images. This flexibility enables designers to create photorealistic images with high realism and diversity, making it particularly effective in creative fields like interior design. The model’s ability to produce varied outputs from a single input by adjusting style vectors showcases its potential for customization and tailored design solutions.[1]

In “RoomGen: 3D Interior Furniture Layout Generation,” Wenzhe Zeng and Xingyuan Liu present a framework that uses Generative Adversarial Networks (GANs) to generate realistic 3D furniture layouts, reporting significant improvements over traditional design methods in layout variety and user satisfaction.[7] The authors are extending the framework with interactive design feedback for personalized layouts, and future research may integrate Augmented Reality (AR) applications for real-world visualization.

In “Virtual Interior Design Companion: Harnessing the Power of GANs,” Rekha Phadke, Aditya Kumar, and Yash Mathur introduce a GAN-based virtual assistant that creates appealing interior designs from user inputs. The findings indicate the potential of GANs to replicate design aesthetics and streamline the design process, while the authors work on refining the model’s architecture to incorporate design principles such as color theory and spatial dynamics.[8] Future directions may include AI-driven feedback systems for real-time user adjustments, promoting a collaborative design experience.

Variational Autoencoders, as proposed by Kingma and Welling in “Auto-Encoding Variational Bayes” (2013), represent data in a lower-dimensional latent space using a probabilistic framework. This allows for the generation of new samples that maintain the overall structure while exhibiting variability. VAEs are particularly valuable in interior design for generating diverse room layouts and furniture arrangements, as demonstrated in studies highlighting their capacity to capture complex data distributions. This makes them effective in scenarios where functional constraints must be respected.[2]
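For reference, the probabilistic framework mentioned above is trained by maximizing the evidence lower bound (ELBO) from Kingma and Welling. The notation below (encoder $q_\phi$, decoder $p_\theta$, prior $p(z)$, input $x$) is the standard one from that paper and is not used elsewhere in this study.

```latex
\mathcal{L}(\theta, \phi; x)
  = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]
  - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
```

The first term rewards faithful reconstruction of an input such as a room layout $x$. The KL term keeps the latent codes close to the prior, which is what allows new samples to be drawn from $p(z)$ and decoded into plausible variations.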

Pix2Pix, developed by Isola et al. in “Image-to-Image Translation with Conditional Adversarial Networks” (2017), focuses on image-to-image translation tasks by utilizing a conditional GAN framework. The model is trained on paired datasets, such as sketches and their corresponding photorealistic renders, and learns the mapping from input to output. Its effectiveness in architecture and interior design enables designers to visualize concepts accurately, making it a versatile tool for translating conceptual designs into detailed visuals.[3]
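The conditional GAN framework can be made concrete with the objective from Isola et al., restated here in that paper's notation. In an interior design setting, $x$ would be the input sketch, $y$ the paired target render, and $z$ a noise source; $\lambda$ is a weighting hyperparameter.

```latex
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\big[\log D(x, y)\big]
  + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big]

\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big]

G^{*} = \arg\min_{G} \max_{D} \; \mathcal{L}_{cGAN}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
```

The adversarial term pushes outputs toward realistic renders, while the L1 term ties each output to its paired target. This is why the method depends on paired training data.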

Reinforcement Learning (RL) involves training agents to optimize layouts by learning from interactions with the environment. Techniques like Deep Q-Networks (DQN) enable the discovery of effective strategies for arranging furniture based on constraints such as maximizing space utilization. The application of RL in interior design, as discussed in “Deep Reinforcement Learning for Optimizing Interior Layouts” (2019), demonstrates its capability to automate the design process, providing significant advantages in creating functional layouts for smart and modular home designs.[4]
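The learning-from-interaction idea can be illustrated with tabular Q-learning, the update rule that Deep Q-Networks approximate with a neural network. The following is a minimal sketch, not the setup used in the cited work: the one-dimensional "room", the goal cell, the reward values, and the names `train_q_table` and `greedy_policy` are all hypothetical.

```python
import random


def train_q_table(n_cells=5, goal=4, episodes=300, alpha=0.5,
                  gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy 1-D room (hypothetical environment).

    The agent slides one furniture item left or right along a row of
    cells and is rewarded for reaching the `goal` cell.
    """
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 = move left, action 1 = move right
    q = [[0.0, 0.0] for _ in range(n_cells)]
    for _ in range(episodes):
        state = 0
        for _ in range(50):  # cap on steps per episode
            # Epsilon-greedy action selection (ties go to "right").
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            # Walls clamp the item to the room.
            nxt = min(max(state + moves[action], 0), n_cells - 1)
            done = nxt == goal
            reward = 1.0 if done else -0.01  # small cost per move
            # Q-learning target: reward plus discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """Best action index per cell according to the learned table."""
    return [0 if row[0] > row[1] else 1 for row in q]
```

Calling `greedy_policy(train_q_table())` gives the learned move for each cell. A DQN replaces the table `q` with a network so that much larger state spaces, such as full room layouts, become tractable.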

Additionally, the paper “High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs” by Ting-Chun Wang et al. showcases the effectiveness of conditional GANs in generating high-resolution images that allow semantic manipulation of design elements. Their research emphasizes the flexibility of design alterations while maintaining visual fidelity, pointing to the significant role of GANs in interior design. The authors are focused on enhancing the realism and quality of generated images and plan to combine their model with interactive design tools for seamless real-time manipulation of design elements, thereby enriching the design experience.[10]

IV. METHODOLOGY

The methodology for this comparative study of Generative AI models in interior design involves a structured analysis of four key models: StyleGAN, Variational Autoencoder (VAE), Pix2Pix, and Reinforcement Learning (RL).

Each model was evaluated based on its ability to generate photorealistic designs, create diverse room layouts, optimize space usage, and translate sketches or blueprints into detailed interior designs. The evaluation was conducted across the following phases:

1) Model Selection: The models were selected based on their relevance to interior design tasks, such as style transfer, layout optimization, and image-to-image translation. Each model represents a unique generative approach:

- StyleGAN for photorealism and style manipulation.

- VAE for probabilistic modeling of room layouts.

- Pix2Pix for sketch-to-image translation.

- RL for layout optimization.

2) Dataset Preparation: A diverse dataset of architectural floor plans, room sketches, and high-resolution interior images was compiled for training and testing. This dataset was categorized by room type (e.g., living rooms, bedrooms) and style (e.g., minimalism, contemporary) to evaluate the adaptability of each model.

3) Model Training and Implementation: StyleGAN was trained on a large dataset of interior design images to allow control over generated features such as color, texture, and furniture arrangement. VAE was trained on room layouts, enabling it to generate diverse arrangements while maintaining room structure. Pix2Pix was trained for image-to-image translation, specifically converting floor plans or rough sketches into photorealistic visuals. RL was used in a simulation environment, optimizing furniture placement based on constraints like space utilization and light sources. The RL agent was trained using reward-based learning, where the rewards were given for better space utilization and aesthetic coherence.
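The reward-based learning described for the RL agent can be sketched as a scalar reward over a candidate layout. This is only an illustration under stated assumptions: the rectangle representation `(x, y, w, h)`, the `overlap_penalty` weight, and the function names are hypothetical, and the light-source and aesthetic-coherence terms mentioned above are not modeled.

```python
def overlap_area(a, b):
    """Intersection area of two axis-aligned rectangles (x, y, w, h)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    dx = min(ax + aw, bx + bw) - max(ax, bx)
    dy = min(ay + ah, by + bh) - max(ay, by)
    return dx * dy if dx > 0 and dy > 0 else 0.0


def layout_reward(room_w, room_h, items, overlap_penalty=2.0):
    """Hypothetical reward: floor utilization minus an overlap penalty.

    `items` is a list of furniture footprints (x, y, w, h) assumed to
    lie inside the room. Pairwise overlaps are subtracted once, which
    is exact as long as no three items overlap in the same spot.
    """
    room_area = room_w * room_h
    covered = sum(w * h for _, _, w, h in items)
    overlaps = sum(
        overlap_area(items[i], items[j])
        for i in range(len(items))
        for j in range(i + 1, len(items))
    )
    utilization = (covered - overlaps) / room_area
    return utilization - overlap_penalty * overlaps / room_area
```

For a 10 x 10 room, two non-overlapping 4 x 5 items score higher than the same items placed so that they intersect, which is the behavior a layout agent would be expected to learn.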

4) Performance Evaluation:

Each model’s performance was evaluated across three key dimensions:

- Photorealism: The realism of the generated designs was measured using human evaluation and visual quality metrics (e.g., Fréchet Inception Distance).

- Diversity: The ability of the models to produce varied designs while adhering to room constraints was assessed using layout variation indices.

- Optimization: For RL, space utilization and furniture arrangement were evaluated based on a room’s functional efficiency, using metrics such as space usage percentage and flow optimization.
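Two of the metrics above can be sketched in a few lines. The first function is a simplified Fréchet distance that assumes diagonal covariances; the full Fréchet Inception Distance uses full covariance matrices of Inception-network features and a matrix square root, so this is a stand-in rather than the real metric. The second function is one possible reading of a "layout variation index" (mean pairwise distance between layout vectors); the study does not define its index, so this definition and both function names are assumptions.

```python
import math
from itertools import combinations
from statistics import fmean, pvariance


def frechet_distance_diag(feats_a, feats_b):
    """Fréchet distance between two Gaussians with diagonal covariance.

    Each argument is a list of equal-length feature vectors. With
    diagonal covariances the distance reduces to a per-dimension sum of
    squared mean differences and squared standard-deviation differences.
    """
    total = 0.0
    for d in range(len(feats_a[0])):
        col_a = [row[d] for row in feats_a]
        col_b = [row[d] for row in feats_b]
        mean_gap = fmean(col_a) - fmean(col_b)
        std_gap = math.sqrt(pvariance(col_a)) - math.sqrt(pvariance(col_b))
        total += mean_gap ** 2 + std_gap ** 2
    return total


def mean_pairwise_distance(layouts):
    """Average Euclidean distance over all pairs of layout vectors."""
    distances = [math.dist(a, b) for a, b in combinations(layouts, 2)]
    return fmean(distances)
```

Lower Fréchet distance means generated features are statistically closer to real ones, while a higher mean pairwise distance means the generated layouts differ more from each other.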

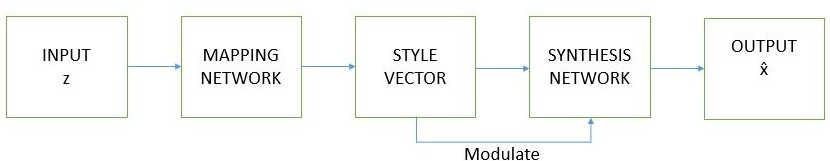

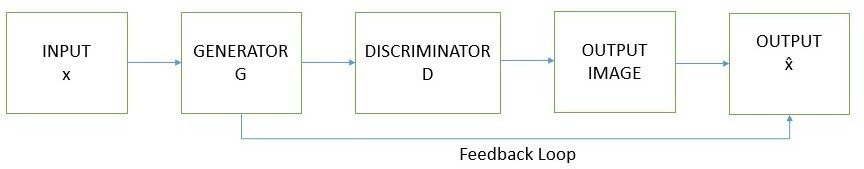

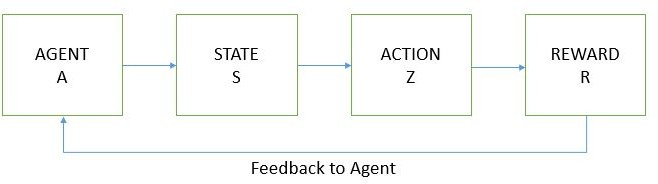

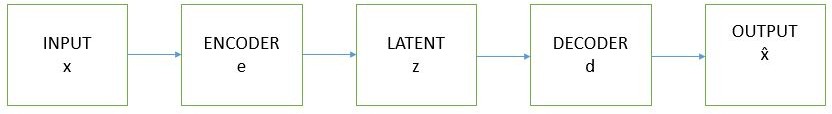

The block diagrams in Figs. 2 to 5 illustrate the workflows of the four generative AI models in interior design.

Fig. 2: Block diagram for the StyleGAN.

The Style Vector modulates the Synthesis Network to generate photorealistic images by controlling attributes like color, texture, and style at various layers.
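In the original StyleGAN, this modulation is done with adaptive instance normalization (AdaIN): each feature channel is normalized and then rescaled and shifted by values derived from the style vector. The sketch below applies AdaIN to a single flattened channel in plain Python; the function name `adain` and the `eps` default are illustrative choices, and in a real network the scale and bias come from a learned affine transform of the style vector.

```python
from statistics import fmean, pstdev


def adain(channel, style_scale, style_bias, eps=1e-8):
    """Adaptive instance normalization for one feature channel.

    `channel` is a flat list of activations. The channel is normalized
    to zero mean and unit standard deviation, then scaled and shifted
    by the style-derived values.
    """
    mu = fmean(channel)
    sigma = pstdev(channel)
    return [style_scale * (v - mu) / (sigma + eps) + style_bias
            for v in channel]
```

After the call, the channel's mean equals `style_bias` and its standard deviation is approximately `style_scale`, regardless of the input statistics. Applying different styles at different layers is what gives control over coarse attributes versus fine ones such as color and texture.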

Fig. 3: Block diagram for the Pix2Pix.

The Pix2Pix model translates Sketches or Blueprints into detailed, photorealistic designs using an image-to-image translation process.

Fig. 4: Block diagram for the Reinforcement Learning.

In Reinforcement Learning, the agent optimizes Design Constraints through trial and error, producing an optimal layout with efficient furniture placement based on feedback rewards.

Fig. 5: Block diagram for the Variational Autoencoder (VAE).

In VAE, the Latent Space Encoding captures the structure of input layouts and generates diverse room configurations while preserving the layout’s functionality.
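The latent space encoding of a VAE can be sketched with its two standard ingredients: the reparameterization step that samples a latent code, and the closed-form KL divergence to a standard normal prior. The encoder outputs `mu` and `log_var` are passed in as plain lists here; the function names are illustrative, and no encoder or decoder network is included.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ) for a diagonal Gaussian."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))
```

Sampling several codes with `reparameterize(mu, log_var, random.Random(0))` and decoding each one is how a trained VAE would produce varied layouts around the same input structure.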

V. RESULTS AND ANALYSIS

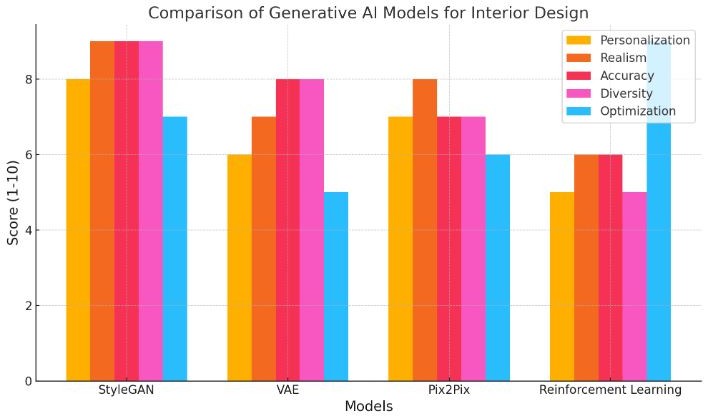

These Generative AI models (StyleGAN, Variational Autoencoder (VAE), Pix2Pix, and Reinforcement Learning) were evaluated based on five key parameters: personalization, realism, accuracy, diversity, and optimization. Each parameter plays a significant role in determining the model’s effectiveness in generating interior design solutions.

Fig. 6: Comparison of different models for interior design using GAN.

A. Personalization

StyleGAN excels in customization by allowing designers to manipulate various attributes, such as color, texture, and style. Its latent space can generate diverse outputs tailored to specific user preferences. In contrast, Variational Autoencoders (VAE) produce variations but often lack the depth of personalization that StyleGAN offers.

While VAE maintains structural integrity, which is essential for functional designs, it can limit creative expression. Pix2Pix facilitates personalization by transforming specific sketches into finished designs; however, its effectiveness largely depends on the initial input quality, which may vary across user preferences. Reinforcement Learning, while capable of optimizing layouts based on constraints, lacks the inherent ability to customize design elements for individual tastes, making it less suitable for personalization compared to the others.

B. Realism

StyleGAN is renowned for creating photorealistic images, making it a top choice for projects requiring high visual fidelity, as its outputs often look indistinguishable from real photographs. VAE, on the other hand, produces realistic outputs but can sometimes generate images that lack the detail or refinement found in those from StyleGAN; its realism is more about maintaining structure than achieving photorealism. Pix2Pix effectively translates sketches and blueprints into realistic visuals that closely resemble the intended design, but the quality can vary depending on the initial input. Reinforcement Learning focuses more on functional aspects than aesthetic qualities, resulting in less realistic visuals: its designs may optimize space but can lack the visual appeal necessary for interior design.

C. Accuracy

StyleGAN demonstrates exceptional precision in generating designs that align closely with desired aesthetics and functional requirements, with its attention to detail enhancing the final output’s accuracy. VAE maintains structural accuracy, generating variations in layouts while ensuring designs are coherent and functional, which is crucial for interior applications. Pix2Pix offers good accuracy in transforming inputs into outputs, though it heavily relies on the quality of the sketches or blueprints provided; higher quality inputs lead to more accurate results. In contrast, Reinforcement Learning, while effective in optimizing layouts, may produce less reliable results in generating aesthetically pleasing designs, as its focus is on functionality rather than accuracy in aesthetics.

D. Diversity

StyleGAN has the capability to explore a vast design space, allowing it to generate a wide range of styles and variations, which is particularly useful for projects that require multiple design themes. VAE facilitates diversity by producing a range of variations while ensuring the designs stay structurally sound, enabling it to generate different layouts that maintain usability. Pix2Pix provides diversity but is limited to the inputs it receives; while it can create various outputs from good sketches, its versatility does not match that of StyleGAN. Reinforcement Learning has a limited ability to generate diverse design options, as it primarily aims to find the best possible arrangement rather than explore different aesthetics.

E. Optimization

StyleGAN can optimize for specific styles, but its primary focus is not on layout optimization, making it less effective in this regard compared to Reinforcement Learning. VAE does not excel in optimization; it is more focused on generating variations than improving layout efficiency. Pix2Pix can provide reasonably optimized outputs from sketches but does not inherently focus on optimization as a core function. In contrast, Reinforcement Learning shines in optimizing room layouts based on given constraints, making it the best choice for maximizing space utilization and functionality, which is particularly valuable for smart home designs.

VI. COMPARISON OF GENERATIVE MODELS

The comparison table outlines key parameters for four generative AI models used in interior design. StyleGAN excels in flexibility and use case versatility, enabling diverse and creative designs, though it requires a complex training process. VAE offers moderate flexibility and is useful for generating varied layouts but operates within a simpler architecture. Pix2Pix facilitates user interaction through its ability to translate sketches to images quickly, while Reinforcement Learning stands out for its adaptability and optimization capabilities, though it involves a more extensive training period.

| Parameter | StyleGAN | VAE | Pix2Pix | Reinforcement Learning |
|---|---|---|---|---|
| Flexibility | High, allows for style manipulation | Moderate, constrained by latent space | Moderate, limited to paired datasets | High, adaptable to various layouts |
| Speed of Generation | Fast, efficient architecture | Moderate, sampling can be slower | Fast, direct image translation | Variable, depends on training time |
| User Interaction | Limited, output-focused | Limited, input-focused | Interactive, allows for user sketches | High, based on user feedback |
| Model Complexity | High, extensive training needed | Moderate, simpler architecture | Moderate, involves paired datasets | High, requires extensive training |
| Use Case Versatility | Excellent for diverse designs | Good for layout generation | Best for translating sketches to images | Excellent for optimizing layouts |

TABLE I: Comparison of Generative AI Models

Conclusion

Based on the comparison conducted in this study, StyleGAN is identified as the most suitable model for applications requiring high realism, personalization, and diversity in interior design. Its ability to blend aesthetic appeal with creative flexibility makes it particularly advantageous for designers aiming to create photorealistic and customized interior solutions. In contrast, Reinforcement Learning is highlighted for its effectiveness in projects prioritizing optimization and space efficiency, making it an excellent choice for scenarios where layout functionality is crucial. While Variational Autoencoders (VAE) and Pix2Pix possess distinct advantages, they are more suited for specific tasks within the generative design landscape. This analysis serves as a resource for practitioners in determining the most suitable model for their particular design needs and objectives.

References

[1] T. Karras, S. Laine, and T. Aila, “A Style-Based Generator Architecture for Generative Adversarial Networks,” in Proc. IEEE/CVF Conf. Computer Vision and Pattern Recognition (CVPR), 2019, pp. 4401-4410.

[2] D. P. Kingma and M. Welling, “Auto-Encoding Variational Bayes,” in Proc. 2nd International Conference on Learning Representations (ICLR), 2014.

[3] P. Isola, J.-Y. Zhu, T. Zhou, and A. A. Efros, “Image-to-Image Translation with Conditional Adversarial Networks,” in Proc. IEEE/CVF Conf. Computer Vision and Pattern Recognition (CVPR), 2017, pp. 1125-1134.

[4] M. A. S. V. M. Y. Shen, M. Xu, and R. Shibasaki, “Deep Reinforcement Learning for Optimizing Interior Layouts,” in Proc. IEEE International Conference on Image Processing (ICIP), 2019, pp. 90-94.

[5] L. Chen and Y. Wang, “Automatic Analysis and Sketch-Based Retrieval of Architectural Floor Plans,” Computer-Aided Design, 2022.

[6] H. Chen, W. Gao, and R. Zhang, “Interior Layout Design Based on an Interactive Genetic Algorithm,” IEEE Transactions on Systems, Man, and Cybernetics, 2024.

[7] W. Zeng and X. Liu, “RoomGen: 3D Interior Furniture Layout Generation,” IEEE Transactions on Visualization and Computer Graphics, 2024.

[8] R. Phadke, A. Kumar, Y. Mathur, and S. Sharma, “Virtual Interior Design Companion - Harnessing the Power of GANs,” IEEE Transactions on Industrial Informatics, 2024.

[9] H. Jeong, Y. Kim, Y. Yoo, S. Cha, and J.-K. Lee, “Gen AI and Interior Design Representation: Applying Design Styles Using Fine-Tuned Models,” IEEE Access, 2023.

[10] T.-C. Wang, M.-Y. Liu, J.-Y. Zhu, A. Tao, J. Kautz, and B. Catanzaro, “High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs,” in Proc. IEEE/CVF Conf. Computer Vision and Pattern Recognition (CVPR), 2018.

Copyright

Copyright © 2024 Revati Anil Patil, Prof. Vrushali Wankhede, Bhagyashree Patil. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET64652

Publish Date : 2024-10-17

ISSN : 2321-9653

Publisher Name : IJRASET
