Ijraset Journal For Research in Applied Science and Engineering Technology

A Hybrid Classification Model (Fruits or Vegetable) Using Deep Learning Techniques

Authors: Karan Kumar Maurya, Adarsh Verma, Danish Gaur, Ankit Patel

DOI Link: https://doi.org/10.22214/ijraset.2024.63483

Abstract

In modern vision and pattern recognition, complex tasks such as image analysis, facial recognition, fingerprint identification, and DNA sequencing call for a nuanced approach, often requiring the integration of multiple feature descriptors. This research proposes a multi-model identification and classification strategy that leverages multi-feature fusion techniques to address these challenges. Specifically, it focuses on fruit and vegetable recognition and classification, a growing field in computer and machine vision. By employing an identification system tailored to fruits and vegetables and built on the MobileNetV2 architecture, customers and buyers can more easily discern the type and quality of produce. MobileNetV2, a convolutional neural network (CNN) architecture optimized for mobile devices, offers promising performance in real-world applications. This abstract highlights the significance of CNNs and MobileNetV2 in tackling multifaceted recognition tasks and underscores their potential to improve efficiency and accuracy in fruit and vegetable classification.

I. INTRODUCTION

In the pursuit of a healthy lifestyle, fruits and vegetables are essential because of their many health benefits. However, the availability of certain produce is often dictated by seasonal variation, which presents a challenge for consumers and marketers alike. In India, where agriculture remains a cornerstone of the national economy, seventy percent of land is dedicated to cultivation, and the country ranks third globally in fruit production and second in vegetable production.

In this landscape, the integration of deep learning algorithms for fruit and vegetable categorization emerges as a significant boon for both marketers and consumers. The burgeoning reliance on computer science and information technology within the agricultural sector further amplifies the relevance of such techniques. With the advent of artificial intelligence and soft computing-based methodologies, the provision of high-quality produce to consumers has become increasingly streamlined.

Convolutional Neural Networks (CNNs) have revolutionized image classification because they learn features automatically from raw data. The key CNN techniques used in image classification are summarized below:

- Convolutional Layers: These layers apply filters or kernels to the input image, performing convolutions to extract features. Convolutional operations capture spatial hierarchies of patterns, allowing the network to learn features at different levels of abstraction.

- Pooling Layers: Pooling layers reduce the spatial dimensions of the feature maps produced by the convolutional layers. Max pooling and average pooling are commonly used to downsample the feature maps while retaining important information.

- Activation Functions: Activation functions like ReLU (Rectified Linear Unit), Leaky ReLU, or variants like ELU (Exponential Linear Unit) introduce non-linearity into the network, enabling it to learn complex relationships in the data.

- Normalization Layers: Techniques like Batch Normalization normalize the activations of each layer, improving the stability and convergence speed of the network during training.

- Data Augmentation: Data augmentation techniques such as rotation, translation, scaling, and flipping are applied to increase the diversity of the training dataset. This helps prevent overfitting and improves the generalization ability of the model.

- Transfer Learning: Transfer learning involves leveraging pre-trained CNN models trained on large datasets like ImageNet. Fine-tuning these models on specific image classification tasks with smaller datasets can significantly boost performance and reduce training time.
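The first three items above can be illustrated with a minimal NumPy sketch: a single-channel convolution (stride 1, no padding), a ReLU activation, and non-overlapping 2×2 max pooling. It is a teaching aid under simplifying assumptions, not how Keras implements these layers; real layers operate on multi-channel tensors with learned kernels, and the function names here are our own.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over a 2-D `image` with stride 1 and no padding.

    As in CNN frameworks, this is cross-correlation: the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the patch currently under the kernel.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    """Rectified Linear Unit: keeps positive values, zeroes out the rest."""
    return np.maximum(0.0, x)


def max_pool2d(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h = x.shape[0] // size * size
    w = x.shape[1] // size * size
    windows = x[:h, :w].reshape(h // size, size, w // size, size)
    return windows.max(axis=(1, 3))


# Usage: a [-1, 1] kernel responds to a dark-to-bright vertical edge.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
feature_map = relu(conv2d_valid(image, np.array([[-1.0, 1.0]])))
pooled = max_pool2d(feature_map)
print(feature_map.shape, pooled.shape)  # (5, 4) (2, 2)
```

Here the hand-picked kernel acts as a simple edge detector; in a trained CNN the kernel values are learned from data instead.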

II. LITERATURE REVIEW

Conventional machine learning (ML) approaches extract feature metrics from images and use them for classification. This involves several processing steps: image preprocessing, feature extraction, classification model development, and validation (Jana et al., 2017; Hou et al., 2016; Azizah et al., 2017; Thenmozhi et al., 2019). Much work on fruit classification has been published. Support Vector Machines (SVMs) give acceptable results on small datasets, while deep-learning-based neural networks can perform the task more accurately (Saranya et al., 2020).

CNN-based models improve image classification on large datasets. The CNN-based model of Palakodati et al. (2020) achieved an accuracy of 97.82% in classifying fruits as fresh or rotten. It used three convolutional layers, three max-pooling layers, one fully connected layer, and a SoftMax classifier, and reached this accuracy after 225 epochs. It also outperformed several transfer learning models.

Hou et al. (2016) evaluated the fruit recognition rate using a CNN alone and a CNN combined with a selective algorithm, and the combined approach performed better than the CNN alone. Although a good recognition rate was achieved, the work covered only a small number of fruit classes, and changes in the external environment, such as lighting, were not considered when the database was created.

Azizah et al. (2017) used a CNN to detect surface defects on mangosteen with an accuracy of 97%. Before the CNN was applied, experts sorted the mangosteens manually. The CNN classifier was evaluated with 4-fold cross-validation.

Tahir et al. (2018) detected fungi and discriminated between different kinds of fungus using an 11-layer CNN consisting of 3 convolutional, 3 ReLU, 3 pooling, and 2 fully connected layers. It achieved an accuracy of 94.8% with 5-fold cross-validation, and various parameters were fine-tuned to improve the results.
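The 4-fold and 5-fold cross-validation protocols mentioned above split the data into k folds and let each fold serve once as the validation set. A minimal pure-Python sketch of that index split follows; `k_fold_indices` is a hypothetical helper for illustration, not code from the cited studies.

```python
import random
from typing import List, Tuple


def k_fold_indices(n_samples: int, k: int, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, validation_indices) pairs for k-fold cross-validation.

    The indices are shuffled once; fold sizes differ by at most one, and every
    sample appears in exactly one validation fold.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    base, extra = divmod(n_samples, k)
    splits = []
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        splits.append((train, val))
        start += size
    return splits


for train_idx, val_idx in k_fold_indices(n_samples=10, k=4):
    print(len(train_idx), len(val_idx))  # 7 3, 7 3, 8 2, 8 2
```

A model is trained on each `train` list and scored on the matching `val` list; the reported accuracy is typically the mean over the k folds.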

Crop insect classification is a major challenge (Thenmozhi et al., 2019). To address it, deep CNN models were applied to the NBAIR, Xie1, and Xie2 datasets, achieving insect classification accuracies of 96.75%, 97.47%, and 95.97%, respectively. Several transfer learning models (AlexNet, ResNet-50, ResNet-101, VGG-16, and VGG-19) were also applied to the same datasets, and the study found the CNN model to be more efficient than the transfer learning models.

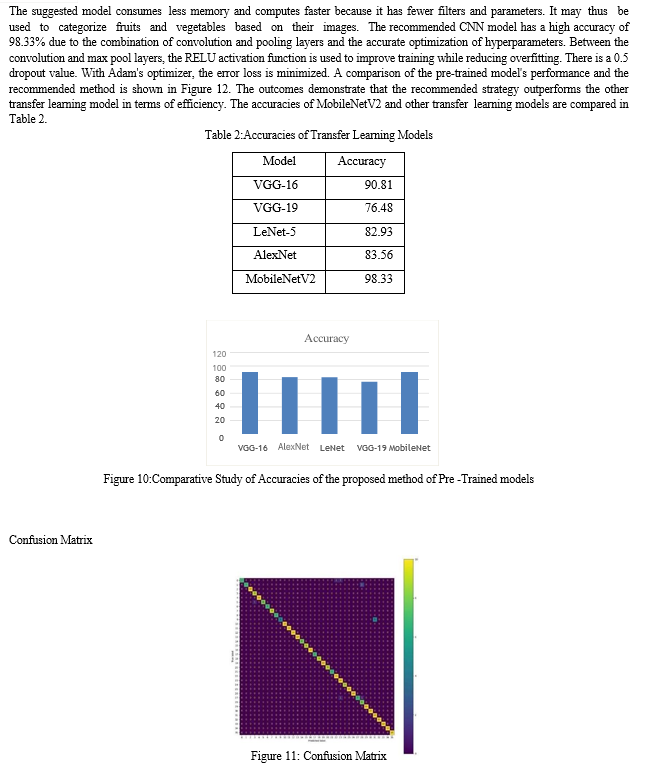

CNNs are frequently used in agriculture for image classification across a variety of problems (Kamilaris et al., 2018). The current study shows that a deep-learning-based CNN model is more effective at classifying fruits as "fresh" or "rotten". The accuracy of the proposed model is also compared with that of other transfer learning methods. Six classes were created from three varieties of fruit, with each fruit classified as either fresh or rotten. The VGG16, VGG19, AlexNet, and LeNet-5 transfer learning models are investigated. Compared with existing models, our system is a robust CNN model with improved accuracy for fresh and rotten fruit classification. For better outcomes, we also examine the impact of other hyperparameters.

III. METHODOLOGY

Dataset

The current work uses the “Fruits and Vegetables Image Recognition” dataset for fruit and vegetable classification. The dataset was obtained from Kaggle; its images were collected, sorted, and labelled. It has 36 classes with roughly 100 training images per class, giving about 3,600 training images, and there are 10 images per class for validation.
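A quick sanity check on a dataset like this is to count the images in each class folder. The sketch below assumes a one-folder-per-class layout (for example `train/apple/*.jpg`) and a fixed set of image extensions; both are assumptions about how the downloaded files are organised, and `count_images_per_class` is a hypothetical helper.

```python
from pathlib import Path
from typing import Dict

# Assumed set of image extensions; adjust to match the downloaded files.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images_per_class(split_dir: str) -> Dict[str, int]:
    """Count image files in each class sub-folder of `split_dir`.

    Assumes a one-folder-per-class layout such as train/apple/*.jpg;
    files with other extensions and loose files in `split_dir` are ignored.
    """
    counts: Dict[str, int] = {}
    for class_dir in sorted(Path(split_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1
                for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


# Hypothetical usage (the path is an assumption):
# counts = count_images_per_class("fruits-and-vegetables/train")
# print(len(counts), sum(counts.values()))
```

For the training split described above, one would expect 36 class folders with close to 100 images each.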

A. Convolutional Neural Network

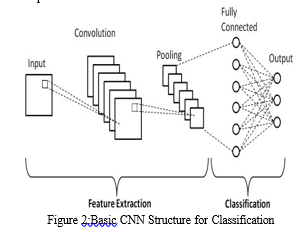

Convolutional neural networks (CNNs) are today's most popular class of models for image recognition and classification. A major advantage of CNNs is that they require much less preprocessing than other classification algorithms: the network takes the input data, is trained on it, and extracts the important features automatically. The primary purpose of a CNN is to reduce the input into a form that is easier to process without losing the features needed to understand what the data represents, which makes it suitable for large datasets. A CNN is built mainly from three types of layers, and the number of layers depends on the complexity of the problem domain; in complex applications it increases significantly. The image passes through this series of layers, first the convolutional layer, then the pooling layer, and finally the fully connected layer, which generates the output.

In the convolutional layer, filters are applied to the original image to extract features. Most of the user-specified parameters, such as the number of kernels and the kernel size, are found in this layer. Pooling layers perform max pooling or average pooling, with max pooling being the most common choice. They are used to shrink the spatial size of the feature maps, which reduces the computation in later layers. The fully connected layers are the network's final layers. They take the output of the previous pooling or convolutional layer as input and classify the input image into distinct labelled classes using the SoftMax activation function. Figure 2 shows the basic composition of a CNN.
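The SoftMax step at the end of the fully connected layers turns the raw class scores (logits) into probabilities, and the predicted label is the class with the highest probability. A minimal NumPy sketch follows; the class names in the usage line are placeholders, not the dataset's actual label order.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Map raw scores to probabilities that sum to 1 along the last axis."""
    # Subtracting the max does not change the result but avoids overflow in exp().
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)


def predict_label(logits, class_names):
    """Return the most probable class name and its probability."""
    probs = softmax(np.asarray(logits, dtype=float))
    best = int(np.argmax(probs))
    return class_names[best], float(probs[best])


label, prob = predict_label([0.5, 3.0, 1.0], ["apple", "banana", "carrot"])
print(label, round(prob, 2))  # banana 0.82
```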

B. MobileNetV2

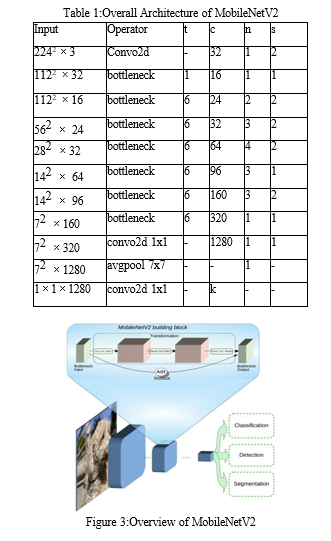

In this study, we employed the MobileNetV2 CNN model. MobileNetV2 is a convolutional neural network (CNN) architecture designed for image classification on mobile devices. Because it is a lightweight model with an efficient design that maintains high accuracy, it is an excellent option for resource-constrained devices. MobileNetV2 uses two kinds of blocks: a residual block with a stride of 1, and a downsizing block with a stride of 2.

Each of the two types of blocks has three layers:

- The first layer is a 1×1 convolution followed by ReLU6.

- The second layer is a depthwise convolution, also followed by ReLU6.

- The third layer is another 1×1 convolution, this time without any non-linearity (a linear bottleneck). The MobileNetV2 authors argue that deep networks only have the power of a linear classifier on the non-zero-volume part of the output domain, so applying ReLU again in this narrow layer would destroy information.

- In addition, the blocks use an expansion factor t; t = 6 in all of the main experiments.

- For example, if the input has 64 channels, the internal (expanded) representation has 64 × t = 64 × 6 = 384 channels.

In the MobileNetV2 architecture specification, t is the expansion factor, c is the number of output channels, n is the number of times the block is repeated, and s is the stride. All spatial convolutions use 3×3 kernels.
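The bottleneck description above can be turned into simple arithmetic. The sketch below counts the expanded channels, weights, and multiply-adds (MAdds) of one block, ignoring biases and batch-normalisation parameters and assuming the input height and width divide evenly by the stride. For stride 1 the MAdds reduce to h · w · d' · t · (d' + k² + d''), the per-block cost given in the MobileNetV2 paper, where d' and d'' are the input and output channel counts. `bottleneck_stats` is a hypothetical helper, not part of Keras.

```python
def bottleneck_stats(h: int, w: int, c_in: int, c_out: int,
                     t: int = 6, stride: int = 1, k: int = 3) -> dict:
    """Rough size/cost figures for one MobileNetV2 inverted-residual block.

    Layers: 1x1 expansion conv (ReLU6) -> k x k depthwise conv with `stride`
    (ReLU6) -> 1x1 linear projection. Biases and batch-norm are ignored, and
    h and w are assumed to be divisible by the stride.
    """
    expanded = c_in * t
    out_h, out_w = h // stride, w // stride
    params = (c_in * expanded            # 1x1 expansion weights
              + k * k * expanded         # one k x k filter per channel (depthwise)
              + expanded * c_out)        # 1x1 projection weights
    madds = (h * w * c_in * expanded                # expansion at input resolution
             + out_h * out_w * expanded * k * k     # depthwise at output resolution
             + out_h * out_w * expanded * c_out)    # projection at output resolution
    return {
        "expanded_channels": expanded,
        "params": params,
        "madds": madds,
        # The skip connection needs matching shapes: stride 1 and c_in == c_out.
        "residual": stride == 1 and c_in == c_out,
    }


print(bottleneck_stats(56, 56, 64, 64))
```

With 64 input channels and t = 6 the expanded width is 384 channels, matching the example above; a residual connection is only possible when the stride is 1 and the input and output channel counts match.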

The primary network (width multiplier 1, 224×224 input) has a computational cost of 300 million multiply-adds (MAdds) and uses 3.4 million parameters. (The width multiplier was introduced in MobileNetV1.)

The performance trade-offs are further explored for input resolutions from 96 to 224 and width multipliers from 0.35 to 1.4.

Across these settings, the computational cost reaches up to 585M MAdds, while the model size varies between 1.7M and 6.9M parameters.
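The width multiplier α scales the number of channels in every layer and, as noted for MobileNetV1, reduces cost roughly quadratically (about α²), just as lowering the input resolution r reduces it by about (r/224)². The sketch below shows both effects. The rounding rule (nearest multiple of 8, never more than 10% below the target) follows the convention used in the reference TensorFlow/Keras MobileNetV2 code; treat `approx_madds` as a back-of-the-envelope estimate only, since not every layer is scaled identically.

```python
def make_divisible(value: float, divisor: int = 8) -> int:
    """Round a channel count to the nearest multiple of `divisor`,
    bumping it up if rounding would lose more than 10% of the channels."""
    rounded = max(divisor, int(value + divisor / 2) // divisor * divisor)
    if rounded < 0.9 * value:
        rounded += divisor
    return rounded


def scaled_channels(base_channels: int, alpha: float) -> int:
    """Channels in a layer after applying width multiplier `alpha`."""
    return make_divisible(base_channels * alpha)


def approx_madds(base_madds: float, alpha: float, resolution: int,
                 base_resolution: int = 224) -> float:
    """Rough cost estimate: ~alpha^2 for width, ~(r / 224)^2 for input size."""
    return base_madds * alpha ** 2 * (resolution / base_resolution) ** 2


for alpha in (0.35, 1.0, 1.4):
    print(alpha, scaled_channels(32, alpha))  # 16, 32 and 48 channels
print(approx_madds(300e6, 1.0, 112) / 1e6)  # 75.0 (million MAdds, estimated)
```

For example, α = 0.35 turns the 32-channel stem into 16 channels after rounding, and halving the input resolution to 112 cuts the estimated cost to about a quarter.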

The original MobileNetV2 network was trained using 16 GPU workers with a batch size of 96.

C. Tools and Libraries

Keras

Keras is a high-level deep learning API for implementing neural networks, originally developed by François Chollet at Google. It is written in Python, makes neural networks easy to implement, and supports multiple backends for neural network computation. We use Keras to build the model and to make predictions.

1. NumPy

NumPy is a powerful Python library for scientific computing. It provides N-dimensional arrays, mathematical functions, linear algebra tools, random number generation, and efficient element-wise operations. With NumPy, you can handle large datasets, perform complex calculations, and speed up numerical code. It is a fundamental tool for researchers, developers, and data scientists. We use NumPy to handle image arrays.
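Before an image reaches MobileNetV2 it is usually converted to floating point, scaled to the range [-1, 1], and given a leading batch dimension; this is the scaling that Keras's `tf.keras.applications.mobilenet_v2.preprocess_input` applies. The NumPy sketch below reproduces it for a single H × W × 3 RGB image; `to_model_input` is our own illustrative name, not a Keras function.

```python
import numpy as np


def to_model_input(image: np.ndarray) -> np.ndarray:
    """Convert one H x W x 3 uint8 RGB image into a 1 x H x W x 3 float32 batch.

    Pixel values are mapped from [0, 255] to [-1, 1] via x / 127.5 - 1,
    the scaling MobileNetV2 expects.
    """
    if image.ndim != 3 or image.shape[-1] != 3:
        raise ValueError("expected an H x W x 3 RGB array")
    scaled = image.astype(np.float32) / 127.5 - 1.0
    return np.expand_dims(scaled, axis=0)  # add the batch dimension


img = np.zeros((224, 224, 3), dtype=np.uint8)
img[0, 0] = 255
batch = to_model_input(img)
print(batch.shape, batch.min(), batch.max())  # (1, 224, 224, 3) -1.0 1.0
```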

2. Streamlit

Streamlit is an open-source Python framework that turns data scripts into web apps with little effort. With just a few lines of code, you can create interactive data applications, display model outputs, visualize data, and modify inputs, all without front-end experience. We use it to build the project's web application.

3. BeautifulSoup

Beautiful Soup is a Python library that simplifies web scraping by extracting information from HTML and XML files. It sits on top of an HTML or XML parser, providing Pythonic ways to navigate, search, and modify the parse tree. Whether you are pulling data from websites or processing XML data, Beautiful Soup saves programmers hours of work. We use it to extract the nutritional values of the predicted item from a web page.

4. Requests

Requests is an elegant and simple Python library for making HTTP requests. It provides a straightforward API for interacting with web servers, allowing you to perform operations like GET and POST effortlessly.

5. Pillow

Pillow is a Python library that adds image processing capabilities to your interpreter. It supports many file formats, has a fast internal representation, and offers powerful image operations.
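A minimal Pillow sketch for loading an uploaded image before classification: it forces three RGB channels and resizes to 224 × 224, MobileNetV2's default input size. The target size and the `load_for_mobilenet` name are assumptions for illustration, not taken from the project's code.

```python
import numpy as np
from PIL import Image


def load_for_mobilenet(path: str, size=(224, 224)) -> np.ndarray:
    """Open an image file, force 3-channel RGB, resize, and return a uint8 array.

    Pillow sizes are (width, height); the returned array is (height, width, 3).
    """
    with Image.open(path) as img:
        rgb = img.convert("RGB").resize(size)  # drops alpha, expands grayscale
    return np.asarray(rgb, dtype=np.uint8)


# Hypothetical usage (the file name is an assumption):
# pixels = load_for_mobilenet("uploaded.jpg")
```

Converting to RGB first matters because PNG uploads may carry an alpha channel and some photos are grayscale, while the network expects exactly three channels.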

IV. RESULTS

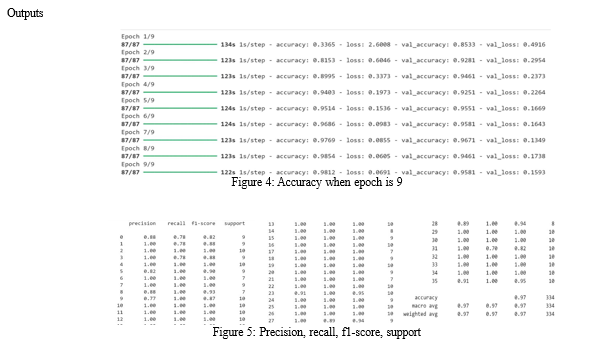

The present work classifies fruits and vegetables using the “Fruits and Vegetables Image Recognition” dataset. First, the dataset is partitioned into three subsets: 80% is used for training, 10% for validation, and the remaining 10% for testing. Validation is carried out alongside training. During training, the impact of various hyperparameters (described in the Discussion section) was analysed and adjusted to obtain a more precise model than the pre-trained transfer learning models. We ran this deep CNN-based model on a Lenovo Legion 5 laptop with a Ryzen 5 4000-series CPU, 8 GB RAM, 1 TB HDD + 256 GB SSD, and an NVIDIA GTX 1650 graphics card.
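The 80/10/10 partition described above can be sketched in a few lines of pure Python. This is an illustrative version, not the authors' code: the fixed seed (an assumption) makes the split reproducible, integer arithmetic avoids floating-point rounding, and any remainder goes to the test set.

```python
import random
from typing import List, Sequence, Tuple


def split_80_10_10(items: Sequence, seed: int = 42) -> Tuple[List, List, List]:
    """Shuffle `items` and split them into train/validation/test lists (80/10/10)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n = len(shuffled)
    n_train = n * 8 // 10   # integer maths avoids float rounding surprises
    n_val = n // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # any remainder lands in the test set
    return train, val, test


train, val, test = split_80_10_10(range(1000))
print(len(train), len(val), len(test))  # 800 100 100
```

Applying the function separately to each class's file list keeps the class balance the same across the three subsets.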

V. DISCUSSION

A. CNN Model Parameters Proposed

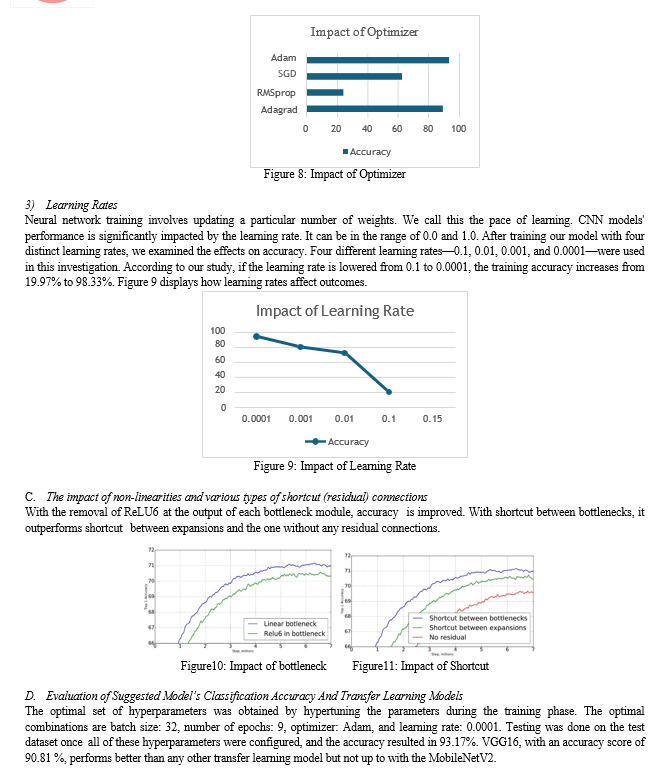

The model is trained with the Adam optimizer at a learning rate of 0.001, a batch size of 32, and 9 epochs. It is trained on a training set covering various fruit and vegetable images, and its accuracy is evaluated on the test set.
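For reference, the sketch below writes out one Adam update with the learning rate used here (0.001), together with the number of optimizer steps per epoch for a given batch size. It follows the update rule as stated in the Adam paper, with β1 = 0.9, β2 = 0.999 and ε = 1e-7 (Keras's defaults); framework implementations such as Keras's place ε slightly differently, so the numbers are illustrative.

```python
import math

import numpy as np


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update as written in the original paper; `t` counts steps from 1."""
    m = beta1 * m + (1 - beta1) * grad           # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2      # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                 # bias correction for zero init
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v


def steps_per_epoch(n_images: int, batch_size: int) -> int:
    """Number of mini-batches needed to see every training image once."""
    return math.ceil(n_images / batch_size)


theta, m, v = adam_step(np.array([1.0]), np.array([2.0]), np.zeros(1), np.zeros(1), t=1)
print(theta)  # roughly [0.999]: the first step moves each weight by about lr
print(steps_per_epoch(3600, 32))  # 113
```

With roughly 3,600 training images and a batch size of 32, each epoch takes ceil(3600 / 32) = 113 steps, so 9 epochs amount to about 1,017 updates.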

B. Impact of Hyper-Parameters on the Model

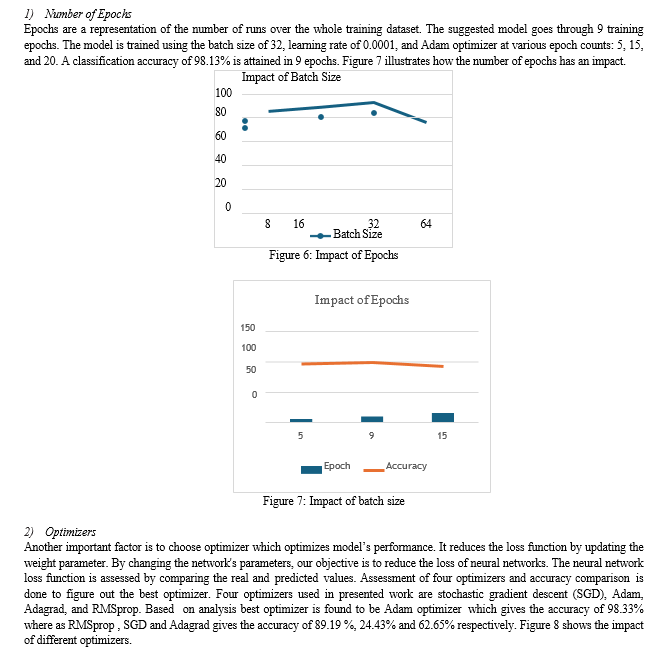

Batch Size

The batch size affects the accuracy of the classification model. Larger batches affect overall performance and training time, and they also consume more memory, so choosing the right batch size is essential to the model's quality. In the current study, batch sizes of 8, 16, 32, and 64 are assessed. Accuracy improves as the batch size increases from 8 to 16, then falls at 32 and falls further at 64. As Figure 6 shows, a batch size of 16 produced the best results for the model.
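The batch-size comparison above amounts to training one model per candidate size and keeping the size with the best validation accuracy. A minimal harness for that loop is sketched below; `train_and_evaluate` is a hypothetical caller-supplied function (for example, one that builds a fresh MobileNetV2 model, fits it with the given batch size, and returns validation accuracy), so no real results are implied.

```python
from typing import Callable, Dict, Iterable, Tuple


def sweep_batch_sizes(
    train_and_evaluate: Callable[[int], float],
    batch_sizes: Iterable[int] = (8, 16, 32, 64),
) -> Tuple[int, Dict[int, float]]:
    """Train once per batch size; return the best size and every score.

    `train_and_evaluate` is a hypothetical, caller-supplied function that
    trains a fresh model with the given batch size and returns its
    validation accuracy.
    """
    scores = {bs: train_and_evaluate(bs) for bs in batch_sizes}
    best = max(scores, key=scores.get)  # first size with the highest score wins ties
    return best, scores
```

Training a fresh model for every candidate matters: reusing weights from a previous run would bias the comparison toward later batch sizes.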

Conclusion

In this project, we aimed to develop an efficient and accurate model for classifying fruits and vegetables using the pretrained MobileNetV2 convolutional neural network (CNN). Our primary goal was to leverage transfer learning to achieve high accuracy on our custom dataset of fruits and vegetables. We fine-tuned the MobileNetV2 model and achieved an accuracy of 98.33% on the test dataset. This result surpasses our initial expectations and demonstrates the effectiveness of a lightweight yet powerful pretrained model like MobileNetV2 for image classification tasks.

Throughout the project, we faced several challenges, including ensuring consistent preprocessing and optimizing the model's hyperparameters. By employing data augmentation techniques and rigorous training processes, we were able to mitigate these challenges and improve the model's robustness and generalization capability. Our findings highlight the significant potential of transfer learning for improving classification accuracy with limited computational resources. The high accuracy achieved indicates that the model is well suited to practical fruit and vegetable recognition, which can benefit industries such as agriculture, retail, and food services.

Despite this success, there are areas for future improvement. Enhancing the dataset with more diverse samples and exploring ensemble methods could further boost the model's performance. Reflecting on our journey, we have gained substantial knowledge of machine learning techniques, particularly transfer learning and model optimization, and the collaborative effort and problem-solving skills honed during this project will contribute to our future endeavors.

In conclusion, the fruit and vegetable classification project using the pretrained MobileNetV2 CNN model has been a significant achievement. The high accuracy attained underscores the model's capability and opens avenues for its application in real-world scenarios. We extend our gratitude to our mentors and peers for their invaluable support throughout this project.

References

[1] Karakaya, D., Ulucan, O., & Turkan, M. (2020). A comparative analysis on fruit freshness classification. In 2019 Innovations in Intelligent Systems and Applications Conference (ASYU) (pp. 1-4). IEEE.

[2] Jana, S., & Parekh, R. (2017, March). Shape-based fruit recognition and classification. In International Conference on Computational Intelligence, Communications, and Business Analytics (pp. 184-196). Springer, Singapore.

[3] Saranya, N., Srinivasan, K., Kumar, S. P., Rukkumani, V., & Ramya, R. (2019, September). Fruit classification using traditional machine learning and deep learning approach. In International Conference on Computational Vision and Bio Inspired Computing (pp. 79-89).

[4] Hou, L., Wu, Q., Sun, Q., Yang, H., & Li, P. (2016, August). Fruit recognition based on convolution neural network. In 2016 12th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD) (pp. 18-22). IEEE.

[5] Azizah, L. M. R., Umayah, S. F., Riyadi, S., Damarjati, C., & Utama, N. A. (2017, November). Deep learning implementation using convolutional neural network in mangosteen surface defect detection. In 2017 7th IEEE International Conference on Control System, Computing and Engineering (ICCSCE) (pp. 242-246). IEEE.

[6] Tahir, M. W., Zaidi, N. A., Rao, A. A., Blank, R., Vellekoop, M. J., & Lang, W. (2018). A fungus spores dataset and a convolutional neural network based approach for fungus detection. IEEE Transactions on NanoBioscience, 17(3), 281-290.

[7] Thenmozhi, K., & Reddy, U. S. (2019). Crop pest classification based on deep convolutional neural network and transfer learning. Computers and Electronics in Agriculture, 164, 104906.

[8] Kamilaris, A., & Prenafeta-Boldú, F. X. (2018). Deep learning in agriculture: A survey. Computers and Electronics in Agriculture, 147, 70-90.

[9] Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, 25, 1097-1105.

[10] LeCun, Y., Bottou, L., Bengio, Y., & Haffner, P. (1998). Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11), 2278-2324.

[11] Simonyan, K., & Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

Copyright

Copyright © 2024 Karan Kumar Maurya, Adarsh Verma, Danish Gaur, Ankit Patel. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET63483

Publish Date : 2024-06-27

ISSN : 2321-9653

Publisher Name : IJRASET
