Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

- Abstract

- Introduction

- Conclusion

- References

- Copyright

Fake News Detection

Authors: Anisha Agrawal , Eshika Pawar , Shailja Chauhan , Swati Sahu , Dr. Abhinandan Singh Dandotiya

DOI Link: https://doi.org/10.22214/ijraset.2024.63348

Certificate: View Certificate

Abstract

There has been a considerable rise in the spread of fake news for both commercial and political reasons, which has occurred as a direct result of the fast expansion of online social networks. It is possible for members of online social networks to easily get infected by false news that is spread online via the use of language that is deceptive, which may have substantial repercussions for society that is not online. The quick detection and identification of fake news is an essential goal in the process of strengthening the reliability of information that is shared on social networks that are accessible online. This study intends to investigate the concepts, methods, and algorithms that are used in the process of identifying bogus news items, as well as the individuals that make them and the topics that they cover on online social networks. Additionally, it makes an effort to evaluate the effectiveness of various detection approaches. More and more people are growing concerned about the accuracy of information that can be found on the internet, especially on social media platforms. It is difficult to spot, evaluate, and correct such content, which is sometimes referred to as \"fake news,\" that is present on these platforms due to the large volume of data that is accessible on the internet. The purpose of this research is to propose a method for recognizing and responding to instances of \"fake news\" on Facebook, which is a social media platform that is extensively utilized online. In this method, the Naive Bayes classification model is used to make a prediction about whether or not a post on Facebook will be classified as authentic or fake. The paper addresses a wide variety of strategies that have the potential to improve the results. The results of the study suggest that the problem of identifying fake news may be efficiently addressed by using approaches that are based on machine learning.

Introduction

I. INTRODUCTION

The identification of fake news has garnered a significant amount of attention and popularity among the general public, particularly among researchers and academics from all over the globe. Numerous research have been carried out to investigate the effects of false news and the ways in which people respond to having them in their lives. It is possible for any information to be considered fake news if it is not factual and is produced with the intention of persuading its viewers to believe in anything that is not real. At this point in time, there are several social media message and sharing tools that provide users with the ability to instantly disseminate a piece of information to millions of people with only the press of a button. When people begin to understand that none of the news is "fake," they may have a fresh perspective on this issue. This is the true problem that needs to be addressed. It is the point at which the public start to trust the false news without first verifying its validity that the trouble starts. The public has access to a limited number of resources and websites that provide information on the veracity of the news.

There are a variety of problems that are being caused by fake news in today's world, ranging from satirical pieces to faked news to intentional government propaganda in some channels. In our culture, the issues of fake news and a lack of faith in the media are becoming more prevalent and have enormous implications. "Fake news" is obviously a tale that is intentionally deceptive, but the conversation that is taking place on social media is redefining the meaning of "fake news" in recent times. A number of them are now using the word in order to disregard the facts that are in opposition to their chosen perspectives.

Many social media sites, including Facebook, Instagram, and Twitter, have been at the focus of a great deal of criticism as a result of the attention that has been given to them by the media. When a user comes across stories that are considered to be false news, they have already introduced a feature that warns them about it on the website. Additionally, they have made it known to the public that they are working on developing an automatic method to differentiate between these publications. Without a doubt, it is not a simple undertaking. In light of the fact that false news may be found on both sides of the spectrum, it is imperative that any given algorithm be politically agnostic. Furthermore, it should provide an equal balance to reputable news sources on either end of the spectrum. Furthermore, the issue of legitimacy is a challenging one to consider. On the other hand, in order to find a solution to this issue, it is essential to have a comprehension of what fake news is. After that, it is necessary to investigate the ways in which the methods that are used in the area of machine learning assist us in identifying bogus news.

II. OBJECTIVES

The detection of false news is the primary purpose of this research. This is a standard text classification issue that may be approached in a simple manner. Building a model that is capable of distinguishing between "real" news and "fake" news is something that is required. With the assistance of the Python programming language, a data set that includes both types of news will be made available. This data set will then be used to categorize the news using Machine Learning techniques in order to determine whether it is fraudulent or genuine. During these trying times, when our world is going through a pandemic, I am convinced that each and every one of us would have come across certain news linked to a virus that was interpreted in a way that seemed to be accurate, only to understand later with certain explanations that it was false or phony. And in addition, life in and of itself is a massive trip in which everyone of us will, at some point, be confronted with a certain degree of ambiguity along the route, and we will blindly pursue the path without even examining it, without knowing if it is true or untrue. As a result, I was able to develop a concept for my project with the assistance of circumstances like these.I was able to construct this project using the technological principles that would assist the user in distinguishing between false news and authentic news by doing extensive study and analysis.

III. PROPOSED METHODOLOGY

A dataset that is often referred to as news. On the other hand, a csv file was obtained, which had two distinct types of news inside itself, which were categorized as either true news or fake news. The machine learning techniques, including Linear aggression, Decision tree classification, Gradient boost classification, and Random forest classification, were used in the execution of the Python code that was carried out with the help of JupyterLab. The application makes use of Tfidf Vectorizer in order to identify the word frequency scores. Additionally, it is able to tokenize documents, learn vocabulary, and invert document frequency weightings. Furthermore, it gives the user the ability to encode new documents. Within the scope of this research, the first job that we do is to establish a dataset that includes a enough quantity of both false news and real news. Following that, we will proceed to code the dataset in accordance with the directory that the dataset has been saved in. Then, in order to get started with our code, we make use of the fundamental libraries that are necessary, such as pandas and sklearn, and import them. A TdidfVectorizer will be used in the middle of this in order to determine the frequency of the words that are presented in the data. Additionally, in the latter stages of the project, machine learning methods will be included into the scripts in order to validate the correctness of the datasets that have been supplied. Furthermore, the user is now permitted to provide the input that will enable them to extract the data with regard to its validity, namely whether it is Fake or Real. This is because the user is now able to offer in the input.

Four different machine learning classification methods are included in this paper. These algorithms are as follows:

- Linear regression

- Decision tree classification

- Gradient boost classification

- Random forest classification model

Used python packages :

- Sklearn :

Sklearn is a machine learning package that is available in Python. It contains a large number of machine learning methods.

We are making use of a few of its modules, such as train_test_split, Decision Tree Classifier, and accuracy_score, in this particular instance.

2. NumPy :

It is a Python module that is used for numerical computations and offers you with quick mathematical functions. It is used for the purpose of reading data from numpy arrays and for the purpose of modification.

3. Pandas :

It is used for reading and writing a variety of files. In the case of datasets, data modification is a straightforward process.

IV. IMPLEMENTATION DETAILS

Make sure to collect the data set and put it in the location that is specified. Start the JupyterLab program by using the anaconda prompt. Bring in all of the modules that are necessary for the extraction of information for the identification of bogus news items. Conduct an analysis of the data and make use of the TfidfVectorizer to determine the rate at which words occur. In order to determine whether or not the dataset is accurate, machine learning methods such as linear regression, decision tree classification, gradient boost classification, and random forest classification are used.As a last step, the user has the ability to verify the genuineness of the information that has been supplied inside the data set by determining whether or not it is genuine. As a result, the new project is established.

Tools used

Python

Conda

jupyterLab

sklearn

NumPy

Pandas

TEST RESULTS/VERIFICATION/RESULTS

Testing

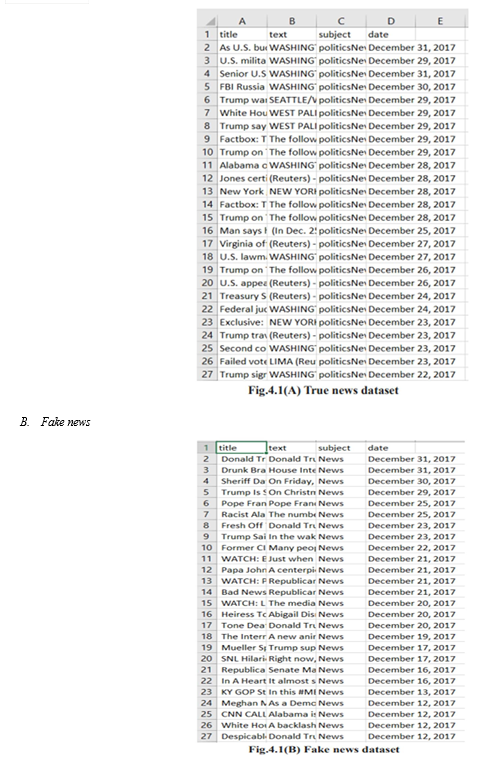

During the beginning stages of implementation of the project, the directory will be stored with two datasets, one containing false news and the other containing actual news.

A. True News

V. RESULT

Python was the programming language that was used in the effective execution of the project that was centred on the detection of fake news.

A number of machine learning methods were used in order to validate the quality of the data, and as a consequence, the outcomes demonstrated that it has an exceptionally high rate of accuracy. Following the execution of the procedures described above, a manual testing was carried out. During this testing, the user was required to enter the data, and the result shown that it was able to offer correct information about the validity of the information, making it possible to determine whether the information in question is counterfeit or genuine. After that, when I checked the news, it seemed to be completely fabricated. As a result, the Fake News Detection project has been effectively developed, carried out, and confirmed.

Conclusion

In the course of this research, I used the internet data sets that were accessible to me and utilized Python programming to do analysis and processing on the data. After that, the data had to go through a series of verification procedures in order to examine the frequency of its words and the correctness of the data, which was verified using machine learning algorithms. After that, I put this information to use by developing the most effective model for identifying false news via the use of manual testing. Make sure that you develop a distinct model for every kind of news that is sent all over the globe. 1) Construct new features by combining a variety of existing applications that are more difficult. 2) Additional data sets may also be added, which will result in the creation of a vast dataset with which it will be possible to identify instances of false news. 3) This sort of project may also be employed in specific businesses to identify the spread of erroneous information, which will aid in preventing the customers from being misled.

References

[1] Prakhar Biyani, Kostas Tsioutsiouliklis, and John Blackmer. ” 8 amazing secrets for getting more clicks”: Detecting clickbaits in news streams using article informality. In AAAI’16. [2] Jonas Nygaard Blom and Kenneth Reinecke Hansen. Click bait: Forward-reference as lure in online news headlines. Journal of Pragmatics, 76:87–100, 2015. [3] Paul R Brewer, Dannagal Goldthwaite Young, and Michelle Morreale. The impact of real news about fake news: Intertextual processes and political satire. International Journal of Public Opinion Research, 25(3):323–343, 2013. [4] Carlos Castillo, Mohammed El-Haddad, Ju¨rgen Pfeffer, and Matt Stempeck. Characterizing the life cycle of online news stories using social media reactions. In CSCW’14. [5] Carlos Castillo, Marcelo Mendoza, and Barbara Poblete. Information credibility on twitter. In WWW’11. [6] Abhijnan Chakraborty, Bhargavi Paranjape, Sourya Kakarla, and Niloy Ganguly. Stop clickbait: Detecting and preventing clickbaits in online news media. In ASONAM’16. [7] Subhabrata Mukherjee and Gerhard Weikum. Leveraging joint interactions for credibility analysis in news communities. In CIKM’15. [8] Eni Mustafaraj and Panagiotis Takis Metaxas. The fake news spreading plague: Was it preventable? arXiv preprint arXiv:1703.06988, 2017. [9] Raymond S Nickerson. Confirmation bias: A ubiquitous phenomenon in many guises. Review of general psychology, 2(2):175, 1998. [10] Brendan Nyhan and Jason Reifler. When corrections fail: The persistence of political misperceptions. Political Behavior, 32(2):303–330, 2010. [11] Christopher Paul and Miriam Matthews. The russian firehose of falsehood propaganda model. [12] Dong ping Tian et al. A review on image feature extraction and representation techniques. International Journal of Multimedia and Ubiquitous Engineering, 8(4):385–396, 2013. [13] Martin Potthast, Johannes Kiesel, Kevin Reinartz, Janek Bevendorff, and Benno Stein. A stylometric inquiry into hyperpartisan and fake news. arXiv preprint arXiv:1702.05638, 2017. [14] Martin Potthast, Sebastian Ko¨psel, Benno Stein, and Matthias Hagen. Clickbait detection. In European Conference on Information Retrieval, pages 810–817. Springer, 2016. [15] Vahed Qazvinian, Emily Rosengren, Dragomir R Radev, and Qiaozhu Mei. Rumor has it: Identifying misinformation in microblogs. In EMNLP’11. [16] Walter Quattrociocchi, Antonio Scala, and Cass R Sunstein. Echo chambers on facebook. 2016. [17] Victoria L Rubin, Yimin Chen, and Niall J Conroy. Deception detection for news: three types of fakes. Proceedings of the Association for Information Science and Technology, 52(1):1–4, 2015. [18] Victoria L Rubin, Niall J Conroy, Yimin Chen, and Sarah Cornwell. Fake news or truth? using satirical cues to detect potentially misleading news. In Proceedings of NAACL-HLT, pages 7–17, 2016. [19] Victoria L Rubin and Tatiana Lukoianova. Truth and deception at the rhetorical structure level. Journal of the Association for Information Science and Technology, 66(5):905–917, 2015. [20] Natali Ruchansky, Sungyong Seo, and Yan Liu. Csi: A hybrid deep model for fake news. arXiv preprint arXiv:1703.06959, 2017. [21] Justin Sampson, Fred Morstatter, Liang Wu, and Huan Liu. Leveraging the implicit structure within social media for emergent rumor detection. In CIKM’15.

Copyright

Copyright © 2024 Anisha Agrawal , Eshika Pawar , Shailja Chauhan , Swati Sahu , Dr. Abhinandan Singh Dandotiya . This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Download Paper

Paper Id : IJRASET63348

Publish Date : 2024-06-18

ISSN : 2321-9653

Publisher Name : IJRASET

DOI Link : Click Here

Submit Paper Online

Submit Paper Online