Ijraset Journal For Research in Applied Science and Engineering Technology



A Review on the Skin Cancer Detection Using Deep Learning Algorithms and LBP

Authors: Khatija Unnisa, K. Prasanna, Bhageshwari Ratkal

DOI Link: https://doi.org/10.22214/ijraset.2024.63019


Abstract

Skin cancer is one of the common deadly diseases, and the number of cases is increasing around the world. If it is not diagnosed in its early stages, it can lead to metastases, resulting in high death rates. Skin cancer is often curable when it is identified early. Therefore, timely and accurate diagnosis of such malignancies is a key research objective. Deep learning algorithms have been suggested as an aid to the early and precise identification of skin cancer. This paper reviews recent research articles based on deep learning methods and on feature extraction techniques using local binary patterns (LBP) for skin cancer classification.


I. INTRODUCTION

Skin cancer is a major health risk in many countries. According to the projections cited in [1], there were more than 150,000 new cases of skin cancer globally in 2023, making it a significant global health burden. Because its early symptoms are mild, skin cancer is difficult to diagnose in its initial stages, which is why early diagnosis is important for better outcomes. The skin, covering about twenty square feet in total, is the body's largest organ and covers us from head to toe. Its thickness varies across regions, and it provides an essential barrier against heat, physical impacts, and other external threats. Its intercellular lipids help retain moisture while also fending off bacteria and environmental agents. Unfortunately, skin cancer diagnoses have been rising for many years, highlighting the importance of early detection and regular check-ups for those affected. Many cases go unnoticed until they have advanced considerably, reducing the chances of effective treatment.

Skin cancer generally appears on sun-exposed areas such as the scalp, face, ears, and hands, but it can also develop in unusual places such as the palms, under the nails, or in the genital region. This disease affects the surface of the skin, with more than 5 million cases reported in the US alone. Improving diagnostic accuracy and promoting early detection are therefore essential in fighting the disease, and both clinicians and researchers are devoting considerable effort to advancing diagnostic tools, treatments, and screening strategies.

A skin lesion is an abnormal growth or appearance of the skin compared with the surrounding skin. Lesions vary in type, texture, color, shape, affected area, and distribution; they have been classified into 2,032 categories organized in a hierarchy. Sun exposure, however, does not explain skin cancers that develop on skin not ordinarily exposed to sunlight. This suggests that other factors, such as exposure to toxic substances or a condition that weakens the immune system, may contribute to the risk of skin cancer. For melanoma, there are four major clinical diagnosis methods: the ABCD rule, pattern analysis, the Menzies method, and the 7-point checklist. Often, only experienced physicians achieve good diagnostic accuracy with these methods [3].

The widespread use of technology has also driven advances in artificial intelligence (AI). Machine learning (ML) can be applied to classification tasks instead of relying only on manually extracted characteristics. Over the past twenty years, machine learning algorithms have increased the accuracy of cancer prediction by 15% to 20%. Deep learning is a rapidly growing area of AI with many possible applications. It is driven by large datasets and advanced computational algorithms, and it relies on convolutional neural networks (CNNs), one of the most powerful and popular ML approaches for image recognition and classification. Conventional machine learning approaches are now used less often for this task because they require extensive preprocessing and a great deal of prior domain knowledge.

Compared with classical machine learning approaches, deep learning techniques have been applied in a broad range of areas, such as speech recognition [4], pattern recognition [5], and bioinformatics [6]. This paper discusses and analyzes deep learning techniques for skin cancer detection. Its main goal is to provide a thorough, systematic literature analysis of previous studies on deep learning techniques, including convolutional neural networks (CNNs) and other models, for the early identification of skin cancer.

The rest of the paper is organized as follows. Section II reviews the literature on skin cancer detection using convolutional neural networks, feature selection using local binary patterns, and other deep learning techniques. Section III describes the datasets commonly used for skin cancer classification, and the final section summarizes the study's findings in a conclusion.

II. LITERATURE REVIEW

The local binary pattern (LBP) is a key feature extraction method for identifying skin cancer, especially melanoma. Its ability to extract texture information from images efficiently, a crucial factor in recognizing malignant lesions, makes it a significant tool in skin cancer detection. LBP extracts discriminative features from skin images: the differences in texture between malignant and benign lesions give rise to the properties that separate the two classes. It offers a simple yet powerful way of describing the local spatial patterns and contrast of an image, which are crucial features of skin lesions and complement lesion criteria such as asymmetry, border irregularity, color variation, and diameter. LBP can therefore support early detection, which matters for therapeutic outcomes.
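To make the texture description concrete, the sketch below computes the basic 8-neighbour, radius-1 LBP code of every interior pixel and the normalized 256-bin histogram that is typically used as the feature vector. It is a minimal NumPy illustration under stated assumptions: it uses the original LBP definition (each neighbour greater than or equal to the centre sets one bit), not the rotation-invariant, uniform, CS-LBP, or DRLBP variants used by some of the reviewed works, and the function names are hypothetical. Libraries such as scikit-image provide a ready-made `skimage.feature.local_binary_pattern`.

```python
import numpy as np

def lbp_codes(gray):
    """Basic LBP (8 neighbours, radius 1) for every interior pixel of a 2-D image."""
    g = np.asarray(gray, dtype=np.float64)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # Neighbour offsets (dy, dx), clockwise from top-left; neighbour k sets bit k.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        # Shifted view of the image aligned with the interior pixels.
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << bit
    return codes

def lbp_histogram(gray):
    """Normalised 256-bin histogram of LBP codes, used as a texture feature vector."""
    hist = np.bincount(lbp_codes(gray).ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

# Usage: a 3x3 patch whose corners are brighter than the centre.
patch = np.array([[9, 1, 9],
                  [1, 5, 1],
                  [9, 1, 9]])
print(lbp_codes(patch))  # [[85]]  (bits 0, 2, 4 and 6 are set)
```

In practice the histogram of a whole lesion image (or of a grid of lesion regions) is passed to a classifier or concatenated with other features.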

Table 1: Overview of the Reviewed Sources

| Reference | Model | Dataset | Accuracy (%) |
|---|---|---|---|
| [8] | AlexNet, VGG16, and ResNet18 | ISIC 2017 | 90.63 |
| [9] | AlexNet, VGG16, and ResNet18 | ISIC 2017 | 90.63 |
| [10] | ResNet50 | NIH SBIR and ISIC 2017 | 83 and 94 |
| [11] | VGG16, ResNet50, and DenseNet121 | HAM10000 | 79 |
| [12] | AlexNet, VGG16 | PH2 | 97.50 |
| [13] | CNN, ResNet50, InceptionV3, and Inception ResNet | ISIC 2018 | 83.2 |
| [14] | LBP, CNN, and CNN+LBP | HAM10000 | 96, 97, and 98.9 |
| [15] | PU-WOA and DCNN | Standard dataset | 99.87 (reported as 0.998737) |
| [16] | KNN and SVM | Standard dataset | 79.73 and 84.76 |
| [17] | GARCH and DRLBP | PH2 | 99.5 and 100 |
| [18] | CNN | Xiangya-Derm | AUC = 0.87 |
| [19] | AlexNet, VGG-16, VGG-19 | ISIC 2016, ISIC 2017, PH2, HAM10000 | 98.33 |
| [20] | Deep Residual Network | ISIC 2017, HAM10000 | 96.971 |
| [21] | Pre-trained ResNet50, Xception, InceptionV3, InceptionV2, VGG19, and MobileNet | HAM10000 | 90.48 (Xception) |
| [22] | Xception | HAM10000 | 100 |
| [23] | CNN, ResNet-50 | HAM10000 | 86 |
| [24] | DenseNet169 | HAM10000 | 92.25 |

A. Literature review on Detection of Skin Cancer Using CNN

A CNN is a deep structured learning technique for analyzing image data. Like other artificial neural networks, CNNs learn features from the training set through feedforward computation and backpropagation and use them to distinguish the classes of the test set [7]. A CNN typically has three types of layers: convolutional layers, pooling layers, and fully connected layers. CNNs are widely applied in image processing and recognition tasks, and, when enough data is available, they can be used to categorize historical texts, objects, and collections. CNNs are also used in speech-processing applications and in climate studies, for example to understand drastic climate changes and how they might be reduced.
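The forward pass through the three layer types can be sketched in plain NumPy. This is a hypothetical toy network with random, untrained weights: one 3x3 convolution with 8 filters, ReLU, 2x2 max pooling, and a fully connected layer with a softmax over 7 outputs (chosen to match the seven HAM10000 classes). The layer sizes are assumptions for illustration, not the architecture of any reviewed paper; real systems are built and trained with frameworks such as Keras or PyTorch, which also implement backpropagation.

```python
import numpy as np

def conv2d(x, kernels, bias):
    """'Valid' 2-D convolution (cross-correlation, as in most DL libraries).

    x: (H, W, C_in) image, kernels: (k, k, C_in, C_out), bias: (C_out,).
    """
    k = kernels.shape[0]
    h_out, w_out = x.shape[0] - k + 1, x.shape[1] - k + 1
    out = np.empty((h_out, w_out, kernels.shape[3]))
    for i in range(h_out):
        for j in range(w_out):
            patch = x[i:i + k, j:j + k, :]  # (k, k, C_in) receptive field
            out[i, j] = np.tensordot(patch, kernels, axes=([0, 1, 2], [0, 1, 2])) + bias
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool2x2(x):
    """2x2 max pooling with stride 2; an odd trailing row/column is dropped."""
    h, w, c = x.shape
    h2, w2 = h // 2, w // 2
    x = x[:h2 * 2, :w2 * 2, :]
    return x.reshape(h2, 2, w2, 2, c).max(axis=(1, 3))

def dense(x, weights, bias):
    """Fully connected layer applied to the flattened feature map."""
    return x.reshape(-1) @ weights + bias

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))                 # stand-in for a resized RGB lesion image
k1, b1 = rng.normal(0, 0.1, (3, 3, 3, 8)), np.zeros(8)
feat = max_pool2x2(relu(conv2d(image, k1, b1)))  # (30, 30, 8) -> (15, 15, 8)
w_fc, b_fc = rng.normal(0, 0.01, (feat.size, 7)), np.zeros(7)
probs = softmax(dense(feat, w_fc, b_fc))         # one probability per class
print(feat.shape, probs.shape)
```

Stacking more convolution and pooling stages before the fully connected layer gives the deeper architectures (VGG, ResNet, DenseNet) discussed below.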

Mahbod et al. [8] proposed a framework for multi-class classification of dermoscopic images of skin lesions. Pre-trained ResNet18, AlexNet, and VGG16 models are used to characterize the skin lesions in the dermoscopic images. The framework achieved a classification accuracy of 90.63% on the challenging images of the ISIC 2017 challenge.

Tschandl et al. [9] proposed a framework for multi-class classification of dermoscopic skin lesion images. This work uses the pre-trained deep neural networks ResNet18, AlexNet, and VGG16 to characterize the dermoscopic images of skin lesions, and the framework achieves a classification accuracy of 90.63% on the challenging images of the ISIC 2017 dataset.

Hagerty et al. [10] proposed a fusion of deep learning and handcrafted features to achieve high accuracy in melanoma detection from dermoscopic images. It uses two datasets: the NIH SBIR dataset and the ISIC 2018 dataset. A handcrafted image analysis pipeline extracts clinically significant melanoma features. When the ResNet50 model is applied to the ISIC 2018 dataset, the AUC of the fused system with handcrafted (HC) features is 0.93, and without the HC features it is 0.94.

Rahi et al. [11] proposed a CNN built with the Keras Sequential API and compared their results with pre-trained models such as VGG16, ResNet50, and DenseNet121. The dataset, HAM10000, was taken from the ISIC archive and contains 10,015 images. The CNN model has six layers, four convolutional layers combined with two max-pooling layers, followed by a fully connected output layer for prediction. The proposed model achieves an accuracy of 79%. The same dataset was also used with ResNet50, DenseNet121, and VGG16, and DenseNet121 achieved an accuracy of 90%.

Gulati et al. [12] used the pre-trained networks AlexNet and VGG16 in two different ways: in a transfer learning paradigm and as feature extractors. The work uses the PH2 dataset, which has 200 images, of which 160 belong to the benign class and 40 to the malignant class. Because these networks require inputs of different sizes, the images are preprocessed accordingly. Of the two networks, VGG16 gave the best results, with 97.5% accuracy and 96.87% specificity.
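Because PH2 is imbalanced (160 benign versus 40 malignant images), accuracy alone can be misleading, which is why specificity and sensitivity are reported alongside it. The sketch below is a minimal illustration, with hypothetical function names and malignant taken as the positive class: it computes accuracy, sensitivity (TP / (TP + FN)), and specificity (TN / (TN + FP)), and shows that a trivial model that labels every PH2 image benign already reaches 80% accuracy while detecting no melanoma.

```python
import numpy as np

def binary_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, sensitivity, specificity) for binary labels."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = np.sum((y_true == positive) & (y_pred == positive))
    tn = np.sum((y_true != positive) & (y_pred != positive))
    fp = np.sum((y_true != positive) & (y_pred == positive))
    fn = np.sum((y_true == positive) & (y_pred != positive))
    accuracy = (tp + tn) / len(y_true)
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0  # recall on malignant lesions
    specificity = tn / (tn + fp) if (tn + fp) else 0.0  # recall on benign lesions
    return float(accuracy), float(sensitivity), float(specificity)

# PH2 class balance: 160 benign (0) and 40 malignant (1) images.
y_true = np.array([0] * 160 + [1] * 40)
y_all_benign = np.zeros_like(y_true)  # a model that never predicts melanoma
print(binary_metrics(y_true, y_all_benign))  # (0.8, 0.0, 1.0)
```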

Walaa et al. [13] used a CNN and the pre-trained networks ResNet50, InceptionV3, and Inception ResNet; the innovation and contribution of this study is the use of ESRGAN (Enhanced Super-Resolution Generative Adversarial Network) as a preprocessing step. The developed model and the pre-trained models performed similarly. Simulations on the ISIC 2018 skin lesion dataset demonstrated the effectiveness of the proposed method: the CNN obtained an accuracy of 83.2%, whereas ResNet50 (83.7%), InceptionV3 (85.8%), and Inception ResNet (84%) yielded slightly higher results.

B. Literature review on Feature Selection Using Local Binary Pattern

L. Riaz et al. [14] proposed a joint learning system that extracts features with a Convolutional Neural Network (CNN) and with Local Binary Patterns (LBP) and then concatenates all the extracted features. The results demonstrate the robustness of the fusion architecture, with an accuracy of 98.60% and a validation accuracy of 97.32%.
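Feature-level fusion of the kind described for [14] amounts to concatenating the two descriptors of each image before classification. The sketch below is a minimal illustration under assumptions not taken from [14]: a 512-dimensional CNN embedding and a 256-bin LBP histogram (both random placeholders here), each L2-normalized before concatenation so that neither descriptor dominates merely because of its scale. The function names are hypothetical.

```python
import numpy as np

def l2_normalize(v, eps=1e-12):
    """Scale a vector to unit Euclidean length (eps avoids division by zero)."""
    v = np.asarray(v, dtype=np.float64)
    return v / (np.linalg.norm(v) + eps)

def fuse_features(cnn_features, lbp_hist):
    """Concatenate the normalised deep and LBP descriptors into one feature vector."""
    return np.concatenate([l2_normalize(cnn_features), l2_normalize(lbp_hist)])

rng = np.random.default_rng(0)
cnn_features = rng.random(512)           # placeholder for a CNN embedding of one image
lbp_hist = rng.dirichlet(np.ones(256))   # placeholder for a normalised LBP histogram
fused = fuse_features(cnn_features, lbp_hist)
print(fused.shape)  # (768,)
```

The fused vector would then be fed to the final classifier (for example a dense softmax layer or an SVM) in place of either descriptor alone.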

B. Krishna et al. [15] propose a new skin cancer recognition scheme with five steps: pre-processing, segmentation, feature extraction, optimal feature selection, and classification. The pre-processed images are segmented with the Otsu thresholding model. The third step is feature extraction, in which Deviation Relevance-based Local Binary Pattern (DRLBP), Gray-Level Co-Occurrence Matrix (GLCM), and Gray Level Run-Length Matrix (GLRM) features are produced. From these extracted features, the optimal features are selected with the Particle Updated WOA (PU-WOA) model. Finally, an optimized DCNN and an NN classify the skin lesions; for deeper classification, the DCNN is improved by the introduced algorithm. The scheme achieves its highest accuracy of 0.998737 (about 99.87%).
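Otsu thresholding, the segmentation step in [15], chooses the grey level t that maximizes the between-class variance σ_B²(t) = [μ_T·ω(t) − μ(t)]² / (ω(t)·[1 − ω(t)]), where ω(t) and μ(t) are the cumulative probability and cumulative mean of the grey-level histogram up to level t, and μ_T is the global mean. The NumPy sketch below assumes an 8-bit greyscale image and a lesion darker than the surrounding skin (so the mask keeps pixels at or below the threshold); the function name is hypothetical, and scikit-image's `skimage.filters.threshold_otsu` offers an equivalent library routine.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the 8-bit level t that maximises Otsu's between-class variance."""
    g = np.asarray(gray, dtype=np.uint8).ravel()
    p = np.bincount(g, minlength=256).astype(np.float64)
    p /= p.sum()                              # grey-level probabilities
    levels = np.arange(256)
    omega = np.cumsum(p)                      # probability of class 0 (levels <= t)
    mu = np.cumsum(p * levels)                # cumulative mean up to t
    mu_total = mu[-1]                         # global mean
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_total * omega - mu) ** 2 / denom
    sigma_b2[denom == 0] = 0.0                # one class empty: no separation
    return int(np.argmax(sigma_b2))

# Synthetic example: bright "skin" with a dark square "lesion".
img = np.full((64, 64), 200, dtype=np.uint8)
img[20:44, 20:44] = 60
t = otsu_threshold(img)
lesion_mask = img <= t        # assumes the lesion is darker than the skin
print(t, lesion_mask.sum())   # 60 576
```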

Mahagaonkar et al. [16] propose a machine learning framework that detects skin abnormalities in dermoscopy images and classifies them as melanoma more effectively. The technique relies on extracting effective texture features, namely center-symmetric LBP (CS-LBP) and Haralick GLCM features. Three hundred images from dermquest.com, the standard dataset, are used in the experiments. The accuracy of the approach is 79.7315% with KNN and 84.7615% with SVM.

Thamizhamuthu et al. [17] present a non-invasive and effective Skin Melanoma Classification (SMC) method for dermoscopic images. The SMC system comprises four modules: segmentation, feature extraction, feature reduction, and classification. In the first module, K-means clustering is applied to the color information of the dermoscopic images. The second module extracts meaningful and useful descriptors based on the statistics of local properties and on spatial patterns, using the Dominant Rotated Local Binary Pattern (DRLBP) and the parameters of a Generalized Autoregressive Conditional Heteroscedasticity (GARCH) model of the wavelet sub-bands.
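The color clustering in the first SMC module of [17] can be illustrated with a plain Lloyd's k-means over RGB pixel values. In this hypothetical sketch, the number of clusters, the random initialization, the iteration limit, and the rule that the darkest cluster is the lesion are all assumptions rather than details from [17]; in practice a library implementation such as scikit-learn's `KMeans` would normally be used.

```python
import numpy as np

def kmeans_colors(image, k=3, iters=20, seed=0):
    """Cluster the RGB pixels of an (H, W, 3) image with Lloyd's k-means.

    Returns (labels of shape (H, W), centres of shape (k, 3)).
    """
    img = np.asarray(image, dtype=np.float64)
    pixels = img.reshape(-1, img.shape[-1])
    rng = np.random.default_rng(seed)
    centres = pixels[rng.choice(len(pixels), size=k, replace=False)]
    for _ in range(iters):
        # Assign every pixel to its nearest centre (squared Euclidean distance).
        d = ((pixels[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
        labels = d.argmin(axis=1)
        # Move each centre to the mean of its pixels; keep it if the cluster is empty.
        new_centres = np.array([
            pixels[labels == j].mean(axis=0) if np.any(labels == j) else centres[j]
            for j in range(k)
        ])
        if np.allclose(new_centres, centres):
            break
        centres = new_centres
    return labels.reshape(img.shape[:2]), centres

# Synthetic example: uniform skin colour with a dark 4x4 lesion.
img = np.empty((10, 10, 3))
img[:] = [220, 180, 160]
img[3:7, 3:7] = [90, 50, 40]
labels, centres = kmeans_colors(img, k=2)
lesion_mask = labels == centres.sum(axis=1).argmin()  # darkest cluster = lesion
print(int(lesion_mask.sum()))  # 16
```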

The third module reduces the features with a t-test, and the final module uses deep learning for classification. Evaluated individually, for the first stage (normal/abnormal), the GARCH parameters of the third-level DWT sub-bands yield 92.50% accuracy, compared with 88% for the DRLBP features and 77.5% for the local properties. For the second stage (benign/malignant), the accuracies are 90.8% (DRLBP), 80.8% (local properties), and 95.83% (GARCH). When 2% of the features from the combined set are selected, the first and second stages of the SMC method yield 99.5% and 100% accuracy, respectively. The best results are obtained with the two-stage deep learning classifier on images from the PH2 database, which reaches 100% accuracy in identifying melanoma cases.
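The t-test feature reduction in [17], which keeps about 2% of the combined features, can be sketched as ranking each feature by the magnitude of a two-sample t-statistic between the classes and keeping the top fraction. The Welch (unequal-variance) form, the ranking rule, and the function names below are assumptions for illustration; `scipy.stats.ttest_ind(..., equal_var=False)` computes the same statistic.

```python
import numpy as np

def welch_t_scores(X, y):
    """|Welch t-statistic| of every feature column for binary labels y in {0, 1}."""
    X = np.asarray(X, dtype=np.float64)
    y = np.asarray(y)
    a, b = X[y == 0], X[y == 1]
    mean_diff = a.mean(axis=0) - b.mean(axis=0)
    se = np.sqrt(a.var(axis=0, ddof=1) / len(a) + b.var(axis=0, ddof=1) / len(b))
    return np.abs(mean_diff) / np.maximum(se, 1e-12)

def select_top_fraction(X, y, fraction=0.02):
    """Indices of the highest-scoring features (always at least one)."""
    scores = welch_t_scores(X, y)
    n_keep = max(1, int(round(fraction * X.shape[1])))
    return np.argsort(scores)[::-1][:n_keep]

# Synthetic data: 200 images x 100 features, only features 3 and 7 informative.
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 100))
y = np.repeat([0, 1], 100)
X[y == 1, 3] += 3.0
X[y == 1, 7] -= 3.0
keep = select_top_fraction(X, y, fraction=0.02)
print(sorted(keep.tolist()))  # [3, 7]
```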

C. Deep Learning Techniques for Skin Cancer Detection

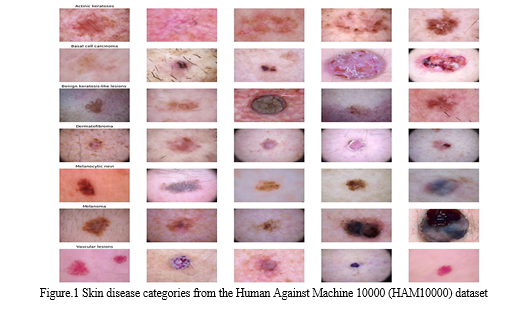

This section reviews recently published papers on deep learning algorithms for skin cancer detection. Deep neural networks greatly aid skin cancer diagnosis. They consist of sets of connected nodes whose interconnectivity loosely resembles that of neurons in the human brain, and the nodes collaborate to solve specific problems. Once trained on a particular task, a neural network can perform well in the domain for which it was trained. In the reviewed studies, neural networks were built to distinguish between several types of skin cancer. Different types of skin lesions from the Human Against Machine with 10000 training images (HAM10000) dataset are shown in Figure 1. Learning techniques for skin cancer detection, such as CNNs, transfer learning, and deep residual networks, and related research on deep neural networks are discussed in detail in this section.

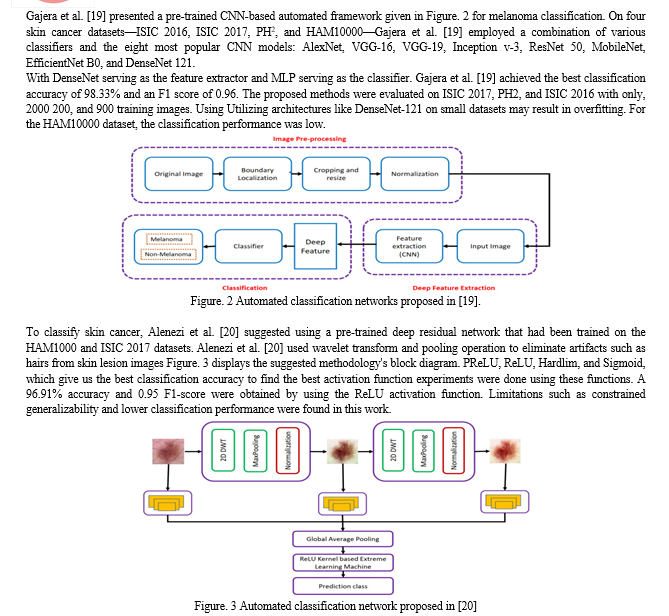

Inthiyaz et al. [18] proposed a deep-learning-based automated system for classifying skin diseases, trained on the Xiangya-Derm dataset of 150,223 images. They used a pre-trained convolutional neural network (CNN) to classify skin lesion images into four categories (melanoma, eczema, psoriasis, and healthy skin) and attained an AUC of 0.875. However, the work was tested on a very small dataset, the AUC can still be improved, and the deep ResNet-50 architecture it uses increases the computational cost.
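The AUC reported by [18] is the area under the ROC curve, which equals the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one. The sketch below computes it in that Mann-Whitney form and, since [18] has four classes, adds a one-vs-rest macro average; how [18] actually averaged over classes is not stated here, so the multi-class variant and the function names are assumptions. scikit-learn's `roc_auc_score` is the usual library routine.

```python
import numpy as np

def roc_auc(y_true, scores):
    """Binary AUC: P(score of a random positive > score of a random negative).

    Ties count as one half. Assumes both classes are present.
    """
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=np.float64)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))

def macro_ovr_auc(y_true, prob):
    """Average of one-vs-rest AUCs; prob has shape (n_samples, n_classes)."""
    y_true = np.asarray(y_true)
    prob = np.asarray(prob, dtype=np.float64)
    return float(np.mean([roc_auc((y_true == c).astype(int), prob[:, c])
                          for c in range(prob.shape[1])]))

print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```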

Lu and Firoozeh Abolhasani Zadeh [22] proposed an improved Xception network based on the swish activation function for skin cancer classification. The model, trained on HAM10000, obtained an accuracy of 100% and an F1-score of 0.955. The proposed model also outperformed VGG16, InceptionV3, AlexNet, and the original Xception.

Alwakid et al. [23] proposed using ESRGAN and segmentation as pre-processing steps to improve classification performance on skin lesion datasets: ESRGAN was used to enhance image quality, and segmentation was used to extract the region of interest (ROI) from the skin lesion images. Synthetic skin lesion images generated by ESRGAN were used for data augmentation. With the trained CNN, the suggested method produced an F1-score of 0.859, while the ResNet-50 model produced an F1-score of 0.852.
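The swish activation used in the improved Xception network of [22] is swish(x) = x · σ(βx), where σ is the logistic sigmoid; with β = 1 it is also known as SiLU. Unlike ReLU, it is smooth and lets small negative values through. The NumPy sketch below only compares the two activations element-wise; how [22] places swish inside Xception is not described here, and replacing the ReLU layers with it is an assumption.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid written via tanh, which avoids overflow for large |x|."""
    return 0.5 * (1.0 + np.tanh(0.5 * x))

def swish(x, beta=1.0):
    """Swish activation x * sigmoid(beta * x); beta = 1 gives SiLU."""
    x = np.asarray(x, dtype=np.float64)
    return x * sigmoid(beta * x)

def relu(x):
    return np.maximum(np.asarray(x, dtype=np.float64), 0.0)

xs = np.array([-4.0, -1.0, 0.0, 1.0, 4.0])
print(np.round(swish(xs), 4))  # small negative outputs survive for x < 0
print(relu(xs))                # ReLU zeroes every negative input
```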

Kousis et al. [24] trained eleven popular CNN architectures on the HAM10000 dataset to classify skin cancers into seven categories: actinic keratoses (akiec), basal cell carcinoma (bcc), benign keratosis-like lesions (bkl), dermatofibroma (df), melanoma (mel), melanocytic nevi (nv), and vascular lesions (vasc). Among the eleven CNN configurations, DenseNet169 produced the best results, with an accuracy of 92.25% and an F1-score of 0.932.
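The F1-score reported by [24] combines precision and recall; for one class, F1 = 2TP / (2TP + FP + FN). On an imbalanced seven-class set such as HAM10000, the averaging matters. The hypothetical sketch below builds a confusion matrix, computes per-class F1, and shows that a trivial model predicting melanocytic nevi (nv) for every image scores about 67% accuracy but a low macro-averaged F1. The class counts are those of HAM10000 [25]; which averaging [24] used is not stated here.

```python
import numpy as np

CLASSES = ["akiec", "bcc", "bkl", "df", "mel", "nv", "vasc"]
COUNTS = [327, 514, 1099, 115, 1113, 6705, 142]  # HAM10000 images per class

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def per_class_f1(cm):
    """F1 = 2TP / (2TP + FP + FN) for each class; 0 when the denominator is 0."""
    tp = np.diag(cm).astype(np.float64)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    denom = 2 * tp + fp + fn
    return np.divide(2 * tp, denom, out=np.zeros_like(tp), where=denom > 0)

y_true = np.repeat(np.arange(len(CLASSES)), COUNTS)
y_pred = np.full_like(y_true, CLASSES.index("nv"))  # always predict "nv"
cm = confusion_matrix(y_true, y_pred, len(CLASSES))
f1 = per_class_f1(cm)
accuracy = np.trace(cm) / cm.sum()
macro_f1 = f1.mean()                                 # every class counts equally
weighted_f1 = np.average(f1, weights=cm.sum(axis=1))  # weighted by class support
print(f"accuracy={accuracy:.3f} macro-F1={macro_f1:.3f} weighted-F1={weighted_f1:.3f}")
```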

III. DATASETS

- HAM10000: The dataset consists of 10,015 dermoscopic images and is publicly available through the ISIC archive. HAM10000 contains 327 images of actinic keratoses (AK), 1,099 images of benign keratoses, 115 images of dermatofibromas, 514 images of basal cell carcinomas, 1,113 images of melanomas, 6,705 images of melanocytic nevi, and 142 images of vascular skin lesions [25].

- PH2: This database comprises 200 dermoscopic images. It includes manual segmentations, clinical diagnoses, and the identification of several dermoscopic structures, all carried out by expert dermatologists [26].

- ISIC 2016: It comprises 900 images of benign nevi and malignant melanomas. Melanomas account for 30.3% of the images, and the remaining images belong to the benign nevi class [27].

- ISIC 2017: This dataset contains 2,000 training images of melanomas, seborrheic keratoses (SK), and benign nevi. The validation and test sets together contain 750 images of melanoma, SK, and benign nevi, with 117 melanoma images in the test set [27].

- ISIC 2018: The ISIC 2018 dataset contains over 12,500 training images, 100 validation images, and 1000 test images [25,28].

- ISIC 2019: The ISIC 2019 collection includes 25,331 images representing eight different types of skin lesions. It also contains image metadata, such as the patient's sex, age, and lesion location [25], [27].

- ISIC 2020: The dataset includes 33,126 dermoscopic training images of distinct benign and malignant skin lesions from more than 2000 people [29].

- XiangyaDerm: This dataset has 47,075 images covering 541 skin conditions, accounting for nearly 99% of the prevalence of skin conditions [30].
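The class imbalance in these datasets is substantial: in HAM10000, melanocytic nevi make up about two thirds of the images, while there are only 115 dermatofibromas. One common remedy, sketched below as an assumption rather than a method from the reviewed papers, is to weight the training loss by w_c = N / (K · n_c), where N is the number of images, K the number of classes, and n_c the count of class c; this is the same "balanced" heuristic used by scikit-learn's `compute_class_weight`. The per-class counts are those reported for HAM10000 [25], and the names are hypothetical.

```python
# HAM10000 images per class (Tschandl et al., 2018).
HAM10000_COUNTS = {"akiec": 327, "bcc": 514, "bkl": 1099, "df": 115,
                   "mel": 1113, "nv": 6705, "vasc": 142}

def balanced_class_weights(counts):
    """w_c = N / (K * n_c): rarer classes receive proportionally larger weights."""
    total = sum(counts.values())
    k = len(counts)
    return {c: total / (k * n) for c, n in counts.items()}

weights = balanced_class_weights(HAM10000_COUNTS)
for name, w in sorted(weights.items(), key=lambda kv: -kv[1]):
    print(f"{name:6s} {w:6.2f}")
```

With these weights, the weighted number of samples per class is identical (N / K), so each class contributes equally to the loss in expectation.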

Conclusion

Skin cancer is a common form of cancer worldwide, and it can spread very quickly; early detection improves the chances of a cure. This review gives a structured overview of deep learning techniques and of feature selection using local binary patterns for skin cancer detection and classification, and it examines various deep learning architectures for the diagnosis of skin cancer. Deep learning approaches, including feature extraction models, CNN models, and pre-trained models, yield superior overall results, in part because they reduce the need for handcrafted preprocessing stages. Among the reviewed algorithms, AlexNet, VGG16, and XceptionNet provided the best results, on the ISIC 2017, PH2, and HAM10000 datasets. Besides comparing the effectiveness of several deep learning techniques, this review also gives an overview of the standard datasets used in the experiments.

References

[1] American Cancer Society. Cancer Facts & Figures 2023; Skin cancer statistics, World Cancer Research Fund International (wcrf.org).

[2] C. A. Hartanto and A. Wibowo, “Development of mobile skin cancer detection using faster R-CNN and MobileNet v2 model,” in Proc. 7th Int. Conf. Inf. Technol., Comput., Electr. Eng. (ICITACEE), Sep. 2020, pp. 58–63.

[3] Smartphone-Based Applications for Skin Monitoring and Melanoma Detection, ScienceDirect. Lv, E., Liu, W., Wen, P., & Kang, X. (2021). Classification of benign and malignant lung nodules based on deep convolutional network feature extraction. Journal of Healthcare Engineering, 2021, 1–11.

[4] Rashid, H.; Tanveer, M.A.; Aqeel Khan, H. Skin Lesion Classification Using GAN Based Data Augmentation. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 916–919.

[5] Bisla, D.; Choromanska, A.; Stein, J.A.; Polsky, D.; Berman, R. Towards Automated Melanoma Detection with Deep Learning: Data Purification and Augmentation. arXiv 2019, arXiv:1902.06061. Available online: http://arxiv.org/abs/1902.06061 (accessed on 10 February 2021).

[6] Huang G., Liu Z., van der Maaten L., Weinberger K.Q. Densely Connected Convolutional Networks; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Honolulu, HI, USA. 21–26 July 2017.

[7] MdMI Rahi, Khan FT, Mahtab MT, et al. Detection of Skin Cancer Using Deep Neural Networks. Proceedings of IEEE Asia-Pacific conference on computer science and data engineering (CSDE). IEEE; 2019. doi: https://doi.org/10.1109/CSDE48274.2019.9162400

[8] A. Mahbod, G. Schaefer, C. Wang, R. Ecker, and I. Ellinger, “Skin lesion classification using hybrid deep neural networks,” in Proc. IEEE Int. Conf. Acoust., Speech Signal Process. (ICASSP), May 2019, pp. 1229–1233.

[9] P. Tschandl, C. Rosendahl, and H. Kittler, “The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions,” Scientific Data, vol. 5, no. 1, pp. 1–9, Aug. 2018.

[10] J. R. Hagerty, R. J. Stanley, H. A. Almubarak, N. Lama, R. Kasmi, P. Guo, R. J. Drugge, H. S. Rabinovitz, M. Oliviero, and W. V. Stoecker, “Deep learning and handcrafted method fusion: Higher diagnostic accuracy for melanoma dermoscopy images,” IEEE

[11] MdMI Rahi, Khan FT, Mahtab MT, et al. Detection of Skin Cancer Using Deep Neural Networks. Proceedings of IEEE Asia-Pacific conference on computer science and data engineering (CSDE). IEEE; 2019. doi: https://doi.org/10.1109/CSDE48274.2019.9162400

[12] Gulati S, Bhogal RK. Detection of Malignant Melanoma Using Deep Learning. Proceedings of international conference on advances in computing and data sciences. Springer; 2019. p. 312–325. doi: https://doi.org/10.1007/978-981-13-9939-8_28

[13] Walaa G, Najam us Saba. Detection of Skin Cancer Based on Skin Lesion Images Using Deep Learning. Healthcare 2022, 10(7). https://doi.org/10.3390/healthcare10071183

[14] L. Riaz et al., “A Comprehensive Joint Learning System to Detect Skin Cancer,” in IEEE Access, vol. 11, pp. 79434–79444, 2023, doi: 10.1109/ACCESS.2023.3297644.

[15] International Journal of Image and Graphics (worldscientific.com)

[16] Mahagaonkar, Rohini S., and Shridevi Soma. “A novel texture-based skin melanoma detection using color GLCM and CS-LBP feature.” Int. J. Comput. Appl 171.5 (2017): 1–5.

[17] Thamizhamuthu, R. and D. Manjula. “Skin Melanoma Classification System Using Deep Learning.” Computers, Materials & Continua (2021): n. pag.

[18] Inthiyaz S., Altahan B.R., Ahammad S.H., Rajesh V., Kalangi R.R., Smirani L.K., Hossain M.A., Rashed A.N.Z. Skin disease detection using deep learning. Adv. Eng. Softw. 2023;175:103361. doi: 10.1016/j.advengsoft.2022.103361.

[19] Gajera H.K., Nayak D.R., Zaveri M.A. A comprehensive analysis of dermoscopy images for melanoma detection via deep CNN features. Biomed. Signal Process. Control. 2023;79:104186. doi: 10.1016/j.bspc.2022.104186.

[20] Alenezi F., Armghan A., Polat K. Wavelet transform based deep residual neural network and ReLU based Extreme Learning Machine for skin lesion classification. Expert Syst. Appl. 2023;213:119064. doi: 10.1016/j.eswa.2022.119064.

[21] Jain S., Singhania U., Tripathy B., Nasr E.A., Aboudaif M.K., Kamrani A.K. Deep learning-based transfer learning for classification of skin cancer. Sensors. 2021;21:8142. doi: 10.3390/s21238142.

[22] Lu X., Firoozeh Abolhasani Zadeh Y. Deep learning-based classification for melanoma detection using XceptionNet. J. Healthc. Eng. 2022;2022:2196096. doi: 10.1155/2022/2196096.

[23] Alwakid G., Gouda W., Humayun M., Sama N.U. Melanoma Detection Using Deep Learning-Based Classifications. Healthcare. 2022;10:2481. doi: 10.3390/healthcare10122481.

[24] Kousis I., Perikos I., Hatzilygeroudis I., Virvou M. Deep Learning Methods for Accurate Skin Cancer Recognition and Mobile Application. Electronics. 2022;11:1294. doi: 10.3390/electronics11091294.

[25] Tschandl P., Rosendahl C., Kittler H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data. 2018;5:1–9. doi: 10.1038/sdata.2018.161.

[26] Mendonça T., Ferreira P.M., Marques J.S., Marcal A.R., Rozeira J. PH2-A dermoscopic image database for research and benchmarking; Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Osaka, Japan. 3–7 July 2013; pp. 5437–5440.

[27] Codella N.C., Gutman D., Celebi M.E., Helba B., Marchetti M.A., Dusza S.W., Kalloo A., Liopyris K., Mishra N., Kittler H., et al. Skin lesion analysis toward melanoma detection: A challenge at the 2017 international symposium on biomedical imaging (isbi), hosted by the international skin imaging collaboration (isic); Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018); Washington, DC, USA. 4–7 April 2018; pp. 168–172.

[28] Codella N., Rotemberg V., Tschandl P., Celebi M.E., Dusza S., Gutman D., Helba B., Kalloo A., Liopyris K., Marchetti M., et al. Skin lesion analysis toward melanoma detection 2018: A challenge hosted by the international skin imaging collaboration (isic). arXiv 2019, arXiv:1902.03368.

[29] Rotemberg V., Kurtansky N., Betz-Stablein B., Caffery L., Chousakos E., Codella N., Combalia M., Dusza S., Guitera P., Gutman D., et al. A patient-centric dataset of images and metadata for identifying melanomas using clinical context. Sci. Data. 2021;8:34. doi: 10.1038/s41597-021-00815-z.

[30] LABELS2019_XiangyaDerm.pdf (csu.edu.cn)

Copyright

Copyright © 2024 Khatija Unnisa, K. Prasanna, Bhageshwari Ratkal. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET63019

Publish Date : 2024-05-31

ISSN : 2321-9653

Publisher Name : IJRASET

