Ijraset Journal For Research in Applied Science and Engineering Technology

Advanced Version of Voice for Deaf and Dumb Persons

Authors: M. Navaneetha Krishnan, Keerthana. S, Nivetha. S, Gowri Priya. R

DOI Link: https://doi.org/10.22214/ijraset.2023.51192

Abstract

Deaf and mute individuals can communicate naturally with one another using sign language, but people who do not understand sign language frequently struggle to communicate with them. The major goal of this project is to narrow the communication gap between deaf-mute persons and other people. To lower this barrier, the proposed device converts hand gestures into speech that a layperson can understand. The device consists of gloves, an accelerometer, flex sensors, a microprocessor, a voice module, and a 16x16 LCD display. The voice module translates each gesture into real-time speech output, and the display shows the corresponding text. The device therefore offers both deaf-mute and hearing people an effective means of communication.

Introduction

I. INTRODUCTION

Language is a structured, conventional system of words used in spoken and written human communication. Communicating thoughts and viewpoints is significantly harder when the two people involved come from different regions and speak different languages, so a third party, such as a translator, is often needed. A similar situation arises when a hearing person communicates with a person who has difficulty hearing and speaking.

Hearing loss is a partial or complete inability to hear. It can be present at birth or develop later in life, and it may affect one or both ears.

In children, hearing loss can impair the ability to learn spoken language; in adults, it can create difficulties with social interaction and at work. Hearing loss can be temporary or permanent. Age-related hearing loss usually affects both ears because cochlear hair cells are lost over time. Some people with hearing loss, particularly older people, may experience loneliness. Deaf persons typically have very little or no hearing.

The causes of hearing loss include genetics, ageing, noise exposure, some infections, birth complications, ear trauma, and certain medications or toxins. Chronic ear infections are a common cause. Infections during pregnancy, such as rubella, syphilis, and cytomegalovirus (CMV), can also cause hearing loss in the foetus. Hearing loss is diagnosed when a hearing test finds that a person cannot hear sounds of 25 dB in at least one ear. Hearing testing is advised for every newborn. Hearing loss is graded in five levels: mild (25 to 40 dB), moderate (41 to 55 dB), moderate-severe (56 to 70 dB), severe (71 to 90 dB), and profound (greater than 90 dB).

Deaf and mute people depend primarily on sign language to exchange information with those around them. Sign language uses hand gestures, hand movements, and facial expressions to communicate. These signs are difficult for the general public to understand, which makes it hard for deaf and mute people to convey their thoughts and ideas to others (Garg et al., 2009). Information technology researchers have therefore been looking for alternative solutions that give these people more confidence when communicating with others.

Conductive hearing loss, sensorineural hearing loss, and mixed hearing loss are the three basic categories of hearing loss.

As of 2013, around 1.1 billion individuals worldwide had some degree of hearing loss. It causes disability in roughly 466 million individuals (5% of the global population) and moderate to severe disability in 124 million people, of whom 108 million live in low- and middle-income nations. For 65 million people with hearing loss, it began in childhood. Members of the Deaf culture who use sign language may consider themselves different rather than disabled. Several members of the Deaf community oppose efforts to cure deafness, and some are concerned that cochlear implants could lead to the eradication of their culture. The terms "hearing impairment" and "hearing loss" are often viewed negatively, although they are still frequently used when discussing deafness in medical contexts.

We implemented a dedicated application to address this issue. The interpreter in our application model works in both directions: it translates a mute person's gestures into synthesized English words that match the meaning of the sign and plays them as audio output for hearing people, and it takes English sentences as text input for deaf people. By closing the communication gap between the hearing and the deaf-mute communities, it benefits both.

II. EXISTING METHOD

The existing method consists of flex sensors, an accelerometer, a speaker, and an Arduino module. The flex sensors produce an analog output, so they are connected directly to the analog ports of the Arduino microcontroller. The Arduino Nano has an integrated ADC (analog-to-digital converter) and runs from an external power source. Once the program is running, it compares the readings from the flex sensors, which can be fully customized for basic everyday use, and sends the data through a Bluetooth transmitter.

Flex sensors change their resistance according to the degree of bend or flex. They consist of a flexible strip with conductive material on either side; as the strip is bent, the distance between the conductive layers changes, which changes the resistance of the sensor.

Arduino is an open-source electronics platform built on easy-to-use hardware and software. It comprises a board with a programmable microcontroller, a programming environment, and a sizable community of users and developers.

An accelerometer measures acceleration, the rate of change of velocity, along three axes. It can pick up vibrations, movement, and changes in orientation. The motion of a gesture is picked up by the flex sensors and the accelerometer, and the cell phone then plays the corresponding sound and displays the corresponding text.
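The comparison of flex-sensor readings described in this section can be illustrated with a nearest-template lookup. The following is a minimal Python sketch, not the authors' firmware: the gesture names, the stored values, and the `max_distance` cutoff are hypothetical, and each reading is assumed to be a 10-bit ADC count (0 to 1023) from one of five finger sensors.

```python
from typing import Optional, Sequence

# Hypothetical templates: one 10-bit ADC count per finger (thumb to little finger).
GESTURE_TEMPLATES = {
    "HELLO": (300, 310, 305, 300, 295),
    "WATER": (700, 320, 310, 690, 700),
    "HELP": (710, 705, 700, 695, 690),
}


def match_gesture(reading: Sequence[int], max_distance: int = 150) -> Optional[str]:
    """Return the name of the closest template, or None if nothing is close.

    Distance is the sum of absolute per-finger differences. max_distance is
    an assumed rejection threshold, not a value taken from the paper.
    """
    best_name, best_dist = None, None
    for name, template in GESTURE_TEMPLATES.items():
        dist = sum(abs(r - t) for r, t in zip(reading, template))
        if best_dist is None or dist < best_dist:
            best_name, best_dist = name, dist
    if best_dist is not None and best_dist <= max_distance:
        return best_name
    return None
```

Rejecting readings that are far from every template keeps the device silent during transitions between gestures instead of announcing the wrong phrase.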

The main objective of studying gesture recognition is to develop a system that can recognise human gestures and make use of them for command and control or informational purposes. Wearable glove-based sensors can be used to record hand motion and location. Additionally, they can immediately provide the exact coordinates of the palm and finger placements, orientations, and configurations thanks to sensors built into the gloves.

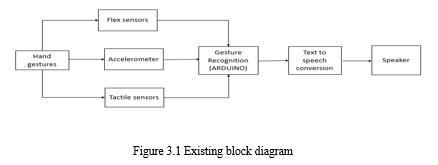

III. EXISTING BLOCK DIAGRAM

A. Flex Sensors

The amount of deflection or bending is measured using a sensor known as a flex sensor or bend sensor. In most cases, the sensor is adhered to the surface, and by bending the surface, the resistance of the sensor element may be changed. Resistance comes in three different grades:

LOW resistance

MID resistance

HIGH resistance.

The sensor must be chosen to suit the application. In essence, a flex sensor is a variable resistor whose resistance changes in response to bending. It is commonly referred to as a flexible potentiometer because its resistance varies with the degree of bending. When the sensor is wired into a voltage divider, this change in resistance appears as a change in voltage.
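One common way to read a variable resistor such as a flex sensor is a voltage divider feeding an ADC input. The sketch below, in Python for illustration, recovers the sensor resistance from an ADC count. It assumes a wiring that the paper does not specify: the flex sensor sits between the supply and the analog pin, a fixed 10 kΩ resistor sits between the pin and ground, and the ADC is 10-bit (full scale 1023).

```python
def flex_resistance_ohms(
    adc_count: int, r_fixed_ohms: float = 10_000.0, adc_max: int = 1023
) -> float:
    """Estimate the flex-sensor resistance from a voltage-divider reading.

    Assumed circuit: Vcc -> flex sensor -> analog pin -> fixed resistor -> GND,
    so Vout / Vcc = r_fixed / (r_flex + r_fixed). Solving for r_flex gives
    r_flex = r_fixed * (Vcc / Vout - 1), with Vcc / Vout = adc_max / adc_count.
    """
    if not 0 < adc_count <= adc_max:
        raise ValueError("adc_count must be in the range 1..adc_max")
    return r_fixed_ohms * (adc_max / adc_count - 1.0)
```

Because only the ratio of the ADC count to full scale enters the formula, the result does not depend on the exact supply voltage.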

B. Accelerometer

An accelerometer senses the motion and vibration of the body it is attached to, and its readings can be used to determine the body's orientation. In a speaking device, an accelerometer can serve a variety of purposes, depending on the specific device and application. One possible use is motion detection: for example, the device may be programmed to start recording audio when it detects that it is being lifted or moved. This is useful when the user needs to record a conversation or lecture without pressing a button to start the recording.
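As an illustration of how orientation can be derived from a three-axis accelerometer, the Python sketch below computes pitch and roll from a single reading. It assumes the hand is roughly still, so that the sensor measures only gravity, and it assumes an axis convention (z pointing up through the back of the hand when held flat) that the paper does not state.

```python
import math
from typing import Tuple


def tilt_angles_deg(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Return (pitch, roll) in degrees from one accelerometer reading.

    Valid only when the sensor is static, so the measured vector is gravity.
    Any consistent unit (g, m/s^2, raw counts) works, since only ratios matter.
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return pitch, roll
```

A gesture recognizer could combine these two angles with the finger bend values to tell apart signs that share a hand shape but differ in hand orientation.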

C. Tactile Sensor

Tactile sensors can be useful in speaking devices for individuals who are deaf and dumb, also known as deaf-mute or nonverbal individuals. These devices can help these individuals communicate by translating their tactile movements into words or phrases. Tactile sensors can be used to detect pressure and movement, which can be used to interpret hand gestures and other physical movements made by the user. This can be done by placing the sensors on gloves or other wearable devices, or by embedding the sensors directly into the speaking device. Tactile sensors can also be used in conjunction with other communication aids, such as sign language or visual aids, to provide a more comprehensive communication solution for individuals who are deaf and dumb. By using multiple modes of communication, individuals can more effectively express themselves and interact with others.

D. Arduino

The Arduino board can be connected to a text-to-speech (TTS) module using jumper wires. This module converts text or predefined phrases into speech. The Arduino board can be programmed to receive input as text or as hand gestures from a gesture recognition module; the gestures can be recognized using sensors such as accelerometers or flex sensors. Once the input is received, the Arduino board uses the TTS module to convert it into speech, which is output through a speaker. The speaking device can be powered by a battery or a power supply unit. Overall, the speaking device can be programmed to recognize different hand gestures and convert them into speech. It can also be programmed to respond to specific text or phrases with pre-recorded responses. This can help deaf and mute people communicate more easily with others.
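The flow from a recognized gesture to speech and text output can be sketched as a phrase lookup followed by two output calls. This Python sketch is illustrative only: the phrase table is invented, and `show` and `speak` are hypothetical callables standing in for the LCD and TTS drivers, which the paper does not describe at the code level.

```python
from typing import Callable, Dict, Optional

# Hypothetical mapping from recognized gesture names to predefined phrases.
PHRASES: Dict[str, str] = {
    "HELLO": "Hello",
    "WATER": "I need water",
    "HELP": "Please help me",
}


def announce(
    gesture: Optional[str],
    show: Callable[[str], None],
    speak: Callable[[str], None],
) -> bool:
    """Send the phrase for a gesture to the display and the speaker.

    Returns True if a phrase was sent, False if the gesture is unknown.
    """
    phrase = PHRASES.get(gesture) if gesture is not None else None
    if phrase is None:
        return False
    show(phrase)   # stand-in for writing the text to the LCD
    speak(phrase)  # stand-in for handing the text to the TTS module
    return True
```

For a quick desk check, `announce("WATER", print, print)` sends the phrase to standard output twice, once for each output path.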

E. Speaking Module

Voice to text is a type of speech recognition software that uses computational linguistics to identify spoken language and convert it into text. It is also known as "computer speech recognition" or simply "speech recognition". Some software, equipment, and devices can transcribe audio streams in real time so that the text can be displayed and acted upon. Software that converts spoken words into editable text can be installed on a variety of devices. The application uses linguistic algorithms to separate the auditory signals of spoken words and encode them as text using Unicode characters. Speech-to-text conversion relies on a complex, multi-step machine learning model.

Flex sensors are analog sensors. They can be made unidirectional or bidirectional, and they can detect even a slight bend of the finger. The bending of each finger is quantized into 10 levels.
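Quantizing a finger's bend into 10 levels can be sketched as a clamped linear mapping from the raw reading to an integer level. In this Python sketch the calibration values `adc_flat` and `adc_bent` (the ADC counts for a straight and a fully bent finger) are hypothetical defaults and would need to be measured for each sensor.

```python
def quantize_bend(
    adc_count: int, adc_flat: int = 300, adc_bent: int = 700, levels: int = 10
) -> int:
    """Map a raw ADC count to a bend level in the range 0..levels-1.

    adc_flat and adc_bent are assumed calibration points. Readings outside
    the calibrated range are clamped to the lowest or highest level.
    """
    span = adc_bent - adc_flat
    if span == 0:
        raise ValueError("adc_flat and adc_bent must differ")
    fraction = (adc_count - adc_flat) / span
    fraction = min(max(fraction, 0.0), 1.0)
    # The top of the range would otherwise produce level == levels.
    return min(int(fraction * levels), levels - 1)
```

The mapping also works when the count falls as the finger bends (`adc_bent` smaller than `adc_flat`), because the sign of the span cancels out in the fraction.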

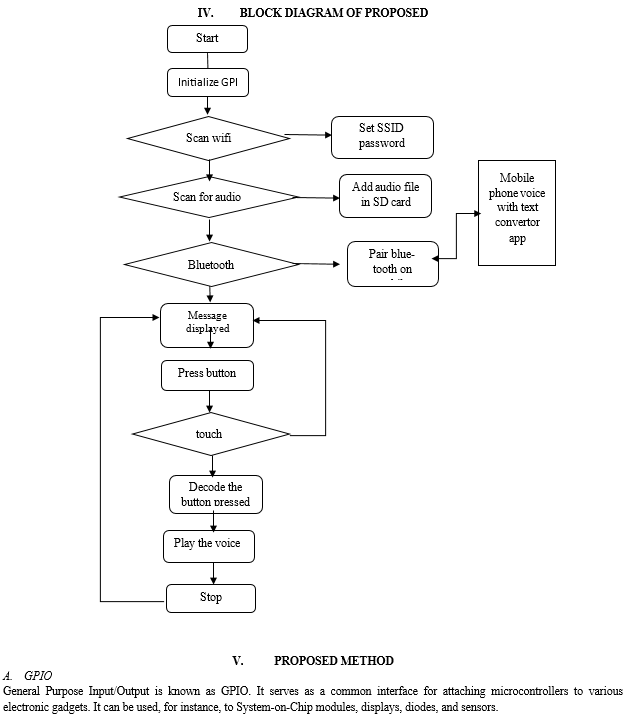

VI. RESULTS AND DISCUSSION

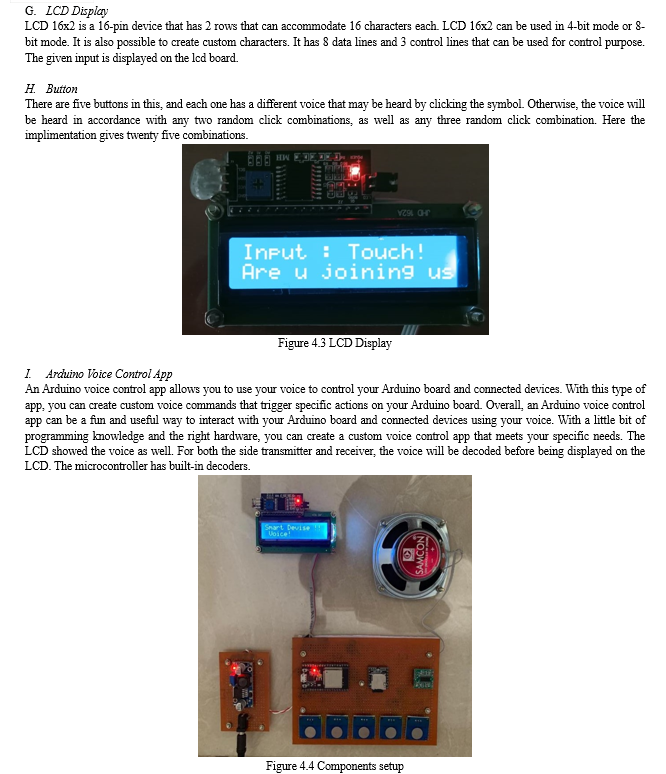

This project helps remove the communication barrier faced by deaf and mute people, easing interpersonal communication. The buttons serve only to trigger the input; once the input is triggered, the output is displayed on the LCD. The receiver is on the other side, running the voice control software for the Arduino. The LCD also shows the spoken message as text. On both the transmitter and the receiver side, the voice is decoded before being displayed on the LCD. The microcontroller has built-in decoders.

Conclusion

In conclusion, a speaking device for deaf and mute individuals has the potential to improve communication and enhance their quality of life. Such a device can draw on technologies such as text-to-speech or speech recognition, allowing the user to express themselves in a more natural way. It is important to remember that each individual's communication needs are unique, and a customizable device that can be tailored to the user's specific requirements would be most effective. Additionally, the development of such a device requires a multidisciplinary approach that involves professionals from different fields, including linguists, engineers, and medical professionals, to ensure that the device meets the highest standards of usability and functionality. Overall, a speaking device for deaf and mute persons has the potential to greatly improve the lives of many people, and it is an area that deserves continued research and development.

Copyright

Copyright © 2023 M. Navaneetha Krishnan, Keerthana. S, Nivetha. S, Gowri Priya. R . This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET51192

Publish Date : 2023-04-28

ISSN : 2321-9653

Publisher Name : IJRASET
