Ijraset Journal For Research in Applied Science and Engineering Technology



Advancements and Challenges in Neuromorphic Computing: Bridging Neuroscience and Artificial Intelligence

Authors: Melad Mohamed Salim Elfighi, Younis Wardko Ahmed Mohammed, Osama Abdalla Elmahdi Elghali

DOI Link: https://doi.org/10.22214/ijraset.2025.66411


Abstract

Neuromorphic computing represents a paradigm shift in computational design, aiming to emulate the neural structures and functionalities of the human brain. This approach seeks to enhance efficiency and adaptability in artificial intelligence (AI) systems. This paper provides a comprehensive review of recent advancements in neuromorphic hardware and software, highlighting their potential to revolutionize AI by enabling real-time processing and energy-efficient computations. Additionally, it examines the challenges inherent in replicating complex neural processes, including issues related to scalability, material limitations, and the integration of neuromorphic systems with existing technologies. By bridging the disciplines of neuroscience and AI, neuromorphic computing offers promising avenues for the development of intelligent systems that closely mirror human cognitive functions.


I. INTRODUCTION

The quest to develop intelligent systems that parallel human cognition has led to significant interdisciplinary research at the intersection of neuroscience and artificial intelligence. Traditional computing architectures, predominantly based on the von Neumann model, face limitations in processing speed and energy efficiency, especially when handling the complex, unstructured data inherent in AI applications. Neuromorphic computing has emerged as a transformative approach that aims to overcome these limitations by replicating the brain's neural architecture and synaptic functions. Neuromorphic engineering involves designing both hardware and software that mimic the neural and synaptic structures of the brain, enabling systems capable of parallel processing and adaptive learning. This biomimetic approach promises substantial improvements in computational efficiency and the ability to perform complex tasks with reduced power consumption. By leveraging neuroscience to inform AI design, and by using AI techniques to analyze complex neural data, researchers are uncovering new perspectives on learning, memory, and decision-making [1].

According to a recent report by the World Health Organization (WHO), neurological and psychiatric disorders, ranging from epilepsy to Alzheimer's disease and from stroke to headaches, affect approximately one billion people worldwide [2]. An estimated 6.8 million people die of neurological disorders each year; epilepsy affects 50 million people, and Alzheimer's disease and other dementias affect 24 million. This global burden cuts across age, gender, education, and income level. In response to these challenges, the Institute of Medicine's (IOM) Forum on Neuroscience and Nervous System Disorders has convened a series of public workshops involving industry, government, academia, and patient groups [3]. These discussions aimed to identify obstacles in translational neuroscience research and to propose strategies for improvement.

In neuromorphic computing, most research to date has focused on hardware systems, devices, and materials. However, to fully exploit the unique computational characteristics of neuromorphic computers and to guide their hardware design, neuromorphic algorithms and applications must also be studied and developed. Electronics, telecommunications, and computing all rely on two distinct types of signal representation and processing: analog and digital [30–33]. Analog signals or data vary continuously and smoothly over time or space, whereas digital signals represent information as a sequence of discrete values, typically binary.
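To make the analog/digital distinction concrete, here is a minimal sketch: a continuous (analog) signal becomes a digital one once it is sampled in time and quantized in amplitude. The function names `sample` and `quantize`, the 1 Hz sine wave, the 8 Hz sampling rate, and the 3-bit resolution are all illustrative assumptions, not taken from the source.

```python
import math


def sample(signal, duration_s, sample_rate_hz):
    """Discretize time: evaluate a continuous-time signal at uniform instants."""
    n_samples = int(duration_s * sample_rate_hz)
    return [signal(k / sample_rate_hz) for k in range(n_samples)]


def quantize(samples, n_bits, v_min, v_max):
    """Discretize amplitude: map each sample to one of 2**n_bits integer codes."""
    levels = 2 ** n_bits
    step = (v_max - v_min) / (levels - 1)
    codes = []
    for x in samples:
        x = min(max(x, v_min), v_max)            # clip to the converter's input range
        codes.append(round((x - v_min) / step))  # nearest level (Python rounds ties to even)
    return codes


def analog(t):
    """A 1 Hz sine wave standing in for an analog signal."""
    return math.sin(2 * math.pi * t)


# Sample at 8 Hz for one second and quantize to 3 bits (codes 0..7).
digital = quantize(sample(analog, 1.0, 8.0), n_bits=3, v_min=-1.0, v_max=1.0)
print(digital)
```

The resulting list of small integers is the digital representation: it can be stored and processed exactly, at the cost of the information lost between samples and between quantization levels.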

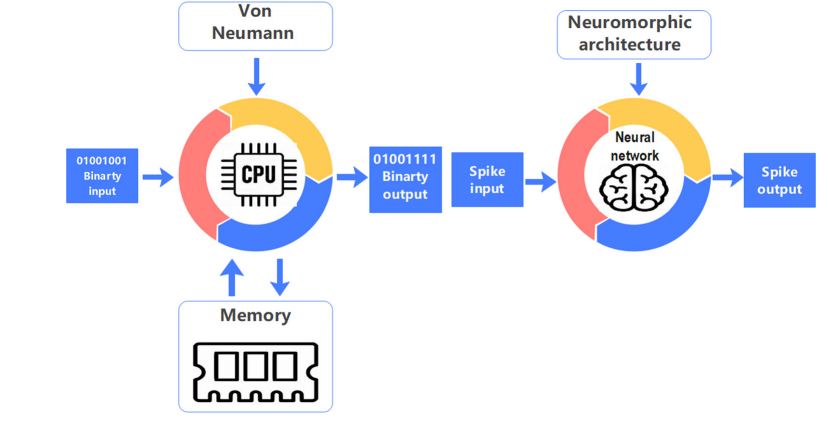

Continued research leaves room for major developments in this field. Breakthroughs in neuromorphic computing could change the way computers are used and open up new applications, with a potentially profound effect on the computing industry. As shown in Figure 1, neuromorphic computers differ from conventional computing designs in several key operational respects [4].

Figure 1: von Neumann architecture vs. neuromorphic architecture.

II. PURPOSE OF THE PAPER

The purpose of this paper is to provide a comprehensive review of the current state of neuromorphic computing, focusing on its recent advancements, its inherent challenges, and its role in integrating principles from neuroscience into artificial intelligence (AI) systems [5].

III. OBJECTIVES OF THE PAPER

- Review Recent Advancements: Examine the latest developments in neuromorphic hardware and software, including innovations in neuromorphic chips, algorithms, and materials that emulate neural processes.

- Identify Challenges: Discuss the technical and theoretical challenges in replicating complex neural processes, such as scalability, energy efficiency, and the integration of neuromorphic systems with existing technologies.

- Explore Applications in AI: Assess how neuromorphic computing can enhance AI by enabling more efficient, adaptive, and brain-like information processing capabilities.

- Bridge Neuroscience and AI: Analyze how insights from neuroscience inform the design of neuromorphic systems and how these systems, in turn, contribute to a deeper understanding of neural computation and cognition.

By addressing these objectives, the paper aims to clarify how neuromorphic computing could transform AI and to outline pathways toward intelligent systems that more closely mirror human cognitive functions. Emulating the brain's neural architecture and processing methods promises gains in computational efficiency and in the ability to perform complex tasks at lower power, but accurately replicating the brain's intricate processes remains difficult. By examining where neuroscience principles and artificial intelligence converge, we assess the potential of neuromorphic systems to shape the future of intelligent computing [6].

IV. IN-MEMORY COMPUTING

In the von Neumann architecture, which dates back to the 1940s, memory and processing units are physically separated, and large amounts of data must be shuttled back and forth between them during the execution of computational tasks. The latency and energy associated with accessing data in the memory units are key performance bottlenecks for a range of applications, particularly for increasingly prominent AI-related workloads [4]. The energy cost of moving data is a key challenge both for severely energy-constrained mobile and edge computing and for high-performance cloud computing, where cooling imposes constraints. In-memory computing addresses this by performing certain computational tasks in place, within the memory itself. In this paradigm, the memory is an active participant in the computation. Besides reducing the latency and energy cost associated with data movement, in-memory computing can also improve the computational time complexity of certain tasks, owing to the massive parallelism afforded by a dense array of millions of nanoscale memory devices serving as compute units. Introducing physical coupling between the memory devices offers the potential for further reductions in computational time complexity [7].
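The paragraph above says a dense array of memory devices can act as compute units. A commonly cited illustration (our choice; this section does not name it) is analog matrix-vector multiplication in a resistive crossbar: each device stores a conductance, Ohm's law multiplies it by the row voltage, and Kirchhoff's current law sums the products along each column, all in parallel and without moving the stored values. The minimal sketch below assumes ideal linear devices, zero wire resistance, and columns held at virtual ground; the function name `crossbar_mvm` and the numbers are hypothetical.

```python
def crossbar_mvm(conductances, voltages):
    """Return the column currents I[j] = sum_i G[i][j] * V[i] of an ideal crossbar.

    conductances: rows x columns matrix of device conductances (siemens).
    voltages: one read voltage (volts) applied to each row.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need exactly one input voltage per crossbar row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for row, v in zip(conductances, voltages):
        if len(row) != n_cols:
            raise ValueError("all crossbar rows must have the same length")
        for j, g in enumerate(row):
            # Ohm's law gives the current through one device; Kirchhoff's
            # current law sums the device currents along each column wire.
            currents[j] += g * v
    return currents


# Example: a 2 x 2 array of conductances (siemens) read with two row voltages.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.1, 0.2]
print(crossbar_mvm(G, V))  # column currents of about 7e-7 A and 1e-6 A
```

In hardware, all column currents appear simultaneously in a single read step; the nested loop here only emulates that physics sequentially.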

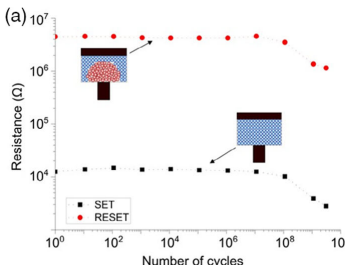

Several key physical attributes enable in-memory computing using memristive devices. First, the ability to store two levels of resistance (or conductance) in a non-volatile manner and to switch reversibly from one level to the other (binary storage capability) can be exploited for computing. Figure 2 shows the resistance values achieved upon repeated switching of a representative memristive device, a phase-change memory (PCM) device, between the low-resistance state (LRS) and the high-resistance state (HRS). Because the device can be placed in either the LRS or the HRS, its resistance can serve as an additional logic state variable.

Figure 2: The key physical attributes of memristive devices
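Since resistance can serve as a logic state variable, a memory cell can both store an operand and receive a result. One well-known way to exploit this is stateful material-implication (IMPLY) logic, where the output is written directly back into a device. The sketch below is a behavioral abstraction only, with hypothetical resistance and voltage values; real IMPLY circuits realize the operation through chosen voltage pulses and a series load resistor, which are not modeled here. The class `BinaryMemristor` and the function `imply` are illustrative names.

```python
from dataclasses import dataclass

# Hypothetical device parameters, chosen only for illustration.
LRS_OHMS = 1e4       # low-resistance state, read as logic 1
HRS_OHMS = 1e6       # high-resistance state, read as logic 0
READ_VOLTAGE = 0.2   # small read voltage (volts) assumed not to disturb the state


@dataclass
class BinaryMemristor:
    """Behavioral two-state model: the resistance is the stored logic variable."""
    resistance: float = HRS_OHMS

    def set(self) -> None:
        self.resistance = LRS_OHMS   # SET: switch HRS -> LRS

    def reset(self) -> None:
        self.resistance = HRS_OHMS   # RESET: switch LRS -> HRS

    def read(self) -> int:
        # Compare the read current against a threshold placed at the
        # geometric mean of the two resistance levels.
        current = READ_VOLTAGE / self.resistance
        threshold = READ_VOLTAGE / (LRS_OHMS * HRS_OHMS) ** 0.5
        return 1 if current > threshold else 0


def imply(p: BinaryMemristor, q: BinaryMemristor) -> None:
    """Stateful material implication, q <- (NOT p) OR q, written back into q."""
    if p.read() == 0:
        q.set()
    # If p holds 1, q keeps whatever value it already stored.


# Truth table: the result overwrites q, so no separate output register is needed.
for p_bit in (0, 1):
    for q_bit in (0, 1):
        p, q = BinaryMemristor(), BinaryMemristor()
        if p_bit:
            p.set()
        if q_bit:
            q.set()
        imply(p, q)
        print(p_bit, q_bit, "->", q.read())
```

IMPLY together with a RESET (logic FALSE) operation is functionally complete, which is why it is often used to argue that binary memristive storage alone can support general logic.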

V. NEUROSCIENCE INFORMING AI

Neuroscience has significantly influenced the development of artificial intelligence (AI), providing insights into brain function that inspire the creation of more efficient and adaptable AI systems. This interdisciplinary collaboration, often referred to as NeuroAI, seeks to emulate neural processes to enhance machine learning and cognitive computing.

A. Key Contributions of Neuroscience to AI

- Neural Network Architectures: The structure of artificial neural networks is inspired by the human brain's interconnected neurons, enabling machines to process information in a distributed and parallel manner.

- Learning Mechanisms: Concepts such as synaptic plasticity, which refers to the brain's ability to strengthen or weaken synapses based on activity, have informed algorithms that allow AI systems to learn and adapt from data.

- Spiking Neural Networks (SNNs): These models mimic the brain's use of discrete spikes for communication, leading to more energy-efficient and biologically plausible AI systems.

- Reinforcement Learning: Inspired by behavioral neuroscience, this approach enables AI agents to learn optimal behaviors through rewards and punishments, akin to how animals learn from interactions with their environment [8,9].
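Synaptic plasticity is described above only in general terms. One widely used formalization in spiking-network research is pair-based spike-timing-dependent plasticity (STDP): a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike, weakened when the order is reversed, and the size of the change decays exponentially with the timing difference. The sketch below is a minimal version of that rule; the amplitudes, time constants, and function names are assumptions, and it is not a method described in this paper.

```python
import math

# Hypothetical STDP parameters (illustrative values, not from the source).
A_PLUS = 0.01        # maximum potentiation per spike pair
A_MINUS = 0.012      # maximum depression per spike pair
TAU_PLUS_MS = 20.0   # potentiation time constant (ms)
TAU_MINUS_MS = 20.0  # depression time constant (ms)


def stdp_delta_w(t_pre_ms, t_post_ms):
    """Weight change for one pre/post spike pair under pair-based STDP."""
    dt = t_post_ms - t_pre_ms
    if dt > 0:   # pre fires before post: strengthen the synapse
        return A_PLUS * math.exp(-dt / TAU_PLUS_MS)
    if dt < 0:   # post fires before pre: weaken the synapse
        return -A_MINUS * math.exp(dt / TAU_MINUS_MS)
    return 0.0   # simultaneous spikes: no change in this simplified rule


def apply_stdp(weight, pre_spikes_ms, post_spikes_ms, w_min=0.0, w_max=1.0):
    """Sum the updates over all spike pairs, then clip the weight to [w_min, w_max]."""
    for t_pre in pre_spikes_ms:
        for t_post in post_spikes_ms:
            weight += stdp_delta_w(t_pre, t_post)
    return min(max(weight, w_min), w_max)


# Causal pairing (pre at 10 ms, post at 15 ms) increases the weight;
# anti-causal pairing decreases it.
print(apply_stdp(0.5, [10.0], [15.0]))
print(apply_stdp(0.5, [15.0], [10.0]))
```

Because the update depends only on locally available spike times, rules of this kind map naturally onto on-chip learning in neuromorphic hardware.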

VI. NEUROMORPHIC AND NEUROSCIENCE COMPUTING

Neuromorphic computing and computational neuroscience are two interrelated fields that draw inspiration from the human brain to advance technology and deepen our understanding of neural processes.

A. Neuromorphic Computing

Neuromorphic computing involves designing computer systems that emulate the architecture and functionality of the human brain. This approach seeks to create hardware and software that replicate neural networks, enabling machines to process information in ways similar to biological systems. By mimicking neural structures, neuromorphic systems aim to achieve greater efficiency and adaptability in tasks such as pattern recognition and sensory processing. For instance, Intel's neuromorphic research focuses on developing energy-efficient AI by leveraging insights from neuroscience.

B. Computational Neuroscience

Computational neuroscience employs mathematical models, computer simulations, and statistical analyses to study the functions of the nervous system. Researchers in this field aim to understand how neural circuits process information, leading to behaviors and cognitive functions. By constructing algorithms that mirror neural processes, computational neuroscience provides valuable insights into brain function and informs the development of artificial systems. Institutions like MIT's McGovern Institute are at the forefront of this research, exploring how the brain produces intelligent behavior and how such knowledge can inform AI development.
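As one example of the mathematical models used in computational neuroscience (and implemented in many neuromorphic chips), the leaky integrate-and-fire (LIF) neuron treats the membrane as a leaky capacitor: it integrates input current, decays toward a resting potential, and emits a spike and resets when it crosses a threshold. The LIF model is our chosen example, not one named in the source, and the dimensionless default parameters below are hypothetical. The sketch integrates the model with forward-Euler steps.

```python
def simulate_lif(input_current, dt_ms=1.0, tau_m_ms=20.0, v_rest=0.0,
                 v_threshold=1.0, v_reset=0.0, resistance=1.0):
    """Simulate a leaky integrate-and-fire neuron with forward-Euler steps.

    Integrates tau_m * dV/dt = -(V - v_rest) + R * I(t); when V reaches
    v_threshold the neuron emits a spike and V is reset to v_reset.
    Returns (membrane-potential trace, list of time-step indices of spikes).
    """
    v = v_rest
    trace, spike_steps = [], []
    for step, current in enumerate(input_current):
        v += (dt_ms / tau_m_ms) * (-(v - v_rest) + resistance * current)
        if v >= v_threshold:
            spike_steps.append(step)
            v = v_reset
        trace.append(v)
    return trace, spike_steps


# Constant inputs for 100 steps: a stronger input yields a higher firing rate.
for level in (0.5, 2.0, 3.0):
    _, spikes = simulate_lif([level] * 100)
    print(level, len(spikes))
```

With these defaults, an input of 0.5 settles below threshold and never fires, while larger inputs fire repeatedly; this rate increase with input strength is the behavior that the rate-coding strategy discussed in Section VIII relies on.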

C. Intersection and Applications

The convergence of neuromorphic computing and computational neuroscience holds significant promise for the future of artificial intelligence. By applying principles uncovered through computational neuroscience, neuromorphic engineering can develop systems that process information more naturally and efficiently.

This synergy facilitates the creation of AI that not only performs tasks efficiently but also adapts and learns in a manner akin to human cognition. For example, neuromorphic technologies are being explored for their potential to transform AI applications by implementing aspects of biological neural networks in electronic circuits. Moving beyond conventional machine learning, neuromorphic computing emulates the parallel, distributed learning and cognitive structures of neural activity in the brain, building more biologically realistic neural behavior into hardware and software. Replacing digital von Neumann computing with these engineered devices will require further technical advances. At the same time, these technologies promise responsive, embedded, and secure systems that can handle complex, open-world data with minimal human intervention, and these adaptive and secure features can be accompanied by reduced computational load and power consumption. Given functional and technological advances in these directions, neuromorphic computing could be applied in a wide variety of areas, including logistics, the IoT, and intelligent systems [10,11,12].

In summary, while neuromorphic computing focuses on building brain-inspired hardware and software, computational neuroscience seeks to understand the computational principles of the brain. Together, they contribute to the advancement of intelligent systems that bridge the gap between biological processes and artificial computation [13].

VII. NEUROMORPHIC COMPUTING

Neuromorphic computing is an innovative approach to designing computer systems that emulate the architecture and functionality of the human brain. By modeling computing elements after neural structures, this paradigm aims to achieve enhanced efficiency and adaptability in information processing.

The objective of this endeavour is to construct hardware and software systems that draw inspiration from the neural networks present in the human brain. Such systems are intended to solve complex problems with greater accuracy and speed than conventional computers [14].

A. Key Features of Neuromorphic Computing

- Brain-Inspired Architecture: Neuromorphic systems incorporate artificial neurons and synapses to replicate the parallel and distributed processing capabilities of biological neural networks.

- Energy Efficiency: By mimicking the brain's low-power consumption, neuromorphic computing seeks to address the energy demands of traditional computing systems, making it particularly advantageous for applications requiring efficient processing.

- Real-Time Processing: Neuromorphic systems are designed to process information in real time, similar to human cognition, enabling rapid responses to complex stimuli.
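One mechanism commonly credited for the efficiency and responsiveness listed above (stated here as background; the section itself does not detail it) is event-driven processing: work is done only when a neuron spikes, and only along the synapses of that neuron. The minimal sketch below propagates one time step of spikes through a sparse synapse table and counts the synaptic operations performed. The network, weights, and the name `propagate_spikes` are hypothetical.

```python
from collections import defaultdict


def propagate_spikes(spiking_neurons, synapses):
    """Event-driven spike propagation for one time step.

    spiking_neurons: ids of presynaptic neurons that fired in this step.
    synapses: dict mapping a presynaptic id to a list of (postsynaptic id, weight).
    Returns (summed input per postsynaptic neuron, number of synaptic operations).
    Only synapses of neurons that actually fired are visited, so the work scales
    with the number of spike events rather than with the total synapse count.
    """
    inputs = defaultdict(float)
    synaptic_ops = 0
    for pre in spiking_neurons:
        for post, weight in synapses.get(pre, []):
            inputs[post] += weight
            synaptic_ops += 1
    return dict(inputs), synaptic_ops


# Hypothetical 3-input network with 4 synapses; only neuron 0 fires.
synapses = {0: [(10, 0.5), (11, 0.2)], 1: [(10, -0.1)], 2: [(11, 0.3)]}
print(propagate_spikes([0], synapses))  # ({10: 0.5, 11: 0.2}, 2)
```

A dense, clock-driven implementation would touch all four synapses every step; here a quiet step costs nothing, which is the intuition behind the low-power claims for sparse spiking activity.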

B. Applications of Neuromorphic Computing

- Artificial Intelligence (AI): Neuromorphic computing enhances AI by providing more efficient and adaptable architectures for machine learning and cognitive computing tasks.

- Sensory Processing: Neuromorphic systems are well-suited for processing sensory data, such as visual and auditory information, enabling advancements in robotics and autonomous systems.

- Edge Computing: The energy efficiency and real-time processing capabilities of neuromorphic systems make them well suited to deployment in edge devices, where resources are limited and immediate data processing is essential [15].

C. Challenges and Future Directions

Despite its potential, neuromorphic computing faces challenges, including replicating the complexity of biological neural networks, developing scalable hardware, and integrating with existing technologies. Ongoing research focuses on overcoming these obstacles to fully realize the benefits of brain-inspired computing.

In summary, neuromorphic computing represents a promising frontier in computer engineering, striving to bridge the gap between artificial systems and biological intelligence by drawing inspiration from the human brain's remarkable capabilities [16].

VIII. ENCODING STRATEGIES IN NEUROMORPHIC SYSTEMS

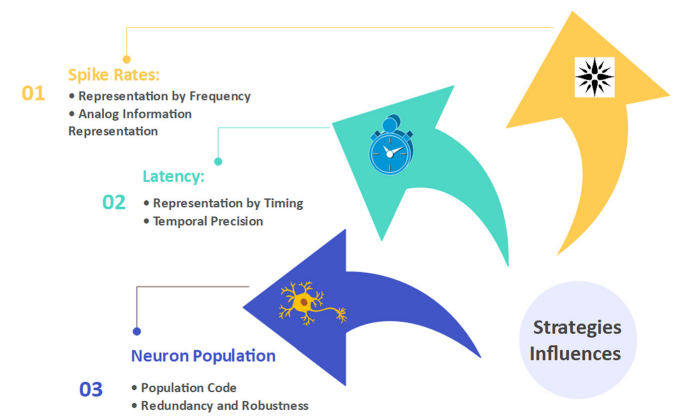

In neuromorphic systems, encoding strategies are crucial for translating information into spike patterns that spiking neural networks (SNNs) can process effectively. These strategies aim to represent data in a manner that leverages the temporal dynamics and efficiency of neuromorphic architectures. Neuromorphic systems endeavour to replicate the cognitive processes of the brain and frequently use spike rates, latency, and neuron populations to encode and convey information. The impact of each of these strategies on information representation is shown in Figure 3.

Figure 3: Influence of information-processing strategies on information representation in neuromorphic devices [17].

A. Common Encoding Strategies

- Rate Coding: Information is encoded based on the firing rate of neurons; higher stimulus intensity corresponds to higher firing rates. This method is straightforward but may not fully exploit the temporal precision of SNNs.

- Temporal Coding: Information is represented by the precise timing of spikes. Variants include:

- Time-to-First-Spike (TTFS): The timing of the first spike conveys information, with shorter latencies indicating stronger stimuli.

- Phase Coding: Spike times are aligned with specific phases of a reference signal, enabling the encoding of information in the relative timing of spikes.

- Population Coding: Information is distributed across a group of neurons, with patterns of activity conveying specific data. This approach enhances robustness and allows for complex representations.

- Burst Coding: Information is encoded in bursts of spikes rather than single spikes, potentially increasing the reliability of signal transmission.

- Spike-Count Encoding: The number of spikes within a given time window represents the information, combining aspects of rate and temporal coding.

- Binning: Continuous input signals are divided into discrete intervals (bins), with each bin's value determining the presence or timing of spikes [18].
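Three of the strategies listed above can be sketched in a few lines each. The sketch below assumes inputs already normalized to [0, 1] (for example, pixel intensities); rate coding is approximated by a Bernoulli spike train (a discrete-time stand-in for a Poisson process), time-to-first-spike maps stronger inputs to earlier spike times, and population coding uses Gaussian receptive fields. All function names, the seed, and the field widths are illustrative assumptions rather than choices made in the source.

```python
import math
import random


def rate_encode(intensity, n_steps, rng=None):
    """Rate coding: per-step spike probability equals the clipped intensity in [0, 1].

    This Bernoulli process is a discrete-time approximation of a Poisson spike train.
    """
    if rng is None:
        rng = random.Random(0)  # fixed seed so results are reproducible
    p = min(max(intensity, 0.0), 1.0)
    return [1 if rng.random() < p else 0 for _ in range(n_steps)]


def ttfs_encode(intensity, t_max):
    """Time-to-first-spike: stronger input fires earlier; zero input never fires."""
    intensity = min(max(intensity, 0.0), 1.0)
    if intensity == 0.0:
        return None
    return round((1.0 - intensity) * t_max)  # spike time step in [0, t_max]


def population_encode(value, centers, width):
    """Population coding: Gaussian receptive fields give one activation per neuron."""
    return [math.exp(-((value - c) ** 2) / (2.0 * width ** 2)) for c in centers]


# Example: encode a normalized intensity of 0.8 three ways.
print(sum(rate_encode(0.8, 100)))   # spike count out of 100 steps (expected value 80)
print(ttfs_encode(0.8, 100))        # first-spike time step
print(population_encode(0.8, [0.0, 0.25, 0.5, 0.75, 1.0], 0.15))
```

The trade-off mentioned for rate coding is visible here: it needs many time steps (and spikes) to convey one value, whereas time-to-first-spike conveys it with a single spike.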

B. Considerations for Encoding Strategies

- Signal Characteristics: The nature of the input signal (e.g., audio, visual, sensory) influences the choice of encoding strategy to ensure efficient and accurate representation [19].

- Computational Efficiency: Encoding methods should align with the processing capabilities of neuromorphic hardware to maintain energy efficiency and real-time performance [20].

Conclusion

Neuromorphic computing stands at the crossroads of neuroscience and artificial intelligence, offering immense potential for creating more efficient, adaptive, and biologically inspired computing systems. The advancements in this field have led to significant improvements in hardware design, such as neuromorphic chips, and software algorithms that emulate neural processes, enhancing the performance of AI systems in areas like pattern recognition, learning, and decision-making. However, challenges persist, including the complexity of modeling the brain's intricate neural networks, the scalability of neuromorphic hardware, and the integration of these systems with conventional computing paradigms. Addressing these challenges will require continued interdisciplinary collaboration between neuroscientists, engineers, and AI researchers. Future advancements in neuromorphic computing could pave the way for more intelligent, energy-efficient, and adaptive systems that better align with the capabilities of the human brain. Ultimately, bridging the gap between neuroscience and AI through neuromorphic computing promises to revolutionize both fields, unlocking new possibilities for a wide range of applications from robotics to cognitive computing.

References

[1] J. Von Neumann, IEEE Ann. Hist. Comput., 1993, 15, 27–75.
[2] C. Mead, Proc. IEEE, 1990, 78, 1629–1636.
[3] L. Chua, IEEE Trans. Circuit Theory, 1971, 18, 507–519.
A. Krizhevsky, I. Sutskever and G. E. Hinton, Commun. ACM, 2017, 60, 84–90.
[4] Resistive Switching: From Fundamentals of Nanoionic Redox Processes to Memristive Device Applications (Eds.: D. Ielmini, R. Waser), Wiley-VCH Verlag GmbH & Co. KGaA, Weinheim 2016.
[5] D. B. Strukov, G. S. Snider, D. R. Stewart, R. S. Williams, Nature 2008, 453, 80.
[6] K. Szot, W. Speier, G. Bihlmayer, R. Waser, Nat. Mater. 2006, 5, 312.
[7] M. A. Zidan, J. P. Strachan, W. D. Lu, Nat. Electron. 2018, 1, 22.
[8] L. Chua, IEEE Trans. Circuit Theory 1971, 18, 507.
[9] A. Mehonic, A. J. Kenyon, in Defects at Oxide Surfaces (Eds.: J. Jupille, G. Thornton), Springer International Publishing, Cham 2015, pp. 401–428.
[10] S. Yu, Neuro-Inspired Computing Using Resistive Synaptic Devices, Springer Science+Business Media, New York, NY 2017.
[11] O. Mutlu, S. Ghose, J. Gómez-Luna, R. Ausavarungnirun, Microprocess. Microsyst. 2019, 67, 28.
[12] S. W. Keckler, W. J. Dally, B. Khailany, M. Garland, D. Glasco, IEEE Micro 2011, 31, 7.
[13] Aravind, R., & Surabhi, S. N. R. D. (2024). Smart Charging: AI Solutions For Efficient Battery Power Management In Automotive Applications. Educational Administration: Theory and Practice, 30(5), 14257-1467.
[14] Bhardwaj, A. K., Dutta, P. K., & Chintale, P. (2024). AI-Powered Anomaly Detection for Kubernetes Security: A Systematic Approach to Identifying Threats. In Babylonian Journal of Machine Learning (Vol. 2024, pp. 142–148). Mesopotamian Academic Press. https://doi.org/10.58496/bjml/2024/014
[15] Kommisetty, P. D. N. K., & Abhireddy, N. (2024). Cloud Migration Strategies: Ensuring Seamless Integration and Scalability in Dynamic Business Environments. In International Journal of Engineering and Computer Science (Vol. 13, Issue 04, pp. 26146–26156). Valley International. https://doi.org/10.18535/ijecs/v13i04.4812
[16] Bansal, A. (2024). Enhancing Customer Acquisition Strategies Through Look-Alike Modelling with Machine Learning Using the Customer Segmentation Dataset. International Journal of Computer Science and Engineering Research and Development (IJCSERD), 14(1), 30-43.
[17] Aravind, R. (2023). Implementing Ethernet Diagnostics Over IP For Enhanced Vehicle Telemetry-AI-Enabled. Educational Administration: Theory and Practice, 29(4), 796-809.
[18] Avacharmal, R., Pamulaparthyvenkata, S., & Gudala, L. (2023). Unveiling the Pandora's Box: A Multifaceted Exploration of Ethical Considerations in Generative AI for Financial Services and Healthcare. Hong Kong Journal of AI and Medicine, 3(1), 84-99.
[19] Mahida, A. (2024). Integrating Observability with DevOps Practices in Financial Services Technologies: A Study on Enhancing Software Development and Operational Resilience. International Journal of Advanced Computer Science & Applications, 15(7).
[20] Perumal, A. P., Deshmukh, H., Chintale, P., Molleti, R., Najana, M., & Desaboyina, G. Leveraging machine learning in the analytics of cyber security threat intelligence in Microsoft azure.

Copyright

Copyright © 2025 Melad Mohamed Salim Elfighi, Younis Wardko Ahmed Mohammed, Osama Abdalla Elmahdi Elghali. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET66411

Publish Date : 2025-01-08

ISSN : 2321-9653

Publisher Name : IJRASET

