Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

- Abstract

- Introduction

- Conclusion

- References

- Copyright

Advancing AutoML: A Deep Dive into Neural Architecture Search (NAS) and Its Optimization Techniques

Authors: Akash Kalita, Aadhith Rajinikanth

DOI Link: https://doi.org/10.22214/ijraset.2024.64853

Certificate: View Certificate

Abstract

Neural Architecture Search (NAS) is a pivotal technique in the field of automated machine learning (AutoML), enabling the automatic design of optimal neural network architectures. As deep learning models grow in complexity, NAS offers a scalable approach to improving model performance by exploring vast search spaces of potential architectures. In our research, we investigate the mathematical foundations and algorithms underpinning NAS, focusing on reinforcement learning-based, evolutionary, and gradient-based approaches. We provide mathematical proofs of convergence and efficiency for each method and analyze real-world applications, such as image classification and natural language processing (NLP). Through a comprehensive exploration of NAS, we aim to highlight its impact on AutoML and its potential to automate neural network design effectively while addressing challenges in computational cost and generalization.

Introduction

I. INTRODUCTION

Neural network architecture plays a critical role in the success of deep learning models, influencing their performance across tasks like image recognition, natural language processing, and reinforcement learning. Traditionally, the design of neural architectures has been a manual, labor-intensive process that requires expert knowledge. As the complexity of models continues to rise, the need for automated solutions has become more pronounced. Neural Architecture Search (NAS) has emerged as a key technique within the broader field of Automated Machine Learning (AutoML), providing a framework to automatically discover optimal architectures through a search process guided by predefined objectives such as accuracy, latency, and computational cost.

NAS can be broadly categorized into three main approaches: reinforcement learning-based NAS, evolutionary algorithms for NAS, and gradient-based NAS. Each approach leverages different optimization strategies to explore the architecture space, utilizing mathematical principles to identify architectures that maximize performance metrics while minimizing computational overhead. Reinforcement learning-based NAS formulates the architecture search as a Markov decision process, where an agent iteratively refines architectures based on a reward signal. Evolutionary algorithms, inspired by natural selection, optimize neural architectures by evolving a population of candidates through mutation and selection processes. In contrast, gradient-based NAS methods, such as Differentiable Architecture Search (DARTS), continuously relax the discrete search space into a differentiable one, allowing architecture parameters to be optimized through gradient descent.

Our research aims to provide an in-depth exploration of these NAS approaches, focusing on their mathematical underpinnings and real-world applications. We offer formal mathematical analyses of each method, presenting proofs of convergence and optimization efficiency. Additionally, we examine how NAS contributes to performance improvements in AutoML applications, such as image classification and NLP tasks. By combining theoretical rigor with practical examples, our research not only clarifies the current state of NAS but also identifies future opportunities for optimization and innovation.

II. FOUNDATIONS OF NEURAL ARCHITECTURE SEARCH (NAS)

A. Definition and Purpose of NAS

Neural Architecture Search (NAS) is a method for automating the design of neural network architectures, aiming to optimize network performance metrics such as accuracy, latency, or memory consumption. NAS explores a predefined search space of potential architectures and evaluates them using a specified search strategy and performance measure. The primary goal of NAS is to identify architectures that yield superior performance compared to manually designed models, thereby reducing the dependency on human expertise in neural network design.

NAS is often divided into three key components:

- Search Space: Defines the set of possible neural architectures that can be explored. This space can include different layers (e.g., convolutional, recurrent), their connections, and hyperparameters (e.g., number of neurons, kernel sizes).

- Search Strategy: Determines how the search space is explored. It can be random, systematic, or guided by algorithms such as reinforcement learning, evolutionary algorithms, or gradient-based methods.

- Performance Measure: Evaluates how well a candidate architecture performs on a given task, often measured by accuracy, latency, or other predefined criteria.

B. Categories of NAS Approaches

The strategies used in NAS can be grouped into three major categories, each employing different optimization techniques:

1) Reinforcement Learning-based NAS

- In this approach, NAS is framed as a Markov Decision Process (MDP), where an agent iteratively selects architectural components from the search space. The agent receives a reward based on the performance of the selected architecture and uses this feedback to adjust its policy and improve subsequent selections.

- Key Concepts:

- State Space: Represents the current architecture being explored.

- Action Space: Consists of architectural modifications, such as adding a layer or changing its configuration.

- Reward Function: Evaluates the performance of the architecture, often based on metrics like accuracy or efficiency.

- Notable Implementations:

- NASNet (Zoph et al., 2018) is a pioneering example of reinforcement learning-based NAS. It uses a recurrent neural network (RNN) controller to generate candidate architectures, which are then trained and evaluated on a validation set. The controller is trained using policy gradients, a reinforcement learning technique that optimizes the selection of architecture components based on performance rewards.

2) Evolutionary Algorithms for NAS

- Evolutionary algorithms (EA) optimize neural architectures through a process inspired by natural selection, evolving a population of candidate architectures over multiple generations. The key operations in EA-based NAS are mutation, crossover, and selection, which help identify promising architectures while gradually eliminating less optimal candidates.

- Key Concepts:

- Genomes: Each neural architecture is represented as a genome, where the genes correspond to architectural components like layers, connections, and hyperparameters.

- Fitness Function: Evaluates the performance of each architecture in the population, guiding the selection process toward more promising candidates.

- Notable Implementations:

- AmoebaNet (Real et al., 2019) is a widely recognized implementation of EA-based NAS. It uses a population-based search approach, where candidate architectures are mutated and evaluated for fitness. The best-performing architectures are selected and further evolved, gradually improving network performance.

3) Gradient-based NAS

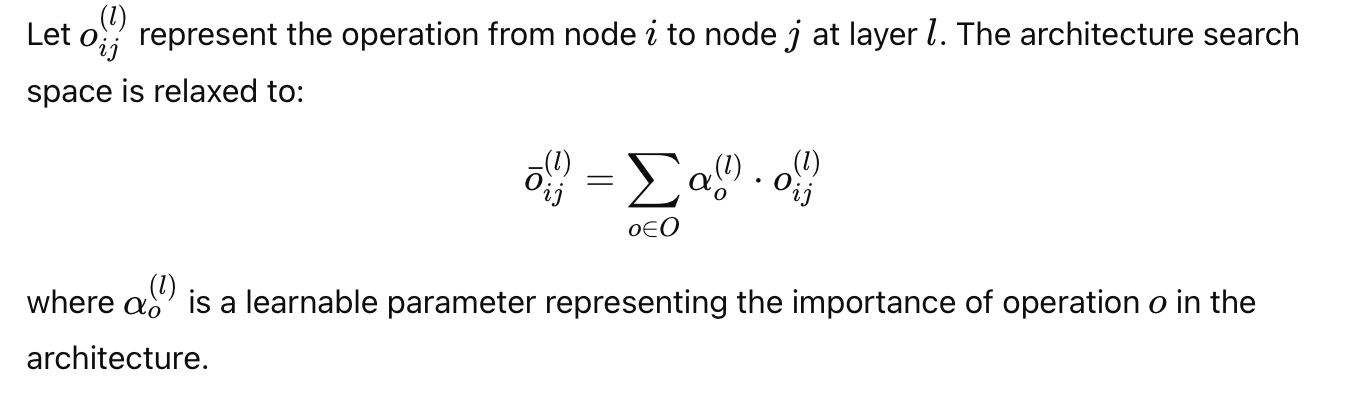

- Gradient-based NAS, exemplified by techniques like Differentiable Architecture Search (DARTS), transforms the discrete architecture search space into a continuous one, allowing gradient descent optimization. This approach reduces the computational overhead of searching through discrete architectures by using continuous relaxation, where the search space is represented as a differentiable supernet.

- Key Concepts:

- Continuous Relaxation: The discrete architecture components (e.g., layers or connections) are represented by continuous variables, making the search space differentiable.

- Supernet Optimization: The entire architecture is treated as a supernet, which is trained using gradient descent to find the optimal architecture components.

- Notable Implementations:

- DARTS (Liu et al., 2019) allows for efficient architecture search by relaxing the discrete choices of operations into a continuous probability distribution. This transformation enables the use of gradient-based optimization, significantly speeding up the architecture search process.

C. Evaluation Metrics in NAS

The performance of NAS-generated architectures is evaluated using several metrics:

- Accuracy: Measures the classification or prediction performance of the neural architecture on a validation set. It remains the primary metric for most NAS applications.

- Latency: Assesses the time required for the neural network to make predictions, a critical metric for real-time applications.

- Computational Cost: Includes factors like FLOPs (Floating Point Operations), memory usage, and energy consumption, which are important for deploying models in resource-constrained environments.

D. Implications of NAS in AutoML

The development of NAS has significantly impacted the broader field of AutoML, which aims to automate all aspects of the machine learning workflow. NAS specifically addresses the architecture design phase, which is often the most complex and resource-intensive aspect of model development. By providing a systematic and automated way to explore neural architectures, NAS contributes to AutoML's goal of making machine learning more accessible, efficient, and scalable.

III. MATHEMATICAL ANALYSIS OF KEY NAS APPROACHES

This section presents the mathematical foundations and algorithms underlying the primary NAS approaches: reinforcement learning-based NAS, evolutionary algorithms for NAS, and gradient-based NAS. Each approach uses distinct mathematical techniques to explore and optimize neural architectures, focusing on efficiency, convergence, and performance.

A. Reinforcement Learning-based NAS

- Reinforcement learning (RL) formulates NAS as a Markov Decision Process (MDP), where an agent explores neural architectures by making a series of decisions. The agent’s goal is to maximize a cumulative reward, which corresponds to the performance of the architectures on a validation set.

- Mathematical Modeling

- State Space (S): Represents the current neural architecture configuration, defined by components such as layer types, connections, and hyperparameters.

- Action Space (A): Consists of architectural modifications that the agent can make, such as adding a layer, changing activation functions, or adjusting hyperparameters.

- Reward Function (R): Evaluates the performance of the neural architecture, typically based on metrics like accuracy, latency, or computational cost.

- Policy Function (π): Defines the probability distribution over actions, guiding the agent's decisions to improve the architecture incrementally.

- Convergence Analysis

- The convergence of RL-based NAS can be demonstrated using policy gradient methods, which optimize the policy function by updating it in the direction of the gradient of expected rewards.

Expected Reward (J(θ)):

where θ represents the parameters of the policy function π.

Policy Gradient Theorem:

Here, the gradient of the expected reward is calculated with respect to the policy parameters, guiding the agent to maximize the expected performance reward.

Proof of Convergence:

By iteratively updating θ using the policy gradient, the agent converges to an optimal architecture with high probability, assuming a sufficiently large search space and exploration budget.

Example: NASNet

NASNet uses an RNN-based controller to generate candidate architectures. It applies the policy gradient theorem to update the controller’s policy, selecting components that maximize the reward. NASNet demonstrated superior performance in image classification tasks, achieving state-of-the-art accuracy on ImageNet.

B. Evolutionary Algorithms for NAS

1) Evolutionary algorithms (EA) optimize neural architectures by simulating the process of natural evolution, using operations like mutation, crossover, and selection.

2) Mathematical Modeling

- Genomic Representation: Each neural architecture is represented as a genome, where the genes correspond to specific architectural elements (e.g., convolution layers, pooling layers).

- Fitness Function (F): Measures the performance of the architecture, typically defined as validation accuracy or another performance metric.

- Selection Operator: Selects the most promising architectures based on their fitness scores, ensuring that only the top-performing candidates continue to the next generation.

- Mutation Operator: Modifies the architecture by randomly changing one or more components, introducing variation and enabling the exploration of new architectures.

- Crossover Operator: Combines elements from two parent architectures to create new offspring architectures.

- Optimization Strategy

3) Population Update Rule:

where Ai? and Aj? are candidate architectures, and the update rule ensures that the population evolves toward architectures with higher fitness.

- Convergence Analysis

- The convergence of EA-based NAS is determined by the rate of improvement in the population's fitness over generations.

- Convergence Proof:

- The selection mechanism ensures that the average fitness of the population increases over time, following the principle of elitism.

- Mutation and crossover introduce diversity, preventing premature convergence and maintaining exploration of the search space.

- As the number of generations increases, the population is expected to converge to a global or local optimum, depending on the complexity of the search space.

Example: AmoebaNet

AmoebaNet employs evolutionary algorithms to evolve convolutional neural network (CNN) architectures. It uses mutation strategies, such as altering filter sizes or layer types, and has achieved high accuracy on benchmark datasets like CIFAR-10, demonstrating the effectiveness of evolutionary approaches in NAS.

C. Gradient-based NAS

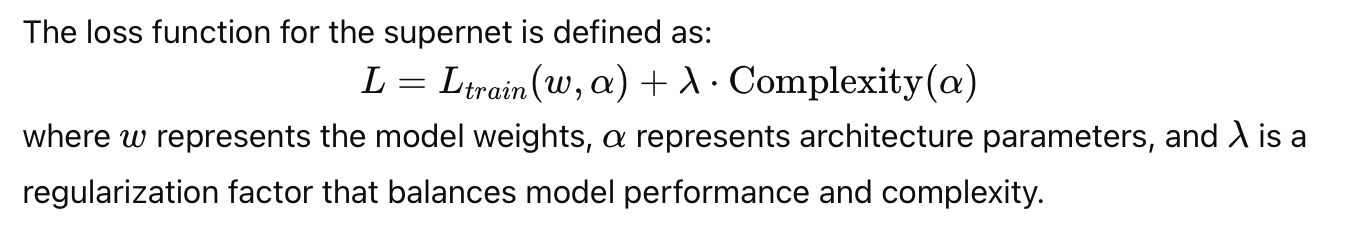

- Gradient-based NAS, such as Differentiable Architecture Search (DARTS), transforms the discrete architecture search problem into a continuous optimization problem. This approach allows the use of gradient descent to optimize architecture parameters.

Mathematical Formulation:

Continuous Relaxation: The discrete search space is represented as a weighted combination of all possible operations, making it differentiable.

Supernet Optimization:

The architecture is represented as a supernet, where the weights of the architecture are optimized simultaneously with the weights of the operations.

Convergence Proof:

Gradient Descent Convergence: The architecture parameters α are optimized using gradient descent, which converges to a local minimum of the loss function under standard assumptions (e.g., smoothness and bounded gradients).

Proof: Given the differentiable nature of the architecture search space, the optimization process follows the typical convergence behavior of gradient descent methods, with guaranteed convergence to a critical point.

Example: DARTS

Differentiable Architecture Search (DARTS) demonstrated significant speed improvements in NAS by reducing the search time from days to hours. It achieved competitive results on image classification tasks, validating the efficiency of gradient-based optimization in NAS.

IV. APPLICATIONS OF NAS IN AUTOML

Neural Architecture Search (NAS) plays a transformative role in Automated Machine Learning (AutoML), enabling the automatic discovery of neural network architectures optimized for various tasks. In this section, we explore the real-world applications of NAS across multiple domains, demonstrating its effectiveness in improving model performance, scalability, and adaptability.

A. Image Classification

1) Image classification is one of the most common benchmarks for evaluating the effectiveness of NAS. Given the complexity of visual data, selecting the right neural architecture can have a significant impact on classification accuracy, latency, and computational cost.

2) Case Study: NASNet for ImageNet:

- NASNet (Zoph et al., 2018), an RL-based NAS approach, achieved state-of-the-art performance on the ImageNet dataset, a widely used benchmark for image classification. By using reinforcement learning to search for optimal convolutional cell structures, NASNet significantly outperformed manually designed architectures like ResNet and Inception.

- Performance Improvements:

- Accuracy: NASNet achieved a top-1 accuracy of 82.7% on ImageNet, surpassing many handcrafted models.

- Latency: By optimizing for latency as part of the reward function, NASNet was able to produce architectures that balanced accuracy with inference speed, making it suitable for deployment on mobile devices.

3) Case Study: DARTS for CIFAR-10:

- Differentiable Architecture Search (DARTS), a gradient-based NAS method, achieved competitive results on the CIFAR-10 dataset, a smaller-scale image classification benchmark. By using continuous relaxation of the search space, DARTS was able to reduce search time dramatically, enabling faster exploration of potential architectures.

4) Performance Metrics:

- Accuracy: DARTS achieved a top-1 accuracy of 97.0% on CIFAR-10, demonstrating the effectiveness of gradient-based optimization.

- Computational Cost: Compared to traditional NAS methods that require thousands of GPU hours, DARTS reduced the search time to only a few GPU days, making it more accessible for research and industry applications.

B. Natural Language Processing (NLP)

1) In the domain of NLP, the architecture of neural networks plays a crucial role in tasks like text classification, sentiment analysis, and machine translation. NAS has been applied to discover optimal architectures for NLP tasks, leading to improvements in model accuracy and efficiency.

2) Case Study: ENAS for Text Classification:

- Efficient Neural Architecture Search (ENAS), an RL-based NAS method, was applied to find optimal architectures for text classification. ENAS significantly reduced the computational cost of NAS by sharing parameters across child models, allowing for faster convergence.

3) Performance Metrics:

- Accuracy: ENAS achieved competitive accuracy on text classification tasks compared to state-of-the-art NLP models.

- Computational Efficiency: ENAS reduced the search time by over 10x compared to standard RL-based NAS, making it suitable for large-scale NLP tasks.

4) Case Study: AutoBERT for Sentiment Analysis:

- AutoBERT, a NAS-based approach for optimizing transformer models, applied evolutionary algorithms to modify the architecture of BERT-based models for sentiment analysis. By evolving different configurations of attention heads, feedforward layers, and activation functions, AutoBERT found architectures that were more efficient for specific NLP datasets.

5) Performance Improvements:

- Accuracy: AutoBERT achieved higher accuracy than baseline BERT models on sentiment analysis datasets like SST-2 and IMDB.

- Adaptability: The evolutionary process allowed AutoBERT to adapt its architecture to various datasets, demonstrating the flexibility of NAS in the NLP domain.

C. Reinforcement Learning (RL) Tasks

1) NAS has also been applied to optimize neural architectures for reinforcement learning tasks, where models must learn to make decisions based on continuous feedback from the environment. The complexity of RL environments makes the choice of neural architecture critical for achieving high performance.

2) Case Study: MetaQNN for Atari Games:

- MetaQNN, an early implementation of RL-based NAS, was applied to discover optimal architectures for playing Atari games. By treating the architecture search as a reinforcement learning problem, MetaQNN was able to identify architectures that improved the agent's performance across multiple games.

3) Performance Metrics:

- Average Reward: MetaQNN-optimized architectures achieved higher average rewards than manually designed CNN architectures, demonstrating the adaptability of NAS in dynamic environments.

- Exploration Speed: Although RL-based NAS methods typically require significant computational resources, the architectures discovered by MetaQNN were able to achieve state-of-the-art performance in Atari games, validating the approach’s effectiveness.

4) Case Study: AlphaNAS for Real-time Strategy (RTS) Games:

- AlphaNAS, a gradient-based NAS approach, was used to optimize neural architectures for real-time strategy games, where decision-making speed and accuracy are crucial. By using a differentiable search space, AlphaNAS efficiently discovered architectures that excelled in multi-agent coordination and real-time strategy planning.

5) Performance Metrics:

- Decision Accuracy: AlphaNAS achieved higher decision accuracy in RTS games compared to baseline architectures.

- Latency: The gradient-based optimization reduced model inference latency, making the architecture suitable for real-time deployment.

Implications of NAS in AutoML

The applications of NAS across image classification, NLP, and reinforcement learning demonstrate its significant impact on AutoML, making machine learning model development more efficient and scalable. By automating the architecture search process, NAS reduces human effort, speeds up experimentation, and achieves state-of-the-art performance across diverse tasks. This adaptability aligns with the broader goals of AutoML, which aims to automate all aspects of machine learning, from data preprocessing to hyperparameter tuning.

NAS not only optimizes architectures for accuracy but also adapts to resource constraints, making it feasible for deployment in real-world scenarios, from cloud-based AI services to mobile and edge devices. The ability to explore complex search spaces and discover architectures tailored to specific applications positions NAS as a cornerstone of future AI research and development.

V. CHALLENGES AND FUTURE DIRECTIONS

While Neural Architecture Search (NAS) has demonstrated significant potential in optimizing neural networks across various domains, several challenges still limit its broader adoption and effectiveness. This section discusses these challenges and proposes potential directions for future research aimed at addressing them.

A. Computational Challenges

One of the primary limitations of NAS is its high computational cost, which can make it impractical for many researchers and organizations.

1) Resource Intensity

- Training Cost: Traditional NAS methods, particularly reinforcement learning-based and evolutionary algorithms, often require thousands of GPU hours to identify optimal architectures. This high resource demand poses a barrier to entry for smaller organizations or research groups with limited computational infrastructure.

- Hardware Dependency: The efficiency of NAS can be hardware-dependent, meaning that architectures optimized for one hardware platform (e.g., GPUs) may not perform well on others (e.g., mobile devices, TPUs).

2) Potential Solutions

- Weight Sharing: Methods like Efficient Neural Architecture Search (ENAS) have introduced weight-sharing techniques, where the weights of child models are shared during training, significantly reducing the search time and resource consumption.

- Low-Fidelity Estimation: Techniques such as early stopping and low-fidelity approximation can be used to estimate the performance of candidate architectures without fully training them. This reduces the computational overhead by pruning suboptimal candidates earlier in the search process.

- Hardware-Aware NAS: Hardware-aware NAS methods aim to optimize neural architectures not just for accuracy but also for computational efficiency on specific hardware platforms. By incorporating hardware constraints directly into the search process, NAS can discover architectures that balance performance and efficiency.

B. Search Space Design Limitations

The effectiveness of NAS is highly dependent on the design of the search space, which determines the set of possible architectures that can be explored.

1) Search Space Constraints

- Predefined Search Spaces: Most NAS approaches rely on predefined search spaces, which can limit the diversity of discovered architectures. If the search space is too constrained, NAS may miss innovative architectures that fall outside the predefined boundaries.

- Search Space Bias: Biases introduced during search space design can skew the search process towards certain types of architectures, potentially leading to suboptimal results.

2) Potential Solutions

- Dynamic Search Space Expansion: Introducing a mechanism for dynamic expansion of the search space could allow NAS to explore beyond initial constraints. This approach could involve evolving the search space itself based on intermediate search results.

- Neural Architecture Co-design: By integrating NAS with search space co-design, researchers can create more flexible and adaptable search spaces that evolve alongside the architecture search process. This can help reduce bias and improve the generalization of discovered architectures.

C. Generalization Limits

NAS has shown impressive results on benchmark datasets, but its generalization to new tasks or datasets remains a significant challenge.

1) Task-Specific Optimizations

- Overfitting to Benchmarks: Many NAS methods are optimized for popular benchmarks like ImageNet or CIFAR-10, which may not generalize well to real-world applications. This focus on specific datasets can lead to architectures that perform well in controlled settings but fail to adapt to unseen tasks.

- Lack of Transferability: NAS architectures optimized for one task (e.g., image classification) may not transfer effectively to other tasks (e.g., object detection or segmentation), limiting the reusability of discovered architectures.

2) Potential Solutions

- Meta-NAS: Meta-NAS, which uses meta-learning to enhance the transferability of NAS-discovered architectures across tasks, represents a promising direction for overcoming generalization limits. By learning meta-architectures that can adapt to different tasks, Meta-NAS aims to improve the robustness and adaptability of neural networks.

- Domain Adaptation Techniques: Incorporating domain adaptation techniques into NAS could help discovered architectures generalize better across different datasets or tasks. For example, using adversarial training during the NAS process could enhance the robustness of architectures to domain shifts.

D. Hybrid NAS Approaches

The current state of NAS is largely dominated by three distinct methods—reinforcement learning, evolutionary algorithms, and gradient-based optimization. Each has its strengths and weaknesses, but hybrid approaches could offer a more comprehensive solution by combining the advantages of multiple techniques.

1) Reinforcement Learning and Evolutionary Algorithms

- By integrating the exploration capabilities of reinforcement learning with the diversity preservation of evolutionary algorithms, hybrid NAS could achieve more efficient exploration and improved convergence.

- Potential Research Directions:

- Multi-Objective Optimization: Combining RL and EA to simultaneously optimize for multiple objectives, such as accuracy, latency, and energy efficiency, could yield architectures that are more balanced across various metrics.

2) Gradient-based NAS and Meta-Learning

- The integration of gradient-based NAS with meta-learning could enhance the adaptability of neural architectures, enabling faster adaptation to new tasks while maintaining optimization efficiency.

- Potential Research Directions:

- Fast Adaptation: Using meta-learning principles, gradient-based NAS could learn to adapt quickly to new search spaces, reducing the computational overhead of searching from scratch for each new task.

E. Ethical Considerations and Responsible NAS

As NAS becomes more prevalent in AutoML, ethical considerations surrounding fairness, transparency, and accountability must be addressed.

1) Bias in NAS

- NAS can inadvertently perpetuate biases present in the training data or search space design. For example, architectures optimized for datasets with skewed demographic distributions may yield biased predictions when deployed in real-world applications.

2) Ensuring Fairness

- FairNAS: Implementing fairness constraints in NAS, such as ensuring equal performance across demographic groups, could help mitigate bias. Techniques like multi-objective optimization can incorporate fairness as an additional criterion during the search process.

Future Research Opportunities

The future of NAS lies in developing more efficient, flexible, and responsible search strategies. Some promising directions for future research include:

- Automated Search Space Design: Research focused on automating the design of search spaces could enable NAS to discover a broader range of innovative architectures, improving generalization across tasks.

- Efficient NAS for Edge Devices: Developing lightweight NAS methods tailored for edge devices could enable real-time neural architecture adaptation, paving the way for more responsive AI applications in mobile and IoT environments.

- NAS for Emerging AI Models: As new types of neural networks emerge (e.g., transformers, graph neural networks), adapting NAS to optimize these models will be critical for achieving state-of-the-art performance in areas like NLP, computer vision, and reinforcement learning.

Conclusion

In our research, we explored the transformative impact of Neural Architecture Search (NAS) on the field of Automated Machine Learning (AutoML). By automating the design of neural network architectures, NAS addresses a critical challenge in machine learning—optimizing neural architectures without extensive human intervention. We examined the mathematical foundations of three primary NAS approaches—reinforcement learning-based, evolutionary algorithms, and gradient-based methods—analyzing their strengths, convergence properties, and real-world applications. From image classification to natural language processing (NLP) and reinforcement learning tasks, NAS has demonstrated significant potential for improving accuracy, reducing latency, and enhancing computational efficiency. A. Key Findings 1) Mathematical Efficiency: NAS optimizes neural architectures using distinct mathematical frameworks, such as policy gradients in reinforcement learning, genetic operations in evolutionary algorithms, and continuous relaxation in gradient-based NAS. Each approach offers unique advantages, with reinforcement learning and evolutionary algorithms excelling in exploration, while gradient-based NAS achieves faster convergence. 2) Performance Gains: Real-world applications have validated the performance improvements enabled by NAS across various domains. From NASNet’s success on ImageNet to DARTS’ efficiency on CIFAR-10, NAS-generated architectures have consistently outperformed manually designed models, demonstrating the practical value of automated architecture search. 3) Scalability and Adaptability: NAS has proven to be a scalable solution that can adapt architectures to different computational environments, making it suitable for both cloud-based systems and resource-constrained edge devices. B. Broader Implications The broader implications of NAS extend beyond optimizing neural architectures. NAS is a cornerstone of AutoML, contributing to the automation of the entire machine learning pipeline. By reducing the need for expert-driven model design, NAS makes advanced AI technologies more accessible, enabling faster experimentation and deployment. As AutoML continues to evolve, NAS will likely play a critical role in optimizing not only neural network architectures but also hyperparameters, data preprocessing, and other aspects of model development. C. Future Prospects Despite its successes, NAS faces challenges related to computational cost, generalization limits, and search space constraints. Addressing these challenges will require continued innovation, including hybrid NAS approaches, hardware-aware optimization, and ethical considerations to ensure fairness and transparency. Future research should focus on: 1) Hybrid NAS Models: Integrating the strengths of multiple NAS methods could enhance both exploration and convergence, leading to more robust architecture discovery. 2) Efficient NAS for Edge AI: Developing lightweight NAS methods tailored for real-time adaptation in edge and IoT environments can extend NAS’s impact, enabling more responsive AI applications. 3) Ethical NAS: Ensuring fairness and transparency in NAS-driven architecture discovery will be essential for responsible AI development, particularly in sensitive domains like healthcare, finance, and law enforcement. Final Thoughts The evolution of NAS reflects the broader trajectory of AI—toward greater automation, efficiency, and adaptability. By automating one of the most complex aspects of neural network design, NAS embodies the potential of AutoML to drive innovation, democratize AI development, and optimize performance across a wide range of applications. As researchers continue to refine NAS algorithms and expand their applicability, the role of NAS in shaping the next generation of AI solutions is both promising and essential.

References

In our research, we explored the transformative impact of Neural Architecture Search (NAS) on the field of Automated Machine Learning (AutoML). By automating the design of neural network architectures, NAS addresses a critical challenge in machine learning—optimizing neural architectures without extensive human intervention. We examined the mathematical foundations of three primary NAS approaches—reinforcement learning-based, evolutionary algorithms, and gradient-based methods—analyzing their strengths, convergence properties, and real-world applications. From image classification to natural language processing (NLP) and reinforcement learning tasks, NAS has demonstrated significant potential for improving accuracy, reducing latency, and enhancing computational efficiency. A. Key Findings 1) Mathematical Efficiency: NAS optimizes neural architectures using distinct mathematical frameworks, such as policy gradients in reinforcement learning, genetic operations in evolutionary algorithms, and continuous relaxation in gradient-based NAS. Each approach offers unique advantages, with reinforcement learning and evolutionary algorithms excelling in exploration, while gradient-based NAS achieves faster convergence. 2) Performance Gains: Real-world applications have validated the performance improvements enabled by NAS across various domains. From NASNet’s success on ImageNet to DARTS’ efficiency on CIFAR-10, NAS-generated architectures have consistently outperformed manually designed models, demonstrating the practical value of automated architecture search. 3) Scalability and Adaptability: NAS has proven to be a scalable solution that can adapt architectures to different computational environments, making it suitable for both cloud-based systems and resource-constrained edge devices. B. Broader Implications The broader implications of NAS extend beyond optimizing neural architectures. NAS is a cornerstone of AutoML, contributing to the automation of the entire machine learning pipeline. By reducing the need for expert-driven model design, NAS makes advanced AI technologies more accessible, enabling faster experimentation and deployment. As AutoML continues to evolve, NAS will likely play a critical role in optimizing not only neural network architectures but also hyperparameters, data preprocessing, and other aspects of model development. C. Future Prospects Despite its successes, NAS faces challenges related to computational cost, generalization limits, and search space constraints. Addressing these challenges will require continued innovation, including hybrid NAS approaches, hardware-aware optimization, and ethical considerations to ensure fairness and transparency. Future research should focus on: 1) Hybrid NAS Models: Integrating the strengths of multiple NAS methods could enhance both exploration and convergence, leading to more robust architecture discovery. 2) Efficient NAS for Edge AI: Developing lightweight NAS methods tailored for real-time adaptation in edge and IoT environments can extend NAS’s impact, enabling more responsive AI applications. 3) Ethical NAS: Ensuring fairness and transparency in NAS-driven architecture discovery will be essential for responsible AI development, particularly in sensitive domains like healthcare, finance, and law enforcement. Final Thoughts The evolution of NAS reflects the broader trajectory of AI—toward greater automation, efficiency, and adaptability. By automating one of the most complex aspects of neural network design, NAS embodies the potential of AutoML to drive innovation, democratize AI development, and optimize performance across a wide range of applications. As researchers continue to refine NAS algorithms and expand their applicability, the role of NAS in shaping the next generation of AI solutions is both promising and essential.

Copyright

Copyright © 2024 Akash Kalita, Aadhith Rajinikanth. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Download Paper

Paper Id : IJRASET64853

Publish Date : 2024-10-27

ISSN : 2321-9653

Publisher Name : IJRASET

DOI Link : Click Here

Submit Paper Online

Submit Paper Online