Ijraset Journal For Research in Applied Science and Engineering Technology


AI Based Tool for Preliminary Diagnosis of Dermatological Manifestations

Authors: Minal Kharat, Rutuja Nalawade, Mrunali Patil, Trupti Sarang, Sanika Shinde, Jyoti Sarawade

DOI Link: https://doi.org/10.22214/ijraset.2024.60207


Abstract

The article proposes combining a deep convolutional neural network (DCNN) with a texture map to automatically detect malignant regions and mark the region of interest (ROI) within a single model. The proposed DCNN has two cooperating branches: an upper branch dedicated to disease identification and a lower branch for semantic segmentation and ROI marking. The network extracts the malignant regions with the upper branch, and the lower branch delineates the diseased areas more precisely. To make the features inside the diseased areas more regular, the network extracts texture images from the input image. A sliding window is then used to compute the standard deviation values of the texture image. These standard deviation values are used to build a texture map, which is divided into multiple patches and used as the input to the deep convolutional network. The approach proposed in this paper is called a texture-map-based branch-collaborative network.
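The texture-map step described above can be illustrated with a minimal sketch. It assumes a grayscale image stored as a list of rows, a square sliding window with no padding, and non-overlapping patches; the function names `std_texture_map` and `split_into_patches`, the window size, and the patch size are illustrative choices and are not specified in the article. Plain Python is used only to keep the sketch self-contained.

```python
from statistics import pstdev


def std_texture_map(image, window=3):
    """Slide a window x window box over the image (no padding) and
    record the population standard deviation of each box."""
    rows, cols = len(image), len(image[0])
    tmap = []
    for r in range(rows - window + 1):
        out_row = []
        for c in range(cols - window + 1):
            box = [image[r + i][c + j]
                   for i in range(window) for j in range(window)]
            out_row.append(pstdev(box))
        tmap.append(out_row)
    return tmap


def split_into_patches(tmap, size):
    """Cut the texture map into non-overlapping size x size patches,
    dropping any ragged border (an assumption of this sketch)."""
    patches = []
    for r in range(0, len(tmap) - size + 1, size):
        for c in range(0, len(tmap[0]) - size + 1, size):
            patches.append([row[c:c + size] for row in tmap[r:r + size]])
    return patches


# A vertical edge between a dark and a bright region gives a high
# local deviation only where the window straddles the edge.
image = [[0, 0, 10, 10] for _ in range(4)]
texture = std_texture_map(image, window=2)
```

Flat regions map to zero and textured or edge regions map to larger values, which is what makes the map a plausible input representation for the patch-based network.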

Introduction

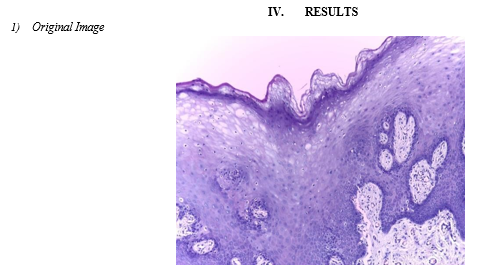

I. INTRODUCTION

Dermatology studies a variety of skin diseases and illnesses, including skin cancer. In this branch of research, diagnoses are made mostly from the skin's external appearance. As a result, a variety of imaging methods, including ultrasonography, autography, and reflectance confocal microscopy, are required for the detection of skin diseases. Other issues remain, such as the vast amount of data that is routinely collected about each person and through their imaging findings. In the last 20 years, several types of artificial intelligence and machine learning have been used to improve medicine in general, including diagnosis and therapy [2]. They have also been applied in other industries, such as the electricity and power markets and renewable energy. Artificial intelligence (AI) refers to devices or programs that mimic how the human brain thinks. Machine learning, in contrast, makes use of a variety of algorithms that can be trained to solve problems, manage enormous datasets, and improve without explicit reprogramming.

Cutaneous melanoma has an extremely high death rate. Many other types of skin lesion exist, but they resemble one another too closely to be categorized automatically with ease. A patient may be able to recover from these diseases if they are discovered in the early stages. The accurate identification of lesions is currently the main challenge in the diagnosis of skin diseases including squamous cell carcinoma, melanoma, dermatofibroma, vascular lesion, melanocytic nevus, basal cell carcinoma, etc.

The procedure of extracting the region of interest (ROI), such as a lesion, from photographs is referred to as image segmentation [3]. Different methods can be used to do this, such as threshold-based, clustering-based, fuzzy method-based, active contour-based, quantization-based, merging threshold-based, and pattern clustering-based approaches [5]. Deep learning-based segmentation algorithms have recently advanced [4]. Due to the existence of hair, blood vessels, skulls, and other physical characteristics of the human body, images of individuals may be hazy, noisy, or of low quality. Numerous computer methods based on machine learning and deep learning have been developed to produce fast and precise findings in the diagnosis of illness. In this study, we discuss a computational method for classifying skin lesion conditions based on clinical data.

For image enhancement, the autoregressive approach is employed. Autoregressive (AR) approaches build an explicit, tractable density model and maximize the likelihood of the training data. PixelCNN is a deep neural network that captures the distribution of pixel dependencies in its parameters. It generates an image sequentially, one pixel at a time, along the two spatial dimensions. During training, PixelCNN can learn the distribution of every pixel in the image simultaneously using convolutional operations. The receptive field of a standard convolutional layer, however, violates the sequential prediction order of autoregressive models when calculating the probability of a particular pixel: a convolutional filter centered on a pixel computes the output feature map from all of the surrounding pixels, not only the preceding ones. Masks are therefore used to block information from pixels that have not yet been predicted. With these methods, it is straightforward to quantify the probability of an observation and to obtain an evaluation metric for the generative model. Neural Autoregressive Distribution Estimation (NADE) models tackle the challenge of unsupervised distribution and density estimation.
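The masking idea can be sketched as follows. This is a simplified, single-channel illustration that assumes raster-scan order (left to right, top to bottom) and an odd kernel size; the function name `causal_mask` and the `include_center` flag are hypothetical names, with `include_center=False` corresponding to what the PixelCNN literature calls a type A mask and `include_center=True` to a type B mask. Channel ordering within a pixel is ignored here.

```python
def causal_mask(kernel_size, include_center=False):
    """Build a 0/1 mask for a square convolution kernel so that the
    filter only sees pixels that precede the center in raster order."""
    center = kernel_size // 2
    mask = [[0] * kernel_size for _ in range(kernel_size)]
    for r in range(kernel_size):
        for c in range(kernel_size):
            # Rows above the center, or earlier columns in the center row.
            if r < center or (r == center and c < center):
                mask[r][c] = 1
    if include_center:
        mask[center][center] = 1
    return mask


# Multiplying the kernel weights element-wise by this mask zeroes out
# every weight that would look at a not-yet-predicted pixel.
first_layer_mask = causal_mask(3)                        # center excluded
later_layer_mask = causal_mask(3, include_center=True)   # center allowed
```

The first layer excludes the center so a pixel never conditions on itself; later layers may include it because their inputs are already masked features.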

They achieve this through neural network architectures, in particular by combining the weight-sharing scheme of the restricted Boltzmann machine with the probability product rule. This approach provides a practical and versatile way to compute p(x) that is efficient and tractable. Distribution estimation is a fundamental problem in machine learning and can be used for a variety of inference tasks, including classification, regression, missing value imputation, and prediction. In this article, we describe Neural Autoregressive Distribution Estimation (NADE), a distribution estimation method based on feed-forward neural networks and autoregressive models. NADE can compute p(x) quickly, and deep NADE models can be trained to be agnostic to the ordering of the input dimensions, which yields robust performance. We consider the use of NADE models for both binary and real-valued input. Finally, we explore how to further improve NADE models with a deep convolutional architecture that takes advantage of the topological structure of pixel data in images.
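For reference, the product rule underlying autoregressive models, and the way NADE parameterizes each conditional for binary input, can be written out as below. This follows the standard NADE formulation rather than anything stated in this article; the symbols $D$ (input dimension), $W$, $V$, $b$, $c$ (shared weights and biases), and $\sigma$ (logistic sigmoid) are notation introduced here.

```latex
p(x) = \prod_{d=1}^{D} p(x_d \mid x_{<d}),
\qquad
h_d = \sigma\!\left(W_{\cdot,<d}\, x_{<d} + c\right),
\qquad
p(x_d = 1 \mid x_{<d}) = \sigma\!\left(V_{d,\cdot}\, h_d + b_d\right)
```

Because the same matrix $W$ is reused for every conditional, the hidden pre-activations can be updated incrementally from one dimension to the next, which is what keeps the computation of $p(x)$ tractable.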

II. LITERATURE SURVEY

With an estimated 377,713 new cases and 177,757 fatalities worldwide in 2020, skin disease is the most prevalent type of head and neck disease [1]. Surgery is typically the first line of treatment and has a high success rate, with early-stage overall survival rates of 75–90% [2, 3]. However, more than 80% of cases are diagnosed at an advanced stage, when morbidity and fatality rates are severe [2, 4]. Given the high incidence and mortality rates, carcinoma screening has been a crucial component of many humanitarian programmes in an effort to improve early diagnosis of the disease [5]. Skin potentially malignant disorders (OPMDs), such as leukoplakia and erythroplakia, frequently precede skin squamous cell disease (OSCC), which accounts for approximately 90% of instances of carcinoma [6]. The primary focus of screening programmes has been on OPMD detection, since OPMDs carry a risk of malignant transformation and their detection is crucial for lowering disease-related morbidity and death [6]. However, programmes based on visual examination have proved difficult to run in a real-world context, since they rely on medical personnel who are typically not sufficiently qualified or prepared to recognize these lesions [6, 7]. The significant heterogeneity in the appearance of skin lesions makes their diagnosis by healthcare workers extremely challenging and is thought to be the main cause of delays in patient referrals to carcinoma experts [7]. Furthermore, early-stage OSCC lesions and OPMDs are typically asymptomatic and may appear as small, innocuous lesions, causing patients to present late and ultimately adding to the diagnostic lag. Advances in computer vision and deep learning offer effective approaches for building supporting technologies that can automatically screen the skin and provide feedback, both to professionals during patient assessments and to individuals for self-examination.

The majority of the work on the automatic diagnosis of carcinoma from images has focused on specialised imaging technologies, such as optical coherence tomography [8,9], hyperspectral imaging [10], and autofluorescence imaging [11-16] [7,8]. On the other hand, some white-light photography studies have been conducted [17–21], the majority of which focus on the identification of certain types of skin lesions.

The detection of OPMDs is essential for the early detection of carcinoma and plays a significant role in the creation of tools for screening for the disease. In this study, we looked into the prospects for a deep learning-based automatic system for carcinoma screening, as well as the prospective applications of various computer vision techniques to the carcinoma domain in the context of photographic images.

III. PROPOSED SYSTEM ARCHITECTURE

It is challenging to train a deep convolutional neural network (CNN) from scratch, since it requires a large amount of labeled training data and a great deal of expertise to ensure proper convergence. A promising alternative is to fine-tune a CNN that has already been pre-trained on, for instance, a large collection of labeled natural images. The significant differences between natural and medical images, however, might discourage such knowledge transfer. In the area of medical image analysis, we seek an answer to the following key question: can pre-trained deep CNNs with additional fine-tuning remove the need to train a deep CNN from scratch? We examined the performance of deep CNNs trained from scratch compared with pre-trained CNNs that were fine-tuned in a layer-wise manner for four different medical imaging applications in three specialties (radiology, cardiology, and gastroenterology) involving classification, detection, and segmentation from three different imaging modalities. The experiments consistently show that a pre-trained CNN with adequate fine-tuning outperformed or, in the worst case, performed similarly to a CNN trained from scratch. Fine-tuned CNNs were also much more robust to the size of the training set than CNNs trained from scratch. Finally, neither shallow tuning nor deep tuning was the best option for every application, and our layer-wise fine-tuning scheme may provide a practical solution.

A convolutional neural network (CNN/ConvNet) is a multi-layer neural network designed to automatically extract features from raw pixel images. One of the benefits of a ConvNet is its ability to learn from a small dataset by starting from a deep learning model that has already been trained on a large dataset such as ImageNet. The VGG architecture was among the first very deep CNNs to achieve highly promising results, and it is also an appealing network because of its simple and uniform design. VGGNet is used to capture baseline features from the provided input images. It works well with the small dataset we have built. The VGG design uses smaller convolution filters and fewer receptive-field channels in exchange for increased network depth, which balances the computational cost on ImageNet. Convolution layers, pooling layers, and fully connected layers make up the bulk of the VGG16 design. The classification of medical images is crucial to both clinical care and academic tasks. The performance of the conventional approach, however, has reached its ceiling. Furthermore, it takes a lot of time and effort to identify and extract the classification features by hand. The deep neural network is a machine learning method that has proven effective for a number of classification problems. The convolutional neural network stands out with the best results on a number of image classification tasks. The labelling of medical images, however, requires a high level of professional knowledge, which makes the compilation of medical image collections difficult. Such work applies transfer learning to two convolutional neural network models, together with a linear SVM classifier that uses local rotation- and orientation-invariant features.

Skin disease is the most common type of head and neck disease, killing about 177,757 individuals per year worldwide. With early detection, survival rates can reach 75–90%. However, the majority of cases are only detected once they are far along, mainly due to a lack of public awareness of the signs of the disease and a delay in referrals to skin disease specialists. The development of vision-based adjunctive technologies that can identify skin potentially malignant disorders (OPMDs), which carry a risk of disease development, presents significant opportunities for the skin disease screening process. Early detection and treatment remain the most effective ways to improve skin disease outcomes. In this study, we evaluated the prospects for an automated system for identifying OPMDs and examined the potential applications of computer vision techniques in the domain of skin disease within the context of photographic images.

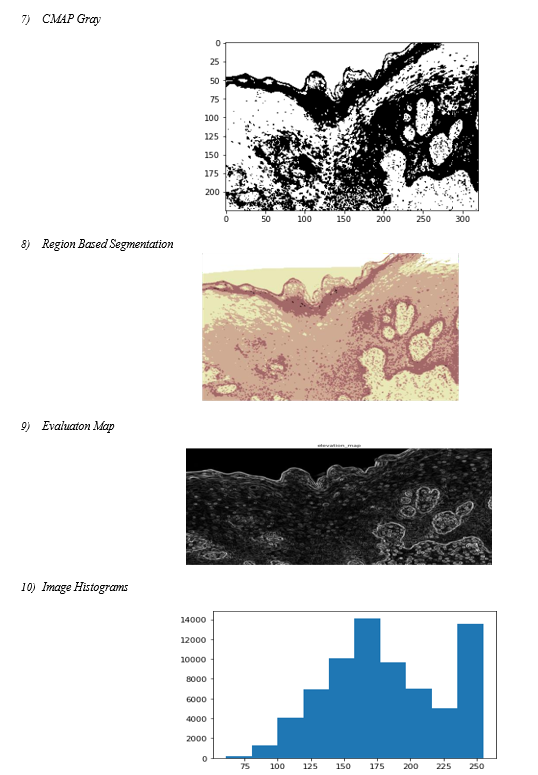

Image segmentation is a crucial and challenging step in image processing, and it is currently a prominent research topic. Technologies that depend on it, such as 3D reconstruction, are limited by the quality of the segmentation. Image segmentation divides the entire image into a number of regions whose pixels share some characteristics. Put simply, it separates the target of an image from the background. Image segmentation techniques are becoming faster and more precise. By combining several new theories and technologies, researchers are working toward a general segmentation algorithm that can be applied to many types of images.

A. Why CNN Model is used for Image Classification?

Convolutional neural networks belong to deep learning, which is a subdomain of machine learning. Deep learning algorithms process information through multiple layers. Image classification involves extracting features from the image in order to observe patterns in the dataset. Using a fully connected ANN for image classification would be very costly in terms of computation, since the number of trainable parameters becomes extremely large. Convolutional neural networks (CNNs) are the backbone of image classification, a deep learning task that takes an image and assigns it a class label. Image classification using CNNs forms a significant part of machine learning experiments.

We use a CNN here for the following reasons:

- A CNN requires little image preprocessing

- A CNN has relatively low computational complexity

- A CNN detects important features without any human intervention

For these reasons, we chose a CNN over other deep learning algorithms.
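The parameter-count argument can be made concrete with a little arithmetic. The sketch below compares one fully connected layer with one convolutional layer on the same input; the 224 x 224 RGB input size, the 64 output units or filters, and the 3 x 3 kernel are illustrative assumptions, not values reported in this article, and the helper names are hypothetical.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units


def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    """Weights plus biases of a 2D convolutional layer. The count does
    not depend on the image size because the kernel is shared across
    every spatial position."""
    return kernel_h * kernel_w * in_channels * out_channels + out_channels


# Assumed input: a 224 x 224 RGB image flattened for the dense layer.
flattened_inputs = 224 * 224 * 3
dense_count = dense_params(flattened_inputs, 64)   # 9,633,856 parameters
conv_count = conv_params(3, 3, 3, 64)              # 1,792 parameters
```

Under these assumptions the dense layer needs several thousand times more trainable parameters than the convolutional layer, which is the cost difference the paragraph above refers to.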

B. Feature Extraction

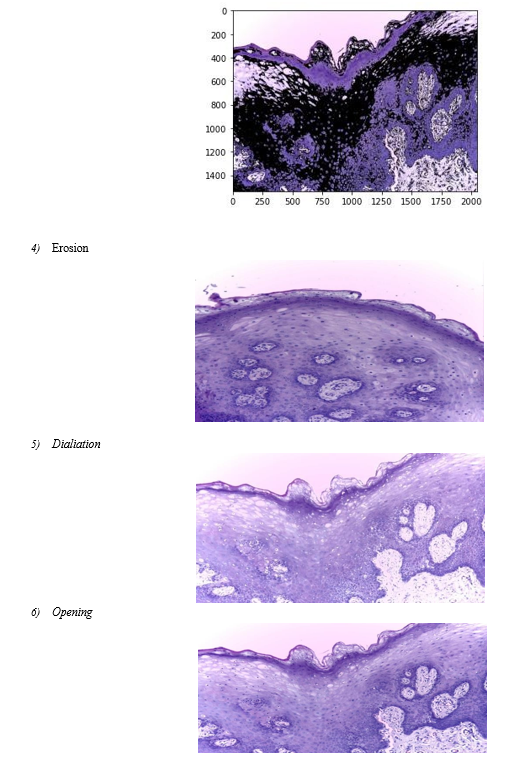

Features play a very important role in image processing. Before features are extracted, various image preprocessing techniques such as binarization, thresholding, resizing, and normalization are applied to the sampled image. Feature extraction is a method of capturing the visual content of images for indexing and retrieval. A feature denotes a piece of information that is relevant for solving the computational task of a particular application. There are two types of texture feature measures: first-order and second-order. First-order texture measures are statistics calculated from individual pixel values; they do not consider relationships between neighboring pixels. Second-order measures consider the relationship between pairs of pixels.

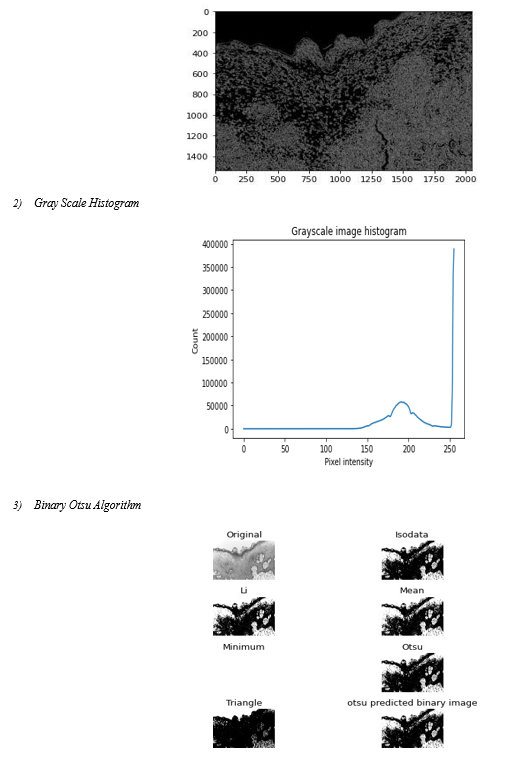

C. Intensity Histogram

A frequently used approach for texture analysis is based on the statistical properties of the intensity histogram. A histogram is a statistical graph that represents the intensity distribution of the pixels of an image, i.e. the number of pixels for each luminous intensity. By convention, a histogram represents the intensity level on the X-axis, going from the darkest (on the left) to the lightest (on the right). Thus, the histogram of an image with 256 levels of grey is represented by a graph with 256 values on the X-axis and the number of image pixels on the Y-axis. The histogram is constructed by counting the number of pixels at each intensity value.
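As a minimal sketch of the counting procedure, the code below builds an intensity histogram and derives two first-order statistics from it. It assumes a grayscale image stored as a list of rows of integer intensities in the range 0 to `levels - 1`; the function names are illustrative, and plain Python is used only to keep the example self-contained.

```python
def intensity_histogram(image, levels=256):
    """Count how many pixels take each intensity value."""
    hist = [0] * levels
    for row in image:
        for value in row:
            hist[value] += 1
    return hist


def first_order_stats(hist):
    """Mean and variance of intensity, computed from the normalized
    histogram (no neighbor relationships are used)."""
    total = sum(hist)
    probs = [count / total for count in hist]
    mean = sum(level * p for level, p in enumerate(probs))
    variance = sum((level - mean) ** 2 * p for level, p in enumerate(probs))
    return mean, variance


# Tiny 4-level example: two pixels at level 0 and two at level 2.
hist = intensity_histogram([[0, 0], [2, 2]], levels=4)
mean, variance = first_order_stats(hist)
```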

D. Gray Level Co-occurrence Matrix

A gray level co-occurrence matrix (GLCM), or co-occurrence distribution, is a matrix defined over an image that records how often pairs of gray levels co-occur at a given offset. A GLCM is a matrix in which the number of rows and columns is equal to the number of gray levels, G, in the image. The use of such statistical features is one of the early methods proposed in the image processing literature.
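The definition can be sketched directly in code. The example below assumes a grayscale image stored as a list of rows with integer gray levels in the range 0 to `levels - 1`, and an offset given as a row step `dr` and column step `dc`; it builds a non-symmetric, unnormalized GLCM and derives the contrast feature from it. The function names and the choice of contrast as the example feature are illustrative and not taken from the article.

```python
def glcm(image, levels, dr=0, dc=1):
    """Count co-occurrences of gray level i at (r, c) with gray level j
    at (r + dr, c + dc). The result is a levels x levels matrix."""
    matrix = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                matrix[image[r][c]][image[r2][c2]] += 1
    return matrix


def contrast(matrix):
    """Second-order contrast: squared gray-level difference weighted by
    the normalized co-occurrence frequency."""
    total = sum(sum(row) for row in matrix)
    return sum((i - j) ** 2 * count / total
               for i, row in enumerate(matrix)
               for j, count in enumerate(row))


# Two gray levels, horizontal neighbor one pixel to the right.
example = [[0, 0, 1],
           [1, 1, 0]]
cooccurrence = glcm(example, levels=2)
```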

E. Artificial Intelligence

Through the use of computer-based image analysis, subjective differences between and across observers can be overcome, enabling an objective assessment of the parameters. The application of artificial intelligence in dermatology as a diagnostic tool is on the rise. In dermatology, computational tools are applied to expedite data processing and provide more accurate and dependable diagnoses. More people became aware of the issue in the 1980s due to a rise in malignant melanoma cases, which led to the development of improved diagnostic techniques for early lesion diagnosis. Wilhelm Stolz and colleagues in the Munich imaging group created a hand-held dermatoscope for cutaneous surface microscopy at about the same time. Later, computer-aided diagnosis was used to test automated melanoma diagnosis, replicating the dermatologist's judgment when viewing images of pigmented skin lesions obtained using spectrophotometry, dermoscopy, or photography.

Image segmentation: One of the most significant aspects of the automated analysis of pigmented skin lesions is precise border identification, or segmentation. Due to the low contrast with the surrounding skin, hazy borders, the presence of artifacts, and the uneven features that characterize lesion images, it is also thought to be the most challenging task.

Several machine learning techniques have been reported for lesion segmentation. They are based on the lesion's color information, texture, and brightness. They fall into one of several general categories: region-based, fuzzy c-means, edge-based, gradient vector flow snakes, and thresholding. The most popular approach, the thresholding method, works best when there is good contrast between the skin and the lesion.
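A thresholding segmentation can be sketched as follows. The article does not say how the threshold is chosen, so this example assumes Otsu's method, a common automatic choice that maximizes the between-class variance of the histogram. It also assumes an 8-bit grayscale image stored as a list of rows and a lesion that is darker than the surrounding skin; the function names are illustrative.

```python
def otsu_threshold(image, levels=256):
    """Return the gray level t that best separates pixels <= t from
    pixels > t by maximizing the between-class variance."""
    hist = [0] * levels
    for row in image:
        for value in row:
            hist[value] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_between = 0, -1.0
    weight_low, sum_low = 0, 0
    for t, count in enumerate(hist):
        weight_low += count
        sum_low += t * count
        weight_high = total - weight_low
        if weight_low == 0:
            continue          # no pixels in the lower class yet
        if weight_high == 0:
            break             # every pixel is already in the lower class
        mean_low = sum_low / weight_low
        mean_high = (sum_all - sum_low) / weight_high
        between = weight_low * weight_high * (mean_low - mean_high) ** 2
        if between > best_between:
            best_between, best_t = between, t
    return best_t


def segment_dark_lesion(image):
    """Label pixels at or below the Otsu threshold as lesion (1)."""
    t = otsu_threshold(image)
    return [[1 if value <= t else 0 for value in row] for row in image]


# Bright skin (200) with a darker lesion (50 to 60) in one corner.
skin = [[200, 200, 50],
        [200, 60, 50]]
mask = segment_dark_lesion(skin)
```

When the contrast between skin and lesion is poor, the two histogram modes overlap and a single global threshold of this kind becomes unreliable, which matches the limitation noted above.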


Conclusion

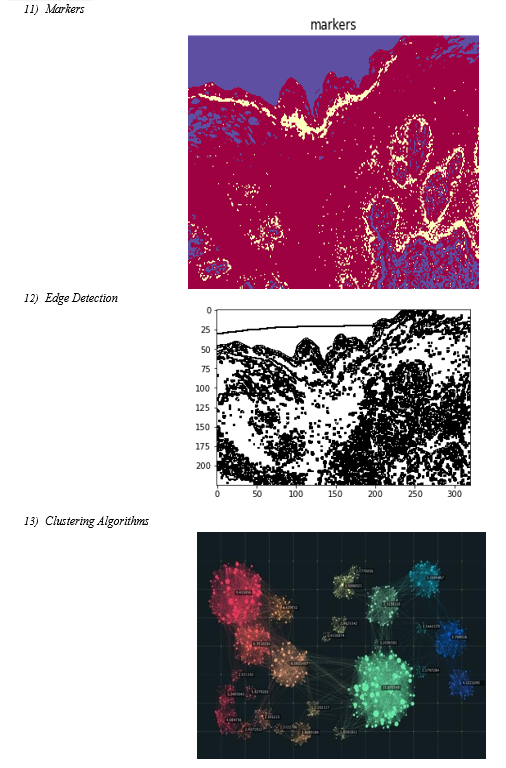

In this work, we apply image processing and deep neural network techniques to skin disease detection. So far, we have implemented the image processing part and clustering techniques for skin disease images.

References

[1] International Agency for Research on Disease. 900 World Fact Sheets. Available online: https://gco.iarc.fr/today/data/factsheets/populations/900-world-fact-sheets.pdf (accessed on 27 August 2020).

[2] Stathopoulos, P.; Smith, W.P. Analysis of Survival Rates Following Primary Surgery of 178 Consecutive Patients with Skin Disease in a Large District General Hospital. J. Maxillofac. Skin Surg. 2017, 16, 158–163. [CrossRef] [PubMed]

[3] Grafton-Clarke, C.; Chen, K.W.; Wilcock, J. Diagnosis and referral delays in primary care for skin squamous cell disease: A systematic review. Br. J. Gen. Pract. 2018, 69, e112–e126. [CrossRef] [PubMed]

[4] Seoane, J.; Alvarez-Novoa, P.; Gómez, I.; Takkouche, B.; Diz, P.; Warnakulasuriya, S.; Seoane-Romero, J.M.; Varela-Centelles, P. Early skin disease diagnosis: The Aarhus statement perspective. A systematic review and meta-analysis. Head Neck 2015, 38, E2182–E2189. [CrossRef] [PubMed]

[5] WHO. Skin Disease. Available online: https://www.who.int/disease/prevention/diagnosis-screening/skin-disease/en/ (accessed on 2 January 2021).

[6] Warnakulasuriya, S.; Greenspan, J.S. Textbook of Skin Disease: Prevention, Diagnosis and Management; Springer Nature: Basingstoke, UK, 2020.

[7] Scully, C.; Bagan, J.V.; Hopper, C.; Epstein, J.B. Skin disease: Current and future diagnostic techniques. Am. J. Dent. 2008, 21, 199–209.

[8] Wilder-Smith, P.; Lee, K.; Guo, S.; Zhang, J.; Osann, K.; Chen, Z.; Messadi, D. In Vivo diagnosis of skin dysplasia and malignancy using optical coherence tomography: Preliminary studies in 50 patients. Lasers Surg. Med. 2009, 41, 353–357. [CrossRef]

[9] Heidari, A.E.; Suresh, A.; Kuriakose, M.A.; Chen, Z.; Wilder-Smith, P.; Sunny, S.P.; James, B.L.; Lam, T.M.; Tran, A.V.; Yu, J.; et al. Optical coherence tomography as a skin disease screening adjunct in a low resource settings. IEEE J. Sel. Top. Quantum Electron. 2018, 25, 1–8. [CrossRef]

[10] Jeyaraj, P.R.; Nadar, E.R.S. Computer-assisted medical image classification for early diagnosis of skin disease employing deep learning algorithm. J. Disease Res. Clin. Oncol. 2019, 145, 829–837. [CrossRef]

[11] Song, B.; Sunny, S.; Uthoff, R.D.; Patrick, S.; Suresh, A.; Kolur, T.; Keerthi, G.; Anbarani, A.; Wilder-Smith, P.; Kuriakose, M.A.; et al. Automatic classification of dual-modality, smartphone-based skin dysplasia and malignancy images using deep learning. Biomed. Opt. Express 2018, 9, 5318–5329. [CrossRef]

[12] Uthoff, R.D.; Song, B.; Sunny, S.; Patrick, S.; Suresh, A.; Kolur, T.; Gurushanth, K.; Wooten, K.; Gupta, V.; Platek, M.E.; et al. Small form factor, flexible, dual-modality handheld probe for smartphone-based, point-of-care skin and oropharyngeal disease screening. J. Biomed. Opt. 2019, 24, 1–8. [CrossRef]

[13] Uthoff, R.D.; Song, B.; Sunny, S.; Patrick, S.; Suresh, A.; Kolur, T.; Keerthi, G.; Spires, O.; Anbarani, A.; Wilder-Smith, P.; et al. Point-of-care, smartphone-based, dual-modality, dual-view, skin disease screening device with neural network classification for low-resource communities. PLoS ONE 2018, 13, e0207493. [CrossRef]

[14] Uthoff, R.; Wilder-Smith, P.; Sunny, S.; Suresh, A.; Patrick, S.; Anbarani, A.; Song, B.; Birur, P.; Kuriakose, M.A.; Spires, O. Development of a dual-modality, dual-view smartphone-based imaging system for skin disease detection. Des. Qual. Biomed. Technol. XI 2018, 10486, 104860V. [CrossRef]

[15] Rahman, M.S.; Ingole, N.; Roblyer, D.; Stepanek, V.; Richards-Kortum, R.; Gillenwater, A.; Shastri, S.; Chaturvedi, P. Evaluation of a low-cost, portable imaging system for early detection of skin disease. Head Neck Oncol. 2010, 2, 10. [CrossRef] [PubMed]

[16] Roblyer, D.; Kurachi, C.; Stepanek, V.; Williams, M.D.; El-Naggar, A.K.; Lee, J.J.; Gillenwater, A.M.; Richards-Kortum, R. Objective detection and delineation of skin neoplasia using autofluorescence imaging. Disease Prev. Res. 2009, 2, 423–431. [CrossRef]

Copyright

Copyright © 2024 Minal Kharat, Rutuja Nalawade, Mrunali Patil, Trupti Sarang, Sanika Shinde, Jyoti Sarawade. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET60207

Publish Date : 2024-04-12

ISSN : 2321-9653

Publisher Name : IJRASET
