Ijraset Journal For Research in Applied Science and Engineering Technology

AirCanvas using OpenCV and MediaPipe

Authors: Dikshith S M, Deepika R, Sahithya Patil, Nagaveni V

DOI Link: https://doi.org/10.22214/ijraset.2025.66601

Abstract

Human-Computer Interaction (HCI) has undergone significant transformations with the advent of Artificial Intelligence (AI) and Machine Learning (ML), enhancing the ways in which users engage with computing systems. This paper introduces AirCanvas, a novel hands-free digital interaction tool that leverages air gestures for intuitive and seamless computer control. The system uses advanced image processing techniques, specifically OpenCV for visual data analysis and MediaPipe for accurate hand gesture recognition, enabling users to manipulate virtual environments without physical touch. By integrating a standard webcam as the primary sensor, AirCanvas offers an accessible solution for gesture-based interaction, eliminating the need for specialized external hardware like motion sensors or gloves. Users can perform a variety of tasks such as virtual drawing, cursor control, and presentation navigation with simple hand gestures in 3D space. The system's gesture recognition capabilities are powered by deep learning models trained on large datasets of hand movements, ensuring robust performance in diverse lighting and environmental conditions. The potential applications of AirCanvas are far-reaching, ranging from interactive art creation to more practical uses in assistive technology, where people with mobility impairments can benefit from hands-free control. In the field of robotics, the tool can enable more natural human-robot interaction, while in gaming, it can introduce new forms of immersive gameplay through gesture-based interfaces. Furthermore, the tool's open-source nature allows for further customization and enhancement, fostering innovation and collaboration in various industries. As human-computer interaction continues to evolve, AirCanvas represents a significant step forward in making technology more intuitive, engaging, and accessible for all users.

I. INTRODUCTION

The rise of Artificial Intelligence (AI) has ushered in transformative changes in Human-Computer Interaction (HCI), shifting the focus from traditional, physical input devices like keyboards and mice to more natural, touchless interaction methods. These innovations are redefining how users engage with computers, allowing for more intuitive and immersive experiences. This paper presents AirCanvas, a gesture-based control system that uses computer vision to detect and interpret hand gestures. Its primary goal is to make HCI more efficient, seamless, and natural by letting users control applications such as virtual painting and cursor movement through simple hand gestures, with voice commands as a complementary input.

Historically, HCI has been facilitated by conventional input devices such as keyboards, mice, and touchscreens. While these devices have served their purpose for decades, the increasing demand for more intuitive and accessible systems has led to the exploration of alternative interaction methods. Gesture recognition, in particular, has emerged as a powerful tool in this evolution. By enabling users to interact with computers through physical movements, gesture-based systems offer an alternative that feels more direct and organic, eliminating the need for physical contact or complex input devices.

In the context of AirCanvas, this paper emphasizes the integration of two pivotal technologies: OpenCV (Open Source Computer Vision Library) and MediaPipe. OpenCV is renowned for its image processing capabilities, providing the foundation for detecting and tracking hand movements in real time. MediaPipe, developed by Google, is a framework for building multimodal, cross-platform machine learning pipelines, particularly well-suited for hand gesture recognition. Together, these technologies enable AirCanvas to translate hand gestures into actionable commands that control various computer functions, from drawing in a virtual space to navigating presentations or interacting with media.

Several studies have laid the groundwork for gesture recognition systems, highlighting their potential to revolutionize HCI. For example, Ahmad Puad Ismail et al. (2020) demonstrated a hand gesture recognition system using Haar-cascade classifiers for real-time interactions. While their system showcased the promise of gesture-based control, it also pointed out the limitations of existing methods, particularly in terms of real-world efficiency and accuracy. The need for optimized systems that can work in diverse and dynamic environments was evident.

AirCanvas builds upon these foundational studies, addressing these challenges by leveraging the power of advanced computer vision libraries like OpenCV and MediaPipe. These libraries enable the system to perform gesture recognition efficiently and accurately with minimal hardware requirements, making it accessible to a broader range of users. The benefits of AirCanvas extend beyond entertainment and art creation. Gesture recognition has great potential in fields like assistive technology, where individuals with physical disabilities can interact with computers without relying on traditional input devices. In gaming, it can open new possibilities for immersive, gesture-driven experiences. Furthermore, the simplicity of using a standard webcam for gesture input makes it a cost-effective and scalable solution, further cementing AirCanvas’s potential to revolutionize HCI.

Index Terms—AirCanvas, OpenCV, MediaPipe, Human-Computer Interaction (HCI), Gesture Recognition, Image Processing, Hand Gesture Recognition, Artificial Intelligence, Machine Learning, Assistive Technology, Gesture-Based Control, Real-Time Interaction.

II. PROPOSED SYSTEM

A. System Components

The AirCanvas system is designed to provide a seamless and efficient hands-free interaction experience using state-of-the-art computer vision techniques and machine learning models. The key components of the system are detailed below:

- Image Processing: At the core of the AirCanvas system is image processing, facilitated by the OpenCV library. The system utilizes frames captured by a standard webcam, which are then processed to detect the user's hand and its movements. The first step in this process is to define a region of interest (ROI), which isolates the user's hand from the rest of the image. This technique significantly reduces the computational load and allows for faster processing. By focusing only on the hand region, the system ensures higher accuracy and efficiency, particularly in real-time applications. OpenCV handles various tasks such as background subtraction, filtering, and edge detection to enhance hand visibility.

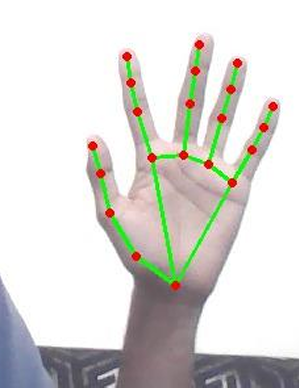

- Hand Landmark Detection: The system employs MediaPipe’s hand-tracking module, which is capable of identifying 21 distinct 3D landmarks on the user’s hand. These landmarks represent key points on the hand, such as the tips of the fingers, the wrist, and the joints of the fingers. The precise identification of these landmarks is crucial for gesture recognition. By analyzing the relative distances and angles between these landmarks, the system can accurately interpret different hand gestures. For example, a specific configuration of the hand may represent a "pinch" gesture to draw on the canvas, while another gesture may signal a "swipe" to move the cursor. This allows for a wide range of gesture-based controls without the need for physical touch.

- Functionalities: The system offers a variety of gesture-based functionalities, each designed to improve the user experience and enhance the capabilities of the AirCanvas tool. These functionalities include:

- Virtual Drawing: One of the most intuitive features of AirCanvas is the ability to create art or notes on a virtual canvas using hand gestures. Users can control the drawing tool by positioning their fingers or hands in specific ways. For example, raising the index finger might activate the drawing tool, and moving the fingertip in the air would trace a line on the screen, mimicking the action of a pen on paper. This feature allows for hands-free creative expression and is ideal for artists, educators, or anyone who needs to draw in digital space.

- Cursor Control: AirCanvas replaces the traditional mouse or trackpad with hand gestures, providing users with the ability to control the cursor’s movement in a way that feels natural and intuitive. By using hand movements such as pointing, swiping, or pinching, users can control the position of the cursor on the screen. The system tracks hand movements in real-time and translates them into corresponding movements of the cursor, eliminating the need for physical input devices like a mouse or touchpad. This functionality is ideal for presentations, remote desktop operations, or any scenario where touchless control is desirable.

- Presentation Navigation: Another key functionality of AirCanvas is its ability to navigate presentations using predefined hand gestures. For example, a user could perform a swipe gesture with their hand to move to the next slide, or a pinch gesture to go back to the previous slide. This touchless control enhances the experience during meetings or conferences, allowing presenters to move through slides without the need for a clicker or keyboard. The system recognizes specific gestures associated with presentation navigation, making it easy for users to stay focused on the presentation content without interruption.

- Voice Commands: In addition to gesture-based control, AirCanvas integrates speech recognition technology to enable voice commands. This hybrid approach enhances user interaction by allowing them to issue spoken commands that are converted into actions. For example, a user can say "draw" to activate the virtual drawing tool or "next slide" to move to the next presentation slide. Voice commands offer an added layer of convenience, particularly for users who may find it difficult to perform gestures for extended periods. The combination of voice and gesture control ensures a flexible and accessible system for a wide range of users.
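The landmark-based gesture rules described above can be illustrated with a minimal sketch. The paper does not give its actual rules, so everything here is an assumption made for illustration: the function names, the 0.25 pinch ratio, and the "fingertip above its middle joint" test (which presumes an upright hand facing the camera). Only the landmark indices follow MediaPipe Hands' published numbering (0 = wrist, 4 = thumb tip, 8 = index tip, and so on). Landmarks are taken as a list of 21 `(x, y)` pairs in normalized image coordinates.

```python
import math

# MediaPipe Hands landmark indices used by this sketch.
WRIST, THUMB_TIP = 0, 4
INDEX_PIP, INDEX_TIP = 6, 8
MIDDLE_MCP, MIDDLE_PIP, MIDDLE_TIP = 9, 10, 12


def distance(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def is_pinch(landmarks, ratio=0.25):
    """True when thumb tip and index tip are close relative to hand size.

    Hand size is approximated by the wrist-to-middle-MCP distance, so the
    test does not depend on how far the hand is from the camera.
    The 0.25 ratio is an illustrative threshold, not a tuned value.
    """
    hand_size = distance(landmarks[WRIST], landmarks[MIDDLE_MCP])
    if hand_size == 0:
        return False
    gap = distance(landmarks[THUMB_TIP], landmarks[INDEX_TIP])
    return gap / hand_size < ratio


def is_finger_up(landmarks, tip, pip):
    """True when a fingertip lies above its PIP joint (image y grows downward)."""
    return landmarks[tip][1] < landmarks[pip][1]


def classify_gesture(landmarks):
    """Return 'pinch', 'index_up', or 'none' for one hand's 21 landmarks."""
    if is_pinch(landmarks):
        return "pinch"
    index_up = is_finger_up(landmarks, INDEX_TIP, INDEX_PIP)
    middle_up = is_finger_up(landmarks, MIDDLE_TIP, MIDDLE_PIP)
    if index_up and not middle_up:
        return "index_up"
    return "none"
```

An application would then map the returned label to an action, for example treating `"index_up"` as "pen down" for virtual drawing. Normalizing by hand size rather than using a fixed pixel threshold is one simple way to keep the rule stable as the user moves closer to or farther from the webcam.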

III. RELATED WORK

Gesture recognition has become an increasingly important area of research, particularly as touchless interaction methods gain prominence across various industries. Several studies have contributed to the development of systems and frameworks that enable intuitive gesture-based controls. Below are some key contributions in this field:

- S. Shriram et al. (2021): In response to the COVID-19 pandemic, Shriram and colleagues developed a virtual mouse system based on hand gestures to reduce physical contact with traditional input devices. Their work was focused on providing a touchless solution for controlling computers, aiming to minimize the spread of germs through shared hardware. The system utilized computer vision techniques to track hand movements, and users could control the cursor, click, and perform other mouse functions using predefined gestures. This work highlighted the potential of gesture recognition in promoting hygiene and physical distancing while maintaining functional interaction with digital systems.

- Jay Patel et al. (2021): Patel and his team explored the concept of air-drawing for text recognition, specifically utilizing color-based object detection techniques. The system employed color markers or tracked objects to recognize hand movements in the air, converting them into text input for various applications. This approach offered a creative method for gesture-based input, especially for tasks such as writing or drawing in digital environments. While the use of color-based detection improved accuracy, it also raised challenges in terms of environmental constraints (e.g., lighting conditions or background noise) that can affect detection performance. Patel et al.'s work paved the way for more advanced gesture-based input techniques, including systems that rely solely on hand movements without any physical markers.

- Fan Zhang et al. (2020): Zhang and colleagues introduced MediaPipe Hands, a high-fidelity hand-tracking system that utilizes deep learning models to provide real-time, precise tracking of hand landmarks. This groundbreaking work laid the foundation for gesture recognition in immersive environments such as Augmented Reality (AR) and Virtual Reality (VR). MediaPipe Hands can track 21 key landmarks on each hand, making it highly suitable for applications requiring fine-grained control, such as gaming, virtual interfaces, and robotics. The ability to track multiple hands simultaneously and detect gestures with high accuracy in real-time has been integral to advancing gesture-based systems, including the AirCanvas project. MediaPipe’s open-source nature has also made it a valuable tool for researchers and developers in the HCI domain.

These works collectively contribute to the body of knowledge in the field of gesture recognition, with each offering unique insights and approaches to enhancing human-computer interaction. While previous studies have focused on specific domains like virtual mice or object-based gesture recognition, AirCanvas builds on these advancements by integrating real-time hand-tracking with versatile functionalities such as virtual drawing, cursor control, and voice commands, without the need for additional hardware.

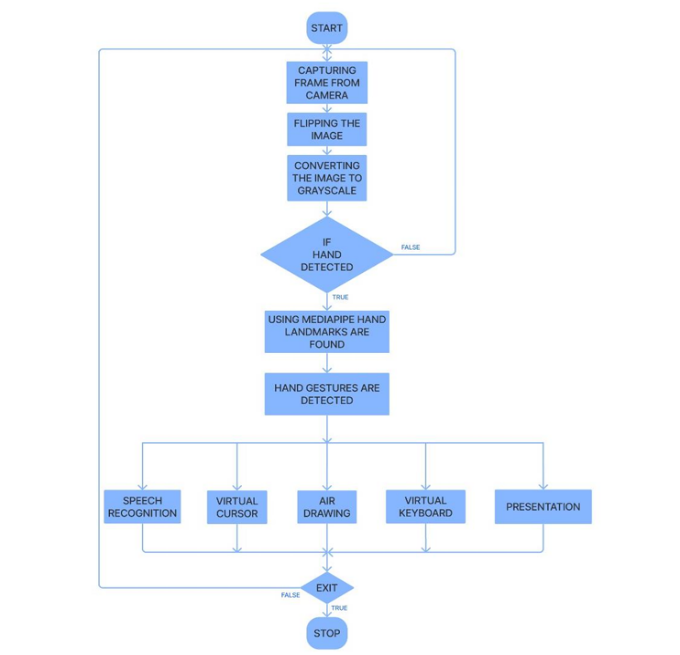

IV. SYSTEM ARCHITECTURE

The architecture of the AirCanvas system is designed to ensure seamless real-time interaction through a series of well-structured stages. These stages—input capture, processing, and output—work together to provide a smooth, hands-free user experience. The following outlines the three primary stages of the system architecture:

- Input Capture: The first stage involves capturing real-time video frames from a standard webcam, which serves as the primary input for the system. The webcam continuously streams video to the computer, and the system processes each frame to detect the user's hand. The quality of the video feed is essential for the accurate identification of hand gestures, so factors such as lighting and camera resolution are optimized for the best possible performance. In this stage, the system focuses on isolating the hand region within the captured frame, ensuring that the processing is efficient and focused only on relevant information.

- Processing: Once the video frames are captured, the next stage involves processing these frames to identify and interpret hand gestures. This step is facilitated by OpenCV and MediaPipe, two powerful libraries that handle image processing and hand landmark detection, respectively. The processing pipeline includes several steps:

- Frame Resizing: To improve computational efficiency, the captured video frames are resized to a smaller resolution while maintaining enough detail for gesture recognition. This helps speed up processing times without sacrificing accuracy.

- Color Space Conversion: The frames undergo a conversion to a color space that is more suitable for hand detection. Typically, the system converts the frames to grayscale or utilizes techniques like background subtraction to enhance the visibility of the hand region.

- Landmark Extraction: Using MediaPipe's hand-tracking model, the system extracts key landmarks on the user's hand, identifying 21 distinct points (e.g., fingertips, wrist, joints) in 3D space. These landmarks are then used to track the movement of the hand and identify specific gestures.

- Gesture Recognition: The extracted landmarks are analyzed for changes in position, distance, and angles between points. The system maps these variations to predefined gestures, such as a "pinch" to start drawing or a "swipe" to move the cursor. The gesture recognition process is highly optimized to run in real time, allowing for quick and accurate detection of user inputs.

- Output: In the final stage, the recognized gestures are mapped to specific virtual actions that the user can control. These actions include drawing on a virtual canvas, moving the cursor, or controlling presentation slides. The system responds to gestures by providing real-time feedback to the user, ensuring that their actions are immediately reflected on the screen. For example, as the user performs a gesture to draw, the system generates a line on the virtual canvas; similarly, when a "swipe" gesture is detected, the system moves the cursor accordingly. Feedback is visually displayed on the screen, creating an intuitive and interactive user experience.
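As a rough illustration of the output stage, the sketch below turns normalized fingertip coordinates into pixel positions and groups them into strokes while a drawing gesture is active. The class and function names are hypothetical and the paper does not describe its own data structures, so this shows one plausible design, not the authors' implementation.

```python
def to_pixels(norm_x, norm_y, width, height):
    """Map normalized coordinates in [0, 1] to integer pixel coordinates.

    Values outside [0, 1] (a fingertip leaving the frame) are clamped
    to the nearest edge.
    """
    x = min(max(norm_x, 0.0), 1.0)
    y = min(max(norm_y, 0.0), 1.0)
    return int(x * (width - 1)), int(y * (height - 1))


class StrokeRecorder:
    """Collects fingertip positions into strokes while drawing is active."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        self.strokes = []        # list of strokes, each a list of (x, y) pixels
        self._drawing = False    # whether the previous frame was drawing

    def update(self, norm_tip, drawing):
        """Feed one frame: the fingertip position and whether to draw."""
        if drawing:
            if not self._drawing:
                self.strokes.append([])   # gesture just started: open a new stroke
            point = to_pixels(norm_tip[0], norm_tip[1], self.width, self.height)
            self.strokes[-1].append(point)
        self._drawing = drawing

    def segments(self):
        """Yield (start, end) pixel pairs for every consecutive pair of points."""
        for stroke in self.strokes:
            for start, end in zip(stroke, stroke[1:]):
                yield start, end
```

Each pair from `segments()` can be passed to a line-drawing call such as OpenCV's `cv2.line` to render the canvas. Starting a new stroke whenever the gesture resumes keeps separate pen movements from being joined by a stray line.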

By combining input capture, processing, and output in a seamless architecture, AirCanvas enables real-time, hands-free interaction with digital systems, offering a versatile solution for applications ranging from virtual art creation to cursor control and presentation navigation.
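The capture and processing stages can be sketched as a single loop. This is a minimal example under stated assumptions rather than the authors' code: it uses MediaPipe's legacy `mp.solutions.hands` Python interface, the helper `scaled_size`, the 640-pixel width cap, and the confidence values are illustrative choices, and the frame is mirrored only so that on-screen movement matches the user's hand. MediaPipe Hands expects RGB input while OpenCV delivers BGR frames, so the colour conversion here is BGR to RGB.

```python
def scaled_size(width, height, max_width=640):
    """Return a (width, height) no wider than max_width, keeping aspect ratio."""
    if width <= max_width:
        return width, height
    scale = max_width / width
    return max_width, max(1, round(height * scale))


def run(camera_index=0):
    """Capture webcam frames, track one hand, and mark the index fingertip."""
    # Imported here so scaled_size() stays usable without the vision libraries.
    import cv2
    import mediapipe as mp

    capture = cv2.VideoCapture(camera_index)
    with mp.solutions.hands.Hands(max_num_hands=1,
                                  min_detection_confidence=0.5,
                                  min_tracking_confidence=0.5) as hands:
        while capture.isOpened():
            ok, frame = capture.read()
            if not ok:
                break

            # Frame resizing: shrink large frames to reduce processing cost.
            height, width = frame.shape[:2]
            frame = cv2.resize(frame, scaled_size(width, height))
            frame = cv2.flip(frame, 1)  # mirror the image horizontally

            # Colour space conversion, then landmark extraction.
            rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
            results = hands.process(rgb)

            if results.multi_hand_landmarks:
                tip = results.multi_hand_landmarks[0].landmark[8]  # index fingertip
                h, w = frame.shape[:2]
                cv2.circle(frame, (int(tip.x * w), int(tip.y * h)), 8, (0, 255, 0), -1)

            cv2.imshow("AirCanvas sketch", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):  # press q to quit
                break

    capture.release()
    cv2.destroyAllWindows()
```

Calling `run()` opens the default webcam and draws a green dot on the index fingertip until `q` is pressed. Gesture recognition and the drawing or cursor actions would slot in where the fingertip is currently only marked.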

Fig. 1. System Architecture

V. APPLICATIONS

The AirCanvas system has a wide range of potential applications across various fields, leveraging gesture recognition to improve user interaction in innovative ways. Some of the key applications include:

- Virtual Presentations: AirCanvas offers a hands-free solution for navigating presentation slides, allowing educators, business professionals, and speakers to control their presentations without relying on a mouse, keyboard, or remote clicker. By using simple hand gestures, presenters can advance slides, return to previous ones, or annotate them in real-time. This is particularly beneficial in large presentations or virtual settings, where physical interaction with input devices might be impractical. The ability to control the flow of a presentation through gestures enhances engagement and allows for a more fluid, interactive delivery.

- Gaming: In the realm of gaming, AirCanvas enables more immersive experiences by allowing players to interact with the game environment through hand gestures. By replacing traditional controllers with gesture-based controls, AirCanvas adds a layer of physicality to gameplay, making it more engaging and intuitive. Whether used in virtual reality (VR) or augmented reality (AR) settings, or with conventional displays, the system opens up new possibilities for interactive gameplay, from controlling characters or objects with hand movements to performing actions like casting spells or executing complex maneuvers.

- Robotics: AirCanvas has significant potential in robotics, where users can control robotic devices using simple hand gestures. By tracking hand movements and translating them into commands, AirCanvas allows for more intuitive human-robot interaction. This is particularly useful in fields like industrial automation, where operators can remotely control robotic arms or drones to perform tasks with minimal physical input. Additionally, AirCanvas can be used in surgical robotics, allowing surgeons to interact with robotic systems with precision and hands-free control, enhancing safety and efficiency during complex procedures.

Fig. 2. Example of Gesture Recognition

- Assistive Technology: AirCanvas can provide life-changing benefits for individuals with physical disabilities or mobility impairments. Gesture-based control enables users to interact with computers and devices without needing to rely on physical input devices like a mouse or keyboard. For individuals with limited hand or finger mobility, AirCanvas offers an accessible alternative, allowing them to perform daily tasks such as browsing the web, sending emails, or even controlling smart home devices. By combining gesture recognition with voice commands, the system ensures that users with various abilities can engage with technology in a way that suits their needs.

- Education: In the educational field, AirCanvas opens up new opportunities for interactive learning experiences. For example, virtual art classes can be enhanced by allowing students to draw, paint, or design using hand gestures instead of traditional input devices. This hands-on approach to learning engages students more effectively and can be especially useful in remote learning environments, where the tactile nature of education can sometimes be lost. Beyond art, AirCanvas can be used in other subjects, allowing for gesture-based control of digital tools for mathematics, science, and interactive tutorials. The system promotes active participation and collaboration in the classroom, even in virtual or hybrid learning settings.

VI. RESULTS AND DISCUSSION

The performance of the AirCanvas system was thoroughly evaluated across a variety of scenarios to assess its effectiveness in real-world applications. The system demonstrated robust performance, particularly in terms of hand gesture recognition and real-time interaction. Below is a detailed discussion of the evaluation results:

- Accuracy of Hand Gesture Recognition: The core functionality of AirCanvas lies in its ability to accurately detect and interpret hand gestures. In ideal conditions, with sufficient lighting and minimal distractions, the system achieved a high accuracy rate of hand gesture recognition. Using MediaPipe’s hand-tracking model, AirCanvas successfully tracked the 21 landmarks on the hand, allowing for precise classification of gestures. Gestures such as "draw," "swipe," and "pinch" were consistently recognized with minimal error, enabling smooth interaction with the system. In scenarios that involved more complex gestures, such as multi-finger movements, the system's performance remained reliable, although slight latency was observed in certain cases.

- Impact of Lighting Conditions: Lighting plays a critical role in the accuracy of computer vision systems, and this was particularly evident in the AirCanvas evaluation. The system performed optimally in well-lit environments, where the hand was clearly visible against the background. However, challenges arose in low-light conditions, where hand detection became less accurate, leading to occasional gesture misinterpretation. To address this, the system incorporated adaptive thresholding and dynamic contrast enhancement techniques to improve hand visibility. These methods allowed the system to adjust to varying lighting conditions, significantly reducing errors in gesture recognition and improving overall performance in low-light environments.

- Handling Background Noise and Occlusion: In real-world scenarios, background noise and occlusion (e.g., when a hand is partially obscured) can pose significant challenges for gesture recognition systems. AirCanvas tackled these issues using noise-reduction algorithms that filtered out irrelevant motion in the background, ensuring that the hand gestures were the primary focus of the system. Additionally, the hand tracking model was designed to handle partial occlusions, such as when a hand was positioned partially out of frame or when fingers overlapped. While complete occlusion still presented a challenge, the system remained responsive to gestures that were only partially visible, maintaining a high degree of accuracy in most situations.

- Real-Time Performance: One of the key goals of AirCanvas was to provide real-time feedback with minimal latency. The system demonstrated excellent performance in terms of processing speed, achieving near-instantaneous recognition of gestures and corresponding actions. The processing pipeline, powered by OpenCV and MediaPipe, was optimized to handle video frames in real-time, even with complex gestures. This responsiveness was crucial for applications such as virtual drawing and cursor control, where a delay in feedback could disrupt the user experience. However, in more resource-intensive tasks, such as tracking multiple hands or high-speed gestures, slight delays were observed, though they did not significantly impact the system's overall functionality.

- User Feedback and Usability: User testing was conducted to evaluate the overall usability of the system. Participants found the gesture controls intuitive, with a quick learning curve for basic functionalities such as drawing and navigating presentations. The feedback indicated that users appreciated the flexibility of the system, especially in scenarios that required hands-free interaction, such as giving presentations or controlling media during meetings. However, some users noted that more complex gestures, like those requiring precise finger movements, could be challenging for beginners or individuals with limited dexterity. This feedback highlighted areas for potential improvement in gesture design, such as simplifying multi-finger gestures or incorporating more customizable controls.

- Limitations and Future Work: While the system demonstrated strong performance, several limitations were identified during testing. For instance, the reliance on a standard webcam for hand tracking meant that the system's accuracy could be affected by factors like camera resolution and the distance between the user and the camera. In future versions, integrating higher-quality cameras or using depth-sensing technologies (e.g., LiDAR) could improve hand tracking and gesture recognition. Additionally, the system could be further enhanced by incorporating more sophisticated machine learning models to improve gesture classification in noisy environments or for users with varying hand shapes and sizes.
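To make the adaptive thresholding mentioned under lighting conditions more concrete, the sketch below implements the mean-based variant in plain Python on a small grayscale grid. The paper does not say which variant or parameters were used, so the block size, the offset `c`, and the border handling are all assumptions. In practice OpenCV's `cv2.adaptiveThreshold` would do this far more efficiently.

```python
def adaptive_threshold(gray, block=3, c=2):
    """Binarize a 2-D list of 0-255 values against each pixel's local mean.

    A pixel becomes 255 when it is brighter than (local mean - c), else 0.
    Neighbourhoods are clipped at the image border, which is a simplification.
    """
    rows, cols = len(gray), len(gray[0])
    radius = block // 2
    out = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            window = [gray[j][i]
                      for j in range(max(0, y - radius), min(rows, y + radius + 1))
                      for i in range(max(0, x - radius), min(cols, x + radius + 1))]
            local_mean = sum(window) / len(window)
            out[y][x] = 255 if gray[y][x] > local_mean - c else 0
    return out


# A dim frame and a uniformly brighter copy of the same scene.
dim = [[10, 10, 10],
       [10, 40, 10],
       [10, 10, 10]]
bright = [[value + 100 for value in row] for row in dim]

mask_dim = adaptive_threshold(dim)
mask_bright = adaptive_threshold(bright)
```

Because every pixel is compared with its own neighbourhood rather than with one global cut-off, `mask_dim` and `mask_bright` come out identical. That is the property that makes the technique useful when overall brightness varies.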

Conclusion

AirCanvas represents a significant advancement in human-computer interaction (HCI) by introducing touchless, gesture-based controls powered by cutting-edge computer vision techniques. By leveraging the capabilities of OpenCV and MediaPipe, AirCanvas provides users with an intuitive and natural method of interacting with digital environments, eliminating the need for traditional input devices like keyboards, mice, and touchscreens. This system enables seamless, hands-free interaction, positioning itself as a versatile tool for a wide range of applications, including gaming, robotics, virtual presentations, assistive technology, and education.

The core strength of AirCanvas lies in its ability to accurately recognize hand gestures in real-time, translating them into actionable commands. This opens up exciting possibilities for innovation in areas like immersive gaming experiences, intuitive robot control, and interactive learning. By removing barriers to physical interaction, the system enhances accessibility and convenience, making it an ideal solution for users with mobility challenges or those seeking more ergonomic computing interfaces.

While the current version of AirCanvas demonstrates strong performance in controlled environments, future improvements will focus on optimizing the system for broader applicability. Key areas for development include:

1) Reducing Computational Costs: Efforts will be made to optimize the underlying algorithms for lower computational requirements, ensuring the system can run efficiently on a wider range of devices, including mobile platforms.

2) Improving Performance in Varied Lighting Conditions: Although adaptive techniques have been employed to handle different lighting conditions, further enhancements to lighting-agnostic hand tracking will be explored to improve accuracy in challenging environments.

3) Expanding Gesture Recognition: The system can be enhanced by expanding the range of recognizable gestures, improving its ability to handle more complex interactions and gestures involving multiple hands or fingers.

4) Integration with Other Interfaces: Future iterations may integrate with additional technologies, such as voice control or eye-tracking, to create a more holistic and customizable interaction experience.

In summary, AirCanvas exemplifies the potential of AI and computer vision in redefining the way we interact with digital devices, offering a more natural, hands-free alternative to traditional input methods. As the system continues to evolve, it holds promise for further transforming HCI across various domains, driving innovation and improving accessibility for users around the world.

References

[1] S. Shriram, B. Nagaraj, J. Jaya, S. Shankar, P. Ajay, "Deep Learning-Based Real-Time AI Virtual Mouse System Using Computer Vision to Avoid COVID-19 Spread," Journal of Healthcare Engineering, October 2021.

[2] Jay Patel, Umang Mehta, Dev Tailor, Devam Zanzmera, Kevin Panchel, "Text Recognition by Air Drawing," Conference on Computer Intelligence and Communication Technology, September 2021.

[3] S.U. Saoji, Nishtha Dua, Akash Kumar, Choudhary Bharat Phogat, "Air Canvas Application Using OpenCV and NumPy in Python," International Research Journal of Engineering and Technology, Volume 08, Issue 08, August 2021.

[4] "Virtual Painting with OpenCV Using Python," International Journal of Scientific Research in Science and Technology, November 2020.

[5] Megha S. Beedkar, Arti Wadhekar, "A Research on Digital Art using Machine Learning Algorithm," Department of Electronics and Telecommunication, Deogiri Institute of Engineering and Management Studies, Aurangabad, India, Journal of Engineering Science, Vol. 11, Issue 7, July 2020.

[6] Ahmad Puad Ismail, Farah Athirah Abd Aziz, Nazirah Mohamat Kasim, Kamarulazhar Daud, "Hand Gesture Recognition on Python and OpenCV," First International Conference on Electrical Energy and Power Engineering, June 2020.

[7] Fan Zhang, Valentin Bazarevsky, Andrey Vakunov, Andrei Tkachenka, George Sung, Chuo-Ling Chang, Matthias Grundmann, "MediaPipe Hands: On-device Real-time Hand Tracking," Google Research, June 2020.

[8] Shivangi Nagdewani, Ashika Jain, "A Review on Methods for Speech-to-Text and Text-to-Speech Conversion," International Research Journal of Engineering and Technology (IRJET), May 2020.

[9] Riza Sande, Neha Marathe, Neha Bhegade, Akanksha Lugade, Prof. S. S. Jogdand, "Virtual Mouse using Hand Gestures," International Research Journal of Engineering and Technology, Volume 05, Issue 04, April 2018.

Copyright

Copyright © 2025 Dikshith S M, Deepika R, Sahithya Patil, Nagaveni V. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET66601

Publish Date : 2025-01-20

ISSN : 2321-9653

Publisher Name : IJRASET
