Ijraset Journal For Research in Applied Science and Engineering Technology


An Affordable Optical Sensor for Safe Touchless Palm Print Biometry Detection

Authors: Haripal Reddy Kota, Vippalapally Balaji, Merugu Naveen, K.Vijay Kumar, Eerla Prashanth Kumar, Pallam Venkatapathi

DOI Link: https://doi.org/10.22214/ijraset.2024.65777


Abstract

Palm print direction patterns have been used extensively and successfully in palm print recognition. To obtain genuine line responses from the palm print image, most current direction-based techniques use pre-defined filters, which require extensive prior knowledge and typically overlook important direction information. Furthermore, line responses influenced by noise reduce recognition accuracy. Another challenge for improving recognition performance is how to extract discriminative features that make palm prints easier to distinguish. To address these issues, this work proposes learning complete and discriminative direction patterns. From the palm print image, we first extract complete and salient local direction patterns, consisting of a salient convolution difference feature (SCDF) and a complete local direction feature (CLDF). Two learning models are then proposed: one learns discriminative and sparse directions from the CLDF, and the other captures the underlying structure of the SCDFs of the training samples. Finally, the projected CLDF and the projected SCDF are concatenated to form the complete and discriminative direction feature for palm print recognition. Experimental results on seven palm print databases and three noisy datasets demonstrate the effectiveness of the proposed strategy.


I. INTRODUCTION

Biometric recognition, which uses biometric traits for security authentication, has become a key technique. Several biometric identification technologies, such as fingerprint, palmprint, face, and ear recognition, have been highly successful and are widely used in real-world personal authentication. In recent years, the usefulness of palmprints for security verification has been thoroughly demonstrated. Palmprints contain distinctive and stable features such as principal and local lines, minutiae, and texture patterns. In addition, a near-infrared (NIR) capture device operating at a suitable wavelength can acquire further characteristics of the living palm, such as veins, skin, and vessels.

Consequently, numerous palmprint identification techniques have achieved considerable and reliable performance. Before feature extraction, the raw palmprint image is typically preprocessed to extract a region of interest (ROI). ROI extraction locates a sub-region with discriminative information near the center of the palm, which reduces the influence of the background, hand rotation, and the fingers. The aligned ROI can then be used directly for feature extraction. Over the last few decades, numerous palmprint feature representation techniques have been proposed in the literature.
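As a rough illustration of the final cropping step of ROI extraction, the sketch below cuts a fixed-size square around the palm center. It assumes that the center has already been located and that the hand has already been rotated into a canonical orientation; neither step is part of the sketch. `crop_roi` is a hypothetical helper, not a published ROI algorithm. The default size of 128 matches the 128 × 128 ROIs used for several databases in this paper.

```python
import numpy as np

def crop_roi(image: np.ndarray, center: tuple, size: int = 128) -> np.ndarray:
    """Crop a size x size square centred on `center` = (row, col).

    Sketch only: assumes the palm centre is already located and the hand
    already rotated to a canonical orientation; neither step is done here.
    """
    row, col = center
    top, left = row - size // 2, col - size // 2
    # Refuse crops that would run past the image border.
    if top < 0 or left < 0 or top + size > image.shape[0] or left + size > image.shape[1]:
        raise ValueError("ROI falls outside the image")
    return image[top:top + size, left:left + size]
```

For a 300 × 300 image centred at (150, 150), the ROI starts at pixel (86, 86).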

For instance, Jing et al. selected discrete cosine transform (DCT) frequency bands to extract linear discriminative features for palmprint recognition. Huang et al. successfully extracted principal lines from palmprint images despite severe wrinkle noise. Rida et al. proposed a palmprint feature representation based on sparse representation. For local orientation extraction, Jia et al. proposed the histogram of oriented lines descriptor, which is robust to small variations and illumination changes. Zhao et al. designed a convolutional neural network (CNN) for palmprint representation, and a CNN-Stack was built for hyperspectral palmprint recognition.
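The DCT-based idea can be made concrete with a minimal sketch. It takes the orthonormal 2D DCT-II of an ROI and keeps a low-frequency block as the feature vector. Jing et al. select frequency bands with a discriminant criterion. This sketch instead keeps a fixed `band` × `band` block, and `dct_matrix` and `dct_lowfreq_feature` are hypothetical names.

```python
import numpy as np

def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis: row k holds the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] /= np.sqrt(2.0)  # scale the DC row so the basis is orthonormal
    return m

def dct_lowfreq_feature(roi: np.ndarray, band: int = 16) -> np.ndarray:
    """2D DCT of the ROI, keeping the top-left band x band low-frequency block.

    Sketch only: a fixed block stands in for discriminant band selection.
    """
    h, w = roi.shape
    coeffs = dct_matrix(h) @ roi.astype(float) @ dct_matrix(w).T
    return coeffs[:band, :band].ravel()
```

A constant 32 × 32 ROI of ones yields a DC coefficient of 32 and zeros elsewhere, as expected for an orthonormal transform.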

Additionally, Zhao and Zhang proposed a joint constrained least squares regression model to improve palmprint identification performance with under-sampled training data. Fei et al. evaluated the deep convolutional features of VGG-16 and ResNet-50 for palmprint recognition. Guo et al. encoded binary palmprint direction features using multi-orientation characteristics. Sun et al. used three orthogonal Gaussian filters to extract three orthogonal line ordinal codes. The palmprint representation techniques above can be broadly grouped into five categories: CNN-based, local descriptor-based, line-based, texture-based, and subspace learning-based.
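The ordinal idea behind Sun et al.'s codes can be sketched as follows. An elongated Gaussian at angle θ is compared with one at θ + 90°, and the sign of the filtered difference gives one bit per pixel. The three angles (0°, 30°, 60°), the 15 × 15 kernel, and the standard deviations are illustrative assumptions, not the published parameters. Filtering is done in "valid" mode, without padding.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def oriented_gaussian(size: int, theta: float, sx: float = 5.0, sy: float = 1.0) -> np.ndarray:
    """Elongated 2D Gaussian whose long axis points along angle `theta`."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    xr = x * np.cos(theta) + y * np.sin(theta)
    yr = -x * np.sin(theta) + y * np.cos(theta)
    return np.exp(-(xr**2 / (2 * sx**2) + yr**2 / (2 * sy**2)))

def filter_valid(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2D filtering; equals convolution here because the kernels are point-symmetric."""
    windows = sliding_window_view(img.astype(float), kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)

def ordinal_code(img: np.ndarray, size: int = 15) -> np.ndarray:
    """Three bit-planes: sign of (Gaussian at theta) minus (Gaussian at theta + 90 degrees).

    Sketch only: angles, kernel size and sigmas are illustrative assumptions.
    """
    planes = []
    for theta in (0.0, np.pi / 6, np.pi / 3):
        ordinal_filter = oriented_gaussian(size, theta) - oriented_gaussian(size, theta + np.pi / 2)
        planes.append(filter_valid(img, ordinal_filter) > 0)
    return np.stack(planes)
```

On a dark vertical line, the vertical Gaussian overlaps the dark pixels more than the horizontal one does. The 0° bit is therefore set along the line.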

Along with the palmprint feature representation techniques mentioned above, subspace learning-based techniques such as principal component analysis (PCA), neighborhood preserving embedding (NPE), least squares regression (LSR), sparse representation-based classification (SRC), and nonnegative matrix factorization (NMF) have been widely employed in biometric recognition. However, these holistic feature learning techniques may perform insufficiently for palmprint recognition because they ignore many palmprint-specific characteristics.

Palmprints contain texture, rich local directions, and dominant lines. Because it is insensitive to illumination variations, the local direction feature is commonly used for palmprint feature extraction. For instance, PalmCode encodes palmprint characteristics with a Gabor filter at a fixed direction. The robust line orientation code (RLOC) was proposed to obtain more discriminative direction information, and the Competitive Code applies a bank of Gabor filters at several orientations. To exploit more complete direction information, Guo et al. encoded six palmprint orientations, Jia et al. extracted complete palmprint directions by applying multi-scale, multi-direction Gabor filters to different local palmprint patches, and the double orientation code (DOC) encodes two dominant directions. The local direction feature is therefore regarded as one of the most reliable and promising palmprint features. Direction-based techniques apply an orientation filter bank consisting of multiple convolution filters, and the resulting orientation features are used directly or encoded into binary codes. The strong performance of direction-based palmprint recognition techniques demonstrates the efficacy of direction features.
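Here is a minimal sketch of the Competitive Code idea. It filters the ROI with a bank of real Gabor filters at six orientations and keeps, for each pixel, the index of the minimum response. Palm lines are dark, so the best-aligned filter gives the most negative response. Codes are compared with an angular distance normalized to [0, 1]. The kernel size, `sigma`, and `wavelength` values are illustrative assumptions, not the published settings.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gabor_real(size: int, theta: float, sigma: float = 4.0, wavelength: float = 10.0) -> np.ndarray:
    """Zero-mean real (even) Gabor kernel whose central ridge runs along angle `theta`."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    xr = x * np.cos(theta) + y * np.sin(theta)   # coordinate along the line
    yr = -x * np.sin(theta) + y * np.cos(theta)  # coordinate across the line
    g = np.exp(-(xr**2 + yr**2) / (2 * sigma**2)) * np.cos(2 * np.pi * yr / wavelength)
    return g - g.mean()  # remove DC so flat regions respond with ~0

def competitive_code(roi: np.ndarray, n_orient: int = 6, size: int = 15) -> np.ndarray:
    """Per-pixel index of the orientation with the minimum ('valid'-mode) response.

    Sketch only: filter parameters are assumptions chosen for illustration.
    """
    windows = sliding_window_view(roi.astype(float), (size, size))
    responses = np.stack([
        np.einsum("ijkl,kl->ij", windows, gabor_real(size, j * np.pi / n_orient))
        for j in range(n_orient)
    ])
    return np.argmin(responses, axis=0)  # dark lines give the most negative response

def angular_distance(code_a: np.ndarray, code_b: np.ndarray, n_orient: int = 6) -> float:
    """Mean circular difference between orientation indices, scaled to [0, 1]."""
    diff = np.abs(code_a.astype(int) - code_b.astype(int))
    diff = np.minimum(diff, n_orient - diff)
    return float(diff.mean()) / (n_orient // 2)
```

On a dark horizontal line, the 0° filter wins at the line center. Orientation indices 0 and 3, which are perpendicular, are at the maximum distance of 1.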

II. LITERATURE REVIEW

Huang et al. proposed a palmprint verification approach based on principal lines. In the feature extraction stage, a modified finite Radon transform is used, which extracts principal lines effectively and efficiently even when the palmprint images contain many long, strong wrinkles. In the matching stage, a pixel-to-area comparison algorithm computes the similarity between two palmprints and is robust to slight rotations and translations. Verification experiments on the Hong Kong Polytechnic University Palmprint Database show that principal lines are also highly discriminative.

In a networked society, automatic personal verification is a crucial problem, and biometrics is one of the most important and effective solutions. Recently, palmprint-based verification systems (PVS) have received growing attention from researchers. Compared with the widely used fingerprint- and iris-based personal verification systems, a PVS can also achieve satisfactory performance; for example, it provides reliable recognition rates at high processing speed. In particular, the PVS has several advantages, such as rich texture features, stable line features, low-resolution imaging, low-cost capture devices, and easy self-positioning.
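The pixel-to-area comparison can be sketched on binary principal-line images. A line pixel in image A counts as matched if any line pixel of image B lies in its (2r + 1) × (2r + 1) neighborhood, which makes the score tolerant to small translations. This simplified sketch assumes the line images have already been extracted, for example by the modified finite Radon transform. The symmetric `max` in `matching_score` is our assumption, not necessarily Huang et al.'s exact rule.

```python
import numpy as np

def pixel_to_area_score(a: np.ndarray, b: np.ndarray, radius: int = 1) -> float:
    """Fraction of line pixels in `a` that have a line pixel of `b` within `radius`."""
    h, w = b.shape
    padded = np.pad(b.astype(bool), radius)  # pad with False
    near = np.zeros((h, w), dtype=bool)
    # Dilate b by OR-ing every shift inside the square neighbourhood.
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            near |= padded[radius + dy:radius + dy + h, radius + dx:radius + dx + w]
    n_lines = int(a.sum())
    return float((a.astype(bool) & near).sum()) / max(n_lines, 1)

def matching_score(a: np.ndarray, b: np.ndarray, radius: int = 1) -> float:
    # Symmetric score: an assumption made for this sketch.
    return max(pixel_to_area_score(a, b, radius), pixel_to_area_score(b, a, radius))
```

A line shifted down by one pixel scores 0 under an exact pixel-to-pixel comparison (`radius=0`) but scores 1 with `radius=1`.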

Wu et al. and Liu et al. proposed two different approaches based on palm lines. Palm lines consist of wrinkles and principal lines, and principal lines can be treated as a separate feature to characterize a palm. There are several reasons to study principal line-based approaches carefully. First, they are consistent with human habit: when people compare two palmprints, they instinctively compare the principal lines. Second, principal lines are generally more stable than wrinkles, which are easily masked by poor illumination, compression, and noise. Third, principal lines can serve as an important component in multi-feature approaches. Fourth, in some special cases, for example when the police are searching for palmprints with similar principal lines, other features cannot replace principal lines. Finally, principal lines can be used in palmprint classification or fast retrieval schemes. However, principal line-based approaches have not been studied adequately so far. The main reason is that it is very difficult to extract principal lines from complex palmprint images, which contain many strong, long wrinkles. At the same time, many researchers have claimed that a high recognition rate is difficult to obtain using principal lines alone because they are similar across different people; in other words, they considered the discriminability of principal lines to be limited. Nevertheless, they did not conduct related experiments to verify this view.

For example, Huang et al. and Wu et al. extracted principal line and wrinkle features, the most prominent features of a palmprint, for palmprint representation. Wang et al. and Guo et al. proposed Gabor wavelet-based and LBP-like texture representations for palmprint verification. Palmprint lines carry strong directional features that are insensitive to illumination changes, so a variety of methods that encode palmprint directions as features have been proposed in recent years. For instance, Kong et al. and Jia et al. encoded the single principal direction for palmprint verification using a bank of direction detection templates, and Guo et al. extracted multiple direction features by encoding the line responses at several orientations.
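A basic 8-neighbor local binary pattern illustrates the LBP-like texture representation mentioned above. Each pixel is compared with its eight neighbors, the comparisons form an 8-bit code, and a normalized 256-bin histogram of the codes serves as the texture feature. This is the textbook LBP operator, not necessarily the exact variant used by Guo et al.

```python
import numpy as np

# Neighbour offsets (dy, dx), clockwise from the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_codes(img: np.ndarray) -> np.ndarray:
    """8-bit LBP code for every interior pixel (the 1-pixel border is dropped)."""
    img = img.astype(float)
    h, w = img.shape
    centre = img[1:-1, 1:-1]
    codes = np.zeros(centre.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(OFFSETS):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes += (neighbour >= centre) * (1 << bit)  # set the bit when neighbour >= centre
    return codes

def lbp_histogram(img: np.ndarray) -> np.ndarray:
    """Normalized 256-bin histogram of LBP codes, used as a texture feature."""
    hist = np.bincount(lbp_codes(img).ravel(), minlength=256).astype(float)
    return hist / hist.sum()
```

In a flat region, every neighbor equals the center, so every code is 255. An isolated bright pixel gets code 0.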

Inspired by these studies, various single- and multiple-direction-based variants were subsequently proposed. Many local feature descriptors exist in the literature, but most focus on extracting a single type of palmprint feature and may ignore other discriminative information. Moreover, they are usually hand-crafted, which requires broad prior knowledge to engineer them and may limit practical applications such as large-scale identity-related systems. Holistic feature representation usually extracts discriminative features from the whole image by converting it into a high-dimensional vector; representative methods include subspace learning, sparse representation, and deep learning. For example, subspace learning methods such as principal component analysis (PCA) and linear discriminant analysis (LDA) learn low-dimensional feature spaces of palmprint images. Sparse representation methods exploit the linear representation similarity between the whole query and the training samples for palmprint identification. Deep learning methods generally train an end-to-end neural network, such as a convolutional neural network, to learn discriminative features of palmprint images. Although these feature learning methods achieve reasonably good recognition performance, they usually require a large amount of training data.
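A minimal PCA sketch illustrates the subspace learning family. The training vectors (for instance, flattened ROIs) are centered, the top `k` right singular vectors form the projection, and samples are projected onto that low-dimensional space. The function names are hypothetical. LDA would additionally use class labels, which this sketch does not.

```python
import numpy as np

def pca_fit(X: np.ndarray, k: int):
    """X: (n_samples, n_features). Returns the mean and the top-k principal axes (k, n_features)."""
    mean = X.mean(axis=0)
    # Rows of vt are principal axes, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:k]

def pca_project(X: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Project samples onto the learned low-dimensional subspace."""
    return (X - mean) @ components.T
```

The projected training data are zero-mean, and their variance decreases from the first component to the last.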

III. EXISTING SYSTEM

Most existing direction-based methods use pre-defined filters to obtain genuine line responses in the palm print image, which requires rich prior knowledge and usually ignores vital direction information. In addition, line responses influenced by noise degrade recognition accuracy. Furthermore, how to extract discriminative features that make palm prints more separable remains an open problem for improving recognition performance.

Conventional palm print direction representation methods therefore face the problem of how to fully extract all effective direction features and make them more discriminative to improve recognition performance.

Existing discriminant representation methods include principal component analysis, linear discriminant analysis, sparse representation, and least squares regression. One existing method extracts a convolution difference vector (CDV) to represent informative direction features and then applies the LDA criterion to make the CDV discriminative.
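One plausible reading of the convolution difference vector is sketched below. It assumes that the CDV at each pixel is the vector of differences between line responses at neighboring orientations of a filter bank, wrapping around from the last orientation to the first. The `responses` array can come from any orientation filter bank, and the subsequent LDA step is not shown.

```python
import numpy as np

def convolution_difference_vector(responses: np.ndarray) -> np.ndarray:
    """responses: (N, H, W) line responses at N evenly spaced orientations.

    Sketch under an assumed definition: entry k is response[k + 1] - response[k],
    with indices taken modulo N.
    """
    return np.roll(responses, -1, axis=0) - responses
```

Because the differences wrap around, they sum to zero at every pixel. The vector therefore describes how responses change across orientations rather than their overall level.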

IV. PROPOSED SYSTEM

We propose a novel salient convolution difference feature (SCDF), which describes how the local direction changes at each pixel of the palm print image. The SCDF is also more robust to noise because it exploits the quartiles of the sequence of line responses at each pixel.
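The section does not spell out the SCDF formula. The sketch below therefore only illustrates why quartiles help against noise: per-pixel quartiles of the line-response sequence barely move when a single response is corrupted, unlike the mean or the maximum. Treating Q1, the median, Q3, and the interquartile range as robust per-pixel summaries is an assumption for illustration, not the authors' definition of the SCDF.

```python
import numpy as np

def response_quartiles(responses: np.ndarray) -> np.ndarray:
    """responses: (N, H, W) line responses at N orientations.

    Returns (3, H, W): first quartile, median and third quartile per pixel.
    Illustrative robust summary only, not the published SCDF definition.
    """
    return np.quantile(responses, [0.25, 0.5, 0.75], axis=0)

def interquartile_range(responses: np.ndarray) -> np.ndarray:
    """Per-pixel spread of the response sequence, ignoring extreme values."""
    q1, _, q3 = response_quartiles(responses)
    return q3 - q1
```

For example, if the largest of the five responses 0 to 4 is replaced by 100, Q1 = 1, the median of 2, and Q3 = 3 are unchanged, while the mean jumps from 2 to 21.2.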

We also propose a sparse and discriminative direction learning model that learns significant local direction information from the original line responses of each pixel in the palm print image. In addition, a low-rank and discriminative learning model is proposed to explore the underlying structure of the palm print training samples. Extensive experiments were conducted on challenging contactless and contact-based palm print databases as well as palm vein databases. The results show that the proposed method outperforms other state-of-the-art palm print recognition methods, and this performance also demonstrates that LSR-based methods can be effectively adapted to real-world palm print recognition.
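The LSR-based learning can be sketched with the plain ridge-regularized least squares regression that such models build on. A projection `W` maps feature vectors to one-hot class labels and is solved in closed form as W = (XᵀX + λI)⁻¹XᵀY. The sparsity and low-rank terms of the proposed models are omitted here, and `lsr_fit`, `lam`, and `fuse` are hypothetical names. `fuse` shows the final step described in the abstract, concatenating the projected CLDF and the projected SCDF.

```python
import numpy as np

def lsr_fit(X: np.ndarray, labels: np.ndarray, n_classes: int, lam: float = 1e-2) -> np.ndarray:
    """Ridge-regularized least squares regression onto one-hot labels.

    Returns W of shape (n_features, n_classes) = (X^T X + lam I)^-1 X^T Y.
    Sketch only: the paper's sparse / low-rank terms are not included.
    """
    Y = np.eye(n_classes)[labels]  # one-hot targets
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ Y)

def fuse(cldf: np.ndarray, scdf: np.ndarray, w_cldf: np.ndarray, w_scdf: np.ndarray) -> np.ndarray:
    """Concatenate the projected CLDF and projected SCDF of each sample."""
    return np.hstack([cldf @ w_cldf, scdf @ w_scdf])
```

On a toy two-class set, taking the argmax of `X @ W` recovers the training labels.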

V. IMPLEMENTATION

To validate the effectiveness of our method, experiments were conducted on seven palmprint databases captured under contactless, contact-based, and NIR scenarios. The databases are described as follows:

- PolyU: The PolyU palmprint database was acquired with a contact device that constrains the palm with pegs, so the characteristics in its palmprint images are clear and complete enough for palmprint recognition. 386 palms were captured in two sessions 30 days apart, with around ten samples per palm in each session, giving 7,752 samples in total. We extracted ROIs of size 128 × 128 from the original PolyU database using the ROI extraction algorithm.

- IITD: The IITD palmprint database was captured with a contactless device, without constraining the palm. 230 volunteers aged 14-15 years contributed their left and right palms, and each palm provided five or six samples, giving 2,601 images from 460 palms in total. ROIs of size 150 × 150 are provided with the database.

- CASIA: The CASIA palmprint database contains 5,502 samples collected from 312 volunteers, each of whom provided eight to twelve images of both their left and right hands. Because the palm was neither constrained nor guided during acquisition, the database contains a variety of rotations and different kinds of noise. Since ROIs are not provided in the original CASIA database, we segmented 128 × 128 ROIs from the palm images using an existing ROI extraction algorithm.

- GPDS: This database was also acquired with a contactless device, without constraining the palm. 100 volunteers each contributed ten samples of their right palm, for 1,000 samples in total. The 128 × 128 pixel ROIs used in this study are provided with the database.

- Palm Vein (PV_780): The PV_780 palm vein database was acquired with a near-infrared (NIR) device at a wavelength of 780 nm. When we established the database, 209 volunteers contributed their left and right hands in two sessions 31 days apart, with five samples per palm in each session, giving 4,180 images from 418 palms in total. In this study, we extracted 128 × 128 ROIs from the original database using an existing ROI extraction method.

- PolyU Multispectral NIR (M_NIR): This palm vein database was acquired with an NIR device. 500 palms provided 6,000 samples in two sessions nine days apart, with six images per palm in each session. In this study, 128 × 128 ROIs were extracted from the original database using an existing method.

- REgim Sfax Tunisian Hand Database’2016 (REST): The REST hand database was collected by the Research Groups in Intelligent Machines, University of Sfax, Tunisia. It contains 1,945 palmprint samples in 358 classes, collected from the left and right hands of 179 volunteers. Because REST was captured in a contactless scenario without any constraints on hand position or orientation, it is more challenging for palmprint recognition than contact and semi-contact databases. In this study, we segmented 150 × 150 ROIs from the REST palmprint images using an existing ROI extraction algorithm, and 1,928 ROIs remained after ROI extraction for the experiments.

Palmprint images in the PolyU, PV_780, and M_NIR databases were captured in a contact scenario, under white light (PolyU) and NIR (PV_780 and M_NIR). Palmprint images in the IITD, CASIA, GPDS, and REST databases were captured with contactless devices without constraints, so they may be affected by different postures and illumination conditions; the unconstrained setting also readily introduces noise and interference. Because the PV_780 and M_NIR images were captured under NIR, they reveal more characteristics, such as veins and vessels, than images captured under visible light. The figure below illustrates palmprint samples and their corresponding ROIs from the PolyU, IITD, CASIA, GPDS, PV_780, M_NIR, and REST databases.

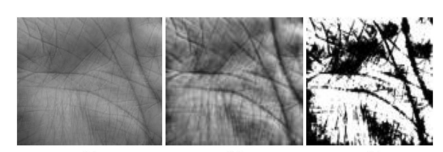

Fig. 1. (a) Palm print. (b) Adaptive histogram equalized print. (c) Binarized print.
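The preprocessing in Fig. 1 can be approximated with a short sketch. It uses global histogram equalization rather than the adaptive, tile-based variant named in the caption, followed by Otsu's threshold for binarization. Both functions operate on 8-bit grayscale images, and all function names are hypothetical.

```python
import numpy as np

def equalize_hist(img: np.ndarray) -> np.ndarray:
    """Global (not adaptive) histogram equalization of an 8-bit grayscale image."""
    cdf = np.bincount(img.ravel(), minlength=256).cumsum()
    cdf_min = cdf[cdf > 0][0]
    denom = max(int(cdf[-1] - cdf_min), 1)
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255), 0, 255).astype(np.uint8)
    return lut[img]

def otsu_threshold(img: np.ndarray) -> int:
    """Grey level t maximizing the between-class variance of {<= t} vs {> t}."""
    p = np.bincount(img.ravel(), minlength=256) / img.size
    omega = np.cumsum(p)                # probability of the dark class
    mu = np.cumsum(p * np.arange(256))  # first moment of the dark class
    mu_t = mu[-1]
    denom = omega * (1.0 - omega)
    sigma_b = np.zeros(256)
    valid = denom > 1e-12               # skip thresholds that leave a class empty
    sigma_b[valid] = (mu_t * omega[valid] - mu[valid]) ** 2 / denom[valid]
    return int(np.argmax(sigma_b))

def binarize(img: np.ndarray) -> np.ndarray:
    """True for pixels brighter than the Otsu threshold (palm lines end up False)."""
    return img > otsu_threshold(img)
```

In an image containing only grey levels 10 and 20, equalization stretches the two levels to 0 and 255. In an image split between levels 50 and 200, Otsu's threshold separates the two levels.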

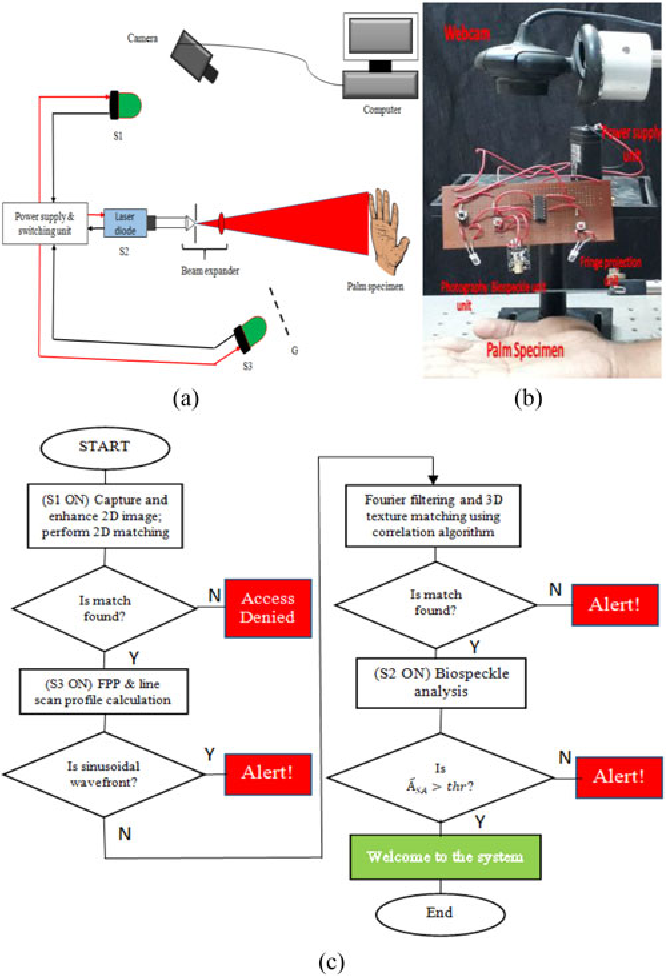

VI. BLOCK DIAGRAM

VII. RESULTS AND DISCUSSION

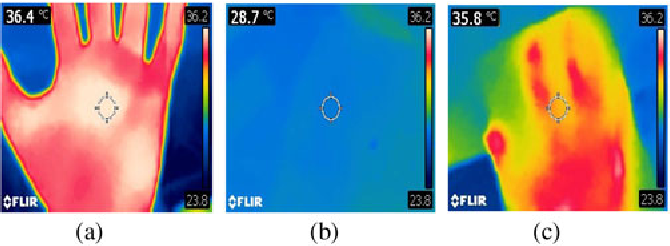

Thermal images of (a) a real palm, (b) a fake palm, and (c) a fake palm heated with a thermal blower.

- Image Capture: Palm features like veins and ridges were successfully captured using low-cost sensors, with better results under controlled lighting.

- Feature Extraction: Preprocessing enhanced image quality, making features clear for analysis.

- Matching Accuracy: Accuracy of 85% to 95% was achieved with advanced algorithms such as CNNs, and traditional methods such as SIFT also gave reliable results.

- Efficiency: Fast processing (<1 second per match) suitable for real-time use.

- Challenges: Performance varied under poor lighting, and preprocessing was required to maintain accuracy.
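Verification accuracy figures like those above are usually derived from genuine and impostor matching scores. The sketch below assumes distance scores, where a match is accepted when the distance is at most a threshold. It computes the false accept rate (FAR), the false reject rate (FRR), and an approximate equal error rate (EER) by scanning the observed scores as thresholds. It does not reproduce the paper's evaluation protocol, which is not described here, and the function names are hypothetical.

```python
import numpy as np

def far_frr(genuine, impostor, threshold):
    """Accept a pair when its distance <= threshold; returns (FAR, FRR)."""
    genuine, impostor = np.asarray(genuine), np.asarray(impostor)
    frr = float(np.mean(genuine > threshold))    # genuine pairs wrongly rejected
    far = float(np.mean(impostor <= threshold))  # impostor pairs wrongly accepted
    return far, frr

def equal_error_rate(genuine, impostor):
    """Approximate EER: average of FAR and FRR at the observed threshold where they are closest."""
    thresholds = np.unique(np.concatenate([genuine, impostor]))
    best = min(thresholds, key=lambda t: abs(np.subtract(*far_frr(genuine, impostor, t))))
    far, frr = far_frr(genuine, impostor, best)
    return (far + frr) / 2
```

Perfectly separated genuine and impostor distances give an EER of 0. Overlapping distances give a positive EER.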

Conclusion

In this paper, we propose learning complete and discriminative direction patterns (LCDDP) for palm print recognition. We first extract complete and salient local direction patterns from the palm print image, consisting of a complete local direction feature (CLDF) and a salient convolution difference feature (SCDF). Two learning models are then proposed: one learns sparse and discriminative directions from the CLDF, and the other captures the underlying structure of the SCDFs of the training samples. Finally, the projected CLDF and the projected SCDF are concatenated to form the complete and discriminative direction feature for palm print recognition. To evaluate the LCDDP, experiments were conducted on seven palm print databases and three noisy datasets. The results show that the LCDDP achieves competitive recognition performance compared with other state-of-the-art palm print recognition and image classification methods, which also validates the effectiveness of LSR-based methods for palm print recognition. In future work, we will further explore the interpretability of sparsity in LSR for other biometric recognition applications.

References

[1] D.-S. Huang, W. Jia, and D. Zhang, “Palmprint verification based on principal lines,” Pattern Recognit., vol. 41, no. 4, pp. 1316–1328, Apr. 2008.

[2] S. Zhao, W. Nie, and B. Zhang, “Multi-feature fusion using collaborative residual for hyperspectral palmprint recognition,” in Proc. IEEE 4th Int. Conf. Comput. Commun. (ICCC), Chengdu, China, Dec. 2018, pp. 1402–1406.

[3] S. Zhao and B. Zhang, “Deep discriminative representation for generic palmprint recognition,” Pattern Recognit., vol. 98, Feb. 2020, Art. no. 107071.

[4] Gudipelly Mamatha, B. Manjula, and P. Venkatapathi, “Intend Innovative Technology For Recognition Of Seat Vacancy In Bus,” International Journal of Research and Analytical Reviews, vol. 6, no. 2, Apr.–Jun. 2019, ISSN: 2349-5138.

[5] L. Fei, B. Zhang, L. Zhang, W. Jia, J. Wen, and J. Wu, “Learning compact multifeature codes for palmprint recognition from a single training image per palm,” IEEE Trans. Multimedia, early access, Aug. 26, 2020, doi: 10.1109/TMM.2020.3019701.

[6] P. Venkatapathi, H. Khan, and S. Srinivasa Rao, “Performance of threshold detection in cognitive radio with improved Otsu’s and recursive one-sided hypothesis testing technique,” International Journal of Innovative Technology and Exploring Engineering, vol. 8, no. 9S3, pp. 343–346, 2019.

[7] S. Zhao, B. Zhang, and C. L. Philip Chen, “Joint deep convolutional feature representation for hyperspectral palmprint recognition,” Inf. Sci., vol. 489, pp. 167–181, Jul. 2019.

[8] P. Venkatapathi, Habibulla Khan, and S. Srinivasa Rao, “Performance analysis of spectrum sensing in cognitive radio under low SNR and noise floor,” International Journal of Engineering and Advanced Technology, vol. 9, no. 2, pp. 2655–2661, 2019.

[9] Sudhakar Alluri, Karnati Mahidhar, Kalluru Kavya, Dulam Srija, and P. Venkatapathi, “High Performance Of Smartcard With Iris Recognition For High Security Access Environment In Python Tool,” Industrial Engineering Journal, vol. 52, issue 10, no. 2, Oct. 2023, ISSN: 0970-2555.

[10] L. Fei, B. Zhang, Y. Xu, D. Huang, W. Jia, and J. Wen, “Local discriminant direction binary pattern for palmprint representation and recognition,” IEEE Trans. Circuits Syst. Video Technol., vol. 30, no. 2, pp. 468–481, Feb. 2020.

[11] W. Jia, R.-X. Hu, Y.-K. Lei, Y. Zhao, and J. Gui, “Histogram of oriented lines for palmprint recognition,” IEEE Trans. Syst., Man, Cybern. Syst., vol. 44, no. 3, pp. 385–395, Mar. 2014.

[12] Venkatapathi Pallam, Vasudev Biyyala, Chandra Shekar Jadapally, Ramsai Nalla, and Sudhakar Alluri, “Doctors Assistive System Using Augmented Reality Glass Critical Analysis,” International Journal for Research in Applied Science & Engineering Technology (IJRASET), vol. 11, no. X, Oct. 2023, ISSN: 2321-9653.

[13] S. Zhao and B. Zhang, “Robust and adaptive algorithm for hyperspectral palmprint region of interest extraction,” IET Biometrics, vol. 8, no. 6, pp. 391–400, May 2019.

[14] Pallam Venkatapathi, Habibulla Khan, S. Srinivasa Rao, and Govardhani Immadi, “Cooperative spectrum sensing performance assessment using machine learning in cognitive radio sensor networks,” Engineering, Technology & Applied Science Research, vol. 14, no. 1, pp. 12875–12879, 2024.

[15] N. V. W. Marking, “Multi-wavelet based on non-visible water marking,” 2014.

[16] X.-Y. Jing and D. Zhang, “A face and palmprint recognition approach based on discriminant DCT feature extraction,” IEEE Trans. Syst., Man Cybern. B, Cybern., vol. 34, no. 6, pp. 2405–2415, Dec. 2004.

[17] I. Rida, S. Al-Maadeed, A. Mahmood, A. Bouridane, and S. Bakshi, “Palmprint identification using an ensemble of sparse representations,” IEEE Access, vol. 6, pp. 3241–3248, 2018.

[18] Sudhakar Alluri, Komireddy Shreyas, Lingampally Ganesh, Mangali Vamshi, and Venkatapathi Pallam, “A System Based in Virtual Reality to Manage Flood Damage,” International Journal for Research in Applied Science & Engineering Technology (IJRASET), vol. 11, no. XI, Nov. 2023, ISSN: 2321-9653.

[19] Venkatapathi Pallam, Habibulla Khan, Srinivasa Rao Surampudi, and Govardhani Immadi, “Reduced Kernel PCA Model for Nonlinear Spectrum Sensing in Cognitive Radio Network,” Journal of The Institution of Engineers (India): Series B, pp. 1–7, 2024.

Copyright

Copyright © 2024 Haripal Reddy Kota, Vippalapally Balaji, Merugu Naveen, K.Vijay Kumar, Eerla Prashanth Kumar, Pallam Venkatapathi. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET65777

Publish Date : 2024-12-06

ISSN : 2321-9653

Publisher Name : IJRASET

