Ijraset Journal For Research in Applied Science and Engineering Technology

Arduino Robot Arm – Controlled by Human Gestures

Authors: Soham Tikam, Vinay Jadhav, Yash Lokhande, Ansh Birje, Lata Upadhye

DOI Link: https://doi.org/10.22214/ijraset.2024.59466

Abstract

This paper presents the development and implementation of an Arduino Robot Arm controlled by human gestures. The project explores the use of hand gestures to control a six-axis robotic arm. The system uses flex sensors and accelerometers in a robotic glove to capture human gestures, enabling intuitive control of the arm. The paper discusses the hardware components, the assembly process, and the software implementation for gesture-based control. The presented solution offers a cost-effective alternative to commercial robotic arms and provides a platform for experimentation and customization.

Introduction

I. INTRODUCTION

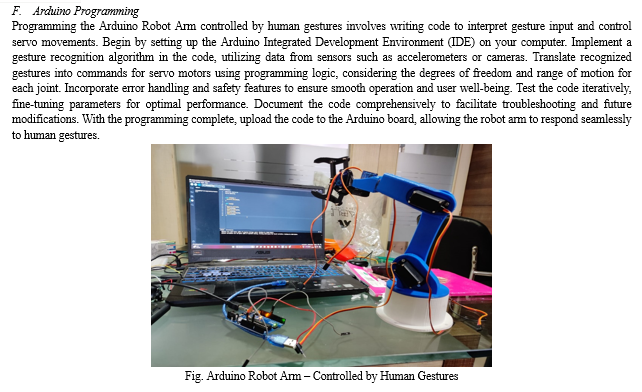

This project presents an Arduino-powered robot arm that responds to human gestures. It combines a robotic arm kit, an Arduino board, and a gesture sensor module such as the APDS-9960, and offers hands-on experience in assembling and programming a responsive robotic system. A servo motor drives each joint, and the gesture sensor is integrated with the Arduino so that hardware and software work together. The Arduino code interprets a range of hand gestures and translates them into precise movements of the robotic arm. A calibration and testing phase tunes the system for accurate gesture recognition and refines the code for better performance. Optional features, such as visual feedback on an LCD display and audible indicators, are explored to make interaction with the arm enjoyable as well as functional. Documentation of the assembly steps, the code, and troubleshooting insights is provided to make the project accessible to beginners and to encourage enthusiasts to explore robotics. The aim is not only to build a robot but also to create an interactive bridge between human expression and machine responsiveness, exploring what Arduino and gesture control make possible.
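As an illustration of how interpreted gestures could drive joint movements, the following C++ sketch maps a discrete gesture to a small angle step on one joint and clamps the result to the servo range. The gesture names, joint indices, step size, and the 0 to 180 degree range are assumptions made for this example and are not taken from the project code. On the Arduino, the resulting angles would then be sent to the servos, for instance with the Servo library's write().

```cpp
// Hypothetical gesture set. A sensor such as the APDS-9960 reports similar
// directional gestures, but these names are illustrative only.
enum class Gesture { None, Up, Down, Left, Right, Near, Far };

const int kJointCount = 6;   // six-axis arm
const int kMinAngle = 0;     // assumed hobby-servo range in degrees
const int kMaxAngle = 180;
const int kStepDeg = 10;     // assumed movement per recognized gesture

// Assumed joint indices: 0 = base, 1 = shoulder, 5 = gripper.
struct JointAngles {
    int deg[kJointCount];
};

// Keep an angle inside the range the servo can safely reach.
int clampAngle(int angle) {
    if (angle < kMinAngle) return kMinAngle;
    if (angle > kMaxAngle) return kMaxAngle;
    return angle;
}

// Return the joint angles after applying one gesture to the current pose.
JointAngles applyGesture(JointAngles angles, Gesture g) {
    switch (g) {
        case Gesture::Left:  angles.deg[0] = clampAngle(angles.deg[0] - kStepDeg); break;
        case Gesture::Right: angles.deg[0] = clampAngle(angles.deg[0] + kStepDeg); break;
        case Gesture::Up:    angles.deg[1] = clampAngle(angles.deg[1] + kStepDeg); break;
        case Gesture::Down:  angles.deg[1] = clampAngle(angles.deg[1] - kStepDeg); break;
        case Gesture::Near:  angles.deg[5] = clampAngle(angles.deg[5] - kStepDeg); break;
        case Gesture::Far:   angles.deg[5] = clampAngle(angles.deg[5] + kStepDeg); break;
        case Gesture::None:  break;  // no gesture: pose is unchanged
    }
    return angles;
}
```

Starting from 90 degrees on every joint, a Left gesture moves the base to 80 degrees, and repeated gestures stop at the range limits rather than overdriving a servo.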

II. LITERATURE REVIEW

The exploration of sensors and robotic control, as discussed by R. V. Dharaskar et al. [1], represents a fundamental inquiry into the synergy between human-machine interfaces and technological innovations. Dharaskar's work provides a foundational understanding of how sensors, the critical components capturing physical data, can be seamlessly integrated into robotic control systems. The emphasis on robotic control through gestures indicates a move towards more intuitive and user-friendly interfaces, potentially bridging the gap between human intention and machine action. Furthermore, the applications discussed in this study shed light on the diverse realms where these technologies can be harnessed, from industrial automation to assistive devices and beyond.

Dong ZheKang et al.'s examination of a diagnostic approach for multiple circuits using fuzzy logic [2] introduces a layer of intelligence in circuit diagnostics. Fuzzy logic, known for its ability to handle uncertainty, is applied to enhance the diagnosis process. This not only contributes to the reliability of circuit diagnostics but also signifies a shift towards incorporating advanced computational techniques in traditionally hardware-centric domains.

The study by Abidhusain et al. [3] expands the discourse into the practical implementation of sensors and microcontrollers for controlling robotic arms. This work highlights the tangible applications of these technologies in real-world scenarios, emphasizing their role in enhancing precision and efficiency in industrial and automated systems. The insights provided contribute to the growing body of knowledge on the integration of sensors and microcontrollers for effective robotic control.

Rashmi A. et al. [4] examine the use of Arduino microcontrollers and sensors to control robot arms. Arduino's open-source platform has democratized the development of such systems, making them accessible to a broader audience. This study not only showcases the versatility of Arduino but also underscores the significance of open-source platforms in fostering innovation and collaboration within the robotics community.

The exploration of five-axis control in [5] adds a layer of complexity to the discussion, reflecting the continual evolution of robotic capabilities. This level of sophistication opens up new possibilities for intricate and precise maneuvers in robotic arms, expanding their utility in fields requiring advanced spatial manipulation, such as manufacturing and medical applications.

Mounika Bhusa et al.'s approach in [6] of connecting human fingers to control a robot arm introduces a novel dimension to human-robot interaction. This work explores the potential for intuitive control interfaces, paving the way for applications where human gestures can seamlessly guide robotic actions. The concept of linking every human finger's movement to a corresponding robotic function holds promise for intuitive and natural interactions in various contexts.

III. PROPOSED METHODOLOGY

A. Define Objectives and Requirements

The Arduino Robot Arm, controlled by human gestures, is an accessible educational project introducing robotics and Arduino programming. Prioritizing user-friendliness and open-source collaboration, it utilizes versatile and cost-effective components for easy replication. Safety features, seamless Arduino integration, and an optional enhanced user interface aim to enrich the overall experience, bridging the gap between robotics and human gestures in an engaging manner.

B. Research and Select Components and Part List

Selecting components for an Arduino Robot Arm controlled by gestures is crucial. Choose a compatible Arduino board (Uno, Nano, or Mega), considering the number of pins the servos and sensors require. Opt for servo motors with sufficient torque for each joint and with compatible voltage and control signals. Integrate a gesture recognition sensor (accelerometer, gyroscope, or camera) for precise gesture interpretation.
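To make the torque requirement concrete, a rough worst-case estimate can be computed for a joint holding its link horizontally. The C++ sketch below is only an illustration: the function name, the uniform-link assumption, the safety factor, and the example masses and lengths are all assumptions rather than values from this project. It works in kg·cm, the unit most hobby-servo datasheets use for torque.

```cpp
// Worst-case static holding torque for one joint, in kg·cm.
// Assumes the link is uniform (its weight acts at the midpoint), the payload
// sits at the tip, and the arm is held horizontally. Inputs are illustrative.
double requiredTorqueKgCm(double linkMassKg, double linkLengthCm,
                          double payloadMassKg, double safetyFactor) {
    double linkTorque = linkMassKg * (linkLengthCm / 2.0);  // weight at midpoint
    double payloadTorque = payloadMassKg * linkLengthCm;    // weight at tip
    return (linkTorque + payloadTorque) * safetyFactor;
}
```

For example, a 0.10 kg link of 20 cm carrying a 0.05 kg payload with a safety factor of 2 gives (0.10 × 10 + 0.05 × 20) × 2 = 4 kg·cm, so a servo rated below that figure would be a poor fit for that joint. Joints closer to the base must also carry the links and servos beyond them, which this single-link estimate does not include.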

Design the mechanical structure with brackets, linkages, and joints, considering range of motion. Ensure a stable power supply for Arduino and servos, addressing voltage and current requirements. Use appropriate wiring and connectors for reliable electrical connections.
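The current requirement can be checked with a simple budget before choosing a supply. The following C++ sketch sums an assumed worst-case draw and compares it with a supply rating plus headroom. The struct, function names, and all figures are hypothetical placeholders; real values should come from the servo and board datasheets.

```cpp
// Worst-case current budget in milliamps. All fields are placeholders to be
// filled in from the actual datasheets.
struct PowerBudget {
    int servoCount;
    int servoStallCurrentMa;  // per servo
    int controllerCurrentMa;  // Arduino board plus sensors
};

// Peak demand if every servo stalls at once (a deliberately conservative case).
int peakCurrentMa(const PowerBudget& b) {
    return b.servoCount * b.servoStallCurrentMa + b.controllerCurrentMa;
}

// True if the supply rating covers peak demand plus the requested headroom.
bool supplyIsAdequate(const PowerBudget& b, int supplyRatingMa, int headroomPercent) {
    long required = static_cast<long>(peakCurrentMa(b)) * (100 + headroomPercent) / 100;
    return supplyRatingMa >= required;
}
```

With six servos at an assumed 700 mA stall current each and 100 mA for the controller, the peak is 4300 mA. With 20% headroom the requirement becomes 5160 mA, so a 5 A supply would fall short while a 6 A supply would pass. Powering the servos from a separate supply rather than from the Arduino's 5 V pin is the usual way to keep the board stable under such loads.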

Acquire a chassis, mounting hardware, and optional user interface components like a display or buttons based on project design. Consider safety features such as emergency stop buttons or obstacle detection. Optionally, add sensors like proximity or temperature sensors. Set up the Arduino IDE on your computer, ensuring compatibility and installing necessary libraries.

Prepare documentation tools (camera, notepads) for comprehensive project documentation. Always refer to datasheets for proper integration and compatibility. Fig. 1 shows the selected components, which then progress to the assembly and programming phases.

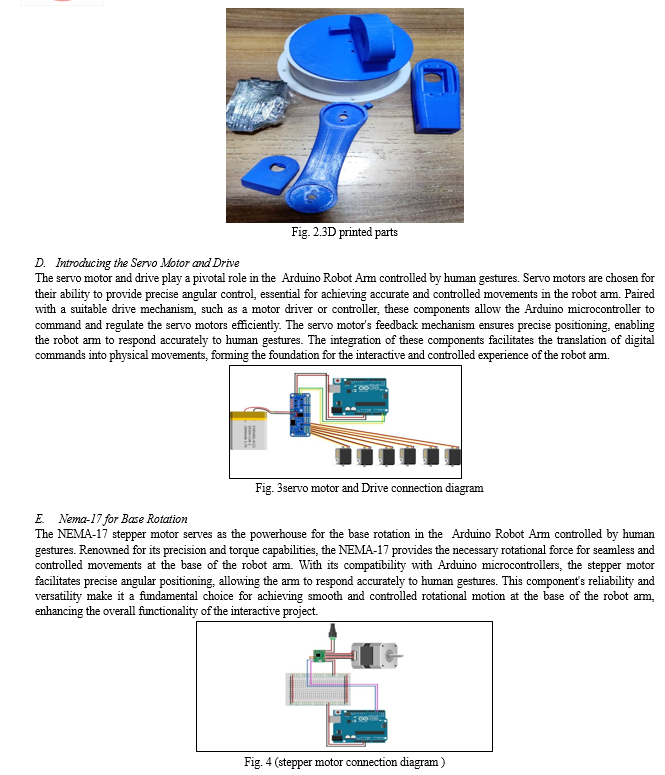

C. Assembly Parts: Robotic Arm Assembly

Purchasing a Robotic Arm can be quite expensive. To keep this robotic arm within budget, 3D printing a Robotic Arm was the best option.

This also helps in the long run, as there are many examples of 3D-printed robotic arms that can be customized, e.g., by adding an LED or creating a camera stand clamp.

As previously mentioned, the Robotic Arm was modeled around the Robotic Arm on Thingiverse. I chose this example because it is an excellent design that provides six axes, is well documented, and is strong and robust. You can access Wonder Tiger’s Robotic Arm from the parts list.

You can also find my remixes for a Robotic Arm Camera Stand. We’ll cover this more in a future release. However, printing all the parts could take at least 40 hours in total.

G. Future Improvements

- Implement more sophisticated gesture recognition algorithms or integrate machine learning techniques to improve the system's ability to recognize a diverse range of gestures accurately.

- Explore cloud-based solutions to offload computational tasks, enabling the robot arm to perform more complex operations without relying solely on its onboard processing capabilities.

- Implement real-time feedback mechanisms, such as haptic feedback or visual indicators, to enhance user awareness and control during interactions with the robot arm.

H. Future Scope

The future of the DIY Arduino Robot Arm controlled by human gestures is promising, featuring advancements in human-robot interaction and education. Technological evolution may enhance gesture recognition through advanced sensors and machine learning. Improved materials could lead to more robust arms capable of intricate tasks, while edge computing and power-efficient components may extend capabilities. In education, the robot arm may become a key hands-on learning tool, fostering collaboration through online platforms. Beyond education, applications in assistive tech, entertainment, and collaborative work are expected. The growing enthusiast community, coupled with cutting-edge tech, ensures a dynamic future for Arduino-based gesture-controlled robot arms.

IV. ACKNOWLEDGEMENT

I would like to express my sincere gratitude to Swaraj Motors for their generous support and collaboration throughout the development of this project. Their commitment to innovation and dedication to advancing technological solutions have been instrumental in shaping the success of our endeavor.

Additionally, I extend heartfelt appreciation to VESP for providing valuable resources that have significantly enriched our project. The guidance and insights received from VESP have been crucial in enhancing our understanding and contributing to the overall excellence of the work. This project is a testament to the power of collaboration, and the support from Swaraj Motors and VESP has played a pivotal role in its realization. Their commitment to fostering progress and sharing knowledge has not only enriched our experience but has also contributed to the broader community's understanding of cutting-edge technologies.

On behalf of the author, I extend sincere thanks to Swaraj Motors and VESP for their unwavering support and valuable contributions to the success of this project. Their partnership has been a cornerstone in achieving our goals and pushing the boundaries of innovation.

Conclusion

Indeed, the Arduino Robot Arm, governed by human gestures, serves as a captivating educational tool, ushering enthusiasts and beginners into the realms of robotics and Arduino programming. Its accessible design, merging Arduino's versatility with precise servo motors and gesture recognition, offers an engaging hands-on experience in human-robot interaction. The project's open-source nature fosters collaboration within a supportive and evolving community. Looking ahead, potential advancements in gesture recognition, sensor technologies, and mechanical design open avenues for diverse applications, solidifying the Arduino Robot Arm as a symbol of limitless possibilities in robotics, inspiring ongoing innovation and community involvement.

References

[1] R. V. Dharaskar et al., “Robotic Arm Control Using Gesture and Voice,” Int. Journal of Computer, Inf. Technology & Bioinformatics (IJCITB), ISSN: 2278-7593, Vol. 1, Issue 1, 2012.

[2] D. ZheKang et al., “A fuzzy-based parametric fault diagnosis approach for multiple circuits,” 11th Int. Conference on Control, Belfast, UK, IEEE, 2016. DOI: 10.1109/CONTROL.2016.7737525.

[3] Abidhusain et al., “Flex Sensor Based Robotic Arm Controller Using Micro Controller,” Journal of Software Eng. and Applications, Vol. 5, No. 5, 2012.

[4] A. Rashmi et al., “Robotic Hand Controlling Using Flex Sensors and Arduino Uno,” Int. Research Journal of Eng. and Technology (IRJET), e-ISSN: 2395-0056, Vol. 06, Issue 04, Apr 2019.

[5] U. D. Meshram and R. Harkare, “FPGA Based Five-Axis Robot Arm Controller,” Int. Journal of Electronics Engineering, Vol. 2, No. 1, pp. 209-211, 2010.

[6] M. Bhusa et al., “Controlling Robot by Fingers Using Flex Sensors,” Int. Journal of Computer Trends and Tech., 67.8, pp. 13-17, 2019.

[7] C. P. Shinde, “Design of Myoelectric Arm,” Int. Journal of Advanced Science, Engineering, and Technology, ISSN 2319-5924, Vol. 1, Issue 1, pp. 21-25, 2012.

[8] R. Singh et al., “Design and Development of a Data Glove for the Assistance of the Physically Challenged,” Int. Journal of Elect. and Communication Eng. and Tech. (IJECET), 4(4), pp. 36-41, 2013.

[9] L. Shrimanth Sudheer, Immanuel J., P. Bhaskar, and Parvathi C. S., “ARM 7 Microcontroller Based Fuzzy Logic Controller for Liquid Level Control System,” International Journal of Electronics and Communication Eng. & Tech. (IJECET), 4(2), pp. 217-224, 2013.

Copyright

Copyright © 2024 Soham Tikam, Vinay Jadhav, Yash Lokhande, Ansh Birje, Lata Upadhye. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59466

Publish Date : 2024-03-27

ISSN : 2321-9653

Publisher Name : IJRASET
