Ijraset Journal For Research in Applied Science and Engineering Technology

Artificial Intelligence (AI): Evolution, Methodologies, and Applications

Authors: Dr. Anurag Shrivastava, Abhishek Pandey, Nikita Singh, Samriddhi Srivastava, Megha Srivastava, Astha Srivastava

DOI Link: https://doi.org/10.22214/ijraset.2024.61241

Abstract

Artificial intelligence (AI) is a technology that enables computers and machines to emulate human intelligence and problem-solving abilities. AI technologies allow computers to perform a wide range of advanced functions, including seeing, understanding and translating spoken and written language, analyzing data, and making recommendations. As a field of computer science, AI encompasses machine learning and deep learning and draws on related areas such as data analytics, linguistics, and software engineering. These disciplines often involve developing AI algorithms modeled on the decision-making processes of the human brain; such algorithms learn from existing data and make increasingly precise classifications or predictions over time. AI is a foundation for innovation in modern computing, creating value for both individuals and businesses. For example, AI is used to extract text and information from images and documents, turn unstructured content into business-ready structured data, and unlock valuable insights. Combined with other technologies such as sensors and robotics, AI can perform tasks that would otherwise require human intelligence or intervention. It is used in many areas of life, including education, finance, healthcare, and manufacturing. Examples include facial detection and recognition, text editors, digital assistants, and self-driving cars.

I. INTRODUCTION

Artificial intelligence [1] is a broad field of science concerned with building computers and machines that can reason, learn, and act in ways that would normally require human intelligence, or that involve data at a scale beyond what humans can analyze. AI can perform a variety of advanced functions, including seeing, understanding and translating spoken and written language, analyzing data, and making recommendations. AI works by combining large amounts of data with fast, iterative processing and intelligent algorithms, which enables software to learn automatically from patterns or features in the data. At an operational level for business use, AI is a set of technologies based predominantly on machine learning and deep learning, used for data analytics, prediction and forecasting, object categorization, natural language processing, recommendations, intelligent data retrieval, and more. AI automates repetitive learning and discovery through data: rather than automating manual tasks, it performs frequent, high-volume, computerized tasks. AI also adds intelligence to existing products, and many products already in use will be improved with AI capabilities. Automation, conversational platforms, bots, and smart machines can be combined with large amounts of data to improve many technologies at home and in the workplace, such as security cameras. AI adapts through progressive learning algorithms that let the data do the programming. It finds structure and regularities in data so that algorithms can acquire skills. Just as an algorithm can teach itself to play chess, it can teach itself which product to recommend in online advertising, and the models adapt when they are given new data.

AI analyzes more data, and in greater depth, using neural networks that have many hidden layers. Building a fraud detection system with several hidden layers used to be impractical, but greater computing power and big data have changed that. Deep learning models need large amounts of data for training because they learn directly from the data. Deep neural networks also allow AI to reach high accuracy. For instance, interactions with Alexa and Google are based on deep learning, and these products become more accurate the more they are used. In the medical field, AI techniques such as deep learning and object recognition can now be used to pinpoint cancer on medical images with greater accuracy. AI also gets the most out of data. When algorithms are self-learning, the data itself becomes an asset: the answers are in the data, and AI is the means of uncovering them. Because data is now more important than ever, it can create a competitive advantage. In a competitive industry where everyone applies similar techniques, the organization with the best data will prevail.

Even so, using that data responsibly requires trustworthy AI, so it is essential that AI systems be ethical, equitable, and sustainable.
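To make the idea of hidden layers concrete, the following is a minimal sketch of a forward pass through a small network with two hidden layers. It is an illustration only, not a trained fraud detection model: the function names, the layer sizes, and the hand-picked weights are all assumptions introduced here.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per output unit plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Rectified linear activation applied element-wise."""
    return [max(0.0, v) for v in values]


def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(features, layers):
    """Pass the features through every hidden layer, then the output layer."""
    activation = features
    for weights, biases in layers[:-1]:
        activation = relu(dense(activation, weights, biases))
    weights, biases = layers[-1]
    return sigmoid(dense(activation, weights, biases)[0])


# Hypothetical, hand-picked weights; a real model would learn these from data.
layers = [
    ([[0.5, -0.2], [0.3, 0.8]], [0.0, -0.1]),  # hidden layer 1: 2 inputs -> 2 units
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),   # hidden layer 2: 2 units -> 2 units
    ([[1.0, 1.0]], [-0.5]),                    # output layer: 2 units -> 1 score
]

score = forward([1.0, 2.0], layers)
print(f"score = {score:.4f}")
```

Training would adjust the weights so that the output score matches labeled examples; the sketch only shows how data flows through the layers.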

A. Four Stages of Artificial Intelligence

- Reactive Machines: Reactive machines are artificial intelligence systems that only react to different types of stimuli according to preprogrammed rules. These machines do not use memory and thus cannot learn from new data. IBM’s Deep Blue is an example of a reactive machine.

- Limited Memory: Most modern AI is considered limited-memory AI. It uses memory to improve over time as it is trained on new data, typically through an artificial neural network or another training model. Deep learning, a subset of machine learning, is classified as limited-memory artificial intelligence.

- Theory of Mind: Theory-of-mind AI does not yet exist, but research into its possibilities is underway. It describes artificial intelligence that can emulate the human mind and has decision-making abilities equivalent to those of a human, including recognizing and remembering emotions and reacting in social situations as a human would.

- Self-Aware: Self-aware AI, a step beyond theory-of-mind AI, describes a hypothetical machine that is aware of its own existence and has the intellectual and emotional capabilities of a human. Like theory-of-mind AI, self-aware AI does not currently exist.

II. HISTORY OF AI

- In 1950, Alan Turing, often referred to as the "father of computer science", published the paper 'Computing Machinery and Intelligence', which opens with the question "Can machines think?". In it he proposes a test, now known as the Turing Test, in which a human interrogator attempts to distinguish a computer's text responses from a human's. The test marks an important event in the history of AI.

- In 1956, John McCarthy coined the term 'Artificial Intelligence' at the first-ever AI conference at Dartmouth College. Early AI research in the 1950s explored topics such as problem solving and symbolic methods. AI has gained far more recognition today owing to growing data volumes, advanced algorithms, and improvements in computing power and storage.

- In the 1960s, the US Department of Defense took an interest in this type of work and began training computers to emulate basic human reasoning. For instance, the Defense Advanced Research Projects Agency (DARPA) completed street mapping projects in the 1970s and produced intelligent personal assistants in 2003. In the late 1950s, Frank Rosenblatt built the Mark I Perceptron, the first computer based on a neural network that learned through trial and error. In 1969, Marvin Minsky and Seymour Papert published the book Perceptrons, which became both a landmark work on neural networks and, at least for a while, an argument against further neural network research projects.

- In the 1980s, neural networks that train themselves using the backpropagation algorithm became widely used in AI applications.

- In 1997, IBM's Deep Blue [9], an AI-powered computer system, defeated the world chess champion Garry Kasparov in a chess match.

- In 2011, IBM [9] built Watson, an open-domain question-answering system, which defeated two of the most highly ranked champions, Ken Jennings and Brad Rutter, on Jeopardy!

- In 2016, AlphaGo, a program by DeepMind based on deep neural networks, defeated the world champion Go player Lee Sedol in a five-game match. The victory is significant given the enormous number of possible moves in Go. Google had acquired DeepMind in 2014 for a reported USD 400 million.

- In 2023, the rise of large language models such as ChatGPT brought a significant shift in AI performance and in its potential to create enterprise value. With these new generative AI practices, deep learning models can be trained on massive amounts of raw, unlabeled data.

Today's AI technologies are not as smart or as terrifying as depicted in movies (such as AI robots taking over the world); however, AI has advanced to provide numerous specific benefits across different industries.

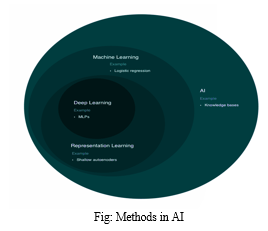

III. METHODS IN AI

Artificial intelligence (AI) encompasses a diverse set of approaches and techniques designed to enable machines to perform tasks that typically require human intelligence. The most common methods and techniques used in artificial intelligence are the following:

1. Machine Learning (ML): Machine learning [2] is a subset of AI that focuses on developing algorithms that enable computers to learn from data, make predictions or decisions, and improve over time without being explicitly programmed for the task.

Machine learning can be classified into various types:

a. Supervised Learning: Supervised learning is a branch of machine learning in which a model learns from labeled data, where each input is paired with a corresponding output or target label. Examples include linear regression, logistic regression, neural networks, decision trees, random forests, and support vector machines (SVMs).

b. Unsupervised Learning: Unsupervised learning is a branch of machine learning in which a model learns from unlabeled data to find patterns, structure, or relationships within the data. Examples include K-means clustering and hierarchical clustering.

c. Semi-supervised Learning: Semi-supervised learning combines supervised and unsupervised learning, using a small amount of labeled data together with a larger amount of unlabeled data for training.

d. Reinforcement Learning: Reinforcement learning is a branch of machine learning in which agents learn to make decisions by interacting with an environment so as to maximize cumulative reward. Examples include Q-learning, policy gradients, and deep reinforcement learning approaches.
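The difference between learning from labeled and unlabeled data can be sketched in a few lines. The example below is a minimal illustration under stated assumptions: `fit_line` is a hypothetical helper that fits a least-squares line to labeled (input, output) pairs, and `k_means_1d` is a hypothetical one-dimensional K-means that groups unlabeled values; the toy data and starting centroids are made up for the illustration.

```python
def fit_line(xs, ys):
    """Supervised: least-squares fit of y = slope * x + intercept to labeled pairs."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def k_means_1d(points, start_centroids, iterations=10):
    """Unsupervised: group unlabeled 1-D points around iteratively updated centroids."""
    centroids = list(start_centroids)  # copy so the caller's list is not modified
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(len(centroids)), key=lambda c: abs(p - centroids[c]))
                  for p in points]
        # Update step: each centroid moves to the mean of its assigned points.
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = sum(members) / len(members)
    return labels, centroids


# Labeled data: every input x comes with a target y.
slope, intercept = fit_line([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])

# Unlabeled data: only the values are given; the groups are discovered.
labels, centers = k_means_1d([1.0, 1.2, 0.8, 8.0, 8.2, 7.8], [0.0, 10.0])

print(slope, intercept)
print(labels, centers)
```

In the supervised case the targets guide the fit directly, while in the unsupervised case the algorithm only sees the values and recovers the two groups from their spacing.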

2. Deep Learning: Deep learning [3] is a subfield of machine learning that uses artificial neural networks with several layers to learn complex patterns in huge amounts of data. The application of deep learning has been particularly effective in areas such as image recognition, natural language processing, and speech recognition.

3. Natural Language Processing (NLP): Natural Language Processing is a branch of AI that focuses on enabling computers to comprehend, interpret, and generate human language. Text analysis, sentiment analysis, machine translation, named entity recognition are a few of the techniques used in NLP.

4. Computer Vision: Computer vision is concerned with enabling computers to interpret and understand visual information from the real world. Some techniques of computer vision include image recognition, object detection, image segmentation, and video tracking.

5. Expert Systems: Expert systems are AI systems that mimic the decision-making capabilities of a human expert in a specific field. They use rules and logical reasoning to provide solutions or recommendations.

6. Evolutionary Algorithms: Evolutionary algorithms, inspired by biological evolution, involve the utilization of mechanisms such as mutation, recombination, and selection to evolve solutions to optimization or search problems.

7. Fuzzy Logic: Fuzzy logic allows for reasoning under uncertainty by modeling linguistic terms and imprecise information. It can be especially beneficial in systems where traditional binary logic may not be suitable.

8. Bayesian Networks: Bayesian networks are probabilistic graphical models that are used to represent probabilistic relationships among variables. They are used for reasoning under uncertainty, decision making, and prediction tasks.

9. Swarm Intelligence: Swarm intelligence entails the collective behavior of decentralized, self-organized systems, which are inspired by the behavior of social insects or other animal species. Swarm intelligence involves techniques such as particle swarm optimization and ant colony optimization.

10. Robotics: Robotics integrates elements of AI, machine learning, and mechanical engineering to design and build robots that can perform tasks autonomously or with minimal human intervention.
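As one concrete instance of the methods listed above, the selection, recombination, and mutation mechanisms of an evolutionary algorithm can be sketched on a toy problem. The sketch assumes a simple task chosen for illustration (maximize the number of 1s in a bit string); the function names, population size, and mutation rate are hypothetical choices, not values from the literature.

```python
import random


def fitness(bits):
    """Toy objective: the number of 1s in the bit string."""
    return sum(bits)


def evolve(n_bits=20, pop_size=30, generations=40, mutation_rate=0.05, seed=0):
    """Evolve bit strings using selection, recombination, and mutation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # selection: keep the fitter half
        children = [population[0][:]]          # elitism: carry over the best as-is
        while len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, n_bits)
            child = mother[:cut] + father[cut:]  # recombination: one-point crossover
            # Mutation: flip each bit with a small probability.
            child = [1 - b if rng.random() < mutation_rate else b for b in child]
            children.append(child)
        population = children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history


best, history = evolve()
print(fitness(best), history[0])
```

Because the best individual is copied unchanged into each new generation, the best fitness in this sketch never decreases from one generation to the next.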

IV. APPLICATIONS OF AI

Artificial intelligence (AI) has become crucial today because of its ability to solve complex problems efficiently. It has a wide range of applications across industries and domains, transforming the way tasks are performed, decisions are made, and problems are solved. Significant uses of AI include the following:

A. Healthcare

- Medical Diagnosis: AI algorithms can analyze medical images (e.g., X-rays, MRIs, CT scans) to assist radiologists in diagnosing diseases like cancer, fractures, and neurological disorders.

- Personalized Treatment: AI systems analyze patient data to recommend personalized treatment plans, predict disease progression, and identify potential drug interactions or adverse reactions.

- Drug Discovery: AI accelerates the drug discovery process by simulating molecular interactions, predicting drug efficacy, and identifying potential drug candidates for various diseases.

B. Finance

- Algorithmic Trading: AI-powered trading algorithms analyze market data, detect patterns, and execute trades at high speed to optimize investment strategies and minimize risks.

- Fraud Detection: AI systems detect fraudulent activity in financial transactions by analyzing patterns, anomalies, and historical data to identify suspicious behavior and prevent financial crimes.

- Credit Scoring: AI-based credit scoring models assess creditworthiness by analyzing financial data, transaction history, and behavioral patterns to support lending decisions.
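The idea of spotting anomalies against historical data, as in the fraud detection item above, can be illustrated with a deliberately simple statistical rule. This is a sketch under stated assumptions rather than a production technique: the function name `flag_anomalies`, the threshold of three standard deviations, and the transaction amounts are all hypothetical, and real systems typically combine many more signals.

```python
from statistics import mean, stdev


def flag_anomalies(history, new_amounts, z_threshold=3.0):
    """Return the new amounts that lie far from the historical average.

    Assumes the history holds at least two values that are not all identical,
    so the sample standard deviation is defined and non-zero.
    """
    baseline = mean(history)
    spread = stdev(history)
    return [amount for amount in new_amounts
            if abs(amount - baseline) / spread > z_threshold]


# Hypothetical past transaction amounts for one account.
past_amounts = [20.0, 25.0, 22.0, 19.0, 24.0, 21.0, 23.0, 20.0]

flagged = flag_anomalies(past_amounts, [22.0, 500.0, 18.0])
print(flagged)
```

With these made-up numbers only the 500.0 transaction is flagged, because it is the only one more than three standard deviations away from the account's usual spending.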

C. Retail

- Personalized Recommendations: AI algorithms analyze customer behavior, preferences, and purchase history to provide personalized product recommendations and improve customer engagement and sales.

- Inventory Management: AI systems optimize inventory levels, forecast demand, and organize supply chain operations to reduce stock-outs, minimize overstocking, and improve profitability.

- Visual Search: AI-powered visual search technologies enable users to search for products using images, enhancing the shopping experience and increasing conversion rates.
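As a rough illustration of recommendations based on purchase history, the sketch below scores products a customer does not yet own by how similar the other customers who bought them are. It assumes a simple user-based approach with Jaccard similarity; the function names and the customer data are hypothetical, and production recommenders are considerably more elaborate.

```python
def jaccard(a, b):
    """Similarity of two sets: size of the overlap divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(target, purchases):
    """Rank items the target has not bought by the similarity of their buyers."""
    owned = purchases[target]
    scores = {}
    for user, items in purchases.items():
        if user == target:
            continue
        similarity = jaccard(owned, items)
        for item in items - owned:
            scores[item] = scores.get(item, 0.0) + similarity
    # Highest score first; ties are broken alphabetically for a stable order.
    ranked = sorted(scores, key=lambda item: (-scores[item], item))
    return [item for item in ranked if scores[item] > 0]


# Hypothetical purchase histories.
purchases = {
    "ana": {"laptop", "mouse", "keyboard"},
    "ben": {"laptop", "mouse", "monitor"},
    "cho": {"novel", "lamp"},
}

suggestions = recommend("ana", purchases)
print(suggestions)
```

Here "ben" shares two purchases with "ana", so his remaining item is suggested, while items bought only by "cho", who has nothing in common with "ana", are left out.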

D. Autonomous Vehicles

- Self-Driving Cars: AI-driven autonomous vehicle systems use sensors, cameras, and machine learning algorithms to perceive the environment, navigate safely, and make real-time decisions to control vehicle movements.

- Traffic Management: AI technologies optimize traffic flow, reduce congestion, and improve road safety by analyzing traffic patterns, predicting traffic conditions, and coordinating traffic signals.

E. Cyber-security

- Threat Detection: AI-based cyber-security systems detect and respond to cyber-security threats in real-time by analyzing network traffic, identifying suspicious behavior, and blocking malicious activities.

- Vulnerability Assessment: AI algorithms identify vulnerabilities in software and network infrastructure, prioritize security patches, and proactively mitigate security risks to protect against cyber-attacks.

F. Manufacturing

- Predictive Maintenance: AI-driven predictive maintenance systems monitor equipment health, analyze sensor data, and predict potential failures or breakdowns, enabling proactive maintenance to minimize downtime and reduce maintenance costs.

- Quality Control: AI-powered quality control systems inspect products, detect defects, and ensure product quality by analyzing images, sounds, or sensor data in real-time during the manufacturing process.

These are a few examples of how AI is transforming industries and bringing innovation across various sectors. As AI technologies continue to evolve, their applications are expanding further, leading to new possibilities and opportunities for improving efficiency, productivity, and decision-making in diverse areas of human venture.

V. ACKNOWLEDGEMENT

We express our profound appreciation and thanks to the Head of Computer Science and Engineering and to our project guide. Without their wise counsel and able guidance, it would have been impossible to complete the project in this manner. We are grateful to our team members for their hard work and cooperation in completing this paper and for their support throughout the course of this work. We consider this opportunity a significant milestone in our careers. We will strive to apply the knowledge and skills gained during this work as effectively as possible, and we will keep developing those skills to achieve our career goals.

Conclusion

Artificial Intelligence (AI) has a major effect on technological innovation, industries, and the economy across the globe. In this paper, we have explored different dimensions of AI: its origins, its evolution, its methods, and its applications. The paper begins by highlighting AI's ability to mimic human intelligence and solve complex problems across domains, and then traces the emergence of AI and its evolution over the years. Next, we explain the methods of AI, illustrating the basic techniques and algorithms, from conventional approaches such as expert systems and rule-based reasoning to contemporary methods such as machine learning, natural language processing, and computer vision. AI comprises a variety of tools and methods adapted to specific tasks and challenges. Lastly, we survey applications of AI, showing its impact across sectors and industries. Whether by improving healthcare delivery through personalized treatment recommendations, optimizing financial markets, or making transportation safer with autonomous vehicles, AI is reshaping the way we live through technology and innovation.

References

[1] Stuart Russell and Peter Norvig. Artificial Intelligence: A Modern Approach.

[2] Andrew Ng. Machine Learning Yearning.

[3] Ian Goodfellow, Yoshua Bengio, and Aaron Courville. Deep Learning.

[4] Peter Flach. Machine Learning: The Art and Science of Algorithms that Make Sense of Data. Cambridge University Press, 2012.

[5] Artificial Intelligence and Its Applications. International Journal of Science & Engineering Development Research, Vol. 8, Issue 4, pp. 356-360.

[6] https://en.wikipedia.org/wiki/Artificial_intelligence

[7] https://www.investopedia.com/terms/a/artificial-intelligence-ai.asp

[8] https://www.britannica.com/technology/artificial-intelligence

[9] https://www.ibm.com/topics/artificial-intelligence

[10] https://cloud.google.com/learn/what-is-artificial-intelligence#section-9

Copyright

Copyright © 2024 Dr. Anurag Shrivastava, Abhishek Pandey, Nikita Singh, Samriddhi Srivastava, Megha Srivastava, Astha Srivastava. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61241

Publish Date : 2024-04-29

ISSN : 2321-9653

Publisher Name : IJRASET
