Ijraset Journal For Research in Applied Science and Engineering Technology


Automatic Home Controlling System for Paralyzed Patient using Eye Gestures

Authors: Chanthini. K, Ravuri Mona Sri Vallika, Srinithi. V, K. Divya

DOI Link: https://doi.org/10.22214/ijraset.2024.61051


Abstract

The proposed system aims to improve the lives of paralyzed individuals by using eye-tracking technology to control home appliances. Using OpenCV for robust, real-time eye tracking, the system lets patients interact with their surroundings by directing their gaze toward predefined patterns or commands. A user-friendly interface links eye movements to household devices such as lights, fans, and entertainment systems. The solution is intended to help individuals with limited mobility regain independence, simplifying the management of daily routines and living spaces through intuitive gaze-based commands. By providing a new avenue for communication and control, the system offers paralyzed patients a renewed sense of autonomy and convenience and can improve their quality of life.

I. INTRODUCTION

Paralysis is the loss of muscle function in a specific part of the body. It can happen to anyone at any point in life, and there is currently no cure. Depending on the type and cause of paralysis, partial or complete recovery is possible. Temporary paralysis, such as that caused by Bell's palsy or stroke, may resolve on its own without medical treatment, and even when paralysis is due to a spinal cord injury or a chronic neurological condition, a person may still recover some muscle control. Although rehabilitation does not cure paralysis, it can help prevent symptoms from worsening.

The proposed system integrates eye-tracking technology with the control of household appliances to give paralyzed individuals more independence. At a time when many technological advances aim to enhance quality of life, this project specifically addresses the needs of paralyzed patients, providing a comprehensive solution that makes it easier for them to interact with their surroundings.

The proposed system is designed to let paralyzed patients control their home appliances through eye-tracking technology. It uses OpenCV for robust, real-time eye tracking, allowing patients to interact with their environment by focusing their gaze on specific predefined patterns or commands. Through a user-friendly interface, patients can link their eye movements to household devices such as lights, fans, and entertainment systems. With this system, individuals with limited mobility can regain a sense of independence and convenience, managing their daily routines and living spaces simply by directing their gaze.

A. Existing Challenges

Despite the promising potential of eye-tracking technology, there are several challenges to overcome in its implementation for controlling home appliances for paralyzed individuals. One major hurdle is ensuring the accuracy and reliability of eye-tracking systems, especially in real-time applications where precision is critical for seamless interaction. Additionally, adapting the technology to cater to diverse eye movement patterns and individual variations poses a significant challenge. Furthermore, integrating the system with existing home appliances and ensuring compatibility across different brands and models can be complex and requires careful consideration.

B. Project Objectives

Develop a robust and accurate eye-tracking algorithm using OpenCV to capture and interpret the user's eye movements precisely in real time. Design an efficient communication protocol between the software interface and the hardware components to ensure seamless transmission of commands for controlling home appliances.

Implement a reliable and responsive hardware interface using NodeMCU, servo motor, and relay to interpret the received commands and activate/deactivate the corresponding appliances with minimal latency and maximum accuracy.
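The objectives do not specify the protocol between the Python software and the NodeMCU. One simple possibility, sketched here purely as an assumption, is for the NodeMCU to run a small HTTP server on the home Wi-Fi network and for the software to encode each command as a GET request. The `/relay` path, the `device` and `state` query parameters, the channel numbers, and the helper names are all hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Hypothetical mapping from appliance names to relay channels on the NodeMCU.
RELAY_CHANNELS = {"light": 1, "fan": 2, "tv": 3}


def build_command_url(host: str, appliance: str, turn_on: bool) -> str:
    """Encode one appliance command as a URL for a hypothetical /relay endpoint."""
    if appliance not in RELAY_CHANNELS:
        raise ValueError(f"unknown appliance: {appliance!r}")
    query = urlencode({"device": RELAY_CHANNELS[appliance],
                       "state": "on" if turn_on else "off"})
    return f"http://{host}/relay?{query}"


def send_command(host: str, appliance: str, turn_on: bool, timeout: float = 2.0) -> int:
    """Send the command and return the HTTP status code (needs a reachable device)."""
    with urlopen(build_command_url(host, appliance, turn_on), timeout=timeout) as response:
        return response.status
```

For example, `build_command_url("192.168.1.50", "fan", True)` yields `http://192.168.1.50/relay?device=2&state=on` (the address is illustrative). The short timeout in `send_command` keeps an unreachable device from stalling the eye-tracking loop. On the NodeMCU side, the request handler would switch the matching relay channel.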

C. Key Features and Innovations

The system uses OpenCV for robust, real-time eye tracking, enabling paralyzed individuals to control various household appliances. Its user-friendly interface maps predefined eye-movement patterns or commands to specific actions, such as turning on lights, adjusting fans, or controlling entertainment systems. Through gaze-based commands, individuals with limited mobility can regain independence and streamline the management of their daily routines and living spaces.

II. LITERATURE SURVEY

A literature survey is a key step in the software development process. Before developing the tool, it is necessary to determine the time factor, economy, and company strength. This section reviews previous studies on eye-gesture-based assistive systems, including cursor control and wheelchair control, that provide background for an automatic home-appliance control system driven by eye gestures.

This project was developed based on the following papers:

Dhanasekar J, Guru Aravindh K B et al. [1] (2023) discussed “System Cursor Control Using Human Eyeball Movement”. This paper develops a system for controlling the computer cursor with the movement of the user's eyeballs, providing a hands-free alternative to the traditional mouse. The authors wrote a Python program that uses image processing techniques and machine learning algorithms to detect eyeball movements and blinks and to translate them into cursor movements and click actions, respectively. The system achieved a high level of accuracy in tracking the user's eye movements, and users adapted easily to the new input method. The system could improve the accessibility and usability of computers for individuals with motor impairments or disabilities, and this hands-free control method also has potential applications in gaming and virtual reality environments.

Praveena Narayanan, Sri Harsha. N et al. [2] (2022) discussed “A Generic Algorithm for Controlling an Eyeball-based Cursor System”, which proposes a specific human-computer interaction system. Conventional systems depend solely on input devices to receive input from the user, so people afflicted by certain ailments or disorders are unable to use computers. Allowing persons with disabilities and vision impairments to operate computers with their eyes would be very beneficial to them, and this form of control would reduce the need for others to help them operate the computer. It is most useful for individuals without the use of their hands, who can work using eye motions alone. In this system, the movement of the computer cursor is closely tied to the center of the pupil, and OpenCV libraries and the Haar cascade algorithm are used to detect eye movements.

Maheswari R et al. [3] (2022), in “Voice Control and Eyeball Movement Operated Wheelchair”, note that physically disabled persons rely heavily on rehabilitative mobility aids. Considerable effort has gone into building human-machine interfaces (HMIs) that use bio-signals to regulate electronic mobility aids. Conveying bio-signals to an HMI requires precise instructions and movement of body parts, which is the real difficulty for persons with a high level of disability. The study therefore introduces a signal-driven system that uses ocular movement and speech recognition to implement a staircase-climbing mechanism in a wheelchair for physically disabled users. The system has three parts: first, an optical signal used to identify ocular movement; second, radio-frequency voice recognition; and third, a rocker-bogie mechanism, with all three integrated into the wheelchair.

Marwa Tharwat et al. [4] (2022) discussed “Eye-Controlled Wheelchair”. A powered wheelchair is a mobility device for people with moderate or severe physical disabilities or chronic disorders. Many patients with amyotrophic lateral sclerosis and quadriplegia must depend on others to move their wheelchairs. Although assistive mobility devices such as manual and electric wheelchairs exist, these options do not suit all individuals with activity limitations. The project uses information technology to help people with disabilities move their wheelchairs independently so that they can enjoy their lives and integrate into their community. The proposed hands-free wheelchair is based on an eye-controlled system. The paper also notes that several measurement systems have evolved for eye trackers, such as search coil, electrooculography, video-oculography, and infrared oculography systems.

Rani. V Udaya and S Poojasree [5] (2022) explained “An IOT Driven Eyeball And Gesture-Controlled Smart Wheelchair System for Disabled Person”. The field of smart homes has evolved with the goal of restoring the ability of physically disabled people, the elderly, and others with limited mobility to accomplish critical everyday activities with sufficient help from current technology. One of the most difficult aspects of smart wheelchair design is ensuring that the wheelchair functions appropriately and effectively. Smart wheelchairs make it easier for people with disabilities to navigate indoor areas and perform daily activities on their own. In this device, manual wheelchair operation is replaced by automatic control driven by eyeball movement, allowing patients to move more freely and with little or no difficulty.

S. N. Shivappriya et al. [6] (2021), in “Intelligent Eyeball Movement Controlled Wheelchair”, observe that wheelchairs play a significant role for people who are physically disabled. Currently available wheelchairs are controlled by a joystick, so these traditional wheelchairs only let the user travel in a specific direction with the aid of the hand. Such devices are very difficult for completely paralyzed people to use, since they cannot lift their hands. In such circumstances, eye movements can allow them to move in the desired direction.

Sivasangari. A et al. [7] (2020) explained “Eyeball based Cursor Movement Control”, which introduces a human-computer interaction system. People suffering from certain diseases or illnesses are unable to operate computers, so controlling computers with the eyes would be of great use to people with disabilities. This type of control would also remove the need for another person's help in handling the computer, and it is most useful for people without hands, who can operate the computer with their eye movements.

Vandana Khare et al. [8] (2019) explained “Cursor Control Using Eye Ball Movement”. Some people are unable to operate a computer because of their illnesses, so it is sensible to introduce a method of computer operation that remains accessible to the differently abled. The human eye can be considered a substitute for computer-operating hardware. In this paper, an Internet Protocol (IP) camera captures images of the eye for cursor movement. A Raspberry Pi performs pupil identification and drives the computer cursor, and an Eye Aspect Ratio (EAR) is computed to detect blinks of the left or right eye, using the OpenCV (Open Source Computer Vision) module for the Python programming language.

Adarsh Rajesh and Megha Mantur [9] (2017), in “Eyeball gesture controlled automatic wheelchair using deep learning”, note that traditional wheelchair control is very difficult for people with quadriplegia, who are therefore mostly restricted to their beds. Alternatives include electroencephalography-based and electrooculography-based automatic wheelchairs, which use electrodes to measure electrical activity in the brain and around the eye, respectively. These are expensive and uncomfortable, and are almost impossible to procure for someone from a low-income economy. The authors present a wheelchair system that can be controlled entirely with eye movements and blinks, using deep convolutional neural networks for classification.

Osama Mazhar et al. [10] (2015) discussed “A real-time webcam based Eye Ball Tracking System using MATLAB”. The Eye Ball Tracking System is a device intended to assist patients who cannot perform any voluntary tasks related to daily life. Patients who can control only their eyes can still communicate with the real world using assistive devices like the one proposed. The device provides a human-computer interface that lets patients make decisions based on their eye movements. A real-time data stream is captured via a webcam and transferred serially to MATLAB.

III. METHODOLOGY

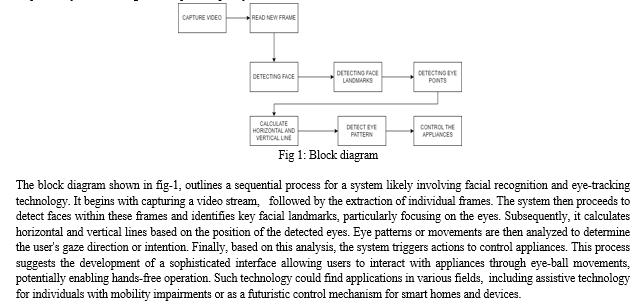

The methodology for implementing the proposed eye-tracking system aims to empower paralyzed individuals by leveraging advanced computer vision techniques for controlling home appliances. This intricate process involves several key steps, each contributing to the accurate and responsive analysis of user eye movements.

A. Video Capture And Frame Extraction

The initial phase focuses on video capture, where real-time footage of the user's face is obtained through a camera. This video stream serves as the foundation for subsequent processing. Frame extraction is then performed, processing the captured video frame by frame. This real-time extraction is crucial for ensuring the system's prompt response to the user's gaze.

B. Face Detection

Following frame extraction, the attention shifts to face detection. OpenCV's face detection algorithms are employed to identify and isolate the user's face within each frame. This step is pivotal, as accurate face detection forms the basis for subsequent eye-tracking analysis.

C. Eye Detection Through Face Landmarks

Once the face is successfully identified, the methodology progresses to eye detection through face landmarks. Facial landmark detection algorithms are applied to identify key points on the face, including those corresponding to the eyes. These landmarks serve as crucial reference points for precise eye-tracking.
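The landmark model is not named in the text. This sketch assumes a 68-point model in the iBUG 300-W convention, such as dlib's shape predictor, on which the GazeTracking library builds. In that convention each eye is outlined by six consecutive points, so extracting the eye points is a simple index lookup. The helper names and the pixel margin are hypothetical. The detector that produces the `(68, 2)` landmark array is not shown.

```python
import numpy as np

# Eye landmark indices in the 68-point (iBUG 300-W) scheme. "left"/"right" refer to sides
# of the image: 36-41 outline the eye on the image's left (the subject's right eye in an
# unmirrored frame), 42-47 the other eye. Other landmark models number points differently.
EYE_INDICES = {"left": list(range(36, 42)), "right": list(range(42, 48))}


def eye_points(landmarks, side):
    """Return the six (x, y) points outlining one eye from a (68, 2) landmark array."""
    landmarks = np.asarray(landmarks)
    if landmarks.shape != (68, 2):
        raise ValueError("expected a (68, 2) array of landmark coordinates")
    return landmarks[EYE_INDICES[side]]


def eye_bounding_box(points, margin=5):
    """Return (x0, y0, x1, y1) around the eye points, padded by a pixel margin."""
    x0, y0 = points.min(axis=0) - margin
    x1, y1 = points.max(axis=0) + margin
    return int(x0), int(y0), int(x1), int(y1)
```

The bounding box can be used to crop the eye region from the grayscale frame before the eye points are analyzed.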

D. Eye Point Detection

The subsequent step involves detecting specific eye points within each eye. Algorithms are employed to pinpoint key features, such as corners and the center. The precise localization of these points is essential for accurately calculating the horizontal and vertical positions of the eyes.

E. Calculation Of Horizontal And Vertical Eye Positions

The calculation of horizontal and vertical eye positions is a crucial intermediary step in the methodology. Through the analysis of the relative positions of the identified eye points, the system determines the orientation of the user's gaze within the frame. This quantitative data forms the foundation for subsequent pattern recognition.
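The relative-position calculation can be written as normalized ratios. Let x_min, x_max, y_min, and y_max be the extent of the eye outline and (x_p, y_p) the pupil center. Then the horizontal ratio r_h = (x_p - x_min) / (x_max - x_min) and the vertical ratio r_v = (y_p - y_min) / (y_max - y_min) place the pupil within the eye on a 0 to 1 scale. This particular normalization is an assumption for illustration. The GazeTracking library mentioned in the conclusion exposes comparable values through its `horizontal_ratio()` and `vertical_ratio()` methods, with its own scaling.

```python
def _clamp(value, low=0.0, high=1.0):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def gaze_ratios(pupil, eye_points):
    """Normalize a pupil position to (horizontal, vertical) ratios within the eye outline.

    Horizontal: 0.0 = image-left edge of the eye, 1.0 = image-right edge.
    Vertical: 0.0 = top of the eye opening, 1.0 = bottom (image y grows downwards).
    Returns None for a degenerate outline, e.g. during a full blink.
    """
    xs = [float(p[0]) for p in eye_points]
    ys = [float(p[1]) for p in eye_points]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    if x_max == x_min or y_max == y_min:
        return None
    horizontal = (pupil[0] - x_min) / (x_max - x_min)
    vertical = (pupil[1] - y_min) / (y_max - y_min)
    return _clamp(horizontal), _clamp(vertical)


def average_ratios(left, right):
    """Average the ratio pairs of both eyes, skipping an eye whose ratios are None."""
    valid = [r for r in (left, right) if r is not None]
    if not valid:
        return None
    return (sum(r[0] for r in valid) / len(valid),
            sum(r[1] for r in valid) / len(valid))
```

Averaging both eyes smooths out small landmark errors in either eye. In an unmirrored camera frame, a low horizontal ratio means the user is looking toward their own right.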

F. Pattern Recognition And Analysis

The final phase involves the analysis of eye patterns to discern specific user actions, such as blinks or directional gazes. For blink detection, the system monitors changes in eye openness over time, identifying instances of rapid closure and reopening. Directional gaze detection involves analyzing the movement patterns of the eyes to determine whether the user is looking to the left or right.
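Blink detection from changes in eye openness is commonly implemented with the eye aspect ratio (EAR), the measure mentioned in the survey of [8]. For the six eye-contour points p1 to p6, EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|), and the value drops sharply as the eyelids close. The text does not say which openness measure the system uses, so the sketch below assumes EAR. It counts a blink when EAR stays below a threshold for a few consecutive frames and then recovers. The threshold of 0.21 and the two-frame minimum are assumed defaults that would need tuning per user.

```python
import math


def _dist(a, b):
    """Euclidean distance between two (x, y) points (math.hypot keeps Python 3.7 support)."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(points):
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|) for six eye points p1..p6."""
    p1, p2, p3, p4, p5, p6 = points
    return (_dist(p2, p6) + _dist(p3, p5)) / (2.0 * _dist(p1, p4))


class BlinkDetector:
    """Report a blink when EAR stays below a threshold for a few frames and then recovers."""

    def __init__(self, threshold=0.21, min_frames=2):
        self.threshold = threshold    # assumed value; tune per user and camera
        self.min_frames = min_frames  # consecutive closed frames needed to count a blink
        self._closed_frames = 0

    def update(self, ear):
        """Feed one frame's EAR; return True on the frame where a blink completes."""
        if ear < self.threshold:
            self._closed_frames += 1
            return False
        blinked = self._closed_frames >= self.min_frames
        self._closed_frames = 0
        return blinked
```

Requiring a minimum run of closed frames filters out single-frame landmark jitter. Waiting for the eye to reopen means that one long closure is counted as a single blink rather than many.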

G. User Intent Classification

The culmination of these analyses results in the classification of user intent. The system distinguishes between blinks and directional gazes, enabling it to send corresponding control signals to home appliances. A blink may signify a general command, while left or right gazes could be associated with specific appliance control commands.
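The text does not define the mapping from eye events to commands. The sketch below assumes a hypothetical mapping: a blink toggles the light, and a held left or right gaze toggles the fan or the TV. It also adds a dwell requirement, so a directional gaze must persist for `hold_frames` consecutive frames before a command is issued. This keeps brief glances from switching appliances. The command names, the 0.35/0.65 ratio thresholds, and the 15-frame hold are assumptions, not values from the paper.

```python
from collections import deque
from typing import Optional

# Hypothetical mapping from recognized eye events to appliance commands.
COMMANDS = {"blink": "toggle_light", "left": "toggle_fan", "right": "toggle_tv"}


def gaze_direction(horizontal_ratio: float, low: float = 0.35, high: float = 0.65) -> str:
    """Classify a horizontal ratio (0 = image left, 1 = image right) as a direction.

    Directions are in image coordinates; whether "left" is the user's left depends on
    whether the camera frame is mirrored.
    """
    if horizontal_ratio <= low:
        return "left"
    if horizontal_ratio >= high:
        return "right"
    return "center"


class IntentClassifier:
    """Turn per-frame observations into commands, requiring a gaze to be held before acting."""

    def __init__(self, hold_frames: int = 15):
        self.hold_frames = hold_frames
        self._recent = deque(maxlen=hold_frames)

    def update(self, blinked: bool, horizontal_ratio: Optional[float]) -> Optional[str]:
        """Feed one frame's blink flag and gaze ratio; return a command name or None."""
        if blinked:
            self._recent.clear()
            return COMMANDS["blink"]
        direction = "center" if horizontal_ratio is None else gaze_direction(horizontal_ratio)
        self._recent.append(direction)
        if (len(self._recent) == self.hold_frames
                and direction != "center"
                and all(d == direction for d in self._recent)):
            self._recent.clear()  # require a fresh hold before repeating the command
            return COMMANDS[direction]
        return None
```

At an assumed camera rate of about 30 frames per second, a 15-frame hold corresponds to roughly half a second of sustained gaze.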

H. System Integration And Independence

The detailed methodology underscores the intricate process involved in implementing the proposed eye-tracking system. The integration of advanced computer vision techniques, particularly leveraging OpenCV, facilitates the translation of subtle eye movements into meaningful control signals. This empowers paralyzed individuals to manage their living spaces effortlessly and independently, contributing to an improved quality of life.


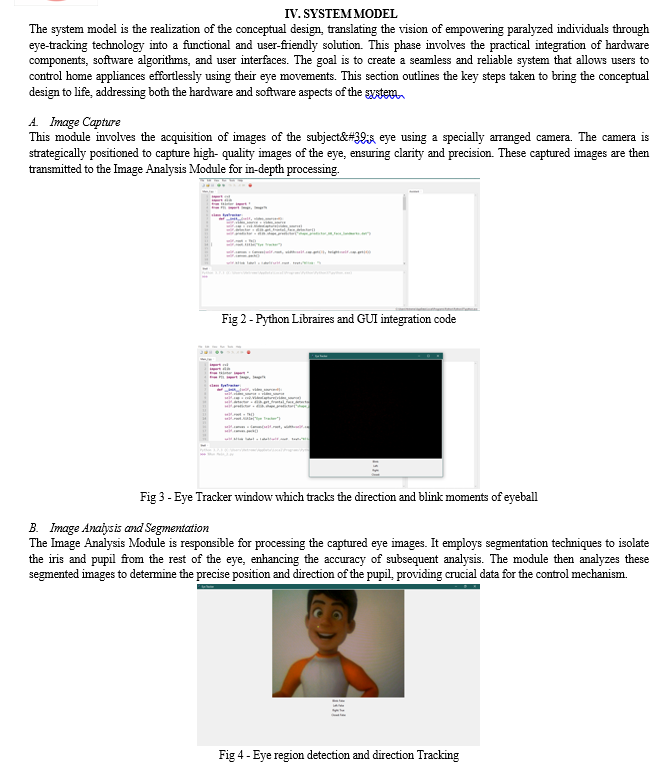

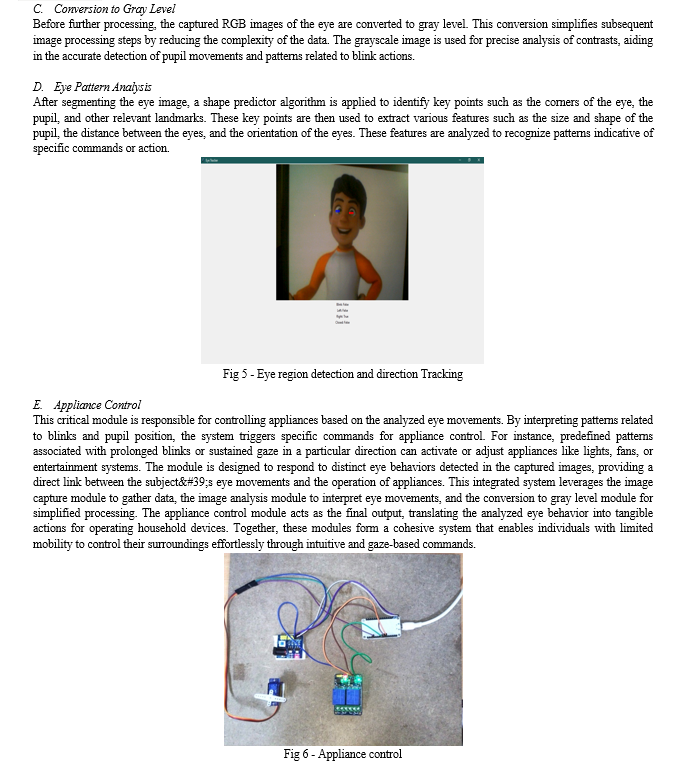


Conclusion

In conclusion, the presented eye-tracking system, developed in Python 3.7 with the Thonny IDE and built on OpenCV and the GazeTracking library, is a promising solution for enhancing the autonomy of paralyzed individuals in home environments. By interpreting subtle eye movements, the software enables intuitive control of household appliances, contributing to an improved quality of life for users with limited mobility. The successful integration of computer vision technologies underscores the potential of assistive systems that prioritize accessibility and user-friendly interfaces.

The future scope of this eye-tracking system lies in continual refinement and expansion. Further research and development could focus on improving the accuracy of the gaze analysis algorithms, expanding the range of controllable appliances, and adopting advances in eye-tracking technology. Integration with emerging platforms and devices could also open new avenues for accessibility and usability. Continued collaboration with healthcare professionals and end users will be pivotal in tailoring the system to diverse needs and ensuring its sustained impact in assistive technology.

References

[1] Dhanasekar J, Guru Aravindh K B, Kiren A S, Faizal Ahamath A, "System Cursor Control Using Human Eyeball Movement," 2023 3rd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE).

[2] Praveena Narayanan, Sri Harsha. N, Sai Rupesh. G, Sunil Kumar Redy, Rupesh. S, Yeswanth. M, "A Generic Algorithm for Controlling an Eyeball-based Cursor System," 2022 International Conference on Automation Computing and Renewable Systems (ICACRS).

[3] Maheswari R., Vignesh S., Rakesh Kumar M., Venkatesh T.M., Sundar R., Jose Anand A., "Voice Control and Eyeball Movement Operated Wheelchair," 2022 International Conference on Edge Computing and Applications (ICECAA).

[4] Marwa Tharwat, Ghada Shalabi, Leena Saleh, Nora Badawoud, Raghad Alfalati, "Eye-Controlled Wheelchair," 2022 5th International Conference on Computing and Informatics (ICCI).

[5] Rani. V Udaya, S Poojasree, "An IOT Driven Eyeball And Gesture-Controlled Smart Wheelchair System for Disabled Person," 2022 8th International Conference on Advanced Computing and Communication Systems (ICACCS).

[6] S. N. Shivappriya et al., "Intelligent Eyeball Movement Controlled Wheelchair," 2021 International Conference on Advancements in Electrical Electronics Communication Computing and Automation (ICAECA).

[7] A. Sivasangari, D. Deepa, T. Anandhi, Anitha Ponraj, M.S. Roobini, "Eyeball based Cursor Movement Control," 2020 International Conference on Communication and Signal Processing (ICCSP).

[8] Vandana Khare, S. Gopala Krishna, Sai Kalyan Sanisetty, "Cursor Control Using Eye Ball Movement," 2019 Fifth International Conference on Science Technology Engineering and Mathematics (ICONSTEM).

[9] Adarsh Rajesh, Megha Mantur, "Eyeball gesture controlled automatic wheelchair using deep learning," 2017 IEEE Region 10 Humanitarian Technology Conference (R10-HTC).

[10] Osama Mazhar, Taimoor Ali Shah, Muhammad Ahmed Khan, Sameed Tehami, "A real-time webcam based Eye Ball Tracking System using MATLAB," 2015 IEEE 21st International Symposium for Design and Technology in Electronic Packaging (SIITME).

Copyright

Copyright © 2024 Chanthini. K, Ravuri Mona Sri Vallika, Srinithi. V, K. Divya. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET61051

Publish Date : 2024-04-26

ISSN : 2321-9653

Publisher Name : IJRASET
