Ijraset Journal For Research in Applied Science and Engineering Technology


Blind Spot Monitoring System for Heavy Vehicles

Authors: Ms. Nisha Joy, Yashwanth T, Shashank K S, Yashwanth M, Pavithra K

DOI Link: https://doi.org/10.22214/ijraset.2025.66233


Abstract

Blind Spot Monitoring (BSM) systems are essential for enhancing safety in heavy vehicles, which have significantly larger blind spots than smaller vehicles. These blind spots, especially on the sides and rear, limit the driver's visibility, increasing the risk of collisions during lane changes, merging, and turning. The primary goal of this project is to design a BSM system that can accurately detect objects or vehicles within these critical zones and warn the driver in real time, reducing the chance of accidents. This BSM solution ultimately enhances road safety for both the vehicle driver and nearby road users, particularly in urban or densely populated traffic environments. By addressing the unique visibility challenges faced by heavy vehicles, the system not only minimizes accident risks but also provides peace of mind for drivers. It represents a valuable safety advancement for fleets, trucking companies, and public transport operators aiming to improve overall operational safety.


I. INTRODUCTION

The "Blind Spot Monitoring System for Heavy Vehicles" is an innovative safety technology designed to address visibility challenges in large vehicles such as trucks, buses, and construction vehicles. Because of their size and structural design, heavy vehicles inherently have large blind spots, which pose serious risks during lane changes, turns, and reversing. This project seeks to mitigate these risks by developing a system capable of detecting objects and alerting drivers to potential hazards in these blind areas. Integrating this system would enhance visibility for heavy vehicle drivers, promoting safer road conditions for all road users. The system uses a combination of sensors, cameras, and real-time processing algorithms to detect and monitor objects within the vehicle's blind spots. By gathering and processing data from multiple points, it can reliably identify objects near the heavy vehicle, including other vehicles, cyclists, and pedestrians. Alerts are then delivered through visual, audio, or haptic feedback, allowing the driver to respond immediately. Designed to work across various driving environments, the system is versatile and aims to be robust enough to operate in adverse weather and low-light conditions.

The project aligns with current industry trends toward safer, smarter, and more connected vehicle systems. As driver-assistance technologies become more popular, integrating blind spot monitoring into heavy vehicles can reduce collision rates and set a new standard for heavy vehicle safety. Beyond reducing accident risks, this system represents a step toward fully autonomous safety solutions in the heavy vehicle sector.

II. LITERATURE REVIEW

The review highlights the need for multi-sensor solutions tailored to heavy vehicles that balance accuracy, cost, and operational reliability. The following studies illustrate the developments shaping this field:

- Arash Pourhasan Nezhad, Mehdi Ghatee, Hedieh Sajedi (2021) present a real-time, camera-based object detection and blind spot warning system covering a vehicle's blind spots. The paper uses deep learning for object detection, together with relative speed and distance estimation for vehicles in the blind spot, alerting the driver to potential hazards. The model processes optical flow data with a tiny neural network and achieves 97% accuracy in detecting temporal movement. The authors contrast this with earlier models, which were often computationally expensive and poorly suited to real-time implementation.

- Tsz Laam Kiang (2021) designed a multi-sensor crash safety system aimed at reducing pedestrian and other vehicle accidents in the blind spots of heavy-duty vehicles (HDVs). Because the blind spots of an HDV are huge, conventional solutions such as cameras and radar systems fail to operate properly in extreme weather or road conditions. The proposed system comprises several sensors, a driver alert wristband, and external airbags. Trials were conducted to evaluate the camera's field of view and optimal settings under different lighting conditions. Results showed improved safety, with detection effectively covering a blind spot area of roughly 80.3 square meters.

- Shayan Shirahmad Gale Bagi, Mohammad Khoshnevisan, Hossein Gharaee Garakani, Behzad Moshiri (2019) propose a multi-sensor framework for a blind spot detection (BSD) system in vehicles. Such a system demands high accuracy and adaptability in different conditions. BSD systems rely mainly on radar sensors, which have their own limitations, such as poor performance in adverse weather and limited accuracy due to clutter. To overcome these limitations, the proposed design integrates various sensors, such as radar, LiDAR, cameras, and ultrasonic sensors, along with data fusion techniques to improve detection and reduce false alarms. This multi-sensor approach is intended to enhance the system's reliability and functionality across various driving scenarios.

- Jiann-Der Lee and Kuo-Fang Huang (2013) developed a system that improved obstacle detection under varied conditions in a vehicle's lateral blind spot, providing better driver assistance and safety. The system integrated several detection techniques, such as HOG + SVM for pedestrian detection, and image subtraction and hybrid edge-and-tire detection for vehicle recognition. Designed to work effectively in a wide range of conditions, it was tested at different speeds and in different weather and lighting conditions. Experimental results showed high accuracy, especially when all the detection methods were combined, yielding a robust and reliable alert system that helps drivers detect hidden pedestrians or vehicles in blind spots even in challenging environments.

- Pasi Pyykonen, Ari Virtanen, Arto Kyytinen (2015) outline the development of an intelligent blind spot detection system for HGVs to improve the safety of VRUs such as cyclists and pedestrians. The work is part of the DESERVE project, funded by the European Commission, which seeks to improve ADAS for trucks. The system employs a blend of cameras, radar, and ultrasonic sensors that monitor blind spots for obstacles and warn drivers. Software standardization eases reuse across domains. Testing revealed failures in detecting driver gaze caused by changing light sources and variations in the drivers' seating position, although the system showed potential for recognizing obstacles. Future prospects include incorporating the system into training and on-road driving to enable continuous learning toward ensuring safety.
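The relative speed and distance estimation mentioned for the camera-based system of Pourhasan Nezhad et al. can be illustrated, in a much simplified form, by differencing two successive distance measurements. This is a minimal sketch, not their optical-flow method: it assumes distances arrive from any range sensor at a known interval, and the function names are hypothetical.

```python
from typing import Optional


def closing_speed_mps(prev_distance_m: float, curr_distance_m: float, dt_s: float) -> float:
    """Relative (closing) speed from two successive distance samples.

    Positive values mean the object is getting closer to the vehicle.
    """
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    return (prev_distance_m - curr_distance_m) / dt_s


def time_to_collision_s(curr_distance_m: float, speed_mps: float) -> Optional[float]:
    """Time until the gap closes if the closing speed stays constant.

    Returns None when the object is holding its distance or moving away.
    """
    if speed_mps <= 0:
        return None
    return curr_distance_m / speed_mps


# Example: an object measured at 3.0 m and then at 2.5 m, 0.1 s later.
v = closing_speed_mps(3.0, 2.5, 0.1)
ttc = time_to_collision_s(2.5, v)
print(f"closing speed {v:.1f} m/s, time to collision {ttc:.2f} s")
```

A finite difference like this is noisy with raw sensor data, which is one reason published systems use richer motion cues such as optical flow.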

III. METHODOLOGY

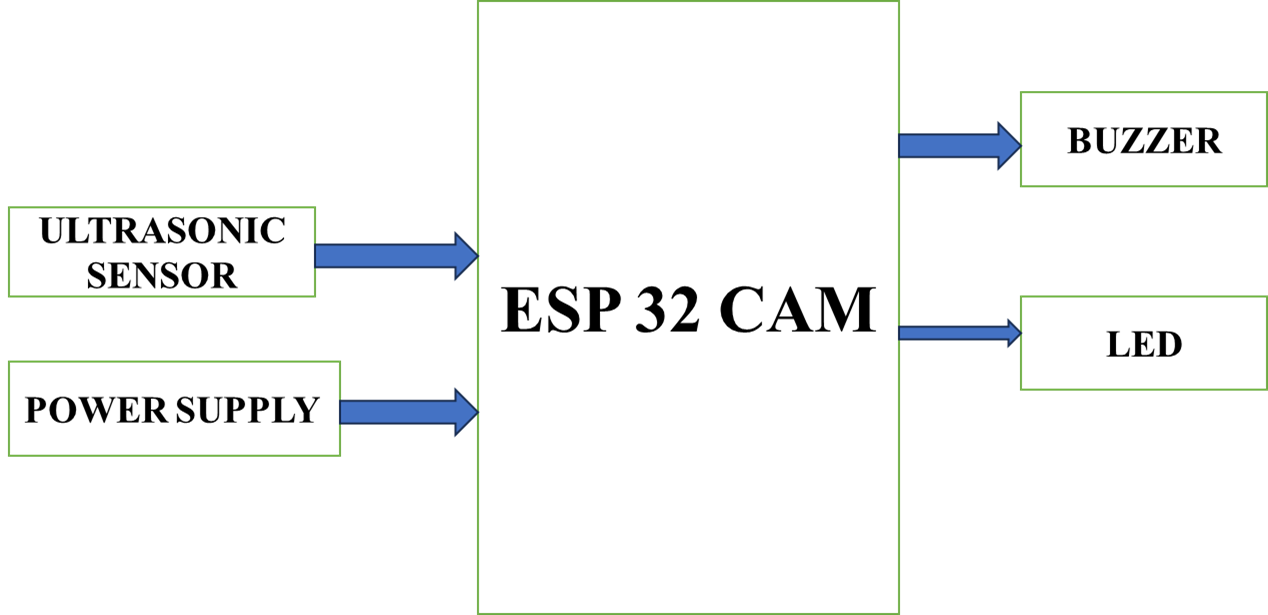

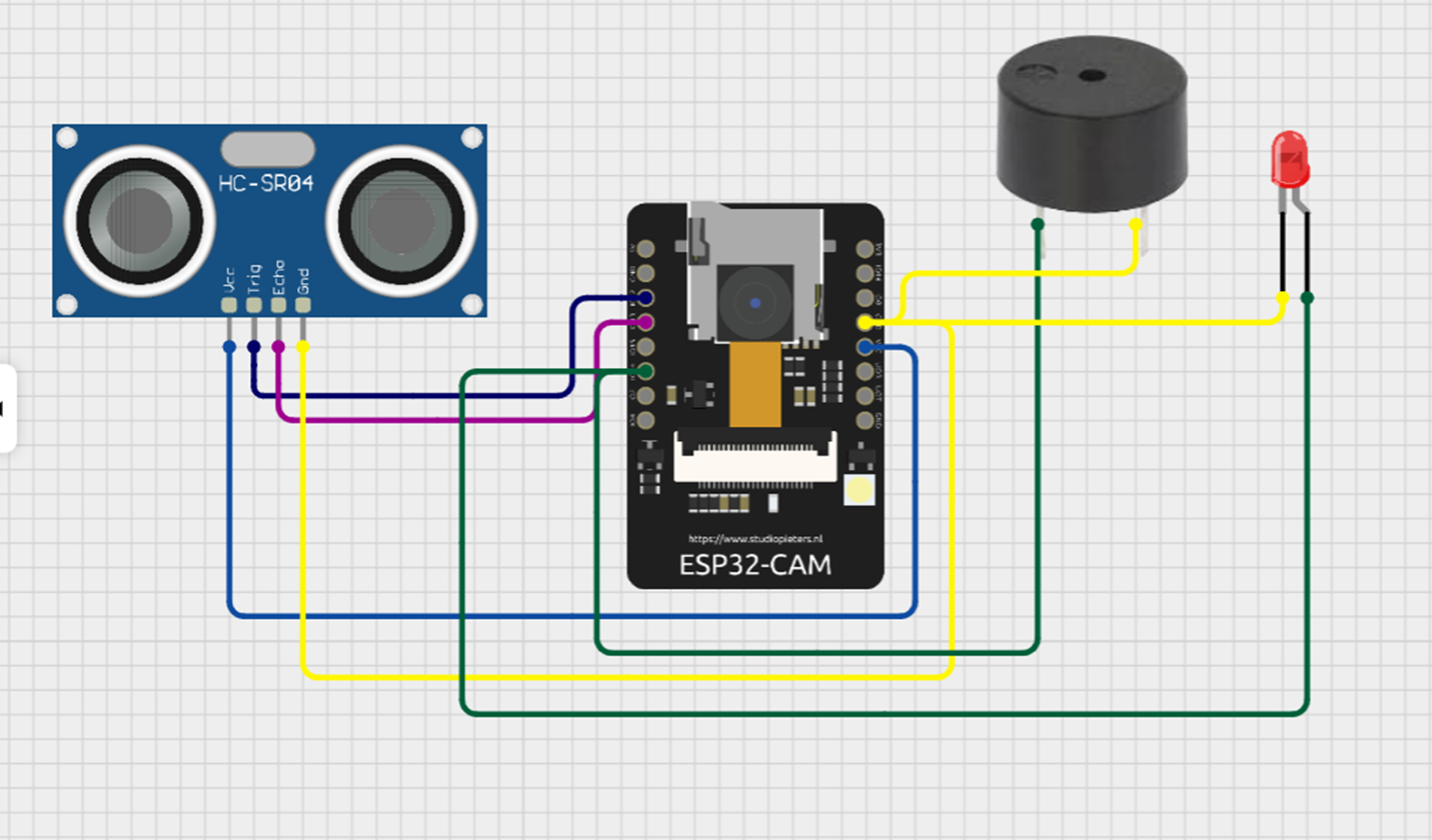

The blind spot monitoring system for heavy vehicles uses an integrated approach that combines hardware and software components to ensure accurate detection and timely alerts for potential hazards. The hardware setup consists of the ESP32-CAM module, HC-SR04 ultrasonic sensors, LED indicators, and a buzzer, which together identify objects in the heavy vehicle's blind spots and alert the driver accordingly.

Fig no 1: Block diagram of the blind spot monitoring system


A. Hardware Implementation

The ESP32-CAM module is installed to capture real-time video of the blind spots around the vehicle. HC-SR04 ultrasonic sensors are strategically mounted at the rear and sides of the vehicle to detect objects and measure their distance. The LEDs and the buzzer act as output devices, giving visual and audible signals to the driver when an object enters the blind spot. All the components run on a single 5 V power supply, which keeps the device simple and dependable in operation.
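The HC-SR04 reports distance as the width of its echo pulse, which is the round-trip travel time of an ultrasonic burst. The sketch below shows that conversion and a simple range check. It assumes a speed of sound of about 343 m/s (air at roughly 20 °C), a 2 cm lower bound near the sensor's commonly quoted minimum, and a 300 cm upper bound mirroring the 3-meter range reported in the results; on the Arduino side the pulse width would typically be read with `pulseIn()`. The Python function names are hypothetical.

```python
# Speed of sound in air at roughly 20 °C, in centimetres per microsecond (assumed constant).
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_distance_cm(echo_pulse_us: float) -> float:
    """Convert an HC-SR04 echo pulse width into a one-way distance.

    The pulse width is the round-trip travel time of the ultrasonic burst,
    so the travelled distance is halved.
    """
    return echo_pulse_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def object_in_range(echo_pulse_us: float, min_cm: float = 2.0, max_cm: float = 300.0) -> bool:
    """True if the measured distance falls inside the monitored band.

    A zero-length pulse (timeout, no echo) yields 0 cm and is rejected by min_cm.
    """
    distance = echo_to_distance_cm(echo_pulse_us)
    return min_cm <= distance <= max_cm


for pulse in (5831, 17492, 20000, 0):
    print(pulse, round(echo_to_distance_cm(pulse), 1), object_in_range(pulse))
```

Temperature changes the speed of sound by roughly 0.6 m/s per °C, so a fixed constant trades a few percent of accuracy for simplicity.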

B. Software Implementation

The software is developed using the Arduino IDE, OpenCV, and YOLOv3 (You Only Look Once). YOLOv3 is integrated with the ESP32-CAM to enable real-time object detection, with OpenCV processing the video feed and identifying objects such as pedestrians, cyclists, and vehicles. Meanwhile, the Arduino microcontroller gathers distance data from the ultrasonic sensors.
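The paper does not show its detection code. As a hedged sketch, if YOLOv3 is run through OpenCV's DNN module (`cv2.dnn.readNetFromDarknet`, `cv2.dnn.blobFromImage`, then `net.forward(net.getUnconnectedOutLayersNames())`), each output row is commonly laid out as `[cx, cy, w, h, objectness, 80 class scores]` with normalised coordinates. The function below assumes that layout and keeps only confident road users; the class subset, threshold, and names are illustrative assumptions, and overlapping boxes would still need `cv2.dnn.NMSBoxes` afterwards.

```python
from typing import Dict, List, Sequence, Tuple

# COCO class indices as ordered in the standard coco.names file used with YOLOv3.
ROAD_USER_CLASSES: Dict[int, str] = {
    0: "person", 1: "bicycle", 2: "car", 3: "motorbike", 5: "bus", 7: "truck",
}

Detection = Tuple[str, float, Tuple[int, int, int, int]]  # label, score, (x, y, w, h)


def decode_rows(rows: Sequence[Sequence[float]], frame_w: int, frame_h: int,
                score_threshold: float = 0.5) -> List[Detection]:
    """Filter raw YOLOv3 output rows down to confident road-user detections.

    Each row is assumed to be [cx, cy, w, h, objectness, score_0, ..., score_79],
    with box coordinates normalised to the 0..1 range.
    """
    detections: List[Detection] = []
    for row in rows:
        cx, cy, w, h = row[0], row[1], row[2], row[3]
        scores = row[5:]
        class_id = max(range(len(scores)), key=lambda i: scores[i])
        score = scores[class_id]
        if score < score_threshold or class_id not in ROAD_USER_CLASSES:
            continue
        # Convert the normalised centre/size box to a top-left pixel box.
        box_w, box_h = int(w * frame_w), int(h * frame_h)
        x, y = int(cx * frame_w - box_w / 2), int(cy * frame_h - box_h / 2)
        detections.append((ROAD_USER_CLASSES[class_id], score, (x, y, box_w, box_h)))
    return detections
```

Using the class score alone is one common convention; some implementations multiply it by the objectness score before thresholding.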

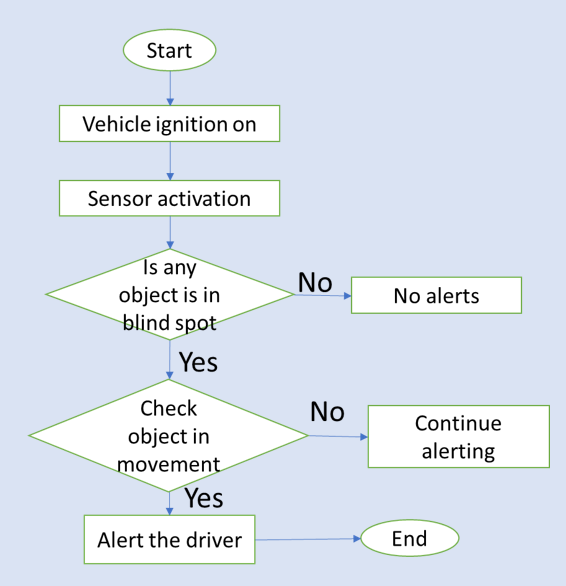

Fig no 2: Flow chart for the blind spot monitoring system

The system combines sensor information with video-based object detection for alert prioritization. If a hazard is recognized within a set distance range, the buzzer begins to sound and an LED blinks to indicate on which side of the vehicle the hazard was spotted. This gives the driver complete and accurate information in less time to assess possible hazards within the blind spot areas. System testing was performed under varied conditions to check precision, response time, and robustness.
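One way to express the alert logic above in code is shown below. This is a minimal sketch under stated assumptions: the two priority levels (caution when one source reports a hazard, warning when camera and ultrasonic sensor agree), the 300 cm default for the "set distance range", and all names are hypothetical rather than the authors' implementation.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Optional, Tuple


class Alert(IntEnum):
    NONE = 0
    CAUTION = 1   # only one source (camera or ultrasonic) reports a hazard
    WARNING = 2   # camera and ultrasonic sensor agree on the same side


@dataclass
class SideReading:
    side: str                      # e.g. "left", "right", "rear"
    distance_cm: Optional[float]   # None when the ultrasonic sensor got no echo
    camera_hazard: bool            # True if the detector saw a road user on this side


def classify(reading: SideReading, alert_distance_cm: float) -> Alert:
    in_range = reading.distance_cm is not None and reading.distance_cm <= alert_distance_cm
    if in_range and reading.camera_hazard:
        return Alert.WARNING
    if in_range or reading.camera_hazard:
        return Alert.CAUTION
    return Alert.NONE


def actuate(readings: List[SideReading],
            alert_distance_cm: float = 300.0) -> Tuple[List[str], bool, Alert]:
    """Return the side LEDs to blink, whether the buzzer sounds, and the top alert level."""
    levels = {r.side: classify(r, alert_distance_cm) for r in readings}
    leds = sorted(side for side, level in levels.items() if level > Alert.NONE)
    top = max(levels.values(), default=Alert.NONE)
    return leds, top > Alert.NONE, top


leds, buzzer, top = actuate([
    SideReading("left", 120.0, True),
    SideReading("right", None, False),
    SideReading("rear", 250.0, False),
])
print(leds, buzzer, top.name)
```

Keeping the per-side level separate from the buzzer decision makes it easy to map the top level to different buzzer patterns later.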

Fig no 3: Circuit diagram for the blind spot monitoring system

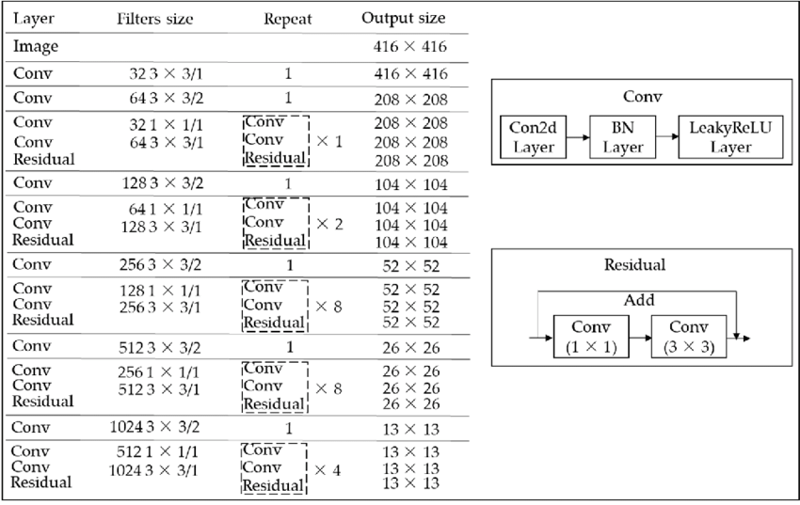

" You Only Look Once" or YOLO is a family of deep knowledge models specifically intended to be presto at object discovery. There are three introductory variations of YOLO, they're YOLOv1, YOLOv2, and YOLOv3. The first one put the general armature where the alternate one perfected the design to make use ofpre- set anchor boxes for better bounding box offer and interpretation three farther fine- tuned the armature of the model and its training methodology. A variant of Darknet, firstly having 53 estate network trained on Imagenet, is used in YOLO v3. For the task of discovery, 53 further layers are piled onto it. thus, it gives us a 106 estate completely convolutional bolstering armature for YOLO v3. In YOLO v3, the discovery is done by applying 1 x 1 discovery kernels on point charts of three different sizes at three different places in the network.

Fig no 4: Architecture diagram of YOLOv3
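The three detection scales can be made concrete with a little arithmetic. The sketch assumes the original YOLOv3 configuration for COCO (a 416 x 416 input, three anchor boxes per scale, 80 classes); the paper does not state the input size its deployment uses, and the function name is hypothetical.

```python
from typing import List, Tuple


def yolov3_heads(input_size: int = 416, num_classes: int = 80,
                 anchors_per_scale: int = 3) -> Tuple[List[Tuple[int, int, int]], int]:
    """Output grid shapes of YOLOv3's three detection scales and the total box count."""
    if input_size % 32:
        raise ValueError("YOLOv3 expects an input size that is a multiple of 32")
    strides = (32, 16, 8)  # coarse (large objects) to fine (small objects)
    # Each anchor predicts 4 box offsets, 1 objectness score and one score per class,
    # which is what the 1 x 1 detection kernels output at every grid cell.
    channels = anchors_per_scale * (5 + num_classes)
    heads = [(input_size // s, input_size // s, channels) for s in strides]
    total_boxes = sum(rows * cols * anchors_per_scale for rows, cols, _ in heads)
    return heads, total_boxes


heads, total = yolov3_heads()
print(heads)  # [(13, 13, 255), (26, 26, 255), (52, 52, 255)]
print(total)  # 10647
```

The 10,647 candidate boxes per frame are why confidence thresholding and non-maximum suppression follow the network's forward pass.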

IV. RESULTS

The blind spot monitoring system performed well in controlled and real-world testing scenarios. The ESP32-CAM module, using the YOLOv3 algorithm, achieved an accuracy of 95% in well-lit environments and 85% in low-light conditions. The HC-SR04 ultrasonic sensors complemented the system by reliably detecting objects within a 3-meter range, especially when visual conditions were poor. The combined setup achieved a detection accuracy of 92% across various environmental conditions.

The system showed an average response time of 0.4 seconds, enabling timely alerts to the driver. This was fast enough to minimize collision chances even in fast-moving urban traffic. False positives were observed 8% of the time, caused by stationary objects such as road signs or poles. False negatives, mainly caused by bad weather and by small objects beyond the sensing range, occurred only 3% of the time.
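To put the 0.4-second response time in perspective, the distance an approaching object closes during that interval is simply its relative speed multiplied by the latency. The paper also does not state how its false positive and false negative percentages were normalised, so the sketch assumes the conventional rates FP/(FP+TN) and FN/(FN+TP); the counts in the example are hypothetical and are not the paper's test data.

```python
from typing import Tuple


def distance_closed_m(relative_speed_kmh: float, latency_s: float = 0.4) -> float:
    """Distance an approaching object covers while the system is still responding."""
    return relative_speed_kmh / 3.6 * latency_s


def error_rates(tp: int, fp: int, tn: int, fn: int) -> Tuple[float, float]:
    """Conventional false-positive rate FP/(FP+TN) and false-negative rate FN/(FN+TP)."""
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    fnr = fn / (fn + tp) if (fn + tp) else 0.0
    return fpr, fnr


for speed_kmh in (10, 30, 50):
    print(f"{speed_kmh} km/h -> {distance_closed_m(speed_kmh):.2f} m during a 0.4 s response")

# Hypothetical counts for illustration only; they are not the paper's test data.
print(error_rates(tp=90, fp=5, tn=95, fn=10))
```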

Field tests carried out on urban roads, highways, and parking lots confirmed that the system was effective in hazard detection. The audio-visual alerts were intuitive and not distracting to the drivers, with the LEDs indicating the direction of detected hazards. The integration of multiple sensors and YOLOv3 improved reliability, providing redundancy in hazard detection.


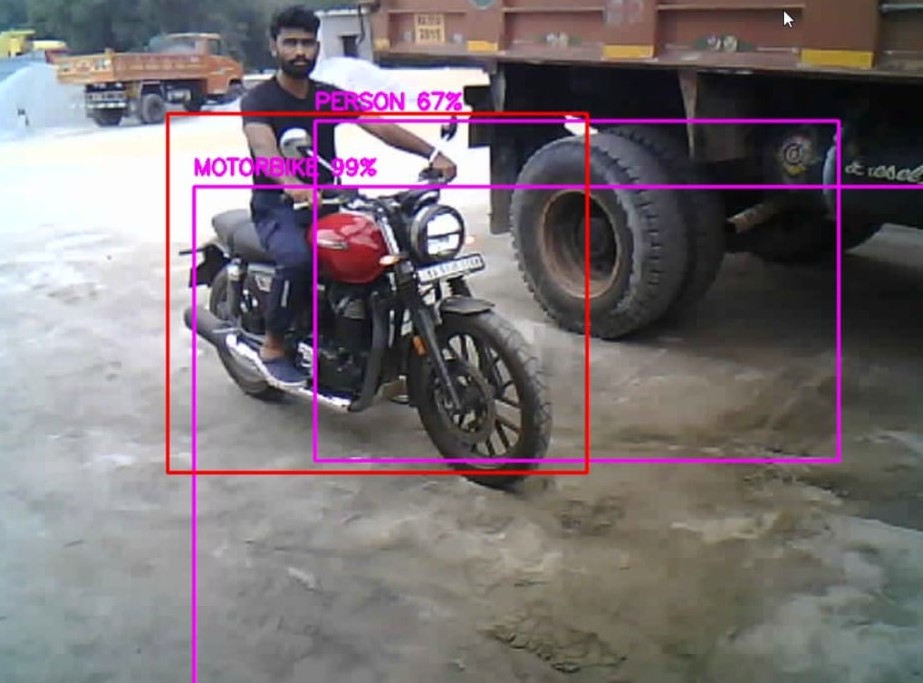

Fig no 5: Object outside the blind spot ROI.

Fig no 6: Object inside the blind spot ROI.
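Figures 5 and 6 distinguish an object outside the blind spot region of interest (ROI) from one inside it. A minimal sketch of such a check, assuming axis-aligned pixel rectangles, is to measure how much of a detection's bounding box overlaps the ROI. The ROI coordinates, the 30% overlap threshold, and the function names below are hypothetical; the paper does not give its ROI geometry.

```python
from typing import Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def overlap_ratio(box: Rect, roi: Rect) -> float:
    """Fraction of the bounding box's area that lies inside the ROI rectangle."""
    bx, by, bw, bh = box
    rx, ry, rw, rh = roi
    overlap_w = max(0, min(bx + bw, rx + rw) - max(bx, rx))
    overlap_h = max(0, min(by + bh, ry + rh) - max(by, ry))
    area = bw * bh
    return (overlap_w * overlap_h) / area if area > 0 else 0.0


def in_blind_spot(box: Rect, roi: Rect, min_overlap: float = 0.3) -> bool:
    """Treat the object as inside the blind spot once enough of its box overlaps the ROI."""
    return overlap_ratio(box, roi) >= min_overlap


# Hypothetical ROI: the right half of a 640 x 480 frame.
ROI = (320, 0, 320, 480)
print(in_blind_spot((400, 100, 100, 100), ROI))  # True: box fully inside
print(in_blind_spot((0, 100, 100, 100), ROI))    # False: box entirely outside
print(in_blind_spot((270, 100, 100, 100), ROI))  # True: half the box overlaps
```

Measuring overlap relative to the box, rather than to the ROI, lets a small object count as inside even though it covers only a sliver of a large ROI.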

V. DISCUSSION

The blind spot monitoring system combines affordable hardware and advanced software to tackle the problem of hazard detection in heavy vehicle blind spots. The ESP32-CAM module handled real-time video processing well, especially during daylight. The HC-SR04 ultrasonic sensors offered dependable distance measurements, but the ESP32-CAM module's performance degraded in low-light conditions, so additional infrared lighting is needed for a clear view at night.

The YOLOv3 algorithm was instrumental in achieving accurate object detection. Its ability to identify multiple objects in real time provided a significant advantage over simpler detection methods. However, occasional misclassifications occurred, particularly for objects that were partially occluded or located at the edges of the camera frame. Expanding the training dataset to include more diverse scenarios could further improve YOLOv3's performance.

Redundancy through the ultrasonic sensors reduced the chances of undetected hazards. The sensors had weaknesses, however, such as difficulty detecting objects with irregular shapes or of extremely small size. Relying on both video and sensor data makes detection considerably more robust but increases the complexity of data fusion and alert generation.
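A common, generic way to soften one-off spurious ultrasonic readings before they reach the alert logic is a short median filter. The paper does not say it uses one; this is an illustrative assumption with a hypothetical window of five readings, and the class name is hypothetical as well.

```python
import math
from collections import deque
from statistics import median
from typing import Deque, Optional


class MedianRangeFilter:
    """Median of the last few ultrasonic readings, to suppress one-off spurious echoes."""

    def __init__(self, window: int = 5) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self._readings: Deque[float] = deque(maxlen=window)

    def update(self, distance_cm: Optional[float]) -> float:
        """Add one reading and return the filtered distance in cm.

        A missing echo (None) is stored as infinity, meaning "nothing in range",
        so an object that leaves the blind spot clears the filter after a few cycles.
        """
        self._readings.append(math.inf if distance_cm is None else distance_cm)
        return median(self._readings)


f = MedianRangeFilter(window=5)
for reading in (120.0, 118.0, 15.0, 121.0, 119.0):  # 15 cm is a one-off spurious echo
    filtered = f.update(reading)
print(filtered)  # 119.0
```

The trade-off is latency: a genuine, sudden approach is only reported once it dominates the window, so the window should stay short relative to the 0.4-second response budget.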

Despite these challenges, the system showed advantages over existing solutions owing to its low cost, detection accuracy, and timely alerts. Its use of simple, inexpensive hardware such as the ESP32-CAM and HC-SR04 sensors also makes it scalable and affordable for both fleet operators and individual owners of heavy vehicles.

Conclusion

The developed blind spot monitoring system enhances safety for heavy vehicles by addressing the critical problem of blind spot hazards, with the ESP32-CAM, HC-SR04 ultrasonic sensors, and advanced detection algorithms such as YOLOv3 at its core. With 92% accuracy and a response time of 0.4 seconds, such a system can substantially reduce the likelihood of accidents caused by occlusions in the blind spots. Testing results validate the system, especially on urban roads with considerable flows of pedestrians and cyclists, where drivers reported improved situational awareness and greater confidence when maneuvering in tight spaces. This success was attributed to the intuitive audio-visual alerts. Its affordability and ease of deployment give it good potential for widespread adoption. Future improvements would include integrating infrared lighting to overcome low-light performance issues and fine-tuning YOLOv3 on a larger dataset to improve detection accuracy. Additional sensors, such as radar, could further enhance reliability in adverse weather conditions. V2X communication could also be explored so that the system can share hazard information with other nearby vehicles, further enhancing road safety. Overall, the project offers a cost-effective and scalable solution capable of enhancing road safety for heavy vehicles. Its integration of innovative hardware and advanced software opens avenues for safer highways and lays a foundation for further innovation in vehicle safety technology.


Copyright

Copyright © 2025 Ms. Nisha Joy, Yashwanth T, Shashank K S, Yashwanth M, Pavithra K. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET66233

Publish Date : 2025-01-02

ISSN : 2321-9653

Publisher Name : IJRASET
