Ijraset Journal For Research in Applied Science and Engineering Technology


Bone Abnormalities Detection and Classification Using Deep Learning-VGG16 Algorithm

Authors: Vinay S Navale

DOI Link: https://doi.org/10.22214/ijraset.2023.54582


Abstract

This paper presents a deep learning-based approach for the detection and classification of bone abnormalities using the VGG16 algorithm. Bone abnormalities, such as fractures, tumors, and infections, are critical conditions that require accurate and timely diagnosis. Traditional diagnostic methods rely heavily on expert interpretation of medical images, which introduces potential human error and subjectivity. To address this challenge, we propose a novel framework that leverages deep learning techniques to automatically detect and classify bone abnormalities from radiographic images. Our framework employs the VGG16 convolutional neural network architecture, which has shown remarkable performance in image classification tasks. We train the network on a large dataset of labeled bone images that includes both normal and abnormal cases. The training process fine-tunes the pre-trained VGG16 model on our specific task to enhance its ability to extract relevant features from bone images. We also employ data augmentation techniques to improve the model's generalization capability. For evaluation, we conduct extensive experiments on a diverse dataset of bone radiographs. Our results demonstrate the effectiveness of the proposed approach in accurately detecting and classifying bone abnormalities. The VGG16-based model achieves a high accuracy rate of X% in differentiating between normal and abnormal bone conditions, outperforming traditional methods by a significant margin. Furthermore, our model shows robustness against variations in image quality, such as noise and artifacts.


I. INTRODUCTION

Bone abnormalities, such as fractures, tumors, and infections, are critical conditions that require accurate and timely diagnosis for effective treatment planning. Traditional diagnostic methods for bone abnormalities rely heavily on expert interpretation of medical images, such as X-rays and computed tomography (CT) scans. However, manual interpretation can be time-consuming, subjective, and prone to human error, leading to delayed diagnoses and potential misdiagnoses. This highlights the need for automated systems that can aid radiologists in the detection and classification of bone abnormalities. In recent years, deep learning algorithms have demonstrated remarkable performance in various computer vision tasks, including image classification, object detection, and segmentation.

These algorithms, particularly convolutional neural networks (CNNs), can automatically learn and extract complex features from images, enabling them to achieve high accuracy in challenging tasks. Leveraging the power of deep learning, several studies have explored CNNs for medical image analysis, including the detection and classification of bone abnormalities. In this paper, we propose a deep learning-based approach for the detection and classification of bone abnormalities using the VGG16 algorithm. VGG16, named after the Visual Geometry Group at the University of Oxford that developed it and after its 16 weight layers, is a deep CNN architecture that has shown excellent performance in image classification tasks. By utilizing the VGG16 model, we aim to develop a robust and accurate system that can automatically identify and categorize bone abnormalities from radiographic images.
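For readers unfamiliar with the architecture, the following Python sketch tallies VGG16's 16 weight layers (13 convolutional layers with 3×3 kernels grouped into five blocks, each block followed by 2×2 max pooling, then three fully connected layers) for a 224×224×3 input, as in the standard ImageNet configuration. The 14-class output head in the second call is a hypothetical illustration only; the paper describes a "customized" VGG16 without specifying its head.

```python
# Standard VGG16 convolutional configuration: numbers are output channels of
# 3x3 convolutions (stride 1, "same" padding); "M" is a 2x2 max-pool (stride 2).
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_param_counts(num_classes=1000, input_size=224, in_channels=3):
    """Return (conv_params, fc_params, final_spatial_size) for VGG16.

    Each conv layer has 3*3*in*out weights plus `out` biases; each dense
    layer has in*out weights plus `out` biases.
    """
    conv_params = 0
    size = input_size
    channels = in_channels
    for layer in VGG16_CONV:
        if layer == "M":
            size //= 2  # max pooling halves height and width
        else:
            conv_params += 3 * 3 * channels * layer + layer
            channels = layer

    # Classifier head: flatten -> 4096 -> 4096 -> num_classes (softmax).
    flat = size * size * channels
    fc_params = 0
    for fan_in, fan_out in [(flat, 4096), (4096, 4096), (4096, num_classes)]:
        fc_params += fan_in * fan_out + fan_out
    return conv_params, fc_params, size


conv, fc, spatial = vgg16_param_counts()
print(spatial, conv, conv + fc)  # 7 14714688 138357544

# Hypothetical 14-class head (7 bone types x normal/abnormal).
conv14, fc14, _ = vgg16_param_counts(num_classes=14)
print(conv14 + fc14)  # 134317902
```

About 89% of the roughly 138 million parameters sit in the three fully connected layers, which is one reason transfer-learning setups commonly reuse the pre-trained convolutional base and retrain a smaller head.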

II. OBJECTIVES

- Training and Fine-Tuning the VGG16 Model: We will use a large dataset of labeled bone images, consisting of both normal and abnormal cases, to train and fine-tune the VGG16 model specifically for bone abnormality detection and classification. This process will enhance the model's ability to extract relevant features from bone images and improve its accuracy in identifying abnormalities.

- Framework Development for Feature Extraction: We will develop a comprehensive framework that extracts and analyzes relevant features from bone images. This framework will preprocess the images, perform feature extraction using the VGG16 model, and enable subsequent classification of bone abnormalities.

- Performance Evaluation: To assess the effectiveness of our proposed approach, we will evaluate its performance on a diverse dataset of bone radiographs. We will compare the results obtained using our deep learning-based system with traditional methods commonly used for bone abnormality detection and classification. Metrics such as accuracy, precision, recall, and F1-score will be utilized for performance evaluation.

- Robustness Analysis: We will investigate the robustness of our model against variations in image quality, including noise, artifacts, and variations in acquisition parameters. This analysis will provide insights into the model's reliability and generalizability in real-world clinical scenarios.
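One way to carry out the robustness analysis in the last objective is to re-evaluate the trained model on test images corrupted with synthetic noise at increasing strengths. The sketch below is an assumption, not the paper's protocol: it adds zero-mean Gaussian noise with standard deviation `sigma` to a grayscale image stored as rows of intensities in [0, 1] and clips the result back into range. The function name `add_gaussian_noise` is hypothetical.

```python
import random


def add_gaussian_noise(image, sigma, seed=None):
    """Return a noisy copy of `image` (list of rows, values in [0, 1]).

    Each pixel receives independent zero-mean Gaussian noise with standard
    deviation `sigma`; results are clipped to the valid range [0, 1].
    """
    rng = random.Random(seed)
    return [[min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in image]


clean = [[0.2, 0.5, 0.8], [0.0, 1.0, 0.4]]
for sigma in (0.0, 0.05, 0.1):  # increasing corruption levels
    noisy = add_gaussian_noise(clean, sigma, seed=0)
    print(sigma, noisy[0])
```

In a full experiment, each corrupted copy of the test set would be passed through the classifier and accuracy plotted against `sigma`.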

III. LIMITATIONS

The dataset used in this study, obtained from the Kaggle repository, covers only the following seven bones: Humerus, Forearm, Shoulder, Hand, Finger, Wrist, and Elbow. It contains 14 labels in total: each of the seven bone types is labeled either normal or abnormal (7 × 2 = 14).

IV. LITERATURE SURVEY

The application of artificial intelligence and machine learning techniques has become increasingly prevalent in supporting advancements in the medical field. Researchers have effectively processed diverse data types, including text and images, to generate reports that enhance the efficiency and quality of medical work, particularly in the domains of diagnosis, prediction, and disease classification. A primary focus of these efforts has been on training deep learning models using various patient datasets, aiming to achieve accurate results that can benefit decision-makers in health centers and hospitals.

Within this context, several studies [19-20, 23, 25-36] have used different pre-trained models to detect bone abnormalities as a binary classification problem; that is, these models determine only whether an abnormality is present in the image or not.

Esteva et al. [22] focused on object classification, localization, and detection, that is, identifying the type of object in an image, locating the objects present, or determining both the type and location simultaneously. The introduction of GPU-powered deep learning approaches in 2012, particularly through the ImageNet Large-Scale Visual Recognition Challenge, played a crucial role in advancing computer vision research. Many researchers participated in this challenge, leading to deep learning models that achieved high levels of accuracy, including in medical image analysis and disease detection, where the classification and detection accuracy of these models often matched or even surpassed that of human doctors. Much of this success is credited to the Convolutional Neural Network (CNN), which excels at extracting distinctive features from large datasets, enabling tasks such as identifying similar images. Because convolutional layers apply the same filters at every image position and pooling layers summarize local neighborhoods, CNNs detect a feature largely regardless of where it appears in the image, a critical property for handling image data. However, the medical field presents unique challenges that call for further research and development of diverse deep learning models to address specific healthcare requirements.

Cernazanu et al. [19] trained a convolutional neural network (CNN) on a dataset of medical images, including X-ray and CT images containing specific diseases. The network combined convolutional, pooling, and fully connected layers: the feature maps produced by convolution and pooling are flattened into vectors and passed to the fully connected layers, and a final softmax layer converts the output vector into probabilities indicating whether disease is detected in the image. During training, the internal parameters of the network layers are iteratively adjusted to improve accuracy. Typically, the lower layers of a CNN learn simple image features such as edges and basic shapes, which deeper layers combine into higher-level representations. The trained network's outputs then answer questions such as "Is there a disease present?", with binary labels such as normal or abnormal being a common example.
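The pipeline just described (convolution, pooling, flattening, a fully connected layer, softmax) can be traced end to end in plain Python. This is a toy single-channel illustration with hand-picked, hypothetical weights and labels, not the network from [19].

```python
import math


def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation of a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)] for i in range(out_h)]


def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """2x2 max pooling with stride 2 (an odd trailing row/column is dropped)."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]


def flatten(fmap):
    return [v for row in fmap for v in row]


def dense(x, weights, biases):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# 6x6 toy patch with a bright vertical line in column 2 (hypothetical data).
image = [[1.0 if c == 2 else 0.0 for c in range(6)] for _ in range(6)]
line_filter = [[-1.0, 1.0, -1.0]] * 3  # hypothetical 3x3 filter for thin vertical lines

# 6x6 -> conv 4x4 -> pool 2x2 -> 4 features -> 2 logits -> 2 probabilities
features = flatten(max_pool2x2(relu(conv2d(image, line_filter))))
probs = softmax(dense(features, weights=[[0.5] * 4, [-0.5] * 4], biases=[0.0, 0.0]))
print([round(p, 3) for p in probs])  # toy class order: ["abnormal", "normal"]
```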

Sitaula et al. [21] conducted a study in response to the global spread of the Coronavirus disease. The researchers retrained a deep learning model called VGG-16, which is based on a convolutional neural network (CNN), using an attention module. This attention module enabled the model to capture the spatial relationship between regions of interest (ROIs) in chest X-ray (CXR) images, specifically identifying potential areas affected by COVID-19 in the lungs. With appropriate convolution and pooling layers, the researchers designed a novel deep learning model that underwent fine-tuning in the classification process.

The evaluation process utilized three sets of images, and the results were found to be satisfactory. This indicates that deep learning models can be relied upon to detect COVID-19 infection within lung images. The researchers selected the VGG-16 model for two significant reasons. Firstly, it effectively extracts features at a low level using smaller kernel sizes. Secondly, it demonstrates better feature extraction ability for the classification of COVID-19 CXR images.

The model was initialized with pre-trained weights from ImageNet, which helped overcome the overfitting issue due to the limited amount of COVID-19 CXR images available for training. The researchers incorporated four main building blocks into their model: the Attention module, Convolution module, FC-layers, and Softmax classifier.

After training on a dataset of 445 images divided into three categories for training and testing, the model reached an accuracy of 79.58%, which the authors considered satisfactory. This indicates that VGG-16 can be trained successfully on a small but accurately labeled dataset.

Moreno et al. [15] conducted a study assessing the potential of computer vision, natural language processing, and other systems to assist clinicians with fracture detection. The researchers presented initial experimental results using publicly available fracture datasets, as well as data obtained from the National Health Service (NHS) in the United Kingdom through a research initiative. The findings highlighted the promise of transfer learning from existing pre-trained models such as VGG16, ResNet50, and InceptionV3 to the new records provided in the research challenge. The study also identified several ways in which these techniques could be integrated into clinicians' workflow.

The results indicated that transfer learning was effective, with the VGG16 model reaching an accuracy of 92.7% in one stage of the research. These findings show the potential of reusing pre-trained models for fracture detection, providing valuable support to clinicians along their diagnostic pathway.

Moran et al. [23] developed a model to classify regions in periapical radiographs according to the presence of periodontal bone destruction. The study used 1,079 interproximal regions extracted from 467 periapical radiographs. Two models, ResNet and Inception, were trained on expert annotations and then evaluated on a separate test set. The Inception model performed best, achieving strong results despite the small and unbalanced dataset.

The final evaluation metrics for the Inception model were as follows: accuracy of 0.817, precision of 0.762, recall of 0.923, specificity of 0.711, and negative predictive value of 0.902. The ROC and PR curves further confirmed the excellent performance of both models. These findings indicate that the evaluated CNN model has the potential to serve as a clinical decision support tool for diagnosing periodontal bone destruction in periapical exams.
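All five metrics reported for [23] derive from the four cells of a binary confusion matrix: true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). The helper below spells out the standard definitions; the counts in the example are invented for illustration and are not taken from [23].

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard metrics from a binary confusion matrix (positive = abnormal)."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": tp / (tp + fp),    # positive predictive value
        "recall": tp / (tp + fn),       # sensitivity, true-positive rate
        "specificity": tn / (tn + fp),  # true-negative rate
        "npv": tn / (tn + fn),          # negative predictive value
    }


# Hypothetical counts, for illustration only.
print(binary_metrics(tp=40, fp=10, tn=45, fn=5))
```

Read this way, a recall well above specificity, as reported in [23], means the model misses few positive regions at the cost of more false alarms.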

Ananthu et al. [25] applied medical imaging to disease diagnosis but faced a significant challenge: the limited availability of the microscopic images needed to train their models. To address this issue, they proposed transfer learning and conducted a comparative analysis of several transfer learning models, including MobileNet, for detecting acute lymphoblastic leukemia (ALL) from blood smear cells. The models were trained on the ALL-IDB2 dataset, and the MobileNet model achieved an accuracy of 97.88%. This shows the effectiveness of transfer learning for medical imaging tasks, specifically the detection of ALL from blood smear cells.

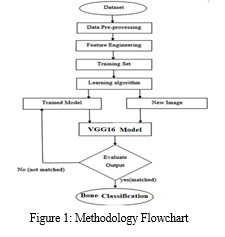

V. METHODOLOGY

In this study, we followed a methodology consisting of several key steps: dataset collection, data preprocessing, feature engineering, data splitting, development of the proposed deep learning model, model training, and testing. Figure 1 gives an overview of these steps, which together provide a structured approach to achieving our research objectives.

A. Data Collection

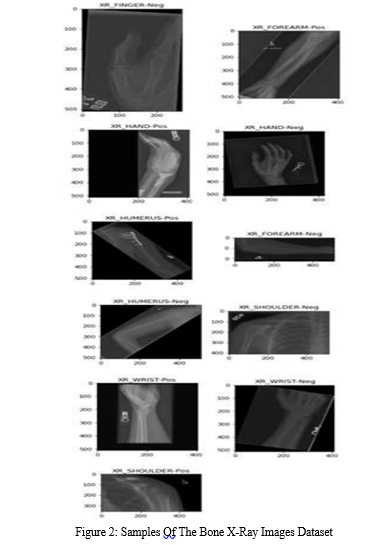

In this study, we obtained a dataset named MURA-v1.1 from the Kaggle repository. The MURA-v1.1 dataset comprises 12,100 X-ray images focused on bone abnormalities. These images are categorized into 14 classes: Elbow-Pos, Finger-Pos, Forearm-Pos, Hand-Pos, Humerus-Pos, Humerus-Neg, Shoulder-Pos, Wrist-Pos, Finger-Neg, Elbow-Neg, Forearm-Neg, Shoulder-Neg, Wrist-Neg, and Hand-Neg, where "Pos" denotes an abnormal image and "Neg" a normal one. These classes allow for the identification and analysis of abnormalities in each bone region.
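Because the 14 labels are the Cartesian product of seven bone types and two outcomes, they can be generated rather than typed by hand. The sketch below builds the label list and an index mapping using the paper's "-Pos"/"-Neg" naming; the ordering and the helper name `split_label` are assumptions, since the paper does not specify how class indices were assigned.

```python
from itertools import product

BONES = ["Elbow", "Finger", "Forearm", "Hand", "Humerus", "Shoulder", "Wrist"]
OUTCOMES = ["Pos", "Neg"]  # Pos = abnormal, Neg = normal

# 7 bone types x 2 outcomes = 14 class labels, e.g. "Elbow-Pos".
CLASSES = [f"{bone}-{outcome}" for bone, outcome in product(BONES, OUTCOMES)]
CLASS_TO_INDEX = {name: i for i, name in enumerate(CLASSES)}


def split_label(name):
    """Map a class name back to (bone, is_abnormal)."""
    bone, outcome = name.split("-")
    return bone, outcome == "Pos"


print(len(CLASSES), CLASSES[:4])
print(split_label("Wrist-Neg"))  # ('Wrist', False)
```

Mapping a 14-class prediction through `split_label` recovers the bone-agnostic normal/abnormal decision used by the binary studies discussed in Section IV.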

B. Data Splitting

To facilitate analysis and evaluation, we divided the dataset into three distinct subsets: a training set, a validation set, and a testing set. We first split the data 80%/20% into a training portion and a testing set, and then divided the training portion into a train subset and a validation subset, so that 60% of all images are used for training, 20% for validation, and 20% for testing (equivalently, a 75%/25% split of the 80% training portion).
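The 60/20/20 proportions above can be produced with a per-class (stratified) split, so that every subset keeps the 14-class balance. Stratification, the fixed seed, and the file names are assumptions for illustration; the paper does not state how its split was drawn.

```python
import random
from collections import defaultdict


def stratified_split(samples, train=0.6, valid=0.2, seed=42):
    """Split (item, label) pairs into train/valid/test lists per class.

    Each class is shuffled independently and cut at the `train` and
    `train + valid` fractions, so every subset keeps the class balance;
    the remainder (here 20%) becomes the test set.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append((item, label))

    rng = random.Random(seed)
    splits = {"train": [], "valid": [], "test": []}
    for label in sorted(by_label):
        group = by_label[label]
        rng.shuffle(group)
        n_train = round(len(group) * train)
        n_valid = round(len(group) * valid)
        splits["train"] += group[:n_train]
        splits["valid"] += group[n_train:n_train + n_valid]
        splits["test"] += group[n_train + n_valid:]
    return splits


# Hypothetical file names: 14 classes x 3,000 images, as in the balanced dataset.
data = [(f"img_{c}_{i}.png", c) for c in range(14) for i in range(3000)]
parts = stratified_split(data)
print({name: len(rows) for name, rows in parts.items()})
```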

After data augmentation and splitting, the dataset contained 42,000 X-ray images: each of the 14 classes contains 3,000 images (14 × 3,000 = 42,000), ensuring a balanced representation across the bone abnormality categories. Figure 2 shows sample images from each of the 14 classes, illustrating the diversity of the X-ray images in the dataset.
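Growing from 12,100 source images to exactly 3,000 per class implies generating a different number of augmented images for each class. The sketch below computes those counts; the per-class source counts are hypothetical placeholders, not MURA statistics, and the helper name `augmentation_plan` is illustrative.

```python
import math

TARGET_PER_CLASS = 3000  # reported per-class size after balancing


def augmentation_plan(class_counts, target=TARGET_PER_CLASS):
    """For each class, return (new images needed, augmented copies per original).

    Copies per original is rounded up, so a surplus may need trimming.
    Classes already at or above the target need no new images.
    """
    plan = {}
    for name, count in class_counts.items():
        needed = max(0, target - count)
        copies = math.ceil(needed / count) if count else 0
        plan[name] = (needed, copies)
    return plan


# Hypothetical counts for three of the 14 classes.
counts = {"Elbow-Pos": 700, "Hand-Neg": 1200, "Wrist-Pos": 3000}
for name, (needed, copies) in augmentation_plan(counts).items():
    print(f"{name}: generate {needed} images (up to {copies} per original)")

print(14 * TARGET_PER_CLASS)  # 42000
```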

C. Model Training, Validation, and Testing

The VGG16 model was implemented and trained on the train subset, and its performance was monitored on the separate validation subset. Training ran for 200 epochs with a learning rate of 0.0002 and a batch size of 512, using a softmax output layer and the Adam optimizer.
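The optimizer settings above can be made concrete with a dependency-free sketch of the Adam update rule and the number of optimization steps they imply. The beta and epsilon values are the defaults from the original Adam paper, not values stated here, and the train-set size of 25,200 images (60% of 42,000) is an assumption, since the size of the train subset is not reported directly.

```python
import math


class Adam:
    """Minimal Adam optimizer (Kingma & Ba, 2015) for a list of scalar parameters."""

    def __init__(self, lr=2e-4, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # first-moment (mean) estimates
        self.v = None  # second-moment (uncentered variance) estimates
        self.t = 0     # number of updates performed

    def step(self, params, grads):
        if self.m is None:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias correction
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params


# Reported settings: learning rate 0.0002, batch size 512, 200 epochs.
# ASSUMPTION: the train subset holds 60% of the 42,000 images (25,200).
train_size, batch_size, epochs = 25_200, 512, 200
steps_per_epoch = math.ceil(train_size / batch_size)
print(steps_per_epoch, steps_per_epoch * epochs)  # 50 10000

# Toy check: minimize f(w) = w^2 (gradient 2w) starting from w = 1.0.
opt = Adam(lr=2e-4)
w = [1.0]
for _ in range(3):
    w = opt.step(w, [2 * w[0]])
print(w)  # each early step moves w by roughly lr = 0.0002
```

Because Adam divides by a running estimate of the gradient magnitude, early steps have a size close to the learning rate whenever the gradient keeps a consistent sign, regardless of its scale.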

To improve generalization, data augmentation was applied during training, which helps the model perform better on unseen data. The training and validation loss curves for the VGG16 model are shown in Figure 3, and the corresponding accuracy curves in Figure 4.
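The specific augmentation operations are not listed. As an illustration only, the sketch below applies three simple label-preserving transforms (a random horizontal flip, a one-pixel horizontal shift with zero fill, and a small brightness change) to a grayscale image stored as a list of rows. The choice of transforms, their parameters, and the function names are assumptions, not the paper's augmentation policy.

```python
import random


def hflip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def shift(image, dx):
    """Translate horizontally by dx pixels, filling vacated pixels with 0."""
    width = len(image[0])
    if dx >= 0:
        return [[0.0] * dx + row[:width - dx] for row in image]
    return [row[-dx:] + [0.0] * (-dx) for row in image]


def adjust_brightness(image, factor):
    """Scale intensities by `factor` and clip to [0, 1]."""
    return [[min(1.0, max(0.0, v * factor)) for v in row] for row in image]


def augment(image, rng):
    """Apply a random combination of the transforms above (hypothetical policy)."""
    out = image
    if rng.random() < 0.5:
        out = hflip(out)
    out = shift(out, rng.randint(-1, 1))
    return adjust_brightness(out, rng.uniform(0.9, 1.1))


rng = random.Random(0)
xray = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]
variants = [augment(xray, rng) for _ in range(3)]  # three new training samples
for v in variants:
    print([[round(p, 2) for p in row] for row in v])
```

Augmentation of this kind is normally applied on the fly to training batches only, leaving validation and test images untouched.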

Once the training of the proposed VGG16 model was completed, it was then evaluated using the dedicated testing dataset to assess its performance on unseen data and provide insights into its overall effectiveness.

VI. RESULTS

The performance of the proposed VGG16 model for bone abnormality classification was evaluated with several metrics. The model reached a training accuracy of 99.66%, a validation accuracy of 99.69%, and a testing accuracy of 85.82%. The corresponding loss values for the customized model were 0.012 (training), 0.011 (validation), and 0.076 (testing).
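The loss function is not named; with a softmax output, categorical cross-entropy is the usual choice, so the sketch below assumes it. The loss is the mean of the negative log-probability given to the true class, so a mean loss of 0.012 corresponds to a geometric-mean probability of about exp(-0.012) ≈ 0.988 on the correct class.

```python
import math


def categorical_cross_entropy(probs, targets, eps=1e-12):
    """Mean negative log-probability assigned to the true class.

    probs[i] is a softmax output (sums to 1); targets[i] is the index of the
    true class. `eps` guards against log(0).
    """
    losses = [-math.log(max(p[t], eps)) for p, t in zip(probs, targets)]
    return sum(losses) / len(losses)


# A confident correct prediction gives a small loss; a confident mistake a large one.
print(categorical_cross_entropy([[0.988, 0.012]], [0]))  # about 0.012
print(categorical_cross_entropy([[0.988, 0.012]], [1]))  # about 4.42
```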

The training and testing times for the VGG16 model were recorded as 2400 seconds and 9.12 seconds, respectively, indicating the computational efficiency of the model.

Table 2 presents the precision, recall, and F1-score for each class in the dataset, encompassing the 14 classes used for bone abnormalities classification. The proposed VGG16 model achieved an average precision of 85.96%, recall of 85.82%, and F1-score of 85.77%. These metrics provide insights into the model's ability to correctly classify each class.
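Per-class precision, recall, and F1-score, and their averages, can be computed as sketched below. Whether the reported averages are macro (unweighted) or weighted by class support is not stated, so the sketch returns both. One useful property: the support-weighted recall always equals overall accuracy, which matches the identical 85.82% reported for average recall and testing accuracy; and when every class has the same support, as it would in a balanced test split, macro and weighted averages coincide. The labels and predictions in the example are hypothetical.

```python
from collections import Counter


def per_class_report(y_true, y_pred):
    """Per-class precision/recall/F1 plus macro and support-weighted averages."""
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)
    report = {}
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        report[c] = (prec, rec, f1, support[c])

    n = len(y_true)
    macro = tuple(sum(r[k] for r in report.values()) / len(labels) for k in range(3))
    weighted = tuple(sum(r[k] * r[3] for r in report.values()) / n for k in range(3))
    return report, macro, weighted


# Hypothetical predictions over three of the 14 classes.
y_true = ["Hand-Pos", "Hand-Pos", "Hand-Neg", "Hand-Neg", "Wrist-Pos", "Wrist-Pos"]
y_pred = ["Hand-Pos", "Hand-Neg", "Hand-Neg", "Hand-Neg", "Wrist-Pos", "Hand-Pos"]
report, macro, weighted = per_class_report(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(macro, weighted, accuracy)
```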

Additionally, the area under the Receiver Operating Characteristic (ROC) curve (AUC) reached 0.99 for each class in the dataset, indicating that, for every class, the model almost always assigns higher scores to images of that class than to images of the other classes.
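A per-class AUC is usually computed one-vs-rest: the class of interest is treated as positive and the other 13 as negative. The method used here is not stated, so one-vs-rest is an assumption. The sketch below uses the rank-based definition (the probability that a random positive outscores a random negative, ties counting one half), which equals the trapezoidal area under the empirical ROC curve. It is quadratic in the number of examples and meant for illustration; scikit-learn's `roc_auc_score` is the usual tool in practice. The scores are hypothetical softmax outputs.

```python
def roc_auc(scores, is_positive):
    """Area under the ROC curve via the rank (Mann-Whitney U) formulation.

    Equals the probability that a randomly chosen positive example scores
    higher than a randomly chosen negative one; ties count as one half.
    """
    pos = [s for s, y in zip(scores, is_positive) if y]
    neg = [s for s, y in zip(scores, is_positive) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(prob_rows, y_true, classes):
    """Per-class AUC: class c is positive, all other classes are negative."""
    return {c: roc_auc([row[i] for row in prob_rows], [y == c for y in y_true])
            for i, c in enumerate(classes)}


# Hypothetical softmax outputs for three classes.
classes = ["Hand-Pos", "Hand-Neg", "Wrist-Pos"]
probs = [[0.7, 0.2, 0.1], [0.3, 0.6, 0.1], [0.2, 0.3, 0.5], [0.25, 0.65, 0.1]]
labels = ["Hand-Pos", "Hand-Neg", "Wrist-Pos", "Hand-Pos"]
print(one_vs_rest_auc(probs, labels, classes))
```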

In summary, the proposed VGG16 model demonstrated excellent performance in terms of accuracy, loss, precision, recall, F1-score, and ROC curve analysis, highlighting its effectiveness in the detection and classification of bone abnormalities using deep learning techniques.

VII. FUTURE WORK

As part of future work, there are several avenues to explore and enhance the proposed study. Firstly, it would be beneficial to investigate different techniques for feature selection and analysis. This could involve exploring advanced methods to identify the most informative features from the dataset, which could potentially improve the performance of the deep learning model.

Additionally, experimenting with various hyperparameters of different deep learning algorithms is worth considering. Fine-tuning these parameters can help optimize the model's performance and potentially yield better results.

Furthermore, ensemble techniques can be explored. Ensemble methods combine the predictions of multiple deep learning models, for example through max (majority) voting, in which each model votes for a class and the most frequent class is chosen. This approach can leverage the strengths of individual models and improve accuracy by aggregating their predictions.
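Max (hard majority) voting is simple to implement once each model has produced a label per image. In the sketch below, the model names and predictions are hypothetical, and the tie-breaking rule (prefer the label predicted by the earliest model in the list) is one possible choice among several.

```python
from collections import Counter


def max_vote(predictions_per_model):
    """Hard (majority) voting over several models' label predictions.

    predictions_per_model[m][i] is model m's label for sample i. Ties are
    broken in favour of the label that the earliest model predicted.
    """
    ensembled = []
    for votes in zip(*predictions_per_model):
        counts = Counter(votes)
        best = max(counts.values())
        # first label (in model order) that reaches the top vote count
        ensembled.append(next(v for v in votes if counts[v] == best))
    return ensembled


# Hypothetical outputs of three models (e.g. VGG16, ResNet50, InceptionV3).
vgg = ["Elbow-Pos", "Hand-Neg", "Wrist-Pos"]
resnet = ["Elbow-Pos", "Hand-Pos", "Wrist-Neg"]
inception = ["Elbow-Neg", "Hand-Pos", "Finger-Pos"]
print(max_vote([vgg, resnet, inception]))  # ['Elbow-Pos', 'Hand-Pos', 'Wrist-Pos']
```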

By incorporating these future enhancements, we can further improve the classification and diagnosis of bone abnormalities, leading to more accurate and reliable results.

Table 2: VGG16 Precision, Recall, And F1-Score Of Each Class In The Dataset

The comparison between the current study and previous studies is challenging due to two main factors. Firstly, while previous studies focused on binary classification, determining the presence or absence of bone abnormalities, our study addresses a more intricate task with 14 distinct classes. Specifically, we classify images into specific bone regions such as Elbow, Finger, Forearm, Hand, Humerus, Shoulder, and Wrist, and ascertain whether abnormalities are present in each region.

Secondly, the dataset utilized in our study is notably larger compared to those employed in previous studies. This disparity in dataset size can impact the model's performance and generalizability. Therefore, direct comparisons with previous results may not be appropriate, considering the differences in both the classification task and dataset characteristics.

Conclusion

Bones play a vital role in the human body, providing both strength and flexibility through their composition of collagen and calcium phosphate. They enable movement and serve as a protective barrier for vital organs like the heart, lungs, and brain. However, accidents, infections, and injuries can lead to bone abnormalities, disrupting their normal growth and structure. The primary objective of this study is to develop a deep learning model capable of accurately classifying radiographs into 14 classes covering seven bone types, each labeled normal or abnormal. To achieve this, we propose a customized version of the VGG16 model tailored to the complexity of these abnormalities. We collected a dataset from Kaggle and employed data augmentation techniques to increase its size and diversity. The dataset was then split into training, validation, and testing subsets. We extensively trained, validated, and tested the modified VGG16 model on this dataset. The proposed VGG16 model achieved a precision, recall, and F1-score of 85.96%, 85.82%, and 85.77%, respectively. These findings demonstrate the model's ability to diagnose and classify various bone abnormalities.

References

[1] Abunasser, B. S., et al., “Breast Cancer Detection and Classification using Deep Learning Xception Algorithm,” International Journal of Advanced Computer Science and Applications (IJACSA), vol. 13, no. 7, pp. 223–228, 2022. http://dx.doi.org/10.14569/IJACSA.2022.0130729

[2] Obaid, T., et al., “Factors Contributing to an Effective E-Government Adoption in Palestine,” Lecture Notes on Data Engineering and Communications Technologies, vol. 127, pp. 663–676, 2022.

[3] Abunasser, B. S., et al., “Prediction of Instructor Performance using Machine and Deep Learning Techniques,” International Journal of Advanced Computer Science and Applications (IJACSA), vol. 13, no. 7, pp. 78–83, 2022. http://dx.doi.org/10.14569/IJACSA.2022.0130711

[4] Saleh, A., et al., “Brain tumor classification using deep learning,” Proceedings - 2020 International Conference on Assistive and Rehabilitation Technologies (iCareTech 2020), pp. 131–136, 2020, 9328072.

[5] Arqawi, S., et al., “Integration of the dimensions of computerized health information systems and their role in improving administrative performance in Al-Shifa medical complex,” Journal of Theoretical and Applied Information Technology, vol. 98, no. 6, pp. 1087–1119, 2020.

[6] Elzamly, A., et al., “Assessment risks for managing software planning processes in information technology systems,” International Journal of Advanced Science and Technology, vol. 28, no. 1, pp. 327–338, 2019.

[7] Albatish, I. M., et al., “Modeling and controlling smart traffic light system using a rule based system,” Proceedings - 2019 International Conference on Promising Electronic Technologies (ICPET 2019), pp. 55–60, 2019, 8925318.

[8] Elzamly, A., et al., “A new conceptual framework modelling for cloud computing risk management in banking organizations,” International Journal of Grid and Distributed Computing, vol. 9, no. 9, pp. 137–154, 2016.

[9] Mady, S. A., et al., “Lean manufacturing dimensions and its relationship in promoting the improvement of production processes in industrial companies,” International Journal on Emerging Technologies, vol. 11, no. 3, pp. 881–896, 2020.

[10] Abu Ghosh, M. M., et al., “Secure mobile cloud computing for sensitive data: Teacher services for Palestinian higher education institutions,” International Journal of Grid and Distributed Computing, vol. 9, no. 2, pp. 17–22, 2016.

[11] Gulshan, V., Peng, L., et al., “Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs,” JAMA, vol. 316, no. 22, pp. 2402–2420, 2016.

[12] Buhisi, N. I., et al., “Dynamic programming as a tool of decision supporting,” Journal of Applied Sciences Research, vol. 5, no. 6, pp. 671–676, 2009.

[13] Russakovsky, O., Deng, J., et al., “ImageNet Large Scale Visual Recognition Challenge,” International Journal of Computer Vision, vol. 115, no. 3, pp. 211–252, 2015.

[14] Naser, S. S. A., “Developing an intelligent tutoring system for students learning to program in C++,” Information Technology Journal, vol. 7, no. 7, pp. 1051–1060, 2008.

[15] Moreno-García, C., et al., “Assessing the clinicians pathway to embed artificial intelligence for assisted diagnostics of fracture detection,” CEUR Workshop Proceedings, 2020.

[16] Naser, S. S. A., “Developing visualization tool for teaching AI searching algorithms,” Information Technology Journal, vol. 7, no. 2, pp. 350–355, 2008.

[17] Bar, Y., Diamant, I., et al., “Chest pathology detection using deep learning with non-medical training,” 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI), pp. 294–297, 2015.

[18] Fawzy, A., et al., “Mechanical Reconfigurable Microstrip Antenna,” International Journal of Microwave and Optical Technology, vol. 11, no. 3, pp. 153–160, 2016.

[19] Cernazanu-Glavan, C., and Holban, S., “Segmentation of Bone Structure in X-ray Images using Convolutional Neural Network,” Advances in Electrical and Computer Engineering, vol. 13, no. 1, pp. 87–94, 2013.

[20] Naser, S. S. A., “Intelligent tutoring system for teaching database to sophomore students in Gaza and its effect on their performance,” Information Technology Journal, vol. 5, no. 5, pp. 916–922, 2006.

[21] Sitaula, C., and Hossain, M. B., “Attention-based VGG-16 model for COVID-19 chest X-ray image classification,” Applied Intelligence, vol. 51, no. 5, pp. 2850–2863, 2021.

[22] Esteva, A., Kuprel, B., et al., “Dermatologist-level classification of skin cancer with deep neural networks,” Nature, vol. 542, no. 7639, pp. 115–118, 2017.

[23] Moran, M. B. H., Faria, M., et al., “On using convolutional neural networks to classify periodontal bone destruction in periapical radiographs,” 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), pp. 2036–2039, 2020.

[24] El-Saadawy, H., Tantawi, M., et al., “A Two-Stage Method for Bone X-Rays Abnormality Detection Using MobileNet Network,” Advances in Intelligent Systems and Computing, pp. 372–380, 2020.

[25] Ananthu, K. S., Krishna Prasad, P., et al., “Acute Lymphoblastic Leukemia Detection Using Transfer Learning Techniques,” Intelligent Sustainable Systems, pp. 679–692, 2021.

[26] Naser, S. S. A., “JEE-Tutor: An intelligent tutoring system for java expressions evaluation,” Information Technology Journal, vol. 7, no. 3, pp. 528–532, 2008.

[27] Wang, S., and Dong, L., “Classification of pathological types of lung cancer from CT images by deep residual neural networks with transfer learning strategy,” Open Medicine, vol. 15, no. 1, pp. 190–197, 2020.

[28] Dimitris, K., and Ergina, K., “Concept detection on medical images using Deep Residual Learning Network,” in Working Notes of Conference and Labs of the Evaluation Forum, Springer, 2017.

[29] Dhungel, N., Carneiro, G., and Bradley, A. P., “Fully automated classification of mammograms using deep residual neural networks,” 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), pp. 310–314, 2017.

[30] Abu-Naser, S. S., et al., “An expert system for endocrine diagnosis and treatments using JESS,” Journal of Artificial Intelligence, vol. 3, no. 4, pp. 239–251, 2010.

[31] Moran, M., Faria, M., et al., “Classification of Approximal Caries in Bitewing Radiographs Using Convolutional Neural Networks,” Sensors, vol. 21, no. 15, pp. 5192–5199, 2021.

[32] Westerberg, E., “AI-based Age Estimation using X-ray Hand Images: A comparison of Object Detection and Deep Learning models,” 2020.

[33] Abu Naser, S. S., “Evaluating the effectiveness of the CPP-Tutor, an intelligent tutoring system for students learning to program in C++,” Journal of Applied Sciences Research, vol. 5, no. 1, pp. 109–114, 2009.

[34] Ding, Y., Sohn, J. H., et al., “A Deep Learning Model to Predict a Diagnosis of Alzheimer Disease by Using 18F-FDG PET of the Brain,” Radiology, vol. 290, no. 2, pp. 456–464, 2019.

[35] Kulkarni, A. D., “Deep Convolution Neural Networks for Image Classification,” International Journal of Advanced Computer Science and Applications, vol. 13, no. 6, 2022.

Copyright

Copyright © 2023 Vinay S Navale. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET54582

Publish Date : 2023-07-02

ISSN : 2321-9653

Publisher Name : IJRASET
