Ijraset Journal For Research in Applied Science and Engineering Technology

Brain Tumor Detection and Classification Using MRI Images

Authors: Greeshma Mahesh, Yogesh K M

DOI Link: https://doi.org/10.22214/ijraset.2024.64719

Abstract

Brain tumors pose a serious health risk, and prompt, precise identification is essential for successful treatment. This article describes an automated method that uses convolutional neural networks (CNNs) to identify and categorize brain tumors in MRI images. The dataset consists of 7,023 MRI scans obtained from three sources: the Br35H dataset, the SARTAJ dataset, and the figshare repository. The images are divided into four classes: gliomas, meningiomas, pituitary tumors, and no tumor. The CNN model was created and trained on this improved dataset, ultimately attaining a 97.5% test accuracy. Class-wise analysis showed precision values of 96.1%, 98.8%, 96.1%, and 98.6% and recall rates of 94.7%, 95.4%, 100%, and 99% across the four classes. With values of 0.966 for gliomas, 0.957 for meningiomas, 0.994 for no tumor, and 0.975 for pituitary tumors, the F1-scores demonstrate the general robustness of the model. These findings show that the suggested CNN-based method is effective in assisting with brain tumor diagnosis from MRI scans, providing radiologists and other medical practitioners with a useful tool. To improve diagnostic accuracy, future work may involve enhancing the model further and investigating the integration of other imaging modalities.

I. INTRODUCTION

Brain tumors are a broad category of neoplasms caused by abnormal cell development in the brain or surrounding tissues. Several varieties can be distinguished, such as primary tumors that originate in the brain and secondary tumors that metastasize from elsewhere in the body. Gliomas and meningiomas are the most prevalent forms among them, although pituitary tumors also present serious clinical difficulties. It is projected that 700,000 people in the United States alone receive a brain tumor diagnosis each year, as the prevalence of these tumors continues to climb. The effects of these tumors on brain function and general quality of life highlight how crucial early and precise diagnosis is.

Brain tumors are traditionally diagnosed with neuroimaging, and MRI is the gold standard because of its high-resolution images and capacity to visualize soft tissues. However, interpreting an MRI scan is a difficult task that calls for a great deal of knowledge and experience.

Radiologists are required to distinguish minute variations in tissue properties, which can be affected by a number of variables, such as the anatomy of the patient and the presence of artifacts. As a result, misdiagnoses may happen, which may lead to ineffective treatment strategies, postponed interventions, and ultimately poor patient outcomes.

The present techniques for diagnosing brain tumors are confronted with various obstacles, even with the progress made in neuroimaging. First of all, because MRI interpretation is subjective, radiologists may interpret images differently from one another. Research has shown that inter-observer variability in tumor categorization is a significant problem. Second, radiologists may find it difficult to keep up with the rising number of scans that need to be interpreted due to the growing volume of imaging data. Because of this, there is an immediate need for automated solutions that can aid in diagnosis, lighten the workload for medical personnel, and increase the precision and effectiveness of brain tumor detection.

Brain tumors are a significant health concern globally, contributing to a substantial number of morbidity and mortality cases. Because they can originate from different types of brain cells, they fall into several classifications, including gliomas, meningiomas, and pituitary tumors. Correct brain tumor diagnosis is essential since it directly affects treatment choices and patient outcomes. In the past, diagnosis has depended primarily on skilled radiologists interpreting Magnetic Resonance Imaging (MRI) data. However, the growing complexity of imaging data and the inherent variability in human interpretation may cause discrepancies and delays in diagnosis.

Modern developments in machine learning (ML) and artificial intelligence (AI) hold considerable potential for improving the accuracy of medical imaging diagnosis. Convolutional Neural Networks (CNNs) are one such method and have proven extremely effective for image classification tasks, such as brain tumor identification. CNNs greatly reduce the need for manual feature extraction and can potentially increase the accuracy and speed of diagnoses by automatically learning and extracting pertinent features from images. This study aims to develop an automated system for the detection and classification of brain tumors in MRI images using a robust CNN model. We utilized a comprehensive dataset comprising 7,023 MRI images sourced from figshare, the SARTAJ dataset, and the Br35H dataset, encompassing four classes: glioma, meningioma, no tumor, and pituitary tumors.

- The goal of this research is to use convolutional neural networks (CNNs) to create an automated system for the identification and categorization of brain cancers in MRI images. We specifically concentrate on building a reliable CNN model that can discriminate between pituitary tumors, meningiomas, gliomas, and no tumor with accuracy.

- Using images from the figshare repository to address labeling errors in the glioma images of the SARTAJ dataset and thereby improve its trustworthiness.

- Assessing the constructed model's performance in terms of F1-score, accuracy, precision, and recall to ascertain its suitability for use in clinical settings.

This research is significant because it has the potential to change how brain tumor diagnosis is approached in clinical settings. Our automated system can quickly and accurately evaluate MRI scans by utilizing AI and machine learning, which will help radiologists make well-informed decisions. By using such technologies, brain tumors may be discovered earlier, which would ultimately improve treatment results and patient survival rates. Integrating automated diagnostic technologies may also reduce the workload of healthcare workers, freeing them to concentrate on more difficult cases that call for human expertise. Our research opens the door for further developments in this crucial field of healthcare by adding to the growing body of literature supporting the application of AI in medical imaging. The rest of the paper is structured as follows: Section 2 provides an overview of related work in brain tumor detection using deep learning methods. Section 3 discusses the dataset, preprocessing steps, and the CNN architecture used in this study. Section 4 presents the experimental results and performance evaluation. Finally, Section 5 concludes the paper with future directions for this research.

II. RELATED WORK

The detection and classification of brain tumors using deep learning models have gained significant attention in recent years due to the increasing availability of MRI datasets and advancements in neural network architectures. Convolutional Neural Networks (CNNs) have been the most widely used models for this task, as they excel at feature extraction from medical images. Researchers have explored various CNN-based architectures, including ResNet, VGG, and Inception models, demonstrating their effectiveness in classifying tumor types like glioma, meningioma, and pituitary tumors. Studies have also applied transfer learning techniques, leveraging pre-trained models to enhance performance on limited datasets. Hybrid models combining CNNs with other approaches, such as Generative Adversarial Networks (GANs), have shown further improvements by addressing class imbalance and data scarcity challenges.

The paper "The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS)" by Bjoern H. Menze et al. (2015) offers a thorough description of the BRATS challenge. The challenge was created to assess how well different state-of-the-art algorithms perform when segmenting brain tumors, specifically gliomas, using multimodal MRI data. Because of their infiltrative nature and varied appearance, gliomas, the most prevalent kind of primary brain tumor, present considerable problems. The BRATS benchmark dataset consists of real and synthetic MRI scans annotated by specialists and includes the T1, T1c, T2, and FLAIR imaging modalities, each of which highlights distinct tumor sub-regions such as edema, necrosis, and active tumor tissue. The challenge findings show that no single algorithm consistently achieves the best outcome across all tumor sub-regions; instead, ensemble techniques exhibit superior performance, with the best algorithms reaching performance comparable to human inter-rater variability. [1]

Magnetic resonance imaging (MRI) scans are used to detect brain tumors because CT, X-ray, and ultrasound scans do not provide comparable contrast for distinguishing soft tissues. The study "Image Processing Techniques for Brain Tumor Detection: A Review" by Vipin Y. Borole et al. (2015) examines these techniques. Essential image processing procedures such as preprocessing, feature extraction, segmentation, and post-processing are covered in this review. The article discusses methods for increasing tumor identification accuracy, including filtering, edge detection, and morphological operations. The review also presents methods such as k-Nearest Neighbor (k-NN) and Neural Networks (NN), which are reported to achieve 100% and 98.92% accuracy in brain tumor classification, respectively.

These findings highlight how effective it is to combine cutting-edge machine learning algorithms with MRI-based image processing to accurately detect brain tumors, especially when these algorithms are implemented with software like MATLAB. [2]

A novel transformer-based method for identifying and categorizing brain tumors from MRI scans is presented in the study "Knowledge Distillation in Transformers with Tripartite Attention: Multiclass Brain Tumor Detection in Highly Augmented MRIs" by Salha M. Alzahrani et al. (2024). The authors use a knowledge distillation framework, in which a larger "teacher" model transfers its knowledge to a smaller "student" model, together with a tripartite attention mechanism that blends neighborhood, global, and cross-attention layers. With this approach, feature extraction is improved for accurate tumor detection. The suggested model performs better than conventional CNNs and transformers when tested on substantially augmented MRI datasets, with a top-1 accuracy of 87.6% and a top-5 accuracy of 97.5%. The study shows how this strategy works well in contexts with limited resources, such as smart healthcare systems, and how it helps with accurate, real-time diagnosis. [4]

The study by Jain et al. (2022) suggests using image segmentation techniques to identify brain tumors. The goal of the authors' work is to improve the accuracy with which tumor locations are identified in brain scans, which is essential for early diagnosis and treatment planning. Their method improves detection precision by isolating the tumor from surrounding tissues through the analysis of medical images. The work shows how image segmentation improves the visibility of tumor boundaries and tackles important obstacles in brain tumor identification, such as the heterogeneity of tumor sizes and shapes. The findings demonstrate the efficacy of the suggested approach, providing a dependable means of differentiating between healthy and diseased brain tissue, which can greatly support clinical decision-making. [3]

III. METHODOLOGY

A. Dataset

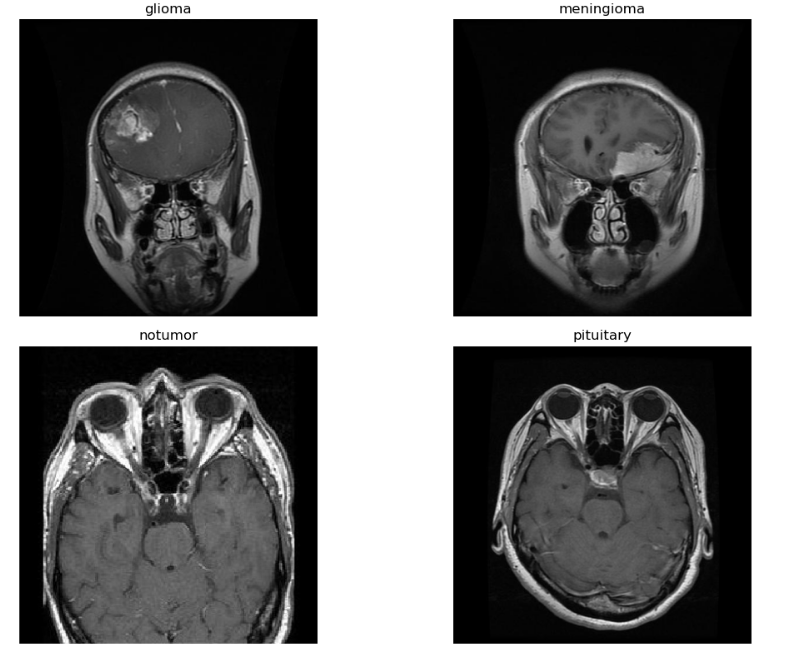

Fig. 1 shows example MRI scans from the dataset used in this investigation, which covers four distinct categories: no tumor, pituitary tumor, meningioma, and glioma. A total of 7,023 images were used, drawn from the publicly accessible figshare, SARTAJ, and Br35H datasets. To ensure precise classification, each image was meticulously annotated, which made it easier to build a reliable machine learning model. Preprocessing techniques were used to guarantee high-quality data for the Convolutional Neural Network (CNN) training process. These included data augmentation to broaden the dataset's diversity, image resizing to maintain a constant input size, and normalization to scale the pixel values for better model performance. Rotating, flipping, and zooming were some of the data augmentation techniques used to improve the model's generalization and decrease overfitting. The distribution of the dataset among the four classes is adjusted to enable the CNN to efficiently learn unique features for every type of tumor. It is especially important to include a "no tumor" category since it helps separate tumorous areas from healthy brain tissue. Because this dataset is so extensive, the model can learn a wide range of patterns linked to various tumor types, which ultimately leads to increased detection and classification accuracy.

Fig. 1 Example of MRI Dataset

B. Preprocessing

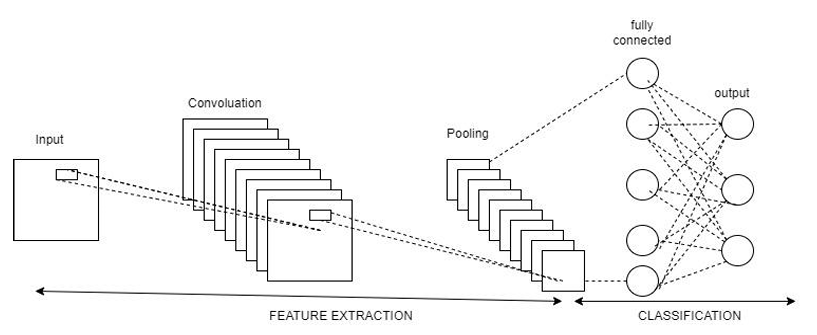

Convolutional Neural Networks (CNNs) are a class of deep learning algorithms specifically designed for processing structured grid data, such as images. They have gained widespread popularity in image classification tasks due to their ability to automatically extract and learn features from raw image data. In this project, we employ a CNN architecture to detect and classify brain tumors from MRI images into four categories: glioma, meningioma, pituitary tumor, and no tumor. The following components outline how the CNN algorithm is applied in this context:

MRI images were preprocessed and augmented to enhance the diversity of the training dataset, reducing the risk of overfitting. All images were rescaled by dividing pixel values by 255 to normalize them to the [0, 1] range. Augmentation included rotation, shifting, shearing, zooming, and flipping (horizontal and vertical), enhancing the dataset variability.

Mathematically, the pixel rescaling is given by:

$$I' = \frac{I}{255}$$

where I is the original pixel intensity, and I′ is the rescaled intensity.
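As a minimal sketch of this rescaling step, the snippet below applies the division by 255 to a small grayscale patch in plain Python. The function name `rescale_pixels` and the sample patch are illustrative assumptions, not part of the original pipeline, which performs the rescaling through Keras.

```python
def rescale_pixels(image):
    """Map 8-bit intensities (0-255) to floats in the [0, 1] range."""
    return [[pixel / 255.0 for pixel in row] for row in image]


# Hypothetical 2x3 grayscale patch (values chosen for illustration only).
patch = [[0, 51, 102], [153, 204, 255]]
scaled = rescale_pixels(patch)
print(scaled)
```

The smallest possible intensity maps to 0.0 and the largest to 1.0, so every rescaled value stays inside the unit interval.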

C. Augmentation Transformations

The following random transformations are applied: rotation within ±20°; horizontal and vertical shifts of up to ±10%; and shearing and zooming within a ±10% range. The Keras ImageDataGenerator handles these transformations, increasing the dataset's diversity.
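To make the ranges concrete, the sketch below draws one random set of augmentation parameters within the limits stated above. It only illustrates the sampling idea and is not the Keras implementation; the dictionary keys, the `sample_augmentation` helper, and the 0.5 flip probability are assumptions introduced for this example.

```python
import random

# Limits taken from the text; the key names are illustrative only.
AUGMENTATION_LIMITS = {
    "rotation_deg": 20.0,   # rotation within +/-20 degrees
    "width_shift": 0.10,    # horizontal shift within +/-10% of width
    "height_shift": 0.10,   # vertical shift within +/-10% of height
    "shear": 0.10,          # shear within a +/-10% range
    "zoom": 0.10,           # zoom within a +/-10% range
}


def sample_augmentation(rng):
    """Draw one random parameter set, uniformly within each limit."""
    params = {
        name: rng.uniform(-limit, limit)
        for name, limit in AUGMENTATION_LIMITS.items()
    }
    # Flip probability of 0.5 is an assumption, not stated in the text.
    params["horizontal_flip"] = rng.random() < 0.5
    params["vertical_flip"] = rng.random() < 0.5
    return params


rng = random.Random(0)  # seeded for reproducibility
params = sample_augmentation(rng)
print(params)
```

Each training image would receive a fresh draw of this kind, so the network rarely sees exactly the same input twice.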

D. CNN Model

The CNN is built using Keras Sequential API. Each layer in a sequential model is stacked one after another to form a feedforward network.

1) Sequential Model: A Sequential model allows us to define the layers one by one in a linear stack. This is useful for building feedforward neural networks.

2) Conv2D Layer: Conv2D applies 32 filters of size 3x3 to the input image to extract important features. input_shape=(image_size[0], image_size[1], 3) specifies the size of the input image (height, width, and 3 channels for RGB). Activation function: ReLU (Rectified Linear Unit) introduces non-linearity. This helps the model learn complex patterns and prevents negative activations.

f(x)=max(0,x)

3) MaxPooling2D Layer: MaxPooling2D reduces the spatial dimensions by selecting the maximum value from each 2x2 window. This reduces the size of the feature maps and helps reduce overfitting and computational cost.
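The effect of ReLU followed by 2x2 max pooling can be sketched on a single small feature map. This is a plain-Python illustration under the assumption of a stride of 2 and no padding; the helper names and the sample values are hypothetical.

```python
def relu(feature_map):
    """Apply f(x) = max(0, x) element-wise."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Keep the maximum of each non-overlapping 2x2 window (stride 2)."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - 1, 2):
        pooled.append([
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, cols - 1, 2)
        ])
    return pooled


# Hypothetical 4x4 feature map produced by a convolution.
raw = [
    [1, -3, 2, 0],
    [-4, 2, -1, 5],
    [0, 1, -9, 8],
    [2, 6, 7, -3],
]
activated = relu(raw)
pooled = max_pool_2x2(activated)
print(pooled)  # [[2, 5], [6, 8]]
```

The 4x4 input shrinks to 2x2, which is the halving of height and width that each pooling layer contributes.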

4) Second and Third Conv2D + MaxPooling Layers: Each convolutional layer applies more filters (64 and 128) to extract higher-level features such as shapes, edges, or textures. Max-pooling follows each Conv2D layer to reduce the spatial size and computational load.

5) Flatten Layer: Converts the 2D feature maps into a 1D vector to feed into the dense (fully connected) layers.

6) Dense Layer: A fully connected layer with 512 neurons learns high-level patterns by combining the extracted features. ReLU is used as the activation function to introduce non-linearity.

7) Dropout Layer: Dropout randomly drops 50% of the neurons during training to prevent overfitting.

8) Output Layer: This layer has len(categories) neurons (equal to the number of tumor classes), one for each class. Softmax activation converts the logits into probabilities for multi-class classification:

$$P(y = j \mid x) = \frac{e^{z_j}}{\sum_{k} e^{z_k}}$$
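A short sketch of the softmax computation for the four classes follows. The logits are made-up values for illustration, and subtracting the maximum logit before exponentiating is a common numerical-stability step that does not change the result.

```python
import math


def softmax(logits):
    """Convert raw scores z_k into probabilities that sum to 1."""
    shift = max(logits)  # stabilizes exp() without changing the output
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


CLASSES = ["glioma", "meningioma", "notumor", "pituitary"]
logits = [2.0, 1.0, 0.1, 3.0]  # hypothetical output-layer scores
probs = softmax(logits)
predicted = CLASSES[probs.index(max(probs))]
print(predicted, [round(p, 3) for p in probs])
```

The predicted class is simply the one with the largest probability, which is also the one with the largest logit.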

The CNN algorithm is particularly well-suited for the task of brain tumor detection and classification from MRI images. Its hierarchical structure allows for automatic feature extraction, significantly reducing the need for manual feature engineering and enabling the model to learn complex patterns associated with different tumor types. The implementation of CNNs in this project aims to enhance diagnostic accuracy and assist medical professionals in making informed decisions regarding patient treatment.

This defines a CNN architecture for multi-class classification using convolutional layers for feature extraction and fully connected layers for prediction. The Adam optimizer helps to converge the model faster, and categorical cross-entropy loss ensures better handling of multiple classes. Dropout prevents overfitting, and softmax activation ensures the output is a probability distribution for each class. The model is designed to effectively classify tumor categories based on MRI image inputs.
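To see how the feature maps shrink through the three convolution and pooling stages described above, the following sketch traces the output shapes arithmetically. It assumes a 150x150 input, 3x3 kernels with stride 1 and no padding, and 2x2 pooling; the input size and padding mode are not stated in the text, so these are illustrative assumptions.

```python
def conv_out(size, kernel=3):
    """Output length of an unpadded, stride-1 convolution."""
    return size - kernel + 1


def pool_out(size, window=2):
    """Output length of non-overlapping pooling."""
    return size // window


def trace_shapes(height, width, filters=(32, 64, 128)):
    """Return per-layer shapes and the flattened vector length."""
    shapes = []
    for n_filters in filters:
        height, width = conv_out(height), conv_out(width)
        shapes.append(("conv", height, width, n_filters))
        height, width = pool_out(height), pool_out(width)
        shapes.append(("pool", height, width, n_filters))
    return shapes, height * width * filters[-1]


# 150x150 is an assumed input size, used only for illustration.
shapes, flat_len = trace_shapes(150, 150)
for layer in shapes:
    print(layer)
print("flattened length:", flat_len)
```

Under these assumptions the flattened vector that feeds the 512-neuron dense layer has 17 x 17 x 128 = 36,992 entries.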

9) Model Compilation: Adam Optimizer: Adam is an optimization algorithm that combines the benefits of Momentum and RMSProp. It adjusts the learning rate during training. The update rule for each parameter $\theta$ is

$$\theta = \theta - \alpha \cdot \frac{m_t}{\sqrt{v_t} + \epsilon}$$

where $m_t$ is the first moment estimate (momentum), $v_t$ is the second moment estimate (RMSProp-like behavior), $\alpha$ is the learning rate, and $\epsilon$ is a small constant for numerical stability.
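The update can be sketched for a single scalar parameter as follows. This follows the standard Adam formulation, which additionally applies bias correction to the two moment estimates; the decay rates of 0.9 and 0.999, the learning rate of 0.001, and the toy objective are commonly used defaults assumed here, not values reported in the text.

```python
import math


def adam_step(theta, grad, m, v, t,
              lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter at step t (t starts at 1)."""
    m = beta1 * m + (1 - beta1) * grad          # first moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias-corrected moments
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Toy objective f(theta) = theta^2, whose gradient is 2 * theta.
theta, m, v = 1.0, 0.0, 0.0
for t in range(1, 4):
    grad = 2 * theta
    theta, m, v = adam_step(theta, grad, m, v, t)
print(theta)
```

Because the step is normalized by the square root of the second moment, early updates have a magnitude close to the learning rate regardless of the gradient scale.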

Categorical Cross-Entropy Loss: This loss function measures the performance of a classification model where the output is a probability distribution.

$$L = -\sum_{i=1}^{N} \sum_{j=1}^{C} y_{ij} \log(\hat{y}_{ij})$$

where $N$ is the number of samples, $C$ is the number of classes, $y_{ij}$ is the true label (either 0 or 1), and $\hat{y}_{ij}$ is the predicted probability.
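The loss can be computed directly from one-hot labels and predicted probabilities, as in the sketch below. It implements the summed form given above; deep learning frameworks typically report the mean over samples instead. The sample labels and probabilities are invented for illustration, and the small clipping constant is an assumption that guards against taking the logarithm of zero.

```python
import math


def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Sum of -y_ij * log(y_hat_ij) over all samples and classes."""
    loss = 0.0
    for true_row, pred_row in zip(y_true, y_pred):
        for y, p in zip(true_row, pred_row):
            if y:  # only the true class contributes for one-hot labels
                loss -= y * math.log(max(p, eps))
    return loss


# Two hypothetical samples over the four classes.
y_true = [[1, 0, 0, 0], [0, 0, 1, 0]]
y_pred = [[0.7, 0.1, 0.1, 0.1], [0.25, 0.25, 0.25, 0.25]]
loss = categorical_cross_entropy(y_true, y_pred)
print(loss)
```

A confident correct prediction contributes a small term, while an uncertain prediction such as the uniform second row contributes a larger one.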

10) Accuracy Metric: Accuracy is used as a metric to evaluate the performance:

$$\text{Accuracy} = \frac{\text{Number of Correct Predictions}}{\text{Total Predictions}}$$
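A minimal sketch of the accuracy computation is given below. The label lists are hypothetical and serve only to show the ratio of correct predictions to total predictions.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and equal in length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical true and predicted labels for four scans.
true_labels = ["glioma", "notumor", "pituitary", "meningioma"]
pred_labels = ["glioma", "notumor", "glioma", "meningioma"]
score = accuracy(true_labels, pred_labels)
print(score)  # 0.75
```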

Fig. 2 Workflow of CNN Architecture

IV. MODEL DIAGRAM

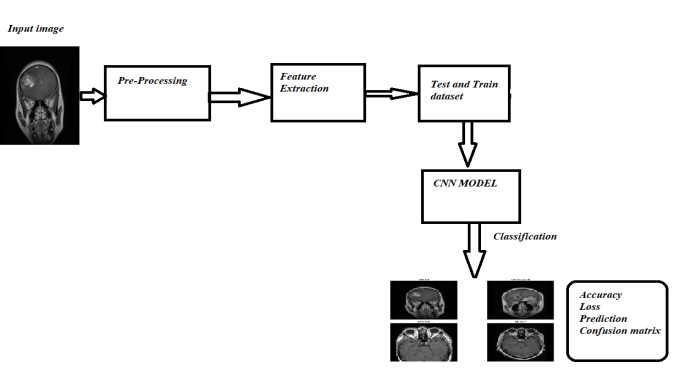

Fig. 3. Block Diagram

The presented model diagram outlines the workflow for brain tumor detection and classification using a CNN-based approach. The process starts with input MRI images of the brain, which are analyzed to detect and classify tumors. These images undergo pre-processing steps such as resizing, normalization, and noise removal to ensure consistency and improve model accuracy. Next, feature extraction is performed, where essential patterns such as shape, texture, and intensity are identified from the images. The dataset is then split into training and testing sets, with the training data used to teach the CNN model and the test data employed to assess its performance on unseen images. The CNN model serves as the core component, learning from the extracted features to classify the images into four categories: glioma, meningioma, pituitary, and no tumor. Once classification is complete, the output includes key metrics such as accuracy, loss, predictions, and a confusion matrix to evaluate the model’s performance. This pipeline ensures an efficient and accurate detection system, which can aid in early diagnosis and assist clinicians in making better treatment decisions.

V. RESULTS AND ANALYSIS

The goal of this project was to develop an effective Convolutional Neural Network (CNN) for detecting and classifying brain tumors into four categories: glioma, meningioma, pituitary tumor, and no tumor using MRI images. In this section, we present the results obtained from training and testing the CNN model on the curated dataset and analyze the model's performance using various metrics.

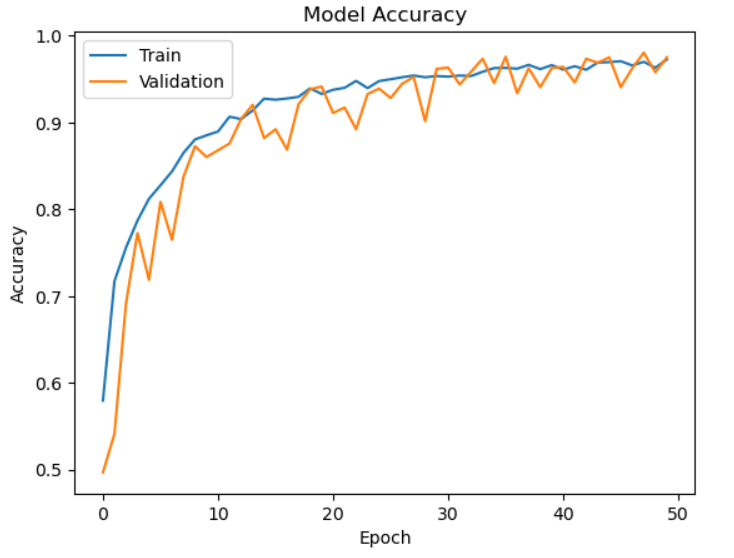

Fig. 4 Model Accuracy

Fig. 4 shows the training and validation accuracy of the CNN model over 50 epochs. During the early epochs, both accuracies rise quickly, suggesting efficient learning. Validation accuracy fluctuates slightly early on before settling at around epoch 20. Both accuracies reach a final value of roughly 0.98 to 1.0, indicating robust performance. The tight alignment of the training and validation curves demonstrates good generalization and little overfitting, which is probably the result of data augmentation and dropout. The absence of a discernible gap between the two curves indicates that the model performs consistently on unseen data. Small fluctuations in validation accuracy point to possible gains from adjusting the learning rate or running more epochs. Overall, the model shows strong learning and excellent accuracy on the dataset.

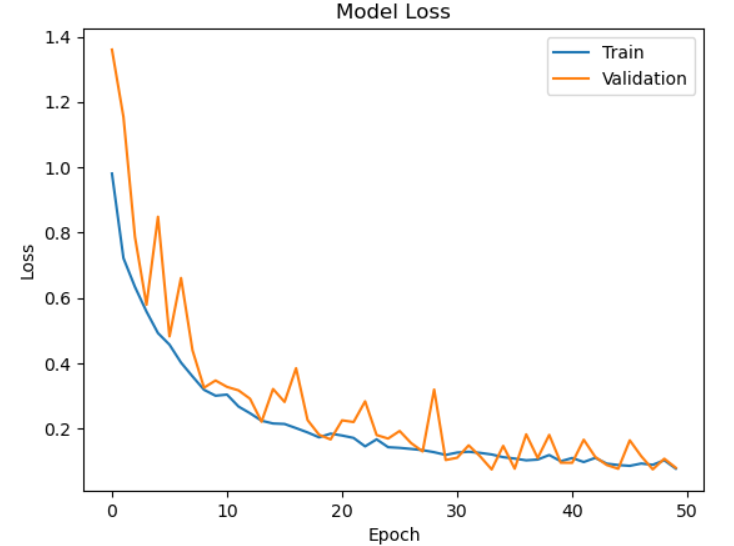

Fig. 5. Model Loss

Fig. 5 shows the decrease in training and validation loss across 50 epochs, illustrating the model's learning process. Both losses start high but drop quickly during the first ten epochs, demonstrating efficient optimization. Fluctuations in validation loss are observed, indicating minor variation in performance on unseen data. Both losses eventually stabilize, however, and the final values approach zero, suggesting little error. The close alignment of the training and validation loss curves suggests that the model generalizes well to new data. The consistent decline in loss indicates that the CNN is learning the features required for precise brain tumor classification.

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| glioma | 0.986 | 0.946 | 0.965 |
| meningioma | 0.960 | 0.954 | 0.957 |
| notumor | 0.987 | 1.0 | 0.993 |
| pituitary | 0.961 | 0.99 | 0.975 |

Table 1 Class-wise Precision, Recall, and F1-Score

Table 1 shows the model's performance in terms of precision, recall, and F1-score for the four classes: glioma, meningioma, no tumor, and pituitary. High precision scores, at or above 0.96 for every class, indicate that the model predicts positive cases with few false positives. High recall values also show that the model identifies most tumors correctly with few false negatives. Notably, the "no tumor" class had a perfect recall of 1.0, meaning that no scan in this class was missed. All classes exhibit consistently high F1-scores, the harmonic mean of precision and recall, with the "no tumor" class demonstrating the best performance (0.993). These outcomes demonstrate the model's dependable and strong performance in brain tumor classification.
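Since the F1-score is the harmonic mean of precision and recall, the values in Table 1 can be cross-checked with a few lines of Python. The sketch below recomputes F1 from the tabulated precision and recall; because those inputs are already rounded, a difference of about 0.001 from the reported F1 (as for glioma) is expected. The variable and function names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) as listed in Table 1.
TABLE_1 = {
    "glioma": (0.986, 0.946, 0.965),
    "meningioma": (0.960, 0.954, 0.957),
    "notumor": (0.987, 1.0, 0.993),
    "pituitary": (0.961, 0.99, 0.975),
}

recomputed = {
    name: round(f1_score(p, r), 3) for name, (p, r, _) in TABLE_1.items()
}
for name, (_, _, reported) in TABLE_1.items():
    print(name, "reported:", reported, "recomputed:", recomputed[name])
```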

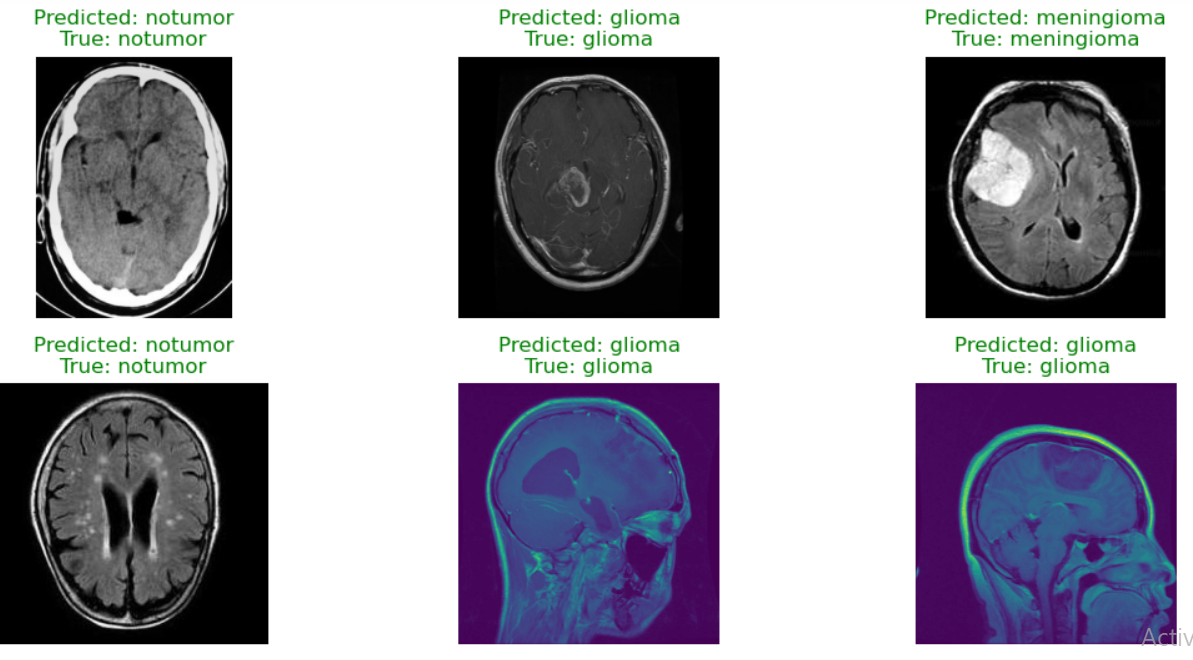

Fig. 6 Predicted Output

Conclusion

The proposed CNN model for brain tumor detection and classification using MRI images demonstrates high accuracy and reliable performance across four classes: glioma, meningioma, pituitary, and no tumor. The model achieved strong metrics, with F1-scores exceeding 0.95 for all classes and excellent recall and precision values, indicating robust classification with few false positives and negatives. The accuracy and loss graphs show stable training, with the validation accuracy closely matching the training accuracy, which suggests little overfitting. This system can aid radiologists by providing accurate, automated tumor detection, thereby speeding up diagnosis and reducing human error. Further enhancements might include broadening the dataset to cover a greater variety of MRI images and additional tumor types to improve generalization. Performance may also be improved by adopting more sophisticated approaches, such as transfer learning with pre-trained models (ResNet or VGG). Including explainable AI techniques could enhance the interpretability of the model and make its predictions easier for doctors to understand. Furthermore, creating a lightweight version of the model might enable its deployment in mobile health applications, making the solution accessible in remote or resource-constrained environments. Before the system is integrated into actual healthcare settings, validation on larger clinical datasets can help ensure robustness.

References

[1] Bjoern H. Menze, Andras Jakab, Stefan Bauer, Jayashree Kalpathy-Cramer (2015). The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Transactions on Medical Imaging, Vol. 34, No. 10, October 2015.

[2] Vipin Y. Borole, Sunil S. Nimbhore, Dr. Seema S. Kawthekar (2015). Image Processing Techniques for Brain Tumor Detection: A Review. International Journal of Emerging Trends & Technology in Computer Science (IJETTCS), Volume 4, Issue 5(2), September - October 2015.

[3] Harshit Jain, Gaurav Maindola, Dhruv Rustagi, Bhanu Gakhar, Gunjan Chugh (2022). Brain Tumor Detection Using Image Segmentation. International Journal for Modern Trends in Science and Technology, 8(06): 147-153, 2022. ISSN: 2455-3778 online. DOI: https://doi.org/10.46501/IJMTST0806022

[4] Salha M. Alzahrani, Abdulrahman M. Qahtani (2024). Knowledge distillation in transformers with tripartite attention: Multiclass brain tumor detection in highly augmented MRIs. Journal of King Saud University - Computer and Information Sciences, www.sciencedirect.com

[5] S. M. Bhandarkar and P. Nammalwar, "Segmentation of Multispectral MR Images Using Hierarchical Self-Organizing Map," Proceedings of Computer-Based Medical Systems CBMS 2001.

[6] C. A. Parra, K. Iftekharuddin and R. Kozma, "Automated Brain Tumor Segmentation and Pattern Recognition Using ANN," Computational Intelligence Robotics and Autonomous Systems, 2003.

[7] Sudipta Roy and Samir K. Bandyopadhyay, "Detection and Quantification of Brain Tumor from MRI of Brain and it's Symmetric Analysis," International Journal of Information and Communication Technology Research, Vol. 2, No. 6, June 2012.

[8] Hossain, T.; Shishir, F.S.; Ashraf, M.; Al Nasim, M.A.; Shah, F.M. Brain tumor detection using convolutional neural network. In Proceedings of the 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology (ICASERT), Dhaka, Bangladesh, 3–5 May 2019; pp. 1–6.

[9] Ullah, F.; Salam, A.; Abrar, M.; Amin, F. Brain Tumor Segmentation Using a Patch-Based Convolutional Neural Network: A Big Data Analysis Approach. Mathematics 2023, 11, 1635.

[10] Shahajad, M., Gambhir, D. & Gandhi, R. Features extraction for classification of brain tumor MRI images using support vector machine. In 2021 11th International Conference on Cloud Computing, Data Science and Engineering (Confluence), IEEE, 2021, 767–772. https://doi.org/10.1109/Confluence51648.2021.9377111

Copyright

Copyright © 2024 Greeshma Mahesh, Yogesh K M. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET64719

Publish Date : 2024-10-21

ISSN : 2321-9653

Publisher Name : IJRASET
