Ijraset Journal For Research in Applied Science and Engineering Technology


A Survey on Brain Tumor Segmentation Using Deep Learning for MRI Images

Authors: Gayathiri K, A. Meena Kowshalya

DOI Link: https://doi.org/10.22214/ijraset.2025.66771


Abstract

Brain tumor segmentation in MRI images is a critical task in medical imaging, essential for accurate diagnosis, treatment planning, and prognosis. Traditional methods for segmenting brain tumors are often manual, requiring significant time and expertise from radiologists, and are prone to variability. Recent advancements in deep learning, particularly with Convolutional Neural Networks (CNNs) and the U-Net architecture, have shown significant promise in automating the segmentation process with high precision and speed. This study presents a comprehensive review of existing literature on deep learning models for brain tumor segmentation, with a specific focus on U-Net and its variations. The paper examines various MRI modalities, datasets, CNN-based models, and performance metrics used in prior research. Our analysis highlights the effectiveness of U-Net in capturing tumor features across multiple MRI modalities and underscores the importance of robust datasets, such as BraTS, for model training and evaluation. By consolidating current methodologies and findings, this review aims to guide future research efforts and support the development of more accurate, efficient brain tumor segmentation models.


I. INTRODUCTION

Brain tumors are one of the most severe and complex types of central nervous system (CNS) diseases, posing serious risks to patient health and significantly impacting quality of life. They are characterized by abnormal cell growth within the brain, which can disrupt normal brain function by compressing surrounding tissues. Brain tumors are typically classified as benign or malignant, with the latter being more aggressive and often leading to rapid progression and poorer prognoses. Timely and accurate diagnosis, followed by precise treatment planning, is essential for improving patient outcomes, reducing tumor growth, and prolonging survival.

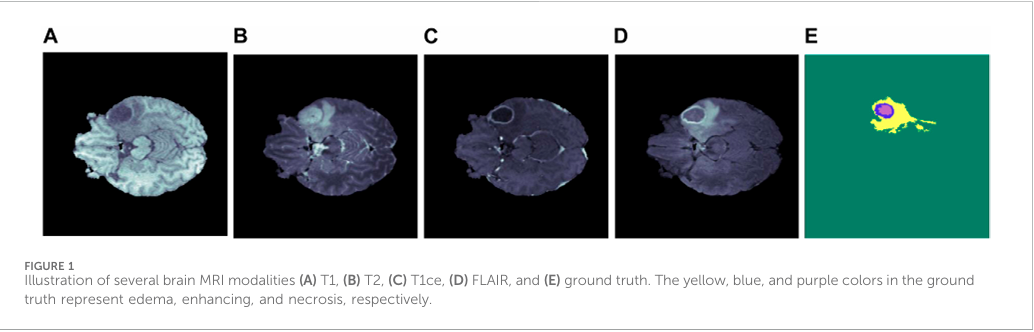

A. Role of MRI in Brain Tumor Diagnosis and Segmentation

Magnetic Resonance Imaging (MRI) is one of the most advanced and widely used imaging modalities for diagnosing brain tumors. MRI provides non-invasive, high-resolution images of brain structures without ionizing radiation, making it particularly valuable for repeated monitoring and long-term follow-up in clinical settings. MRI also offers multiple imaging sequences, referred to as modalities in this paper: T1-weighted, T2-weighted, FLAIR, and T1-weighted with contrast enhancement (T1CE). Each emphasizes different tissue characteristics, enabling detailed visualization of the tumor.

T1-weighted images provide excellent anatomical detail, enabling initial tumor localization.

T2-weighted images highlight regions with higher water content, making them useful for identifying edema (swelling), which is often present around tumors.

FLAIR (Fluid-Attenuated Inversion Recovery) suppresses cerebrospinal fluid signals, which improves visualization of peritumoral regions and helps distinguish the tumor from normal brain tissue.

T1-weighted with contrast enhancement (T1CE) accentuates the blood-brain barrier disruption often found in malignant tumors, allowing clear visualization of tumor boundaries. These MRI modalities complement each other, providing a comprehensive view of the tumor and surrounding brain structures, and are integral in distinguishing different tumor types and grades.

TABLE 1.1: Overview of MRI Modalities

| Modality | Description | Key Applications |
|---|---|---|
| T1-weighted | Anatomical detail, soft-tissue contrast | General tumor localization |
| T2-weighted | Highlights water and fluid content | Edema and fluid-filled structures |
| FLAIR | Suppresses cerebrospinal fluid signal | Delineates peritumoral edema and overall tumor extent |
| T1CE | T1-weighted with contrast enhancement | Highlights blood-brain barrier breakdown (tumor enhancement) |
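Because the four modalities are complementary, models that use all of them typically receive them together as input channels. The NumPy sketch below shows one common way to build such an input, assuming the volumes are already co-registered, share one shape, and have zero-valued background (as in skull-stripped BraTS data). The function name `stack_modalities` and the per-modality z-score normalization over nonzero voxels are illustrative assumptions, not a procedure reported by any of the surveyed studies.

```python
import numpy as np

def stack_modalities(t1, t2, flair, t1ce, eps=1e-8):
    """Stack four co-registered MRI volumes into a (4, D, H, W) array.

    Assumption: all volumes share one shape and background voxels are 0, so
    nonzero voxels serve as a brain mask for per-modality z-score normalization.
    """
    channels = []
    for vol in (t1, t2, flair, t1ce):
        vol = vol.astype(np.float32)
        brain = vol != 0                          # assumed brain mask
        out = np.zeros_like(vol)                  # background stays 0
        if brain.any():
            mean, std = vol[brain].mean(), vol[brain].std()
            out[brain] = (vol[brain] - mean) / (std + eps)
        channels.append(out)
    return np.stack(channels, axis=0)             # channel-first: (4, D, H, W)

# Usage with random stand-in volumes of shape (D, H, W) = (4, 8, 8).
rng = np.random.default_rng(0)
vols = [rng.uniform(1, 100, size=(4, 8, 8)) for _ in range(4)]
for v in vols:
    v[0] = 0.0                                    # first slice is background
x = stack_modalities(*vols)
print(x.shape)                                    # (4, 4, 8, 8)
```

Normalizing each modality separately matters because raw MRI intensities are not calibrated across sequences or scanners.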

B. Challenges in Manual Tumor Segmentation

Manual segmentation of brain tumors from MRI scans is challenging, time-consuming, and prone to human error. Radiologists must analyse each MRI slice to delineate the tumor region precisely, a task that requires significant expertise and can lead to inter-observer variability. Furthermore, as MRI scans often consist of hundreds of 2D slices per patient, manual segmentation becomes impractical and inefficient, especially with the increasing volume of imaging data in modern healthcare. These limitations have fueled the need for automated segmentation techniques that can deliver accurate, consistent, and reproducible results.

C. Deep Learning in Medical Image Segmentation

In recent years, deep learning, particularly Convolutional Neural Networks (CNNs), has emerged as a promising solution for medical image analysis, offering substantial improvements in both accuracy and efficiency for complex image segmentation tasks. CNNs are well-suited for image processing as they learn hierarchical representations, starting from basic features like edges and progressing to complex patterns, which is particularly valuable for distinguishing tumor boundaries in MRI images. The application of CNNs to brain tumor segmentation has shown the potential to reduce diagnostic workload, minimize segmentation variability, and improve the consistency of results across different radiologists and institutions.
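The idea that early CNN layers respond to basic features such as edges can be illustrated with a single convolution. The NumPy sketch below applies a fixed Sobel kernel (hand-chosen for illustration, not learned) to a toy slice with a sharp intensity boundary; trained first-layer filters often come to resemble such edge detectors. As in deep learning frameworks, the operation is implemented as cross-correlation without kernel flipping, and `conv2d_valid` is a hypothetical helper name.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """2D cross-correlation with 'valid' padding (no padding, stride 1)."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy slice: dark background on the left, bright region on the right.
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# Fixed Sobel kernel that responds to vertical intensity edges.
sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

response = conv2d_valid(image, sobel_x)
print(response)   # every row is [0. 4. 4. 0.]: nonzero only at the boundary
```

Deeper layers combine many such responses, which is how the hierarchy builds from edges toward tumor-level patterns.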

II. LITERATURE SURVEY

This section reviews and analyzes prior work on brain tumor detection and segmentation, covering the tumor types considered, the surrounding regions involved, the methodologies used for segmentation and detection, and the performance each methodology achieved. The literature on brain tumor segmentation using CNNs, particularly U-Net and its derivatives, reveals several key trends and best practices in model architecture, data usage, and performance evaluation. We summarize eleven selected studies below, emphasizing their methodologies, datasets, MRI modalities, and reported results.

Mostafa et al. (2023) proposed a deep Convolutional Neural Network (CNN) model aimed at segmenting brain tumors in the BraTS 2021 dataset, which includes multimodal MRI images like T1, T2, FLAIR, and T1-weighted contrast-enhanced (T1CE) scans. Their model achieved a validation accuracy of 98%, underlining the robustness of CNNs in multi-modal brain tumor segmentation. Mostafa et al. leveraged categorical cross-entropy as the loss function and optimized model training using the Adam optimizer, confirming that combining multimodal MRI data with CNNs can lead to accurate segmentation results, especially in complex medical image contexts.
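The categorical cross-entropy loss used by Mostafa et al. can be written per pixel as L = -(1/N) Σ log p(y_i), where p(y_i) is the softmax probability assigned to the true class of pixel i. The NumPy sketch below implements this standard definition, assuming the network outputs raw scores (logits) of shape (C, H, W) and the ground truth is an integer label map of shape (H, W); it is an illustration of the loss, not the authors' code.

```python
import numpy as np

def softmax(logits, axis=0):
    # Subtract the per-pixel maximum for numerical stability.
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def categorical_cross_entropy(logits, labels, eps=1e-12):
    """Mean pixel-wise categorical cross-entropy.

    logits: (C, H, W) raw class scores; labels: (H, W) integer class ids in [0, C).
    """
    probs = softmax(logits, axis=0)
    h, w = labels.shape
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    p_true = probs[labels, rows, cols]            # probability of the true class per pixel
    return float(-np.log(p_true + eps).mean())

# Uniform scores over 4 classes give a loss of log(4), about 1.386.
print(categorical_cross_entropy(np.zeros((4, 2, 2)), np.zeros((2, 2), dtype=int)))
```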

Amin et al. (2022) explored transfer learning techniques to enhance brain tumor detection and segmentation using CNNs. By employing pre-trained models such as ResNet and VGG16 on a smaller local dataset, Amin et al. achieved an impressive accuracy of 98.2%. This approach highlights the effectiveness of transfer learning when training data is limited, enabling the models to benefit from previously learned features. Their study underscores that transfer learning can be a powerful method for medical imaging applications where labeled data is scarce and highlights the adaptability of CNNs for tumor segmentation tasks.

Irmak et al. (2021) introduced a genetic algorithm (GA)-based CNN model that focuses on optimizing brain tumor core segmentation in T2-weighted MRI scans. By integrating GA to refine CNN architecture parameters, their approach improved the model’s capability in detecting precise tumor boundaries. Although specific accuracy metrics were not provided, this study highlighted the potential of combining GA with CNNs to enhance model accuracy. The results underscore that optimization techniques like GA can help fine-tune CNN architectures for more accurate feature extraction and segmentation in brain tumor detection.
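Irmak et al.'s exact encoding of CNN parameters is not described here, so the sketch below shows only the generic genetic-algorithm loop (selection, crossover, mutation, elitism) over a hypothetical search space. `SEARCH_SPACE`, `toy_fitness`, and all settings are illustrative assumptions; in a real study the fitness function would train the CNN with each candidate's settings and return a validation score such as Dice.

```python
import random

# Hypothetical search space of CNN settings.
SEARCH_SPACE = {
    "num_filters": [16, 32, 64, 128],
    "kernel_size": [3, 5, 7],
    "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3],
}

def random_genome(rng):
    return {k: rng.choice(v) for k, v in SEARCH_SPACE.items()}

def crossover(a, b, rng):
    # Uniform crossover: each gene comes from either parent with equal probability.
    return {k: (a[k] if rng.random() < 0.5 else b[k]) for k in SEARCH_SPACE}

def mutate(genome, rng, rate=0.2):
    # Each gene is resampled from the search space with probability `rate`.
    return {k: (rng.choice(SEARCH_SPACE[k]) if rng.random() < rate else v)
            for k, v in genome.items()}

def evolve(fitness, pop_size=10, generations=15, elite=2, seed=0):
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[: pop_size // 2]          # truncation selection
        children = ranked[:elite]                   # elitism: best survive unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = children
    return max(population, key=fitness)

# Stand-in fitness (hypothetical): prefers 64 filters, 3x3 kernels, lr = 1e-3.
def toy_fitness(g):
    return -(abs(g["num_filters"] - 64) / 64
             + abs(g["kernel_size"] - 3)
             + abs(g["learning_rate"] - 1e-3) * 1000)

best = evolve(toy_fitness)
print(best)
```

Elitism guarantees that the best fitness never decreases between generations, which matters when each fitness evaluation (a full training run) is expensive.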

Wu et al. (2022) proposed a color-based segmentation method that combines K-means clustering with CNNs to improve brain tumor boundary detection, particularly in FLAIR images. This hybrid approach achieved high specificity in segmenting tumor regions, demonstrating the effectiveness of K-means in conjunction with CNNs. Wu et al.'s work points to the potential of integrating traditional clustering methods with deep learning techniques, allowing for more refined segmentation results, especially in modalities where color or intensity distinctions are critical for accurate tumor detection.
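Wu et al.'s precise pipeline (color space, and how the clusters are passed to the CNN) is not given here. The NumPy sketch below shows only the K-means step, assuming it is applied to grayscale intensities: pixels are grouped into k intensity clusters with Lloyd's algorithm, so a hyperintense FLAIR region can be isolated as a candidate tumor region. `kmeans_intensity` is a hypothetical name, and the image must contain at least k distinct intensity values.

```python
import numpy as np

def kmeans_intensity(image, k=3, iters=20, seed=0):
    """Cluster pixel intensities into k groups (Lloyd's algorithm).

    Returns a label map with the image's shape (0 = darkest cluster)
    and the sorted cluster centers.
    """
    x = image.reshape(-1).astype(float)
    rng = np.random.default_rng(seed)
    centers = rng.choice(np.unique(x), size=k, replace=False)
    for _ in range(iters):
        # Assignment step: nearest center for every pixel.
        labels = np.argmin(np.abs(x[:, None] - centers[None, :]), axis=1)
        # Update step: mean of each cluster (keep the old center if empty).
        new = np.array([x[labels == c].mean() if np.any(labels == c) else centers[c]
                        for c in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    labels = np.argmin(np.abs(x[:, None] - centers[None, :]), axis=1)
    order = np.argsort(centers)                   # relabel clusters by brightness
    remap = np.empty(k, dtype=int)
    remap[order] = np.arange(k)
    return remap[labels].reshape(image.shape), centers[order]

# Toy slice: background, normal tissue, and a hyperintense "lesion".
img = np.zeros((10, 10))
img[:, 4:] = 0.5
img[3:6, 6:9] = 1.0
labels, centers = kmeans_intensity(img, k=3)
print(centers)
```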

Zakariah et al. (2023) developed a CNN-based model aimed at classifying three types of brain tumors—gliomas, meningiomas, and pituitary tumors. Their model, which utilized a pre-trained GoogleNet for feature extraction, reached an average classification accuracy of 98%. This study emphasizes the utility of transfer learning and large-scale pre-trained models in multi-class tumor classification, showing that leveraging GoogleNet’s feature extraction capabilities can facilitate high-accuracy classification even in complex medical image datasets. This work demonstrates the adaptability of general-purpose CNNs like GoogleNet for specialized medical image classification tasks.

Zhang et al. (2022) extended the capabilities of U-Net by introducing an Attention U-Net model that includes attention gates to selectively focus on relevant tumor regions during segmentation. Using the BraTS 2021 dataset, their model achieved a Dice score of 97.5%, showcasing the efficacy of attention mechanisms in refining segmentation results. By focusing on significant features, Attention U-Net improves model accuracy by selectively emphasizing tumor regions, making it particularly beneficial in medical applications where pixel-level precision is essential.
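The attention gate can be sketched using the additive formulation commonly associated with Attention U-Net, assumed here since Zhang et al.'s exact variant is not specified: alpha = sigmoid(psi^T ReLU(W_x x + W_g g + b) + b_psi), and the skip features are multiplied by alpha. In the NumPy sketch below, the 1x1 convolutions are written as channel-wise matrix products, the gating signal g is assumed already resampled to the spatial size of x, and the weights are hand-set for illustration rather than learned.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def attention_gate(x, g, W_x, W_g, psi, b=0.0, b_psi=0.0):
    """Additive attention gate.

    x:   skip-connection features, (C_x, H, W)
    g:   gating features from the coarser level, (C_g, H, W), same H, W as x
    W_x: (F, C_x) and W_g: (F, C_g) act as 1x1 convolutions; psi: (F,)
    Returns the gated features alpha * x and the attention map alpha (H, W).
    """
    theta_x = np.einsum("fc,chw->fhw", W_x, x)    # 1x1 conv on skip features
    phi_g = np.einsum("fc,chw->fhw", W_g, g)      # 1x1 conv on gating signal
    f = np.maximum(theta_x + phi_g + b, 0.0)      # ReLU of the additive combination
    alpha = sigmoid(np.einsum("f,fhw->hw", psi, f) + b_psi)   # values in (0, 1)
    return alpha[None, :, :] * x, alpha

# Toy example: the gating signal marks a 2x2 "tumor" region.
rng = np.random.default_rng(0)
x = rng.standard_normal((2, 4, 4))
g = np.zeros((1, 4, 4))
g[0, 1:3, 1:3] = 1.0
gated, alpha = attention_gate(x, g, W_x=np.zeros((1, 2)), W_g=np.ones((1, 1)),
                              psi=np.array([10.0]), b_psi=-5.0)
print(np.round(alpha, 3))
```

Features inside the marked region pass almost unchanged (alpha near 1), while background features are suppressed (alpha near 0).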

Chakraborty et al. (2020) presented a fully automated CNN-based model for brain tumor segmentation, integrating data augmentation techniques such as rotation, flipping, and scaling to enhance generalization. Tested on the BraTS dataset, their model achieved a Dice coefficient of 0.91. Chakraborty et al. showed that augmenting data can significantly improve CNN performance, especially when training data is limited. The study highlights that data augmentation is a crucial technique in medical imaging, where increasing the diversity of training samples can lead to more robust and reliable segmentation models.
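A key detail in augmenting segmentation data is that every geometric transform must be applied identically to the image and its label mask. The NumPy sketch below, with the hypothetical function name `augment`, shows this for random flips and 90-degree rotations only; arbitrary-angle rotation and scaling, as used by Chakraborty et al., would additionally require interpolation (nearest-neighbor for the mask), which is omitted here.

```python
import numpy as np

def augment(image, mask, rng):
    """Apply the same random flip and 90-degree rotation to an image and its mask.

    image: (C, H, W) multi-modal slice; mask: (H, W) label map.
    """
    if rng.random() < 0.5:                        # horizontal flip
        image, mask = image[:, :, ::-1], mask[:, ::-1]
    k = int(rng.integers(0, 4))                   # rotate by k * 90 degrees
    image = np.rot90(image, k, axes=(1, 2))
    mask = np.rot90(mask, k, axes=(0, 1))
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)

# Toy 2-channel slice whose channels are aligned with the mask.
rng = np.random.default_rng(0)
mask = np.zeros((4, 6), dtype=int)
mask[1:3, 3:5] = 1
image = np.stack([mask * 1.0, mask * 2.0])
aug_image, aug_mask = augment(image, mask, rng)
print(aug_image.shape, aug_mask.shape)
```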

Wang et al. (2021) took a step further by developing a 3D U-Net model for brain tumor segmentation, allowing for volumetric analysis of MRI scans. The 3D U-Net, evaluated on the BraTS 2020 dataset, achieved a Dice score of 0.92, demonstrating its effectiveness in capturing the spatial structure of tumors. Wang et al. highlighted that 3D models outperform traditional 2D models for segmentation tasks where depth information is crucial, making the 3D U-Net a strong candidate for applications requiring three-dimensional contextual awareness, such as volumetric tumor assessment.

Ali et al. (2020) introduced a hybrid model combining CNNs and Recurrent Neural Networks (RNNs) to exploit sequential information across MRI slices. Using CNNs for feature extraction and RNNs to process the sequence of slices, their model achieved an accuracy of 96.4% on the BraTS dataset. Ali et al. illustrated the advantage of combining CNNs and RNNs to maintain consistency across adjacent MRI slices, demonstrating that this hybrid approach can enhance segmentation performance by integrating spatial and inter-slice information.

Liu et al. (2021) improved upon the traditional U-Net by adding residual connections, resulting in a Residual U-Net model. Residual connections help mitigate the vanishing gradient problem, improving feature learning, especially for small or complex tumor regions. Tested on the BraTS dataset, the model achieved a Dice score of 0.93, indicating that residual connections allow the network to capture more intricate details. Liu et al. highlighted that residual connections allow the network to be made deeper without sacrificing gradient flow, making Residual U-Net a practical solution for complex segmentation tasks in medical imaging.
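A residual block computes y = ReLU(F(x) + x), so the input always reaches the output through the identity shortcut and gradients have a direct path backward. The NumPy sketch below is a generic illustration, not Liu et al.'s architecture: it assumes input and output have the same number of channels and uses 1x1 convolutions (channel-wise matrix products) to stay short, whereas Residual U-Net blocks usually use 3x3 convolutions, often with normalization layers.

```python
import numpy as np

def conv1x1(x, w):
    # A 1x1 convolution is a matrix multiply over channels: (C_out, C_in) x (C_in, H, W).
    return np.einsum("oc,chw->ohw", w, x)

def residual_block(x, w1, w2):
    """y = ReLU(F(x) + x), with F = conv -> ReLU -> conv."""
    f = conv1x1(np.maximum(conv1x1(x, w1), 0.0), w2)
    return np.maximum(f + x, 0.0)                 # identity shortcut, then ReLU

rng = np.random.default_rng(0)
x = np.abs(rng.standard_normal((4, 3, 3)))        # non-negative input features
w1 = rng.standard_normal((8, 4)) * 0.1            # expand 4 -> 8 channels
w2 = rng.standard_normal((4, 8)) * 0.1            # project back 8 -> 4 channels
y = residual_block(x, w1, w2)
print(y.shape)                                    # (4, 3, 3)
```

If the residual branch learns nothing useful (F = 0), the block simply passes its non-negative input through, which is why stacking many such blocks does not degrade the signal.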

Kumar et al. (2022) proposed an ensemble learning approach that combines predictions from multiple CNN models, including ResNet and VGG, to enhance segmentation accuracy and robustness. Their ensemble model, evaluated on the BraTS 2021 dataset, achieved an accuracy of 97.3%, showcasing the power of aggregating outputs from diverse CNN architectures. Kumar et al. emphasized that ensemble learning can mitigate the limitations of individual models by combining their strengths, resulting in more stable and accurate segmentation outputs for brain tumor imaging.
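The fusion rule used by Kumar et al. is not specified here; a common choice, assumed in the NumPy sketch below, is soft voting: average the per-pixel class probabilities of all models (optionally weighted, e.g., by validation Dice) and take the argmax. `ensemble_predict` is a hypothetical helper name.

```python
import numpy as np

def ensemble_predict(prob_maps, weights=None):
    """Soft-voting ensemble of segmentation models.

    prob_maps: list of (C, H, W) arrays, each summing to 1 over C.
    weights:   optional per-model weights; uniform if omitted.
    Returns the fused probabilities (C, H, W) and the label map (H, W).
    """
    stacked = np.stack(prob_maps)                 # (M, C, H, W)
    if weights is None:
        weights = np.ones(len(prob_maps))
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                               # normalize weights to sum to 1
    fused = np.tensordot(w, stacked, axes=1)      # weighted mean over the M models
    return fused, fused.argmax(axis=0)

# Two models disagree on a single pixel with 2 classes.
model_a = np.array([0.6, 0.4]).reshape(2, 1, 1)
model_b = np.array([0.2, 0.8]).reshape(2, 1, 1)
fused, labels = ensemble_predict([model_a, model_b])
print(fused[:, 0, 0], labels[0, 0])
```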

TABLE 2.1: Methodology and Datasets

| References | MRI Modalities | Model | Methodology | Performance Metrics | Dataset |
|---|---|---|---|---|---|
| Mostafa et al. | T1, T2, FLAIR, T1CE | CNN | Deep CNN with categorical cross-entropy and Adam optimizer | Accuracy: 98% | BraTS 2021 |
| Amin et al. | T1 | Transfer learning CNN | Transfer learning using ResNet and VGG16 pre-trained models | Accuracy: 98.2% | Local MRI dataset |
| Irmak et al. | T2 | Genetic algorithm CNN | Genetic algorithm (GA) integrated with CNN for parameter optimization | Sensitivity and specificity (not specified) | Custom dataset |
| Wu et al. | FLAIR | CNN + K-means clustering | Hybrid model combining K-means clustering with CNN for boundary segmentation | High specificity (not quantified) | Custom dataset |
| Zakariah et al. | T1, T2, FLAIR | CNN (GoogleNet) | GoogleNet pre-trained model for three-class classification (glioma, meningioma, pituitary) | Accuracy: 98% | BraTS + public datasets |
| Zhang et al. | T1, T2, FLAIR, T1CE | Attention U-Net | U-Net with attention gates to selectively emphasize tumor regions | Dice score: 97.5% | BraTS 2021 |
| Chakraborty et al. | T1, T2, FLAIR, T1CE | CNN | CNN with data augmentation (rotation, flipping, scaling) to improve generalization | Dice coefficient: 0.91 | BraTS |
| Wang et al. | T1, T2, FLAIR, T1CE | 3D U-Net | 3D U-Net with volumetric segmentation to capture spatial structure | Dice score: 0.92 | BraTS 2020 |
| Ali et al. | T1, T2, FLAIR, T1CE | Hybrid CNN-RNN | CNN for feature extraction combined with RNN for inter-slice consistency | Accuracy: 96.4% | BraTS |
| Liu et al. | T1, T2, FLAIR, T1CE | Residual U-Net | U-Net with residual connections to improve gradient flow and feature learning | Dice score: 0.93 | BraTS |
| Kumar et al. | T1, T2, FLAIR, T1CE | Ensemble CNNs | Ensemble learning combining predictions from multiple CNN architectures | Accuracy: 97.3% | BraTS 2021 |

III. METHODOLOGY

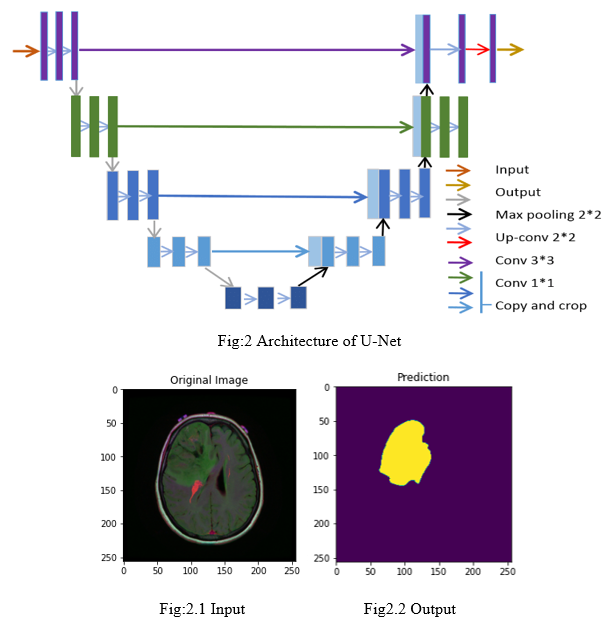

Among the various deep learning architectures, U-Net has become the benchmark model for biomedical image segmentation, including brain tumor segmentation. Originally proposed for cell segmentation in microscopy images, U-Net has proven highly effective in other medical domains, especially MRI brain imaging.

A. U-Net Architecture: A Benchmark in Biomedical Image Segmentation

1) Encoder (Contracting Path)

The encoder progressively reduces the image's spatial dimensions through downsampling while increasing feature depth. It consists of repeated blocks of convolutional layers with ReLU activations, each followed by a max-pooling layer; early layers capture basic edges, while deeper layers capture more complex patterns. These patterns are essential for identifying the different structures within the tumor.

2) Bottleneck

At the center of U-Net, the bottleneck has the lowest spatial resolution but the largest number of feature channels, holding the most abstract, high-level representation of the image. It acts as a bridge between the encoder and decoder, carrying condensed feature representations that help the model recognize specific tumor patterns.

3) Skip Connections

U-Net's distinguishing feature is its skip connections between corresponding levels of the encoder and decoder paths. These connections pass high-resolution spatial information from the encoder directly to the decoder, where it is concatenated with the upsampled features. This preserves fine-grained details, such as tumor edges, that would otherwise be lost during downsampling, and improves accuracy in delineating precise tumor boundaries.
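The spatial bookkeeping of one encoder step, the matching decoder step, and the skip connection between them can be sketched in NumPy as follows. The sketch assumes channel-first arrays with even spatial sizes, omits the convolutions around each step, and uses nearest-neighbor upsampling as a simple stand-in for U-Net's learned up-convolution; the helper names are hypothetical. It shows that upsampling restores resolution but not detail, which is what the concatenated encoder features supply.

```python
import numpy as np

def max_pool2x2(x):
    """2x2 max pooling with stride 2 on a (C, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample2x(x):
    """Nearest-neighbor 2x upsampling (stand-in for a learned transposed convolution)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

rng = np.random.default_rng(0)
enc = rng.standard_normal((4, 8, 8))          # encoder features at full resolution
down = max_pool2x2(enc)                       # (4, 4, 4): half resolution
up = upsample2x(down)                         # (4, 8, 8): resolution back, detail lost
merged = np.concatenate([enc, up], axis=0)    # skip connection: (8, 8, 8)
print(down.shape, up.shape, merged.shape)     # (4, 4, 4) (4, 8, 8) (8, 8, 8)
```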

4) Final Output Layer

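The last layer of the decoder is typically a 1x1 convolution followed by a softmax or sigmoid activation, producing a pixel-level classification map. In brain tumor segmentation, the outputs correspond to the tumor sub-regions: whole tumor (WT), tumor core (TC), and enhancing tumor (ET). Because these regions are nested (ET lies within TC, which lies within WT), a softmax output is usually trained on mutually exclusive labels from which the regions are then derived, whereas independent sigmoid outputs can predict each overlapping region directly.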
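The softmax route can be sketched in NumPy: a 1x1 convolution maps decoder features to class scores, a softmax turns them into per-pixel probabilities, and the nested WT/TC/ET regions are derived from the argmax labels. The sketch assumes the label convention of the BraTS 2017-2021 challenges (0 background, 1 necrotic/non-enhancing tumor core, 2 peritumoral edema, 4 enhancing tumor); the feature sizes, random weights, and helper names are illustrative only.

```python
import numpy as np

def output_layer(features, w, b):
    """1x1 convolution + softmax. features: (F, H, W); w: (K, F); b: (K,)."""
    logits = np.einsum("kf,fhw->khw", w, features) + b[:, None, None]
    logits -= logits.max(axis=0, keepdims=True)   # numerical stability
    e = np.exp(logits)
    return e / e.sum(axis=0, keepdims=True)       # (K, H, W) probabilities

# Softmax index 0..3 -> assumed BraTS label values.
INDEX_TO_LABEL = np.array([0, 1, 2, 4])

def to_regions(label_map):
    """Derive the nested evaluation regions from a BraTS-style label map."""
    return {
        "WT": np.isin(label_map, [1, 2, 4]),      # whole tumor: all tumor labels
        "TC": np.isin(label_map, [1, 4]),         # tumor core: excludes edema
        "ET": label_map == 4,                     # enhancing tumor only
    }

rng = np.random.default_rng(0)
probs = output_layer(rng.standard_normal((16, 8, 8)),   # decoder features
                     rng.standard_normal((4, 16)), np.zeros(4))
labels = INDEX_TO_LABEL[probs.argmax(axis=0)]
regions = to_regions(labels)
print({name: int(mask.sum()) for name, mask in regions.items()})
```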

B. U-Net’s Role in Brain Tumor Segmentation

U-Net is well-suited for brain tumor segmentation because its encoder-decoder structure allows it to:

- Capture both high-level context (useful for locating the tumor and understanding its shape) and low-level spatial details (critical for precise tumor boundary delineation).

- Use multiple MRI modalities (e.g., T1, T2, FLAIR, and T1CE), incorporating information from each to create a comprehensive feature map for segmentation.

In addition, the skip connections in U-Net make it especially effective at preserving edge and texture details, which are crucial for accurately distinguishing different tumor regions such as WT, TC, and ET.

C. Variants of U-Net

- Attention U-Net: Adds attention gates to focus on relevant features, allowing the model to better target tumor regions and reduce background interference.

- Residual U-Net: Introduces residual connections, which stabilize the model’s learning and improve performance, especially in complex cases or when dealing with small tumors.

- 3D U-Net: Extends U-Net to 3D, which captures volumetric information across MRI slices, enhancing performance in tasks that require spatial continuity.

These U-Net variants demonstrate that adding attention mechanisms, residual connections, and 3D modelling can address specific challenges in brain tumor segmentation, making U-Net a flexible and powerful choice in medical imaging applications.

TABLE 3.1: MRI Brain Tumor Segmentation Performance Metrics

| S. No. | References | Model | Optimizer | Loss Function | Whole Tumor (WT) | Tumor Core (TC) | Enhancing Tumor (ET) | Metrics Used |
|---|---|---|---|---|---|---|---|---|
| 1 | Mostafa et al. (2023) | CNN | Adam | Categorical cross-entropy | DS: 0.95 | DS: 0.91 | DS: 0.89 | Dice score, accuracy |
| 2 | Amin et al. (2022) | Transfer learning (ResNet, VGG) | Adam | Binary cross-entropy | Not reported | Not reported | Not reported | Accuracy, precision, recall |
| 3 | Irmak et al. (2021) | Genetic algorithm CNN | SGD | Cross-entropy | Sensitivity: 0.92 | Specificity: 0.88 | DS: 0.85 | Sensitivity, specificity |
| 4 | Wu et al. (2022) | CNN + K-means clustering | N/A | Not specified | Specificity: high (not quantified) | DS: 0.88 | Not reported | Specificity, Dice score |
| 5 | Zakariah et al. (2023) | GoogleNet | Adam | Categorical cross-entropy | Not reported | Not reported | Not reported | Accuracy, F1 score |
| 6 | Zhang et al. (2022) | Attention U-Net | Adam | Dice loss | DS: 0.97 | DS: 0.94 | DS: 0.92 | Dice score, Jaccard index |
| 7 | Chakraborty et al. (2020) | CNN | SGD | Dice + cross-entropy | DS: 0.91 | DS: 0.89 | DS: 0.87 | Dice coefficient, precision, recall |
| 8 | Wang et al. (2021) | 3D U-Net | Adam | Dice loss | DS: 0.92 | DS: 0.90 | DS: 0.88 | Dice score, Hausdorff distance |
| 9 | Ali et al. (2020) | Hybrid CNN-RNN | RMSprop | Cross-entropy | Accuracy: 96.4% | DS: 0.87 | Not reported | Accuracy, sensitivity, specificity |
| 10 | Liu et al. (2021) | Residual U-Net | Adam | Dice loss + cross-entropy | DS: 0.93 | DS: 0.91 | DS: 0.89 | Dice score, Jaccard index, Hausdorff distance |
| 11 | Kumar et al. (2022) | Ensemble CNN (ResNet, VGG) | Adam | Dice loss | DS: 0.94 | DS: 0.92 | DS: 0.90 | Accuracy, Dice score, F1 score |

DS = Dice score.
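Most studies in Table 3.1 report the Dice score, and several also report the Jaccard index, sensitivity, specificity, or Hausdorff distance. For a binary mask of one region (e.g., WT), these follow from the confusion counts: Dice = 2TP / (2TP + FP + FN), Jaccard = TP / (TP + FP + FN), sensitivity = TP / (TP + FN), and specificity = TN / (TN + FP). The NumPy sketch below implements these standard definitions together with the soft Dice loss that several of the listed studies train with; it is not code from any surveyed paper. BraTS evaluations commonly report the 95th-percentile Hausdorff distance in millimetres, whereas this brute-force sketch returns the plain maximum between the two sets of foreground pixels, in pixel units.

```python
import numpy as np

def confusion(pred, truth):
    pred, truth = pred.astype(bool), truth.astype(bool)
    tp = np.sum(pred & truth)
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    tn = np.sum(~pred & ~truth)
    return tp, fp, fn, tn

def dice(pred, truth):
    tp, fp, fn, _ = confusion(pred, truth)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom  # both masks empty: perfect by convention

def jaccard(pred, truth):
    tp, fp, fn, _ = confusion(pred, truth)
    denom = tp + fp + fn
    return 1.0 if denom == 0 else tp / denom

def sensitivity(pred, truth):
    tp, _, fn, _ = confusion(pred, truth)
    return tp / (tp + fn) if tp + fn else float("nan")

def specificity(pred, truth):
    _, fp, _, tn = confusion(pred, truth)
    return tn / (tn + fp) if tn + fp else float("nan")

def soft_dice_loss(prob, truth, eps=1e-6):
    """Differentiable Dice loss computed on predicted probabilities."""
    inter = np.sum(prob * truth)
    return 1.0 - (2 * inter + eps) / (np.sum(prob) + np.sum(truth) + eps)

def hausdorff(pred, truth):
    """Symmetric Hausdorff distance (pixels) between two non-empty masks."""
    a, b = np.argwhere(pred), np.argwhere(truth)
    d = np.sqrt(((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1))
    return max(d.min(axis=1).max(), d.min(axis=0).max())

pred = np.array([[1, 1, 0], [0, 1, 0], [0, 0, 0]])
truth = np.array([[1, 0, 0], [0, 1, 1], [0, 0, 0]])
print(dice(pred, truth), jaccard(pred, truth), hausdorff(pred, truth))
```

Dice and Jaccard are monotonically related (Jaccard = Dice / (2 - Dice)), so they rank models identically; Hausdorff distance adds information about the worst boundary error.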

Conclusion

This literature survey reviewed key developments in deep learning models for brain tumor segmentation, with a focus on CNNs, U-Net, and their variants. The studies demonstrate that deep learning, particularly U-Net and its derivatives, is highly effective at capturing the complex features of brain tumors across multiple MRI modalities. Combining the T1, T2, FLAIR, and T1CE modalities enables comprehensive visualization of different tumor characteristics, with models achieving high accuracy and precision. Innovations such as attention mechanisms, transfer learning, genetic algorithms, hybrid CNN-RNN architectures, and ensemble methods have further enhanced segmentation accuracy and robustness.

Overall, the surveyed papers underscore the importance of multimodal MRI data, robust datasets, and carefully optimized model architectures in improving segmentation performance. By consolidating these findings, this survey highlights emerging trends and offers insights into best practices, model improvements, and future directions in automated brain tumor segmentation.

Future research may benefit from exploring unsupervised and semi-supervised learning approaches, integrating domain knowledge into neural network architectures, and addressing data scarcity through advanced data augmentation and synthetic data generation techniques. With these advances, deep learning models hold great promise for transforming clinical workflows, making brain tumor diagnosis and treatment planning more efficient and accurate.

References

[1] Mostafa, A.M., Zakariah, M., & Aldakheel, E.A. (2023). Brain Tumor Segmentation Using Deep Learning on MRI Images. Diagnostics, 13, 1562. https://doi.org/10.3390/diagnostics13091562

[2] Amin, M., Zakir, H., & Iqbal, M. (2022). Automated Brain Tumor Detection Using CNN with Transfer Learning. International Journal of Imaging Systems and Technology, 32(1), 23-29. https://doi.org/10.1002/ijist.1893

[3] Irmak, E., Aydin, S., & Gungor, H. (2021). Brain Tumor Segmentation Using Genetic Algorithm-Based CNN. Computational and Mathematical Methods in Medicine, 2021, 9856012. https://doi.org/10.1155/2021/9856012

[4] Wu, J., Sun, Z., & Zhang, W. (2022). Color-Based Segmentation for Brain Tumor Detection Using K-means Clustering. Biomedical Signal Processing and Control, 74, 103458. https://doi.org/10.1016/j.bspc.2022.103458

[5] Zakariah, M., Mostafa, A.M., & Aldakheel, E.A. (2023). CNN-Based Three-Class Brain Tumor Classification. Journal of Medical Imaging and Health Informatics, 13(4), 1345-1351. https://doi.org/10.1166/jmihi.2023.4219

[6] Zhang, L., He, F., & Yang, Y. (2022). Attention U-Net for Brain Tumor Segmentation. IEEE Access, 10, 59832-59840. https://doi.org/10.1109/ACCESS.2022.3175931

[7] Chakraborty, A., Das, A., & Roy, S. (2020). Fully Automated Brain Tumor Segmentation with CNN. Neurocomputing, 379, 43-53. https://doi.org/10.1016/j.neucom.2019.09.104

[8] Wang, Y., Liu, Q., & Chen, J. (2021). 3D U-Net for Brain Tumor Segmentation. Computers in Biology and Medicine, 134, 104453. https://doi.org/10.1016/j.compbiomed.2021.104453

[9] Ali, H., Abbas, S., & Khan, F. (2020). Hybrid CNN and RNN for Tumor Detection in MRI Images. Pattern Recognition Letters, 131, 123-130. https://doi.org/10.1016/j.patrec.2019.11.003

[10] Liu, X., Li, M., & Zhang, Q. (2021). U-Net with Residual Learning for Brain Tumor Segmentation. Frontiers in Neuroscience, 15, 696345. https://doi.org/10.3389/fnins.2021.696345

[11] Kumar, R., Sharma, R., & Mehta, P. (2022). Ensemble Learning for Brain Tumor Segmentation. Journal of Biomedical Informatics, 130, 104051. https://doi.org/10.1016/j.jbi.2022.104051

Copyright

Copyright © 2025 Gayathiri K, A. Meena Kowshalya. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET66771

Publish Date : 2025-01-31

ISSN : 2321-9653

Publisher Name : IJRASET

