Ijraset Journal For Research in Applied Science and Engineering Technology

Brain Tumor Segmentation Using U-Net Model

Authors: M Hemanth Kumar , Madhusmita Priyadarshini , Saurabh Sarang Bhide , Saakshi Singh , Prof. Sumathi S

DOI Link: https://doi.org/10.22214/ijraset.2024.61857

Abstract

Cancer of the brain presents a significant challenge due to its lethality, necessitating precise segmentation ahead of surgery. This study explores the application of U-Net, a Convolutional Neural Network (CNN) architecture, to segment brain tumors. Specifically, the segmentation involves distinguishing necrotic, edematous, enhancing, and healthy tissues within the brain. Analyzing overlaps between these diverse tissue types can be intricate, making the extraction of relevant information from images challenging. The study enhances the 2D U-Net network, leveraging the BraTS datasets for training. U-Net's flexibility in setting up various encoder and decoder routes proves invaluable for extracting pertinent information from images and makes it adaptable to diverse applications. To improve computational efficiency, the images are sliced to exclude extraneous background details. Experimental results on the BraTS datasets demonstrate the effectiveness of the proposed model in accurately segmenting brain tumors from MRI scans. Furthermore, the study finds that the BraTS datasets from 2017, 2018, 2019, and 2020 yield comparable performance, with Dice scores of 0.8717 for necrotic regions, 0.9506 for edematous areas, and 0.9427 for enhancing regions on the BraTS 2019 dataset.

I. INTRODUCTION

In recent decades, medical imaging has been transformed by the integration of machine learning and deep learning algorithms for tumour segmentation, detection, and prognosis. This integration helps doctors diagnose brain cancers early and improves prognostic accuracy.

Gliomas are primarily adult brain tumours that are thought to originate in glial cells and invade surrounding tissues. The glioma category is commonly divided into high-grade glioma (HGG) and low-grade glioma (LGG).

To obtain quantitative data, radiologists have traditionally examined magnetic resonance imaging (MRI) modalities manually. However, segmenting 3D modalities by hand is time-consuming and prone to variance and error, particularly when tumours display a range of sizes, shapes, and positions.

Accurately distinguishing brain tumours from other diseases is crucial for effective diagnosis, treatment planning, monitoring, and clinical intervention.

Accurately identifying the location and size of the tumour is crucial because tumours appear in a variety of places and in a range of sizes and shapes. Furthermore, a tumour's intensity may overlap with that of nearby healthy brain tissue, which frequently results in poor contrast.

It can be difficult to distinguish a tumour from healthy tissue. For example, T1c displays a bright tumour edge, whereas T2w displays a bright tumour area. The FLAIR scan, on the other hand, helps distinguish oedema from cerebrospinal fluid (CSF). Addressing this problem effectively requires combining data from several MRI modalities, including Fluid-Attenuated Inversion Recovery (FLAIR), T2-weighted MRI (T2), T1-weighted MRI with contrast (T1c), and T1-weighted MRI (T1).

Expert efficiency has increased with the use of automated and semi-automated segmentation approaches. Nevertheless, automated segmentation of a brain tumour and its sub-regions is still a difficult task, since tumorous cells within brain tissues are unpredictable and vary in size, appearance, and shape. Notwithstanding these difficulties, accurate and fast segmentation greatly aids the safe treatment of tumours, especially during surgical procedures, without endangering healthy brain regions. Various convolutional neural network (CNN) architectures have been employed and are currently in use for automatic segmentation of 3D MRI images. Previously, this task was hampered by the scarcity of medical image data and the substantial processing power required. Initiatives like Brain Tumor Segmentation (BraTS) have eased the process by providing annotated 3D MRI images. In this study, we propose our 2D U-Net model and undertake a comparative analysis of various CNN models. The objective is to help physicians work more effectively by starting from an initial segmentation that an automated system provides. We compare metrics on the BraTS 2017-2020 datasets between our proposed approach, alternative CNN algorithms, and conventional machine learning models.

Artificial Intelligence (AI) is being used extensively in many different areas, such as single-pixel imaging with generative adversarial networks (SPI-GAN), COVID-19 research, fine-grained image generation, nonuniform compressed sensing, and more. This adaptability means that AI can be applied to a wide range of problems across many fields.

II. LITERATURE SURVEY

1. Authors: Olaf Ronneberger, Philipp Fischer, Thomas Brox Paper title: U-Net: Convolutional Networks for Biomedical Image Segmentation.

Description: The U-Net architecture is proposed for efficient and accurate segmentation of biomedical images, with a particular focus on tasks like identifying and delineating structures in medical images, such as tumors. The key characteristics of the U-Net architecture include a contracting path to capture context and a symmetric expansive path for precise localization. The network design incorporates skip connections between the contracting and expanding paths, allowing the model to retain high-resolution features during the upsampling process.

The U-Net architecture is structured like a "U," with a contracting encoder path and an expansive decoder path. The contracting path involves convolutional and pooling layers to capture context, while the expansive path utilizes upsampling layers to localize features. Skip connections facilitate the transfer of detailed information from the contracting path to the expanding path, aiding in accurate segmentation.

This architecture has proven effective for biomedical image segmentation tasks, including the segmentation of brain tumors. The U-Net's ability to capture both global context and local details makes it a valuable tool for applications where precise delineation of structures in medical images is crucial. The paper provides experimental results and demonstrates the efficacy of U-Net in various biomedical image segmentation challenges.

2. Authors: Konstantinos Kamnitsas, Christian Ledig, Virginia F.J. Newcombe, Joanna P. Simpson, Andrew D. Kane Paper title: Efficient Multi-Scale 3D CNN with Fully Connected CRF for Accurate Brain Lesion Segmentation.

Description: The DeepMedic model is proposed for precise segmentation of brain tumors using Convolutional Neural Networks (CNNs). It is particularly tailored to handle 3D medical imaging data. The key contributions of DeepMedic include a dual-pathway architecture that combines both local and global context information, enabling the model to capture detailed structures and overall spatial relationships.

The dual-pathway architecture of DeepMedic consists of two parallel pathways that process the input at different scales: one operates on the image at normal resolution to capture fine-grained detail, and the other operates on a downsampled version to capture wider context. The outputs from both pathways are combined to provide a comprehensive understanding of the input image, enhancing the segmentation accuracy.

The model is trained and evaluated on the MICCAI Brain Tumor Segmentation Challenge (BraTS) dataset, showcasing its effectiveness in accurately segmenting different types of brain tumors. DeepMedic's architecture, leveraging the advantages of multi-scale information, demonstrates competitive performance in comparison to other state-of-the-art methods.

Overall, DeepMedic presents an innovative CNN architecture designed for 3D brain tumor segmentation, with a focus on balancing local and global context information to achieve accurate and robust results in medical image analysis.

3. Authors: Bakas, S., Akbari, H., Sotiras, A., Bilello, M., Rozycki, M., Kirby, J.S., Freymann, J.B., Farahani, K., Davatzikos, C

Paper title: Advancing The Cancer Genome Atlas glioma MRI collections with expert segmentation labels and radiomic features.

Description: The paper titled "Advancing The Cancer Genome Atlas glioma MRI collections with expert segmentation labels and radiomic features" significantly contributes to the augmentation of the Cancer Genome Atlas (TCGA) glioma MRI datasets. Its principal focus lies in the introduction of expert segmentation labels and the incorporation of radiomic features to elevate the overall quality and richness of available data. This augmentation aims to propel research within the realm of brain tumor segmentation, particularly in initiatives like the Brain Tumor Segmentation (BraTS) challenge.

By providing meticulous and precise expert segmentation labels, the paper addresses the crucial need for more accurate annotations in glioma MRI datasets. This meticulous annotation serves to refine existing datasets, offering a higher level of granularity that is essential for the development and evaluation of advanced brain tumor segmentation algorithms. Additionally, the integration of radiomic features introduces a quantitative dimension to the analysis of medical images, surpassing traditional visual assessments and providing a more comprehensive set of information regarding the characteristics of gliomas.

The impact of this contribution extends beyond mere dataset refinement. The enriched datasets, now equipped with expert annotations and radiomic features, are poised to catalyze and stimulate further advancements in the field of brain tumor segmentation.

Researchers and practitioners, particularly those engaged in algorithm development, now have access to more sophisticated and informative datasets that can significantly enhance the robustness and accuracy of segmentation methodologies. This, in turn, has the potential to foster innovations in diagnostic and therapeutic approaches for glioma patients.

In conclusion, the paper plays a pivotal role in advancing the landscape of glioma MRI datasets by introducing meticulous annotations and quantitative radiomic features. The resulting enriched datasets are poised to serve as valuable resources for researchers, ultimately driving advancements in brain tumor segmentation and contributing to improved diagnostic and therapeutic strategies for individuals affected by gliomas.

4. Authors: Havaei, M., Davy, A., Warde-Farley, D., Biard, A., Courville, A., Bengio, Y., ... & Pal, C

Paper title: Brain Tumor Segmentation with Deep Neural Networks

Description: This paper focuses on the application of deep neural networks for brain tumor segmentation. The authors propose a trainable convolutional neural network (CNN) architecture designed to automatically segment brain tumors from multi-modal magnetic resonance imaging (MRI) scans. The model works on 2D patches and uses a two-pathway design so that it captures both local detail and larger contextual information.

The study evaluates the proposed deep neural network on the MICCAI 2013 Brain Tumor Segmentation Challenge dataset (BRATS), showcasing competitive performance compared to traditional methods. The CNN-based approach demonstrates the potential for accurate and automated brain tumor segmentation, offering insights into the effectiveness of deep learning techniques in medical image analysis.

5. Authors: Menze, B., Jakab, A., Bauer, S., Kalpathy-Cramer, J., Farahani, K., Kirby, J., Burren, Y., Porz, N., Slotboom, J., Wiest, R., Lanczi, L., Gerstner, E., Weber, M., Arbel

Paper title: The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS)

Description: The paper introduces the Multimodal Brain Tumor Image Segmentation Benchmark (BRATS), which serves as a comprehensive evaluation platform for assessing algorithms in the field of brain tumor image segmentation. The initiative aims to address the challenges associated with accurately segmenting brain tumors by providing a standardized dataset and evaluation framework.

The BRATS dataset includes multimodal magnetic resonance imaging (MRI) scans, and the paper emphasizes the importance of considering multiple imaging modalities for a holistic understanding of brain tumors. The benchmark encourages researchers to develop and test segmentation algorithms capable of handling the complexity and heterogeneity of brain tumor images.

By providing a common ground for evaluation, BRATS facilitates the comparison of different segmentation approaches and encourages the development of robust and accurate methods. The collaborative effort involves a large number of researchers and institutions, fostering advancements in the field of brain tumor image analysis and segmentation.

III. EXISTING METHODOLOGIES

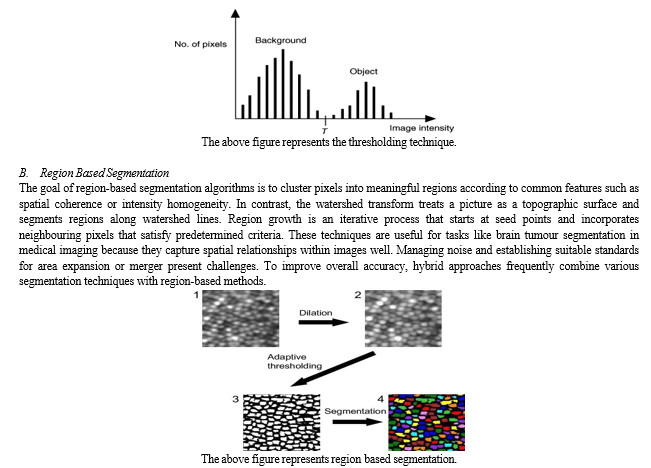

A. Thresholding techniques

Thresholding is a family of image segmentation techniques that divide an image into objects or regions according to pixel intensity. The basic idea is to specify a threshold value and then classify pixels into distinct regions based on whether their intensity values are above or below this threshold.

There are various kinds of thresholding techniques, including basic (global) thresholding, which employs a constant threshold for the entire image, and adaptive thresholding, which adjusts the threshold locally depending on the content of the image. These methods are straightforward and computationally efficient, although they may not work well when the image's intensity varies widely or when the intensities of the background and objects overlap. Thresholding is frequently used as a first step in image processing and segmentation pipelines.
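The difference between the two variants can be illustrated with a minimal NumPy sketch. The function names, the 3x3 window, and the toy 4x4 image are assumptions made for illustration; they are not taken from the paper, and the adaptive variant shown here simply compares each pixel with its local mean (an odd window size is assumed).

```python
import numpy as np

def global_threshold(image, threshold):
    """Label pixels brighter than a single fixed threshold as foreground."""
    return image > threshold

def adaptive_threshold(image, window=3, offset=0.0):
    """Compare each pixel with the mean of its local window (edge-padded)."""
    pad = window // 2
    padded = np.pad(image.astype(float), pad, mode="edge")
    local_mean = np.zeros(image.shape, dtype=float)
    # Sum the shifted copies of the image that make up each window.
    for dy in range(window):
        for dx in range(window):
            local_mean += padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    local_mean /= window * window
    return image > local_mean + offset

# Toy 4x4 "scan": a bright 2x2 blob on a dark background (illustrative values).
toy = np.array([
    [10, 10, 10, 10],
    [10, 200, 210, 10],
    [10, 205, 220, 10],
    [10, 10, 10, 10],
])
global_mask = global_threshold(toy, threshold=100)
adaptive_mask = adaptive_threshold(toy, window=3)
```

On this toy image both masks select the same four bright pixels; the two approaches diverge once the background brightness drifts across the image, which is the case adaptive thresholding is meant to handle.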

C. Clustering Techniques

In image segmentation, clustering techniques group pixels with similar properties, usually according to their intensity values. K-Means clustering and Fuzzy C-Means clustering are two popular techniques. K-Means is an unsupervised method that divides pixels into clusters with the goal of minimising the sum of squared differences within each cluster. Fuzzy C-Means extends K-Means with fuzzy memberships, permitting pixels to belong partially to several clusters according to intensity similarity. These techniques are flexible, but the choice of fuzziness parameter or initial cluster centroids can affect how well they perform. Because they are easy to use and effective at identifying inherent patterns in image data, clustering techniques are frequently employed in a variety of image segmentation applications, such as brain tumour analysis in medical imaging.
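As a rough illustration of the K-Means step described above, the following NumPy sketch clusters pixels purely by intensity. The function name, the evenly spaced centroid initialisation, and the toy image are assumptions for this example rather than details from the paper.

```python
import numpy as np

def kmeans_intensity(image, k=2, iterations=20):
    """Cluster pixel intensities with plain K-Means; returns labels and centroids."""
    pixels = image.reshape(-1).astype(float)
    # Deterministic initialisation: spread centroids evenly over the intensity range.
    centroids = np.linspace(pixels.min(), pixels.max(), k)
    for _ in range(iterations):
        # Assignment step: nearest centroid for every pixel.
        labels = np.argmin(np.abs(pixels[:, None] - centroids[None, :]), axis=1)
        # Update step: move each centroid to the mean of its members.
        new_centroids = centroids.copy()
        for c in range(k):
            members = pixels[labels == c]
            if members.size:
                new_centroids[c] = members.mean()
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    # Final assignment so that the labels match the returned centroids.
    labels = np.argmin(np.abs(pixels[:, None] - centroids[None, :]), axis=1)
    return labels.reshape(image.shape), centroids

# Toy image: a bright 2x2 blob on a dark background (illustrative values).
toy = np.array([
    [10, 10, 10, 10],
    [10, 200, 210, 10],
    [10, 205, 220, 10],
    [10, 10, 10, 10],
])
labels, centroids = kmeans_intensity(toy, k=2)
```

Fuzzy C-Means would replace the hard `argmin` assignment with a membership weight per cluster; that variant is not shown here.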

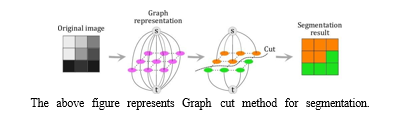

D. Graph Cut Methods

Graph cut methods are a set of image segmentation methods that treat segmentation as an optimisation problem on a graph. The main idea is to build a graph that represents the image and to partition it so that a chosen criterion is optimised. In the standard formulation, nodes are assigned to pixels and linked by edges weighted by the dissimilarity between pixels. The segmented regions correspond to the best cut through the graph.

Graph cut techniques are a popular tool for medical image segmentation, including the analysis of brain tumours, as they offer an efficient means of capturing global information. Suitable methods for graph construction and optimisation must be chosen with care, and they may require substantial computational resources.

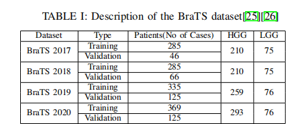

IV. DATASET INFORMATION

In the realm of medical image analysis, the BRATS (Multimodal Brain Tumour Segmentation) dataset is a commonly used benchmark dataset, particularly for brain tumour segmentation. With multimodal magnetic resonance imaging (MRI) data, BRATS aims to promote the development and assessment of algorithms for the automatic segmentation of brain tumours.

A. Key features of the BRATS Dataset Include

Multimodal Imaging: The dataset offers T1-weighted, T1-weighted with contrast enhancement, T2-weighted, and FLAIR (Fluid Attenuated Inversion Recovery) MRI scans.

Types of Tumours: BRATS contains images of glioblastomas (GBM), low-grade gliomas (LGG), and other abnormalities associated with brain tumours.

Training and Testing Sets: The dataset is usually split into training and testing sets. Segmentation algorithms are trained on the training set, and their performance on new, unseen data is assessed on the testing set.

Manual Annotations: To provide ground truth segmentation masks for tumour regions, each MRI scan in the dataset has a manual annotation attached to it. These annotations are crucial for segmentation algorithm training and accuracy assessment.

Challenge and Evaluation: Researchers and developers have been invited to submit their brain tumour segmentation algorithms for annual challenges linked to the BRATS dataset. Metrics like the Dice coefficient, sensitivity, specificity, and others form the basis of the assessment.

Versions and Updates: The dataset has undergone a number of versions and updates, with each new release bringing fixes for issues and adding new features.

The BRATS dataset is used by practitioners and researchers to evaluate and compare the capabilities of different brain tumour segmentation algorithms. It has significantly advanced the creation of reliable and precise segmentation methods for use in medical settings. For the most recent details on the dataset and any updates, it is worth consulting the official BRATS website or related publications.

V. METHODOLOGY

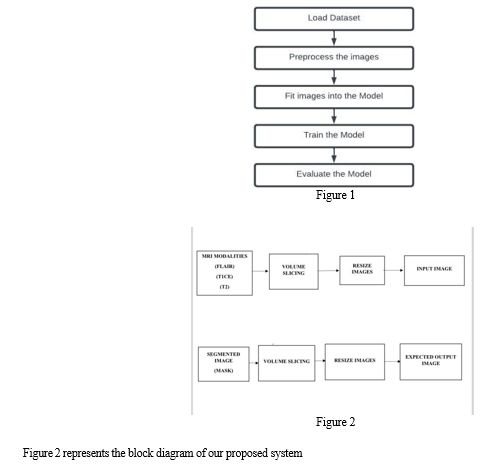

We applied brain tumour segmentation to several datasets (BraTS 2017, 2018, 2019, and 2020) using the 2D U-Net convolutional neural network architecture. Preprocessing was tailored to each dataset and was designed to improve the quality of the data. In this experiment, we used a data generator class to keep memory utilisation under control. The dataset was then divided into training, testing, and validation sets to prepare it for input into the U-Net model. Finally, the trained model was evaluated and its performance measures were checked.

The workflow of the model is depicted in Figure 1.
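The memory-saving idea behind a data generator can be sketched as follows: cases are loaded one batch at a time instead of all at once. This is a plain Python generator, not the authors' class (which is likely a Keras-style generator); `batch_generator`, `fake_load_case`, the 128x128 slice size, and the two-channel input are all hypothetical stand-ins.

```python
import numpy as np

def batch_generator(case_ids, load_case, batch_size=2):
    """Yield (images, masks) batches, loading cases lazily to bound memory use."""
    for start in range(0, len(case_ids), batch_size):
        batch_ids = case_ids[start:start + batch_size]
        pairs = [load_case(case_id) for case_id in batch_ids]
        images = np.stack([image for image, _ in pairs])
        masks = np.stack([mask for _, mask in pairs])
        yield images, masks

def fake_load_case(case_id):
    """Hypothetical loader standing in for reading one 2-channel slice and its mask from disk."""
    rng = np.random.default_rng(case_id)
    image = rng.random((128, 128, 2), dtype=np.float32)
    mask = rng.integers(0, 4, size=(128, 128))
    return image, mask

# Five cases in batches of two: the last batch holds the single leftover case.
batches = list(batch_generator([0, 1, 2, 3, 4], fake_load_case, batch_size=2))
```

Only one batch is held in memory at a time when the generator is consumed lazily; the `list(...)` call above materialises all batches purely to make the example easy to inspect.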

A. Algorithms Used

Algorithm 1: U-Net approach with pre-processing

    for each image in dataset do
        Resize the image to dimensions x*y*z
        Slice the image to remove blank portions
        Normalize the input image
    end for
    Train U-Net for x iterations
    Evaluate the model

Algorithm 2: Evaluation

    loaddataset()
    DataGenerator()
    train_test_split()
    loadModel()
    for each epoch in epochNumber do
        loss = categorical_crossentropy(y_actual, y_predict)
        evaluation()
    end for
    return()
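The loss named in Algorithm 2, categorical cross-entropy, can be written out in a few lines of NumPy. This is the standard definition rather than the framework implementation used in the experiments; the clipping constant `eps` and the example arrays are assumptions for illustration.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean cross-entropy between one-hot targets and predicted class probabilities."""
    # Clip to avoid log(0) when a predicted probability is exactly zero.
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    per_pixel = -np.sum(y_true * np.log(y_pred), axis=-1)
    return float(per_pixel.mean())

# Two pixels, four classes (background plus three tumour sub-regions).
y_true = np.array([[1, 0, 0, 0], [0, 0, 1, 0]], dtype=float)
confident = np.array([[0.97, 0.01, 0.01, 0.01], [0.01, 0.01, 0.97, 0.01]])
uniform = np.full((2, 4), 0.25)

loss_confident = categorical_crossentropy(y_true, confident)
loss_uniform = categorical_crossentropy(y_true, uniform)
```

A prediction that spreads probability evenly over four classes gives a loss of ln 4, while a confident correct prediction gives a loss close to zero, which is the behaviour the training loop relies on.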

B.  Network architecture:

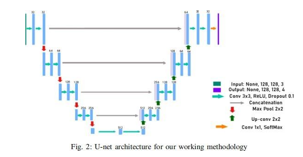

Figure 2 depicts the 2D U-Net network design and its main constituent parts. The contracting path is shown on the left side of Figure 2, while the expansive path is shown on the right. The contracting path has the form of a conventional convolutional network: each stage applies two 3x3 convolutions, each followed by a rectified linear unit (ReLU) activation. A 2x2 max pooling operation performs the downsampling, and the number of feature channels is doubled at each downsampling stage.

In contrast, the expansive path upsamples the feature map using 2x2 transposed convolutional layers, each of which halves the number of feature channels. The result is concatenated with the corresponding feature map from the contracting path, followed by two 3x3 convolutions with ReLU activation. A 1x1 convolution is used in the last layer to map each feature vector to the required number of classes.

This dual-path architecture is especially well-suited for image segmentation tasks because it efficiently combines contracting and expanding paths to capture both global and local information. The model is able to segment brain tumours in the study setting with accuracy because of the precise arrangement of transposed convolutional layers in the expansive path and convolutional and pooling layers in the contracting path.
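To make the doubling and halving of feature channels concrete, the sketch below traces the spatial size and channel count through a U-Net of this shape. The 128x128 input, 32 base filters, depth of 4, four output classes, and "same"-padded convolutions (so that only pooling and upsampling change the spatial size) are assumptions for illustration and are not stated in the paper.

```python
def unet_shapes(input_size=128, base_filters=32, depth=4, num_classes=4):
    """Trace (stage, spatial size, channels) through a U-Net with 'same'-padded convolutions."""
    shapes = []
    size, filters = input_size, base_filters
    # Contracting path: two 3x3 convs per level, then 2x2 max pooling halves the size
    # and the next level uses twice as many channels.
    for level in range(depth):
        shapes.append((f"enc{level + 1}", size, filters))
        size //= 2
        filters *= 2
    shapes.append(("bottleneck", size, filters))
    # Expansive path: a 2x2 transposed conv doubles the size and halves the channels;
    # the skip connection is concatenated before two further 3x3 convs.
    for level in range(depth, 0, -1):
        size *= 2
        filters //= 2
        shapes.append((f"dec{level}", size, filters))
    # Final 1x1 convolution maps each feature vector to per-class scores.
    shapes.append(("output", size, num_classes))
    return shapes

for stage, size, channels in unet_shapes():
    print(f"{stage:<10} {size}x{size}x{channels}")
```

Under these assumptions each decoder stage ends with the same spatial size and channel count as the encoder stage it is paired with, which is what allows the skip connections to be concatenated.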

C. Data pre-processing

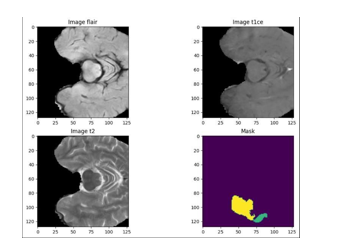

The datasets cover four different MRI modalities, and training the CNN model on all of them requires a lot of computing power. Since FLAIR and T1ce contain the most important information in the dataset, they are chosen as the 2D U-Net's inputs. Every MRI modality contains surplus black background that is not needed for training but adds to the computational load. To address this, the volumes are sliced (100 slices for the 2D U-Net) in order to reduce background and focus on the visually informative areas.

Both the input and output images are reshaped to the dimensions the U-Net model expects. Additionally, the images are normalised using MinMaxScaler to improve computing performance and to account for the 16-bit intensity of each channel. One-hot encoding is applied to the segmentation masks before further processing. After these preparation stages, the dataset is divided into training, validation, and test subsets, which make up 68%, 20%, and 12% of the whole dataset, respectively. This partitioning supports robust model training and evaluation by giving each subset a representative share of the data.
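The three preparation steps (min-max normalisation, one-hot encoding, and the 68/20/12 split) can be sketched with NumPy alone. This is not the authors' pipeline: the paper uses scikit-learn's MinMaxScaler, whereas `min_max_normalise` below rescales a whole array at once, and the function names, the seed, and the assumption that mask labels are already contiguous integers 0..3 are all hypothetical.

```python
import numpy as np

def min_max_normalise(volume):
    """Rescale intensities to [0, 1]; constant volumes map to zeros."""
    volume = volume.astype(np.float32)
    low, high = volume.min(), volume.max()
    if high == low:
        return np.zeros_like(volume)
    return (volume - low) / (high - low)

def one_hot(mask, num_classes):
    """Turn an integer label mask of shape (...) into one-hot shape (..., num_classes)."""
    return np.eye(num_classes, dtype=np.float32)[mask]

def split_cases(case_ids, train=0.68, val=0.20, seed=0):
    """Shuffle case IDs and cut them into train/validation/test lists."""
    ids = np.random.default_rng(seed).permutation(case_ids)
    n_train = int(round(train * len(ids)))
    n_val = int(round(val * len(ids)))
    return (list(ids[:n_train]),
            list(ids[n_train:n_train + n_val]),
            list(ids[n_train + n_val:]))

# 16-bit intensities are squeezed into [0, 1].
scaled = min_max_normalise(np.array([0, 32768, 65535], dtype=np.uint16))
# A 2x2 mask with labels 0..3 becomes a 2x2x4 one-hot array.
encoded = one_hot(np.array([[0, 3], [1, 2]]), num_classes=4)
# 100 hypothetical case IDs split 68/20/12.
train_ids, val_ids, test_ids = split_cases(np.arange(100))
```

Splitting by case ID rather than by slice keeps all slices of one patient in the same subset, which is a common precaution against leakage; whether the paper split by case or by slice is not stated.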

D. Experimental setup:

TensorFlow and Keras are used to build the model. The experiments are conducted using TensorFlow version 2.6.4 and Python version 3.7.12 in Kaggle and Google Colaboratory.

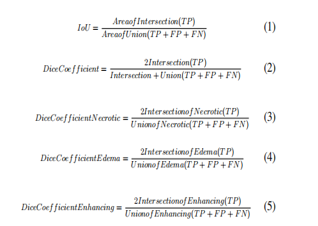

E. Evaluation Metrics:

A variety of evaluation metrics are used to gauge how well the models perform, with particular focus on the Dice coefficient, which is commonly used in medical image segmentation. In this experiment the Dice coefficient is computed for each class, namely necrotic (Eq. 3), oedema (Eq. 4), and enhancing (Eq. 5), as well as for the predicted segmentation as a whole. Additionally, accuracy, mean IoU, precision, sensitivity, and specificity are used to assess the overall performance and generality of the model. Higher scores across these metrics indicate better segmentation performance.

In the above equations, True Positive (TP) denotes tumour regions that are correctly identified, that is, where the actual and predicted tumour regions coincide. True Negative (TN) denotes non-tumour regions that are correctly identified, where the actual and predicted non-tumour areas coincide. False Positive (FP) refers to areas predicted as tumour that are not actually tumour, whereas False Negative (FN) refers to actual tumour areas that are predicted as non-tumour. Together, these quantities provide a sound basis for assessing the model's discriminatory power and accuracy, and for evaluating segmentation quality thoroughly.
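The per-class metrics can be computed from the four counts as in the sketch below. It uses the standard textbook definitions (Dice = 2TP / (2TP + FP + FN), IoU = TP / (TP + FP + FN), and so on) on hard label maps; the paper's own Eq. 3 to Eq. 5 are not reproduced in the text, so this is not guaranteed to match them exactly, and the label IDs and toy arrays are illustrative assumptions.

```python
import numpy as np

def confusion_counts(y_true, y_pred, class_id):
    """Count TP, TN, FP, FN for one class, treating it as the positive label."""
    truth = (y_true == class_id)
    pred = (y_pred == class_id)
    tp = int(np.sum(truth & pred))
    tn = int(np.sum(~truth & ~pred))
    fp = int(np.sum(~truth & pred))
    fn = int(np.sum(truth & ~pred))
    return tp, tn, fp, fn

def class_metrics(y_true, y_pred, class_id):
    """Standard overlap metrics for a single class; empty denominators give 0.0."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred, class_id)

    def ratio(num, den):
        return num / den if den else 0.0

    return {
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "iou": ratio(tp, tp + fp + fn),
        "precision": ratio(tp, tp + fp),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }

# Toy 3x3 label maps; IDs 1, 2, 3 stand for three tumour sub-regions (illustrative).
y_true = np.array([[0, 1, 1], [0, 2, 2], [0, 0, 3]])
y_pred = np.array([[0, 1, 0], [0, 2, 2], [0, 3, 3]])

class_1 = class_metrics(y_true, y_pred, class_id=1)  # one of two pixels missed
class_2 = class_metrics(y_true, y_pred, class_id=2)  # perfect overlap
class_3 = class_metrics(y_true, y_pred, class_id=3)  # one extra pixel predicted
```

In this toy case a missed pixel lowers sensitivity while an extra predicted pixel lowers precision, and both lower the Dice score, which is why Dice is reported alongside the other metrics.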

F. MRI Modalities in use

- FLAIR: Fluid Attenuated Inversion Recovery (FLAIR) is an important MRI sequence used frequently in neuroimaging, especially for brain tumour segmentation. FLAIR is based on an inversion recovery approach, in which the signal from the cerebrospinal fluid (CSF) is selectively suppressed by a radiofrequency pulse. This CSF signal suppression is important because it greatly increases the contrast in the final images, revealing structures that would otherwise be hidden. To provide a comprehensive dataset for precise delineation of tumour components, FLAIR images are frequently combined with other MRI modalities, such as T1-weighted and T2-weighted images. The precision of segmentation tasks is enhanced by FLAIR's ability to reveal anomalies such as tumour-associated oedema and lesions. FLAIR is clinically significant because darkening the CSF signal increases sensitivity. This increased sensitivity is especially helpful for the early identification of anomalies in the brain, which supports prompt diagnosis, treatment planning, and monitoring of different brain disorders, including tumours. In short, FLAIR is an essential tool for neuroimaging because it provides improved contrast that is useful for the precise examination and segmentation of brain tumours.

- T1CE (T1 Contrast Enhancement): T1-weighted imaging with contrast enhancement, or T1ce, is a crucial magnetic resonance imaging sequence that is widely used in neuroimaging, particularly in the evaluation of brain tumours. T1-weighted imaging provides extensive anatomical information by reflecting differences between tissues in longitudinal relaxation time (T1). To highlight particular characteristics, contrast agents such as gadolinium are injected intravenously as part of the contrast enhancement process. T1ce imaging is especially helpful when evaluating brain tumours because it highlights regions where the integrity of the blood-brain barrier has been compromised. The build-up of contrast in these areas helps distinguish and characterise enhancing lesions, which is important for brain tumour detection and tracking. All things considered, T1ce is a vital tool that improves the diagnostic capability of neuroimaging, particularly for characterising brain pathology.

- T2: T2, or T2-weighted imaging, is a basic magnetic resonance imaging sequence that is widely employed in neuroimaging because it can provide detailed anatomical information. Because tissues exhibit different signal intensities owing to differences in their transverse relaxation times (T2), T2-weighted images offer strong contrast for identifying abnormalities such as brain lesions and oedema. Notably, cerebrospinal fluid appears bright on T2-weighted imaging, which enhances the visibility of the ventricles and fluid spaces. This imaging modality is very helpful for neurological diagnostics; it can be used to detect and characterise a wide range of brain conditions, including tumours, inflammation, and vascular abnormalities. T2-weighted imaging is a crucial component of comprehensive brain exams due to its sensitivity to tissue water content and its versatility in multimodal studies.

VI. EXPECTED OUTPUT & RESULTS

The figures illustrate how the FLAIR, T1ce, and T2 modalities, together with the output of our proposed system, give distinct views of a single brain scan. Since it can be challenging to identify a tumour visually in the FLAIR, T1ce, and T2 images alone, the proposed system aids professionals in precisely identifying the location, shape, and size of tumours. The expected result of our project is represented by the final image, the Mask.

VII. APPLICATIONS

Early Diagnosis and Treatment Planning: Timely medical intervention and treatment planning are made possible by early diagnosis of anomalies, which is made possible by accurate brain tumour segmentation. Patient outcomes can be considerably improved by early diagnosis.

Precision medicine: Accurate data regarding the location, size, and properties of tumours is necessary to customise therapy for specific patients. A thorough grasp of the tumour morphology is made possible by segmentation, which supports the development of individualised treatment plans.

Surgical Planning: Surgeons can plan and guide brain tumour procedures more efficiently by using segmented images. Knowing the precise position and borders of the tumour helps to minimise damage to healthy brain tissue during surgery.

Radiation Guidance: Planning and directing radiation sessions requires accurate segmentation. The accurate demarcation of tumour boundaries guarantees that radiation therapy is directed precisely towards the tumour, optimising its efficacy and reducing harm to healthy tissue.

Tracking Response to Treatment: Throughout the course of treatment, periodic segmentation enables physicians to evaluate the tumor's reaction to different medications. To improve patient care and modify treatment strategies, this information is essential.

Research and Clinical studies: By offering standardised, quantifiable metrics for assessing the efficacy of various therapies and interventions, segmented datasets support research endeavours and clinical studies. This is crucial for expanding our knowledge of brain tumours and creating fresh treatment strategies.

Efficiency and Automation: Using automated segmentation models, such as the 2D U-Net, improves the efficiency of medical image analysis. It expedites the diagnosis process and lessens the manual workload of radiologists, producing faster and more consistent results.

Education and Training: Medical practitioners can improve their ability to identify and comprehend different characteristics of brain tumours by using the valuable datasets that segmentation initiatives give for educational purposes. Optimising segmentation algorithms is facilitated by training models on a variety of datasets.

Conclusion

Many people lose their lives each year to brain tumours, in some cases as a result of unsuccessful brain surgery. A model capable of precise segmentation gives thousands of people hope and increases the likelihood of successful surgeries. With this goal in mind, this study carries out a comparative analysis using the benchmark brain tumour datasets and state-of-the-art models to predict brain tumour segmentation. The research is based on the BraTS datasets covering the years 2017 to 2020. According to our research, there aren't many significant differences between the datasets from 2017 to 2020, which suggests that models that show promise on one dataset are likely to perform well on the others. CNN architectures and customised CNN models consistently perform better in segmentation than conventional machine learning models. On the 2019 BraTS dataset, the 2D U-Net achieved a Dice score of 0.8409 for tumour prediction and segmentation; nevertheless, some information was lost because a 2D model cannot fully utilise the detailed volumetric information. To address this problem, we plan to investigate several 3D U-Net models in our search for improved segmentation outcomes.

References

[1] Du, X. Cao, J. Liang, X. Chen, and Y. Zhan, “Medical image segmentation based on u-net: A review,” Journal of Imaging Science and Technology, vol. 64, pp. 1–12, 2020.

[2] S. Bauer, R. Wiest, L.-P. Nolte, and M. Reyes, “A survey of mri-based medical image analysis for brain tumor studies,” Physics in Medicine & Biology, vol. 58, no. 13, p. R97, 2013.

[3] S. Al-Qazzaz, “Deep learning-based brain tumour image segmentation and its extension to stroke lesion segmentation,” Ph.D. dissertation, Cardiff University, 2020.

[4] R. Raza, U. I. Bajwa, Y. Mehmood, M. W. Anwar, and M. H. Jamal, “dresu-net: 3d deep residual u-net based brain tumor segmentation from multimodal mri,” Biomedical Signal Processing and Control, p. 103861, 2022.

[5] S. Pereira, A. Pinto, V. Alves, and C. A. Silva, “Brain tumor segmentation using convolutional neural networks in mri images,” IEEE transactions on medical imaging, vol. 35, no. 5, pp. 1240–1251, 2016.

[6] M. Nasim, A. Dhali, F. Afrin, N. T. Zaman, and N. Karim, “The prominence of artificial intelligence in covid-19,” arXiv preprint arXiv:2111.09537, 2021.

[7] M. Abdullah Al Nasim, A. Dhali, F. Afrin, N. T. Zaman, and N. Karim, “The prominence of artificial intelligence in covid-19,” arXiv e-prints, pp. arXiv–2111, 2021.

[8] M. A. H. Palash, M. A. Al Nasim, A. Dhali, and F. Afrin, “Fine-grained image generation from bangla text description using attentional generative adversarial network,” in 2021 IEEE International Conference on Robotics, Automation, Artificial-Intelligence and Internet-of-Things (RAAICON). IEEE, 2021, pp. 79–84.

[9] N. Karim, A. Zaeemzadeh, and N. Rahnavard, “Rl-ncs: Reinforcement learning based data-driven approach for nonuniform compressed sensing,” in 2019 IEEE 29th International Workshop on Machine Learning for Signal Processing (MLSP). IEEE, 2019, pp. 1–6.

[10] N. Karim and N. Rahnavard, “Spi-gan: Towards single-pixel imaging through generative adversarial network,” arXiv preprint arXiv:2107.01330, 2021.

[11] Ö. Çiçek, A. Abdulkadir, S. S. Lienkamp, T. Brox, and O. Ronneberger, “3d u-net: learning dense volumetric segmentation from sparse annotation,” in International conference on medical image computing and computer-assisted intervention. Springer, 2016, pp. 424–432.

[12] M. Havaei, A. Davy, D. Warde-Farley, A. Biard, A. Courville, Y. Bengio, C. Pal, P.-M. Jodoin, and H. Larochelle, “Brain tumor segmentation with deep neural networks,” Medical image analysis, vol. 35, pp. 18–31, 2017.

[13] B. H. Menze, A. Jakab, S. Bauer, J. Kalpathy-Cramer, K. Farahani, J. Kirby, Y. Burren, N. Porz, J. Slotboom, R. Wiest et al., “The multimodal brain tumor image segmentation benchmark (brats),” IEEE transactions on medical imaging, vol. 34, no. 10, pp. 1993–2024, 2014.

[14] R. R. Agravat and M. S. Raval, “3d semantic segmentation of brain tumor for overall survival prediction,” in International MICCAI Brainlesion Workshop. Springer, 2020, pp. 215–227.

[15] V. K. Anand, S. Grampurohit, P. Aurangabadkar, A. Kori, M. Khened, R. S. Bhat, and G. Krishnamurthi, “Brain tumor segmentation and survival prediction using automatic hard mining in 3d cnn architecture,” in International MICCAI Brainlesion Workshop. Springer, 2020, pp. 310–319.

DECLARATION

The project team certifies that the information in the manuscript is accurate. We reaffirm that there are no conflicts of interest, and that the analysis is our own, informed by prior research. There is no requirement for financial support because the tools and resources employed are publicly available. Permission for paper publishing is contingent on our institution's ethical approval.

Copyright

Copyright © 2024 M Hemanth Kumar , Madhusmita Priyadarshini , Saurabh Sarang Bhide , Saakshi Singh , Prof. Sumathi S. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61857

Publish Date : 2024-05-09

ISSN : 2321-9653

Publisher Name : IJRASET
