Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

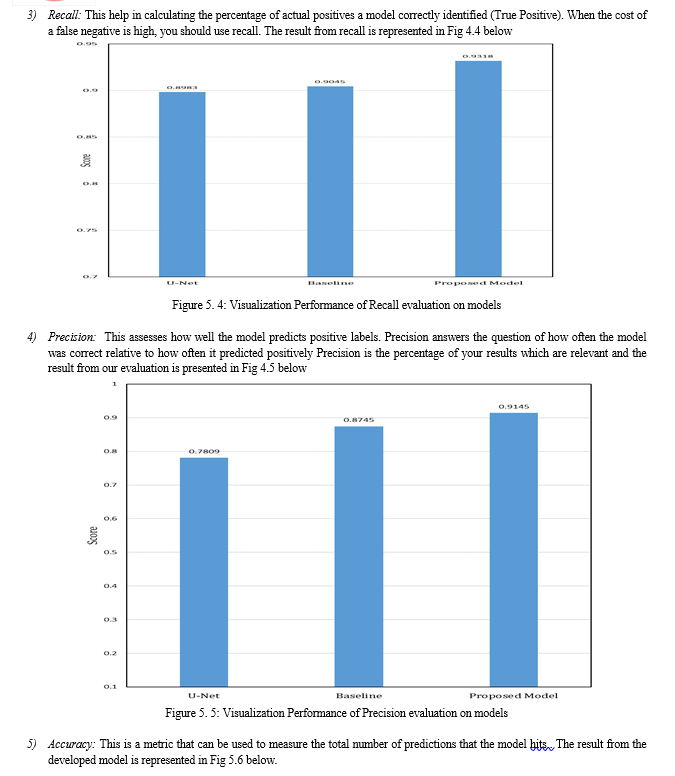

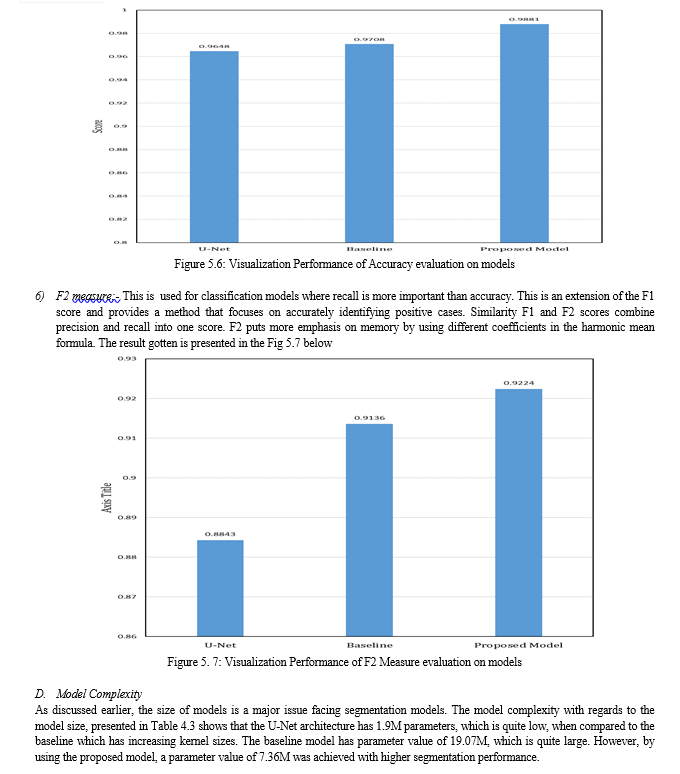

- Abstract

- Introduction

- Conclusion

- References

- Copyright

Breast Cancer Tumour Masks Segmentation Using Modified U-Net with Channel Attention

Authors: Akin-Olayemi Titilope.Helen, Ojo Abayomi Fagbuagun, Oguntuase R. Abimbola, Ojo Olufemi A., Makinde Bukola Oyeladun

DOI Link: https://doi.org/10.22214/ijraset.2024.59592

Abstract

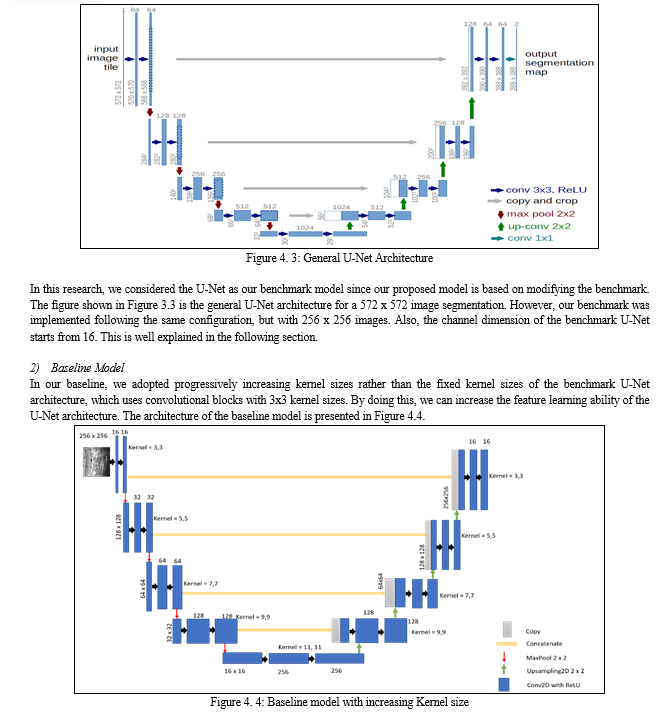

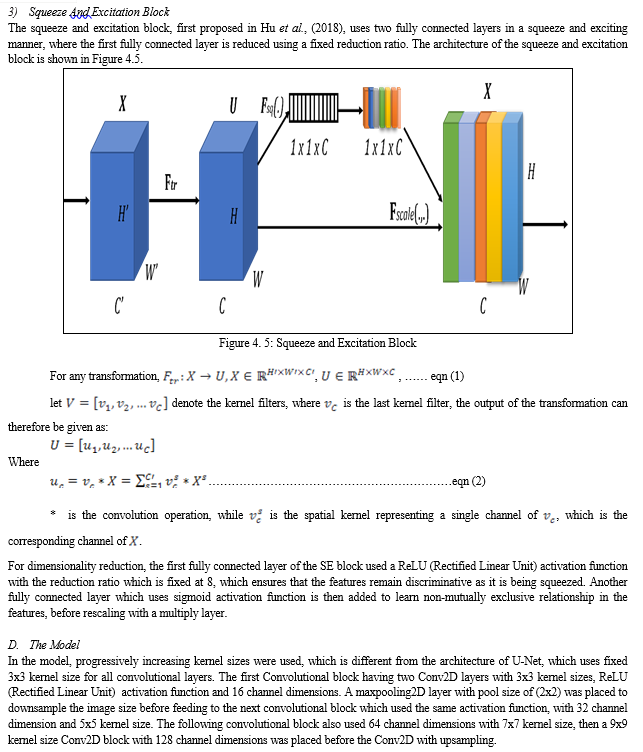

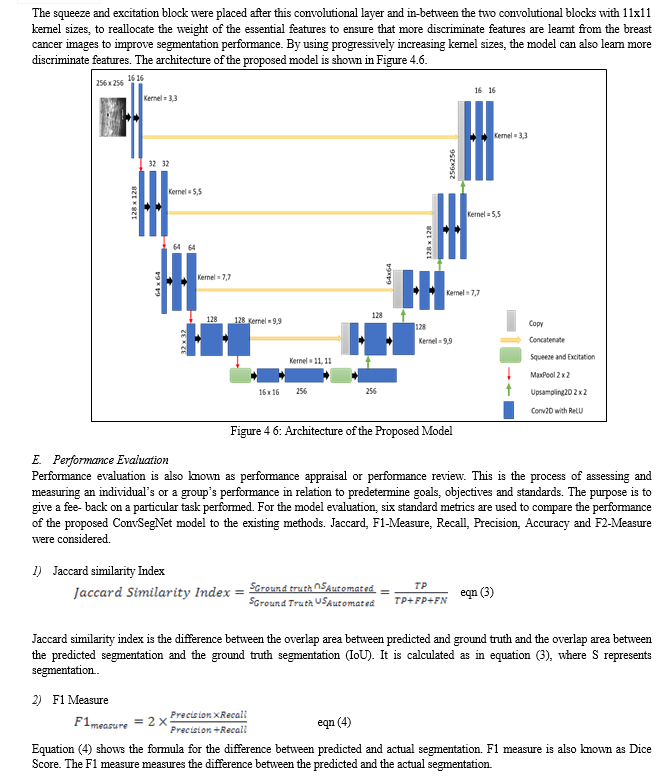

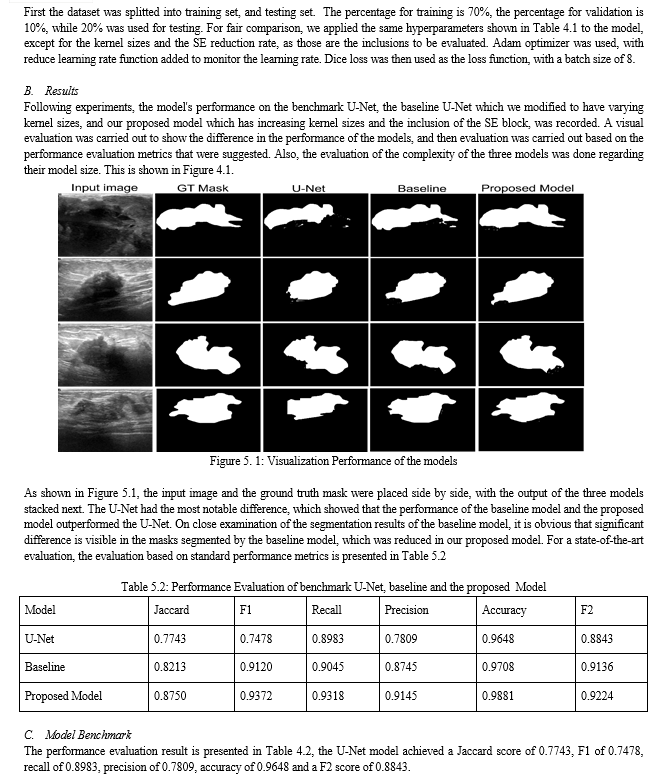

A modified U-Net with channel attention is developed for breast cancer image segmentation. Segmentation is a critical step in biomedical image analysis because it determines the accuracy of the downstream analysis algorithms. Algorithms for accurate and early breast cancer detection in women using mammograms depend heavily on the accuracy of the segmentation stage. In this thesis, the traditional U-Net architecture was modified to improve its segmentation ability by varying the kernel sizes, which are fixed in the original design. The proposed model uses progressively increasing kernel sizes of 3x3, 5x5, 7x7, 9x9 and 11x11, whereas the original U-Net relies on fixed 3x3 kernels in all convolutional layers. The first convolutional block of the proposed model contains two Conv2D layers with 3x3 kernels, the ReLU (Rectified Linear Unit) activation function, and 16 channels. A MaxPooling2D layer with a pool size of 2x2 downsamples the feature maps before they are fed to the next convolutional block, which uses the same activation function with 32 channels and 5x5 kernels. The next convolutional block uses 64 channels with 7x7 kernels, and a Conv2D block with 9x9 kernels and 128 channels is placed before the Conv2D with upsampling. The squeeze-and-excitation block is placed after this convolutional layer, between the two convolutional blocks with 11x11 kernels. It reweights the essential features so that more discriminative features are learnt from the breast cancer images, improving the overall representational strength of the network through dynamic recalibration of the feature channels. This significantly improved segmentation performance by explicitly modeling the interdependencies between channels in the convolutional layers.
The increasing kernel sizes enable the model to learn more discriminative features from the images. The Jaccard similarity index, F1 score, recall, precision, F2 score, and accuracy were used to compare the proposed model with other models, namely the U-Net model and a baseline model. The proposed model performed better, achieving 0.88 for the Jaccard similarity index, 0.94 for F1 score, 0.93 for recall, 0.92 for precision, 0.92 for F2 score, and an accuracy of 0.98. The model is well suited to biomedical image segmentation.

I. INTRODUCTION

Breast cancer arises when the division of cells in the breast goes out of control. Breast cancer is widely known, and research has shown that about one in eight women may be diagnosed with breast cancer during their lifetime, and over 40,000 women die of breast cancer yearly (National Breast Cancer Foundation (NBCF), 2016). Early research on breast cancer by Moody et al. (2005) showed that metastasis, the spread of cancer from the breast to other parts of the body, is the main cause of death in many women. Breast cancer can be diagnosed by tumour classification, which can be achieved in four ways: mammography, biopsy, breast self-examination, and near-infrared fluorescence (NIF). However, mammography and biopsy are the generally accepted methods of classification (Khan et al., 2019). In mammography, the radiologist uses images of the breast to detect early symptoms of cancer in women, while in biopsy, a pathologist analyzes tissue samples from an affected breast region under the microscope to detect and classify the tumour. Breast tumours are of two kinds: benign and malignant.

Benign lesions are non-cancerous tumours, while malignant lesions are cancerous tumours. Mammography is the most widely accepted method of diagnosing breast cancer because of the complex nature of biopsy (Chekkoury et al., 2012). The World Health Organization has emphasized that early diagnosis of breast cancer increases the survival rate in women to over 80% (WHO, 2014). To ensure that breast cancer is diagnosed early, radiologists have proposed various methods of detection. In addition, developments in artificial intelligence have seen computer scientists contribute through machine learning and deep learning techniques. Nevertheless, the problem of low accuracy, which is attributed to insufficient datasets and defective data pre-processing, still lingers.

Recently, the challenge of insufficient datasets has been reduced by adopting various data augmentation and data generation techniques. Examples of such methods are variational auto-encoders (Doersch, 2016) and Generative Adversarial Networks (GANs) (Goodfellow, 2016), which generate synthetic images. For easy diagnosis and treatment, it is crucial to segment biomedical images. Segmentation involves delineating tumour areas from the whole image. However, the problem of effectively segmenting tumours from breast cancer images for easy diagnosis and treatment has not been fully addressed. Also, the existing segmentation models are often large and therefore highly complex. This challenge is often attributed to the varying shapes and sizes of the tumours in breast cancer. This research aims to improve the segmentation success rate of tumours from breast cancer images by developing a biomedical segmentation model that is capable of detecting breast cancer tumours from breast images with improved performance while minimizing model complexity.

Research reveals that early detection of breast cancer tumours often reduces the mortality rate, since it allows the medical expert to commence appropriate treatment as soon as the tumour is detected. Generally, breast cancer is diagnosed through mammography, breast self-examination, biopsy or NIF. Nevertheless, biopsy and mammography are the generally accepted ways of diagnosing breast cancer (Khan et al., 2019). The limitation of the biopsy method is its complexity: the amount of tissue obtained from a biopsy needle may not be sufficient, because the examination is limited to the affected region of the breast, as discussed earlier. This has made mammography the generally accepted method of diagnosis. Mammography involves checking scanned images of the breast to detect the presence of malignant or benign tumours.

Generally, an expert is needed to check the mammograms to detect the presence of cancers manually. However, this method is not so reliable since the interpretation of mammograms is subject to variability due to individual interpretations. Moreover, manual detection is time-consuming, expensive, and reliant on the expert’s abilities and competence. Also, malignant cancers are challenging to identify. Because of this, recent advancements in artificial intelligence have been proposed as the solution to tackling issues relating to manual diagnosis.

In the literature, many machine learning techniques and deep learning models have been proposed to achieve better breast cancer diagnosis. Over the last few decades, several researchers have presented machine learning methods for automated cell classification in breast cancer detection. Some researchers have focused on mammogram analysis, collecting traits from mammograms that could be useful for classifying cells as either malignant or benign. However, the complexity of data preprocessing, feature extraction, and classification has prevented machine learning methods from achieving state-of-the-art detection and segmentation accuracy. Because of this, deep learning methods have been adopted (Khan et al., 2019). Deep learning models can learn many layers of image representation to model complicated nonlinear relationships in data, allowing them to find more abstract and valuable characteristics that make it simpler to obtain relevant information for high-level decision-making tasks such as classification, segmentation, and prediction. Deep learning methods require a large number of labelled training images to be trained optimally because of the large number of parameters usually involved.

In breast cancer images, malignant tumours often come in various shapes and sizes. Therefore, it is paramount to segment these tumours for easy diagnosis and treatment to prevent mortality. Image segmentation is a critical step in image analysis because it affects the accuracy of the analysis (Michael et al., 2021): the result of segmentation is passed to the later stages of image analysis, so if this stage is inaccurate, the remaining stages are affected. However, existing models developed for breast cancer segmentation have been unable to achieve state-of-the-art results because of these tumours' varying shapes and sizes. Likewise, the existing models are often bulky, and researchers have investigated how to achieve state-of-the-art segmentation results without adding model parameters.

Several researchers have proposed techniques for achieving higher accuracy in early breast cancer detection and segmentation from cancer images. Researchers such as Usha (2010), Boquete et al. (2012), Cardoso et al. (2016), and Khan et al. (2019), among others, have proposed deep learning techniques.

However, the limitations of low segmentation performance and bulky segmentation model size are the motivation for this research.

II. THEORETICAL BACKGROUND

A. Breast Cancer

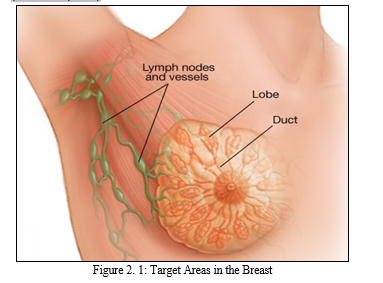

The female breast, also called the mammary gland, is situated on the anterior section of the chest wall. It is made up of glandular tissue with a very dense, fibrous stroma. Multiple ducts in the breast connect the milk-secreting lobular units to the nipple. The milk is a very significant source of food for babies (American Joint Committee on Cancer (AJCC), 2002). The importance of breastfeeding to babies cannot be overemphasized. However, the breast can be affected by various diseases, the commonest of which is breast cancer, a significant health challenge that affects women across all ages and races. Cancer of the breast occurs when breast tissues are affected.

According to medical doctors, cancer of the breast occurs due to uncontrolled cell division in the breast. These cells develop faster than healthy cells and keep growing without control, resulting in a lump or mass. Cancer cells in the breast tend to spread, or metastasize, to lymph nodes and other body parts. As shown in Figure 2.1, the most common type of breast cancer, known as ductal carcinoma, is attributed to the invasion of the milk-producing cells in the breast. Cancer of the breast may begin in the lobules, which are glandular tissues. It can also begin in other cells in the breast. Research links the increased risk of breast cancer to hormonal, lifestyle, and environmental variables (Rouhi et al., 2015).

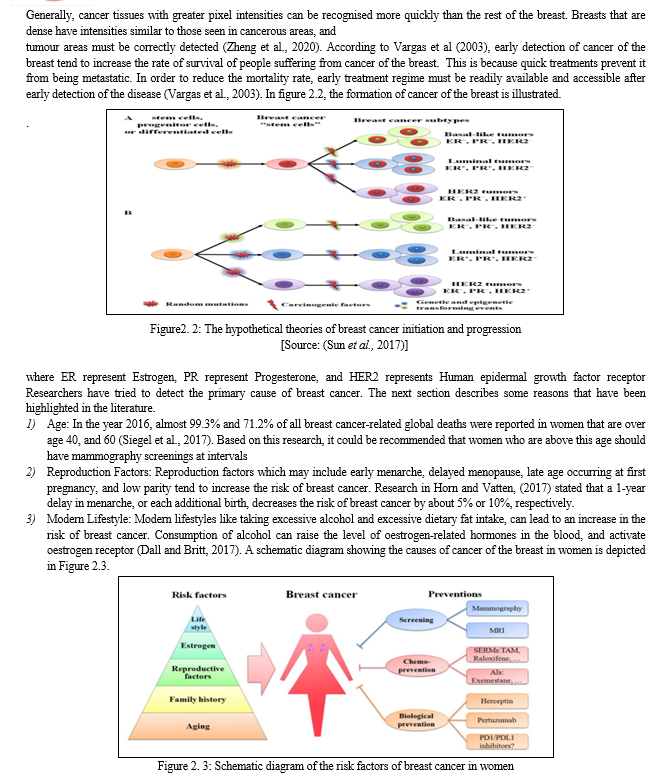

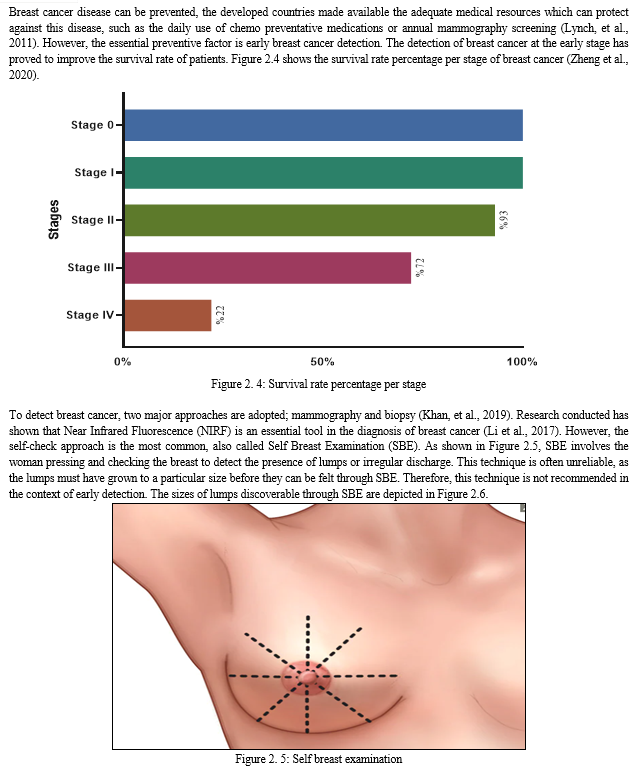

Early symptoms of breast cancer may include a sudden change in the shape of the breast, swollen lymph nodes, a breast lump, dimpling of the breast skin, and discharge of fluid from the nipple, among others. Breast cancer is the commonest form of cancer that affects women globally, and it has resulted in roughly 570,000 deaths since the year 2015. Furthermore, more than 1.5 million women, accounting for 25% of all women suffering from cancer, are diagnosed with breast cancer worldwide (Stewart & Wild, 2014).

Cancer of the breast develops in steps and involves many types of cells. Because of this, the prevention of breast cancer is still a huge challenge for scientists worldwide. The metastatic ability of breast cancer enables it to migrate to other organs, including the bone, lung, brain, and liver. This is responsible for the incurable nature of the cancer (Moody et al., 2005). Although the global incidence of breast cancer increases yearly, the mortality rate is decreasing because of early screening for the disease (Sun et al., 2017).

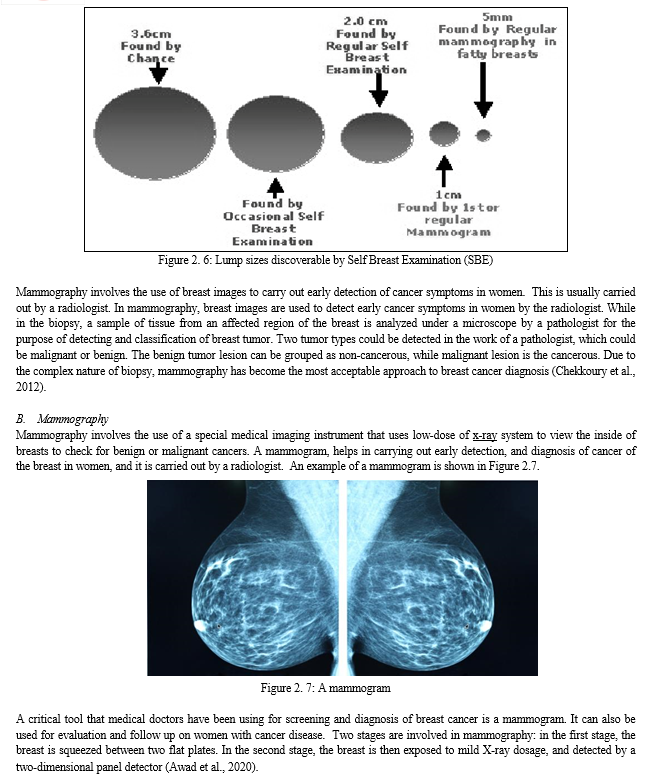

As stated earlier, breast cancer can be detected from the presence of lumps, micro-calcifications, and asymmetric regions of the breast. It can also be detected from the presence of distortion in the breast. Masses are the most characteristic and prevalent of these abnormalities. However, masses can be hidden by overlying breast tissue, which makes the detection of breast cancer difficult. Furthermore, some breast tissues morphologically resemble masses and are therefore mistaken for masses (Awad et al., 2020).

The National Cancer Institute (NCI) classifies mammograms into two types:

- Screening mammogram: Breast X-rays used to detect changes in the breasts of women who have no signs or symptoms of breast cancer. It involves X-rays of both breasts. Screening mammograms help to detect breast tumours that cannot be felt by hand.

- Diagnostic mammogram: Used to diagnose unusual changes in the breast, such as lumps, pain, and nipple discharge. It can also be used to evaluate abnormalities detected on a screening mammogram.

Mammography can be done in three ways: digital mammography, computer-aided detection, and breast tomosynthesis. In digital mammography, electronic detectors, similar to those in digital cameras, convert the x-rays into a digital image instead of recording them on film. The efficiency of these systems allows for better pictures with lower radiation doses. The digital images of the breast are sent to a computer, where they can be viewed by a radiologist and stored for future reference (Rampun et al., 2018). During a digital mammogram, the patient's experience is similar to that of a traditional film mammogram (Mambou et al., 2018).

Computer-aided detection (CAD) systems scan digitized images of the breast for areas of high density, mass, or calcification that may indicate cancer. The CAD system highlights these areas in the images, prompting the radiologist to examine them. Breast tomosynthesis is an advanced form of breast imaging in which several images of the breast are captured from various angles and reconstructed into a three-dimensional image set.

Mammographic screening has some drawbacks, which include cost and technical complexity. One of the main criticisms of mammography is that some women may get false-positive results, which can be harmful to women who do not have breast cancer (Smith et al., 2003). The benefits and risks of mammography are compared in Table 2.1.

Table 2.1: Comparison of Benefits and Risks of Mammography

| S/N | Benefits | Risks |
| --- | --- | --- |
| 1 | Mammography improves the physician's ability to detect tumours. Early-stage cancers are open to treatment options. | There is always a slight chance of cancer from excessive exposure to radiation. |
| 2 | Screening mammography can detect small abnormal tissue growths that are limited to the milk ducts in the breast. | Five per cent to 15 per cent of screening mammograms require more testing, such as additional mammograms or ultrasound. |
| 3 | No radiation remains in the patient's body after an X-ray examination. | The effective radiation dose for mammography varies per procedure. |
| 4 | X-rays have no side effects in the diagnostic range. | It is not advisable during pregnancy. |

C. Biopsy

Breast biopsy is a procedure in which a sample of breast tissue is removed and sent to the laboratory for testing. The biopsy can be done in three ways: fine needle, core needle and stereotactic biopsy. During a fine needle biopsy, the patient lies on a table while a small needle and syringe are inserted into the breast lump to extract a sample (Spanhol et al., 2016). This procedure can distinguish a liquid-filled cyst from a solid lump. In core needle biopsy, several needles are inserted to collect samples, each about the size of a grain of rice.

In stereotactic biopsy, the patient lies face down on an electrically powered, adjustable table that has a hole in it. With the patient's breast held in place between two plates, the surgeon works underneath the table. The surgeon then makes a small incision in order to remove samples with a needle. An illustration of needle biopsy is presented in Figure 2.8.

The major differences between malignant and benign tumours are highlighted in Table 2.2.

Table 2.2: Differences between benign and malignant tumours

| S/N | Benign tumours | Malignant tumours |
| --- | --- | --- |
| 1 | Does not invade nearby tissue. | Can invade nearby tissue. |
| 2 | Cannot spread to other body parts. | Can shed cells that travel via the blood or the lymph to other body parts and form a new tumour. |
| 3 | Typically does not return after removal. | Can return after removal. |
| 4 | Has a regular and smooth shape. | Has an uneven shape. |
| 5 | Can move around if pushed on. | Does not move around when pushed on. |
| 6 | Does not threaten life. | Can threaten life. |
| 7 | Treatment may or may not be needed. | Treatment is required. |

Researchers and radiologists have proposed various techniques for the early detection of breast cancer in women, because early-stage cancers are typically more superficial, and their treatment more cost-effective, than advanced cancer (Carlson et al., 2003). However, the issue remains a significant challenge given the importance of accurate early detection of the disease (WHO, 2014). The advent of artificial intelligence has allowed computer scientists to contribute to solving this challenge. The following section discusses some researchers who have proposed machine learning and deep learning techniques for the early detection of breast cancer.

D. Image Analysis Steps

The steps involved in image analysis are image preprocessing, kernel, convolution and max pooling. The following subsection explains the steps.

1. Image Processing

In image processing, images are classified into categories based on image features such as edges, intensity of pixels, and change in image pixel values. In other words, image processing involves the transformation of image into digital form and performing some convolution operations in order to get relevant and useful information from the image. In this process, images are treated as two dimensional signals while performing certain image processing methods such as filtering and convolution.

2. Kernel

The kernel is the filter, of a given size, that is used to perform convolution on an image. It takes data as input and transforms it into the required form through convolutional filtering, extracting features and contributing to the overall segmentation capability of the network.
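To make the effect of kernel size concrete, the short sketch below counts the trainable parameters of a single Conv2D layer as a function of its kernel size. It uses the kernel sizes and channel widths listed in the abstract; the single-channel (grayscale) input and the helper name `conv2d_params` are assumptions made for illustration, and only the first convolution of each block is counted.

```python
def conv2d_params(kernel_size, in_channels, out_channels):
    """Trainable parameters of one Conv2D layer with a square kernel
    and one bias term per output channel."""
    return kernel_size * kernel_size * in_channels * out_channels + out_channels

# (kernel size, output channels) of the encoder blocks described in the abstract
blocks = [(3, 16), (5, 32), (7, 64), (9, 128)]
in_channels = 1  # assumption: single-channel (grayscale) mammogram input

first_conv_params = []
for kernel_size, out_channels in blocks:
    first_conv_params.append(conv2d_params(kernel_size, in_channels, out_channels))
    in_channels = out_channels  # the next block consumes this block's output

# The same 64 -> 128 channel layer with a fixed 3x3 kernel, for comparison
fixed_3x3_params = conv2d_params(3, 64, 128)

print(first_conv_params)
print(fixed_3x3_params)
```

The count grows with the square of the kernel size, which is why larger kernels widen the receptive field at a cost in parameters.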

3. Convolution

This is a mathematical operation that is commonly used in signal processing and image analysis. The term refers to the process of taking two functions and producing a third function, which represents how one function’s shape is altered by the shape of the other. In image analysis, convolution involves applying a filter or a kernel to an image. The kernel is a small matrix that slides over the image, performing a mathematical operation at each position.

The operation typically involves multiplying the values of the kernel with corresponding pixel values in the image, and then adding the results to produce a new pixel value in the output image. Convolution is useful in several applications, such as image enhancement, edge detection, smoothing, and feature extraction.
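The sliding-kernel operation described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the implementation used in the study: it applies no padding or stride, and, as is usual in CNN layers, it does not flip the kernel (strictly speaking a cross-correlation). The function name `slide_kernel` and the toy image are assumptions.

```python
import numpy as np

def slide_kernel(image, kernel):
    """Slide a 2D kernel over a 2D image ('valid' mode, stride 1).
    At each position the kernel values are multiplied with the pixels
    underneath and the products are summed into one output pixel."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy image: dark left half, bright right half
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 0, 1, 1]], dtype=float)

# A 1x2 kernel that responds to a dark-to-bright step (a vertical edge)
edge_kernel = np.array([[-1, 1]], dtype=float)

response = slide_kernel(image, edge_kernel)
print(response)
```

The response is non-zero only in the column where the intensity changes, which illustrates how convolution supports edge detection.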

4. Max pooling

This is a pooling operation commonly used in CNNs for feature extraction in image processing tasks. It operates by dividing the input image or feature map into non-overlapping rectangular regions; the maximum value in each region is selected and forwarded to the next layer, while the other values are discarded. Its purpose is twofold: spatial downsampling and translation invariance.
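A minimal NumPy sketch of non-overlapping max pooling is given below, assuming a single-channel feature map and a square window (2x2 by default). The function name `max_pool2d` and the example values are illustrative assumptions.

```python
import numpy as np

def max_pool2d(feature_map, pool=2):
    """Non-overlapping max pooling over pool x pool windows.
    Rows and columns that do not fill a whole window are dropped."""
    h, w = feature_map.shape
    h_out, w_out = h // pool, w // pool
    trimmed = feature_map[:h_out * pool, :w_out * pool]
    # Split the map into (row block, row in window, col block, col in window)
    windows = trimmed.reshape(h_out, pool, w_out, pool)
    return windows.max(axis=(1, 3))

fmap = np.array([[1, 3, 2, 0],
                 [4, 2, 1, 1],
                 [0, 0, 5, 6],
                 [1, 2, 7, 8]], dtype=float)

pooled = max_pool2d(fmap)
print(pooled)
```

Each 2x2 window is reduced to its largest value, so a 4x4 map becomes a 2x2 map.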

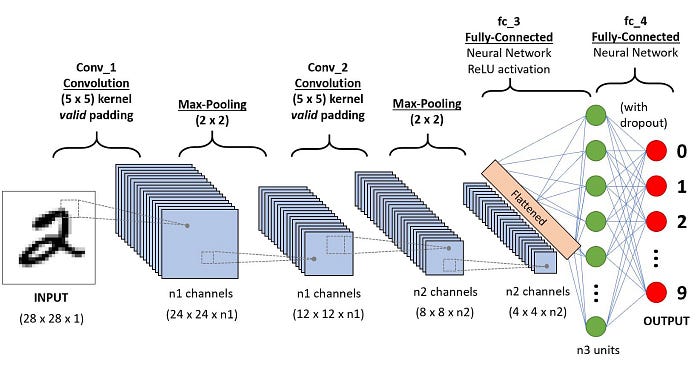

E. Convolutional Neural Network

A Convolutional Neural Network (CNN) can be defined as an end-to-end system whose input is an unprocessed image and whose output is a prediction based on distinguishing features extracted by the intermediate layers (Fang et al., 2017). The CNN is a sub-class of artificial neural networks that is commonly used to solve complicated problems; it is highly efficient and produces accurate results (Indolia et al., 2018).

In the convolution layer, the image to be classified is fed into the nodes of the input layer of the network. The output of the CNN is the predicted class label, computed from the features extracted from the image (Fang et al., 2017). Each neuron in the present layer is connected to a region of neurons in the previous layer. This region is called the receptive field, and it is used to extract local features from the input image. For example, a neuron's receptive field over a specific region of the previous layer forms a weight vector, which stays the same at every point on the plane, where the plane refers to the neurons in the following layer. Because the neurons in the plane share equal weights, similar features can be detected at different locations in the input data (Eghbalian et al., 2018). To create the feature map, the weight vector (also called the filter or kernel) is slid over the input.

1. Pooling Layer

Once a feature has been located, its exact position becomes less important. Therefore, a convolution layer is followed by a pooling (or sub-sampling) layer. The major advantages of the pooling operation are that it reduces the number of training parameters and introduces translation invariance. Pooling is performed by choosing a window and passing the input elements within that window through a pooling function, as presented in Fig. 2.9.

The pooling function generates another vector. Several pooling techniques exist, such as max pooling and average pooling. Of these two, max pooling is the most commonly used and can significantly reduce the map size. During backpropagation, the error is propagated back only to the winning unit, since the other units do not participate in the forward flow (Lee et al., 2017).

2. The fully Connected Layer

The output from the previous phase, which includes the repeated convolution and pooling operations, is fed to the fully connected layer of the network. The dot product of the weight vector and the input vector is computed to produce the final output. To reduce the cost function, gradient descent learning is implemented, in which the cost is estimated over the full dataset. The parameters are updated once per epoch, after traversing the entire dataset. This can reach the global minimum when the cost function is convex. However, a larger training dataset requires a longer training time. For this reason, this method of reducing the cost function has been superseded by stochastic gradient descent (Liu et al., 2019).
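The difference between the two update schemes can be illustrated with a deliberately small sketch: a single weight w is fitted to noise-free data y = 2x under a squared-error cost. The data, learning rate, epoch count and function names are assumptions chosen for illustration and are not taken from the study.

```python
import random

def batch_gradient_descent(xs, ys, lr=0.05, epochs=200):
    """One parameter update per epoch, using the gradient of the
    mean squared error averaged over the whole dataset."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w

def stochastic_gradient_descent(xs, ys, lr=0.05, epochs=200, seed=0):
    """One parameter update per training sample, visiting the samples
    in a freshly shuffled order every epoch."""
    rng = random.Random(seed)
    w = 0.0
    samples = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, y in samples:
            w -= lr * 2 * (w * x - y) * x
    return w

xs = [0.5, 1.0, 1.5, 2.0]
ys = [2.0 * x for x in xs]  # true weight is 2.0

w_batch = batch_gradient_descent(xs, ys)
w_sgd = stochastic_gradient_descent(xs, ys)
print(w_batch, w_sgd)
```

Both schemes recover the true weight here; the stochastic version makes four updates per epoch instead of one, which is the property that makes it attractive on large datasets.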

Fig 2.9 Basic architecture of Convolutional Neural Network.

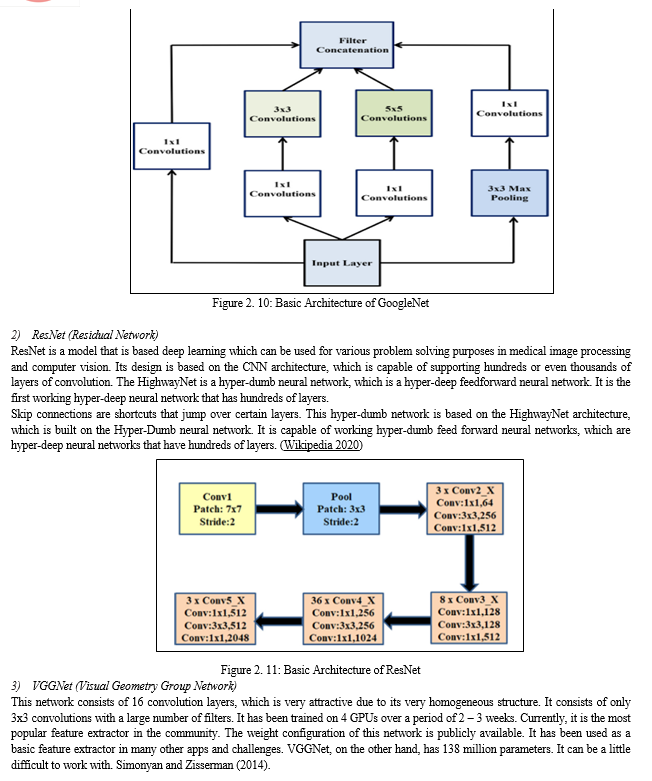

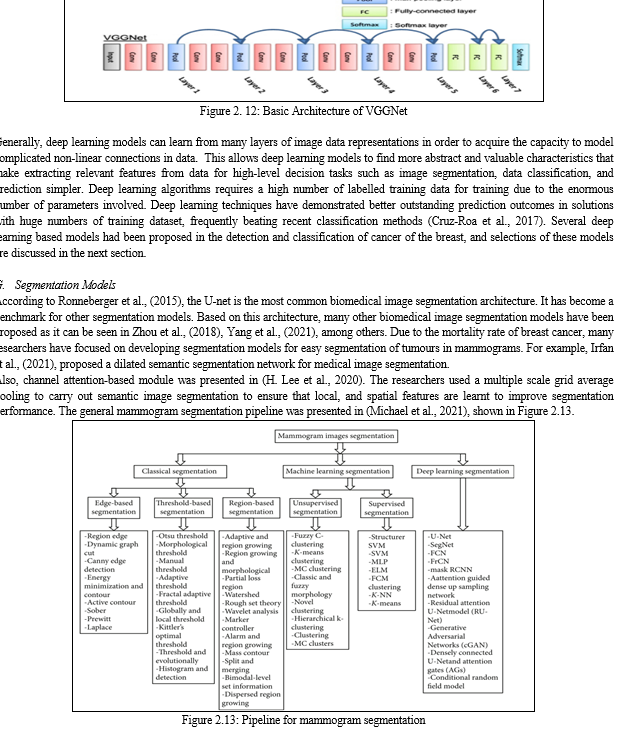

F. Deep Learning In Breast Cancer Detection

Several deep learning algorithms can be used to predict and detect the presence of breast cancer in chest radiographs. The most widely used include Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), and models that have been pre-trained on large image databases, such as GoogleNet, VGGNet, and ResNet. These are presented in Figure 2.10 to Figure 2.12. The commonly available and accessible datasets for model training and testing consist of mammogram images (Khuriwal, 2018).

Most of these pre-trained models were also based on CNN. A CNN comprises a series of convolutional layers that can extract features from images without requiring feature engineering. As a result, CNN is now the most extensively utilized approach for image interpretation tasks in various disciplines, including the identification and classification of breast cancer.

1. GoogleNet

Also known as Inception v1, GoogleNet is a CNN architecture primarily used for image recognition and classification tasks. It introduced several innovative features, most notably the concept of inception modules. These modules use parallel convolutional layers with different filter sizes, which gives the network the ability to capture information at different scales and abstraction levels. The architecture has multiple convolution layers, pooling layers, and fully connected layers, for a total of 22 layers. It has been widely used in computer vision tasks, including image classification, object detection, and semantic segmentation. The architecture of GoogleNet is shown in Fig 2.10.

As discussed earlier, deep learning techniques have performed well in the detection and segmentation of breast tumours from mammograms. Zhu et al. (2018) proposed a model based on an adversarial deep learning network for mass segmentation of breast tumours from mammograms. The model is based on a contiguous adversarial FCN-CRF (Fully Convolutional Network with Conditional Random Fields) network for mammography mass segmentation. Two open datasets, INbreast and DDSM-BCRP (Digital Database for Screening Mammography with Breast Cancer Research Program), were used to test the approach. The proposed strategy attained a segmentation rate of 97.0 percent. Li et al. (2019) presented the attention-dense U-Net model for automatically segmenting breast masses in mammography images. The method uses a fully automatic, deep-learning-based approach to breast mass segmentation that combines attention gates (AGs) with a densely connected U-Net. The digital database for screening mammography (DDSM) was used to test this strategy, and the experiments showed that the dense U-Net coupled with AGs outperformed other approaches. The technique attained an overall accuracy of 78.38 percent, an F1 score of 82.24 percent, and a sensitivity of 77.89 percent.

Ravitha et al. (2021) proposed a deeply supervised U-Net (DS-U-Net) for mass segmentation in digital mammograms. The DDSM and INbreast datasets were used to evaluate the approach, and a CLAHE filter was used to boost the contrast of the images. The experiments were split into two groups according to whether or not the images were preprocessed, and preprocessing was found to improve the results. With preprocessing, the technique achieved 99.70 percent accuracy, 83.10 percent sensitivity, 99.80 percent specificity, a Dice score of 82.70 percent, and a Jaccard coefficient of 85.70 percent.

A review of work on joint segmentation and classification of mammography images shows that Shen et al. (2020) proposed the residual-aided classification U-Net model (ResCU-Net) and its mixed-supervision-guided variant (MS-ResCU-Net). Convolution filters were used for noise reduction in the mammograms. The MS-ResCU-Net model achieved an accuracy of 94.16%, sensitivity of 93.11%, specificity of 95.02%, Dice index (DI) of 91.78%, Jaccard index of 85.13%, and Matthews Correlation Coefficient (MCC) of 87.22%, while ResCU-Net achieved an accuracy of 92.91%, sensitivity of 91.51%, specificity of 94.64%, DI of 90.50%, Jaccard index of 83.02%, and MCC of 84.99%. Al-antari et al. (2020) proposed the Full-resolution Convolutional Network (FrCN), a new segmentation model for mammography images.

Three conventional deep learning models, CNN, ResNet-50, and InceptionResNet-V2, were employed to categorize the identified and segmented breast lesions as benign or malignant. The mammography images were obtained from the INbreast database. FrCN-based breast lesion segmentation achieved 92.97% accuracy, 85.93% MCC, 92.69% Dice, and a Jaccard similarity index of 86.37%.

Saffari et al. (2020) proposed a deep learning model based on Conditional Generative Adversarial Networks (CGAN) for breast density segmentation. The dense tissues present in the mammogram images were segmented using the CGAN network. For noise reduction, the images were subjected to median filtering before segmentation. A total of 410 images from 115 patients retrieved from the INbreast dataset were used. The model achieved an accuracy of 98.0%, a Dice coefficient of 88.0%, and a Jaccard similarity index of 78.0%. Ahmed et al. (2020) used RCNN and DeepLab neural networks for semantic segmentation of breast cancer. Performance was evaluated on two datasets, MIAS and DDSM. Noise was eliminated using the edge-based Savitzky-Golay filter. RCNN achieved a performance of 95.0%, while DeepLab achieved an AUC of 98.0%. However, the average precision of the segmentation task was 80.0%.
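The overlap metrics quoted throughout this review (Dice, Jaccard, precision, recall or sensitivity, F2 and accuracy) can all be derived from the pixel-wise confusion counts of a predicted mask against the ground-truth mask. The sketch below is illustrative only; the function name `segmentation_scores` and the toy masks are assumptions, and it assumes both masks contain at least one tumour pixel so that no denominator is zero.

```python
import numpy as np

def segmentation_scores(pred, truth):
    """Pixel-wise scores for a binary predicted mask against a binary
    ground-truth mask (assumes both masks contain positive pixels)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # tumour pixels correctly predicted
    fp = int(np.sum(pred & ~truth))   # background predicted as tumour
    fn = int(np.sum(~pred & truth))   # tumour pixels that were missed
    tn = int(np.sum(~pred & ~truth))  # background correctly predicted
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "dice": 2 * tp / (2 * tp + fp + fn),  # equals F1 for binary masks
        "jaccard": tp / (tp + fp + fn),
        "f2": 5 * precision * recall / (4 * precision + recall),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }

truth = [[1, 1, 0, 0],
         [1, 1, 0, 0]]
pred = [[1, 1, 1, 0],
        [1, 0, 0, 0]]

scores = segmentation_scores(pred, truth)
print(scores)
```

For a single pair of masks the Jaccard index J and the Dice score D are related by J = D / (2 - D), so J can never exceed D.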

H. Modified U-Net Segmentation

Hossain (2022) presented microcalcification segmentation. The Laplacian filter was used to eliminate noise after training the suggested approach on images obtained from the DDSM database. Five steps made up the procedure: image preprocessing, segmentation of breast regions, extraction of suspicious patches, selection of positive patches, and training of the segmentation network. The technique resulted in a Dice score of 97.80 percent and an F-measure of 98.50 percent.

Additionally, the Jaccard index was 97.40 percent, and the proposed method's average accuracy was 98.20 percent. Tsochatzidis et al. (2021) presented a modified convolutional layer of a CNN model based on the U-Net model. DDSM-400 and the Curated Breast Imaging Subset of the Digital Database for Screening Mammography (CBIS-DDSM) were used as hybrid datasets for the method's evaluation.

The evaluation was based on the ground-truth segmentation maps. The approach obtained diagnostic performance metric of 89.8%, AUC value of 86.20%, and a value of 88.0% for DDSM-400, and a value of 86.0% for U-Net-based segmentation for CBIS-DDSM.

Based on the literature presented, models with attention have improved segmentation performance. However, existing segmentation architectures have not fully addressed salient feature learning from mammograms, which is needed to achieve state-of-the-art segmentation results. In this thesis work, a modified U-Net model based on progressively increasing kernel sizes is presented, which leverages the squeeze and excitation attention mechanism with dimensionality reduction in its contraction module. By doing this, improved segmentation performance with low model complexity was achieved. The following section presents some general descriptions of convolutional, fully connected and pooling layers leveraged for biomedical image segmentation.
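To make the squeeze and excitation mechanism with dimensionality reduction concrete, the following is a minimal, framework-free sketch of the forward pass of one such block. It is an illustration only and not the implementation used in this work: the function name `se_block`, the weight matrices `w1` and `w2`, the reduction ratio `r = 2`, and the omission of bias terms are all assumptions made for the example.

```python
import math

def se_block(feature_map, w1, w2):
    """Squeeze-and-excitation forward pass (illustrative, no biases).

    feature_map: list of C channels, each a 2-D list (H x W).
    w1: (C // r) x C weights of the reducing dense layer.
    w2: C x (C // r) weights of the expanding dense layer.
    """
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature_map]
    # Excitation, step 1: bottleneck layer (dimensionality reduction) + ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    # Excitation, step 2: expand back to C values, sigmoid gate in (0, 1).
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Scale: recalibrate each channel by its learned gate.
    scaled = [[[g * v for v in row] for row in ch]
              for ch, g in zip(feature_map, gates)]
    return scaled, gates

# Hypothetical toy input: 4 channels of size 3x3, reduction ratio r = 2.
C, r = 4, 2
fmap = [[[float(c + 1)] * 3 for _ in range(3)] for c in range(C)]
w1 = [[0.1] * C for _ in range(C // r)]
w2 = [[0.5 if i % 2 == 0 else -0.5] * (C // r) for i in range(C)]
out, gates = se_block(fmap, w1, w2)
print([round(g, 3) for g in gates])
```

The output has the same shape as the input; only the relative weight of each channel changes, which is how the block emphasises informative channels and suppresses less useful ones.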

I. Machine Learning Algorithm

Machine learning (ML) algorithms process and analyze data in order to learn the underlying patterns about people, business operations, transactions, occurrences, etc. There are four types of ML algorithms, which are discussed in the following section.

- Types of Machine Learning Algorithm

Machine Learning algorithms could be roughly divided into four groups: the supervised learning group, the unsupervised learning group, the semi-supervised learning group, and the reinforcement learning group.

a. Supervised machine learning Algorithms

In this group of machine learning, the machine learns a function that maps inputs to outputs from a supplied sample of input-output pairs. It does so by making inferences from one set of data supplied for training, and it is evaluated on another set supplied for testing. Learning is supervised when the goal to be achieved is given to the machine along with a set of inputs. It is also known as the task-driven approach to machine learning. Tasks that fall into this group include classification and regression, which make predictions based on patterns learned from the input data. An example is text classification, which predicts a class label or sentiment for a piece of text such as a tweet or a product review.
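As a small illustration of learning a mapping from labeled input-output pairs, the sketch below fits a nearest-centroid classifier. The classifier choice, the function names `fit_centroids` and `predict`, and the two-feature toy data with its labels are all hypothetical and chosen only to keep the example short.

```python
def fit_centroids(samples, labels):
    """Learn one mean vector (centroid) per class from labeled pairs."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}

def predict(centroids, x):
    """Assign x to the class with the closest centroid (squared Euclidean)."""
    return min(centroids,
               key=lambda y: sum((a - b) ** 2 for a, b in zip(centroids[y], x)))

# Hypothetical toy training set: two features per sample, two classes.
train_x = [[1.0, 1.0], [1.5, 0.5], [6.0, 7.0], [7.0, 6.5]]
train_y = ["benign", "benign", "malignant", "malignant"]
model = fit_centroids(train_x, train_y)
print(predict(model, [6.5, 6.0]))
```

The supervision lies in `train_y`: without the labels, the centroids could not be tied to class names.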

b. Unsupervised machine learning

This is the process of analyzing unlabeled data without human intervention. It is called a data-driven approach to learning. This type of learning is commonly used to extract features from datasets, to find meaningful trends and patterns, to group data, and for exploratory purposes. The most popular unsupervised learning tasks are clustering, density estimation, feature learning, dimensionality reduction, anomaly detection, and finding association rules.
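Clustering, the first task listed above, can be sketched with a few lines of k-means on scalar data. This is a minimal illustration under stated assumptions: the function name `kmeans_1d`, the toy points, and the choice of the first and last points as initial centroids are all hypothetical.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Plain k-means on scalars: alternate assignment and centroid update."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda k: abs(p - centroids[k]))
            clusters[nearest].append(p)
        # Keep the previous centroid if a cluster ends up empty.
        centroids = [sum(c) / len(c) if c else centroids[k]
                     for k, c in enumerate(clusters)]
    return centroids, clusters

# Hypothetical unlabeled data with two visible groups.
points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
centroids, clusters = kmeans_1d(points, centroids=[points[0], points[-1]])
print(centroids, clusters)
```

No labels are supplied; the grouping emerges from the data alone.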

c. Semi-supervised machine learning

This is a hybrid of supervised and unsupervised learning because it works on both labeled and unlabeled data. Semi-supervised learning therefore lies somewhere between learning without supervision and learning with supervision. In practice, labeled data is often scarce while unlabeled data is abundant, and this is where semi-supervised learning can be useful.

d. Reinforcement Machine Learning

Reinforcement learning is a type of machine learning algorithm used to train software agents, or machines, to automatically assess the best behavior in a specific environment or context so as to improve their performance. This kind of learning is usually reward-based or penalty-based. The goal of reinforcement learning is to take actions based on insights from the environment in order to increase reward or minimize risk. Reinforcement learning is a powerful tool for training AI models, and it can improve automation or optimize the performance of complex systems in robotics, autonomous driving, manufacturing, and supply chain logistics. However, it is not recommended for solving simple problems.
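The reward-driven loop described above can be sketched with a greedy agent on a multi-armed bandit. This is an illustrative sketch only: the function name `run_bandit`, the deterministic rewards, and the optimistic initial value of 1.0 (assumed to be at least as large as any reward) are hypothetical choices that keep the example fully reproducible.

```python
def run_bandit(rewards, steps=20, initial_value=1.0):
    """Greedy agent with optimistic initial values on deterministic rewards."""
    values = [initial_value] * len(rewards)  # estimated value of each action
    counts = [0] * len(rewards)              # how often each action was taken
    for _ in range(steps):
        # Act: pick the action with the highest current estimate.
        action = max(range(len(values)), key=lambda a: values[a])
        # Observe: the environment returns a reward for that action.
        reward = rewards[action]
        counts[action] += 1
        # Learn: move the estimate toward the observed reward (running mean).
        values[action] += (reward - values[action]) / counts[action]
    return values, counts

values, counts = run_bandit(rewards=[0.2, 0.8, 0.5])
best = max(range(len(values)), key=lambda a: values[a])
print(best, counts)
```

Because every estimate starts above the true rewards, the agent tries each action once and then settles on the one that pays best.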

III. REVIEW OF RELATED WORKS

Zuluaga-Gomez et al., (2019) developed a CNN-based methodology for the diagnosis of breast cancer using thermal images. They proposed a CNN hyper-parameter fine-tuning optimization algorithm using a tree Parzen estimator. The method achieved an accuracy of 92%. However, the researchers used only 57 breast cancer datasets, which is insufficient. Asri et al., (2016) compared the performance of selected machine learning algorithms, including SVM, decision tree, KNN, and Naïve Bayes.

The Wisconsin Breast Cancer dataset, which contains 699 instances of both malignant and benign cancer, was used in the experiment. Schaefer et al., (2009) applied a fuzzy logic based classification algorithm to 150 samples of breast cancer mammographic images. The performance evaluation showed an accuracy of 80%. This result was attributed to the use of statistical feature analysis, which is a key source of data for achieving such accuracy. The main drawback of the research is the insufficient dataset used.

Sumathi et al., (2007) proposed a breast cancer diagnosis model based on a genetic algorithm and an adaptive resonance theory neural network. The data was sourced from the Wisconsin Breast Cancer Data (WBCD). It comprised 699 samples taken through the biopsy technique also called fine needle aspiration. Sixteen samples had missing data. The remaining 683 samples, which contained breast tumours, were used in the research. Sixty-five percent (65%) of the data were benign, and thirty-five percent (35%) were malignant. The developed model combined a Probabilistic Neural Network (PNN) with a Multilayer Perceptron (MLP), and the dataset used was sourced through the biopsy technique, which is very tedious when training on the data.

Adam and Omar, (2006) proposed a model for the detection of breast cancer. The work used a hybrid of a genetic algorithm and a Back Propagation Neural Network, with the aim of reducing the detection time and increasing the detection accuracy. Different cleaning and preprocessing steps were carried out on the dataset. The system recorded a detection accuracy of 83.36%.

Mambou et al., (2018) developed a breast cancer detection system using infrared thermal imaging and deep learning. The model was trained using 67 images from the Research Data Base (DMR) dataset, which contains frontal thermogram images acquired with a FLIR SC-620 IR camera at a resolution of 640 × 480 pixels. The model was tested using 12 images that have breast cancer. Each image was augmented to generate 20 additional images. Performance evaluation of the model showed an accuracy of 88.5%.

Khan et al., (2019) presented a deep learning framework for the detection of breast cancer using transfer learning. Feature extraction was carried out using pretrained networks such as GoogLeNet, Visual Geometry Group Network (VGGNet), and Residual Networks (ResNet). The sample images were fed into a fully connected layer of the network, and classification into benign and malignant tumours was done using average pooling. The framework achieved an accuracy of 97.52%. In the preprocessing stage, 82 images were used, which were augmented to make a total of 8,000 images. Deep learning was used to extract features from raw images for the classification process, as described in LeCun et al., (2015). However, the concept of detection is general, and it does not focus on segmenting the affected areas after detection. For this reason, researchers have adopted and proposed various segmentation models to segment tumours from breast cancer images.

IV. METHODOLOGY

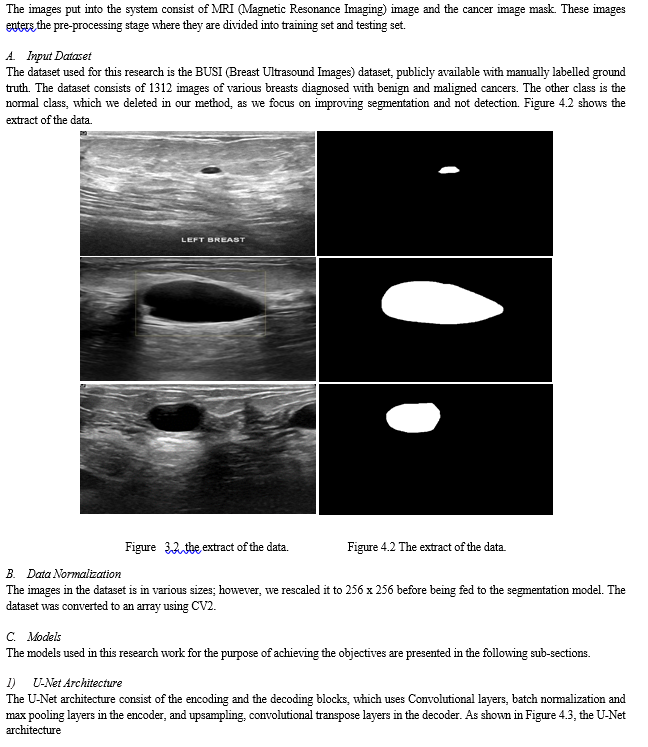

The details of the algorithm, the system architecture, the description of the input, the preprocessing methods, and the feature extraction techniques are presented in this chapter. The design will help realize the overall objectives of this research. This research proposes a modified U-Net model as the solution approach to achieve effective segmentation of tumours from breast cancer images. The flow diagram of the whole proposed method is presented in Figure 4.1.

Table 4.3 Size of Model Parameters

| MODEL | TRAINABLE PARAMETERS | NON-TRAINABLE PARAMETERS |
|---|---|---|
| U-Net | 1.9M | 0 |
| Our Baseline | 19.07M | 0 |
| Proposed Model | 7.36M | 0 |

E. Discussion of Result

The experiments carried out in this research considered the Jaccard index, F1 score, recall, precision, accuracy, and F2 measure as metrics. Model size was also considered in the evaluation. Figure 4.1 visualizes the output of each segmentation model, and it can be seen that the segmentation obtained by the proposed model is the closest to the ground truth mask. This was also validated in Table 4.2, where the proposed model outperformed the U-Net benchmark and the baseline modified U-Net. This shows that adding the squeeze and excitation block right after the contraction layer in our proposed model allowed more discriminant features to be learnt.
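For reference, the metrics listed above can all be derived from the pixel-wise confusion counts between a predicted mask and its ground truth mask. The sketch below uses the standard textbook definitions, which are assumed (not confirmed by this section) to match those used in the experiments; the function name `segmentation_metrics` and the ten-pixel toy masks are hypothetical.

```python
def segmentation_metrics(pred, truth):
    """Pixel-wise metrics for two binary masks given as flat lists of 0/1."""
    tp = sum(p == 1 and t == 1 for p, t in zip(pred, truth))
    fp = sum(p == 1 and t == 0 for p, t in zip(pred, truth))
    fn = sum(p == 0 and t == 1 for p, t in zip(pred, truth))
    tn = sum(p == 0 and t == 0 for p, t in zip(pred, truth))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0

    def f_beta(beta):
        # F-beta weights recall beta times as much as precision.
        denom = beta ** 2 * precision + recall
        return (1 + beta ** 2) * precision * recall / denom if denom else 0.0

    return {
        "jaccard": tp / (tp + fp + fn) if tp + fp + fn else 0.0,
        "f1": f_beta(1),
        "f2": f_beta(2),
        "recall": recall,
        "precision": precision,
        "accuracy": (tp + tn) / len(truth),
    }

# Hypothetical flattened masks: 4 tumour pixels in the ground truth.
truth = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
m = segmentation_metrics(pred, truth)
print(m)
```

For binary masks the F1 score is identical to the Dice coefficient, and the F2 measure weights recall more heavily than precision, which penalises missed tumour pixels more than false alarms.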

The metrics are visualized in Figure 4.2 to Figure 4.7. Table 4.3 presents the model size of the three segmentation models. The results show that the proposed model has 7.36 million parameters, which is high compared to the benchmark U-Net. However, compared to the baseline model, which has 19.07 million parameters, the proposed model is quite small, and it outperformed both the U-Net and the baseline U-Net at a lower model complexity than the baseline.
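The effect of kernel size on model size can be illustrated with the standard parameter-count formula for a 2-D convolution. The sketch below does not attempt to reproduce the totals in Table 4.3; the helper names `conv2d_params` and `se_params`, the 128-channel widths, and the reduction ratio of 16 are assumptions chosen only for illustration.

```python
def conv2d_params(kernel_size, in_channels, out_channels, bias=True):
    """Trainable parameters of one 2-D convolution layer:
    k * k * C_in * C_out weights, plus C_out biases if used."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)

def se_params(channels, reduction, bias=True):
    """Trainable parameters of the two dense layers in an SE block."""
    hidden = channels // reduction
    reduce_layer = channels * hidden + (hidden if bias else 0)
    expand_layer = hidden * channels + (channels if bias else 0)
    return reduce_layer + expand_layer

# Same channel widths, different kernel sizes (widths are illustrative).
small = conv2d_params(3, 128, 128)   # fixed 3x3 kernel
large = conv2d_params(11, 128, 128)  # 11x11 kernel
attention = se_params(128, 16)       # SE block with reduction ratio 16
print(small, large, attention)
```

Under these assumptions an 11x11 layer carries roughly 13 times the weights of a 3x3 layer with the same channel widths, while the SE block adds only a few thousand parameters, because the bottleneck shrinks the dense layers.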

Several researchers have emphasized the importance of early and accurate breast cancer diagnosis because it reduces the mortality rate. Early and accurate detection curbs issues arising from the metastatic nature of the ailment. Because of this, researchers from various fields have proposed techniques to optimize the accuracy of breast cancer detection systems.

The advent of Artificial Intelligence (AI) has motivated computer scientists to also contribute their quota to tackling these challenges.

Before accurate diagnosis can be done, segmentation of tumours from mammograms and ultrasound images of breast cancers is important. For this reason, various biomedical segmentation models have been proposed. However, challenges of low segmentation performance and bulky model size have been associated with breast cancer image segmentation. To contribute to tackling these challenges, this research proposes a novel biomedical segmentation model based on the U-Net architecture. Progressively increasing kernel sizes were incorporated into the U-Net architecture, with two squeeze and excitation blocks placed at the last layer of the contraction block in our proposed model to act as channel attention with dimensionality reduction. By doing this, we were able to learn more discriminative features from the images and corresponding masks.

Results showed that our model outperformed both the benchmark U-Net architecture, which used a fixed 3x3 kernel size without the SE block, and our baseline model, which used progressively increasing kernel sizes without the SE blocks. The state-of-the-art segmentation performance achieved by our model came at a reduced model complexity, as a model size of 7.36M parameters was recorded, against the 19.07M parameters of the baseline U-Net.

Conclusion

A. Conclusion

This research has proposed a novel segmentation model which uses channel attention with dimensionality reduction. In our model, we incorporated two squeeze and excitation blocks with the same configuration. Ablation studies were done to investigate the best reduction dimension in our model. This enabled us to balance the tradeoff between model complexity and model performance. Likewise, we experimented with adding more SE blocks to the proposed model, and a negligible increase was recorded in the segmentation performance, but it came at an increased complexity. The proposed model in this research outperformed the baseline and the benchmark U-Net across all the considered performance evaluation metrics. Also, the size of our model is small when compared to the baseline U-Net architecture.

B. Recommendation

The developed model is recommended for accurate segmentation of benign and malignant breast cancer tumours. The relatively small model size achieved in this research work makes it suitable for deployment on mobile and embedded systems. This will make it readily available for use anywhere, anytime. Even though the segmentation model in this research was developed for breast cancer tumour segmentation, it can be easily trained and extended for other biomedical segmentation tasks, such as segmentation of areas affected by tuberculosis in human lungs. It can also be deployed, after training, for segmentation of brain tumours.

References

[1] Adam, A., & Omar, K. (2006). Computerized breast cancer diagnosis with Genetic Algorithm and Neural Network. Proc. of the 3rd International Conference on Artificial Intelligence and Engineering Technology (ICAIET), 22–24.

[2] Ahmed, L., Iqbal, M. M., Aldabbas, H., Khalid, S., Saleem, Y., & Saeed, S. (2020). Images data practices for Semantic Segmentation of Breast Cancer using Deep Neural Network. Journal of Ambient Intelligence and Humanized Computing. https://doi.org/10.1007/s12652-020-01680-1

AJCC. (2002). Breast. American Joint Committee on Cancer. Cancer Staging Manual, 223–240.

[3] Al-antari, M. A., Al-masni, M. A., & Kim, T.-S. (2020). Deep Learning Computer-Aided Diagnosis for Breast Lesion in Digital Mammogram. In G. Lee & H. Fujita (Eds.), Deep Learning in Medical Image Analysis: Challenges and Applications (pp. 59–72). Springer International Publishing. https://doi.org/10.1007/978-3-030-33128-3_4

[4] Asri, H., Mousannif, H., Al Moatassime, H., & Noel, T. (2016). Using Machine Learning Algorithms for Breast Cancer Risk Prediction and Diagnosis. Procedia Computer Science, 83(Fams), 1064–1069. https://doi.org/10.1016/j.procs.2016.04.224

[5] Awad, A., Ali, A., & Gaber, T. (2020). Feature selection method based on chaotic maps and butterfly optimization algorithm. Proceedings of the International Conference on Artificial Intelligence and Computer Vision (AICV2020), 1153, 159–169. https://doi.org/10.1007/978-3-030-44289-

[6] Boquete, L., Ortega, S., Miguel-Jimenez, J. M., Rodríguez-Ascariz, J. M., & Blanco, R. (2012). Automated Detection of Breast Cancer in Thermal Infrared Images, Based on Independent Component Analysis. J. Med. Syst., 36, 103–111.

[7] Cardoso, F., Harbeck, N., Barrios, C. H., Bergh, J., Cortés, J., El Saghir, N., Francis, P. A., Hudis, C. A., Ohno, S., & Partridge, A. H. (2016). Research needs in breast cancer. Ann. Oncol., 28, 208–217.

[8] Carlson, R. W., Anderson, B. O., Chopra, R., Eniu, A. E., & Love, R. R. (2003). Treatment of breast cancer in countries with limited resources. Breast J, 9(2).

[9] Chekkoury, A., Khurd, P., Ni, J., Bahlmann, C., Kamen, A., Patel, A., Grady, L., Singh, M., Groher, M., & Navab, N. (2012). Automated malignancy detection in breast histopathological images. Medical Imaging 2012: Computer Aided Diagnosis, 8315, International Society for Optics and Photonics.

[10] Cruz-Roa, A., Gilmore, H., Basavanhally, A., Feldman, M., Ganesan, S., Shih, N. N. C., Tomaszewski, J., González, F. A., & Madabhushi, A. (2017). Accurate and reproducible invasive breast cancer detection in whole-slide images: A Deep Learning approach for quantifying tumor extent. Scientific Reports, 7(April), 1–14. https://doi.org/10.1038/srep46450

[11] Dall, G., & Britt, K. (2017). Estrogen Effects on the Mammary Gland in Early and Late Life and Breast Cancer Risk. Front Oncol, 7, 110.

[12] Doersch, C. (2016). Tutorial on variational autoencoders. ArXiv Preprint ArXiv:1606.05908.

[13] Eghbalian, S., & Ghassemian, H. (2018). Multi spectral image fusion by deep convolutional neural network and new spectral loss function. International Journal of Remote Sensing, 39(12), 3983–4002. https://doi.org/10.1080/01431161.2018.1452074

[14] Fang, J., Zhou, Y., Yu, Y., & Du, S. (2017). Fine-Grained Vehicle Model Recognition Using A Coarse-to-Fine Convolutional Neural Network Architecture. IEEE Transactions on Intelligent Transportation Systems, 18(7), 1782–1792. https://doi.org/10.1109/TITS.2016.262049

[15] Goodfellow, I. (2016). NIPS 2016 Tutorial: Generative Adversarial Networks. http://arxiv.org/abs/1701.00160

[16] Horn, J., & Vatten, L. J. (2017). Reproductive and hormonal risk factors of breast cancer: a historical perspective. International Journal of Women’s Health, 9, 265–272.

[17] Hossain, M. S. (2022). Microcalcification Segmentation Using Modified U-net Segmentation Network from Mammogram Images. Journal of King Saud University - Computer and Information Sciences, 34(2), 86–94. https://doi.org/10.1016/j.jksuci.2019.10.014

[18] Hu, J., Shen, L., & Sun, G. (2018). Squeeze-and-Excitation Networks. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 7132–7141. https://doi.org/10.1109/CVPR.2018.00745

[19] Indolia, S., Goswami, A. K., Mishra, S. P., & Asopa, P. (2018). Conceptual Understanding of Convolutional Neural Network- A Deep Learning Approach. Procedia Computer Science, 132, 679–688. https://doi.org/10.1016/j.procs.2018.05.069

[20] Irfan, R., Almazroi, A. A., Rauf, H. T., Damaševičius, R., Nasr, E. A., & Abdelgawad, A. E. (2021). Dilated semantic segmentation for breast ultrasonic lesion detection using parallel feature fusion. Diagnostics, 11(7), 1–20. https://doi.org/10.3390/diagnostics11071212

[21] Khan, S. U., Islam, N., Jan, Z., Ud Din, I., & Rodrigues, J. J. P. C. (2019). A novel deep learning based framework for the detection and classification of breast cancer using transfer learning. Pattern Recognition Letters, 125, 1–6. https://doi.org/10.1016/j.patrec.2019.03.022

[22] Khuriwal, N., & Mishra, N. (2018). Breast Cancer Detection from Histopathological Images Using Deep Learning. 3rd International Conference and Workshops on Recent Advances and Innovations in Engineering, ICRAIE 2018, 2018(November), 1–4. https://doi.org/10.1109/ICRAIE.2018.8710426

[23] LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436.

[24] Lee, H., Park, J., & Hwang, J. Y. (2020). Channel Attention Module with Multiscale Grid Average Pooling for Breast Cancer Segmentation in an Ultrasound Image. IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 67(7), 1344–1353. https://doi.org/10.1109/TUFFC.2020.2972573

[25] Lee, K. B., Cheon, S., & Kim, C. O. (2017). A convolutional neural network for fault classification and diagnosis in semiconductor manufacturing processes. IEEE Transactions on Semiconductor Manufacturing, 30(2), 135–142. https://doi.org/10.1109/TSM.2017.2676245

[26] Li, S., Dong, M., Du, G., & Mu, X. (2019). Attention Dense-U-Net for Automatic Breast Mass Segmentation in Digital Mammogram. IEEE Access, 7, 59037–59047. https://doi.org/10.1109/ACCESS.2019.2914873

[27] Li, S., Johnson, J., Peck, A., & Xie, Q. (2017). Near infrared fluorescent imaging of brain tumor with IR780 dye incorporated phospholipid nanoparticles. J. Transl. Med., 15.

[28] Liu, X., Jiao, L., Tang, X., Sun, Q., & Zhang, D. (2019). Polarimetric Convolutional Network for PolSAR Image Classification. IEEE Transactions on Geoscience and Remote Sensing, 57(5), 3040–3054. https://doi.org/10.1109/TGRS.2018.2879984

[29] Lynch, B., Neilson, H., & Friedenreich, C. (2011). Physical activity and breast cancer prevention. Recent Results Cancer Res., 186, 13–42.

[30] Mambou, S. J., Maresova, P., Krejcar, O., Selamat, A., & Kuca, K. (2018). Breast cancer detection using infrared thermal imaging and a deep learning model. Sensors (Switzerland), 18(9). https://doi.org/10.3390/s18092799

[31] Michael, E., Ma, H., Li, H., Kulwa, F., & Li, J. (2021). Breast Cancer Segmentation Methods: Current Status and Future Potentials. BioMed Research International, 2021. https://doi.org/10.1155/2021/9962109

[32] Moody, S. E., Perez, D., Pan, T. C., Sarkisian, C. J., Portocarrero, C. P., Sterner, C. J., Notorfrancesco, K. L., Cardiff, R. D., & Chodosh, L. A. (2005). The transcriptional repressor snail promotes mammary tumor recurrence. Cancer Cell, 8(3), 197–209.

[33] NBCF. (2016). National Breast Cancer Foundation. Breast Cancer Facts; National Breast Cancer Foundation: Sydney, Australia.

[34] Rampun, A., Scotney, B. W., Morrow, P. J., & Wang, H. (2018). Breast Density Classification Using Local Quinary Patterns with Various Neighbourhood Topologies. J. Imaging, 4, 14.

[35] Ravitha Rajalakshmi, N., Vidhyapriya, R., Elango, N., & Ramesh, N. (2021). Deeply supervised u-net for mass segmentation in digital mammograms. International Journal of Imaging Systems and Technology, 31(1), 59–71.

[36] Ronneberger, O., Fischer, P., & Brox, T. (2015). U-net: Convolutional networks for biomedical image segmentation. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 9351(Cvd), 234–241. https://doi.org/10.1007/978-3-319-24574-4_28

[37] Rouhi, R., Jafari, M., Kasaei, S., & Keshavarzian, P. (2015). Benign and malignant breast tumors classification based on region growing and CNN segmentation. Expert Systems with Applications, 42(3), 990–1002. https://doi.org/10.1016/j.eswa.2014.09.020

[38] Saffari, N., Rashwan, H. A., Abdel-Nasser, M., Kumar Singh, V., Arenas, M., Mangina, E., Herrera, B., & Puig, D. (2020). Fully Automated Breast Density Segmentation and Classification Using Deep Learning. Diagnostics, 10(11). https://doi.org/10.3390/diagnostics10110988

[39] Schaefer, G., Závišek, M., & Nakashima, T. (2009). Thermography based breast cancer analysis using statistical features and fuzzy classification. Pattern Recognition, 42(6), 1133–1137.

[40] Shen, T., Gou, C., Wang, J., & Wang, F.-Y. (2020). Simultaneous Segmentation and Classification of Mass Region From Mammograms Using a Mixed-Supervision Guided Deep Model. IEEE Signal Processing Letters, 27, 196–200. https://doi.org/10.1109/LSP.2019.2963151

[41] Siegel, R., Miller, K., & Jemal, A. (2017). Cancer Statistics. CA Cancer J Clin, 67, 7–30.

[42] Smith, R. A., Cokkinides, V., & Eyre, H. J. (2003). American Cancer Society guidelines for the early detection of cancer. CA Cancer J Clin, 53, 27–43.

[43] Spanhol, F. A., Oliveira, L. S., Petitjean, C., & Heutte, L. (2016). A Dataset for Breast Cancer Histopathological Image Classification. IEEE Transactions on Biomedical Engineering, 7, 1455–1462. https://doi.org/10.1109/TBME.2015.2496264

[44] Stewart, B. W., & Wild, C. P. (2014). World Cancer Report 2014. WHO Press.

[45] Sumathi, C. P., Santhanam, T., & Punitha, A. (2007). Combination of genetic algorithm and ART neural network for breast cancer diagnosis. Asian Journal of Information Technology, Medwell Journals.

[46] Sun, Y. S., Zhao, Z., Yang, Z. N., Xu, F., Lu, H. J., Zhu, Z. Y., Shi, W., Jiang, J., Yao, P. P., & Zhu, H. P. (2017). Risk factors and preventions of breast cancer. International Journal of Biological Sciences, 13(11), 1387–1397. https://doi.org/10.7150/ijbs.21635

[47] Tsochatzidis, L., Koutla, P., Costaridou, L., & Pratikakis, I. (2021). Integrating segmentation information into CNN for breast cancer diagnosis of mammographic masses. Computer Methods and Programs in Biomedicine, 200, 105913. https://doi.org/10.1016/j.cmpb.2020.105913

[48] Usha, R. (2010). Parallel Approach for Diagnosis of Breast Cancer using Neural Network Technique. International Journal of Computer Applications, 10(3).

[49] Vargas, H. I., Anderson, B. O., Chopra, R., Lehman, C. D., Ibarra, J. A., Masood, S., & Vass, L. (2003). Diagnosis of breast cancer in countries with limited resources. Breast Journal, 9(SUPPL. 2). https://doi.org/10.1046/j.1524-4741.9.s2.5.x

[50] WHO. (2014). WHO Position paper on mammography screening, World Health Organization.

[51] Wikipedia. (2020). Residual Neural Network. http://en.m.wikipedia.org

[52] Yang, J., Zhu, J., Wang, H., & Yang, X. (2021). Dilated MultiResUNet: Dilated multiresidual blocks network based on U-Net for biomedical image segmentation. Biomedical Signal Processing and Control, 68(April), 102643. https://doi.org/10.1016/j.bspc.2021.102643

[53] Zheng, J., Lin, D., Gao, Z., Wang, S., He, M., & Fan, J. (2020). Deep Learning Assisted Efficient AdaBoost Algorithm for Breast Cancer Detection and Early Diagnosis. IEEE Access, 8, 96946–96954. https://doi.org/10.1109/ACCESS.2020.2993536

[54] Zhou, Z., Rahman Siddiquee, M. M., Tajbakhsh, N., & Liang, J. (2018). Unet++: A nested u-net architecture for medical image segmentation. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 11045 LNCS, 3–11. https://doi.org/10.1007/978-3-030-00889-5_1

[55] Zhu, W., Xiang, X., Tran, T. D., Hager, G. D., & Xie, X. (2018). Adversarial deep structured nets for mass segmentation from mammograms. 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), 847–850. https://doi.org/10.1109/ISBI.2018.8363704

[56] Zuluaga-Gomez, J., Al Masry, Z., Benaggoune, K., Meraghni, S., & Zerhouni, N. (2021). A CNN-based methodology for breast cancer diagnosis using thermal images. Computer Methods in Biomechanics and Biomedical Engineering: Imaging and Visualization, 9(2), 131–145. https://doi.org/10.1080/21681163.2020.1824685

Copyright

Copyright © 2024 Akin-Olayemi Titilope.Helen, Ojo Abayomi Fagbuagun, Oguntuase R. Abimbola, Ojo Olufemi A., Makinde Bukola Oyeladun. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59592

Publish Date : 2024-03-30

ISSN : 2321-9653

Publisher Name : IJRASET
