Ijraset Journal For Research in Applied Science and Engineering Technology

Cancer Detection Using Deep Learning

Authors: Lalitha Rajeswari B, Hema Latha M, Karthikeya N, Mohana Venkata Raghuram N, Pavani P

DOI Link: https://doi.org/10.22214/ijraset.2024.59111

Abstract

Cancer is one of the most prevalent diseases, and it has a high death rate if it is not detected and treated at an early stage. This study aims to build an accurate and unbiased model for detecting two types of cancer, skin cancer and oral cancer, using deep learning techniques. The system is built with machine learning and computer vision techniques, particularly Convolutional Neural Networks (CNNs), which can learn complex features directly from images and use them for classification. Deep learning is used to detect and classify skin cancer from dermoscopy images and oral cancer from histopathological images. Identifying cancer more accurately with CNNs increases the likelihood of treating it before it spreads. This paper proposes a deep-learning-based approach for diagnosing skin cancer and oral cancer based on the pre-trained CNN architecture DenseNet. The model is intended to improve detection and clinical decision-making and to support healthcare providers in the fight against cancer.

I. INTRODUCTION

Cancer is a disorder characterized by the uncontrolled growth and spread of abnormal cells within the body, which can invade other tissues. Normally, human cells divide in a controlled manner to generate new cells as the body needs them, and aging or damaged cells undergo programmed cell death to make way for new ones. In cancer, this regulation breaks down and cells keep dividing without restraint. There are over 200 distinct types of cancer, which together pose a global health challenge affecting millions of lives every year.

Cancer is especially serious because it can spread to other tissues if it is not detected and treated early. Projections for India indicate a 12.8% increase in cancer incidence from 2020 to 2025. Globally, the burden is expected to reach 27.5 million new cancer cases and 16.3 million cancer-related deaths by 2040 [11].

Skin cancer is the most common form of cancer worldwide and is mainly caused by prolonged exposure to ultraviolet radiation from the sun or tanning beds. Skin tumors fall into two main categories. Malignant tumors are cancerous and spread to other organs through the lymphatic system or blood vessels, a process called metastasis. Benign tumors, by contrast, are non-cancerous and do not spread to other organs. When melanoma is detected early, its estimated five-year survival rate is about 99 percent; the rate falls to 74 percent when the disease reaches the lymph nodes and to 35 percent when it metastasizes to distant organs [13].

Diagnosing skin cancer begins with a series of steps performed by a physician. First, the lesions are inspected with the naked eye. Dermoscopy is then used to examine the pattern of the skin lesions more closely: a gel is applied and the lesion is viewed under a magnifying instrument. Experts often apply the ABCDE rule, which evaluates Asymmetry, Border irregularity, Color, Diameter, and Evolution (change of the lesion over time). The accuracy of the diagnosis depends heavily on the dermatologist's expertise and on the available clinical facilities. Early detection and diagnosis are crucial for effective treatment because they prevent further spread and reduce both mortality and the need for costly medical procedures. However, manual inspection is time-consuming and prone to human error.

Many countries, including the United States, face a shortage of experienced dermatologists proficient in identifying skin cancer. A study conducted at the National Hospital of Sri Lanka (NHSL) reported that of 123 surveyed doctors, only 10 had received formal training in performing full-body examinations, and of the 13 doctors who had performed such examinations, only 2 had completed more than five screenings in the previous 12 months. This scarcity of adequately trained dermatologists calls for an automated skin-screening tool that assists and improves the screening process for skin cancer.

Oral cancer comprises malignancies that arise in the mouth and neighboring regions, including the lips, tongue, cheeks, and throat. Common risk factors include tobacco use, alcohol consumption, and infection with the human papillomavirus (HPV).

To diagnose oral cancer, a biopsy is performed to extract a tissue sample from the suspicious area of the oral cavity. The tissue sections are then stained and examined under a microscope by a pathologist, who looks for indicators of oral cancer such as abnormal cell size, shape, and structure; irregular tissue arrangement; elevated rates of cell division, which signal rapid growth; infiltration of neighboring tissue by cancer cells; and areas of cell death within the tissue.

According to [12], the five-year survival rate for oral cancer is only 68 percent, and it is lower still for Black and Native American patients.

II. LITERATURE REVIEW

In recent years, many studies have applied deep learning methods to cancer detection.

Rehman et al. [9] (Classification of Skin Cancer Lesions Using Explainable Deep Learning) used modified MobileNetV2 and DenseNet201 models to detect skin cancer and obtained 95% accuracy. Inthiyaz et al. [1] trained a CNN on the Xiangya-Derm dataset and reported an accuracy of 87% and an AUC of 0.87 for skin cancer detection, although on a small dataset. Gouda et al. [2] trained a CNN on the ISIC 2018 dataset and achieved 83.2% accuracy in detecting skin cancer; they also obtained an accuracy of 0.8576 with Inception50, which is still low. Kousis et al. [3] compared eleven architectures on ISIC 2019, and DenseNet169 gave the best classification accuracy, 92.25%.

Alam et al. [4] proposed S2C-DeLeNet, trained on the HAM10000 dataset, and obtained 91.03% accuracy. Aljohani and Turki [5] used the ISIC 2019 dataset to train DenseNet201, MobileNetV2, ResNet50V2, ResNet152V2, Xception, VGG16, VGG19, and GoogLeNet. They obtained 70% accuracy with both DenseNet201 and ResNet50V2 and 73% with GoogLeNet; all remaining models scored below 70%. Bechelli and Delhommelle [6] trained a CNN, VGG-16, Xception, and ResNet50 on the HAM10000 dataset and obtained 88% accuracy with VGG-16. Srinivasu et al. [7] proposed a technique that combines MobileNetV2 and LSTM networks trained on the HAM10000 dataset, achieving an accuracy of 85.34%.

Subha et al. [10] discussed the difficulty of differentiating skin cancer from rashes. Setiawan et al. [8] studied the effect of image downsizing and color reduction on skin cancer pre-screening. Alzu’bi et al. [14] obtained 97% accuracy with a CNN model for detecting kidney cancer, using a dataset collected at KAUH in Jordan. Das et al. [15] obtained 97% accuracy for oral cancer detection with a CNN model. We also drew on other machine learning and related work [16-37] in developing the proposed model for the identified problem.

Most existing studies use small datasets of fewer than 5,000 images. Models trained on such small datasets reach satisfactory accuracy, but when the same methods are trained on larger datasets, their accuracy drops. Some existing models also overfit because they were trained on too few images.

III. PROPOSED METHODOLOGY

A. DenseNet

DenseNet (Densely Connected Convolutional Network) improves feature reuse by stacking multiple dense blocks. Within a dense block, each layer receives the concatenated feature maps of all preceding layers as input and passes its own feature maps on to all subsequent layers. Each layer in a dense block applies batch normalization, a ReLU activation, and a convolution, optionally followed by dropout; the spatial size of the feature maps stays constant within a block so that they can be concatenated.
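To make the dense connectivity concrete, here is a minimal Keras sketch of a single dense block, assuming TensorFlow 2.x. The function name `dense_block`, the growth rate of 32, and the input shape are illustrative assumptions rather than values from this study, and the sketch omits the 1x1 bottleneck convolution that the DenseNet169/201 layers in `tf.keras.applications` place before each 3x3 convolution.

```python
import tensorflow as tf

layers = tf.keras.layers


def dense_block(x, num_layers, growth_rate=32, dropout_rate=0.0):
    """Simplified dense block: each layer's input is the concatenation of the
    block input and the outputs of all earlier layers in the block."""
    for _ in range(num_layers):
        # Composite function: batch normalization -> ReLU -> 3x3 convolution
        y = layers.BatchNormalization()(x)
        y = layers.Activation("relu")(y)
        y = layers.Conv2D(growth_rate, 3, padding="same", use_bias=False)(y)
        if dropout_rate > 0:
            y = layers.Dropout(dropout_rate)(y)
        # "same" padding keeps height and width fixed, so channels can be concatenated
        x = layers.Concatenate()([x, y])
    return x


inputs = tf.keras.Input(shape=(32, 32, 64))
block = tf.keras.Model(inputs, dense_block(inputs, num_layers=6))

# 64 input channels + 6 layers * 32 new channels each = 256 channels
print(tuple(block(tf.zeros((1, 32, 32, 64))).shape))  # (1, 32, 32, 256)
```

Each layer adds `growth_rate` channels, so the block's output width grows linearly with its depth while height and width stay unchanged.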

This dense connectivity helps mitigate the vanishing gradient problem commonly encountered in very deep neural networks. Consecutive dense blocks are joined by transition layers, each consisting of batch normalization, a ReLU activation function, a convolutional layer, dropout, and a pooling layer that reduces the spatial size of the feature maps.
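A transition layer can be sketched the same way. This is a hedged illustration assuming TensorFlow 2.x; the helper name `transition_layer`, the compression factor of 0.5 (the DenseNet-BC convention), and the dropout rate are assumptions, not settings reported in this paper.

```python
import tensorflow as tf

layers = tf.keras.layers


def transition_layer(x, compression=0.5, dropout_rate=0.0):
    """Transition between dense blocks: BN -> ReLU -> 1x1 conv -> dropout -> 2x2 avg pool."""
    out_channels = int(x.shape[-1] * compression)  # compression shrinks the channel count
    x = layers.BatchNormalization()(x)
    x = layers.Activation("relu")(x)
    x = layers.Conv2D(out_channels, 1, use_bias=False)(x)
    if dropout_rate > 0:
        x = layers.Dropout(dropout_rate)(x)
    # 2x2 average pooling with stride 2 halves the height and width
    return layers.AveragePooling2D(pool_size=2, strides=2)(x)


inputs = tf.keras.Input(shape=(32, 32, 256))
transition = tf.keras.Model(inputs, transition_layer(inputs, dropout_rate=0.2))
print(tuple(transition(tf.zeros((1, 32, 32, 256))).shape))  # (1, 16, 16, 128)
```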

The original DenseNet201 and DenseNet169 architectures differ only in dense block 3, which repeats its layer 48 times in DenseNet201 and 32 times in DenseNet169.
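The block configurations also explain the model names. The short sketch below assumes the standard DenseNet-BC layout (two convolutions per dense layer, plus one stem convolution, one convolution per transition layer, and one classifier layer); the sizes of blocks 1, 2, and 4 come from the standard configurations used in `tf.keras.applications`, not from this section. The 16 extra dense layers in block 3 account exactly for the 32-layer gap between DenseNet201 and DenseNet169.

```python
# Dense layers per block; block 3 is the only difference between the two models
BLOCK_CONFIGS = {
    "DenseNet169": (6, 12, 32, 32),
    "DenseNet201": (6, 12, 48, 32),
}


def weighted_depth(blocks):
    """Count convolutional and fully connected layers in a DenseNet-BC model."""
    dense_convs = 2 * sum(blocks)        # 1x1 bottleneck + 3x3 conv per dense layer
    stem_conv = 1                        # initial 7x7 convolution
    transition_convs = len(blocks) - 1   # one 1x1 conv between consecutive blocks
    classifier = 1                       # original fully connected classifier
    return dense_convs + stem_conv + transition_convs + classifier


for name, blocks in BLOCK_CONFIGS.items():
    print(name, weighted_depth(blocks))
# DenseNet169 169
# DenseNet201 201
```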

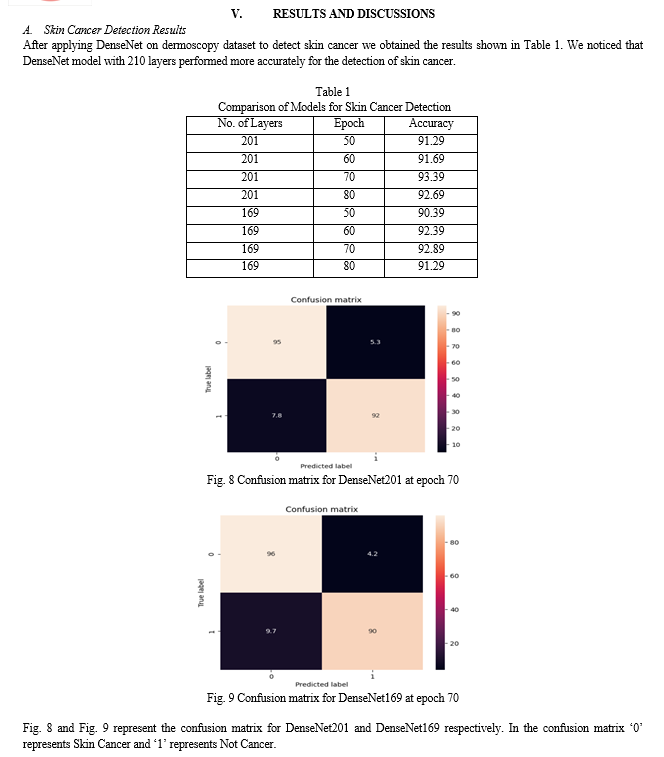

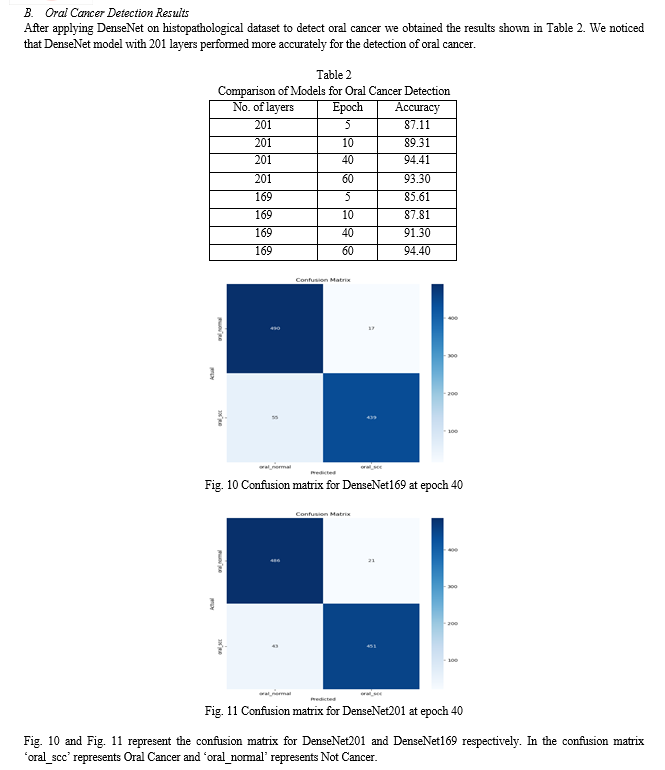

In this study, we added a flatten layer, a dropout layer, and two dense layers, together with batch normalization and dropout, to the end of both pre-trained models, DenseNet201 and DenseNet169. A softmax activation function normalizes the network's output into class probabilities to produce the final prediction. The architecture of the modified DenseNet201 is shown in Fig. 1, and that of the modified DenseNet169 in Fig. 2. Fig. 3 shows the workflow of the proposed method for classifying skin cancer and oral cancer.
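Softmax converts the final layer's raw scores (logits) into probabilities that sum to 1. A minimal NumPy sketch follows; the two-class logits are hypothetical values, since the number of output classes is not specified in this section.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - np.max(logits, axis=-1, keepdims=True)  # avoid overflow in exp
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=-1, keepdims=True)


# Hypothetical raw scores for a two-class output (e.g., benign vs. malignant)
probs = softmax(np.array([2.0, 0.5]))
print(probs.round(3))  # [0.818 0.182]
```

Subtracting the maximum logit does not change the result but keeps `np.exp` from overflowing on large scores.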

IV. IMPLEMENTATION

We implemented the models in Python, whose libraries and frameworks simplify the implementation of deep learning models. The pre-trained DenseNet201 and DenseNet169 models were imported from the TensorFlow library. A flatten layer, a dropout layer, and two dense layers, each with 128 units and a ReLU activation function, were then added along with batch normalization and dropout at the end of both models. The modified DenseNet models were run on Google Colab.
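The following is a minimal sketch of how such a head might be attached with `tf.keras.applications`, assuming TensorFlow 2.x. The helper name `build_modified_densenet`, the dropout rate of 0.5, the number of classes, and the exact order of batch normalization and dropout after each dense layer are assumptions; the text does not fix them.

```python
import tensorflow as tf

layers = tf.keras.layers


def build_modified_densenet(num_classes, input_shape=(128, 128, 3),
                            variant="DenseNet201", weights="imagenet",
                            dropout_rate=0.5):
    """Hypothetical helper: pre-trained DenseNet backbone + custom classification head."""
    # variant must name a Keras application, e.g. "DenseNet201" or "DenseNet169"
    backbone_fn = getattr(tf.keras.applications, variant)
    backbone = backbone_fn(include_top=False, weights=weights, input_shape=input_shape)

    x = layers.Flatten()(backbone.output)
    x = layers.Dropout(dropout_rate)(x)
    for _ in range(2):
        # Dense(128, ReLU); the BN-then-dropout order after it is an assumption
        x = layers.Dense(128, activation="relu")(x)
        x = layers.BatchNormalization()(x)
        x = layers.Dropout(dropout_rate)(x)
    # Softmax turns the final scores into class probabilities
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(backbone.input, outputs)


# weights=None avoids downloading ImageNet weights; pass "imagenet" for transfer learning
model = build_modified_densenet(num_classes=2, weights=None)
```

Before training, the model would still need to be compiled with a loss and an optimizer (for example, categorical cross-entropy with one-hot labels); those choices are not given in this section.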

Dermoscopy images are resized to 128 x 128 pixels, and histopathological images to 224 x 224 pixels. Normalization is an important pre-processing step that improves training speed: each pixel value is multiplied by 1/255, scaling it from the range 0 to 255 into the range 0 to 1.
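The resizing and 1/255 scaling can be sketched with `tf.image.resize`, assuming TensorFlow 2.x. The `preprocess` helper and the random stand-in image are hypothetical, and bilinear interpolation is only TensorFlow's default, which may differ from the interpolation used in the study.

```python
import numpy as np
import tensorflow as tf

# Target sizes stated in the text
TARGET_SIZES = {"dermoscopy": (128, 128), "histopathology": (224, 224)}


def preprocess(image, modality):
    """Resize an RGB image and rescale its pixel values from [0, 255] to [0, 1]."""
    image = tf.cast(image, tf.float32)
    resized = tf.image.resize(image, TARGET_SIZES[modality])  # bilinear by default
    return resized / 255.0  # same as multiplying by 1/255


# Hypothetical random 450x600 RGB image standing in for a dermoscopy photo
fake = np.random.default_rng(0).integers(0, 256, size=(450, 600, 3)).astype(np.uint8)
x = preprocess(fake, "dermoscopy")
print(tuple(x.shape))  # (128, 128, 3)
```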

After pre-processing, each dataset is divided in a 7:2:1 ratio, i.e., 70% for training, 20% for validation, and 10% for testing, and the models are trained on the training portion.

The HAM10000 dataset used in this study for skin cancer detection comprises 10,015 dermoscopy images. It is hosted on the Harvard Dataverse and can also be downloaded from Kaggle. The images were collected by the Department of Dermatology at the Medical University of Vienna, Austria, and by a skin cancer practice in Queensland, Australia.
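The 7:2:1 split can be sketched with NumPy as a shuffled index split; applied to the 10,015 HAM10000 images, it yields 7,010 training, 2,003 validation, and 1,002 test images. The helper `split_indices` and the random seed are assumptions, and integer division is used so that any remainder goes to the test set.

```python
import numpy as np


def split_indices(n, seed=42):
    """Shuffle indices 0..n-1 and split them 70/20/10 into train/val/test."""
    rng = np.random.default_rng(seed)  # the seed is an arbitrary assumption
    idx = rng.permutation(n)
    n_train = n * 7 // 10
    n_val = n * 2 // 10
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


# 10,015 images, as in the HAM10000 dataset
train, val, test = split_indices(10_015)
print(len(train), len(val), len(test))  # 7010 2003 1002
```

HAM10000 is strongly class-imbalanced, so a stratified split (for example, scikit-learn's `train_test_split` with its `stratify` argument) may be preferable; this section does not say whether the study stratified its split.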

VI. FUTURE SCOPE

Future work includes developing models that adapt to new data over time and incorporate continuous learning mechanisms to keep the detection system effective. A dataset that includes images of diverse skin tones and environments is needed to reduce bias in cancer detection. Further testing is also required to determine whether the proposed modified DenseNet model generalizes to the diagnosis of other types of cancer.

VII. ACKNOWLEDGEMENTS

We extend our sincere appreciation to all those who played a crucial role in the completion of this research work. Our gratitude goes out to the individuals who graciously shared the essential dataset, forming the backbone of our study.

Special thanks to Mrs. B. Lalitha Rajeswari and Dr. N. Sri Hari, whose guidance, mentorship, and support were invaluable throughout the research and enhanced the quality and depth of our work.

This research is dedicated to individuals and families impacted by cancer. We hope that our work contributes to ongoing initiatives for detection of cancer.

Conclusion

Our observations show that the modified DenseNet201 performed better than the modified DenseNet169 for both skin cancer and oral cancer detection. We obtained 93.39% accuracy for skin cancer detection and 94.41% accuracy for oral cancer detection.

References

[1] Syed Inthiyaz, Baraa Riyadh Altahan, SK Hasane Ahammad, V Rajesh, Ruth Ramya Kalangi, Lassaad K. Smirani, Md. Amzad Hossain, Ahmed Nabin Zaki Rashed, “Skin disease detection using deep learning,” Advances in Engineering Software, Volume 175, January 2023.

[2] Walaa Gouda, Najm Us Sama, Ghada Al-Waakid, Mamoona Humayun, Noor Zaman Jhanjhi, “Detection of Skin Cancer Based on Skin Lesion Images Using Deep Learning,” MDPI Healthcare, Volume 10, June 2022.

[3] Ioannis Kousis, Isidoros Perikos, Ioannis Hatzilygeroudis, Maria Virvou, “Deep Learning Methods for Accurate Skin Cancer Recognition and Mobile Application,” MDPI Electronics, Volume 11, April 2022.

[4] Md. Jahin Alam, Sayeed Mohammad, Md Adnan Faisal Hossain, Ishtaque Ahmed Showmik, Munshi Sanowar Raihan, Shahed Ahmed, Talha Ibn Mahmud, “S2C-DeLeNet: A parameter transfer based segmentation-classification integration for detecting skin cancer lesions from Dermoscopic images,” Computers in Biology and Medicine, Volume 150, November 2022.

[5] Khalil Aljohani, Turki Turki, “Automatic Classification of Melanoma Skin Cancer with Deep Convolutional Neural Networks,” MDPI AI, Volume 3, June 2022.

[6] Solene Bechelli, Jerome Delhommelle, “Machine Learning and Deep Learning Algorithms for Skin Cancer Classification from Dermoscopic Images,” MDPI Bioengineering, Volume 9, February 2022.

[7] Parvathaneni Naga Srinivasu, Jalluri Gnana SivaSai, Muhammad Fazal Ijaz, Akash Kumar Bhoi, Wonjoon Kim, James Jin Kang, “Classification of Skin Disease Using Deep Learning Neural Networks with MobileNet V2 and LSTM,” MDPI Sensors, Volume 21, 18 April 2021.

[8] Agung W. Setiawan, Amir Faisal, Nova Resfita, “Effect of Image Downsizing and Color Reduction on Skin Cancer Pre-screening,” IEEE Xplore, August 2020.

[9] Muhammad Zia Ur Rehman, Fawad Ahmed, Suliman A. Alsuhibany, Sajjad Shaukat Jamal, Muhammad Zulfiqar Ali, Jawad Ahmad, “Classification of Skin Cancer Lesions Using Explainable Deep Learning,” MDPI Sensors, Volume 22, September 2022.

[10] S. Subha, D. C. Joy Winnie Wise, S. Srinivasan, M. Preetham, B. Soundarlingam, “Detection and Differentiation of Skin Cancer from Rashes,” IEEE Xplore, August 2020.

[11] Krishnan Sathishkumar, Meesha Chaturvedi, Priyanka Das, S. Stephen, Prashant Mathur, “Cancer incidence estimates for 2022 & projection for 2025: Result from National Cancer Registry Programme, India,” NIH, National Center for Biotechnology Information, 11 March 2023.

[12] “Oral Cancer 5-Year Survival Rates by Race, Gender, and Stage of Diagnosis,” NIH, National Institute of Dental and Craniofacial Research, April 2023.

[13] “Skin Cancer Facts and Statistics,” Skin Cancer Foundation.

[14] Dalia Alzu’bi, Malak Abdullah, Ismail Hmeidi, Rami AlAzab, Maha Gharaibeh, Mwafaq El-Heis, Khaled H. Almotairi, Agostino Forestiero, Ahmad MohdAziz Hussein, Laith Abualigah, “Kidney Tumor Detection and Classification Based on Deep Learning Approaches: A New Dataset in CT Scans,” Hindawi, Journal of Healthcare Engineering, Volume 2022, 22 October 2022.

[15] Madhusmita Das, Rasmita Dash, Sambit Kumar Mishra, “Automatic Detection of Oral Squamous Cell Carcinoma from Histopathological Images of Oral Mucosa Using Deep Convolutional Neural Network,” MDPI, International Journal of Environmental Research and Public Health, 23 January 2023.

[16] Sri Hari Nallamala, Pragnyaban Mishra, Suvarna Vani Koneru, “Breast Cancer Detection using Machine Learning Way,” International Journal of Recent Technology and Engineering (IJRTE), Vol. 8, Issue 2S3, July 2019, ISSN: 2277-3878.

[17] Sri Hari Nallamala, Pragnyaban Mishra, Suvarna Vani Koneru, “Pedagogy and Reduction of K-NN Algorithm for Filtering Samples in the Breast Cancer Treatment,” International Journal of Scientific & Technology Research (IJSTR), Vol. 8, Issue 11, November 2019, ISSN: 2277-8616.

[18] N B Naidu, Sri Hari Nallamala, Chukka Swarna Lalitha, Syed Seema Anjum, “Pertaining Formal Methods for Privacy Protection,” International Journal of Grid & Distributed Computing, Vol. 13, No. 1, March 2020, ISSN: 2005-4262.

[19] Kranthi Madala, Sushma Chowdary Polavarapu, Sri Hari Nallamala, “Automatic Signal Indication System through Helmet,” International Journal of Advanced Science and Technology, Vol. 29, No. 05, April/May 2020, ISSN: 2005-4238.

[20] Sri Hari Nallamala, D. Durga Prasad, J. Ranga Rajesh, Pragnaban Mishra, Sushma Chowdary P, “A Review on Applications, Early Successes & Challenges of Big Data in Modern Healthcare Management,” TEST Engineering and Management Journal, Vol. 83, Issue 3, May-June 2020, ISSN: 0193-4120.

[21] K B Prakash, Rama Krishna E, Nalluri Brahma Naidu, Sri Hari Nallamala, Pragyaban Mishra, P Dharani, “Accurate Hand Gesture Recognition using CNN and RNN Approaches,” International Journal of Advanced Trends in Computer Science and Engineering, Vol. 9, No. 3, May-June 2020, ISSN: 2278-3091.

[22] Sushma Chowdary P, Kranthi Madala, M Sailaja, Sri Hari Nallamala, “Investigation on IoT System Design & Its Components,” Journal of Advanced Research in Dynamical & Control Systems, Vol. 12, Issue 06, June 2020, ISSN: 1943-023X.

[23] Sri Hari Nallamala, Bajjuri Usha Rani, Anandarao S, Durga Prasad D, Pragnyaban Mishra, “A Brief Analysis of Collaborative and Content Based Filtering Algorithms used in Recommender Systems,” IOP Conference Series: Materials Science and Engineering, 981(2), 022008, December 2020, ISSN: 1757-899X.

[24] Manukonda Vinay, Gonugunta Bhanu Sankara Sai Venkatesh, Malempati Venkata Priyanka, Dogiparthi Venkata Sai, Sri Hari Nallamala, “Deep Learning Based Face Mask Detection for User Safety from Covid-19,” International Journal of Innovative Research in Computer and Communication Engineering (IJIRCCE), Volume 10, Issue 5, May 2022, e-ISSN: 2320-9801, p-ISSN: 2320-9798.

[25] P. Radha Vyshnavi, M.V.N. Sai Niharika, M. Summayya, P. Pravallika, Sri Hari Nallamala, “Liver Disease Prediction Using Machine Learning,” International Journal of Innovative Research in Science, Engineering and Technology (IJIRSET), Volume 11, Issue 6, June 2022, e-ISSN: 2319-8753, p-ISSN: 2320-6710.

[26] Y. Vineela Devi, T. Akshara, S. Mohitha, V. Venkatesh, N. Sri Hari, “Precision Farming by Analyzing Soil Moisture and NPK Using Machine Learning,” International Journal of Innovative Research in Science, Engineering and Technology (IJIRSET), Volume 11, Issue 6, June 2022, e-ISSN: 2319-8753, p-ISSN: 2320-6710.

[27] N. Sri Hari, M. Ramya Sri, Mythri P, N. Sai Harshitha, M. Venkata Naga Sai Kumar, “Detection of Covid-19 using Deep Learning,” IJFANS International Journal of Food and Nutritional Science, UGC CARE Listed (Group-I) Journal, Volume 11, Issue 12, December 2022, P-ISSN: 2319-1775, Online-ISSN: 2320-7876.

[28] N. Sri Hari, P. Vanaja, M. Ajay Kumar, M.D.V.S. Akash, K. Sivaiah, “Multi Disease Detection using Machine Learning,” IJFANS International Journal of Food and Nutritional Science, UGC CARE Listed (Group-I) Journal, Volume 11, Issue 12, December 2022, P-ISSN: 2319-1775, Online-ISSN: 2320-7876.

[29] N. Sri Hari, Shaik Nelofor, Siramdasu Leela Vardhan, Sura Rana Prathap Reddy, Sakhamuri Devendra, “CycleGAN Age Regressor,” International Journal for Innovative Engineering and Management Research, Volume 12, Issue 04, pp. 45-51, April 2023, ISSN: 2456-5083.

[30] Sudheer Mangalampalli, Ganesh Reddy Karri, Amit Gupta, Tulika Chakrabarti, Sri Hari Nallamala, Prasun Chakrabarti, Bhuvan Unhelkar, Martin Margala, “Fault-Tolerant Trust-Based Task Scheduling Algorithm Using Harris Hawks Optimization in Cloud Computing,” Sensors, 23(18), 8009, 2023, https://doi.org/10.3390/s23188009.

[31] K. Sudharson, …, Sri Hari Nallamala, et al., “Hybrid Quantum Computing and Decision Tree based Data Mining for Improved Data Security,” 7th International Conference on Computing, Communication, Control and Automation (ICCUBEA), IEEE, August 18-19, 2023.

[32] G Sanjay Gandhi, K Vikas, V Ratnam, K Suresh Babu, “Grid clustering and fuzzy reinforcement-learning based energy-efficient data aggregation scheme for distributed WSN,” IET Communications, 14(16), pp. 2840-2848.

[33] KV Prasad, GS Gandhi, S Balaji, “Inexpensive colour image segmentation by using mean shift algorithm and clustering,” International Journal of Graphics and Image Processing, 4(4), pp. 260-266.

[34] PSK Venkatraomaddumala, Sanjay Gandhi Gundabatini, P Anusha, “Classification of Cancer cells detection using Machine Learning Concepts,” International Journal of Advanced Science and Technology, 29(3), pp. 9177-9190.

[35] Sanjay Gandhi Gundabatini, Suresh Babu Kolluru, C. H. Vijayananda Ratnam, N. Nalini Krupa, “DAAM: WSN Data Aggregation Using Enhanced AI and ML Approaches,” conference paper, LNEE, Volume 976, first online 27 June 2023.

[36] S. G. Gundabatini, E. Rayachoti, R. Vedantham, “Recurrent Residual Puzzle based Encoder Decoder Network (R2-PED) model for retinal vessel segmentation,” Multimedia Tools and Applications, 2023, https://doi.org/10.1007/s11042-023-16765-0.

[37] S. G. Gundabatini, E. Rayachoti, R. Vedantham, “EU-net: An automated CNN based ebola U-net model for efficient medical image segmentation,” Multimedia Tools and Applications, 2024, https://doi.org/10.1007/s11042-024-18482-8.

Copyright

Copyright © 2024 Lalitha Rajeswari B, Hema Latha M, Karthikeya N, Mohana Venkata Raghuram N, Pavani P. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59111

Publish Date : 2024-03-18

ISSN : 2321-9653

Publisher Name : IJRASET
