Ijraset Journal For Research in Applied Science and Engineering Technology

Cloud Cover Segmentation and Motion Prediction from Satellite Imagery

Authors: Vaishali Savale, Jay Wanjare, Saket Waware, Yash Wagh, Aditya Yeole, Yuvraj Susatkar

DOI Link: https://doi.org/10.22214/ijraset.2024.62540

Abstract

This research project aims at the precise identification, segmentation, and motion prediction of cloud formations in satellite imagery. It uses U-Net, a well-established deep learning architecture for image segmentation, to address the difficult problem of detecting and segmenting clouds in remote sensing imagery. Automating cloud identification not only supports weather forecasting but also contributes to climate monitoring and environmental analysis. Long Short-Term Memory (LSTM) networks are incorporated for cloud motion prediction, providing insight into how cloud formations evolve over time. The robustness of the U-Net model, combined with its ability to capture intricate patterns and with the LSTM-based motion prediction, makes the system a valuable and comprehensive tool. The work contributes to more accurate and efficient cloud segmentation in satellite imagery, supporting progress in environmental research and meteorological applications.

I. INTRODUCTION

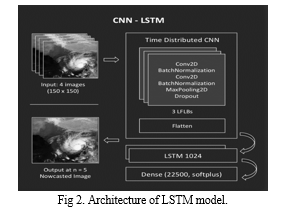

Satellite imagery is a cornerstone of Earth observation, providing information for applications ranging from environmental monitoring to disaster management. Accurately identifying cloud cover in satellite imagery is important because clouds directly affect the precision of downstream applications. To advance cloud cover segmentation and detection, this paper applies modern deep learning techniques. Specifically, Convolutional Neural Networks (CNNs) and Long Short-Term Memory networks (LSTMs) are integrated into a unified ConvLSTM framework to address the complexities of cloud identification.

Conventional cloud detection methods often struggle with the complex spatial and temporal patterns in satellite imagery. The proposed ConvLSTM model combines the spatial awareness of CNNs with the ability of LSTMs to capture temporal dependencies: convolutional layers extract hierarchical spatial features from the imagery, while the LSTM components preserve temporal context. This combination addresses the challenges posed by complex cloud formations and yields improved segmentation accuracy across a range of scenarios.

This research aims to improve the reliability and efficiency of cloud cover detection, contributing to the broader fields of remote sensing and Earth observation. Integrating CNN and LSTM components in the ConvLSTM model illustrates how advanced deep learning architectures can improve the precision of cloud segmentation in satellite imagery. Through these advances, the research seeks to extend the capabilities of remote sensing technologies and support a deeper understanding of Earth's dynamics.

II. LITERATURE REVIEW

[1]: This work describes a workflow that segments the image into superpixels, classifies ambiguous superpixels using a natural scene statistics (NSS) model, and uses Gabor features to distinguish cloudy from snowy areas. The method aims to detect clouds effectively, including small and thin clouds, and to separate them from snow-covered regions.

[2]: This work addresses cloud detection in high-resolution multispectral satellite imagery. It compares different methods using accuracy, precision, recall, specificity, and the Kappa coefficient, evaluated on test images with ground truth data. The proposed algorithm outperforms the other methods compared.

[3]: This work presents Cloud-Net, a deep learning-based method for cloud detection in remote sensing images. It highlights the limitations of existing methods and shows that Cloud-Net delivers superior cloud detection performance. The authors also introduce a modified dataset and report quantitative results comparing Cloud-Net with other methods.

[4]: This study uses data from multiple optical instruments, together with the CAL FIRE wildfire perimeter database, to generate training examples for a machine learning model. It assesses the performance of a modified U-Net trained on various combinations of spectral bands from the different instruments. The workflow covers preprocessing and data preparation, cleaning of the imagery and reference perimeter data, and generation of training, validation, and test datasets from the imagery and reference data.

[5]: This paper, presented at the 2019 11th International Scientific and Practical Conference on Electronics and Information Technologies (ELIT), develops a neural network approach for segmenting images of atmospheric clouds into multiple cloud classes. The proposed model, similar to U-Net, takes RGB images as input and returns segmented cloud images. The model is trained on a database of 1300 images and uses cluster analysis methods for segmentation. The paper also discusses the use of deep learning and of activation functions for optimizing the model.

[6]: Mahajan and Fataniya (2020), in their review ‘Cloud detection methodologies: Variants and development’, note that clouds are important for bringing rain but can hinder the study of the Earth's surface in satellite images. The proposed method for cloud detection is reported to have advantages over traditional rule-based methods. The review covers various threshold-based classical algorithms and machine learning techniques for cloud detection, with recommendations for improving accuracy. Satellite images from different sources have been used for cloud detection, and researchers have employed techniques such as shape attributes, fusion of multi-scale convolutional features, and color transformation.

[7]: This work evaluates different classification models for cloud detection in remote sensing. It compares a convolutional neural network (CS-CNN) with Random Forest (RF) in various scenarios, using the Heidke Skill Score (HSS), Probability of Detection (POD), False Alarm Ratio (FAR), and bias as evaluation metrics. The results show that CS-CNN outperforms RF in accuracy, speed, and robustness.
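The scores used in [7] are standard categorical verification measures computed from a 2x2 contingency table of predicted versus observed cloud pixels. The Python sketch below uses the common textbook definitions; the function name `contingency_scores` and its argument order are illustrative assumptions, not taken from [7].

```python
def contingency_scores(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Categorical verification scores from a 2x2 contingency table.

    Hypothetical helper using standard definitions:
    tp = hits (cloud predicted and observed), fp = false alarms,
    fn = misses, tn = correct negatives (clear predicted and observed).
    """
    n = tp + fp + fn + tn
    pod = tp / (tp + fn)           # Probability of Detection
    far = fp / (tp + fp)           # False Alarm Ratio
    bias = (tp + fp) / (tp + fn)   # frequency bias: predicted vs observed cloud count
    # Heidke Skill Score: proportion correct relative to the proportion expected by chance
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n
    hss = (tp + tn - expected) / (n - expected)
    return {"POD": pod, "FAR": far, "bias": bias, "HSS": hss}
```

For example, 50 hits, 10 false alarms, 5 misses, and 35 correct negatives give POD ≈ 0.91, FAR ≈ 0.17, bias ≈ 1.09, and HSS ≈ 0.69.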

[8]: The paper proposes a convolutional neural network (CNN) architecture for cloud segmentation in satellite imagery. The network is trained on a dataset called CloudPeru2, which includes various scenarios such as snow, ocean, and urban areas. The diversity of the dataset is verified using t-SNE visualization. The proposed CNN architecture is compared to four other cloud detection methods, and its performance is evaluated in terms of accuracy and loss. The results demonstrate that the proposed method performs well in cloud segmentation compared to the other methods.

[9]: The study introduces the RS-Net deep learning model for cloud detection in satellite imagery. The model is trained and evaluated on datasets from Landsat 8 Biome and SPARCS and achieves state-of-the-art performance, especially in snowy and icy regions. The RS-Net model improves cloud detection using only RGB bands, making it suitable for smaller satellites with limited capabilities. Training the model on data from an existing cloud masking method leads to increased performance. The classification time for a full Landsat 8 product using the largest RS-Net model is 18.0 ± 2.4 seconds, making it suitable for production environments.

[10]: This study uses clustering algorithms to segment satellite images and identify cloud formations. The results indicate that Meng Hee Heng K-Means gives a better cluster distribution for cloud types, while DBSCAN is faster and has a smaller data range. The clusters for each cloud type are presented visually, and the comparison shows that DBSCAN yields more specific and detailed results, particularly for small groups of clouds. The authors conclude that clustering methods, including DBSCAN, are effective for satellite image segmentation and play an important role in remote sensing imaging.

III. METHODOLOGY

Our methodology for cloud segmentation with deep learning proceeds in a series of steps. First, a custom dataset class, CloudDataset, assembles satellite image data in four bands (red, green, blue, and near-infrared) together with the corresponding ground truth masks. This dataset is the foundation for the model's ability to discern cloud patterns.

After the dataset is initialized from the paths to the band directories, an exploration phase follows: visualizing sample images alongside their ground truth masks reveals the characteristics of the dataset and guides model development. The dataset is then split into training and validation sets. The U-Net model, built from contracting and expanding blocks, is an architecture designed for semantic segmentation. Once initialized, the model is trained over multiple epochs with cross-entropy loss and the Adam optimizer, with accuracy tracked as a metric.

After training, the model's predictions are assessed with dedicated functions that convert its outputs into masks for qualitative comparison with the ground truth. A test set is intentionally excluded; the evaluation focuses on the training and validation processes. Together, these steps form a complete pipeline for cloud segmentation with deep learning.

A. Exploratory Data Analysis:

The methodology begins with creating and exploring a dataset tailored to cloud segmentation, implemented as the CloudDataset class. The class combines four satellite image bands (red, green, blue, and near-infrared) with the corresponding ground truth masks. The files belonging to each image patch are collected into a dictionary so that all bands and the mask can be accessed together in later steps.
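A minimal sketch of such a dataset class in PyTorch is shown below. The directory layout and file-naming scheme (a band prefix such as `red_` followed by a shared patch identifier), the method names, and the scaling of pixel values to [0, 1] are assumptions made for illustration; the paper does not specify them.

```python
from pathlib import Path

import numpy as np
import torch
from PIL import Image
from torch.utils.data import Dataset


class CloudDataset(Dataset):
    """Four-band cloud segmentation dataset (sketch with an assumed file layout).

    Each band lives in its own directory, and files for one patch share an
    identifier after a band prefix: red_<id>, green_<id>, blue_<id>, nir_<id>,
    with the ground truth mask stored as gt_<id>.
    """

    BANDS = ("red", "green", "blue", "nir")

    def __init__(self, red_dir, green_dir, blue_dir, nir_dir, gt_dir):
        dirs = {"red": Path(red_dir), "green": Path(green_dir), "blue": Path(blue_dir),
                "nir": Path(nir_dir), "gt": Path(gt_dir)}
        # One dictionary per patch, mapping each band name (and "gt") to its file path.
        self.files = []
        for red_file in sorted(dirs["red"].glob("red_*")):
            patch_id = red_file.name[len("red_"):]
            self.files.append({name: d / f"{name}_{patch_id}" for name, d in dirs.items()})

    def __len__(self):
        return len(self.files)

    @staticmethod
    def _read(path):
        with Image.open(path) as img:
            return np.array(img)

    def open_as_array(self, idx):
        # Stack the four bands into a (4, H, W) array; scale integer pixels to [0, 1].
        raw = np.stack([self._read(self.files[idx][b]) for b in self.BANDS], axis=0)
        scale = np.iinfo(raw.dtype).max if np.issubdtype(raw.dtype, np.integer) else 1.0
        return raw.astype(np.float32) / scale

    def open_mask(self, idx):
        # Treat any non-zero ground truth pixel as cloud (1), everything else as clear (0).
        return (self._read(self.files[idx]["gt"]) > 0).astype(np.int64)

    def __getitem__(self, idx):
        x = torch.from_numpy(self.open_as_array(idx))
        y = torch.from_numpy(self.open_mask(idx))
        return x, y
```

Each item is a `(4, H, W)` float tensor paired with an `(H, W)` integer mask, which is the layout a cross-entropy segmentation loss expects.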

Once the dataset is initialized from the paths to the band directories, it is explored. Visualizing a sample image alongside its ground truth mask is key to understanding the characteristics of the dataset, and helps identify challenges and patterns relevant to training the U-Net model. The dataset is then split into training and validation sets with PyTorch's random_split function; this partition makes it possible both to train the model and to evaluate it on data it was not trained on. Both sets are then wrapped in PyTorch DataLoader instances, which handle batching during model training.

To examine the dataset more closely, a single example is retrieved from the CloudDataset class, and its input image and ground truth mask are visualized side by side to illustrate the characteristics of the data.

With the training and validation sets split and their DataLoader instances created, a batch drawn from the training DataLoader is ready for use in the U-Net training phase.
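The splitting and batching steps can be sketched with PyTorch's `random_split` and `DataLoader`. To keep the example self-contained, a `TensorDataset` of random tensors stands in for the CloudDataset; the 80/20 split ratio, batch size, and patch size are assumed values, not the paper's.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, random_split

# Stand-in dataset: 100 random 4-band 64x64 patches with binary masks (placeholder data).
images = torch.rand(100, 4, 64, 64)
masks = torch.randint(0, 2, (100, 64, 64))
dataset = TensorDataset(images, masks)

# Split into training and validation subsets (assumed 80/20 ratio).
n_train = int(0.8 * len(dataset))
train_ds, valid_ds = random_split(
    dataset, [n_train, len(dataset) - n_train],
    generator=torch.Generator().manual_seed(42),  # reproducible split
)

# DataLoaders handle batching; only the training loader shuffles.
train_dl = DataLoader(train_ds, batch_size=12, shuffle=True)
valid_dl = DataLoader(valid_ds, batch_size=12, shuffle=False)

xb, yb = next(iter(train_dl))
print(xb.shape, yb.shape)  # torch.Size([12, 4, 64, 64]) torch.Size([12, 64, 64])
```

Fixing the generator seed makes the split reproducible across runs, so validation results stay comparable between experiments.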

B. Model Development – Cloud Segmentation:

For cloud segmentation, the methodology centers on building and training a U-Net model, an architecture widely used for semantic segmentation. The model, implemented in the UNET class, consists of contracting and expanding blocks arranged to segment clouds in satellite images.

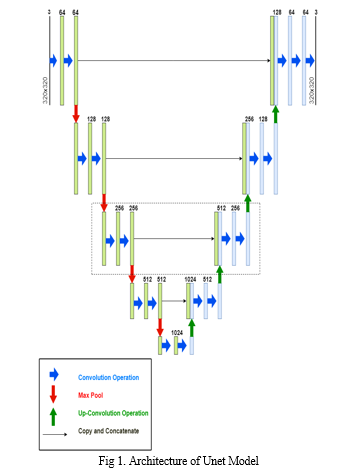

1. UNET Architecture:

The U-Net architecture is designed for semantic segmentation, the task of classifying every pixel of an image. Its contracting path uses convolutional layers to capture hierarchical features of the input image, while pooling operations progressively downsample the feature maps. This phase extracts the high-level features needed to recognize intricate patterns such as cloud formations. Originally designed for biomedical image segmentation, U-Net has proven versatile and effective in many segmentation tasks, including cloud segmentation. Its main components are as follows:

a. Contracting Path (Encoder): The U-Net architecture begins with a contracting path, often called the encoder. This path consists of a series of convolutional layers, each followed by batch normalization, which stabilizes training, and a rectified linear unit (ReLU) activation, which introduces non-linearity. Max-pooling operations with a 3x3 kernel and a stride of 2 downsample the feature maps, so that successive layers capture increasingly high-level features of the input image.

b. Bottleneck: The contracting path ends in a bottleneck layer, where the high-level features are combined. This layer holds the most compressed representation of the input image and captures its essential semantic information.

c. Expanding Path (Decoder): The expanding path, or decoder, uses transposed convolutions (sometimes called deconvolutions) to upsample the feature maps and reconstruct a full-resolution segmentation that incorporates spatial context. Feature maps from the contracting path are concatenated into the decoder through skip connections, so that fine-grained details are preserved during upsampling. Each transposed convolutional layer is followed by batch normalization and a ReLU activation.

d. Output Layer: The final layer of the U-Net is a 1x1 convolutional layer, producing the segmentation mask. Sigmoid or softmax activation functions are commonly used to generate pixel-wise probabilities for binary or multi-class segmentation.
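Components a to d can be sketched as a small PyTorch module. This is not the authors' UNET class: the depth (two pooling levels), the channel widths, and the two-class output (cloud and clear, paired with a cross-entropy loss that applies softmax internally) are assumptions. It follows the description above in using 3x3 max-pooling with stride 2, batch normalization and ReLU after each convolution and transposed convolution, skip connections by concatenation, and a final 1x1 convolution.

```python
import torch
from torch import nn


def double_conv(in_ch: int, out_ch: int) -> nn.Sequential:
    # Two 3x3 convolutions, each followed by batch normalization and ReLU.
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, 3, padding=1), nn.BatchNorm2d(out_ch), nn.ReLU(inplace=True),
        nn.Conv2d(out_ch, out_ch, 3, padding=1), nn.BatchNorm2d(out_ch), nn.ReLU(inplace=True),
    )


def up_block(in_ch: int, out_ch: int) -> nn.Sequential:
    # Transposed convolution doubles H and W, followed by batch normalization and ReLU.
    return nn.Sequential(
        nn.ConvTranspose2d(in_ch, out_ch, 2, stride=2), nn.BatchNorm2d(out_ch), nn.ReLU(inplace=True),
    )


class UNet(nn.Module):
    """Minimal U-Net sketch (hypothetical widths and depth)."""

    def __init__(self, in_channels: int = 4, n_classes: int = 2, widths=(32, 64, 128)):
        super().__init__()
        w1, w2, w3 = widths
        # 3x3 max-pooling with stride 2 halves even spatial sizes.
        self.pool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.enc1 = double_conv(in_channels, w1)   # contracting path, level 1
        self.enc2 = double_conv(w1, w2)            # contracting path, level 2
        self.bottleneck = double_conv(w2, w3)      # most compressed representation
        self.up2 = up_block(w3, w2)
        self.dec2 = double_conv(2 * w2, w2)        # upsampled + skip features
        self.up1 = up_block(w2, w1)
        self.dec1 = double_conv(2 * w1, w1)
        self.head = nn.Conv2d(w1, n_classes, kernel_size=1)  # 1x1 output layer

    def forward(self, x):
        s1 = self.enc1(x)                                     # (B, w1, H, W)
        s2 = self.enc2(self.pool(s1))                         # (B, w2, H/2, W/2)
        b = self.bottleneck(self.pool(s2))                    # (B, w3, H/4, W/4)
        d2 = self.dec2(torch.cat([self.up2(b), s2], dim=1))   # skip connection
        d1 = self.dec1(torch.cat([self.up1(d2), s1], dim=1))  # skip connection
        return self.head(d1)                                  # (B, n_classes, H, W) logits
```

For example, `UNet()(torch.rand(2, 4, 64, 64))` returns logits of shape `(2, 2, 64, 64)`. In this sketch the input height and width should be multiples of 4 so that the two pooling steps and the two upsampling steps line up for the skip connections.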

A distinctive feature of U-Net is the set of skip connections between the contracting and expanding paths. These connections carry information across scales, allowing the model to retain fine details through the downsampling and upsampling phases. The contracting path followed by the expanding path gives the network its U shape, which lets the model combine high-level semantic information with fine spatial detail.

The bottleneck compresses high-level features into a concise representation, which may help the model generalize when data are limited. U-Net is also known to work well with limited labeled data, since the skip connections help the model learn meaningful representations from relatively small datasets. The combination of convolutional and transposed convolutional operations with skip connections allows U-Net to localize features precisely in the segmented output, which matters in cloud segmentation, where capturing spatial relationships is essential.

Compared with other segmentation models such as DeepLab, SegNet, and FCN, U-Net is often favored for its simplicity, its suitability for small datasets, and its emphasis on preserving fine details through skip connections. While other models may excel in certain contexts, U-Net's design makes it a robust choice for cloud segmentation, balancing feature extraction with the preservation of intricate details.

The UNET model is initialized and subjected to a training phase implemented through a dedicated training loop. This loop utilizes a specified loss function (cross-entropy loss), optimizer (Adam), and accuracy metric. The training is executed over multiple epochs, allowing the model to iteratively learn and refine its segmentation capabilities. The progression of the training process is visualized through plots of training and validation losses.
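A training loop of this kind might look like the following sketch. Cross-entropy loss, the Adam optimizer, and an accuracy metric come from the text; the `train` and `pixel_accuracy` names, the per-epoch averaging, the learning rate, and the tiny placeholder model and random data in the usage section are assumptions chosen so that the example runs on its own.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def pixel_accuracy(logits: torch.Tensor, target: torch.Tensor) -> float:
    # Fraction of pixels whose most probable class matches the ground-truth label.
    return (logits.argmax(dim=1) == target).float().mean().item()


def train(model, train_dl, valid_dl, loss_fn, optimizer, acc_fn, epochs=1):
    """Train for `epochs` epochs; return per-epoch losses and validation accuracy."""
    history = {"train_loss": [], "valid_loss": [], "valid_acc": []}
    for _ in range(epochs):
        model.train()
        total = 0.0
        for x, y in train_dl:
            optimizer.zero_grad()
            loss = loss_fn(model(x), y)
            loss.backward()
            optimizer.step()
            total += loss.item() * x.size(0)  # weight the batch mean by batch size
        history["train_loss"].append(total / len(train_dl.dataset))

        model.eval()
        v_loss = v_acc = 0.0
        with torch.no_grad():  # no gradients needed for validation
            for x, y in valid_dl:
                out = model(x)
                v_loss += loss_fn(out, y).item() * x.size(0)
                v_acc += acc_fn(out, y) * x.size(0)
        n_val = len(valid_dl.dataset)
        history["valid_loss"].append(v_loss / n_val)
        history["valid_acc"].append(v_acc / n_val)
    return history


def random_patches(n: int) -> TensorDataset:
    # Placeholder data: random 4-band 16x16 patches with random binary masks.
    return TensorDataset(torch.rand(n, 4, 16, 16), torch.randint(0, 2, (n, 16, 16)))


# Usage with a placeholder model; substitute the U-Net and the CloudDataset loaders.
torch.manual_seed(0)
train_dl = DataLoader(random_patches(16), batch_size=4, shuffle=True)
valid_dl = DataLoader(random_patches(8), batch_size=4)
model = nn.Conv2d(4, 2, kernel_size=1)  # 4 input bands -> 2 class logits per pixel
history = train(model, train_dl, valid_dl, nn.CrossEntropyLoss(),
                torch.optim.Adam(model.parameters(), lr=1e-3), pixel_accuracy, epochs=3)
```

`nn.CrossEntropyLoss` accepts logits of shape `(B, C, H, W)` with integer masks of shape `(B, H, W)`, so a segmentation model can be trained with it directly.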

Finally, the trained model is applied to a batch of data, and the input image, ground truth mask, and predicted mask are displayed together. These predictions illustrate how well the U-Net captures cloud structures. Overall, the process combines data preparation, model development, and evaluation into a complete cloud segmentation pipeline.

During training, the training and validation losses are tracked and visualized for every epoch, which is essential for assessing the learning progress of the U-Net model. The training loop iteratively updates the model's parameters to minimize the cross-entropy loss, and the training loss indicates how well the model fits the training data. The validation loss is computed on the validation set, which is not used to update the parameters, and indicates how well the model generalizes to unseen data. Plotting the two losses shows whether the model is converging and helps identify problems such as overfitting or underfitting.
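Plotting the recorded losses takes only a few lines of Matplotlib. The sketch below assumes the per-epoch losses are available as two Python lists (for example, the histories returned by a training loop); the function name `plot_losses` is hypothetical.

```python
import matplotlib

matplotlib.use("Agg")  # render without a display; omit this line in a notebook
import matplotlib.pyplot as plt


def plot_losses(train_loss, valid_loss):
    """Plot per-epoch training and validation losses on one set of axes."""
    epochs = range(1, len(train_loss) + 1)
    fig, ax = plt.subplots(figsize=(6, 4))
    ax.plot(epochs, train_loss, label="training loss")
    ax.plot(epochs, valid_loss, label="validation loss")
    ax.set_xlabel("epoch")
    ax.set_ylabel("cross-entropy loss")
    ax.legend()
    return fig
```

The returned figure can be shown interactively or written to disk with `fig.savefig(...)`.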

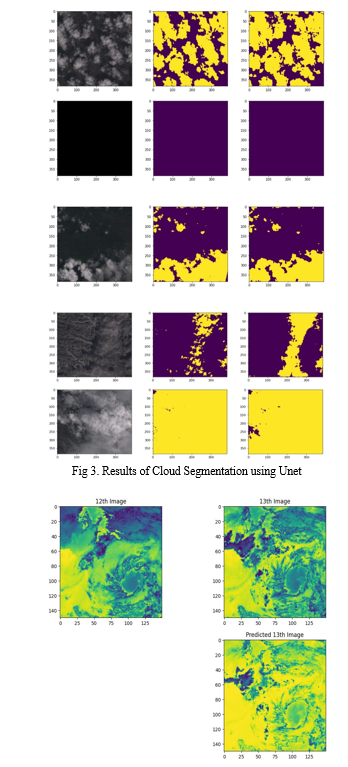

After training, the model is evaluated by making predictions on a batch of data. The predb_to_mask function converts the model's output probabilities into binary masks, allowing direct comparison with the ground truth masks. The visualized results show the input satellite images, the corresponding ground truth masks, and the masks predicted by the U-Net model. This qualitative assessment allows a detailed examination of the model's segmentation capabilities: placing the predicted and ground truth masks side by side makes both agreements and discrepancies readily apparent, and helps gauge the model's reliability in real-world cloud segmentation scenarios.
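The paper does not list predb_to_mask, so the following is a sketch under stated assumptions: the model outputs a batch of two-class logits of shape (batch, classes, height, width), and the function takes the batch and a sample index. Softmax turns the logits into per-pixel probabilities, and taking the most probable class at each pixel yields the binary mask.

```python
import torch
import torch.nn.functional as F


def predb_to_mask(predb: torch.Tensor, idx: int) -> torch.Tensor:
    """Return the (H, W) class mask for sample `idx` of a batch of logits.

    Assumed input shape: (batch, n_classes, H, W). With two classes the
    result is a binary mask (1 = cloud, 0 = clear).
    """
    probs = F.softmax(predb[idx], dim=0)  # per-pixel class probabilities
    return probs.argmax(dim=0).cpu()      # most probable class at each pixel
```

Because softmax preserves the ordering of the logits, the argmax alone would give the same mask; the softmax step is useful mainly when the probabilities themselves are inspected.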

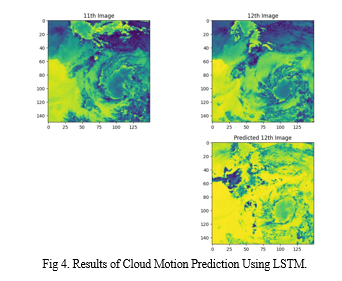

IV. RESULTS AND DISCUSSIONS

The cloud segmentation model was trained for 50 epochs, during which it progressively refined its segmentation capabilities, and it reached an accuracy of 96%.

Training and validation losses were tracked and visualized throughout training to monitor convergence, confirm that learning was effective, and check how well the model generalizes to data not used for training.

An accuracy of 96% indicates that the model delineates cloud regions within satellite images effectively and suggests that it can discern complex patterns and variations in cloud cover in this dataset. These results demonstrate a successful implementation of the U-Net architecture for cloud segmentation and point to its potential for applications such as meteorological studies, climate modeling, and satellite image analysis. Reaching this accuracy after 50 epochs shows that the model can learn the nuances of cloud patterns in the provided dataset.

V. FUTURE WORK

The model can be extended in several directions, some of which are outlined below. More advanced neural network architectures for semantic segmentation could be explored: newer architectures may outperform U-Net, as may U-Net variants that add attention mechanisms or additional layers for enhanced feature extraction.

The model could be extended to multi-class segmentation that identifies and classifies different cloud types (e.g., cirrus, cumulus, stratus), giving more detailed insight into cloud cover patterns. Weather pattern recognition could be integrated so that the model not only segments clouds but also identifies and categorizes larger weather systems or phenomena, such as storms, fronts, or atmospheric disturbances. Another direction is to include geospatial information, such as elevation, land cover, or other geographic features, to refine cloud segmentation using contextual information.

Conclusion

In conclusion, the U-Net model has proven to be a robust and effective solution for cloud segmentation in satellite imagery. Through its combination of contracting and expanding blocks, the U-Net architecture captures intricate patterns and delineates cloud regions with high accuracy. Training for 50 epochs yielded an accuracy of 96%, highlighting the model's capacity to learn and to generalize to data not seen during training. The U-Net design is built for semantic segmentation, which makes it well suited to complex tasks such as cloud cover analysis. Careful exploration of the dataset, its split into training and validation sets, and the creation of DataLoader instances together form a complete and effective training pipeline. Visualizing training progress gives a clear view of the model's convergence and confirms that learning is effective. The prediction and visualization phase further confirms the model's proficiency, providing qualitative insight into its segmentation capabilities through comparisons of input images, ground truth masks, and predicted masks. While the current project has demonstrated promising results in cloud segmentation, the field continues to evolve and calls for further exploration. Future work could incorporate advanced features, multi-sensor integration, and real-time processing to extend the model's applicability across diverse environmental conditions. With its strong performance in cloud segmentation, the U-Net model is a promising approach for the growing field of satellite image analysis and environmental monitoring.

References

[1] Deng, C., Li, Z., Wang, W., Wang, S., Tang, L. and Bovik, A.C., 2018. Cloud detection in satellite images based on natural scene statistics and Gabor features. IEEE Geoscience and Remote Sensing Letters, 16(4), pp.608-612.

[2] Morales, G., Huamán, S.G. and Telles, J., 2018. Cloud detection in high-resolution multispectral satellite imagery using deep learning. In Artificial Neural Networks and Machine Learning–ICANN 2018: 27th International Conference on Artificial Neural Networks, Rhodes, Greece, October 4-7, 2018, Proceedings, Part III 27 (pp. 280-288). Springer International Publishing.

[3] Mohajerani, S. and Saeedi, P., 2019, July. Cloud-Net: An end-to-end cloud detection algorithm for Landsat 8 imagery. In IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium (pp. 1029-1032). IEEE.

[4] Rashkovetsky, D., Mauracher, F., Langer, M. and Schmitt, M., 2021. Wildfire detection from multisensor satellite imagery using deep semantic segmentation. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 14, pp.7001-7016.

[5] Rusyn, B., Korniy, V., Lutsyk, O. and Kosarevych, R., 2019, September. Deep learning for atmospheric cloud image segmentation. In 2019 XIth International Scientific and Practical Conference on Electronics and Information Technologies (ELIT) (pp. 188-191). IEEE.

[6] Mahajan, S. and Fataniya, B., 2020. Cloud detection methodologies: Variants and development—A review. Complex & Intelligent Systems, 6, pp.251-261.

[7] Drönner, J., Korfhage, N., Egli, S., Mühling, M., Thies, B., Bendix, J., Freisleben, B. and Seeger, B., 2018. Fast cloud segmentation using convolutional neural networks. Remote Sensing, 10(11), p.1782.

[8] Morales, G., Ramírez, A. and Telles, J., 2019, August. End-to-end cloud segmentation in high-resolution multispectral satellite imagery using deep learning. In 2019 IEEE XXVI International Conference on Electronics, Electrical Engineering and Computing (INTERCON) (pp. 1-4). IEEE.

[9] Jeppesen, J.H., Jacobsen, R.H., Inceoglu, F. and Toftegaard, T.S., 2019. A cloud detection algorithm for satellite imagery based on deep learning. Remote Sensing of Environment, 229, pp.247-259.

[10] Harsono, T. and Basuki, A., 2018, October. Cloud satellite image segmentation using Meng Hee Heng K-means and DBSCAN clustering. In 2018 International Electronics Symposium on Knowledge Creation and Intelligent Computing (IES-KCIC) (pp. 367-371). IEEE.

Copyright

Copyright © 2024 Vaishali Savale, Jay Wanjare, Saket Waware, Yash Wagh, Aditya Yeole, Yuvraj Susatkar. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET62540

Publish Date : 2024-05-23

ISSN : 2321-9653

Publisher Name : IJRASET
