IJRASET Journal for Research in Applied Science and Engineering Technology

A Review of CNN-Based Object Recognition and Tracking Systems for Visually Impaired People

Authors: Akshay Dhumal, Divya Gharate, Samruddhi Patil, Atharva Shinde, Prof. S. G. Chordiya

DOI Link: https://doi.org/10.22214/ijraset.2024.64986


Abstract

Visually impaired persons (VIPs) face significant challenges in navigating their environments, which limits their independence and quality of life. This paper reviews recent advances in assistive technologies aimed at enhancing mobility and safety for VIPs. It examines wearable devices, object detection technologies, and navigation aids, highlighting their functionality, limitations, and potential for future development. The integration of machine learning and sensor technologies has produced solutions that provide real-time feedback and improved spatial awareness. VIPs need assistance with daily tasks such as object detection, obstacle recognition, and navigation in both indoor and outdoor environments, and the paper emphasizes the importance of ensuring their safety. Devices typically gather environmental data with infrared, ultrasonic, or image sensors, process it with machine learning techniques, and deliver feedback to the user through audio or vibration. The paper provides a comparative analysis of assistive devices, discussing their key attributes, challenges, and limitations, together with a score-based evaluation to help select appropriate devices for specific needs.


I. INTRODUCTION

The World Health Organization (WHO) has estimated that 285 million people worldwide are visually impaired, 39 million of whom are blind [2]; more recent WHO figures, which use a broader definition that includes near-vision impairment, put the number at around 2.2 billion people. Traditional aids such as white canes and guide dogs are limited in range, functionality, and practicality, which makes it important to develop advanced assistive technologies. Modern solutions combine machine learning, computer vision, and sensor integration to provide object detection, distance estimation, and real-time feedback, often through wearable devices.

These devices assist VIPs with object detection, navigation, and daily activities, typically using infrared sensors, ultrasonic sensors, or cameras to gather environmental data. The paper introduces the essential attributes these devices require, such as reliability and efficient feedback mechanisms. A score-based analysis evaluates each device's performance on real-time processing, accuracy, and adaptability to indoor and outdoor environments. The analysis shows that while many devices perform well in specific areas, no single device meets all the ideal requirements, which points to the need for further development.
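A score-based analysis of this kind can be sketched as a weighted sum of per-criterion ratings, normalized to a percentage of the maximum attainable score. The criterion names below follow the paragraph above; the 0 to 5 rating scale, the equal weights, and the example ratings are hypothetical assumptions, not the paper's actual scoring scheme.

```python
from typing import Dict

# Hypothetical criteria and weights; the paper does not publish its weighting.
CRITERIA_WEIGHTS: Dict[str, float] = {
    "real_time_processing": 1.0,
    "accuracy": 1.0,
    "indoor_outdoor_adaptability": 1.0,
}
MAX_RATING = 5  # assumed 0..5 rating scale for every criterion


def device_score(ratings: Dict[str, float],
                 weights: Dict[str, float] = CRITERIA_WEIGHTS) -> float:
    """Return the weighted score as a percentage of the maximum attainable score."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name in weights:
        if not 0 <= ratings[name] <= MAX_RATING:
            raise ValueError(f"rating for {name} must be in [0, {MAX_RATING}]")
    achieved = sum(weights[name] * ratings[name] for name in weights)
    attainable = sum(weights.values()) * MAX_RATING
    return 100.0 * achieved / attainable


if __name__ == "__main__":
    # Illustrative ratings for a hypothetical device, not data from the paper.
    example = {"real_time_processing": 4, "accuracy": 3, "indoor_outdoor_adaptability": 2}
    print(f"{device_score(example):.1f}%")
```

Changing the weights lets the same ratings be re-ranked for a particular user's priorities, for example weighting outdoor adaptability more heavily for someone who mainly needs street navigation.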

A. Problem Statement

Visually impaired individuals face significant challenges in navigating their environment safely and independently. Traditional assistive tools provide limited real-time feedback and lack comprehensive features for object recognition and location tracking.

B. Objectives

- Object Detection Accuracy: Improve the accuracy of object detection for visually impaired individuals.

- Real-Time Navigation Assistance: Provide real-time navigation feedback to help users move safely and independently.

- Safety and Tracking Features: Include safety and tracking features to enhance user confidence in navigating various environments.

II. LITERATURE SURVEY

A. Wearable Devices

Recent advances have led to wearable devices that integrate object detection and distance estimation. These devices often use lightweight, portable hardware such as the Raspberry Pi with a camera module to identify objects and provide auditory or haptic feedback about their proximity [4]. For example, smart glasses equipped with such modules can alert users to obstacles in real time, enhancing their spatial awareness and autonomy without requiring hands-on interaction.
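As a minimal sketch of how a camera-only wearable might turn a detection into proximity feedback, the code below assumes the pinhole-camera (similar-triangles) approximation: distance ≈ focal length in pixels × real object height / bounding-box height in pixels. The object heights, focal length, and alert thresholds are hypothetical values for illustration; a real device would calibrate them.

```python
from dataclasses import dataclass

# Hypothetical real-world heights (metres) for a few object classes.
KNOWN_HEIGHTS_M = {"person": 1.7, "chair": 0.9, "car": 1.5}


@dataclass
class Detection:
    label: str
    box_height_px: float  # height of the detector's bounding box in pixels


def estimate_distance_m(det: Detection, focal_length_px: float) -> float:
    """Pinhole approximation D = f * H_real / h_pixels.

    Raises KeyError for classes without a known height.
    """
    if det.box_height_px <= 0:
        raise ValueError("bounding-box height must be positive")
    return focal_length_px * KNOWN_HEIGHTS_M[det.label] / det.box_height_px


def feedback_for(distance_m: float) -> str:
    """Map a distance to a coarse feedback level (thresholds are assumptions)."""
    if distance_m < 1.0:
        return "strong vibration"
    if distance_m < 3.0:
        return "short beep"
    return "none"


if __name__ == "__main__":
    det = Detection(label="person", box_height_px=510.0)
    d = estimate_distance_m(det, focal_length_px=600.0)  # assumed calibration
    print(f"{det.label} at ~{d:.1f} m -> {feedback_for(d)}")
```

The estimate is only as good as the assumed object height and an upright, unoccluded bounding box, which is one reason camera-only systems are described as weak at precise distance estimation.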

B. Object Detection Systems

Object detection remains a cornerstone of assistive technologies for VIPs, enabling the recognition of obstacles and landmarks in their environment. Convolutional neural networks (CNNs) have proven highly effective in this domain, enabling accurate real-time visual analysis [5]. Architectures such as MobileNet have been optimized for low-power devices, making them suitable for portable applications. However, while CNN-based systems excel at identifying objects, they often struggle with precise distance estimation, which is essential for safe navigation [6].
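Much of MobileNet's suitability for low-power devices comes from replacing standard convolutions with depthwise separable ones: a per-channel depthwise convolution followed by a 1×1 pointwise convolution. The sketch below counts multiply-accumulate operations (MACs) for a single layer using the cost formulas from the original MobileNet paper (stride 1, same padding); the layer dimensions in the example are illustrative only.

```python
def standard_conv_macs(k: int, m: int, n: int, f: int) -> int:
    """MACs for a k x k standard convolution, M input and N output channels, F x F map."""
    return k * k * m * n * f * f


def depthwise_separable_macs(k: int, m: int, n: int, f: int) -> int:
    """Depthwise k x k convolution (k*k*M*F*F) plus pointwise 1x1 convolution (M*N*F*F)."""
    return k * k * m * f * f + m * n * f * f


if __name__ == "__main__":
    # Illustrative layer: 3x3 kernel, 256 -> 256 channels, 14x14 feature map.
    k, m, n, f = 3, 256, 256, 14
    std = standard_conv_macs(k, m, n, f)
    sep = depthwise_separable_macs(k, m, n, f)
    print(f"standard: {std:,} MACs, separable: {sep:,} MACs, ratio {sep / std:.3f}")
    # The ratio equals 1/N + 1/k^2; for 3x3 kernels and many output channels
    # this is about 8 to 9 times fewer MACs than a standard convolution.
```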

C. Navigation Aids

Navigation aids have evolved from simple obstacle detectors into sophisticated systems that use multi-sensor fusion. By combining data from ultrasonic sensors, LiDAR, cameras, and GPS modules, these systems can build detailed maps of the user's surroundings, enabling smooth navigation through complex environments [7], [8]. Integrating artificial intelligence (AI) allows these devices to learn adaptively, improving their obstacle detection and decision-making over time [9]. This evolution significantly enhances the user's ability to move safely and independently.
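Two building blocks of multi-sensor navigation aids can be sketched briefly: converting an ultrasonic echo time into a distance (d = v·t/2, halved because the pulse travels out and back), and fusing independent distance estimates by inverse-variance weighting. Inverse-variance weighting is just one simple fusion rule, chosen here for illustration (Kalman filters are a common generalization). The speed of sound (343 m/s, air at about 20 °C), the echo time, and the sensor variances are assumptions.

```python
from typing import List, Tuple

SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees C


def ultrasonic_distance_m(echo_time_s: float) -> float:
    """Convert a round-trip echo time to a one-way distance: d = v * t / 2."""
    return SPEED_OF_SOUND_M_S * echo_time_s / 2.0


def fuse_estimates(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of (distance, variance) pairs.

    Returns the fused distance and its variance, assuming the sensor
    errors are independent and unbiased.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused = sum(w * d for w, (d, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    sonar = ultrasonic_distance_m(0.0117)  # hypothetical echo time, about 2.0 m
    camera = 2.3                           # hypothetical camera-based estimate (m)
    fused, var = fuse_estimates([(sonar, 0.01), (camera, 0.09)])  # assumed variances
    print(f"sonar {sonar:.2f} m, camera {camera:.2f} m, fused {fused:.2f} m (var {var:.4f})")
```

The fused estimate leans toward the lower-variance sensor and has a smaller variance than either input, which is the basic payoff of combining sensors.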

D. Hybrid Devices

These devices combine object detection and navigation functions to offer more comprehensive assistance. They use both vision-based and non-vision-based sensors, and some also incorporate GPS [7]. For instance, one such project integrates a stereoscopic camera and GPS to provide both navigation and obstacle detection.
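A stereoscopic camera recovers depth from disparity: for a rectified image pair, Z = f·B/d, where f is the focal length in pixels, B the baseline between the two cameras, and d the horizontal disparity of a matched point. The sketch below applies this relation to a few disparities; the focal length and baseline are hypothetical, and computing the disparity map itself (for example with OpenCV's StereoBM or StereoSGBM matchers) is not shown.

```python
def stereo_depth_m(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at (or beyond) the measurable range.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_resolution_m(depth_m: float, focal_length_px: float, baseline_m: float,
                       disparity_step_px: float = 1.0) -> float:
    """Approximate depth change for one disparity step: dZ ~ Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_step_px / (focal_length_px * baseline_m)


if __name__ == "__main__":
    f_px, baseline = 700.0, 0.12  # hypothetical camera parameters
    for d in (84.0, 42.0, 21.0):
        z = stereo_depth_m(d, f_px, baseline)
        step = depth_resolution_m(z, f_px, baseline)
        print(f"disparity {d:5.1f} px -> depth {z:.2f} m, ~{step:.2f} m per pixel of disparity")
```

Because depth resolution degrades with the square of distance, stereo cameras are most reliable for nearby obstacles, which is one reason hybrid devices pair them with other sensors such as GPS.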

E. ADL (Activities of Daily Living) Devices

These devices support daily tasks such as currency recognition, reading text, and identifying objects in the user's immediate surroundings [7]. Technologies in this category often use portable, wearable designs, which makes them more accessible for everyday use.

Table I: Literature Survey

| Sr. No | Title | Author | Publication and Year | Pros |
|---|---|---|---|---|
| 1 | CNN-Based Object Recognition and Tracking System to Assist Visually Impaired People | F. Ashiq et al. | IEEE Access, 2022 | |
| 2 | Real-Time Image and Video Processing Techniques in Assistive Technology | M. Asif | Journal of Computer Science, 2016 | lighting and occlusions |
| 3 | Integrating Web-Engineering with Assistive Technologies for Improved User Experience | S. Zafar | Web Engineering Journal, 2020 | |
| 4 | Towards Assisting Visually Impaired: A Review on Techniques for Decoding the Visual Data From Chart Images | K. C. Shakira, A. Lijiya | IEEE Access, 2021 | |
| 5 | Obstruction Avoidance Through Object Detection and Classification | Usman Masud et al. | IEEE Access, 2022 | machine learning techniques for obstruction avoidance |
| 6 | Assistant for Visually Impaired using Computer Vision | Pratik Vyavahare, Syed Habeeb | Journal of Computer Vision, 2019 | |
| 7 | Wearable Vision Assistance System Based on Binocular Sensors for Visually Impaired User | Bin Jiang et al. | 2019 | |
| 8 | Be My Eyes: Android App for Visually Impaired People | Rucha Doiphode et al. | 2017 | volunteers for real-time help |
| 9 | Depth Detection for Assistance in Navigation for Visually Impaired | A. Kumar | Journal of Computer Engineering, 2020 | |

III. PROPOSED SYSTEM OVERVIEW

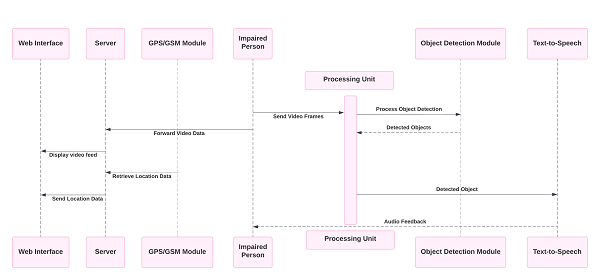

The primary objective of CNN-based assistive systems is to recognize objects and pro- vide audio feedback to VIPs in real time. Many of these systems use Mobile-Net, a CNN architecture designed for mobile and embedded devices. The system architecture typically consists of a camera for input, a CNN for object recognition, and a text-to-speech engine for delivering audio feedback to users. This section elaborates on the technical components of such systems. The application helps blind people to walk alone, and they can move anywhere without anyone’s help. Normal people can register the images in their minds, but blind people are not able to identify with their vision. As we have mentioned in the existing system, many applications are there that are not in sequence and cannot be used easily by blind people. So, we have developed an application that manages the handling of difficulties faced by blind people in identifying reading, directing the location, object detection, setting reminders, and identifying trusted people by connecting with each individual. To solve those difficulties, this model provides a good and efficient solution.

Fig. 1. Proposed System Architecture for Assistive Technology
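The camera, CNN, and text-to-speech architecture described above can be sketched as a small pipeline class. The detector and speech engine are passed in as plain callables, so the sketch runs without MobileNet or any TTS library; the class name, the 0.5 confidence threshold, the 3-second repeat cooldown, and the "<label> ahead" message format are all hypothetical choices, not details from the paper.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class Detection:
    label: str
    confidence: float


# Hypothetical stand-ins: a real system would wrap a camera capture, a
# MobileNet-based detector, and a text-to-speech engine here.
Detector = Callable[[object], List[Detection]]
Speaker = Callable[[str], None]


class AnnouncementPipeline:
    """Camera frame -> CNN detections -> spoken announcement.

    Drops low-confidence detections and avoids repeating the same label
    within `cooldown_s` seconds, so the user is not flooded with audio.
    """

    def __init__(self, detect: Detector, speak: Speaker,
                 min_confidence: float = 0.5, cooldown_s: float = 3.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.detect = detect
        self.speak = speak
        self.min_confidence = min_confidence
        self.cooldown_s = cooldown_s
        self.clock = clock
        self._last_spoken: Dict[str, float] = {}

    def process(self, frame: object) -> List[str]:
        """Run detection on one frame and return the messages that were spoken."""
        now = self.clock()
        spoken: List[str] = []
        for det in self.detect(frame):
            if det.confidence < self.min_confidence:
                continue
            last = self._last_spoken.get(det.label)
            if last is not None and now - last < self.cooldown_s:
                continue
            message = f"{det.label} ahead"
            self.speak(message)
            self._last_spoken[det.label] = now
            spoken.append(message)
        return spoken


if __name__ == "__main__":
    # Each fake "frame" is already a list of detections, so detect is the identity.
    fake_frames: Iterable[List[Detection]] = [
        [Detection("chair", 0.9), Detection("cup", 0.3)],
        [Detection("chair", 0.88)],  # repeated within the cooldown: not spoken again
    ]
    ticks = iter([0.0, 1.0])
    pipeline = AnnouncementPipeline(detect=lambda frame: frame, speak=print,
                                    clock=lambda: next(ticks))
    for frame in fake_frames:
        pipeline.process(frame)
```

Injecting the clock keeps the cooldown logic testable; in a deployment, `detect` might wrap a MobileNet-based detector and `speak` a TTS engine.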

IV. EVALUATION AND ANALYSIS

Performance evaluation of these systems is typically based on accuracy, processing time, and ease of use. For example, Ashiq et al. [1] reported 83.3% object detection accuracy for their proposed system. Evaluations also consider user feedback and real-world testing in a range of indoor and outdoor environments. Compared with earlier systems, newer models built on more advanced CNN techniques have shown improvements in both accuracy and processing speed.

Integrating the Dialogflow API: The Dialogflow API is integrated to recognize the voice commands of visually impaired users and forms the basis of this project. Dialogflow is a Google-owned natural language understanding platform for building conversational human-computer interfaces.

Object Detection: A spy camera connected to an Oculus detects objects and tells the user by voice that an object lies in their path. The user can then either wait or change direction, which helps them walk on the roads safely.
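The accuracy and processing-time measurements described above can be sketched as two small helpers. The review does not say how accuracy is defined in [1]; this sketch assumes a simple per-sample label accuracy rather than an IoU-based detection metric, and times a caller-supplied `process` function (a hypothetical stand-in for the detector) per frame.

```python
import statistics
import time
from typing import Callable, List, Sequence, Tuple


def accuracy(predicted: Sequence[str], expected: Sequence[str]) -> float:
    """Fraction of test samples whose predicted label matches the ground truth."""
    if not expected or len(predicted) != len(expected):
        raise ValueError("need equally sized, non-empty label lists")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


def time_per_frame(process: Callable[[object], object],
                   frames: Sequence[object]) -> Tuple[float, float]:
    """Run `process` on each frame; return (median latency in ms, frames per second)."""
    if not frames:
        raise ValueError("need at least one frame")
    latencies_ms: List[float] = []
    for frame in frames:
        start = time.perf_counter()
        process(frame)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    total_s = sum(latencies_ms) / 1000.0
    fps = len(frames) / total_s if total_s > 0 else float("inf")
    return statistics.median(latencies_ms), fps


if __name__ == "__main__":
    # Hypothetical labels and a dummy workload standing in for a detector.
    predicted = ["chair", "door", "cup", "person"]
    expected = ["chair", "door", "bottle", "person"]
    print(f"accuracy: {accuracy(predicted, expected):.1%}")
    median_ms, fps = time_per_frame(lambda frame: sorted(range(50_000)), list(range(20)))
    print(f"median latency {median_ms:.2f} ms, {fps:.0f} FPS")
```

Reporting the median latency alongside throughput makes occasional slow frames visible, which matters more for navigation safety than the average alone.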

V. CHALLENGES AND FUTURE DIRECTIONS

Despite the significant advancements in assistive technologies, several challenges remain to be addressed for these devices to become more effective and accessible.

Table II: Performance Comparison of Assistive Devices

| Device Type | Performance Score | Key Features |
|---|---|---|
| Optical Sensor-Based Aids | 62% | Speed and lightweight design, but limited functionality |
| AI-Based Aids | 44% | Comprehensive scene understanding, but resource-intensive |
| Multi-sensor Fusion Aids | 51% | Robust functionality with essential assistive features |
| Hybrid-Based Aids | 30% | Smartphone-based with a wearable camera; provides audio feedback for both navigation and detection |
| Arduino-Based Aids | 24% | Uses ultrasonic and RGB sensors to assist with navigation and object recognition tasks |

- Complexity and Cost: Many state-of-the-art devices require expensive hardware components and sophisticated algorithms, which can limit their availability and affordability [10].

- Real-Time Performance: Devices must process data and deliver feedback in real time to keep navigation safe. Improving computational efficiency to shorten response times remains a key development area [11].

- User Acceptance: The success of assistive technologies depends largely on their acceptance by VIPs. Engaging end-users throughout the development process is essential to address their specific needs, preferences, and usability concerns [12].

- Integration with AI and Machine Learning: AI-driven personalization could enhance these technologies by allowing devices to learn and adapt to a user's specific navigation patterns and behaviors over time [13].

- Limited Functionality: Many existing assistive devices have constrained capabilities, often excelling in one area (such as object detection) while lacking in others (such as accurate distance measurement or navigation) [14].

- Cost and Accessibility: Advanced assistive devices often carry high costs because of their sophisticated hardware and software requirements, limiting their availability to the users who need them [15].
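One common way to keep response times bounded when the detector is slower than the camera is to process only the most recent frame and drop stale ones, so feedback describes the current scene rather than a backlog of old frames. The sketch below assumes a camera thread calling `put` and a detector thread calling `get`; the class name `LatestFrameBuffer` is hypothetical and not taken from any of the reviewed systems.

```python
import threading
from typing import Generic, Optional, TypeVar

T = TypeVar("T")


class LatestFrameBuffer(Generic[T]):
    """Single-slot buffer between a camera thread and a detector thread.

    `put` overwrites any frame the detector has not consumed yet, so the
    detector always works on the newest frame and latency cannot build up
    in a queue. Dropped frames are counted for monitoring.
    """

    def __init__(self) -> None:
        self._frame: Optional[T] = None
        self._dropped = 0
        self._cond = threading.Condition()

    @property
    def dropped(self) -> int:
        with self._cond:
            return self._dropped

    def put(self, frame: T) -> None:
        with self._cond:
            if self._frame is not None:
                self._dropped += 1  # the previous frame was never processed
            self._frame = frame
            self._cond.notify()

    def get(self, timeout: Optional[float] = None) -> Optional[T]:
        """Return the newest frame, or None if none arrives within `timeout` seconds."""
        with self._cond:
            self._cond.wait_for(lambda: self._frame is not None, timeout)
            frame, self._frame = self._frame, None
            return frame


if __name__ == "__main__":
    buf: LatestFrameBuffer[int] = LatestFrameBuffer()
    for frame_id in range(5):  # the camera produced 5 frames while the detector was busy
        buf.put(frame_id)
    print(buf.get(), buf.dropped)  # prints "4 4": only frame 4 is processed, 4 were dropped
```

The trade-off is that some frames are never analyzed; for obstacle warnings this is usually preferable to announcing objects that are no longer where the user is.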

Conclusion

The review of CNN-based object recognition and tracking systems for visually impaired people underscores the significant advances in assistive technologies designed to enhance mobility and independence. While many systems, including wearable devices and navigation aids, offer promising functionality, no device fully meets all the needs of visually impaired individuals. Challenges such as high costs, complexity, and user acceptance remain barriers to widespread adoption. Engaging users in the development process is crucial to creating solutions that cater to their specific requirements. Furthermore, integrating artificial intelligence could lead to personalized devices that adapt to users over time, improving their overall experience. Continued research and collaboration among developers, researchers, and the visually impaired community are essential for refining these technologies. Ultimately, the future of assistive technology holds great potential, aiming to empower visually impaired individuals with the tools they need to navigate their environments confidently and independently.

References

[1] F. Ashiq et al., “CNN-Based Object Recognition and Tracking System to Assist Visually Impaired People,” IEEE Access, vol. 10, pp. 14819-14834, 2022.

[2] World Health Organization, “Blindness and Visual Impairment,” 2018. [Online]. Available: https://www.who.int/en/news-room/fact-sheets/detail/blindness-and-visual-impairment

[3] W. Elmannai and K. Elleithy, “Sensor-based assistive devices for visually-impaired people: Current status, challenges, and future directions,” Sensors, vol. 17, no. 3, p. 565, 2017.

[4] J. Madake, S. Bhatlawande, and A. Solanke, “Qualitative and Quantitative Analysis of Research in Mobility Technologies for Visually Impaired People,” IEEE Access, 2023.

[5] X. Leong and R. K. Ramasamy, “Obstacle Detection and Distance Estimation for Visually Impaired People,” IEEE Access, 2023.

[6] T. Schwarze et al., “A camera-based mobility aid for visually impaired people,” KI Künstliche Intelligenz, vol. 30, no. 1, pp. 29-36, 2016.

[7] R. Jiang, Q. Lin, and S. Qu, “Let blind people see: Real-time visual recognition with results converted to 3D audio,” Tech. Rep., 2016.

[8] B. Kuriakose, R. Shrestha, and F. E. Sandnes, “Tools and technologies for blind and visually impaired navigation support: A review,” IETE Tech. Rev., vol. 39, no. 1, pp. 3-18, 2022.

[9] P. Chanana et al., “Assistive technology solutions for aiding travel of pedestrians with visual impairment,” J. Rehabil. Assist. Technol. Eng., vol. 4, 2017.

[10] X. Zhang et al., “Trans4Trans: Efficient transformer for transparent object and semantic scene segmentation in real-world navigation assistance,” IEEE Trans. Intell. Transp. Syst., vol. 23, no. 10, pp. 19173-19186, 2022.

[11] R. Minhas and A. Javed, “X-Eye: A bio-smart secure navigation framework for visually impaired people,” in Proc. Int. Conf. Signal Process. Inf. Secur., 2018.

[12] R. K. Megalingam, S. Vishnu, and S. Sreekumar, “Autonomous path guiding robot for visually impaired people,” Cognitive Informatics and Soft Computing, 2019.

[13] A. Berger et al., “Google Glass used as assistive technology for blind and visually impaired people,” Sensors, vol. 17, no. 3, p. 565, 2017.

[14] W.-J. Chang et al., “An AI edge computing-based assistive system for visually impaired pedestrian safety at zebra crossings,” IEEE Trans. Consum. Electron., 2021.

[15] S. Zafar, M. Asif, and M. B. Ahmad, “Discusses the challenges of cost and accessibility in assistive devices for visually impaired people,” IEEE Trans., 2024.

Copyright

Copyright © 2024 Akshay Dhumal, Divya Gharate, Samruddhi Patil, Atharva Shinde, Prof. S. G. Chordiya. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET64986

Publish Date : 2024-11-04

ISSN : 2321-9653

Publisher Name : IJRASET
