Ijraset Journal For Research in Applied Science and Engineering Technology

The Communicative Assistance System for Deaf and Dumb

Authors: Preethi M S, Shalini K V, Sharmila U, Sinchana M, Mr. Pradeep K

DOI Link: https://doi.org/10.22214/ijraset.2024.61201

Abstract

Communication is essential for people to express their needs and interact with others. The communication gap between those who sign and those who don't can be bridged by software that acts as a real-time translator, deciphering sign language and making it accessible to everyone. This project creates a smart system that understands sign language gestures and turns them into text, and that also creates videos of sign language gestures for a given sentence in real time. It acts as a bridge between sign language users and others, making communication easy and natural. The system learns different signs and expressions so that it works well across cultures. With a user-friendly design, it helps people with hearing impairments communicate effortlessly.

I. INTRODUCTION

In a large country like India, 63 million people have speaking and hearing disabilities, according to the WHO. They converse with each other through sign language but find it difficult to converse with people who do not know it. There are two reasons for this: India lacks a well-established sign language system, and few teachers are available to teach sign language. Most of the sign language used in the Indian media is based on British Sign Language, whereas schools and other educational institutes use American Sign Language. This creates a language barrier between users of American Sign Language, British Sign Language, and other sign languages.

While sign language serves as a vital communication method for deaf and hard-of-hearing communities, it can create a barrier for those who do not understand it. To bridge this gap, sign language translation technology is emerging. This technology acts as a real-time interpreter, analyzing sign language gestures and converting them into spoken words or text, fostering inclusive communication for everyone.

Interpreting takes two main forms: simultaneous interpreting, which is done at the time of exposure to the source language, and consecutive interpreting, which is done at breaks in this exposure. The International Organization for Standardization (ISO) defines language interpretation as rendering a spoken or signed message into another spoken or signed language, preserving the register and meaning of the source language content. To do so, we make use of Indian Sign Language (ISL), a language that uses hand signs, facial expressions, and body postures to communicate ideas.

II. LITERATURE SURVEY

Sign language, a vital means of communication for the deaf and hard-of-hearing communities, has garnered significant attention in the realm of technological advancements, particularly in the domain of sign language recognition (SLR). Recognizing the intricate gestures and movements inherent to various sign languages presents a multifaceted challenge that intertwines linguistics, computer vision, and machine learning. As technology continues to evolve, the quest to develop robust, efficient, and inclusive SLR systems has intensified, catalysing a plethora of research endeavors across the globe [1].

The essence of sign language recognition transcends mere gesture identification; it encapsulates the broader vision of fostering communication inclusivity, bridging linguistic divides, and empowering individuals with hearing impairments. Over the years, researchers, academicians, and technologists have delved deep into deciphering the complexities of sign languages, striving to devise innovative methodologies, algorithms, and frameworks capable of interpreting, translating, and transcribing sign gestures into comprehensible formats [2].

This literature survey embarks on a comprehensive exploration of the evolving landscape of sign language recognition, delineating seminal works, groundbreaking methodologies, technological innovations, and emerging trends. Through a meticulous examination of research papers, scholarly articles, technological advancements, and case studies, this survey aims to elucidate the foundational pillars, challenges, advancements, and future prospects shaping the trajectory of sign language recognition.

By navigating the intricate tapestry of sign language recognition, this survey endeavors to provide readers, researchers, practitioners, and stakeholders with a holistic perspective, fostering knowledge dissemination, innovation, and collaboration in this pivotal domain. As we traverse this enlightening journey, we shall unravel the myriad facets of sign language recognition, celebrating its transformative potential, and envisioning a future replete with inclusive communication paradigms [3].

III. METHODOLOGY

- Neural Networks: Machine learning, especially deep learning, relies on artificial neurons to perform learning tasks. These neurons form artificial neural networks, inspired by the human nervous system, in which neurons are organized into layers. Each neuron receives input signals through connections (the counterpart of dendrites in biological neurons), processes them using a mathematical function (the activation function), and produces an output. The connections between neurons have weights that determine the strength of each signal, and learning occurs by adjusting these weights.

- Convolutional Neural Networks (CNN): CNNs are mainly used to classify images, cluster them by similarity, and perform object detection. A CNN treats an image as a tensor, that is, a matrix of numbers with extra dimensions, and scans it with small filters, a kind of search for local patterns. In this way images are processed as volumes rather than flat vectors. CNNs are applied in numerous areas, including face recognition and object identification. A CNN is built from three distinctive layer types: convolutional layers, subsampling layers, and fully connected layers. CNNs are mainly used for image recognition because they offer more advantages than other strategies.

- Bi-Directional Long Short-Term Memory (Bi-LSTM): A Bi-LSTM is a type of recurrent neural network (RNN) architecture used in machine learning and natural language processing tasks. LSTM networks are a specialized type of RNN designed to capture long-term dependencies in sequential data: each LSTM has a memory cell that can maintain information over long sequences, which addresses the vanishing gradient problem often encountered in traditional RNNs. A Bi-LSTM comprises two LSTM layers, one processing the input sequence in the forward direction and the other in the backward direction, so the network can use context from both the past and the future of a sequence. Bi-LSTMs have found success in tasks where such two-sided context is crucial.
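The three CNN layer types named in the list above (convolutional, subsampling, and fully connected) can be illustrated with a minimal pure-Python sketch. The toy image, the edge-detecting kernel, and the dense-layer weights are hand-picked assumptions for illustration only; they are not taken from the project's model, and a trained CNN would learn such values from data.

```python
def conv2d(image, kernel):
    """Convolutional layer: slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Activation function: keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Subsampling layer: keep the maximum of each non-overlapping size x size block."""
    rows = range(0, len(feature_map) - size + 1, size)
    cols = range(0, len(feature_map[0]) - size + 1, size)
    return [
        [
            max(feature_map[r + i][c + j]
                for i in range(size) for j in range(size))
            for c in cols
        ]
        for r in rows
    ]


def dense(inputs, weights, bias):
    """Fully connected neuron: weighted sum of all inputs plus a bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Toy 5x5 grayscale "image" with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked kernel that responds to dark-to-bright vertical edges (assumption).
kernel = [[-1, 1],
          [-1, 1]]

features = relu(conv2d(image, kernel))      # 4x4 feature map
pooled = max_pool(features)                 # 2x2 after subsampling
flat = [v for row in pooled for v in row]   # flatten for the dense layer
score = dense(flat, weights=[0.5, 0.5, 0.5, 0.5], bias=0.0)

print(pooled)
print(score)
```

In a real classifier there would be many kernels per layer, several stacked layers, and one dense output per gesture class; this sketch only shows how data flows through one layer of each type.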

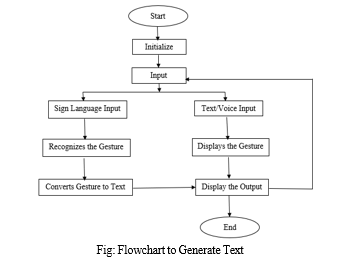

1. Initialize: The system is initialized, which likely means it’s prepared to receive and process input.

2. Input: The system accepts input in the form of either sign language or text/voice. If the input is sign language, the system moves on to the gesture recognition stage. If the input is text/voice, it likely means the system is designed to convert text or spoken words into sign language. How this would be displayed visually isn’t shown in this flowchart.

3. Recognize the Gesture: If the input is sign language, the system attempts to recognize the specific handshapes, movements, and facial expressions used to convey meaning.

4. Convert Gesture to Text: Once the gesture is recognized, the system converts it into written text.

5. Display the Output: The converted text is then displayed on a screen or another output device.

6. End: The process ends.

It’s important to note that this is a simplified flowchart, and real-world sign language recognition systems are much more complex. They typically use machine learning algorithms to identify and interpret signs.
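The control flow of the flowchart steps can be sketched as a small dispatch function. This is only an illustration of the branching described above: the label-to-text table, the `fake_recognizer` placeholder, and the function names are hypothetical and do not come from the paper, where recognition is performed by a trained model.

```python
from typing import Callable, Dict, List

# Hypothetical lookup from recognized gesture labels to display text.
GESTURE_TEXT: Dict[str, str] = {
    "thumbs_up": "Yes",
    "stop": "Stop",
    "peace": "Peace",
}


def gestures_to_text(labels: List[str]) -> str:
    """Convert Gesture to Text: map each known label to a word, skipping unknown ones."""
    return " ".join(GESTURE_TEXT[label] for label in labels if label in GESTURE_TEXT)


def run_once(kind: str, payload, recognize: Callable[[object], List[str]]) -> str:
    """One pass through the flowchart: Input, Recognize the Gesture, Convert Gesture to Text."""
    if kind == "sign":
        labels = recognize(payload)        # Recognize the Gesture
        return gestures_to_text(labels)    # Convert Gesture to Text
    if kind in ("text", "voice"):
        # The flowchart does not show how this branch is displayed,
        # so the sketch only acknowledges the input.
        return f"[text-to-sign branch not shown in flowchart] {payload}"
    raise ValueError(f"unsupported input kind: {kind!r}")


def fake_recognizer(frames) -> List[str]:
    """Placeholder recognizer: pretends each frame already carries its gesture label."""
    return list(frames)


# Display the Output
output = run_once("sign", ["thumbs_up", "unknown", "stop"], fake_recognizer)
print(output)
```

Passing the recognizer in as a parameter keeps the flow independent of any particular model, so the placeholder can later be swapped for a real classifier without changing the dispatch logic.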

IV. SYSTEM DESIGN

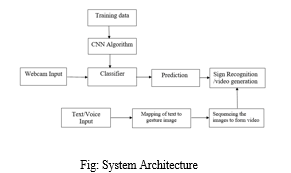

- Training Data: The system is trained on a dataset of text and corresponding sign language gestures. This dataset is likely used to train a machine learning model that can later recognize gestures from user input.

- CNN Algorithm: A CNN (Convolutional Neural Network) algorithm is used on the webcam input. CNNs are a type of artificial neural network particularly well-suited for image and video recognition tasks.

- Webcam Input: The system captures video from a webcam which is then fed into the CNN algorithm.

- Classifier: The CNN algorithm classifies the webcam input, which likely means it’s trying to identify the specific signs the user is making.

- Prediction - Sign Recognition: Based on the classification, the system predicts the sign language gesture the user is making.

- Text/Voice Input: The user can also provide input in the form of text or voice.

- Mapping Text to Gesture Image: If the user provides text or voice input, the system finds a corresponding image of a sign language gesture in its database.

- Sequencing the Images to Form a Video: Once the system has a sign language image (either from user input or by recognizing webcam input), it sequences the images together to form a video.

- Video Generation: The final step is video generation, where the sequenced images are compiled into a video.

Overall, this flowchart depicts a system that can take user input in the form of sign language or text/voice and generate a video that shows a person making the corresponding signs.
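The text-to-sign branch of this flowchart (mapping text to gesture images, then sequencing them into video frames) can be sketched as follows. The image database, the file names, and the fallback of spelling out unknown words letter by letter are assumptions made for illustration; the paper does not describe how its database is organized or how unknown words are handled.

```python
from typing import Dict, List

# Hypothetical gesture database: known word -> image file of the sign.
SIGN_IMAGES: Dict[str, str] = {
    "hello": "signs/hello.png",
    "thank": "signs/thank.png",
    "you": "signs/you.png",
}
# Hypothetical naming scheme for single-letter (fingerspelling) images.
LETTER_IMAGE = "letters/{}.png"


def text_to_images(sentence: str) -> List[str]:
    """Mapping Text to Gesture Image: one image per known word, else one per letter."""
    images: List[str] = []
    for raw_word in sentence.lower().split():
        word = "".join(ch for ch in raw_word if ch.isalnum())  # drop punctuation
        if not word:
            continue
        if word in SIGN_IMAGES:
            images.append(SIGN_IMAGES[word])
        else:
            # Assumed fallback: spell the word out letter by letter.
            images.extend(LETTER_IMAGE.format(ch) for ch in word)
    return images


def sequence_frames(images: List[str], fps: int = 10,
                    seconds_per_sign: float = 0.5) -> List[str]:
    """Sequencing the Images: repeat each image so it stays on screen for a fixed time."""
    repeats = max(1, round(fps * seconds_per_sign))
    return [image for image in images for _ in range(repeats)]


images = text_to_images("Hello, Sam!")
frames = sequence_frames(images, fps=4, seconds_per_sign=0.5)
print(images)
print(len(frames))
```

The resulting frame list could then be written out with a video writer such as OpenCV's `cv2.VideoWriter`; that step is left out here so the sketch stays free of external dependencies.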

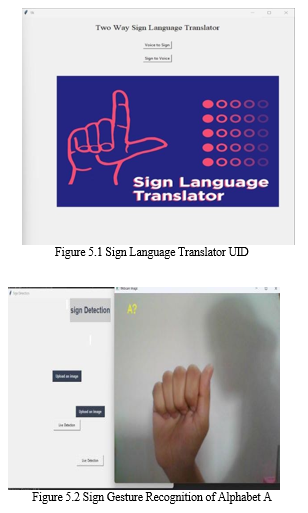

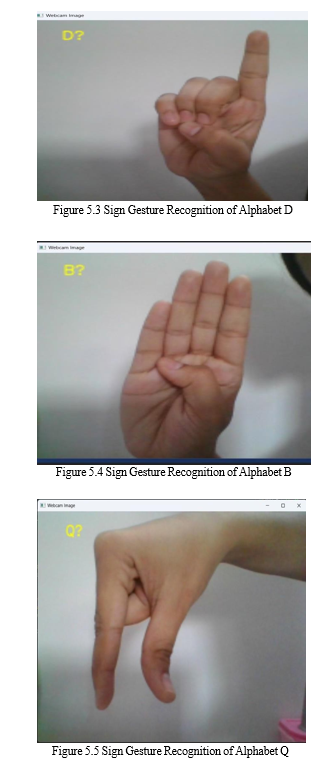

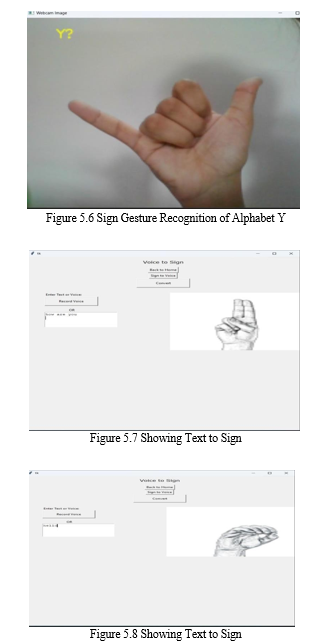

V. SYSTEM IMPLEMENTATION AND RESULTS

The implementation is a real-time hand gesture recognition system built on the MediaPipe Hands module and a pre-trained TensorFlow model. The script captures video from the default camera and detects hand landmarks in each frame. These landmarks are passed to the pre-trained gesture recognition model, which produces a predicted gesture class; the class name is read from a file named 'gesture.names'. OpenCV handles capturing and manipulating the video frames. A while loop displays each processed frame with the predicted gesture class overlaid and repeats until the user presses the 'q' key.
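The script itself is not reproduced in the text, so the following is a hedged sketch of how such a loop is commonly written with OpenCV, MediaPipe Hands, and a Keras model, not the authors' code. The model path `mp_hand_gesture`, the confidence threshold, the choice of a single hand, and the assumption that the model takes 21 (x, y) pixel coordinates as input are all placeholders that the paper does not specify. The heavy imports are deferred into `run_camera_loop` so the small helper functions can be used without a camera or these packages installed.

```python
from typing import List, Sequence, Tuple


def load_class_names(path: str = "gesture.names") -> List[str]:
    """Read one gesture class name per line from the names file."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def landmarks_to_pixels(landmarks: Sequence[Tuple[float, float]],
                        width: int, height: int) -> List[List[int]]:
    """Scale normalized (x, y) landmarks in [0, 1] to integer pixel coordinates."""
    return [[int(x * width), int(y * height)] for x, y in landmarks]


def pick_class(scores: Sequence[float], class_names: Sequence[str]) -> str:
    """Return the class name with the highest model score (argmax)."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return class_names[best]


def run_camera_loop(model_path: str = "mp_hand_gesture",
                    names_path: str = "gesture.names") -> None:
    """Capture frames, detect a hand, classify the gesture, overlay the label until 'q'."""
    import cv2
    import mediapipe as mp
    import numpy as np
    from tensorflow.keras.models import load_model

    class_names = load_class_names(names_path)
    model = load_model(model_path)  # placeholder path, not named in the paper
    mp_hands = mp.solutions.hands
    hands = mp_hands.Hands(max_num_hands=1, min_detection_confidence=0.7)
    cap = cv2.VideoCapture(0)  # default camera
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            frame = cv2.flip(frame, 1)  # mirror view for the user
            height, width, _ = frame.shape
            result = hands.process(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            label = ""
            if result.multi_hand_landmarks:
                hand = result.multi_hand_landmarks[0]
                points = landmarks_to_pixels(
                    [(lm.x, lm.y) for lm in hand.landmark], width, height)
                mp.solutions.drawing_utils.draw_landmarks(
                    frame, hand, mp_hands.HAND_CONNECTIONS)
                # Assumed input shape: a batch of one hand with 21 (x, y) points.
                scores = model.predict(np.array([points]), verbose=0)[0]
                label = pick_class(scores, class_names)
            cv2.putText(frame, label, (10, 50), cv2.FONT_HERSHEY_SIMPLEX,
                        1, (0, 0, 255), 2)
            cv2.imshow("Gesture", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        hands.close()
        cap.release()
        cv2.destroyAllWindows()
```

Calling `run_camera_loop()` on a machine with a webcam, the three libraries installed, and a compatible model and names file would start the display loop; pressing 'q' in the video window ends it.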

Conclusion

This innovative project holds the promise of significantly improving the quality of life for the deaf and mute community by providing a user-friendly and inclusive means of communication. By leveraging hand gesture recognition technology, individuals facing communication challenges can seamlessly integrate into society and engage in daily activities. The project's role as a smart assistant for people with disabilities highlights its potential impact beyond the specific needs of the deaf and mute community. Its language-independent nature ensures accessibility for users worldwide, transcending linguistic barriers. Through its commitment to fostering inclusivity, this technology not only empowers individuals with diverse abilities but also contributes to the advancement of assistive technologies that have broader applications in promoting accessibility and equal participation in society.

References

[1] R Rumana, Reddygari Sandhya Rani, Mrs. R. Prema, 2021, A Review Paper on Sign Language Recognition for The Deaf and Dumb

[2] Ms Kamal Preet Kour, Dr. (Mrs) Lini Mathew, 2017, Literature survey on hand gesture techniques for sign language recognition

[3] Kohsheen Tiku, Jayshree Maloo, Aishwarya Ramesh, Indra R, 2020, Real-time Conversion of Sign Language to Text and Speech

[4] Victoria A. Adewale, Dr. Adejoke O. Olamiti, 2018, Conversion of sign language to text and speech using machine learning techniques

[5] Advaith Sridhar, Rohith Gandhi Ganesan, Pratyush Kumar, Mitesh Khapra, 2020, Include: A large scale Dataset for Indian sign language recognition

[6] Kartik Shenoy, Tejas Dastane, Varun Rao, Devendra Vyavaharkar, 2018, Realtime Indian Sign Language (ISL) Recognition

[7] Karmanya Verma, Mansi Bansode, Navaditya Kaushal, Mrs. Shubhangi Vairagar, 2022, A Literature Survey on Real-Time Indian Sign Language Recognition System

[8] Sukanya Dessai, Siddhi Naik, 2022, Literature Review on Indian Sign Language Recognition System

[9] Sujay R, Somashekar M, Aruna Rao B P, 2022, Literature Survey: Sign Language Translator

[10] Utkarsh Jagtap, Vinayak Nangnurkar, Suhas Chalwadi, Neelam Jadhav, 2023, Indian Sign Language Recognitio.

Copyright

Copyright © 2024 Preethi M S, Shalini K V, Sharmila U, Sinchana M, Mr. Pradeep K. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61201

Publish Date : 2024-04-28

ISSN : 2321-9653

Publisher Name : IJRASET
