Ijraset Journal For Research in Applied Science and Engineering Technology


Comparative Analysis of Firefly and Modified Firefly Algorithms in Multimodal Biometric Authentication Systems

Authors: Oyelakun T. A., Agbaje M.O., Ayankoya F.Y, Ogu E. C

DOI Link: https://doi.org/10.22214/ijraset.2023.54260


Abstract

This research addresses two challenges in multimodal biometric systems: high-dimensional feature spaces and the selection of significant features. Feature-level fusion raises persistent issues that warrant extensive investigation, given its potential to improve biometric recognition accuracy. This study proposes integrating meta-heuristic optimization techniques into the feature selection phase of a multimodal biometric system, before the classification phase, to identify the most relevant features from two distinct biological traits. To determine whether an individual is authorized, the fused feature vectors are fed into a Support Vector Machine (SVM) classifier. The study uses the physiological biometrics of the face and iris to validate the findings of previous research, which highlight the high accuracy of iris recognition and the natural acceptability of face recognition for identity verification.


I. INTRODUCTION

A biometric system recognizes people based on feature vectors obtained from their biological characteristics, and it has emerged as the most promising recognition approach in recent years [1]. Driven by advances in imaging and computation, and by needs such as identifying criminals, reducing identity theft and forgery, and securing electronic transactions, biometric applications have attracted considerable interest in recent years [2]. Healthcare, education, time and attendance, e-commerce, forensics, and banking and finance are just a few of the application sectors for biometrics [3]. A unimodal biometric system uses only one trait, such as a fingerprint, nose, gait, voice, face, iris or ear, to perform recognition [4]. In real-world scenarios, the majority of biometric technologies are unimodal [6]. However, [5] observed that relying on a single trait exposes existing systems to a variety of problems, such as a scarred fingerprint image or poor illumination of the subject in face recognition. Because a single biometric source may become unreliable owing to factors such as sensor or software failure, noisy data and non-universality, the limitations of unimodal systems have prompted researchers to focus on multimodal biometric systems [7]. Likewise, [8] found that most problems of unimodal biometric systems can be overcome by multimodal approaches. According to [9], combining two or more biometric systems is a promising way to provide more security, since it is difficult to falsify several biometric traits at the same time [10].

A multimodal biometric system recognizes people using data from multiple biometric sources [11]; it is created by combining two or more biometric traits into a single recognition system. Information fusion is a crucial step in multimodal biometric systems [12], and the fusion of different modalities can take place at different levels [13]. Biometric features can be fused at several phases of a recognition system [14]: either before matching, where biometric data are integrated before templates are matched (sensor level and feature level), or after matching, where data are integrated after the matcher/classification step (score level, match level, rank level and decision level) [15]. Fusion at the feature level, however, is a hard task in practice [9] and difficult to accomplish [16]. Duplicated feature sets across modalities, incompatibility between the modalities, the high complexity of the extracted feature vectors, the redundant and irrelevant features that concatenation-based fusion introduces into high-dimensional data, and the presence of noisy data all pose significant challenges. Hence, an optimization technique is required as a feature selection method [17] to select an optimal subset of the extracted features at feature-level fusion, thereby reducing the dimensionality of the feature space, minimizing redundant and irrelevant features and enhancing classification performance.
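Concatenation-based fusion can be sketched in a few lines, which also makes the dimensionality problem concrete: the fused vector is as long as both inputs combined. The sketch below is a minimal illustration, assuming each modality has already been reduced to a fixed-length vector per sample; the z-score normalization step, the function names and the 40- and 60-dimensional inputs are illustrative assumptions, not details taken from the studies cited here.

```python
import numpy as np


def zscore(features: np.ndarray) -> np.ndarray:
    """Standardize each feature column to zero mean and unit variance."""
    mean = features.mean(axis=0)
    std = features.std(axis=0)
    std[std == 0] = 1.0  # leave constant features unscaled instead of dividing by 0
    return (features - mean) / std


def fuse_serial(face: np.ndarray, iris: np.ndarray) -> np.ndarray:
    """Feature-level fusion by serial concatenation of normalized vectors.

    face: (n_samples, d_face); iris: (n_samples, d_iris).
    Row i of both matrices must come from the same person/sample.
    """
    if face.shape[0] != iris.shape[0]:
        raise ValueError("face and iris must have the same number of rows")
    return np.hstack([zscore(face), zscore(iris)])


rng = np.random.default_rng(0)
face_feats = rng.normal(size=(6, 40))   # hypothetical 40-D face vectors
iris_feats = rng.normal(size=(6, 60))   # hypothetical 60-D iris vectors
fused = fuse_serial(face_feats, iris_feats)
print(fused.shape)  # (6, 100)
```

Normalizing each modality first keeps one trait from dominating the concatenated vector simply because its values lie on a larger scale.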

To address these issues, particularly high redundancy and irrelevant features, various meta-heuristic optimization techniques, such as the Genetic Algorithm (GA), Ant Colony Optimization (ACO), Simulated Annealing (SA), Particle Swarm Optimization (PSO) and the Firefly Algorithm (FFA), have been used in the literature as feature selection techniques to choose the best subset of the original features. However, these techniques still have significant limitations. FFA, for example, has been widely used for dimensionality reduction because of its effectiveness, simplicity and ease of implementation, but it suffers from premature convergence, an imbalance between exploitation and exploration, and a significant risk of becoming stuck in a local optimum, especially in high-dimensional optimization problems such as feature-level fusion [18]. Therefore, to further improve the performance of multimodal biometric authentication, this research introduced a meta-heuristic optimization approach that uses a modified firefly algorithm (MFFA) for feature-level fusion. The MFFA serves as an efficient feature selection algorithm that selects optimal features, reduces redundant features in the feature space and speeds up convergence for better classification, and a Support Vector Machine (SVM) is employed as the classifier.

II. LITERATURE REVIEW

Feature-level fusion was adopted in [19] to fuse iris and ear feature vectors extracted with Principal Component Analysis (PCA), which also reduced their dimensionality. In [9], fingerprint and iris were fused at the feature level using a hierarchical data fusion model that combined intra- and inter-modal information. The proposed data fusion approach makes better use of the gathered characteristics through an appropriate combination of intra- and inter-modal fusion rules. Despite the practical challenges of implementing feature-level fusion, it shows promise for improving the precision of human identification.

A study [7] provided a multimodal biometric identification method that validates a person's identity based on face and iris traits. It used a novel fusion method that combined canonical correlation analysis with the proposed serial concatenation to perform feature-level fusion, and a deep belief network for recognition. Compared with other existing systems, the fusion time was reduced by 34.5 percent, and the proposed system also achieved a lower equal error rate (EER) and 99 percent recognition accuracy. In another study, [20] proposed a multimodal biometric technique based on the profile face and ear that not only addresses the problems of ear biometrics but also improves the overall recognition rate. Fusion was performed using PCA at the feature level. The combined feature set was then processed with the kernel discriminative common vector (KDCV) method to produce more discriminative, non-linear features for identification with a KNN classifier. Experimental results on two benchmark databases demonstrated that the proposed method outperformed the individual modalities and other state-of-the-art techniques.
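Canonical correlation analysis (CCA), as used for fusion in [7], finds paired projections of two feature sets that are maximally correlated. The sketch below is a minimal NumPy version of standard regularized CCA followed by serial concatenation of the projected features; it is not the exact formulation of [7], which is not given here, and the ridge term `reg`, the function names and the synthetic data are assumptions for illustration.

```python
import numpy as np


def inv_sqrt(mat: np.ndarray) -> np.ndarray:
    """Inverse square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(mat)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T


def cca_fuse(x: np.ndarray, y: np.ndarray, d: int, reg: float = 1e-3):
    """Project two paired feature sets onto their top-d canonical directions
    and fuse the projections by serial concatenation.

    x: (n, p) face features, y: (n, q) iris features; d <= min(p, q).
    Returns the fused (n, 2d) matrix and the d canonical correlations.
    """
    xc = x - x.mean(axis=0)
    yc = y - y.mean(axis=0)
    n = x.shape[0]
    sxx = xc.T @ xc / (n - 1) + reg * np.eye(x.shape[1])  # ridge keeps sxx invertible
    syy = yc.T @ yc / (n - 1) + reg * np.eye(y.shape[1])
    sxy = xc.T @ yc / (n - 1)
    kx, ky = inv_sqrt(sxx), inv_sqrt(syy)
    # Singular values of the whitened cross-covariance are the canonical correlations.
    u, s, vt = np.linalg.svd(kx @ sxy @ ky)
    wx = kx @ u[:, :d]
    wy = ky @ vt.T[:, :d]
    fused = np.hstack([xc @ wx, yc @ wy])
    return fused, s[:d]


rng = np.random.default_rng(1)
shared = rng.normal(size=(200, 2))  # hypothetical latent identity signal
face = shared @ rng.normal(size=(2, 10)) + 0.1 * rng.normal(size=(200, 10))
iris = shared @ rng.normal(size=(2, 8)) + 0.1 * rng.normal(size=(200, 8))
fused, corr = cca_fuse(face, iris, d=2)
print(fused.shape)  # (200, 4)
print(np.round(corr, 3))
```

Because both synthetic modalities share the same two-dimensional latent signal, the two canonical correlations come out close to 1, and the fused matrix has only 2d columns instead of p + q.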

A feature-level fusion and binarization framework for multimodal template protection was developed in [22]; it uses deep hashing to create a single secure template from each user's multiple biometrics. Adopting the proposed secure multimodal system improved both matching efficiency and template security. In [21], a secure authentication system was built using a multimodal, multi-algorithmic biometric system that fused ear, iris and face modalities at the feature level. PCA was employed for feature extraction and SVM for classification, and the combined iris, ear and face features were used for person verification. Verification performance and recognition accuracy improved considerably compared with unimodal and previous systems.

A multimodal biometric system architecture based on canonical correlation analysis (CCA) and a Support Vector Machine (SVM) classifier for feature-level fusion was introduced in [23]. The merged features of the iris and fingerprint modalities were trained and classified using an SVM. The system's performance was measured in terms of average SVM classification accuracy and response time, which showed that feature-level fusion using CCA and an SVM classifier improves classification accuracy while requiring less training and testing time. Another study [24] built a reliable multimodal identification system using feature-level fusion, improving recognition accuracy for 40 people from the ORL and CASIA-V1 databases by combining face and iris features. Recognition precision was further evaluated using low-resolution iris images from the MMU-1 database. Four feature extraction techniques were compared for the face-iris features: Principal Component Analysis (PCA) and Fourier Descriptors (FDs) achieved 97.5 percent accuracy, while the Gray Level Co-occurrence Matrix (GLCM) and Local Binary Pattern (LBP) texture analysis techniques reached 100 percent accuracy.
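A linear soft-margin SVM on fused feature vectors can be sketched without external libraries using the Pegasos stochastic sub-gradient solver. This is a swapped-in, self-contained solver chosen for illustration; neither [23] nor this study specifies its kernel or solver here, and the labels (+1 genuine, -1 impostor), the regularization `lam` and the synthetic data are hypothetical.

```python
import numpy as np


def train_linear_svm(x, y, lam=0.01, epochs=20, seed=0):
    """Pegasos: stochastic sub-gradient descent on the primal hinge loss.

    x: (n, d) fused feature vectors; y: labels in {-1, +1}.
    A constant column is appended so the bias is learned as an extra weight
    (it is regularized along with the other weights in this sketch).
    """
    rng = np.random.default_rng(seed)
    xb = np.hstack([x, np.ones((x.shape[0], 1))])
    w = np.zeros(xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(y)):
            t += 1
            eta = 1.0 / (lam * t)               # decreasing step size
            margin = y[i] * (xb[i] @ w)         # margin under the current weights
            w *= (1 - eta * lam)                # shrink: gradient of the L2 term
            if margin < 1:                      # hinge loss active: push toward y[i]
                w += eta * y[i] * xb[i]
    return w


def predict(w, x):
    xb = np.hstack([x, np.ones((x.shape[0], 1))])
    return np.where(xb @ w >= 0, 1, -1)


rng = np.random.default_rng(4)
genuine = rng.normal(loc=2.0, size=(100, 5))    # hypothetical fused vectors, label +1
impostor = rng.normal(loc=-2.0, size=(100, 5))  # hypothetical fused vectors, label -1
x = np.vstack([genuine, impostor])
y = np.concatenate([np.ones(100), -np.ones(100)])
w = train_linear_svm(x, y)
acc = np.mean(predict(w, x) == y)
print(f"training accuracy: {acc:.2f}")
```

In practice a kernel SVM from an established library such as LIBSVM would normally be used; the sketch only shows how fused vectors feed a margin-based classifier.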

In [25], the performance of multimodal biometric identification was improved by fusing fingerprint and iris at the feature level. The security phase of the multimodal system was realized by encrypting the fused image with the AES algorithm, followed by matching to validate the encryption.

The main goal of the novel strategy in [26] is to protect fingerprint and signature data against theft and intrusion by fusing them at the feature level, which eliminates the possibility of faked data. In [27], biometric information from a person's face, iris and signature modalities was fused at the feature level, using a simple, reliable and highly effective wavelet-based feature extraction method for all three traits. The proposed multi-biometric system outperformed the unimodal systems, with notable results in terms of FAR and FRR, and obtained a maximum accuracy of 98.77 percent on the chimera database.

In another study, [12] introduced Discriminant Correlation Analysis (DCA) as a feature-level fusion technique that incorporates class associations into the correlation analysis of the feature sets, with the goal of finding transformations that maximize pair-wise correlations across the two feature sets while also separating the classes within each set. The method has very low computational complexity and can be used in real-time applications. In [28], fingerprint, palm print and finger knuckle print were fused at the feature level for personal authentication. The distinctive properties of these modalities were extracted using the Gray Level Co-occurrence Matrix (GLCM) technique, and recognizing a person with an improved Artificial Neural Network (ANN) combined with the Particle Swarm Optimization (PSO) algorithm yielded high levels of security, specificity and sensitivity during classification.

Another study [29] examined numerous security flaws, various forms of data transfer, and errors such as FAR, FRR and FTE that occur during data collection, and compared multimodal with unimodal biometric systems. The approach verifies an individual's identity using several biometric identifiers and proposes enhancing the multimodal system by merging multiple biometric samples. In [30], an effective matching technique was put forth in which three biometric modalities (finger vein, face and fingerprint) were fused at the feature level; it is based on a secondary calculation of the Fisher vector. Experimental findings show that the technique achieves a superior identification rate and offers higher security than unimodal biometric systems, providing a useful strategy for enhancing the security of the Internet of Mobile Things (IoMT) platform.

III. METHODOLOGY

A. Image Acquisition

Image acquisition refers to the simultaneous capture of face and iris images using an iris camera; a CMITECH IRIS Camera device was used for this purpose. The subjects were volunteer students and staff of Ladoke Akintola University of Technology, Ogbomoso (LAUTECH), recruited on campus. The study involved 840 subjects, each captured with three different expressions for the two biometric traits. A total of 7560 samples were captured; 70 percent of the dataset was used for training and 30 percent for testing.
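The 70/30 split amounts to 5,292 training and 2,268 test samples out of 7,560. A minimal sketch of a random split is shown below; the shuffling, the fixed seed and the assumption that samples were split individually (rather than by subject) are illustrative, since the partitioning procedure is not described.

```python
import random

TOTAL_SAMPLES = 7560   # total captured samples reported for the dataset
TRAIN_FRACTION = 0.70  # 70 percent training, 30 percent testing

indices = list(range(TOTAL_SAMPLES))
random.Random(42).shuffle(indices)            # hypothetical fixed seed for reproducibility
n_train = round(TOTAL_SAMPLES * TRAIN_FRACTION)
train_idx, test_idx = indices[:n_train], indices[n_train:]
print(len(train_idx), len(test_idx))  # 5292 2268
```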

B. Image Preprocessing

Face and iris images were preprocessed to retain only the regions that contain useful information, and each modality was preprocessed differently. Face images were cropped, resized and enhanced using histogram equalization. Iris images underwent iris localization (segmentation) and iris normalization.
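Two of these preprocessing steps can be sketched with NumPy alone. The first function performs global histogram equalization on an 8-bit face image. The second unwraps the iris annulus into a fixed-size rectangle using Daugman's rubber sheet model, a common choice for iris normalization; the study does not name its normalization method, so this choice, the 16-by-64 output size, nearest-neighbour sampling and the assumption that segmentation already supplies the pupil and iris circles are all hypothetical.

```python
import numpy as np


def equalize_histogram(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization for an 8-bit grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    if cdf[-1] == cdf_min:  # constant image: nothing to stretch
        return img.copy()
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    return np.clip(lut, 0, 255).astype(np.uint8)[img]  # map every pixel via the lookup table


def rubber_sheet(img, pupil, iris, radial=16, angular=64):
    """Unwrap the iris annulus into a radial-by-angular rectangle
    (Daugman-style), sampling the nearest pixel.

    pupil, iris: (x_center, y_center, radius) circles from segmentation.
    """
    theta = np.linspace(0, 2 * np.pi, angular, endpoint=False)
    r = np.linspace(0, 1, radial)[:, None]        # column vector for broadcasting
    xp = pupil[0] + pupil[2] * np.cos(theta)       # points on the pupil boundary
    yp = pupil[1] + pupil[2] * np.sin(theta)
    xi = iris[0] + iris[2] * np.cos(theta)         # points on the iris (limbus) boundary
    yi = iris[1] + iris[2] * np.sin(theta)
    x = (1 - r) * xp + r * xi                      # linear interpolation between boundaries
    y = (1 - r) * yp + r * yi
    xs = np.clip(np.rint(x).astype(int), 0, img.shape[1] - 1)
    ys = np.clip(np.rint(y).astype(int), 0, img.shape[0] - 1)
    return img[ys, xs]


rng = np.random.default_rng(2)
dark = rng.integers(40, 90, size=(64, 64), dtype=np.uint8)  # hypothetical low-contrast face crop
eq = equalize_histogram(dark)
print(dark.min(), dark.max(), "->", eq.min(), eq.max())
eye = rng.integers(0, 256, size=(120, 160), dtype=np.uint8)  # hypothetical eye image
strip = rubber_sheet(eye, pupil=(80, 60, 12), iris=(80, 60, 40))
print(strip.shape)  # (16, 64)
```

Equalization stretches the narrow 40 to 89 intensity range of the synthetic face crop to the full 0 to 255 range, and the rubber sheet produces a fixed-size iris strip regardless of pupil dilation.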

C. Feature Extraction

Feature extraction reduces large amounts of redundant data and thereby lowers the computational complexity of the system. In this study, fixed-length FaceCode and IrisCode vectors were constructed by extracting features from the preprocessed face and iris images with Principal Component Analysis (PCA) to achieve good system performance. PCA captured the distinguishing traits of the face and iris as a set of Eigenfaces and Eigeniris. The steps taken are outlined below.

1. Creation of a set of Eigenfaces and Eigeniris: the Eigenfaces and Eigeniris were obtained through the following steps:

a. A training set of face and iris images, all captured under the same lighting conditions, was created.

b. The average (mean) image vector of all training images was calculated.

c. Each training image was represented as a 1D vector, and the mean image vector was subtracted from it.

d. The resulting mean-centered vectors are the unique (normalized) image vectors.

e. The covariance matrix of the normalized image vectors was computed, and its eigenvectors and eigenvalues were calculated. Each eigenvector has the same dimensionality (number of components) as the original images and can therefore be regarded as an image; consequently, the eigenvectors are referred to as Eigenfaces and Eigeniris.

f. The eigenvalues were then sorted in descending order and the eigenvectors arranged in the same order. Only the Eigenfaces and Eigeniris with the highest eigenvalues were retained. These were later used to build the feature vectors of both training and testing images, representing both existing and new faces and irises.

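2. Calculation of Feature Vectors: For each image in the training set, the weight (feature) vector was calculated by projecting its normalized image vector onto the retained Eigenfaces or Eigeniris.

3. Input a Test Image: An image was taken from the set of testing images.

4. Calculation of Feature Vector for Test Images: The feature vector of each test image was calculated in the same way, by subtracting the training mean image vector and projecting the result onto the same Eigenfaces or Eigeniris.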
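The Eigenface and Eigeniris steps above can be sketched in NumPy as follows. Rather than forming the full pixel covariance matrix, the sketch computes eigenvectors of the small m-by-m matrix of mean-centered training vectors (the standard trick from the Eigenfaces literature), which yields the same leading eigenvectors. The function names, image sizes and value of `k` are illustrative assumptions, and `k` must be smaller than the number of training images. The same functions would be applied separately to face and iris images.

```python
import numpy as np


def fit_eigenspace(train: np.ndarray, k: int):
    """Build an eigenspace (Eigenfaces or Eigeniris) from training images.

    train: (m, h, w) stack of preprocessed images of one modality.
    Returns the mean vector and the top-k unit eigenvectors as rows of (k, h*w).
    """
    a = train.reshape(len(train), -1).astype(float)  # each image -> 1D vector
    mean = a.mean(axis=0)                             # step b: mean image vector
    phi = a - mean                                    # steps c-d: mean-centered vectors
    # Step e: if phi @ phi.T v = lam v, then phi.T v is an eigenvector of the
    # (unnormalized) pixel covariance phi.T @ phi with the same eigenvalue.
    vals, vecs = np.linalg.eigh(phi @ phi.T)
    order = np.argsort(vals)[::-1][:k]                # step f: largest eigenvalues first
    basis = (phi.T @ vecs[:, order]).T
    basis /= np.linalg.norm(basis, axis=1, keepdims=True)
    return mean, basis


def project(images: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Steps 2 and 4: weight (feature) vectors for training or test images."""
    flat = images.reshape(len(images), -1).astype(float)
    return (flat - mean) @ basis.T


rng = np.random.default_rng(3)
train_faces = rng.random((20, 32, 32))  # 20 hypothetical 32x32 preprocessed face crops
test_face = rng.random((1, 32, 32))     # step 3: one hypothetical test image
mean, eigenfaces = fit_eigenspace(train_faces, k=10)
train_w = project(train_faces, mean, eigenfaces)  # shape (20, 10)
test_w = project(test_face, mean, eigenfaces)     # shape (1, 10)
```

Each image is thus reduced from 1,024 pixel values to a 10-element weight vector, and the test image is projected with the training mean and basis so both live in the same feature space.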

D. Feature Selection Using Modified Firefly Algorithm

To improve classification performance and reduce the dimensionality of the extracted features, the best features were selected in this study using the firefly algorithm (FFA) as a feature selection technique.
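A minimal sketch of the standard firefly algorithm adapted to binary feature selection is given below. It uses the usual attractiveness rule, beta = beta0 * exp(-gamma * r^2), and a sigmoid transfer function to turn continuous positions into feature masks. The parameter values, the transfer function and the toy fitness function (a hypothetical stand-in for the SVM error a real wrapper would compute) are assumptions; this is not the authors' implementation, and it omits their modifications.

```python
import numpy as np


def binary_firefly(fitness, n_features, n_fireflies=15, n_iter=30,
                   beta0=1.0, gamma=1.0, alpha=0.2, seed=0):
    """Binary firefly algorithm for feature selection (minimizes fitness)."""
    rng = np.random.default_rng(seed)
    pos = rng.random((n_fireflies, n_features))  # continuous positions in [0, 1]

    def to_mask(p):
        # Sigmoid transfer: positions near 1 are likely to select the feature.
        prob = 1.0 / (1.0 + np.exp(-10.0 * (p - 0.5)))
        return rng.random(p.shape) < prob

    masks = np.array([to_mask(p) for p in pos])
    scores = np.array([fitness(m) for m in masks])
    best = int(np.argmin(scores))
    best_mask, best_score = masks[best].copy(), scores[best]

    for _ in range(n_iter):
        for i in range(n_fireflies):
            for j in range(n_fireflies):
                if scores[j] < scores[i]:  # firefly j is brighter (lower error)
                    r2 = np.sum((pos[i] - pos[j]) ** 2)
                    beta = beta0 * np.exp(-gamma * r2)  # attractiveness decays with distance
                    step = alpha * (rng.random(n_features) - 0.5)
                    pos[i] = np.clip(pos[i] + beta * (pos[j] - pos[i]) + step, 0.0, 1.0)
                    masks[i] = to_mask(pos[i])
                    scores[i] = fitness(masks[i])
                    if scores[i] < best_score:
                        best_mask, best_score = masks[i].copy(), scores[i]
        alpha *= 0.97  # gradually reduce the random step
    return best_mask, float(best_score)


relevant = np.zeros(12, dtype=bool)
relevant[:3] = True  # hypothetical: only the first 3 features carry identity information


def toy_fitness(mask):
    """Hypothetical stand-in for (1 - SVM validation accuracy) plus a size penalty."""
    missed = np.sum(relevant & ~mask) / relevant.sum()
    return 0.9 * missed + 0.1 * mask.mean()


mask, score = binary_firefly(toy_fitness, n_features=12)
print(np.flatnonzero(mask), round(score, 3))
```

In a real wrapper, the fitness would typically combine the classifier's validation error on the selected features with a penalty on the number of features; in this sketch the brightest firefly simply stays in place.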

Conclusion

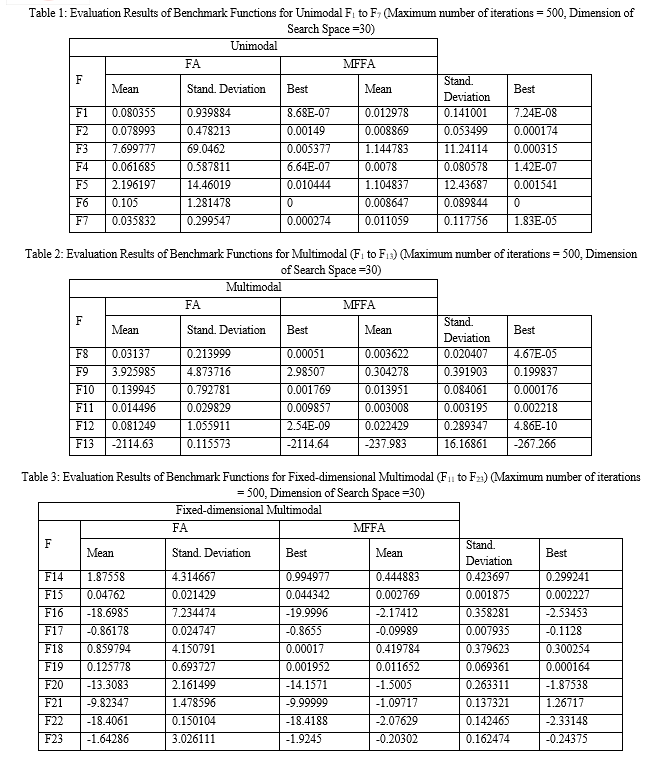

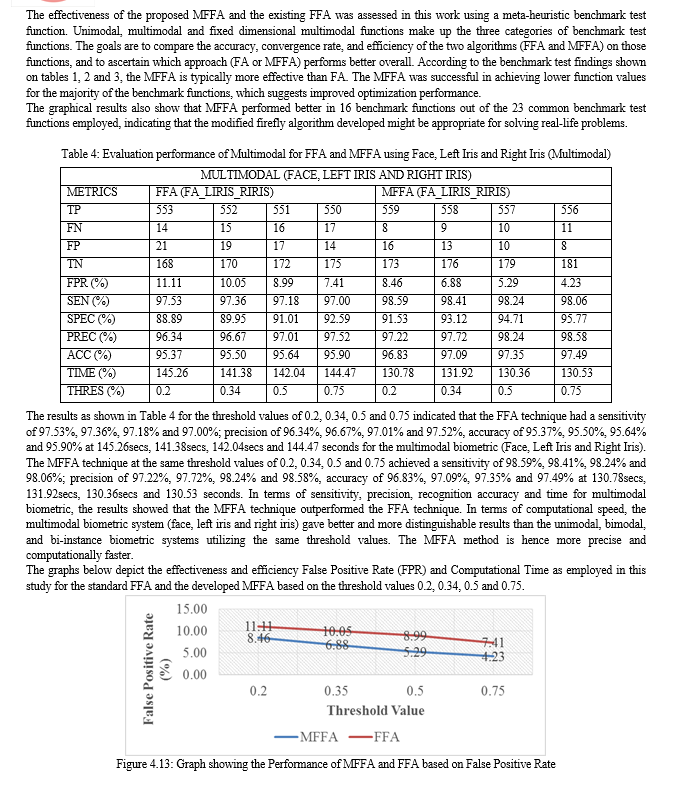

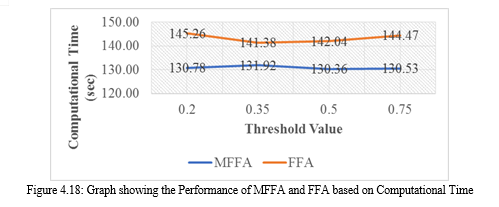

To enhance a multimodal biometric authentication system, a novel meta-heuristic approach to feature selection using a modified firefly algorithm (MFFA) was devised. The objective was to reduce the dimensionality of the feature vectors and to identify the most important and well-balanced features from facial images and locally acquired iris samples of individuals of African descent. By incorporating a chaotic sinusoidal map function and the roulette wheel approach into the existing FFA, the MFFA aimed to improve classification performance and avoid getting trapped in local optima. The MFFA effectively identified relevant characteristics while reducing the high-dimensional feature vector space, making it a valuable feature selection method for biometric identification systems. Our experiments demonstrated the efficacy of this technique in integrating multimodal feature sets. Furthermore, the MFFA exhibited high computational efficiency and suitability for real-time applications.
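The two components named above can be illustrated in isolation. The sketch below generates a chaotic sequence with the sinusoidal map, x_{k+1} = a x_k^2 sin(pi x_k), using the commonly quoted values a = 2.3 and x0 = 0.7, and implements roulette-wheel (fitness-proportionate) selection. How the MFFA combines them with the FFA update is not described here, so the parameter values and the suggested uses in the comments (for example, replacing uniform random draws with chaotic values, or choosing which brighter firefly to move toward) are assumptions.

```python
import math
import random


def sinusoidal_map(n, x0=0.7, a=2.3):
    """Return n values of the chaotic sinusoidal map
    x_{k+1} = a * x_k**2 * sin(pi * x_k), which stays in (0, 1) for these
    parameter values. A chaotic FFA variant could use such values in place
    of uniform random numbers (an assumption about how the MFFA uses them)."""
    values, x = [], x0
    for _ in range(n):
        x = a * x * x * math.sin(math.pi * x)
        values.append(x)
    return values


def roulette_wheel(weights, rng=random):
    """Return an index chosen with probability proportional to its weight.

    Weights must be non-negative with a positive sum; zero-weight entries
    are never chosen.
    """
    total = sum(weights)
    pick = rng.random() * total  # uniform in [0, total)
    running = 0.0
    for idx, w in enumerate(weights):
        running += w
        if pick < running:
            return idx
    return max(range(len(weights)), key=lambda k: weights[k])  # round-off guard


chaos = sinusoidal_map(5)
print([round(v, 4) for v in chaos])

rng = random.Random(0)
errors = [0.30, 0.10, 0.05, 0.50]            # hypothetical classification errors
weights = [1.0 / (1.0 + e) for e in errors]  # lower error -> larger weight
print(roulette_wheel(weights, rng))
```

Because roulette-wheel selection maximizes, a minimized quantity such as classification error has to be converted into a non-negative "goodness" weight first, as the hypothetical 1 / (1 + error) mapping does here.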

References

[1] S. Shrivastava, “Biometric: Types and its Applications,” Int. J. Sci. Res., pp. 10–11, 2015.

[2] E. O. Eludire, A. A., & Adio, “Biometrics Technologies for Secured Identification and Personal Verification,” Biostat. Biometrics Open Access J., vol. 6, no. 2, pp. 42–45, 2018, doi: 10.19080/bboaj.2018.06.555683.

[3] A. Kavitha and A. Vanaja, “ANALYSIS OF AUTHENTICATION SYSTEM IN DIFFERENT,” Int. J. Latest Trends Eng. Technol., pp. 13–17, 2017.

[4] S. M. Prakash, P. Betty, and K. Sivanarulselvan, “Fusion of Multimodal Biometrics using Feature and Score Level Fusion,” International J. Appl. Inf. Commun. Engineering, vol. 2, no. 4, pp. 52–56, 2016.

[5] F. Yahya, H. Nasir, and K. Kadir, “Multimodal Biometric Algorithm: A Survey,” Biotechnology, vol. 15, no. 5, pp. 119–124, 2016, doi: 10.3923/biotech.2016.119.124.

[6] H. Jaafar and D. A. Ramli, “A Review of Multibiometric System with Fusion Strategies and Weighting Factor,” Int. J. Comput. Sci. Eng., vol. 2, no. 04, pp. 158–165, 2013.

[7] R. O. Mahmoud, M. M. Selim, and O. A. Muhi, “Fusion time reduction of a feature level based multimodal biometric authentication system,” Int. J. Sociotechnology Knowl. Dev., vol. 12, no. 1, pp. 67–83, 2020, doi: 10.4018/IJSKD.2020010104.

[8] D. Lathika, B. A., & Devaraj, “Artificial Neural Network Based Multimodal Biometrics Recognition System,” in International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), 2014, pp. 973–978.

[9] E. S. Nil and A. Chilambuchelvan, “Multimodal biometric authentication algorithm at score level fusion using hybrid optimization,” Wirel. Commun. Technol., vol. 2, no. 1, pp. 1–12, 2018, doi: 10.18063/wct.v2i1.415.

[10] S. Soviany, V. Sandulescu, S. Pușcoci, C. Soviany, and M. Jurian, “An optimized biometric system with intra- and inter-modal feature-level fusion,” Proc. 9th Int. Conf. Electron. Comput. Artif. Intell. ECAI 2017, vol. 2017-Janua, pp. 1–8, 2017, doi: 10.1109/ECAI.2017.08166466.

[11] A. Saha, “An Expert Multi-Modal Person Authentication System Based on Feature Level Fusion of Iris and Retina Recognition,” 2019 Int. Conf. Electr. Comput. Commun. Eng., pp. 1–5, 2019.

[12] M. Haghighat, M. Abdel-Mottaleb, and W. Alhalabi, “Discriminant Correlation Analysis: Real-Time Feature Level Fusion for Multimodal Biometric Recognition,” IEEE Trans. Inf. Forensics Secur., vol. 11, no. 9, pp. 1984–1996, 2016, doi: 10.1109/TIFS.2016.2569061.

[13] C. Kant, “Analysis of Different Fusion and Normalization Techniques,” Int. J. Adv. Res. Comput. Commun. Eng. ISO, vol. 3297, pp. 363–368, 2007, doi: 10.17148/IJARCCE.2018.7369.

[14] P. Sai Shreyashi, R. Kalra, M. Gayathri, and C. Malathy, “A Study on Multimodal Approach of Face and Iris Modalities in a Biometric System,” Lect. Notes Networks Syst., vol. 176 LNNS, no. May, pp. 581–594, 2021, doi: 10.1007/978-981-33-4355-9_43.

[15] S. N. Garg, R. Vig, and S. Gupta, “A Survey on Different Levels of Fusion in Multimodal Biometrics,” vol. 10, no. November, pp. 1–11, 2017, doi: 10.17485/ijst/2017/v10i44/120575.

[16] U. Gawande, M. Zaveri, and A. Kapur, “A Novel Algorithm for Feature Level Fusion Using SVM Classifier for Multibiometrics-Based Person Identification,” Appl. Comput. Intell. Soft Comput., vol. 2013, pp. 1–11, 2013, doi: 10.1155/2013/515918.

[17] M. Eskandari and O. Sharifi, “Optimum scheme selection for face-iris biometric,” IET Biometrics, vol. 6, no. 5, pp. 334–341, 2017, doi: 10.1049/iet-bmt.2016.0060.

[18] I. Ahmia and M. Aïder, “A novel metaheuristic optimization algorithm: The monarchy metaheuristic,” Turkish J. Electr. Eng. Comput. Sci., vol. 27, no. 1, pp. 362–376, 2019, doi: 10.3906/elk-1804-56.

[19] S. Sandhya and R. Fernandes, “Lip Print: An Emerging Biometrics Technology - A Review,” in 2017 IEEE International Conference on Computational Intelligence and Computing Research, ICCIC 2017, 2018, pp. 1–6, doi: 10.1109/ICCIC.2017.8524457.

[20] R. Mythily and W. A. Banu, “Feature selection for optimization algorithms: Literature survey,” Journal of Engineering and Applied Sciences, vol. 12, no. Specialissue1, pp. 5735–5739, 2017, doi: 10.3923/jeasci.2017.5735.5739.

[21] S. D. Jamdar and Y. Golhar, “Implementation of unimodal to multimodal biometrie feature level fusion of combining face iris and ear in multi-modal biometric system,” Proc. - Int. Conf. Trends Electron. Informatics, ICEI 2017, vol. 2018-Janua, pp. 625–629, 2018, doi: 10.1109/ICOEI.2017.8300778.

[22] V. Talreja, M. C. Valenti, and N. M. Nasrabadi, “Deep Hashing for Secure Multimodal Biometrics,” IEEE Trans. Inf. Forensics Secur., vol. 16, pp. 1306–1321, 2021, doi: 10.1109/TIFS.2020.3033189.

[23] C. Kamlaskar and A. Abhyankar, “Multimodal System Framework for Feature Level Fusion based on CCA with SVM Classifier,” Proc. 2020 IEEE-HYDCON Int. Conf. Eng. 4th Ind. Revolution, HYDCON 2020, 2020, doi: 10.1109/HYDCON48903.2020.9242785.

[24] A. B. Channegowda and H. N. Prakash, “Image fusion by discrete wavelet transform for multimodal biometric recognition,” IAES Int. J. Artif. Intell., vol. 11, no. 1, p. 229, 2022, doi: 10.11591/ijai.v11.i1.pp229-237.

[25] Z. T. M. Al-Ta’l and O. Y. Abdulhameed, “Features extraction of fingerprints using firefly algorithm,” SIN 2013 - Proc. 6th Int. Conf. Secur. Inf. Networks, no. November, pp. 392–395, 2013, doi: 10.1145/2523514.2527014.

[26] M. Leghari, S. Memon, and A. A. Chandio, “Feature-Level Fusion of Fingerprint and Online Signature for Multimodal Biometrics,” pp. 2–5, 2018.

[27] P. Cao, “Comparative Study of Several Improved Firefly,” no. 6117411, pp. 910–914, 2016.

[28] L. N. Evangelin and A. L. Fred, “Feature level fusion approach for personal authentication in multimodal biometrics,” ICONSTEM 2017 - Proc. 3rd IEEE Int. Conf. Sci. Technol. Eng. Manag., vol. 2018-Janua, pp. 148–151, 2017, doi: 10.1109/ICONSTEM.2017.8261272.

[29] G. Kaur and S. Singh, “Comparative Analysis of Multimodal Biometric System,” vol. 27, no. 63019, pp. 129–137, 2017.

[30] Y. Xin, L. Kong, Z. Liu, C. Wang, and H. Zhu, “Multimodal Feature-level Fusion for Biometrics Identification System on IoMT Platform,” vol. 3536, no. c, 2018, doi: 10.1109/ACCESS.2018.2815540.

Copyright

Copyright © 2023 Oyelakun T. A., Agbaje M.O., Ayankoya F.Y, Ogu E. C. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET54260

Publish Date : 2023-06-19

ISSN : 2321-9653

Publisher Name : IJRASET

