Ijraset Journal For Research in Applied Science and Engineering Technology

Comprehensive Overview of GAN Technology: Architecture, Training, and Applications

Authors: Vaishali Bhavani

DOI Link: https://doi.org/10.22214/ijraset.2024.61016

Abstract

Generative Adversarial Networks (GANs) are a groundbreaking artificial intelligence technology that transformed generative modeling through a novel adversarial training framework. A GAN is made up of two neural networks, the generator and the discriminator, which compete in a minimax game: the generator produces counterfeit data samples, and the discriminator tries to distinguish real data from fake. This adversarial training yields highly proficient generative models capable of producing data that closely resembles real-world samples. GANs have achieved significant results in a variety of applications, including image synthesis, style transfer, medical imaging, and natural language processing. However, GANs suffer from problems such as mode collapse and training instability. Current research aims to address these problems and to strengthen GAN architectures and training approaches. Their adaptability makes GANs an intriguing technology for a wide range of sectors and artistic activities, with consequences for the growth of artificial intelligence and for breakthroughs in generative modeling. GANs combine the power of competitive training with the capacity to generate highly realistic data in a variety of domains. We begin with the fundamental concepts and underlying principles of GANs and their general architecture, then survey their possible uses, and finally discuss the obstacles and potential developments in this rapidly evolving field.


I. INTRODUCTION

In the constantly evolving field of AI, GANs are a promising foundation for future developments. Neural networks are readily deceived by noise and can make inaccurate predictions with high confidence, and this difficulty in obtaining trustworthy outcomes has driven a substantial shift in the technology in recent years. Ian Goodfellow and his colleagues introduced GANs in 2014. The success of GANs rests on their architecture, which consists of two neural networks: a generator and a discriminator. The generator typically uses deconvolutional (transposed convolutional) layers to transform a random input into an imitation data sample, whereas the discriminator employs convolutional layers to evaluate incoming data before making a binary classification decision. Put differently, the generator's goal is to produce fake data, whereas the discriminator concentrates on differentiating between real and artificial samples. This adversarial training process improves both networks, culminating in the generation of realistic data. GANs thus combine a generative model with a discriminative model. The generator draws an input vector at random, typically from a Gaussian distribution, and maps it to a candidate sample, while the discriminator classifies each sample as either real data or machine-generated. Within a GAN, a zero-sum game is played between the discriminator and the generator as each attempts to outperform the other: when the discriminator is able to distinguish authentic from counterfeit images, the generator is considered to have lost, and the generator wins if it can pass off its generated images as authentic. GANs have demonstrated effectiveness in both supervised and unsupervised learning settings. To improve GAN stability, scalability, and performance, a number of architectural advancements and modifications have been developed. These include techniques such as spectral normalization, self-attention mechanisms, and progressive growing. Several GAN variants have been created to meet various challenges and applications. Notable varieties include Conditional GANs (cGANs), which generate data based on given conditions or class labels; Wasserstein GANs (WGANs), which use an alternative training objective to improve stability; and StyleGANs, which excel at generating high-resolution images in a wide range of styles. Because of its distinct features and advantages, each variant is suited to different disciplines and uses.

II. LITERATURE SURVEY

The study in [1] investigates the notion of GANs, their different forms, and their applications. GANs are especially effective in image-based applications, as they produce high-quality pictures even at reduced resolutions. They can also synthesize images from text descriptions. GANs are gaining popularity not just because of their capacity to generate fake samples, but also because of their promise for future use in industry. GANs offer theoretical and algorithmic opportunities: small adjustments to GAN structures, combined with other deep learning algorithms, can open up a wide range of future applications.

The study in [2] provides a complete overview of Generative Adversarial Networks (GANs), a valuable tool for creating realistic data across several fields. It discusses the GAN architecture, training techniques, applications, assessment measures, problems, and future approaches.

The paper discusses GANs' historical evolution, significant design decisions, and various learning approaches. It also looks into GAN applications such as image synthesis, natural language processing (NLP), and audio synthesis. The report also highlights problems with GAN research, such as training instability, mode collapse, and ethical concerns. Improving scalability, creating new architectures, adding domain expertise, and investigating novel applications are some of the future paths that GAN research will pursue.

The authors of [3] examine the potential of Generative Adversarial Networks (GANs) to leverage large amounts of unlabeled image data that remain closed to deep representation learning. This potential, together with the capacity of GANs to learn deep, nonlinear mappings from latent spaces to data spaces and back, is driving the increasing interest in GANs. It opens several opportunities for theoretical and algorithmic advancement, as well as novel applications.

III. ARCHITECTURE OF GANs

A Generative Adversarial Network (GAN) consists of two main components: the Generator and the Discriminator.

A. Generator Model

The generator model is the component of a Generative Adversarial Network (GAN) that generates new, realistic data. The generator accepts random noise as input and turns it into complex data samples such as text and images. It is usually implemented as a deep neural network whose layers of learnable parameters come, through training, to represent the underlying distribution of the training data. During training, the generator uses backpropagation to fine-tune its parameters, producing samples that closely resemble real data. What makes a generator effective is its capacity to create high-quality, diverse samples that can mislead the discriminator.

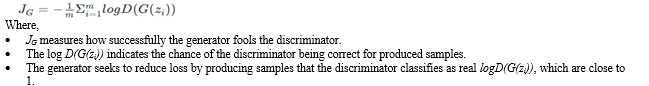

- Generator Loss

In a GAN, the generator’s goal is to create synthetic samples that are convincing enough to trick the discriminator. The generator does this by minimizing its loss function, J_G. The loss is lowest when the log probability that the discriminator assigns to generated samples being real is maximized, which means that the discriminator is very likely to classify the generated samples as real.
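
As a sketch of the loss just described, one common way to write it is the non-saturating form below. The notation is an assumption for illustration and is not taken from the original figure: m is the minibatch size, z_i are the noise vectors fed to the generator G, and D(·) is the discriminator's estimated probability that its input is real.

```latex
J_G = -\frac{1}{m} \sum_{i=1}^{m} \log D\bigl(G(z_i)\bigr)
```

Minimizing J_G is the same as maximizing the average log probability that the discriminator labels generated samples as real, which matches the description above.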

B. Discriminator Model

To distinguish between synthetic and authentic input, Generative Adversarial Networks (GANs) employ a neural network known as the discriminator. The discriminator operates as a binary classifier, analyzing incoming samples and assigning each a probability of being authentic. Over the course of training, the discriminator gradually refines its parameters and becomes better at telling real data from the dataset apart from fake samples provided by the generator. When handling image data, the architecture often includes convolutional layers; other suitable structures are used for other data types. The adversarial training procedure seeks to maximize the discriminator’s ability to correctly identify generated instances as counterfeit and genuine instances as real. The discriminator becomes more discerning as a consequence of its interaction with the generator, which in turn pushes the GAN to create exceptionally realistic artificial data.

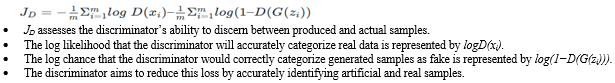

- Discriminator Loss

To accurately classify both generated and real samples, the discriminator minimizes the negative log-likelihood of the correct labels. This loss, given by the corresponding equation, pushes the discriminator to classify generated instances as fake and real instances as genuine:

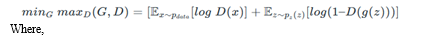

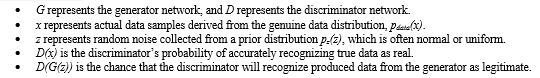
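
The discriminator loss described above is commonly written as a binary cross-entropy. The notation here is an assumption for illustration: m is the minibatch size, x_i are real samples, z_i are noise vectors, G is the generator, and D(·) is the discriminator's estimated probability that its input is real.

```latex
J_D = -\frac{1}{m} \sum_{i=1}^{m} \log D(x_i) \;-\; \frac{1}{m} \sum_{i=1}^{m} \log\bigl(1 - D(G(z_i))\bigr)
```

The first term is small when real samples receive a probability near 1, and the second is small when generated samples receive a probability near 0.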

- Minimax Loss

The minimax loss equation for a Generative Adversarial Network (GAN) is as follows:
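
In the notation of the original GAN formulation by Goodfellow et al. (2014), with p_data the data distribution and p_z the noise prior, the minimax objective is usually stated as:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_z(z)}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator maximizes V and the generator minimizes it. In this form the generator minimizes log(1 - D(G(z))). The generator loss described under Generator Loss, which instead maximizes log D(G(z)), is the widely used non-saturating alternative: it pushes in the same direction but gives stronger gradients early in training.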

IV. HOW DOES A GAN WORK?

A generative adversarial network system comprises two deep neural networks - the generator network and the discriminator network. Both networks train in an adversarial game, where one tries to generate new data, and the other attempts to predict if the output is fake or real data.

Technically, a GAN works as shown in Fig. 1. A complex mathematical formulation forms the basis of the entire computing process, but the following is a simplified overview:

- The generator neural network analyzes the training set and identifies data attributes.

- The discriminator neural network also analyzes the initial training data and distinguishes between the attributes independently.

- The generator modifies some data attributes by adding noise (or random changes) to certain attributes.

- The generator passes the modified data to the discriminator.

- The discriminator calculates the probability that the generated output belongs to the original dataset.

- The discriminator's output provides feedback to the generator, which uses it to adjust its parameters so that the next cycle's output is harder to tell apart from real data.

The generator attempts to maximize the probability of a mistake by the discriminator, while the discriminator attempts to minimize the probability of error. Over the training iterations, both the generator and the discriminator evolve and compete with each other until they reach an equilibrium state. In the equilibrium state, the discriminator can no longer distinguish synthesized data from real data. At this point, the training process is over.
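
The cycle above can be sketched on a deliberately tiny problem. The example below is a minimal illustration, not the method of any particular paper: it assumes one-dimensional data, a linear generator G(z) = a*z + b, a logistic discriminator D(x) = sigmoid(w*x + c), hand-derived gradients, and plain gradient descent with the discriminator updated before the generator. The function names (`gan_step`, `train`), the parameter names, and the default hyperparameters are all hypothetical.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def gan_step(params, real_batch, noise_batch, lr=0.1, eps=1e-12):
    """One alternating update: discriminator first, then generator.

    params holds generator weights a, b (G(z) = a*z + b) and
    discriminator weights w, c (D(x) = sigmoid(w*x + c)).
    Both batches must have the same length.
    Returns (new_params, d_loss, g_loss).
    """
    a, b, w, c = params["a"], params["b"], params["w"], params["c"]
    m = len(real_batch)
    fake_batch = [a * z + b for z in noise_batch]

    # Discriminator loss: -(1/m) * sum(log D(x) + log(1 - D(G(z))))
    d_real = [sigmoid(w * x + c) for x in real_batch]
    d_fake = [sigmoid(w * x + c) for x in fake_batch]
    d_loss = -sum(math.log(r + eps) + math.log(1.0 - f + eps)
                  for r, f in zip(d_real, d_fake)) / m

    # Hand-derived gradients of d_loss with respect to w and c.
    grad_w = (sum((r - 1.0) * x for r, x in zip(d_real, real_batch))
              + sum(f * x for f, x in zip(d_fake, fake_batch))) / m
    grad_c = (sum(r - 1.0 for r in d_real) + sum(d_fake)) / m
    w, c = w - lr * grad_w, c - lr * grad_c

    # Generator loss (non-saturating): -(1/m) * sum(log D(G(z))),
    # evaluated against the freshly updated discriminator.
    d_fake = [sigmoid(w * x + c) for x in fake_batch]
    g_loss = -sum(math.log(f + eps) for f in d_fake) / m
    grad_a = sum((f - 1.0) * w * z for f, z in zip(d_fake, noise_batch)) / m
    grad_b = sum((f - 1.0) * w for f in d_fake) / m
    a, b = a - lr * grad_a, b - lr * grad_b

    return {"a": a, "b": b, "w": w, "c": c}, d_loss, g_loss


def train(steps=200, batch_size=64, real_mean=3.0, real_std=0.5, seed=0):
    """Run the adversarial loop on samples from an assumed Gaussian target."""
    rng = random.Random(seed)
    params = {"a": 1.0, "b": 0.0, "w": 0.0, "c": 0.0}
    history = []
    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch_size)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch_size)]
        params, d_loss, g_loss = gan_step(params, real, noise)
        history.append((d_loss, g_loss))
    return params, history
```

A toy model like this may oscillate around the real data mean rather than settle. The sketch is meant to show the order of the updates and how each loss drives its own network, not to serve as a reliable training recipe.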

V. TYPES OF GANs

Generative Adversarial Networks (GANs) have evolved over time, with several varieties tailored for specialized applications or enhancements to the basic architecture. Some well-known varieties of GANs are listed below:

- Standard GAN: The original GAN comprises a generator and a discriminator. The generator aims to create data indistinguishable from genuine data, whereas the discriminator aims to discriminate between real and fake data.

- Deep Convolutional GANs (DCGANs): DCGANs employ convolutional neural networks (CNNs) in both the generator and discriminator. They are well suited to image generation tasks and train relatively stably.

- Conditional GANs (cGANs): In cGANs, both the generator and the discriminator are conditioned on additional information, such as class labels. This enables the generation of data that satisfies predefined conditions.

- Wasserstein GANs (WGANs): WGANs employ the Wasserstein distance as a training objective, which can result in greater stability during training and better convergence than standard GANs.

- Wasserstein GAN with Gradient Penalty (WGAN-GP): This is an improved version of WGANs that includes a gradient penalty term in the loss function to help stabilize training.

- CycleGAN: This form of GAN was created for unpaired image-to-image translation tasks. Using a cycle consistency loss, it learns to translate pictures from one domain to another without the need for paired examples.

- StyleGAN and StyleGAN2: StyleGAN and its sequel, StyleGAN2, are NVIDIA-developed style-based generators that give users greater control over the appearance and properties of the pictures they create.

- BigGAN: BigGAN is meant to produce high-resolution pictures. It employs a heavily regularized generator and trains the discriminator with a variant of the hinge loss.

- Progressive Growing of GANs: This technique begins with low-resolution pictures and gradually increases the resolution throughout training. It enables the creation of high-quality, high-resolution images.

- Self-Attention GANs: These GANs use self-attention mechanisms to capture long-range dependencies in pictures, resulting in higher image quality.

- InfoGAN: InfoGAN extends the GAN objective with an information-theoretic regularization term, enabling the unsupervised learning of disentangled representations.

- Semi-supervised GANs: These GANs are intended for semi-supervised learning tasks in which just a subset of the data is labeled. They combine the GAN objective with a classification objective to use both labeled and unlabeled data.

- StarGAN: StarGAN is intended for multi-domain image-to-image translation. It can translate pictures across several domains using just one model.

- Adversarial Autoencoders (AAEs): Although not precisely GANs, AAEs use the adversarial training framework in conjunction with autoencoders in order to learn compact and meaningful latent representations.

- BEGAN: Boundary Equilibrium GANs use an equilibrium parameter to balance the generator and discriminator during training, resulting in improved convergence and stability.
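
To make the difference between the standard objective and the Wasserstein variants in the list above more concrete, the following sketch computes WGAN critic and generator losses from raw critic scores, applies weight clipping, and evaluates the WGAN-GP gradient penalty for a one-dimensional linear critic f(x) = w*x, whose gradient with respect to x is w everywhere. The function names are hypothetical, and the clipping limit of 0.01 and penalty coefficient of 10 are commonly used values treated here as assumptions.

```python
def critic_loss(scores_real, scores_fake):
    """WGAN critic loss: mean score on generated data minus mean score on real data.

    Minimizing this widens the gap between real and generated scores.
    """
    return sum(scores_fake) / len(scores_fake) - sum(scores_real) / len(scores_real)


def generator_loss(scores_fake):
    """WGAN generator loss: the generator tries to raise the critic's score on its samples."""
    return -sum(scores_fake) / len(scores_fake)


def clip_weights(weights, limit=0.01):
    """Weight clipping, the original WGAN device for keeping the critic roughly Lipschitz."""
    return [max(-limit, min(limit, w)) for w in weights]


def gradient_penalty_linear(w, lam=10.0):
    """WGAN-GP penalty for the linear critic f(x) = w * x.

    The gradient of f with respect to x equals w at every point, so the
    penalty lam * (|gradient| - 1)^2 does not depend on where it is evaluated.
    """
    return lam * (abs(w) - 1.0) ** 2
```

Unlike the discriminator of a standard GAN, the critic outputs unbounded scores rather than probabilities, so no logarithm or sigmoid appears in these losses.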

VI. APPLICATIONS

Generative Adversarial Networks (GANs) have proven useful in a variety of disciplines, thanks to their capacity to create realistic data. GANs have a wide range of applications, including:

- Image Synthesis: GANs excel in producing high-resolution, photorealistic images. They are utilized in various applications, including providing synthetic training data for computer vision tasks, producing realistic pictures for virtual worlds and gaming, and increasing image resolution and quality.

- Image-to-Image Translation: GANs help to translate pictures from one domain to another while retaining semantic meaning. Applications include style transfer, which transfers the style of one picture to another, as well as day-to-night image conversion, semantic segmentation, and object transfiguration.

- Medical Imaging: GANs are used for applications including image denoising, super-resolution, organ segmentation, and disease detection. They help generate synthetic medical images for training deep learning models, augment limited datasets, and improve the interpretability of medical images.

- Natural Language Processing (NLP): GANs have been used to generate text, translate languages, and transfer styles in NLP. They may generate coherent and contextually appropriate content, support dialogue systems, and assist with paraphrasing and summarization tasks.

- Video Generation and Editing: GANs can create realistic videos by extending their image synthesis skills to the temporal domain. They are used for tasks such as video prediction, super-resolution, and video-to-video synthesis, allowing for applications in video editing, content production, and special effects.

- Fashion and Design: GANs are used in the fashion and design sectors to create virtual garment designs, textile patterns, and product visualizations. They assist designers in exploring a wide range of creative options, streamlining the design process, and personalizing fashion recommendations.

- Art and Creativity: GANs have stimulated creativity in art by allowing for the creation of unique artworks, creative style transfer, and remaking existing artworks. Artists and designers use them to experiment with new artistic styles, build digital art installations, and produce multimedia artworks.

- Drug Discovery: GANs are used in drug development to generate unique molecular structures with specific features. They help in virtual screening, molecular design, and chemical synthesis prediction, which speeds up drug discovery and lowers experimentation costs.

- Anomaly Detection: GANs are used for anomaly detection in a variety of sectors, including cybersecurity, manufacturing, and finance. They learn the typical data distribution and detect deviations from it, allowing for early identification of irregularities and possible dangers.

VII. DISCUSSION

A. Advantages

Generative Adversarial Networks (GANs) have various benefits, making them a popular artificial intelligence tool. Some of the main advantages of GANs are:

- High-Quality Data Generation: GANs can generate high-quality, realistic data samples in a variety of domains, including pictures, text, and music. The produced samples are quite similar to real data, making them suitable for applications in computer vision, natural language processing, and creative arts.

- Versatility: GANs may be used for a variety of tasks, including image synthesis, style transfer, text creation, video production, and others. Because of their versatility, they are appropriate for a wide range of applications in many fields and sectors.

- Unsupervised Learning: GANs can learn entirely from raw data without labels, making them well suited to unsupervised learning problems where labeled data is rare or unavailable. This enables GANs to capture the underlying data distribution without any requirement for explicit annotation.

- Data Augmentation: GANs may be used to supplement existing datasets by producing synthetic data samples. This contributes to boosting the diversity and amount of training datasets, hence enhancing the generalization and resilience of machine learning models trained on restricted data.

- Privacy-Preserving Data Generation: GANs enable the creation of synthetic data while protecting the privacy of sensitive information. GANs enable data sharing and cooperation while respecting privacy by producing data that resembles real data distributions but contains no personally identifying information.

- Adaptive Learning and Feedback Loop: GANs use an adaptive learning framework in which the generator and discriminator repeatedly improve their performance based on input from one another. This dynamic training process enables GANs to constantly improve the quality of their produced samples over time.

- Creative Applications: GANs have encouraged innovation in a variety of industries, including art, fashion, and design, by allowing for the creation of new and unique materials. Artists, designers, and makers use GANs to experiment with new artistic forms, develop digital artworks, and push the bounds of creativity.

- Domain Adaptation and Style Transfer: GANs facilitate both by learning mappings between multiple data domains or styles. This allows applications like image-to-image translation, in which GANs convert pictures from one domain to another while retaining semantic content.

- Reduced Manual Annotation Costs: By producing synthetic data, GANs reduce the need for manual annotation efforts, which may be time-consuming and costly. This is especially useful in sectors like medical imaging, where labeled data is limited and costly to obtain.

B. Disadvantages

Generative Adversarial Networks (GANs) have demonstrated extraordinary ability to generate realistic data across several areas. However, they face various problems, which include:

- Mode Collapse: Mode collapse happens when the generator creates a restricted number of sample types, failing to capture the whole data distribution. This results in repeated or low-diversity outputs, which might impede the creation of new and varied samples.

- Training Instability: GAN training is frequently unstable and dependent on hyperparameters, architectural choices, and initialization. The generator and discriminator may fail to reach an equilibrium, with one network overpowering the other, resulting in oscillations or failure to converge.

- Assessment Criteria: The lack of established assessment criteria makes it difficult to assess the quality and variety of generated samples. Common measures such as the Inception Score and the Fréchet Inception Distance (FID) give some insight but may not cover all elements of generation quality.

- Gradient Vanishing and Explosion: During training, gradients in GANs can vanish or explode, especially in deep architectures. This can stifle learning and limit convergence, making it difficult to train deeper and more complicated models successfully.

- Sensitivity to Hyperparameters: GAN performance is sensitive to hyperparameters including learning rates, batch sizes, and architectural decisions. Tuning these hyperparameters requires substantial experimentation and can be time-intensive.

- Model Interpretability: Because GANs are complex and high-dimensional, it is difficult to interpret the learned representations. Interpretable models are required for applications in fields such as healthcare and finance, where transparency and accountability are critical.

- Data Efficiency: GANs often require large volumes of data to produce high-quality results, which may not be easily available, particularly in fields with sparse data. Techniques for increasing data efficiency and working with small datasets are required for practical applications.

- Generalization to Unseen Data: GANs may struggle to generalize to unseen data distributions, especially if the training data does not adequately reflect the variability seen in the target domain. Ensuring robustness and generalization capabilities is critical for implementing GANs in real-world applications.

- Ethical and Security Factors: GANs present ethical questions about the production of synthetic data, including data privacy, bias amplification, and the possibility of deepfakes or fraudulent material. Addressing these challenges necessitates a rigorous review of ethical principles and legislation.
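
As an illustration of the evaluation problem noted above, the Fréchet distance between two Gaussians has a closed form, and in one dimension it reduces to (mu_r - mu_g)^2 + (sigma_r - sigma_g)^2. FID applies the multivariate version of this distance to features from an Inception network. The sketch below assumes plain scalar samples as a stand-in for those features, and the function name is hypothetical. It shows how a collapsed generator that matches the mean of the real data but has no spread still receives a nonzero distance.

```python
import statistics


def frechet_distance_1d(real, generated):
    """Squared Fréchet distance between Gaussians fitted to two scalar samples.

    One-dimensional special case of the formula behind FID:
    (mu_r - mu_g)^2 + (sigma_r - sigma_g)^2.
    """
    mu_r, mu_g = statistics.mean(real), statistics.mean(generated)
    sd_r, sd_g = statistics.pstdev(real), statistics.pstdev(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


real_samples = [1.0, 2.0, 3.0]
collapsed_samples = [2.0, 2.0, 2.0]   # same mean, no diversity (mode collapse)
shifted_samples = [2.0, 3.0, 4.0]     # same spread, wrong mean

collapse_score = frechet_distance_1d(real_samples, collapsed_samples)
shift_score = frechet_distance_1d(real_samples, shifted_samples)
```

A single number like this summarizes both fidelity and diversity at once, which is convenient but also part of why such measures do not cover every aspect of generation quality.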

Conclusion

GANs have attracted interest due to their capacity to generate realistic samples and their potential for future use alongside other deep learning algorithms. They can assist with image and video generation, AI-driven drug discovery, and machine-generated music, movies, photographs, articles, and blogs. This study presents a full guide to GANs, including architecture, loss functions, training techniques, applications, evaluation measures, problems, and future approaches. It discusses the historical evolution of GANs, significant design decisions, and several loss functions used to train GAN models. It also looks at numerous GAN applications, such as image synthesis, natural language processing, and audio synthesis. Training instability, mode collapse, and ethical implications remain challenges in GAN research. This paper aims to promote GAN research and development, highlighting the potential of GANs for data creation, scientific discovery, and creative expression.

References

[1] Dipanshi Singh and Anil Ahlawat, “Generative Adversarial Networks, their various types, A Comparative Analysis, and Applications in Different Areas”, 3rd International Conference on Issues and Challenges in Intelligent Computing Techniques (ICICT), 2022.

[2] Moez Krichen, “Generative Adversarial Networks”, 14th International Conference on Computing Communication and Networking Technologies (ICCCNT), 2023.

[3] Antonia Creswell, Tom White, Vincent Dumoulin, Kai Arulkumaran, Biswa Sengupta, and Anil A. Bharath, “Generative Adversarial Networks”, IEEE Signal Processing Magazine, January 2020.

[4] Jiayi Ma, Hao Zhang, Zhenfeng Shao, Pengwei Liang, and Han Xu, “GANMcC: A Generative Adversarial Network With Multiclassification Constraints for Infrared and Visible Image Fusion”, IEEE Transactions on Instrumentation and Measurement, Vol. 70, 2021.

[5] Chao Zhang, Caiquan Xiong, and Lingyun Wang, “A Research on Generative Adversarial Networks Applied to Text Generation”, 14th International Conference on Computer Science & Education (ICCSE 2019), Toronto, Canada, 2019.

[6] Hongyi Lin, Yang Liu, Shen Li, and Xiaobo Qu, “How Generative Adversarial Networks Promote the Development of Intelligent Transportation Systems: A Survey”, IEEE/CAA Journal of Automatica Sinica, Vol. 10, Issue 9, September 2023.

[7] Mohamed Abdel-Basset, Nour Moustafa, and Hossam Hawash, “Generative Adversarial Networks (GANs)”, Wiley-IEEE Press, 2023.

Copyright

Copyright © 2024 Vaishali Bhavani. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61016

Publish Date : 2024-04-25

ISSN : 2321-9653

Publisher Name : IJRASET
