Ijraset Journal For Research in Applied Science and Engineering Technology

Computer Aided Detection of Cancerous Areas through Steady Images of E.G.D(ESOPHAGOGASTRODUODENOSCOPY)

Authors: Harsh Jakhanwal, Kunal Pathak, Dr. N. K. Gupta, Awanish Kr. Shukla, Sunil Kr. Singh

DOI Link: https://doi.org/10.22214/ijraset.2024.63067

Abstract

Stomach cancer, or gastric cancer, remains a significant global health concern due to its high mortality rate and prevalence. Despite advancements in medical technology and treatment, it continues to rank as the fourth most common cancer worldwide and the second leading cause of cancer-related deaths. However, there has been a decline in both incidence and mortality rates over the past decades, attributed to improved awareness, screening programs, and medical interventions. Early detection is crucial, as stomach cancer often begins with symptomless lesions that can progress over time. Various imaging modalities are utilized for detection, with endoscopy emerging as the preferred method due to its ability to provide high-resolution images and perform tissue sampling for accurate diagnosis. Recognizing the need for enhanced diagnostic support, a computer-aided analysis system has been developed, leveraging algorithms to enhance endoscopic images and detect cancerous areas more effectively. This system, employing MATLAB algorithms, enhances image quality and removes reflected flash spots, facilitating better visibility of lesions. The process involves manual marking of cancerous areas within images, dataset annotation using Roboflow, and training the model in Google Colab for pattern recognition of cancerous lesions. Rigorous testing demonstrates high accuracy in real-time detection, promising significant advancements in early diagnosis and improved patient outcomes. This project represents a noteworthy stride in leveraging technology to combat stomach cancer, offering potential for further enhancements and broader clinical utility in the future.

Introduction

I. INTRODUCTION

Stomach cancer represents a significant global health burden, responsible for a considerable proportion of mortality worldwide. Cancer as a whole accounts for approximately one in eight deaths, ranking as the second leading cause of death in developed nations and the third in developing countries. Stomach cancer most often affects people between the ages of 50 and 70, although it can also arise in younger individuals, with males under 40 exhibiting heightened susceptibility. Despite the strides made in medical science, unravelling the intricate mechanisms governing cancer's onset and progression remains a formidable task.

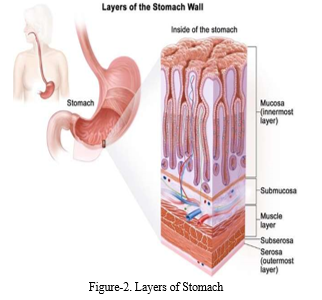

At its core, stomach cancer manifests as the uncontrolled proliferation of cells within the stomach lining, resulting in the formation of abnormal growths known as tumours. This unchecked cellular division disrupts the delicate balance that regulates cell growth and death, leading to the emergence of malignant growths capable of infiltrating nearby tissues and metastasizing to distant organs. The complexity of stomach cancer extends beyond local proliferation to its propensity for metastasis, disseminating tumours throughout the body via the bloodstream or lymphatic system. The disease progresses through several distinct stages, each signifying critical points in its advancement and prognosis. Stage 0, also known as carcinoma in situ, denotes the presence of abnormal cells confined to the innermost layer of the stomach lining. Stage I signifies localized cancer, where tumours are confined to the stomach wall without penetration into deeper tissues.

In Stage II, cancer cells penetrate deeper layers of the stomach wall, indicating a more advanced disease state. Stage III marks the spread of cancer to nearby lymph nodes, signifying an increased risk of metastasis and a more challenging treatment outlook. Finally, Stage IV denotes metastasis to distant organs, representing an advanced and often incurable stage of the disease [14].

Despite the daunting challenges posed by stomach cancer, advancements in medical interventions offer hope for improved outcomes. While the overall survival rate for gastric cancer remains relatively low, reaching as low as 10% for Stage IV cases, there have been notable improvements in survival rates in recent years. Some cases have achieved a 5-year survival rate as high as 94.75%, highlighting the progress made in treatment modalities such as surgery, chemotherapy, radiation therapy, and targeted therapies. However, the key to further improving outcomes lies in early detection and intervention, as early-stage diagnosis significantly increases the likelihood of successful treatment and long-term survival. Therefore, continued efforts in research, screening, and access to quality healthcare are essential in effectively addressing the global burden of stomach cancer and reducing its devastating impact on individuals and communities worldwide [13].

In the preprocessing phase, meticulous attention is given to enhancing the quality of medical images. Various techniques are applied to remove artifacts such as specular reflections, reduce noise, and standardize image contrast, ensuring optimal clarity for subsequent analysis. These steps are vital for refining the images and providing clean, standardized input data for the subsequent stages of the project [5].

Moving forward, detailed annotations are performed on the medical images to identify regions of interest, particularly those indicative of cancerous growths. This annotation process serves as the foundation for training the deep learning model, providing essential ground truth data for accurate identification and localization of cancerous regions in new images. Leveraging these annotated datasets, the deep learning algorithm learns to recognize patterns and features associated with cancerous lesions, facilitating accurate detection and diagnosis. This comprehensive approach aims to significantly improve cancer detection and ultimately enhance patient outcomes through timely intervention and treatment.

II. METHODOLOGY

The method proposed in this paper addresses the challenge of detecting stomach cancer lesions, which can arise in various parts of the stomach. Our project aims to develop a Computer-Aided Diagnosis (CAD) system that uses advanced image processing and machine learning algorithms to analyse endoscopic images and differentiate between cancerous and non-cancerous areas. By enhancing image quality, manually marking cancerous regions, and creating a robust detection algorithm, the CAD system will provide supplementary insights to healthcare professionals, improving diagnostic accuracy and efficiency.

Despite the system's capabilities, final diagnoses and treatment decisions will be made by doctors based on comprehensive assessments, including biopsy results, ensuring the highest standard of patient care.

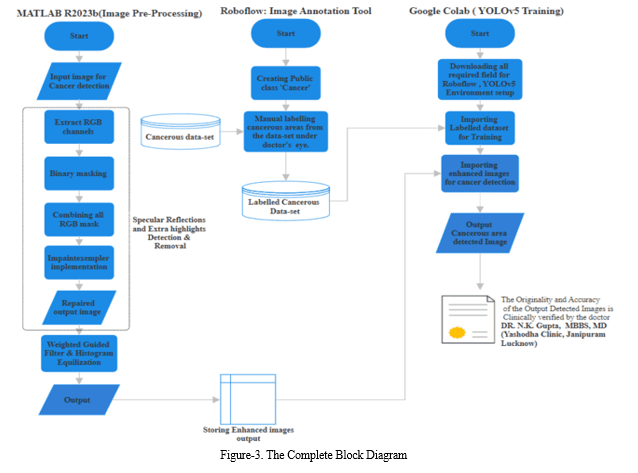

Figure 3 illustrates the comprehensive block diagram of our project, offering a detailed overview of the three primary methodologies employed: preprocessing, annotation, and dataset training. The preprocessing stage serves as the initial phase, where raw data undergoes various transformations to ensure compatibility and quality for subsequent analysis. This phase involves tasks such as data cleaning, normalization, and feature extraction, aimed at refining the dataset for optimal performance. Following preprocessing, the annotation stage involves the meticulous labelling of the pre-processed data, assigning relevant tags or categories to facilitate supervised learning. Annotation is crucial for training machine learning models as it provides the ground truth for the algorithm to learn from. Finally, the dataset undergoes training using a suitable machine learning or deep learning algorithm, leveraging the annotated data to develop predictive models or extract meaningful insights. This training phase involves iterative processes of model optimization, validation, and fine-tuning to enhance performance and accuracy. Overall, this block diagram encapsulates the systematic workflow of our project, from data preprocessing to model training, emphasizing the importance of each stage in achieving successful outcomes.

A. Database Acquisition

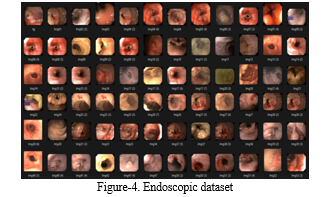

The cornerstone of our project lies in the comprehensive endoscopic dataset we've curated.

This dataset serves as the foundation upon which all our endeavours are built. In our pursuit of real-time images, we obtained invaluable assistance from Yashoda Hospital, located in Jankipuram, Lucknow, particularly from Dr. N.K. Gupta, MBBS, MD, and the hospital's esteemed radiologist, Mr. Anuj Virat. Additionally, we drew upon two additional sources: the Atlas of Gastric Cancer and the generous contribution of Mr. Xuan Ky, who graciously allowed us access to a portion of his dataset. However, our primary dataset originates from the Kvasir dataset, which holds paramount importance in our collection efforts. The significance of this dataset is underscored by its role in our primary objective: identifying images indicative of Gastric Carcinoma [4].

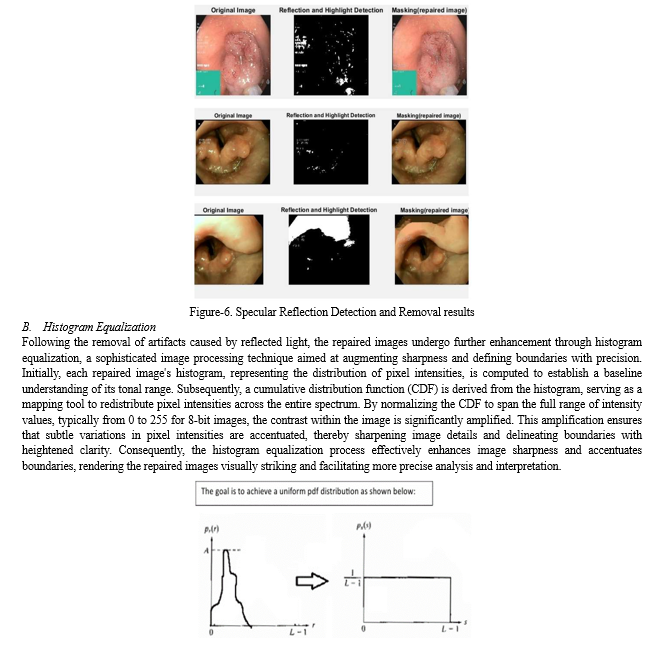

B. Specular Reflection Detection and Removal

In our project, addressing specular reflections and highlights in endoscopic images is crucial for improving visualization clarity, enhancing diagnostic accuracy, facilitating image analysis and processing, and ensuring patient safety and comfort during medical procedures. The process involves several key steps, as outlined in the flowchart provided. Initially, RGB channels are extracted from the images, separating the red, green, and blue colour components. Subsequently, binary masks are created for each RGB channel based on specific threshold values. These masks are then combined, and the 'inpaintExemplar' function is applied to remove specular reflections and highlights by filling in affected areas with information from surrounding regions. This comprehensive approach aims to effectively detect and remove artifacts, ultimately enhancing the utility and reliability of endoscopic imaging for medical diagnosis and treatment planning [1].

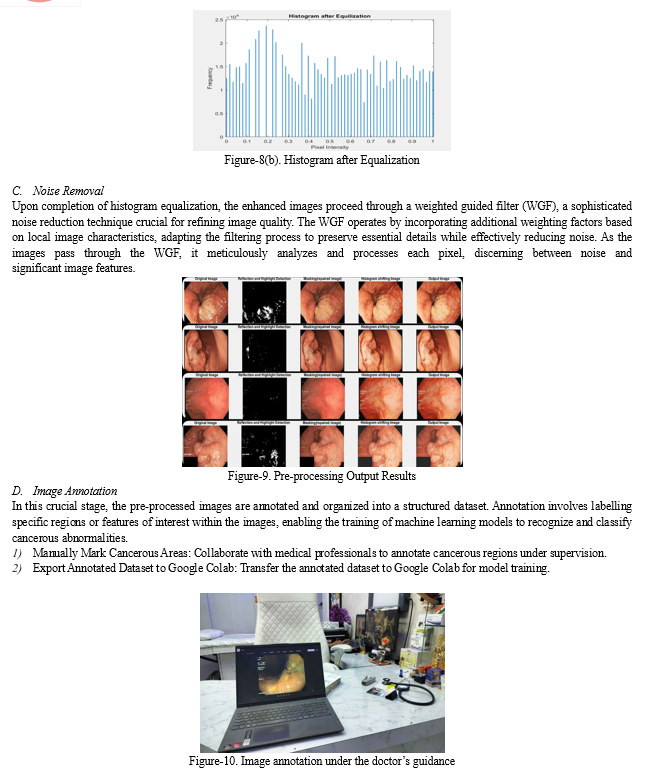
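The mask-building part of this procedure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the threshold values, the logical AND used to combine the three channel masks, and the name `specular_mask` are all hypothetical, since the paper does not give them. The inpainting step itself relies on MATLAB's `inpaintExemplar` and is not reproduced here.

```python
def specular_mask(image, thresholds=(230, 230, 230)):
    """Return a binary mask (1 = suspected specular pixel) for an RGB image.

    `image` is a list of rows, each row a list of (r, g, b) tuples in 0..255.
    The threshold values and the AND combination are assumptions made for
    this sketch; they are not taken from the paper.
    """
    t_r, t_g, t_b = thresholds
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            # One binary test per colour channel.
            bright_r = r >= t_r
            bright_g = g >= t_g
            bright_b = b >= t_b
            # Combine the three channel masks into a single mask value.
            mask_row.append(1 if (bright_r and bright_g and bright_b) else 0)
        mask.append(mask_row)
    return mask


# Tiny 2x3 example: only the near-white pixel is flagged.
image = [
    [(120, 40, 35), (250, 245, 240), (200, 90, 80)],
    [(255, 100, 90), (130, 60, 50), (110, 45, 40)],
]
mask = specular_mask(image)
print(mask)
```

Requiring all three channels to be bright keeps strongly red tissue (high R, low G and B) out of the mask; a logical OR would be more aggressive and may suit other lighting conditions.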

C. Histogram Equalization

Histogram equalization is a technique utilized to enhance image contrast and brightness by redistributing pixel intensity values. Initially, the histogram of the input image is computed, representing the distribution of pixel intensities. Subsequently, the cumulative distribution function (CDF) of the histogram is calculated, accumulating histogram values and providing a mapping of original intensity values to new ones. Following this, the CDF is normalized to map it to the full range of intensity values, typically ranging from 0 to 255 for 8-bit images. Using the normalized CDF, the pixel intensities of the input image are transformed, replacing each pixel's intensity value with its corresponding value in the normalized CDF.
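The four steps described above can be sketched as follows for a small grayscale image. The function name `equalize_histogram` and the sample values are illustrative assumptions, and the sketch uses the simple mapping described in the text (floor of the normalized CDF) rather than any particular toolbox implementation.

```python
def equalize_histogram(gray, levels=256):
    """Equalize a grayscale image given as rows of ints in 0..levels-1."""
    pixels = [p for row in gray for p in row]
    total = len(pixels)

    # Step 1: histogram of pixel intensities.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Step 2: cumulative distribution function (running sum of the histogram).
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    # Step 3: normalize the CDF to the full range 0..levels-1
    # (integer floor division avoids floating-point rounding surprises).
    lookup = [c * (levels - 1) // total for c in cdf]

    # Step 4: replace each pixel with its value in the normalized CDF.
    return [[lookup[p] for p in row] for row in gray]


# Low-contrast sample: intensities are bunched between 50 and 80.
gray = [
    [50, 50, 60],
    [60, 70, 80],
]
equalized = equalize_histogram(gray)
print(equalized)  # [[85, 85, 170], [170, 212, 255]]
```

In the sample, intensities that originally spanned only 50 to 80 are spread across 85 to 255, which is the contrast stretch the technique is meant to deliver.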

D. Noise Removal

In our project, we utilize Weighted Guided Filter (WGF) to effectively remove noise from endoscopic images, enhancing their quality for subsequent analysis. The guided filter, known for edge-preserving smoothing, is adept at reducing noise while retaining crucial image details like edges and textures. With the weighted guided filter, we extend this capability by incorporating additional weighting factors based on local image characteristics, such as salient features derived from a Saliency Weightmap generated in MATLAB [2].

These weighting factors enable adaptive adjustment of the filtering process, ensuring better preservation of edges and textures amidst noise. In the context of endoscopic imaging, where maintaining fine details is essential for accurate diagnosis, the weighted guided filter proves invaluable in enhancing image quality by selectively smoothing noisy areas while preserving critical features. The resulting enhanced images are stored in an external folder for subsequent use in cancerous detection algorithms.
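To make the idea concrete, the following is a simplified Python sketch of a self-guided filter in which a per-pixel weight map scales the regularization term. It is not the authors' MATLAB implementation: the way the weights enter the formula (dividing `eps`), the parameter values, and the names `box_mean` and `weighted_guided_filter` are assumptions chosen for illustration, and the weight map here merely stands in for the saliency weight map mentioned above.

```python
def box_mean(img, radius):
    """Mean of each pixel's (2*radius+1)^2 neighbourhood, clipped at borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            y0, y1 = max(0, y - radius), min(h, y + radius + 1)
            x0, x1 = max(0, x - radius), min(w, x + radius + 1)
            window = [img[j][i] for j in range(y0, y1) for i in range(x0, x1)]
            out[y][x] = sum(window) / len(window)
    return out


def weighted_guided_filter(img, radius=1, eps=0.01, weights=None):
    """Self-guided filter on a grayscale image with values in 0..1.

    `weights` is a hypothetical per-pixel map (> 0); larger values mean less
    smoothing there. With weights=None this is the plain guided filter.
    """
    h, w = len(img), len(img[0])
    if weights is None:
        weights = [[1.0] * w for _ in range(h)]

    mean_i = box_mean(img, radius)
    mean_ii = box_mean([[v * v for v in row] for row in img], radius)

    a = [[0.0] * w for _ in range(h)]
    b = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            var = max(mean_ii[y][x] - mean_i[y][x] ** 2, 0.0)
            # High local variance (an edge) or a high weight pushes `a`
            # toward 1, preserving the pixel; flat regions are smoothed.
            a[y][x] = var / (var + eps / weights[y][x])
            b[y][x] = (1.0 - a[y][x]) * mean_i[y][x]

    mean_a = box_mean(a, radius)
    mean_b = box_mean(b, radius)
    return [[mean_a[y][x] * img[y][x] + mean_b[y][x] for x in range(w)]
            for y in range(h)]


# Flat 5x5 patch with one noisy pixel in the centre.
noisy = [[0.5] * 5 for _ in range(5)]
noisy[2][2] = 0.6
smoothed = weighted_guided_filter(noisy)

# Same input, but a uniformly high weight map asks the filter to keep detail.
high = [[100.0] * 5 for _ in range(5)]
preserved = weighted_guided_filter(noisy, weights=high)
```

With the default weights the isolated spike is pulled toward its neighbours, while the high-weight run leaves it nearly untouched, which mirrors the selective smoothing described in the text.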

E. Image Annotation

In our project, the image annotation process is streamlined through the widely used tool 'Roboflow'. After signing in or creating an account, we create a public project named 'Cancer' on the platform and import the dataset for annotation. These images, acquired during live endoscopy sessions conducted under the guidance of Dr. N.K. Gupta, offer real-time insights into cancerous regions within the gastrointestinal tract. Cancerous areas are marked manually under close supervision from medical professionals, using a dedicated class named 'Cancerous'.

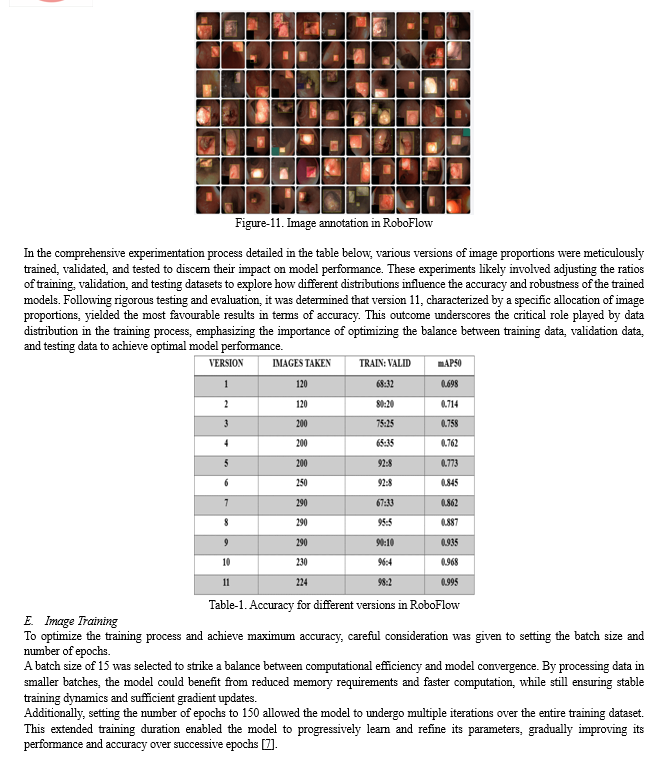
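For readers unfamiliar with how such annotations reach a YOLO-style detector, the sketch below converts a pixel bounding box into the normalized text label format that YOLOv5 reads (class index, then box centre and size as fractions of the image dimensions). It assumes the cancerous regions are marked as rectangular boxes and that the single 'Cancerous' class is exported with index 0; the helper name `to_yolo_label` and the sample coordinates are hypothetical.

```python
def to_yolo_label(class_id, box, image_width, image_height):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    # YOLO labels store the box centre and size, normalized to 0..1.
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical annotation: a 320x240 box centred in a 640x480 frame,
# class 0 standing for 'Cancerous'.
label = to_yolo_label(0, (160, 120, 480, 360), 640, 480)
print(label)  # 0 0.500000 0.500000 0.500000 0.500000
```

Because the coordinates are normalized, the same label remains valid if the image is resized before training.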

F. Image Training

Because our project focuses on the detection of cancerous regions, dataset training is a pivotal step, and it employs the YOLOv5 deep-learning algorithm. This process uses a carefully pre-processed dataset, which serves as the basis for training the model to discern intricate patterns indicative of cancer in endoscopic images. First, within the Google Colab environment, we install all dependencies required for training so that subsequent steps run without interruption. We then import the labelled dataset from Roboflow, which contains annotations precisely marking cancerous regions. These annotations, created under the guidance of medical professionals, provide the ground truth data that enables the model to learn and generalize patterns associated with cancerous lesions effectively [3].

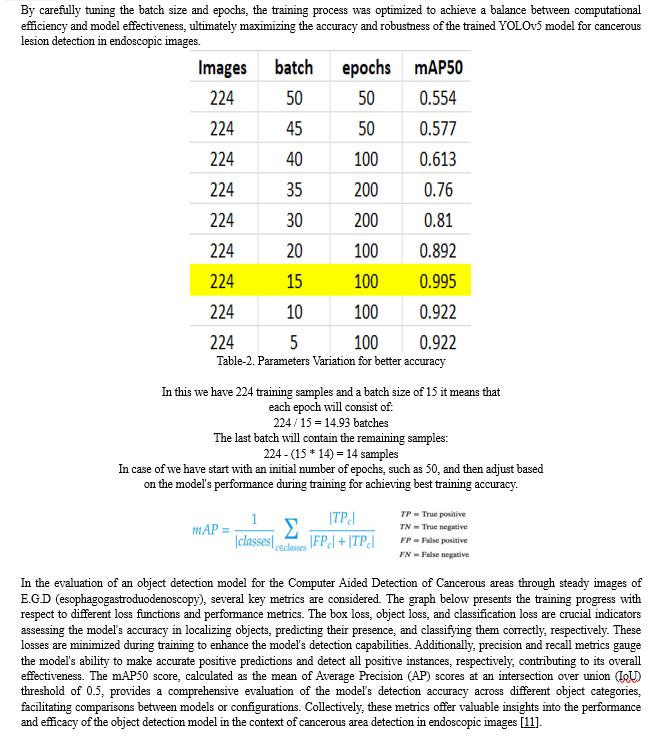

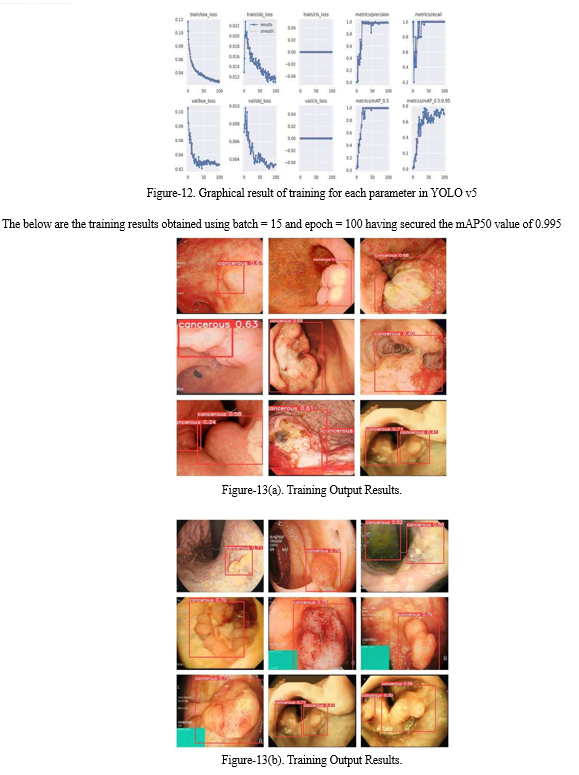

During the training phase, the model undergoes iterative adjustments to its parameters, guided by the examples within the dataset. This iterative process facilitates the gradual refinement of the model's predictive capabilities, enhancing its proficiency in accurately identifying and delineating cancerous regions within the endoscopic images. Parameters such as the number of images, epochs, and batches are meticulously configured to optimize the training process, ensuring maximal efficiency and effectiveness. Moreover, specialized techniques such as data augmentation may be employed to enrich the dataset further, augmenting the model's robustness and generalization capabilities.

Once the model is sufficiently trained, it is primed to analyze new images for cancerous regions. Enhanced images, containing annotations denoting areas suspected of harboring cancerous lesions, are imported into the model, specifying the designated path for detection. Leveraging its learned knowledge and fine-tuned parameters, the model diligently scrutinizes these images, identifying and flagging regions exhibiting characteristics indicative of cancer. The results of this analysis are then stored in the defined path, facilitating further evaluation and interpretation by medical professionals.

In essence, the dataset training process represents a critical milestone in our project, embodying the fusion of cutting-edge machine learning techniques with domain expertise in medical imaging. By harnessing the power of the YOLOv5 Deep-Learning algorithm and a meticulously curated dataset, we endeavor to develop a robust computer-aided detection system capable of augmenting diagnostic capabilities and revolutionizing patient care in the realm of gastrointestinal cancer detection.

III. RESULTS AND DISCUSSIONS

Through the implementation of the methodologies outlined in this study, significant advancements are achieved in the processing and enhancement of endoscopic images. By meticulously following the prescribed steps, we observe notable outcomes:

A. Reflected Flash Spot Detection and Subtraction

In the process of reflected flashlight detection and subtraction, a multi-step procedure is employed to effectively identify and eliminate the undesired artifacts caused by reflected light in images sourced from a database.

Initially, when an image is input into the system, it undergoes an initial analysis to detect areas affected by reflected flashlight. Through a series of algorithms and image processing techniques, these areas are delineated and highlighted through the creation of a mask [9]. This mask effectively isolates the regions impacted by reflected light, providing a clear visual representation of the areas requiring correction. Subsequently, the identified mask is utilized to subtract the corresponding pixels from the original image, effectively eliminating the undesired effects of reflected light. The resulting output showcases the image with the mask removed, revealing a corrected version devoid of the artifacts caused by reflected flashlight [6]. This meticulous process ensures the preservation of image quality and enhances the overall visual clarity, facilitating more accurate analysis and interpretation of the images within the database.

IV. ACKNOWLEDGMENT

I extend my deepest gratitude to Dr.(Prof.) Induprabha Singh, the Head of Department at Shri Ramswaroop Memorial College of Engineering and Management, for her invaluable support and guidance throughout this project. Her leadership and mentorship have been instrumental in shaping our work and providing us with the opportunity to contribute to innovation in the field. I would also like to express my sincere appreciation to all the faculty members of our department for their unwavering support and encouragement. Their expertise and guidance have been invaluable in navigating the challenges of this project and achieving our goals.

Furthermore, I am profoundly grateful to Dr. N.K. Gupta, M.D. (Internal Medicine), for his invaluable guidance, support, and encouragement throughout the duration of this project. His expertise, timely suggestions, and unwavering enthusiasm have been pivotal in driving our progress and ensuring the successful completion of this endeavour. Dr. Gupta's profound insights, kindness, and dynamic encouragement have played a significant role in our project's accomplishments. I am truly indebted for his mentorship and support, which have been indispensable in our journey towards innovation and excellence in the field.

Our deepest gratitude goes to our project guides, Prof. Awanish Kr Shukla and Prof. Sunil Kumar Singh (Asst. Professor, ECE Dept.). We have been fortunate to have advisors who gave us the freedom to explore and, at the same time, the guidance to recover when our steps faltered. They taught us the art of questioning and expressing our ideas more precisely and convincingly.

The success of any project depends on the collective efforts of the numerous hands that have rendered their support in several ways. We hereby appreciate and extend our vote of thanks to the individuals who provided us with their support, creative ideas and valuable guidance in making this work a success. A project is more than a task; it is a journey that involves the continuous efforts of not just the one who develops it but also every person who was involved in it, even once.

Conclusion

In conclusion, the project represents a significant advancement in medical imaging, providing a comprehensive tool for enhancing endoscopic images and detecting stomach cancer regions, with the potential to improve patient outcomes and save lives.

1) The project aims to enhance endoscopic images to aid in the detection of stomach cancer regions, with the objective of supporting medical practitioners in diagnosing and treating the disease effectively.

2) Initial efforts focused on pre-processing the images to optimize their quality before inputting them into the detection model. This involved removing unwanted artifacts like highlights and specular reflections through techniques such as morphological operations and filtering.

3) Noise reduction was a critical step achieved through the use of Weighted Guided Filter (WGF), effectively reducing noise while preserving important image details.

4) Following pre-processing, the project proceeded to data annotation and model training, where endoscopic images were meticulously annotated to identify cancerous regions. This annotated data was then used to train the YOLOv5 model for accurate detection.

5) The trained model demonstrated impressive efficacy in detecting stomach cancer regions within endoscopic images, offering valuable support to medical professionals for diagnosis and treatment.

6) Endorsement by Dr. N.K. Gupta, a distinguished gastroenterologist, bolstered the project's credibility, affirming its potential for real-world application in clinical settings.

References

[1] Chao Nie, Chao Xu, Zhengping Li, Lingling Chu and Yunxue Hu, “Specular Reflections Detection and Removal for Endoscopic Images Based on Brightness Classification”, January (2023).

[2] Wang, X.; Li, P.; Lv, Y.; Xue, H.; Xu, T.; Du, Y.; Liu, P. Integration of Global and Local Features for Specular Reflection Inpainting in Colposcopic Images. J. Healthc. Eng. 2021, 5401308. https://doi.org/10.1155/2021/5401308

[3] Tomoyuki Shibata, Atsushi Teramoto, Hyuga Yamada, Naoki Ohmiya, Kuniaki Saito and Hiroshi Fujita, “Automated Detection and Segmentation of Early Gastric Cancer from Endoscopic Images Using Mask R-CNN”, 2021.

[4] HyperKvasir Dataset (2020). https://doi.org/10.1038/s41597-020-00622-y

[5] Ali Yasar, Ismail Saritas, Huseyin Korkmaz, “Computer-Aided Diagnosis System for Detection of Stomach Cancer with Image Processing Techniques”, Journal of Medical Systems (2019).

[6] Wang, X.X.Li, P.Du, Y.Z.Lv, Y.C.Chen, “Detection and Inpainting of Specular Reflection in Colposcopic Images with Exemplar-based Method.” IEEE 13th International Conference on Anti-counterfeiting, Security, and Identification (ASID), (2019).

[7] L. Wu, W. Zhou, X. Wan, et al., “A deep neural network improves endoscopic detection of early gastric cancer without blind spots,” Endoscopy, vol. 51, pp. 522-531, 2019.

[8] Toshiaki Hirasawa, Kazuharu Aoyama, Tetsuya Tanimoto, Soichiro Ishihara, Satoki Shichijo, Tsuyoshi Ozawa, Tatsuya Ohnishi, Mitsuhiro Fujishiro, Keigo Matsuo, Junko Fujisaki, Tomohiro Tada, “Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images”, Journal published on Springer, Gastric Cancer (2018) 21:653–660.

[9] Akbari, M.Mohrekesh, M.Najarian, K.Karimi, N.Samavi, S.Soroushmehr, “Adaptive specular reflection detection and inpainting in colonoscopy video frames.” 25th IEEE International Conference on Image Processing (2018).

[10] Sakai, Y.; Takemoto, S.; Hori, K.; Nishimura, M.; Ikematsu, H.; Yano, T.; Yokota, H. Automatic detection of early gastric cancer in endoscopic images using a transferring convolutional neural network. In Proceedings of the 40th International Conference of the IEEE Engineering in Medicine and Biology Society, Honolulu, HI, USA, 17–21 July 2018; pp. 4138–4141.

[11] Hirasawa, T.; Aoyama, K.; Tanimoto, T.; Ishihara, S.; Shichijo, S.; Ozawa, T.; Ohnishi, T.; Fujishiro, M.; Matsuo, K.; Fujisaki, J.; et al. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer 2018, 21, 653–660.

[12] Mingjun Song, Tiing Leong Ang, “Early detection of early gastric cancer using image-enhanced endoscopy”, Journal published on ELSEVIER, Vol 3, pg. 1-2, 2014.

[13] Bhagyashri G. Patil, Prof. Sanjeev N. Jain, “Cancer Cells Detection Using Digital Image Processing Methods”, International Journal of Latest Trends in Engineering and Technology (IJLTET), 2014.

[14] Artusi, A.Banterle, F.Chetverikov, “Survey of Specularity Removal Methods.” (2011).

[15] Arnold, M. Ghosh, A. Ameling, S. Lacey, “Automatic Segmentation and Inpainting of Specular Highlights for Endoscopic Imaging.” (2010).

[16] El Meslouhi, O. Kardouchi, M. Allali, H. Gadi, T. Benkaddour, Y.A. “Automatic detection and inpainting of specular reflections for colposcopic images.” (2011).

Copyright

Copyright © 2024 Harsh Jakhanwal, Kunal Pathak, Dr. N. K. Gupta, Awanish Kr. Shukla, Sunil Kr. Singh. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET63067

Publish Date : 2024-06-02

ISSN : 2321-9653

Publisher Name : IJRASET
