Ijraset Journal For Research in Applied Science and Engineering Technology


Controlling 3D Models in VR using Hand Gestures

Authors: Dr. S M Joshi, Suraj Hiremath, Samarth Mutalik, Prajwal Budnur, Sushrut K N

DOI Link: https://doi.org/10.22214/ijraset.2024.60166


Abstract

The fusion of hand gesture detection technology with virtual reality (VR) environments enables users to interact naturally with three-dimensional models. Using VR headsets equipped with advanced hand tracking algorithms, users can engage with virtual objects through intuitive gestures, enriching their immersive experience. Software development involves implementing sophisticated gesture recognition algorithms and assigning specific actions to these gestures, such as grasping, rotating, and resizing 3D models. These interactions mirror real-world manipulations, allowing users to explore and modify digital content seamlessly. Furthermore, gestural menu systems streamline user interactions by offering convenient access to additional features. Rigorous testing and optimization ensure precision, responsiveness, and good performance, enhancing overall user satisfaction.


I. INTRODUCTION

Virtual Reality (VR) has transformed how we interact with digital content. However, traditional VR controllers, while effective, can feel cumbersome and limit the sense of natural movement and interaction within the virtual world. Hand gesture recognition offers an exciting alternative, allowing users to control 3D models with intuitive hand movements. This approach not only feels more natural but also has the potential to improve usability and accessibility in VR. Imagine shaping a virtual clay object in your hands or effortlessly rotating a 3D model with a simple hand motion.

Hand gesture recognition unlocks a new dimension of VR interaction, promising a more fluid and intuitive experience. This paper explores the technology behind hand tracking and gesture recognition, how recognized gestures are translated into actions within VR, and the potential applications of this approach for manipulating 3D models in VR environments.

II. SYSTEM ARCHITECTURE

A. Data Acquisition

- Hand Tracking Sensor: The hand tracking sensor is a device or system that detects and interprets the movements and positions of a user's hands in real time. It typically employs cameras or other sensors to track hand position, orientation, and gestures without the need for handheld controllers. This technology enables users to interact with digital content and virtual environments using natural hand movements, enhancing immersion and usability in virtual reality (VR) experiences. Hand tracking systems are either integrated into VR headsets or used as standalone accessories, providing an intuitive way for users to manipulate virtual objects, navigate interfaces, and engage with immersive content.
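To make the acquisition stage concrete, the sketch below shows one possible way to represent a single tracked-hand sample once it leaves the sensor. It is illustrative only: it assumes a 21-landmark hand model with image-normalized x and y in [0, 1] (the convention used by trackers such as MediaPipe Hands), and the `HandFrame` type and `to_pixels` method are hypothetical names rather than part of the authors' system.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumption: 21 landmarks per hand, following the MediaPipe Hands layout.
NUM_LANDMARKS = 21

Point3 = Tuple[float, float, float]


@dataclass
class HandFrame:
    """One tracked-hand sample: a capture timestamp plus 21 landmark positions."""

    timestamp: float          # seconds since capture started
    landmarks: List[Point3]   # (x, y, z); x and y normalized to [0, 1] of the image

    def __post_init__(self) -> None:
        # Reject malformed samples early so later stages can index landmarks safely.
        if len(self.landmarks) != NUM_LANDMARKS:
            raise ValueError(
                f"expected {NUM_LANDMARKS} landmarks, got {len(self.landmarks)}"
            )

    def to_pixels(self, width: int, height: int) -> List[Tuple[int, int]]:
        """Convert normalized (x, y) to integer pixel coordinates in the camera image."""
        return [(round(x * width), round(y * height)) for x, y, _ in self.landmarks]


# Usage: a synthetic frame with every landmark at the image centre.
frame = HandFrame(timestamp=0.0, landmarks=[(0.5, 0.5, 0.0)] * NUM_LANDMARKS)
print(frame.to_pixels(640, 480)[0])  # (320, 240)
```

Validating the landmark count at construction time keeps the later filtering and feature stages free of defensive checks.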

B. Pre-processing

- Data Filtering and Smoothing: Raw sensor data may contain noise or inconsistencies, so this stage refines the data before it reaches the gesture detection algorithms, improving both accuracy and responsiveness. Noise is inherent in hand tracking systems and can lead to jittery or inaccurate gesture recognition, degrading the user experience. Filtering techniques such as low-pass filtering attenuate high-frequency noise so that only meaningful hand movements are passed on for analysis. This suppresses sudden spikes or fluctuations in the data, making gesture recognition more robust and reliable.
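The low-pass filtering described above can be sketched as a first-order exponential moving average applied to every landmark coordinate. This is a minimal example under stated assumptions, not the authors' filter: the `LandmarkSmoother` name and the default smoothing factor `alpha = 0.5` are illustrative, and a smaller `alpha` removes more jitter at the cost of more lag.

```python
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]


class LandmarkSmoother:
    """First-order low-pass (exponential moving average) filter for hand landmarks.

    For each coordinate: y[n] = alpha * x[n] + (1 - alpha) * y[n - 1].
    """

    def __init__(self, alpha: float = 0.5) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._state: Optional[List[Point3]] = None

    def update(self, landmarks: Sequence[Point3]) -> List[Point3]:
        """Feed one frame of landmarks and return the smoothed landmarks."""
        if self._state is None:
            # First frame: there is no history yet, so pass it through unchanged.
            self._state = [tuple(p) for p in landmarks]
        else:
            a = self.alpha
            self._state = [
                tuple(a * new + (1.0 - a) * old for new, old in zip(p, q))
                for p, q in zip(landmarks, self._state)
            ]
        return list(self._state)


# Usage: a sudden jump is only partially passed through on the first frame.
smoother = LandmarkSmoother(alpha=0.5)
smoother.update([(0.0, 0.0, 0.0)])
print(smoother.update([(1.0, 0.0, 0.0)]))  # [(0.5, 0.0, 0.0)]
```

Exponential smoothing is used here only because it is the simplest low-pass filter; adaptive filters such as the One Euro filter balance jitter against lag more gracefully for fast hand motion.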

C. Feature Extraction

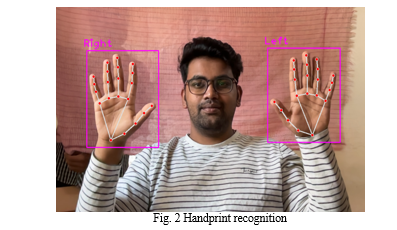

- Key Point Detection: Identifies specific points of interest on the hand, such as fingertips, knuckles, or the palm centre. These key points serve as the basis for representing hand gestures: by locating them, the system can accurately capture the hand's position, orientation, and gestures.

- Hand Posture Analysis: Determines the hand shape (open palm, pinch, etc.) from the extracted key points.
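Key point detection and posture analysis can be illustrated with a small heuristic sketch. It assumes the MediaPipe Hands landmark indexing (0 = wrist, 4 = thumb tip, 8 = index fingertip, 5/9/13/17 = finger base knuckles); the helper names and the pinch threshold of 0.25 palm lengths are hypothetical choices, not values reported in the paper. Distances are divided by the palm length so that the same threshold applies whether the hand is near to or far from the camera.

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]

# Assumed MediaPipe Hands landmark indices.
WRIST, THUMB_TIP, INDEX_TIP, MIDDLE_MCP = 0, 4, 8, 9
PALM_POINTS = (0, 5, 9, 13, 17)   # wrist + base knuckle (MCP) of each finger
FINGER_TIPS = (8, 12, 16, 20)     # index, middle, ring, little fingertips
FINGER_PIPS = (6, 10, 14, 18)     # middle joint (PIP) of the same fingers
PINCH_THRESHOLD = 0.25            # hypothetical, measured in palm lengths


def palm_centre(lm: Sequence[Point3]) -> Point3:
    """Centroid of the wrist and the four finger-base knuckles."""
    n = len(PALM_POINTS)
    return tuple(sum(lm[i][k] for i in PALM_POINTS) / n for k in range(3))


def palm_length(lm: Sequence[Point3]) -> float:
    """Wrist-to-middle-knuckle distance, used to make features scale-invariant."""
    return math.dist(lm[WRIST], lm[MIDDLE_MCP])


def classify_posture(lm: Sequence[Point3]) -> str:
    """Heuristic posture label: 'pinch', 'open_palm', 'fist' or 'unknown'."""
    size = palm_length(lm)
    if size == 0.0:
        return "unknown"
    # Pinch: thumb tip and index fingertip nearly touching, relative to hand size.
    if math.dist(lm[THUMB_TIP], lm[INDEX_TIP]) / size < PINCH_THRESHOLD:
        return "pinch"
    # A finger counts as extended if its tip is farther from the wrist than its PIP joint.
    extended = sum(
        math.dist(lm[WRIST], lm[tip]) > math.dist(lm[WRIST], lm[pip])
        for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)
    )
    if extended == len(FINGER_TIPS):
        return "open_palm"
    if extended == 0:
        return "fist"
    return "unknown"
```

A learned classifier could replace `classify_posture`, but distance-based rules like these are easy to debug and often serve as a first baseline.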

D. Gesture Recognition

Key points are used to recognize and interpret the hand gestures performed by the user. Different gestures can be defined by the relative positions or movements of these key points, so the key points serve as input features for machine learning algorithms or heuristic-based methods. By analysing the spatial relationships and temporal patterns of the key points, the system classifies gestures such as grabbing, pinching, rotating, or scaling, which are then translated into corresponding actions on the 3D model. Once a gesture has been recognized, the following steps take place:

- Gesture-to-Action Mapping: The VR application translates the recognized gesture into a specific action for manipulating the 3D model (translation, rotation, scaling).

- Visual Feedback: The VR environment provides visual cues to confirm the user's gesture and the corresponding action on the 3D model.
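The gesture-to-action mapping can be sketched as a function that compares pinch points from two consecutive frames, which is one simple way to use the temporal patterns mentioned above. In this assumed scheme (not the authors' implementation), a one-hand `drag` translates the model by the pinch point's displacement, while a `two_hand` pinch scales the model by the ratio of the current to the previous distance between the hands and rotates it about the vertical axis by the change in heading of the line joining them. `ModelTransform`, the gesture labels, and the rotation sign convention are hypothetical.

```python
import math
from dataclasses import dataclass, replace
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class ModelTransform:
    """Pose of the manipulated 3D model (hypothetical representation)."""

    position: Vec3 = (0.0, 0.0, 0.0)
    yaw_degrees: float = 0.0   # rotation about the vertical (y) axis
    scale: float = 1.0


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def drag(t: ModelTransform, prev: Vec3, curr: Vec3) -> ModelTransform:
    """One-hand pinch: translate the model by the pinch point's frame-to-frame displacement."""
    d = _sub(curr, prev)
    return replace(t, position=tuple(p + dp for p, dp in zip(t.position, d)))


def two_hand(t: ModelTransform, prev: Sequence[Vec3], curr: Sequence[Vec3]) -> ModelTransform:
    """Two-hand pinch: scale by the change in hand separation, rotate by the change in heading."""
    prev_dist = math.dist(prev[0], prev[1])
    if prev_dist == 0.0:
        return t  # hands coincided in the previous frame; skip this update
    scale_factor = math.dist(curr[0], curr[1]) / prev_dist
    # Heading of the line between the hands, measured in the horizontal x-z plane.
    px, _, pz = _sub(prev[1], prev[0])
    cx, _, cz = _sub(curr[1], curr[0])
    d_yaw = math.degrees(math.atan2(cz, cx) - math.atan2(pz, px))
    return replace(t, scale=t.scale * scale_factor,
                   yaw_degrees=(t.yaw_degrees + d_yaw) % 360.0)


def apply_gesture(t: ModelTransform, gesture: str,
                  prev: Sequence[Vec3], curr: Sequence[Vec3]) -> ModelTransform:
    """Map a recognized gesture plus pinch points from two consecutive frames to a model update."""
    if gesture == "drag":
        return drag(t, prev[0], curr[0])
    if gesture == "two_hand":
        return two_hand(t, prev, curr)
    return t  # unmapped gestures leave the model unchanged
```

Because each update uses only the change between frames, the model keeps its pose when the user releases the pinch and re-grabs it later.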

E. Architectural Design

Using hand gestures to control 3D models in VR enhances interaction and visualization in architectural design. It allows intuitive exploration and modification of designs, streamlines the design process, and improves presentation capabilities. Architects and clients can navigate through virtual spaces, make real-time modifications, and engage in collaborative discussions, fostering better understanding and communication of design concepts.

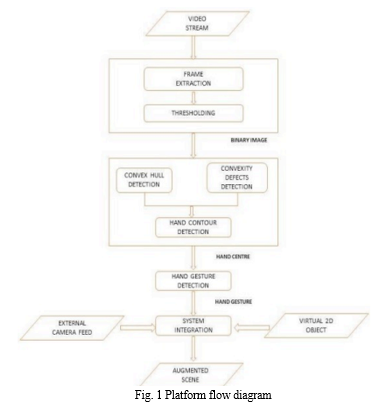

III. METHODOLOGY

The methodology for implementing hand gesture-controlled 3D models follows a structured approach to ensure effective development and a good user experience:

1. Research and Analysis of Requirements: The initial phase involves thorough research into existing hand gesture recognition technologies and user expectations for controlling 3D models.
2. Selection of Hardware and Software: Appropriate hardware, such as VR headsets with hand tracking capabilities, and software tools are chosen based on compatibility and integration requirements.
3. Development of Gesture Recognition Algorithms: Algorithms capable of accurately detecting and interpreting hand movements are developed, potentially leveraging techniques such as key point detection or machine learning.
4. Calibration and Mapping of Hand Models: Hand tracking systems are calibrated to establish mappings between hand movements and the desired actions within the virtual environment.
5. Integration with 3D Modelling Software: The gesture recognition system is integrated with the 3D modelling software to enable smooth communication between the two components.
6. Training and Testing of Gestures: The system is trained to recognize the hand gestures relevant to 3D model control, followed by extensive testing to evaluate accuracy and responsiveness.
7. Design of User Interface: A user-friendly interface is designed, incorporating visual feedback and instructional guidance to enhance usability.
8. Iterative Development and Optimization: The system is continuously refined based on user feedback and testing results to improve performance and user satisfaction.
9. Preparation of Documentation and Training Materials: Comprehensive documentation and training resources are developed to help users interact with the system effectively.
10. Deployment and Evaluation: The hand gesture-controlled 3D modelling system is deployed for real-world use, and user feedback is gathered to evaluate and further refine the system as needed.

This methodology ensures the development of a robust and user-friendly system for controlling 3D models using hand gestures, facilitating intuitive interaction and manipulation within VR environments.
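The "Training and Testing of Gestures" step above can be illustrated with a deliberately simple classifier. The paper does not specify its learning method, so the nearest-centroid approach, the two-element feature vectors, and the function names below are all assumptions: each gesture is represented by the mean of its training vectors, a new sample is assigned to the closest mean, and accuracy on held-out samples measures recognition quality.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Features = Tuple[float, ...]
Sample = Tuple[Features, str]


def train_centroids(samples: Sequence[Sample]) -> Dict[str, Features]:
    """'Training': average the feature vectors belonging to each gesture label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for feats, label in samples:
        acc = sums.setdefault(label, [0.0] * len(feats))
        for i, v in enumerate(feats):
            acc[i] += v
        counts[label] += 1
    return {label: tuple(s / counts[label] for s in acc) for label, acc in sums.items()}


def predict(centroids: Dict[str, Features], feats: Features) -> str:
    """Return the gesture whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], feats))


def accuracy(centroids: Dict[str, Features], test_samples: Sequence[Sample]) -> float:
    """'Testing': fraction of held-out samples whose predicted label is correct."""
    correct = sum(predict(centroids, f) == label for f, label in test_samples)
    return correct / len(test_samples)


# Toy feature vectors (pinch distance in palm lengths, fraction of fingers extended).
# These numbers are made up for illustration and are not measurements from the paper.
train = [((0.10, 1.00), "pinch"), ((0.20, 0.75), "pinch"),
         ((1.50, 1.00), "open_palm"), ((1.40, 1.00), "open_palm"),
         ((1.00, 0.00), "fist"), ((0.90, 0.00), "fist")]
test = [((0.15, 0.90), "pinch"), ((1.45, 1.00), "open_palm"), ((0.95, 0.25), "fist")]

model = train_centroids(train)
print(accuracy(model, test))  # 1.0
```

The toy values reach an accuracy of 1.0 only because the classes are well separated; real hand data would call for more samples, richer features, and a proper train/test split.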

The project uses OpenCV, an open-source computer vision and machine learning software library; Unity 3D, a powerful and widely used cross-platform game development engine known for its versatility and ease of use, which allows developers to create games and interactive 3D experiences for a variety of platforms; and C#, a versatile general-purpose programming language that serves as Unity's primary scripting language.
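One common way to connect a Python/OpenCV hand tracker to a Unity application is to stream landmark coordinates over a local UDP socket, with a C# script in Unity receiving the packets and moving the hand model. The paper does not describe its transport, so the port number, the comma-separated packet format, and the function names below are assumptions. The y coordinate is flipped because image rows grow downward, whereas Unity's y axis points up.

```python
import socket
from typing import List, Sequence, Tuple

# Hypothetical endpoint on which a Unity C# script would listen for packets.
UNITY_ADDR = ("127.0.0.1", 5052)


def encode_landmarks(points: Sequence[Tuple[int, int, float]], image_height: int) -> bytes:
    """Flatten pixel-space (x, y, z) landmarks into b'x,y,z,x,y,z,...'.

    y is flipped (image_height - y) because image rows grow downward,
    whereas Unity's y axis points up.
    """
    values: List[str] = []
    for x, y, z in points:
        values.extend((str(x), str(image_height - y), str(z)))
    return ",".join(values).encode("utf-8")


def decode_landmarks(packet: bytes) -> List[Tuple[float, ...]]:
    """Parse a packet back into (x, y, z) triples (the y flip is not undone)."""
    nums = [float(v) for v in packet.decode("utf-8").split(",")]
    return [tuple(nums[i:i + 3]) for i in range(0, len(nums), 3)]


def send_landmarks(sock: socket.socket, packet: bytes,
                   addr: Tuple[str, int] = UNITY_ADDR) -> None:
    """Send one packet per frame; UDP is fire-and-forget, so lost frames are simply skipped."""
    sock.sendto(packet, addr)


# Usage: two landmarks from a 480-pixel-high camera image.
packet = encode_landmarks([(320, 100, 0.0), (330, 140, -0.02)], image_height=480)
print(packet)  # b'320,380,0.0,330,340,-0.02'
```

In a live loop, one would create `sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` once and call `send_landmarks` for every processed frame; on the Unity side, a C# script could receive the packets with `System.Net.Sockets.UdpClient`. UDP suits this use because a stale frame is worthless once a newer one arrives.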

IV. RESULTS

The evaluation of the hand gesture-controlled 3D model implementation yielded encouraging results, indicating the effectiveness of the methodology and its future prospects. In testing, the system showed good accuracy and responsiveness in recognizing and interpreting various hand gestures for manipulating 3D models in VR environments. Users consistently expressed satisfaction with the interface's ease of use and intuitiveness, highlighting its effectiveness in enabling seamless interaction with virtual objects. The gesture recognition algorithms and optimization techniques further improve overall performance and user experience. These findings underscore the potential of hand gestures as a natural and intuitive way to interact with 3D models, paving the way for applications in architecture, education, and entertainment.

V. DISCUSSION

Implementing hand gestures for 3D model manipulation in virtual reality (VR) introduces a novel method that reshapes user interaction and immersion within VR environments. This approach uses cutting-edge hand tracking technology to let users naturally control and explore digital objects with intuitive hand movements. Unlike traditional input methods such as controllers or keyboards, hand gesture control offers a more immersive and user-friendly way to engage with VR content, catering to users of diverse skill levels.

The primary advantage of hand gesture-controlled VR lies in its ability to heighten immersion and presence within virtual worlds. By empowering users to engage with virtual entities using their hands, it enhances the sense of physicality and agency, resulting in a more authentic and engaging experience. This elevated immersion not only enriches entertainment experiences but also holds promising applications in education, training, and therapy. Furthermore, hand gesture control presents practical benefits over conventional input methods. It eliminates the need for complex controllers or peripherals, simplifying the user interface and reducing the learning curve for newcomers. Additionally, hand gestures serve as a universal form of communication, making them intuitive and accessible to users across different demographics. This inclusivity is particularly advantageous for applications in healthcare and education, where ease of use and accessibility are essential.

| Test ID | Test case description | Step | Test sample | Anticipated outcome | Obtained outcome | Remark |
|---|---|---|---|---|---|---|
| UT001 | Test the capture of the hand's key points. | 1 | Video stream of hand movements | Coordinates of key points are captured. | Coordinates of key points are captured. | Pass |

Table 1. Unit testing for capturing the coordinates of key points.

| Test ID | Test case description | Step | Test sample | Anticipated outcome | Obtained outcome | Remark |
|---|---|---|---|---|---|---|
| IT001 | Test the mapping of hand points to the 3D model in VR. | 1 | Coordinates of the hand's key points | 3D hand model moves according to the gesture performed by the user. | 3D hand model moves according to the gesture performed by the user. | Pass |

Table 2. Integration test for performing gestures.
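Test cases such as IT001, which checks that the 3D hand model follows the user's key points, could also be automated. The sketch below is an assumption and not the authors' test harness: it tests a hypothetical linear calibration, `image_to_world`, that maps image-space key points to positions (in metres) for the 3D hand model, using an illustrative 640x480 image and a 0.3 m working width.

```python
import unittest
from typing import Tuple


def image_to_world(px: float, py: float, width: int, height: int,
                   world_width: float = 0.3) -> Tuple[float, float]:
    """Map a pixel position to metres relative to the image centre.

    Hypothetical linear calibration: the full image width spans `world_width`
    metres, and y is flipped so that moving the hand up gives a positive value.
    """
    metres_per_pixel = world_width / width
    return ((px - width / 2) * metres_per_pixel,
            (height / 2 - py) * metres_per_pixel)


class HandMappingTest(unittest.TestCase):
    """Automated counterpart of a 'hand points move the 3D hand model' check."""

    def test_centre_maps_to_origin(self) -> None:
        self.assertEqual(image_to_world(320, 240, 640, 480), (0.0, 0.0))

    def test_model_follows_hand_movement(self) -> None:
        # Moving the key point 64 px right and 64 px up should move the model
        # right and up by the same distance in metres.
        x0, y0 = image_to_world(320, 240, 640, 480)
        x1, y1 = image_to_world(384, 176, 640, 480)
        self.assertGreater(x1, x0)
        self.assertGreater(y1, y0)
        self.assertAlmostEqual(x1 - x0, y1 - y0)
```

Saved as a module, these tests run with `python -m unittest`, so they can be repeated after every change to the calibration or mapping code.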

Conclusion

Recognizing hand movements to control 3D models in VR holds considerable promise for the future of virtual environments. This technology has the potential to transform VR interaction, making it more intuitive, engaging, and accessible to a wide range of users. Imagine effortlessly shaping a virtual object in your hands or rotating a complex 3D model with a simple wave. Compared with traditional controllers, hand gesture control offers a more natural and immersive way to interact with digital content in VR. This paper explored hand tracking and gesture recognition technology and its potential for manipulating 3D models in VR. As these technologies advance, we can anticipate more refined and nuanced interactions within virtual worlds, including integration with haptic feedback for a truly realistic experience. By embracing hand gesture control, developers can open a new dimension of user experience in VR, enabling more creative, collaborative, and intuitive exploration of virtual environments.

References

[1] Jagdish L. Raheja, Ankit Chaudhary and Kunal Singal, "Tracking of Fingertips and Centres of Palm using KINECT," 2011 Third International Conference on Computational Intelligence, Modelling and Simulation, 2011.

[2] Mohamed Sakkari, Mourad Zaied and Chokri Ben Amar, "Using Hand as Support to Insert Virtual Object in Augmented Reality Applications," Journal of Data Processing, vol. 2, no. 1, March 2012.

Copyright

Copyright © 2024 Dr. S M Joshi, Suraj Hiremath, Samarth Mutalik, Prajwal Budnur, Sushrut K N. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET60166

Publish Date : 2024-04-11

ISSN : 2321-9653

Publisher Name : IJRASET
