Ijraset Journal For Research in Applied Science and Engineering Technology

Imperial Study on Convolutional Layer in CNN for Data Mining

Authors: Kunal P Raghuvanshi, Abhijeet R Kamble, Saurabh B Matre

DOI Link: https://doi.org/10.22214/ijraset.2024.64683

Abstract

Convolutional Neural Networks (CNNs) have become a cornerstone of data mining, particularly for tasks involving large-scale image and pattern recognition. This paper presents an empirical study on the efficacy of convolutional layers within CNN architectures in data mining applications. We analyze the impact of varying convolutional layer configurations, such as kernel size, stride, and depth, on performance metrics including accuracy, computational cost, and feature extraction quality. By conducting a series of experiments across different datasets, we explore how these configurations influence the model's ability to generalize and to detect complex patterns in structured and unstructured data. Our findings indicate that optimizing the convolutional layers significantly improves the efficiency of CNNs for data mining tasks, offering insights into best practices for architectural design in such applications. This study provides guidelines for leveraging CNNs in data mining, advancing automated data analysis and knowledge discovery.

I. INTRODUCTION

This paper presents an empirical study of the convolutional layer in CNNs, focusing on its architecture, functionality, and applications in data mining. We explore the theoretical foundations of convolutional operations, including their mathematical underpinnings and the role of activation functions. Furthermore, we investigate the impact of various hyperparameters, such as filter size and stride, on the performance of CNNs in different data mining scenarios.
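For reference, the operation performed by a single 2D convolutional layer can be written in the standard form below. The notation is ours rather than the paper's and assumes an input $X$ with $C$ channels that has already been zero-padded, $F$ filters $W$ of size $K \times K$, stride $S$, one bias $b_k$ per filter, and an element-wise activation $\sigma$, here taken to be ReLU. As in common deep learning frameworks, the kernel is not flipped, so strictly this is a cross-correlation; output positions $i, j$ are indexed from 0 and $k = 1, \dots, F$.

$$
Y_{i,j,k} = \sigma\!\left( b_k + \sum_{c=1}^{C} \sum_{m=0}^{K-1} \sum_{n=0}^{K-1} W_{m,n,c,k}\, X_{iS+m,\; jS+n,\; c} \right), \qquad \sigma(x) = \max(0, x)
$$

Each filter $k$ produces one output channel, which is why the number of filters sets the depth of the layer's output.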

By examining real-world case studies and empirical results, this study aims to provide a comprehensive understanding of how convolutional layers can be optimized for effective data mining. Our findings are intended to contribute to the existing body of knowledge and to serve as a practical guide for researchers and practitioners seeking to leverage CNNs in their data-driven work. Through this exploration, we aim to identify directions for future advances in CNN architectures and their application to extracting valuable insights from large-scale datasets.

Deep learning algorithms are mainly concerned with Artificial Neural Networks (ANNs). A neural network with multiple layers, also called a Multi-Layer Perceptron (MLP), is capable of approximating sophisticated functions to solve different tasks. However, images commonly contain a great deal of spatial information that is difficult for an MLP to extract and utilize effectively. Convolutional Neural Networks (CNNs) were developed to better harness the spatial information in the input data. Convolutional layers act as filters that produce a strong output when they encounter a particular kind of spatial pattern, such as edges or corners in an image, which gives CNNs a better ability than MLPs to exploit spatial information.
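To make the "filter with a strong response" idea concrete, the following is a minimal plain-Python sketch, not taken from the paper: a single hand-picked vertical-edge kernel (Prewitt-style) is slid over a tiny synthetic image whose left half is dark and right half bright. The function name `conv2d`, the image, and the kernel are illustrative assumptions; in a trained CNN the kernel weights are learned rather than chosen by hand.

```python
def conv2d(image, kernel, stride=1):
    """Valid (no padding) 2D cross-correlation of a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out = []
    for i in range(0, ih - kh + 1, stride):
        row = []
        for j in range(0, iw - kw + 1, stride):
            # Weighted sum of the image patch currently under the kernel
            acc = 0.0
            for m in range(kh):
                for n in range(kw):
                    acc += kernel[m][n] * image[i + m][j + n]
            row.append(acc)
        out.append(row)
    return out

# Hypothetical 6x6 image: dark left half (0), bright right half (1)
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hand-picked vertical-edge kernel; a trained CNN would learn similar weights
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

feature_map = conv2d(image, kernel)
print(feature_map[0])  # [0.0, 3.0, 3.0, 0.0]
```

Every row of the 4x4 feature map is zero over the uniform regions and large only where the 3x3 window straddles the dark-to-bright boundary, which is the edge-selective behaviour described above.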

In recent years, Convolutional Neural Networks (CNNs) have emerged as one of the most effective and widely used deep learning models, especially for data mining, image recognition, and large-scale pattern detection. Originally designed for image classification, CNNs have since found applications in numerous fields, ranging from medical imaging and natural language processing to financial data analysis and time-series forecasting. Their versatility and effectiveness lie in their ability to automatically learn spatial hierarchies of features through multiple layers of convolutional operations. These features are extracted by filters, or kernels, that slide over the input data and capture intricate patterns that would be difficult or impossible to extract using traditional machine learning methods.

The primary focus of this paper is an in-depth analysis of the convolutional layer within CNN architectures and its role in enhancing the performance of data mining tasks. The convolutional layer serves as the backbone of a CNN: it applies filters to the input data and transforms it into high-level feature maps. These transformations allow the network to capture essential patterns, such as edges, textures, or even complex shapes in the case of image processing. Beyond images, CNNs have also been shown to be highly effective in processing sequential data such as time series, audio, and text. These properties make CNNs particularly suitable for the structured and unstructured data challenges prevalent in data mining.
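The same sliding-filter mechanism applies to sequential data. The following minimal sketch (an illustration, not the paper's code) slides a two-tap difference kernel along a hypothetical sensor series containing a single level shift; `conv1d`, `series`, and `diff_kernel` are assumed names chosen for the example.

```python
def conv1d(signal, kernel, stride=1):
    """Valid (no padding) 1D cross-correlation: slide `kernel` along `signal`."""
    k = len(kernel)
    return [sum(kernel[m] * signal[i + m] for m in range(k))
            for i in range(0, len(signal) - k + 1, stride)]

# Hypothetical sensor series with a level shift between index 3 and index 4
series = [1, 1, 1, 1, 5, 5, 5, 5]
# Two-tap difference kernel: responds only where consecutive values change
diff_kernel = [-1, 1]

print(conv1d(series, diff_kernel))            # [0, 0, 0, 4, 0, 0, 0]
print(conv1d(series, diff_kernel, stride=2))  # [0, 0, 0, 0]
```

With stride 1 the filter fires exactly at the shift. With stride 2 the windows start only at even positions, so the change between positions 3 and 4 falls between two windows and is missed, a small illustration of how a larger stride trades output resolution for less computation.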

A convolutional layer is governed by several hyperparameters, such as filter size, stride, padding, and depth. Each of these directly affects the model's ability to extract features, its computational efficiency, and its overall accuracy. For instance, smaller filters tend to capture fine-grained details, while larger filters can detect more global patterns within the data. Stride controls the step size of the filter as it convolves over the input, and therefore the resolution of the output feature maps. Padding adds values (typically zeros) around the border of the input so that border regions are covered and the spatial size of the output can be controlled. Depth, determined by the number of filters in a layer, dictates how many features the model can learn at each stage of the network. Optimizing these hyperparameters is crucial for enhancing the network's performance and ensuring that it generalizes well to unseen data in real-world scenarios.
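The interaction of these hyperparameters follows two standard formulas: along each spatial dimension the output size is floor((W + 2P - K) / S) + 1 for input size W, kernel size K, padding P, and stride S, and a square-kernel 2D layer with C input channels and F filters has F * (K * K * C + 1) trainable parameters including biases. The helpers below are a small sketch of those formulas; the function names are our own. As a sanity check, the first convolutional layer (C1) of LeNet-5 takes a 32x32 grayscale input and applies six 5x5 filters with stride 1 and no padding.

```python
def conv_output_size(n_in, kernel, stride=1, padding=0):
    """Output length along one spatial dimension: floor((n + 2p - k) / s) + 1."""
    return (n_in + 2 * padding - kernel) // stride + 1

def conv_param_count(in_channels, kernel, filters, bias=True):
    """Trainable parameters of a 2D conv layer with square kernels."""
    return filters * (kernel * kernel * in_channels + (1 if bias else 0))

# LeNet-5 C1: 32x32x1 input, six 5x5 filters, stride 1, no padding
print(conv_output_size(32, 5))                       # 28
print(conv_param_count(1, 5, 6))                     # 156

# Padding 1 with a 3x3 kernel preserves the spatial size
print(conv_output_size(32, 3, padding=1))            # 32
# Stride 2 roughly halves the resolution
print(conv_output_size(32, 5, stride=2, padding=2))  # 16
```

Note that the parameter count does not depend on the input's spatial size, only on kernel size, input channels, and depth, which is one reason convolutional layers scale to large inputs better than fully connected ones.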

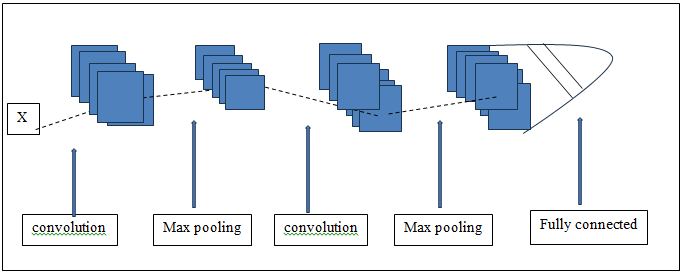

Figure A. LeNet-5 convolutional neural network architecture.

II. LITERATURE REVIEW

The papers summarized below showcase significant advances in applying deep learning techniques, particularly CNNs, across a range of domains.

| Sr. No | Author Name | Conclusion | Source | Year |
|---|---|---|---|---|
| 1 | Luca Comanducci et al. | Developed a deep learning method for sound field synthesis with irregular loudspeaker arrays, improving performance. | Springer [1] | 2024 |
| 2 | Dina Salem and Mohamed Waleed | Studied drowsiness detection models using CNNs, showing potential for various applications. | Springer [2] | 2024 |
| 3 | Clara I. Lopez-Gonzalez et al. | Introduced ELFA-CNNs for better feature explanation in CNNs, improving interpretability. | Elsevier [3] | 2024 |
| 4 | Bilal Hassan Ahmed Khattak et al. | Explored a hybrid CNN-LSTM model for cryptocurrency trend prediction, emphasizing Fibonacci indicators. | Springer [4] | 2024 |
| 5 | Wei Cui and Mingsheng Shang | Proposed KAGN for rumor detection, incorporating external knowledge for improved text representation. | Springer [5] | 2023 |
| 6 | Bairen Chen et al. | Created a GECCN framework for detecting false data injection in power systems, achieving high accuracy. | Springer [6] | 2023 |
| 7 | Yun Ju et al. | Introduced a hybrid CNN-LightGBM model for wind power forecasting, improving accuracy. | IEEE [7] | 2023 |
| 8 | Sui Sheng Chen et al. | Introduced an IGCN-based leak detection algorithm, improving detection rates; future work focuses on autocorrelation. | IEEE [8] | 2023 |
| 9 | Rasha Alshehhi and Claus Gebhardt | Developed a Mask R-CNN for detecting Martian dust storms in satellite imagery. | Springer [9] | 2022 |
| 10 | Mengchen Zhao et al. | Proposed the CNN-MIAD model for anomaly detection in structural health monitoring, improving performance through dataset balancing. | Springer [10] | 2022 |
| 11 | Emily R. Long et al. | Investigated CNNs for corrosion detection, achieving high accuracy; suggests further model exploration. | Springer [11] | 2022 |
| 12 | Bo Wen Zan et al. | Proposed a CNN-based approach for modeling aerodynamic data, reducing training costs. | Springer [12] | 2022 |
| 13 | Mengxi Liu et al. | Proposed MSCANet for cropland change detection, enhancing feature extraction with a CNN-transformer architecture. | IEEE [13] | 2022 |
| 14 | Yekta Said Ca et al. | Utilized CNNs to extract information from historical maps, overcoming annotation challenges. | IEEE [14] | 2021 |
| 15 | Yiping Gong et al. | Developed a context-aware CNN for remote sensing object detection, enhancing feature extraction. | IEEE [15] | 2021 |
| 16 | Hidenobu Takahashi et al. | Created a CNN for classifying seismic events, achieving high accuracy across categories. | Springer [16] | 2021 |
| 17 | Mercedes E. Paoletti et al. | Created a rotationally equivariant CNN for hyperspectral data, showing robustness without data augmentation. | IEEE [17] | 2020 |
| 18 | Feng Hu and Kai Bian | Developed a CNN model for classifying coal and gangue in infrared images, optimizing performance. | IEEE [18] | 2020 |
| 19 | Xiaodong Yin et al. | Proposed a CNN-based digital twin model for UHVDC system loss measurement, achieving high accuracy. | IEEE [19] | 2020 |
| 20 | Jong-Hyouk Lee | Developed a CNN method for web spam detection using website screenshots. | IEEE [20] | 2020 |
| 21 | Pengxu Jiang et al. | Developed the PCRN model for speech emotion recognition, effectively combining different features. | IEEE [21] | 2019 |
| 22 | Xiaole Zhao et al. | Introduced AWCNN for optimizing MR image windowing, improving efficiency. | IEEE [22] | 2019 |
| 23 | Zhongwen Guo | Developed DPCRCN for time series classification; future work on new architectures. | IEEE [23] | 2019 |
| 24 | Yihan Xiao et al. | Proposed a CNN for network intrusion detection, enhancing performance and speed; plans to use GANs for rare attacks. | IEEE [24] | 2019 |

III. PROPOSED WORK

We propose a hybrid architecture that combines the strengths of Long Short-Term Memory (LSTM) networks and CNNs to handle datasets that have both spatial and temporal characteristics. Because it models both aspects, the approach can be applied across diverse data types and mining scenarios, which highlights its versatility in practical applications.

A. Algorithm Architecture

The proposed algorithm comprises three key components:

- Data Pre-processing Module: This module prepares the input data for analysis, ensuring that it is clean, normalized, and formatted appropriately for subsequent processing.

- CNN-based Feature Extraction Module: In this phase, the pre-processed data is fed into the CNN, which focuses on extracting spatial features.

- LSTM-based Temporal Analysis Module: Following spatial feature extraction, the LSTM network analyzes these features to capture temporal dependencies.
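The three modules above can be sketched in plain Python to make the data flow concrete. This is a minimal illustration under several assumptions, not the authors' implementation: min-max scaling stands in for pre-processing; each time step is treated as a 1D "frame" that passes through hand-picked convolution kernels followed by ReLU and global max pooling; and a single LSTM layer with random, untrained weights summarizes the resulting feature sequence. All function names, the toy data, and the weights are hypothetical. A practical system would use a deep learning framework and learn the kernels and LSTM weights jointly.

```python
import math
import random

def preprocess(frames):
    """Pre-processing (assumed): min-max normalise all values to [0, 1]."""
    flat = [v for frame in frames for v in frame]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on constant input
    return [[(v - lo) / span for v in frame] for frame in frames]

def cnn_features(frame, kernels):
    """Spatial features: 1D conv + ReLU + global max pool, one value per kernel."""
    feats = []
    for kernel in kernels:
        k = len(kernel)
        responses = [max(0.0, sum(kernel[m] * frame[i + m] for m in range(k)))
                     for i in range(len(frame) - k + 1)]
        feats.append(max(responses))
    return feats

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_last_hidden(sequence, W, U, b, hidden):
    """Temporal analysis: run one LSTM layer and return the final hidden state."""
    h = [0.0] * hidden
    c = [0.0] * hidden
    for x in sequence:
        # Pre-activations for the four gates, stacked as [input, forget, cell, output]
        z = [sum(W[r][j] * x[j] for j in range(len(x)))
             + sum(U[r][j] * h[j] for j in range(hidden)) + b[r]
             for r in range(4 * hidden)]
        i_g = [sigmoid(v) for v in z[0:hidden]]
        f_g = [sigmoid(v) for v in z[hidden:2 * hidden]]
        g_g = [math.tanh(v) for v in z[2 * hidden:3 * hidden]]
        o_g = [sigmoid(v) for v in z[3 * hidden:]]
        c = [f_g[k] * c[k] + i_g[k] * g_g[k] for k in range(hidden)]
        h = [o_g[k] * math.tanh(c[k]) for k in range(hidden)]
    return h

# Hypothetical input: 5 time steps, each an 8-sample spatial frame
frames = [[t * 0.5 + s for s in range(8)] for t in range(5)]
kernels = [[-1.0, 1.0], [1.0, 1.0, 1.0]]  # hand-picked, not learned
hidden = 3

rng = random.Random(0)  # random weights stand in for trained ones
n_in = len(kernels)
W = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(4 * hidden)]
U = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(4 * hidden)]
b = [0.0] * (4 * hidden)

clean = preprocess(frames)
features = [cnn_features(f, kernels) for f in clean]
summary = lstm_last_hidden(features, W, U, b, hidden)
print(len(features), len(features[0]), len(summary))  # 5 2 3
```

The CNN stage compresses each frame into a short feature vector (one value per kernel), and the LSTM stage consumes those vectors in time order, so spatial and temporal structure are handled by separate, specialised components.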

Conclusion

The study concludes that optimizing convolutional layer parameters, such as kernel size, stride, and depth, is essential for significantly enhancing the performance of Convolutional Neural Networks (CNNs) in data mining tasks. These parameters directly affect the network's ability to capture and extract features from complex datasets, which in turn affects the overall accuracy and efficiency of the model. Specifically, careful selection of kernel size allows the network to recognize patterns at different scales, while appropriate stride settings control how the filter moves across the input, influencing both computation time and feature extraction quality. The depth of a convolutional layer, that is, the number of filters it applies, directly influences the complexity of the learned representations.

These findings offer practical guidelines for designing CNNs tailored to specific tasks and underscore the importance of a well-structured architecture. By fine-tuning these parameters, practitioners can improve not only the accuracy of their models but also their computational efficiency, making CNNs more suitable for real-time or large-scale data mining applications. The study suggests that future research should focus on advanced optimization techniques such as automated hyperparameter tuning, evolutionary algorithms, and neural architecture search (NAS), which could further improve the ability of CNNs to adapt to diverse datasets and tasks and push the boundaries of both performance and scalability. Overall, this research provides a foundation for CNN optimization and encourages the exploration of more sophisticated approaches to further enhance the capabilities of CNNs in data-driven domains.
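Automated hyperparameter tuning can start as simply as an exhaustive grid search. The sketch below is a minimal illustration under stated assumptions, not the method of this study: it enumerates hypothetical candidate kernel sizes, strides, and depths for a single square convolutional layer on a 32x32, 3-channel input, estimates each configuration's cost as multiply-accumulate operations (MACs) using padding of kernel // 2, and keeps only the configurations under a hypothetical compute budget. In a real workflow each surviving configuration would then be trained and scored on validation data; that step is omitted here.

```python
from itertools import product

def conv_output_size(n_in, kernel, stride, padding):
    """Output length along one spatial dimension: floor((n + 2p - k) / s) + 1."""
    return (n_in + 2 * padding - kernel) // stride + 1

def conv_macs(n_in, in_channels, kernel, stride, filters):
    """MACs for one square conv layer, assuming padding of kernel // 2."""
    out = conv_output_size(n_in, kernel, stride, kernel // 2)
    return out * out * filters * kernel * kernel * in_channels

def grid_search(n_in, in_channels, budget,
                kernels=(3, 5, 7), strides=(1, 2), depths=(16, 32, 64)):
    """Enumerate every (kernel, stride, depth) combination; keep those within budget."""
    feasible = []
    for k, s, d in product(kernels, strides, depths):
        macs = conv_macs(n_in, in_channels, k, s, d)
        if macs <= budget:
            feasible.append(((k, s, d), macs))
    # Cheapest configurations first
    return sorted(feasible, key=lambda item: item[1])

results = grid_search(32, 3, budget=1_000_000)
print(results[:3])
# [((3, 2, 16), 110592), ((3, 2, 32), 221184), ((5, 2, 16), 307200)]
```

Even this toy search shows the trade-off discussed above: under a fixed budget, larger kernels and greater depth remain affordable mainly when paired with stride 2, which cuts the output resolution and therefore the cost by roughly a factor of four.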

References

[1] Luca Comanducci et al. "A deep learning method for sound field synthesis with irregular loudspeaker arrays, improving performance." Springer, 2024.

[2] Dina Salem and Mohamed Waleed. "Drowsiness detection models using CNNs, showing potential for various applications." Springer, 2024.

[3] Clara I. Lopez-Gonzalez et al. "ELFA-CNNs for better feature explanation in CNNs, improving interpretability." Elsevier, 2024.

[4] Bilal Hassan Ahmed Khattak et al. "A hybrid CNN-LSTM model for cryptocurrency trend prediction, emphasizing Fibonacci indicators." Springer, 2024.

[5] Wei Cui and Mingsheng Shang. "KAGN for rumor detection, incorporating external knowledge for improved text representation." Springer, 2023.

[6] Bairen Chen et al. "GECCN framework for detecting false data injection in power systems, achieving high accuracy." Springer, 2023.

[7] Yun Ju et al. "Hybrid CNN-LightGBM model for wind power forecasting, improving accuracy." IEEE, 2023.

[8] Sui Sheng Chen et al. "IGCN-based leak detection algorithm, improving detection rates; future focus on autocorrelation." IEEE, 2023.

[9] Rasha Alshehhi and Claus Gebhardt. "Mask R-CNN for detecting Martian dust storms in satellite imagery." Springer, 2022.

[10] Mengchen Zhao et al. "CNN-MIAD model for anomaly detection in structural health monitoring, improving performance through dataset balancing." Springer, 2022.

[11] Emily R. Long et al. "Investigating CNNs for corrosion detection, achieving high accuracy; suggests further model exploration." Springer, 2022.

[12] Bo Wen Zan et al. "A CNN-based approach for modeling aerodynamic data, reducing training costs." Springer, 2022.

[13] Mengxi Liu et al. "MSCANet for cropland change detection, enhancing feature extraction with CNN-transformer architecture." IEEE, 2022.

[14] Yekta Said Ca et al. "Utilizing CNNs to extract information from historical maps, overcoming annotation challenges." IEEE, 2021.

[15] Yiping Gong et al. "Context-aware CNN for remote sensing object detection, enhancing feature extraction." IEEE, 2021.

[16] Hidenobu Takahashi et al. "A CNN for classifying seismic events, achieving high accuracy across categories." Springer, 2021.

[17] Mercedes E. Paoletti et al. "A rotationally equivariant CNN for hyperspectral data, showing robustness without data augmentation." IEEE, 2020.

[18] Feng Hu and Kai Bian. "A CNN model for classifying coal and gangue in infrared images, optimizing performance." IEEE, 2020.

[19] Xiaodong Yin et al. "A CNN-based digital twin model for UHVDC system loss measurement, achieving high accuracy." IEEE, 2020.

[20] Jong-Hyouk Lee. "A CNN method for web spam detection using website screenshots." IEEE, 2020.

[21] Pengxu Jiang et al. "The PCRN model for speech emotion recognition, effectively combining different features." IEEE, 2019.

[22] Xiaole Zhao et al. "AWCNN for optimizing MR image windowing, improving efficiency." IEEE, 2019.

[23] Zhongwen Guo. "DPCRCN for time series classification; future work on new architectures." IEEE, 2019.

[24] Yihan Xiao et al. "A CNN for network intrusion detection, enhancing performance and speed; plans to use GANs for rare attacks." IEEE, 2019.

Copyright

Copyright © 2024 Kunal P Raghuvanshi, Abhijeet R Kamble, Saurabh B Matre. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET64683

Publish Date : 2024-10-19

ISSN : 2321-9653

Publisher Name : IJRASET
