Ijraset Journal For Research in Applied Science and Engineering Technology

COVID-19 Detection and Analysis Using Chest X-Ray and CT- Scan Images

Authors: Dawood Ahmad Dar, Ravinder Pal Singh, Dr. Monika Mehra

DOI Link: https://doi.org/10.22214/ijraset.2021.39393

Abstract

COVID-19 is a devastating and often lethal illness caused by a novel coronavirus. The coronavirus, which is considered to have originated in Wuhan, China, and is responsible for a huge number of deaths, emerged in December 2019 and spread swiftly around the world. Early detection of COVID-19 through proper diagnosis, especially in cases with no evident symptoms, could reduce the death rate of patients. Chest X-rays and CT scans are the primary diagnostic tools for this condition. This study shows that COVID-19 can be detected from chest X-ray images and CT scans using a machine vision technique. The model's performance was evaluated on generalised data during the testing step. According to recent studies based on radiological imaging techniques, such images convey crucial information about the COVID-19 virus. The proposed approach, which makes use of modern artificial intelligence (AI) techniques, has proven effective in recognising COVID-19 and, when combined with radiological imaging, can aid in the correct detection of this disease. It was created to provide accurate assessments for COVID and non-COVID patients. The results demonstrate that VGG-16 is the best architecture for the reference dataset, with 98.87 percent accuracy in network evaluations and 95.91 percent success in patient status identification. Convolutional layers were developed, with distinct filtering applied to each layer. As a result, the VGG-16 design performed well in the classification of COVID-19 cases. Nevertheless, this architecture leaves room for significant gains, either by modifying it or by adding a preprocessing step on top of it. Our methodology can be used to help radiologists validate their first screenings and can also be used to screen patients quickly via the cloud.

Introduction

I. INTRODUCTION

COVID-19, which is caused by SARS-CoV-2 (Severe Acute Respiratory Syndrome Coronavirus 2) [1,3], has been highly damaging to humanity because of extensive community transmission and a death toll that grows daily. It is considered to have begun in the Chinese city of Wuhan [4]. SARS-CoV-2 has now spread all across the world [5,7] and has afflicted 13,471,862 people, resulting in 581,561 deaths. The geographic region of a country influences how the infection spreads [8]. Medical practitioners have relied extensively on the Polymerase Chain Reaction (PCR) technique to diagnose this disease, but it is both costly and time-consuming, and speedier results are more likely to save lives. As a result, researchers are attempting to identify the most cost-effective and time-efficient Computer-Aided Diagnosis (CAD) approaches, such as Chest X-Ray (CXR) [9,11], Computed Tomography (CT) [12, 13], and others. Furthermore, the World Health Organization (WHO) has advised people who are not hospitalized but have minor symptoms to have chest imaging. Among the CAD methods, the CXR-based technique is one of the least expensive ways to identify the disease early. CXR-based approaches for COVID-19 diagnosis are proposed in [9, 13, 15]. These techniques are normally based on pre-trained deep neural networks and outperform classic computer vision methods (also called hand-crafted feature extraction methods) [16]. Furthermore, deep learning-based approaches extract features at a higher level of abstraction, which gives them outstanding image analysis capabilities, particularly for CXR images. For this reason, this paper focuses on deep learning-based approaches for CXR image interpretation, particularly for COVID-19 diagnosis.

Deep learning methods are the most common, and may be the only, way to work with these medical images. Artificial intelligence is a broad discipline that has the potential to play a big role in COVID-19 detection in the future. Investigators have already employed machine learning methods to detect COVID-19 from medical imaging such as X-rays or CT scans, with encouraging results. The main goal of this study is to methodically review the methodology of previous studies, collect the numerous sources of lung CT and X-ray image datasets, and summarize the methods most often used for automatically diagnosing COVID-19 from medical images. The data needed to train a model efficiently must be the first priority. This information will aid in the diagnosis of COVID-19 cases using Machine Learning (ML) or Deep Learning (DL) methods.

Because of the drawbacks of RT-PCR, researchers developed an alternative approach for diagnosing COVID-19 that applies Artificial Intelligence to chest CT or X-ray images. Even if a chest CT or X-ray suggests COVID-19, the viral test is the only specific method for diagnosis, according to the Centers for Disease Control and Prevention (CDC) [42]. This work is an initial study towards a tool that confirms the viral test result or provides more details about the ongoing infection. VGG-16 achieves a perfect score of 100 percent in the classification of patients in the pneumonia class, the best result to date. The normal class also scored quite well, while the COVID-19 class, despite achieving excellent results, had some small classification issues. This model achieves the best and most consistent results in the COVID-19 class to date.

II. OBJECTIVES

We aim to present a novel deep learning model based on VGG-16 and an attention module, which we believe is one of the most suitable models for CXR image classification. Because it combines the attention and convolution modules (4th pooling layer) on VGG-16, our proposed model can capture more probable deteriorating regions at both the local and global levels of CXR images. Initially, we will use a Convolutional Neural Network (CNN), in which the input images are processed through a succession of layers (convolutional, pooling, flattening, and fully connected) before the CNN produces the output that classifies the images. After constructing CNN models from the ground up, we will attempt to fine-tune the model using image augmentation techniques. Finally, we will use the pre-trained VGG-16 model to classify images and assess accuracy on both training and validation data.

III. LITERATURE REVIEW

Using the ResNet18 model and image patches focusing on subregions, Xiaowei et al. [10] developed an early prediction model to differentiate COVID-19 pneumonia from Influenza-A viral pneumonia and healthy patients using lung CT scans. The CNN model achieved a best accuracy of 86.7 percent on CT scans. Shuai et al. [11] used CT scans to predict COVID-19 patients and applied the Inception transfer-learning model to achieve an accuracy of 89.5 percent, a specificity of 88.0 percent, and a sensitivity of 87.0 percent. The authors of [4] analyzed a dataset of X-ray images to discriminate coronavirus patients from pneumonia and normal cases using a number of CNN architectures that had already been used for various medical image classification tasks.

To detect abnormalities in CXR images, Sasaki et al. [31] used an ensemble model. To reduce the false-positive rate in CXR images of lung nodules, Li et al. [42] employed more than two CNNs (Convolutional Neural Networks). Chouhan et al. [22] suggested a model to diagnose influenza using the transfer learning technique on CXR images that aggregates the outputs of five pre-trained models: AlexNet, DenseNet121, ResNet-18, Inception-V3, and GoogLeNet. Luz et al. [14] introduced a novel DL model based on the pre-trained deep learning model EfficientNet [39]. They used COVID-19 CXR images to fine-tune their model for classification. In addition, Panwar et al. [15] introduced a model, dubbed nCOVnet, that was based on the VGG-16 model and showed high accuracy on two-class COVID-19 CXR image classification.

IV. METHODOLOGY

A. ANN (Artificial Neural Network)

Artificial neural networks (ANNs) are the most widely used and most important approach to deep learning. They are based on a model of the human brain, which is our body's most sophisticated organ. The human brain is made up of almost 90 billion neurons, which are small cells. Axons and dendrites are nerve fibers that link neuron to neuron. The primary function of an axon is to carry data from one neuron to another. Dendrites, on the other hand, serve primarily to collect the data delivered by the axons of the other neurons to which they are linked. Each neuron processes a small amount of information before passing it on to another neuron, and so on. This is the main mechanism through which the human brain processes large amounts of data, such as speech, visual, and other types of data, and extracts meaningful information from it.

B. Perceptron

In 1958, psychologist Frank Rosenblatt built the first Artificial Neural Network (ANN) based on this paradigm. ANNs resemble the brain in that they are made up of numerous nodes, which play the role of neurons. The nodes are densely connected and grouped into several hidden layers. The input layer accepts the information, which is then passed through one or more hidden layers in sequence before the output layer produces the prediction. The input may be an image, and the output could be the object detected in the image, such as a cat. A single neuron (known as a perceptron in an ANN) is shown below.
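As a minimal sketch of the single neuron described above, the following Python snippet computes a weighted sum of the inputs plus a bias and applies a step activation. The function name, the weights, and the bias are illustrative assumptions (chosen so that the neuron behaves like a logical AND gate); they are not taken from the paper.

```python
def perceptron(inputs, weights, bias):
    """Return 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if weighted_sum > 0 else 0


# Hypothetical parameters: with these values the neuron fires only when
# both inputs are 1, i.e. it acts as a logical AND gate.
AND_WEIGHTS = [1.0, 1.0]
AND_BIAS = -1.5

and_outputs = [
    perceptron([a, b], AND_WEIGHTS, AND_BIAS)
    for a in (0, 1)
    for b in (0, 1)
]
print(and_outputs)  # [0, 0, 0, 1]
```

In a trained network the weights and bias are learned from data rather than set by hand.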

C. Multi-Layer Perceptron

The most basic type of ANN is the multi-layer perceptron. It has a single input layer, one or more hidden layers, and an output layer at the end. A layer is made up of a group of perceptrons. The input layer holds one or more features of the input data. Every hidden layer has one or more neurons that process a specific aspect of the features before sending the processed data to the next hidden layer. The output layer receives the data from the last hidden layer and outputs the result.
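The forward pass through such a network can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: the layer sizes, the ReLU hidden activation, the softmax output, and the randomly initialised weights are all hypothetical choices.

```python
import numpy as np


def relu(z):
    """Rectified linear unit applied element-wise."""
    return np.maximum(z, 0.0)


def softmax(z):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    shifted = z - z.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def mlp_forward(x, layers):
    """Pass a batch through (weights, bias) pairs: ReLU on hidden layers, softmax at the end."""
    activation = x
    for weights, bias in layers[:-1]:
        activation = relu(activation @ weights + bias)
    weights, bias = layers[-1]
    return softmax(activation @ weights + bias)


rng = np.random.default_rng(0)
layer_sizes = [4, 8, 8, 2]  # assumed: 4 input features, two hidden layers, 2 classes
layers = [
    (rng.normal(scale=0.1, size=(n_in, n_out)), np.zeros(n_out))
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]

batch = rng.normal(size=(3, 4))  # three hypothetical samples
probabilities = mlp_forward(batch, layers)
print(probabilities.shape)  # (3, 2)
```

Each row of `probabilities` sums to 1 and can be read as the class scores for one sample.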

D. Convolutional Neural Network

One of the most common ANNs is the convolutional neural network (CNN). It is widely employed in image and video processing. It is founded on the mathematical notion of convolution. It is virtually identical to a multi-layer perceptron, except that it has a sequence of convolution and pooling layers before the fully connected hidden layers. It is made up of three types of layers:

- Convolution Layer: It is the primary building block, and it performs its computations using the convolution operation.

- Pooling Layer: It sits next to the convolution layer and reduces the size of the inputs by discarding unnecessary information, allowing for quicker computation.

- Fully Connected Layer: It follows the series of convolution and pooling layers, and it classifies the input into several categories.
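The first two layer types can be illustrated with a short NumPy sketch. It assumes a single-channel image, no padding, stride 1 for the convolution, and non-overlapping 2×2 max pooling; the 6×6 ramp image and the vertical-edge kernel are hypothetical inputs chosen only to keep the arithmetic easy to follow.

```python
import numpy as np


def conv2d(image, kernel):
    """Stride-1, unpadded 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


image = np.arange(36, dtype=float).reshape(6, 6)  # intensity rises left to right
vertical_edge = np.array([[1.0, 0.0, -1.0]] * 3)  # hypothetical 3x3 filter

features = conv2d(image, vertical_edge)  # shape (4, 4)
pooled = max_pool2d(features)            # shape (2, 2)
flat = pooled.reshape(-1)                # vector a fully connected layer would consume

print(features.shape, pooled.shape, flat.shape)
```

In a real CNN the kernel values are learned during training, and each layer applies many kernels across several input channels.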

E. VGG-16 (Visual Geometry Group)

In their paper Very Deep Convolutional Networks for Large-Scale Image Recognition, K. Simonyan and A. Zisserman from the University of Oxford introduced the VGG-16 convolutional neural network model. On ImageNet, a dataset of over 14 million images belonging to 1000 classes, the model achieves 92.7 percent top-5 test accuracy. It was one of the well-known models submitted to ILSVRC-2014. It improves on AlexNet by replacing large kernel-size filters (11 and 5 in the first and second convolutional layers, respectively) with multiple 3×3 kernel-size filters applied one after another. VGG-16 was trained over a period of weeks using NVIDIA Titan Black GPUs. The input to the conv1 layer is a fixed-size 224 × 224 RGB image. The image is passed through a stack of convolutional layers, each with a very small receptive field: 3×3 (the smallest size that captures the notions of left/right, up/down, and center). One of the configurations also uses 1×1 convolution filters, which can be seen as a linear transformation of the input channels (followed by non-linearity). The convolution stride is fixed to 1 pixel, and the spatial padding of the conv. layer input is 1 pixel for 3×3 conv. layers, so that the spatial resolution is preserved after convolution. Spatial pooling is carried out by five max-pooling layers, which follow some of the conv. layers (not all the conv. layers are followed by max-pooling). Max-pooling is performed over a 2×2 pixel window with stride 2.
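The effect of these settings on the spatial resolution can be checked with the standard output-size formula, out = floor((in + 2 × padding − kernel) / stride) + 1. The sketch below applies it to a 224-pixel input; the helper name `output_size` is an assumption made for illustration.

```python
def output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224
sizes = [size]
for _ in range(5):
    # A 3x3 convolution with stride 1 and padding 1 keeps the resolution unchanged.
    size = output_size(size, kernel=3, stride=1, padding=1)
    # A 2x2 max-pooling with stride 2 halves it.
    size = output_size(size, kernel=2, stride=2, padding=0)
    sizes.append(size)

print(sizes)  # [224, 112, 56, 28, 14, 7]
```

After the five pooling stages the feature maps are 7 × 7, which is the resolution handed to the fully connected layers.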

Following the stack of convolutional layers (of varying depth in the different configurations), three Fully-Connected (FC) layers are added: the first two have 4096 channels each, while the third performs 1000-way ILSVRC classification and hence has 1000 channels (one for each class). The final layer is the soft-max layer. The fully connected layers are configured in the same way in all networks. All hidden layers use the rectification (ReLU) non-linearity. It should also be noted that, with the exception of one, none of the networks incorporate Local Response Normalization (LRN), which does not improve performance on the ILSVRC dataset but increases memory consumption and computation time.

We use a VGG-16 model pretrained on ImageNet to extract features and pass them to a new classifier that labels the images. To load the VGG-16 model with the weights learned on the ImageNet dataset, we specify weights='imagenet'. Setting include_top=False is required to avoid loading the fully connected layers of the original classifier. Because the original classifier distinguishes 1000 classes rather than two, we need to add our own classifier. Our goal is to categorise each image into one of two classes: COVID positive and COVID negative. The convolutional layers of the pretrained model extract low-level image features such as edges, lines, and blobs, and the new classifier then divides the images into the two groups.
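The transfer-learning setup described here can be sketched with the Keras API as follows. The `weights` and `include_top` arguments come from the text; everything else (the function name, freezing the base, the 256-unit hidden layer, the dropout rate, the Adam optimizer, and the single sigmoid output) is an assumption made for illustration and should not be read as the authors' exact configuration.

```python
INPUT_SHAPE = (224, 224, 3)  # fixed-size RGB input expected by VGG-16


def build_covid_classifier(weights="imagenet"):
    """Build a two-class (COVID positive / negative) model on top of a VGG-16 base."""
    # Imported inside the function so the sketch can be loaded without TensorFlow.
    from tensorflow import keras
    from tensorflow.keras import layers

    # Convolutional base only: include_top=False drops the 1000-class FC layers.
    base = keras.applications.VGG16(
        weights=weights, include_top=False, input_shape=INPUT_SHAPE
    )
    base.trainable = False  # assumption: keep the pretrained filters frozen

    inputs = keras.Input(shape=INPUT_SHAPE)
    x = base(inputs, training=False)
    x = layers.Flatten()(x)
    x = layers.Dense(256, activation="relu")(x)  # hypothetical head size
    x = layers.Dropout(0.5)(x)                   # hypothetical regularisation
    outputs = layers.Dense(1, activation="sigmoid")(x)  # probability of COVID positive

    model = keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

Calling `build_covid_classifier()` downloads the ImageNet weights on first use; passing `weights=None` builds the same architecture with random initialisation, which is useful for a quick structural check.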

V. SYSTEM ARCHITECTURE

A. Software Implementation

Software implementation covers all of the post-sale steps needed to get the software working successfully in its environment, such as evaluating prerequisites, installing, testing, and running.

- Python: Python is a popular high-level programming language for general-purpose applications. Guido van Rossum created it in 1991, and the Python Software Foundation continues to develop it. Its syntax lets programmers express concepts in fewer lines of code, and it was designed primarily with code readability in mind. Python allows you to work quickly and to integrate systems effectively. We can use Python to create APIs in the PyCharm IDE.

B. Libraries

Python libraries are collections of helpful routines that reduce the need to write code from the ground up. Today, there are about 137,000 open source Python libraries. They are used to build computer vision, data science, data visualization, image and data manipulation, and other programs. The following are some useful Python libraries:

1. Keras and TensorFlow: Keras is a Python-based deep learning API that runs on top of TensorFlow, a machine learning platform. It was created by Francois Chollet, a Google artificial intelligence researcher. Keras is presently used by Google, Square, Netflix, Huawei, and Uber, among others. It was designed to enable fast experimentation. TensorFlow 2.0 is an end-to-end, open-source machine learning platform. It brings together four crucial abilities:

a. Performing low-level tensor operations efficiently on a CPU, GPU, or TPU.

b. TensorFlow can perform distributed processing, which allows it to handle large amounts of data such as big data.

c. TensorFlow uses a computation graph to describe all calculation operations, no matter how simple they are.

d. Keras enables engineers to take full advantage of TensorFlow's flexibility and cross-platform features. Thanks to version 2.0, we can now run Keras on TPUs or large clusters of GPUs, as well as export our Keras models to run in the browser or on a smartphone.

2. NumPy and pandas: The pandas module primarily handles tabular data, whereas the NumPy module handles numerical data. pandas provides a collection of sophisticated tools such as DataFrame and Series that are mostly used for data analysis, whereas the NumPy module provides a powerful object known as the array.

3. Matplotlib: It is a popular Python data visualisation package that lets you make 2D graphs and plots using Python scripts. It can display a broad range of graphs and plots, including line plots, histograms, bar charts, scatter plots, power spectra, and error charts, among others.

C. Technology Used

Technology refers to the methods, processes, and equipment that result from applying scientific knowledge to practical purposes.

- Django: Django is a high-level Python web framework for building safe and stable websites quickly. Django is a web framework built by experienced developers that takes care of a lot of the heavy lifting so you can focus on developing your app instead of reinventing the wheel. It's free and open source, with a vibrant and active community, excellent docs, and a variety of free and paid additional services.

- Google Colab: Colab is a cloud-based Jupyter notebook environment that is free to use. Most significantly, it does not require any setup, and the notebooks you create can be edited simultaneously by your team members, much like Google Docs projects. Colab supports many common machine learning libraries, which can be quickly loaded into your notebook.

- CSS: CSS is a style sheet language that specifies how a document written in a markup language like HTML or XHTML is displayed, including fonts, layouts, spacing, and colours, to mention a few. CSS is used to make existing content look more appealing and to encourage expressive style and creativity. One of its most valued capabilities is separating document content expressed in a markup language (like HTML) from document presentation defined in CSS.

- JavaScript: JavaScript is a scripting or programming language that allows you to implement complex features on web pages. Whenever a web page does more than just sit there and display static information for you to look at, for example by showing timely content updates, interactive maps, animated 2D/3D graphics, scrolling video jukeboxes, and so on, JavaScript is probably involved.

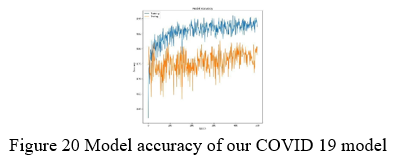

VI. RESULTS AND OBSERVATIONS

VGG-16 considerably outperformed the previous generation of models, which had achieved the best results in the ILSVRC-2012 and ILSVRC-2013 contests. The VGG-16 result is also competitive with the classification task winner (GoogLeNet with 6.7 percent error) and substantially better than the ILSVRC-2013 winning entry Clarifai, which obtained 11.2 percent with external training data and 11.7 percent without it. In terms of single-net performance, the VGG-16 architecture delivers the best result (7.0 percent test error), surpassing a single GoogLeNet by 0.9 percent.

The interface was designed visually for ease of use, and we used Tkinter to build the graphical user interface. We picked Tkinter because Python and Tkinter together provide a quick and straightforward way to construct graphical user interfaces. Tkinter also provides a robust object-oriented interface to the Tk GUI toolkit.

A. Confusion Matrix

An N x N matrix is used to evaluate the accuracy of the model, where N is the number of target classes. The matrix compares the actual target values with the machine learning model's predictions. This provides us with a comprehensive picture of how well our classifier is working and the types of errors it makes.

- There are two possible values for the target variable: positive or negative.

- The target variable's actual values are shown in the columns, while the predicted values are represented in the rows.
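For the two-class case, the matrix and the metrics derived from it can be computed as in the sketch below. It follows the layout stated above (predicted values in rows, actual values in columns); the label lists are made-up illustrative data, not results from this study.

```python
def confusion_matrix(actual, predicted):
    """2x2 matrix with predicted labels in rows and actual labels in columns.

    Labels are 1 (positive) and 0 (negative); index 0 holds the positive class.
    """
    index = {1: 0, 0: 1}
    matrix = [[0, 0], [0, 0]]
    for a, p in zip(actual, predicted):
        matrix[index[p]][index[a]] += 1
    return matrix


# Hypothetical labels for ten patients (1 = COVID positive, 0 = COVID negative).
actual = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]

matrix = confusion_matrix(actual, predicted)
(tp, fp), (fn, tn) = matrix

accuracy = (tp + tn) / (tp + fp + fn + tn)
sensitivity = tp / (tp + fn)  # share of actual positives that were detected
specificity = tn / (tn + fp)  # share of actual negatives that were detected

print(matrix)  # [[3, 1], [1, 5]]
print(accuracy, sensitivity)  # 0.8 0.75
```

The off-diagonal cells show the two error types: a false positive (predicted positive, actually negative) and a false negative (predicted negative, actually positive).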

B. VGG-16 Comparison

It was shown that representation depth benefits classification accuracy, and that state-of-the-art results on the ImageNet challenge dataset can be reached using a standard ConvNet architecture with substantially increased depth.

VII. FUTURE SCOPE

The performance of our proposed method may, however, be enhanced further. Increasing the quantity of CXR images would also help improve our model's accuracy. This study supports a viable method for enhancing health-care quality and the outcomes of diagnostic procedures. Although deep learning is one of the most advanced computing technologies for distinguishing COVID-19 from non-COVID patients, it is not without flaws.

Conclusion

In this work, we proposed a deep learning-based algorithm to detect and classify COVID-19 cases from X-ray images. Our model has an end-to-end structure that eliminates the need for separate feature extraction. The system we built can perform classification tasks with 98.40 percent accuracy. The model's performance has been evaluated, and it is ready to be tested on a bigger database. These models can also be used to identify other chest-related disorders, including TB and pneumonia. A limitation of the study is its use of a restricted number of COVID-19 X-ray images. We plan to use additional similar images from our nearby hospitals to make our model more stable and efficient.

References

[1] Y. Zhang, L. Zheng, L. Liu, M. Zhao, J. Xiao, Q. Zhao, Liver impairment in COVID-19 patients: a retrospective analysis of 115 cases from a single centre in Wuhan city, China, Liver Int, 40 (9) (2020), pp. 2095-2103, 10.1111/liv.14455

[2] World Health Organization, "Public health emergency of international concern (PHEIC)," WHO (2020), pp. 1-10 [Online]. Available: https://www.who.int/publications/m/item/COVID-19-public-health-emergency-of-international-concern-(pheic)-global-researchand-innovation-forum, Accessed 2nd Aug 2021

[3] COVID live update: 199,193,272 cases and 4,244,017 deaths from the coronavirus, Worldometer, https://www.worldometers.info/coronavirus/, Accessed 2nd Aug 2021

[4] W. O'Quinn, R.J. Haddad, D.L. Moore, Pneumonia radiograph diagnosis utilizing deep learning network, Proc. 2019 IEEE 2nd Int. Conf. Electron. Inf. Commun. Technol., ICEICT (Jan. 2019), pp. 763-767, 10.1109/ICEICT.2019.8846438

[5] J. Vilar, M.L. Domingo, C. Soto, J. Cogollos, Radiology of bacterial pneumonia, Eur J Radiol, 51 (2) (2004), pp. 102-113, 10.1016/j.ejrad.2004.03.010

[6] J. Zhang, et al., Viral pneumonia screening on chest X-rays using confidence-aware anomaly detection, IEEE Trans Med Imag, 40 (3) (Mar. 2021), pp. 879-890, 10.1109/TMI.2020.3040950

[7] G. Ling, C. Cao, Automatic detection and diagnosis of severe viral pneumonia CT images based on LDA-SVM, IEEE Sensor J, 20 (20) (Oct. 2020), pp. 11927-11934, 10.1109/JSEN.2019.2959617

[8] M.E.H. Chowdhury, et al., Can AI help in screening viral and COVID-19 pneumonia?, IEEE Access, 8 (2020), pp. 132665-132676, 10.1109/ACCESS.2020.3010287

[9] T. A, A. A, Real-time RT-PCR in COVID-19 detection: issues affecting the results, Expert Rev Mol Diagn, 20 (5) (May 2020), pp. 453-454, 10.1080/14737159.2020.1757437

[10] S. A, et al., Duration of infectiousness and correlation with RT-PCR cycle threshold values in cases of COVID-19, England, January to May 2020, Euro Surveill, 25 (32) (2020), 10.2807/1560-7917.ES.2020.25.32.2001483

[11] S. Tabik, et al., COVIDGR dataset and COVID-SDNet methodology for predicting COVID-19 based on chest X-ray images, IEEE J. Biomed. Heal. Informatics, 24 (12) (Dec. 2020), pp. 3595-3605, 10.1109/JBHI.2020.3037127

[12] U. Özkaya, Ş. Öztürk, M. Barstugan, Coronavirus (COVID-19) classification using deep features fusion and ranking technique (2020), pp. 281-295, 10.1007/978-3-030-55258-9_17

[13] A. Khan, J.A. Doucette, R. Cohen, D.J. Lizotte, Integrating machine learning into a medical decision support system to address the problem of missing patient data, Proc. 2012 11th Int. Conf. Mach. Learn. Appl., 1, ICMLA (2012), pp. 454-457, 10.1109/ICMLA.2012.82

[14] S.M. Sasubilli, A. Kumar, V. Dutt, Machine learning implementation on medical domain to identify disease insights using TMS, Proc. 2020 Int. Conf. Adv. Comput. Commun. Eng., ICACCE (Jun. 2020), 10.1109/ICACCE49060.2020.9154960

[15] R. Katarya, P. Srinivas, Predicting heart disease at early stages using machine learning: a survey, Proc. Int. Conf. Electron. Sustain. Commun. Syst., ICESC (Jul. 2020), pp. 302-305, 10.1109/ICESC48915.2020.9155586

[16] R.M. Balajee, H. Mohapatra, V. Deepak, D.V. Babu, Requirements identification on automated medical care with appropriate machine learning techniques, Proc. 6th Int. Conf. Inven. Comput. Technol., ICICT (Jan. 2021), pp. 836-840, 10.1109/ICICT50816.2021.9358683

[17] G. Chitnis, V. Bhanushali, A. Ranade, T. Khadase, V. Pelagade, J. Chavan, A review of machine learning methodologies for dental disease detection, Proc. 2020 IEEE India Counc. Int. Subsections Conf., INDISCON (Oct. 2020), pp. 63-65, 10.1109/INDISCON50162.2020.00025

[18] Waheed, M. Goyal, D. Gupta, A. Khanna, F. Al-Turjman, P.R. Pinheiro, CovidGAN: Data augmentation using auxiliary classifier GAN for improved covid-19 detection, IEEE Access, 8 (2020), pp. 91916-91923, 10.1109/ACCESS.2020.2994762

[19] S. Degadwala, D. Vyas, H. Dave, Classification of COVID-19 cases using fine-tune convolution neural network (FT-CNN), Proc. Int. Conf. Artif. Intell. Smart Syst., ICAIS (Mar. 2021), pp. 609-613, 10.1109/ICAIS50930.2021.9395864

[20] T. S, A. Tb, T. I, Convolutional capsnet: a novel artificial neural network approach to detect COVID-19 disease from X-ray images using capsule networks, Chaos Solitons Fractals, 140 (Nov. 2020), 10.1016/j.chaos.2020.110122

Copyright

Copyright © 2022 Dawood Ahmad Dar, Ravinder Pal Singh, Dr. Monika Mehra. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET39393

Publish Date : 2021-12-12

ISSN : 2321-9653

Publisher Name : IJRASET
