Ijraset Journal For Research in Applied Science and Engineering Technology

Deep Learning Based Gastric Cancer and Disease Detection System

Authors: Madhuri Nallamothu, Sri Hari Prasada Rao Chennakesavula, Jahedunnisa Abdul, Dinesh Kandimalla, Venkata Veera Naga Sairam Atmuri, Harish Kolla

DOI Link: https://doi.org/10.22214/ijraset.2024.58759

Abstract

Gastric cancer, commonly known as stomach cancer, is a leading cause of cancer-related deaths worldwide. Early detection of gastric cancer is crucial for improving patient outcomes and reducing mortality rates. In recent years, advances in medical imaging and machine learning techniques have shown great promise in aiding the early diagnosis of gastric cancer. This abstract presents an innovative approach for gastric cancer detection using machine learning algorithms. Our proposed system leverages state-of-the-art convolutional neural networks (CNNs) for the automatic analysis of endoscopic images, to identify potential malignancies. Preprocessing techniques are employed to enhance the quality of input images and reduce noise. Feature extraction methods are then applied to extract relevant information from the images, and these features are used to train a machine learning model. The machine learning model is trained on a large dataset of gastric cancer cases, including various subtypes, to ensure robust performance. We employ cross-validation techniques to assess the model's accuracy, sensitivity, specificity, and overall performance. The proposed system demonstrates high accuracy in identifying gastric cancer lesions, even in cases where subtle or early-stage abnormalities may be difficult to detect by human experts. In conclusion, our advanced machine learning approach for gastric cancer detection shows promise in revolutionizing early diagnosis, improving patient outcomes, and reducing the burden on healthcare systems. Further research and clinical validation are necessary to fully assess the system's efficacy in a real-world clinical setting.

Introduction

I. INTRODUCTION

The convergence of healthcare and technology has opened a new era of opportunities, especially in disease detection and diagnosis. Gastric cancer is a common and potentially fatal cancer that presents several obstacles to early detection and treatment. Developments in machine learning models, especially Convolutional Neural Networks (CNNs) such as ResNet (Residual Networks), may make it possible to diagnose stomach cancer and related diseases more accurately and provide better care for patients. The goal of this project, "Deep Learning Based Gastric Cancer and Disease Detection System," is to improve the process of detecting and diagnosing stomach cancer and diseases by utilizing the potential of CNNs, particularly ResNet. We aim to create a system that can detect gastric cancer in its initial stages, facilitating prompt intervention and treatment, by utilizing deep learning and image recognition capabilities.

Gastric cancer is still a major worldwide health problem because it is frequently diagnosed at an advanced stage, which results in a worse prognosis and fewer treatment options. Conventional diagnostic techniques, although somewhat successful, are labor-intensive and subject to human error. Our objective is to overcome these constraints and furnish physicians with an effective instrument for prompt identification and accurate diagnosis through the incorporation of sophisticated machine learning methodologies into the diagnostic procedure.

CNNs, and ResNet in particular, have great potential because of how well they can process and evaluate complex medical images. The ability of these neural networks to extract complex patterns and attributes from images allows them to identify small irregularities that may be signs of stomach cancer or other diseases. Additionally, ResNet's residual learning framework allows deeper networks to be trained efficiently, which further improves performance in difficult diagnostic tasks. We hope that this research will advance the fields of medical imaging and diagnostics as well as personalized and targeted healthcare.

We hope to increase the accessibility, efficacy, and accuracy of stomach cancer or disease diagnosis by equipping physicians with state-of-the-art instruments and technology. In the end, this will lead to improved patient outcomes and a more optimistic outlook for the fight against cancer.

II. LITERATURE SURVEY

In [1], Y. Sakai et al. presented a transfer learning strategy for detecting cancer in endoscopic images, which were collected from endoscopic recordings by gastroenterologists. Various augmentation procedures were applied to expand the data available for training the model. A pre-trained CNN, the 22-layer GoogLeNet, was employed and evaluated on a specific dataset to measure its performance and meet the goal of distinguishing the lesions.

L. Li, M. Chen, Y. Zhou, J. Wang and D. Wang [2] reviewed various deep learning techniques for diagnosing cancer in endoscopic images. Their investigation found that the DenseNet model showed strong performance when combined with methods such as cropping, rotation, and noise removal. They concluded that preprocessing and data augmentation can be valuable resources for training the model accurately.

The paper entitled “Gastric Cancer Diagnosis with Mask R-CNN” by G. Cao, W. Song and Z. Zhao, published at the International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC) in 2019, presents a system designed to facilitate the identification and classification of gastric cancer using Mask R-CNN, a region-based convolutional neural network. The dataset consists of pathological sections of 2048 x 2048 pixels, which are reduced to a smaller size before classification. Pathological images are images of tissue samples that are stained with common dyes and observed under a microscope to analyse specific features.[3]

Shokoufeh Hatami et al., 2020,[4] created a convolutional neural network model to classify stomach lesions from endoscopic images with greater accuracy and dependability. The main aim is to predict disease at an early stage. Methods such as data augmentation, dropout, noise addition, and weight decay improved the model's performance and robustness during training. The system's efficacy was assessed using accuracy, cost, and confusion matrix criteria. The model demonstrated its potential to help prevent stomach diseases and cancer by aiding in the early detection of stomach disorders.

[5] This paper introduces the “Bicubic Interpolation Algorithm”, a higher-order interpolation method. During image interpolation, the gradient of the gray values at neighbouring points is taken into account in addition to the gray values of the four adjacent points. As a result, the image is smoother: bicubic interpolation gives good results and high pixel quality, and outperforms nearest-neighbour interpolation in anti-aliasing. The approach is applied to identifying tumors from CT images.

M. El Ansari et al., 2020 developed a fine-tuned model based on the CNN architectures MobileNet, VGGNet, and Inception V3. Transfer learning involves first training a network on a large, well-known dataset and then adapting its last layers to a small new dataset. This hybrid approach can detect gastrointestinal diseases which, if not identified in their early stages, can advance into gastric cancer.[6]

III. DESIGN AND METHODOLOGY

This section outlines our proposed CNN Architecture for detecting gastrointestinal diseases and cancer tissues.

A. CNN Architecture

Convolutional Neural Networks (CNNs) are widely employed in image classification and segmentation because of their efficacy in analysing medical images, particularly endoscopic images of stomach tissue. CNNs are a type of deep learning model designed to automatically and adaptively learn spatial hierarchies of features directly from raw data. The CNN architecture commonly used for diagnosis is summarized as follows:

- Convolutional Layers: The CNN architecture is made up of numerous convolutional layers that apply convolution operations to the input images. These layers extract features from the input images, such as edges, textures, and shapes, using learnable filters or kernels. These features are essential for recognizing abnormal tissue patterns suggestive of a malignant area when identifying stomach cancer.

- Activation Functions: Following each convolutional layer, non-linear activation functions such as the Rectified Linear Unit (ReLU) are applied to introduce non-linearity into the model and help it recognize intricate patterns in the input data.

- Pooling Layers: Convolutional layers are separated by pooling layers, such as MaxPooling or AveragePooling, in order to decrease the spatial dimensions of the feature maps without sacrificing the most crucial information. This helps manage overfitting and lower computational complexity.

- Flattening Layer: The feature maps undergo a series of convolutional and pooling layers before being flattened into a vector form. The 2D feature maps are flattened into a 1D vector by this layer, which then feeds that vector into the fully connected layers.

- Fully Connected Layers: One or more fully connected layers, sometimes referred to as dense layers, process the flattened feature vectors and classify the data using the learnt features. Through training, these layers discover intricate connections between the extracted data and the target classes (such as normal tissue, polyps, and malignant lesions).

- Output Layer: The CNN's output layer is typically made up of one or more neurons, each of which represents a class label. In gastric cancer detection, the output layer may contain several neurons corresponding to different classes of abnormalities, and a softmax activation function is frequently employed to produce a probability score for each class.
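The layer sequence listed above can be sketched as a single forward pass. The following is a minimal NumPy illustration, not the implementation used in this work: the image size (8x8), the two 3x3 filters, the three output classes, and the random weights are all assumptions chosen only to keep the example small.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Rectified Linear Unit: negative values become zero."""
    return np.maximum(x, 0.0)

def max_pool(x, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))

def softmax(z):
    """Convert raw scores to probabilities (shifted by the max for numerical stability)."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

def forward(image, kernels, dense_w, dense_b):
    # convolution -> ReLU -> pooling, once per filter
    maps = [max_pool(relu(conv2d(image, k))) for k in kernels]
    # flattening layer: 2D feature maps become one 1D vector
    features = np.concatenate([m.ravel() for m in maps])
    # fully connected layer followed by the softmax output layer
    return softmax(dense_w @ features + dense_b)

# Hypothetical inputs: random data stands in for an image and for trained weights.
rng = np.random.default_rng(0)
image = rng.random((8, 8))
kernels = rng.normal(size=(2, 3, 3))   # two 3x3 filters
# 8x8 -> conv 3x3 -> 6x6 -> pool 2x2 -> 3x3; two maps give 18 features
dense_w = rng.normal(size=(3, 18))     # three illustrative classes
dense_b = np.zeros(3)
probs = forward(image, kernels, dense_w, dense_b)
```

Here `probs` holds one probability per class and sums to one. In a trained network the filters and dense weights would be learned from labelled images rather than drawn at random.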

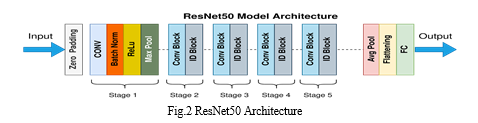

B. ResNet50 Model

ResNet50, short for Residual Network with 50 layers, is a deep learning architecture created to address the difficulties involved in training very deep neural networks. ResNet introduced the idea of residual learning, which uses skip connections inside residual blocks. These skip connections mitigate the vanishing gradient problem by giving the gradient a more direct path through the network, which makes very deep models easier to optimize. The ResNet50 architecture consists of many stacked convolutional layers. The model applies a global average pooling layer to the final feature maps, which summarizes the extracted features compactly.[10] A fully connected layer then produces the final classification. Transfer learning is the process of applying the knowledge acquired from solving one problem to a different but related problem. This strategy is especially helpful when labelled data or computational resources are scarce for the second task.[9]
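The skip connection can be illustrated with a very small sketch. The block below computes y = ReLU(F(x) + x), where F is the residual branch. To keep the example short, F is built from two dense (matrix) layers rather than the convolutional layers ResNet50 uses; the weights `w1` and `w2` and the input vector are assumptions for illustration only.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """Return relu(F(x) + x), where F(x) = w2 @ relu(w1 @ x) is the residual branch."""
    fx = w2 @ relu(w1 @ x)   # residual branch (dense layers stand in for convolutions)
    return relu(fx + x)      # skip connection adds the unchanged input back

x = np.array([1.0, 2.0, 3.0])
zero = np.zeros((3, 3))
# With zero weights the residual branch contributes nothing, so the block
# passes its (non-negative) input through unchanged.
y = residual_block(x, zero, zero)
```

Because the block only has to learn the difference F(x) between its input and the desired output, a block that has nothing useful to add can stay close to the identity. The added `x` term also gives the gradient a direct route past the residual branch during backpropagation.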

Despite its complex design, ResNet50's performance and efficiency in image recognition have made it a popular choice for a variety of computer vision tasks. The architecture, which ends in global average pooling and a fully connected layer, is an important tool for deep learning. The ResNet50 model used here has the following architecture:

- Input Layer: The input layer is made up of 256x256 pixel pictures of the gastrointestinal tract.

- Convolutional Layers: Four convolutional layers with a 7x7 filter size and no padding are used in this implementation. The convolution operation extracts features from the images by multiplying the input by learned weights, or filters.

- Max Pooling Layers: By using the max-pooling technique, the maximum value in each patch of every feature map is kept. With the max-pooling kernels set to a size of 2x2 and a stride of 2, each spatial dimension of the feature maps is halved.

- ReLU Layers: After every convolutional layer, Rectified Linear Unit (ReLU) layers are added to the model to add non-linearity. ReLU activation functions set negative values in the feature maps to zero, which helps the network learn intricate patterns.

- Fully Connected Layer: The fully connected layer completes the network's prediction hierarchy. It generates the final prediction for every image by combining the features learned in the earlier layers. Either a sigmoid or a softmax activation function is applied at this layer, depending on the demands of the task. Sigmoid activation is usually used for binary classification problems, whereas softmax activation works well for multi-class classification tasks.
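The effect of these layer settings on feature-map size can be checked with the standard output-size formula, out = floor((n + 2p - k) / s) + 1, where n is the input size, k the kernel size, p the padding, and s the stride. The sketch below applies it to one 7x7 convolution followed by one 2x2 max-pooling step on a 256x256 input. The convolution stride of 1 is an assumption, since the text does not state it, and the ordering of the layers is likewise illustrative.

```python
def output_size(n, kernel, stride=1, padding=0):
    """Spatial output size along one dimension for a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1

size = 256                                                # 256x256 input image
after_conv = output_size(size, kernel=7)                  # 7x7 filter, no padding, stride 1 (assumed)
after_pool = output_size(after_conv, kernel=2, stride=2)  # 2x2 max pooling, stride 2
```

Under these assumptions the 256x256 input shrinks to 250x250 after the convolution and to 125x125 after pooling. The same helper can be applied repeatedly to trace the size through a deeper stack.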

IV. IMPLEMENTATION

A. Dataset

To carry out our research on diagnosing stomach cancer and related conditions, we acquired an image collection from medical facilities. The dataset includes a wide range of endoscopic images selected to cover different disorders of the stomach. The Asian Institute of Gastroenterology was the source of our initial image datasets. Only images of early-stage gastric cancer, confirmed by linking the images to patients who had received hospital treatment, were labelled as cancerous, and these images include the lesions. The remaining images were normal, or non-cancerous. A gastroenterologist manually identified every lesion in the cancer images. Additionally, images of ulcerative colitis, a chronic inflammatory bowel disease, are included to broaden the scope of our study beyond malignant lesions, aiding the recognition of gastrointestinal diseases. The dataset also includes esophagitis, an inflammation of the esophageal lining, which provides information on disorders of the upper gastrointestinal tract. Moreover, images of a normal cecum serve as benchmarks that help distinguish between diseased and healthy conditions. Using this dataset, our approach seeks to create machine learning models that can reliably identify and classify stomach cancer and related conditions, with the aim of improving patient outcomes in clinical settings and strengthening diagnostic capability.

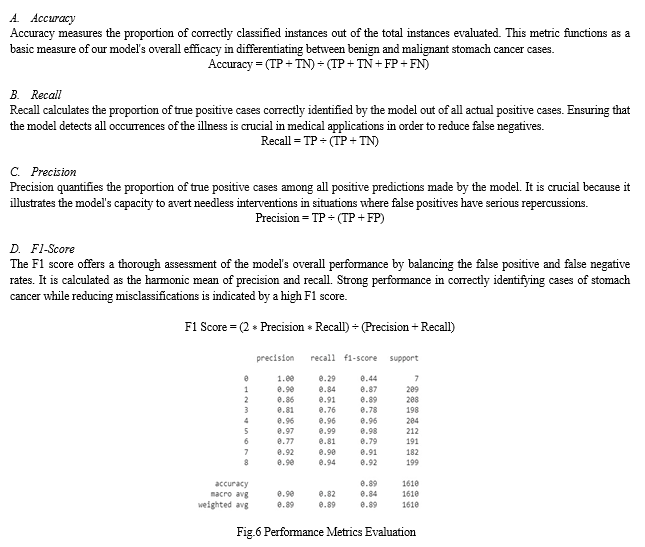

V. RESULT

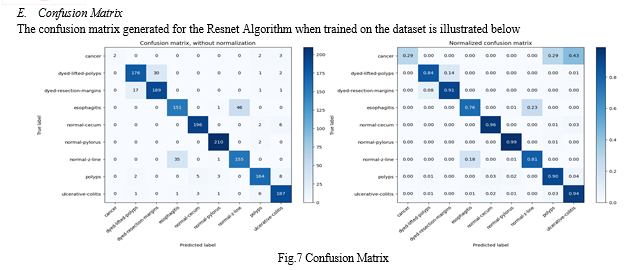

We have observed encouraging results from our research on the use of sophisticated machine learning models, especially Convolutional Neural Networks (CNNs) with the Residual Network (ResNet) architecture, to improve healthcare in the area of stomach cancer detection. We have achieved strong outcomes that highlight the effectiveness and potential significance of our strategy through thorough testing and assessment.

The system showed impressive precision in identifying stomach cancer through medical imaging data. We demonstrated the efficacy of our models in automated cancer detection by obtaining consistently high accuracy rates in differentiating between non-cancerous and malignant regions.

The precision and recall of our CNN-ResNet model show that it can correctly detect suspicious regions that may be signs of stomach cancer. By reducing false positives and false negatives, the model achieves the high sensitivity and specificity that are essential for accurate cancer detection.

The model consistently identified and categorized cases of stomach cancer from medical imaging data, as reflected in its F1 scores. Because the F1 score combines recall (sensitivity, the rate of true positive predictions) with precision (the avoidance of false positives), it provides a reliable indicator of the model's overall performance.
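For reference, the measures discussed in this section can all be computed from the four confusion-matrix counts. The sketch below shows the standard definitions. The counts passed in at the end are purely illustrative and are not results from this study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute standard binary classification metrics from confusion-matrix counts.

    Assumes every denominator is non-zero (at least one predicted positive,
    one actual positive, and one actual negative).
    """
    precision = tp / (tp + fp)                 # share of predicted positives that are correct
    recall = tp / (tp + fn)                    # sensitivity: share of actual positives found
    specificity = tn / (tn + fp)               # share of actual negatives correctly rejected
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of precision and recall
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "accuracy": accuracy,
        "f1": f1,
    }

# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=45, fp=5, fn=15, tn=35)
```

The F1 score is the harmonic mean of precision and recall, so it is high only when both are high. This makes it more informative than accuracy alone when cancerous and non-cancerous images are unevenly represented.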

VI. ACKNOWLEDGMENT

We would like to sincerely thank the authors whose works formed the foundation of our investigation. Our work has been greatly influenced by their dedication and effort in the machine learning and healthcare domains. The analytical writing of these authors has been extremely beneficial to us in the development and validation of our solution. We extend our gratitude to the medical practitioners and researchers who assisted in compiling and organizing the medical imaging datasets utilized in this study. Without their determination and commitment to the growth of medical knowledge, our attempts to develop innovative methods for early cancer diagnosis would not have been achievable.

Conclusion

In conclusion, after extensive training and validation, we have demonstrated that CNN-ResNet models can accurately detect stomach cancer from medical imaging data, such as endoscopic images. Given their performance, their ability to handle complex image data, and their capacity to learn discriminative features, medical professionals may find these models a helpful addition to their diagnostic toolkit. It is important to recognize the limitations of our investigation and the areas that require further exploration. Although our results show promise, additional validation on larger and more varied datasets is required to establish the reliability and adaptability of our model.

References

[1] Y. Sakai et al., "Automatic detection of early gastric cancer in endoscopic images using a transferring convolutional neural network," 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC)

[2] L. Li, M. Chen, Y. Zhou, J. Wang and D. Wang, "Research of Deep Learning on Gastric Cancer Diagnosis," 2020 Cross Strait Radio Science & Wireless Technology Conference (CSRSWTC)

[3] G. Cao, W. Song and Z. Zhao, "Gastric Cancer Diagnosis with Mask R-CNN," 2019 11th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC)

[4] S. Hatami, D. R. Shamsaee and M. Hasan Olyaei, "Detection and classification of gastric precancerous diseases using deep learning," 2020 6th Iranian Conference on Signal Processing and Intelligent Systems (ICSPIS), Mashhad, Iran, 2020

[5] H. Zhao, "Recognition and Segmentation of Gastric Tumor Based on Deep Residual Network," 2020 IEEE 3rd International Conference on Information Systems and Computer Aided Education (ICISCAE)

[6] S. Lafraxo and M. El Ansari, "GastroNet: Abnormalities Recognition in Gastrointestinal Tract through Endoscopic Imagery using Deep Learning Techniques," 2020 8th International Conference on Wireless Networks and Mobile Communications (WINCOM)

[7] S.-A. Lee, H. C. Cho and H.-C. Cho, "A Novel Approach for Increased Convolutional Neural Network Performance in Gastric-Cancer Classification Using Endoscopic Images," in IEEE Access

[8] B. Taha et al., "Automatic polyp detection in endoscopy videos: A survey," in IASTED Int. Conf. on BioMed, Feb. 2017.

[9] N. Tajbakhsh et al., "Convolutional Neural Networks for Medical Image Analysis: Full Training or Fine Tuning," IEEE Trans. Medical Imaging, 35, 5, pp. 1299-1312, Mar. 2016.

[10] S.J. Pan et al., "A survey on transfer learning," IEEE Trans. Knowl. Data Eng., 22, 10, pp. 1345-1359, Oct. 2010.

Copyright

Copyright © 2024 Madhuri Nallamothu, Sri Hari Prasada Rao Chennakesavula, Jahedunnisa Abdul, Dinesh Kandimalla, Venkata Veera Naga Sairam Atmuri, Harish Kolla. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET58759

Publish Date : 2024-03-03

ISSN : 2321-9653

Publisher Name : IJRASET
