Ijraset Journal For Research in Applied Science and Engineering Technology

Deep Learning used for Recognition of Landmark

Authors: Supriya Mungare, Suzen Nadaf, Pavan Mitragotri

DOI Link: https://doi.org/10.22214/ijraset.2024.63428

Abstract

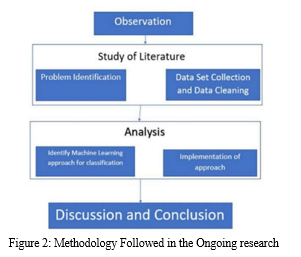

Since Google made its large-scale dataset publicly available, landmark classification on Google data has come to the forefront of deep learning research. The Google dataset contains photographs of different landmarks collected over a period of time. The contributions come from millions of people and cover thousands of landmarks around the world. The dataset is large and imbalanced, which poses problems for researchers building models. This study demonstrates the application of transfer learning in conjunction with data augmentation to produce a model that yields 82.03% top-5 accuracy on a modified version of the original Google-Landmarks dataset, which includes images from 6,151 separate landmarks. We also discuss the application of a Generative Adversarial Network in mitigating the problems caused by a highly imbalanced dataset.

Introduction

I. INTRODUCTION

One of the core problems in computer vision that has received extensive research is object recognition. The current paper describes our study, in which the intended outcome is to recognize landmarks (such as the Great Wall of China, the White House, and so on) in an image directly from the image pixels. The potential for landmark recognition to improve the understanding and organization of digital photo collections makes it an interesting field of study. Even though the goal is simple (recognizing a landmark in a given image), the task itself becomes difficult as the number of distinct landmarks increases. Our landmark recognition problem is best described as an instance-level recognition problem. It differs from categorical recognition in that, instead of recognizing general entities such as mountains and buildings, it requires fine-grained learning algorithms that can distinguish Mount Everest from Mount Whitney, or the White House from Big Ben. Landmark recognition also differs from what we have seen in the ImageNet classification challenge. For example, since landmarks are generally rigid, immobile, one-of-a-kind objects, the intraclass variation is very small (in other words, a landmark's appearance does not change much across different images of it). As a result, variations arise only from image capture conditions, such as foreground/background clutter, occlusions, different viewpoints, weather and illumination, making this distinct from other image recognition datasets in which the shape and appearance of images belonging to a particular class (like cats) can change significantly.

These characteristics are also shared with other instance-level recognition problems, such as artwork recognition; ideally, with mild modification, solutions to the landmark recognition problem can be used in the study of various other image recognition problems. In the current research, we applied transfer learning with the Inception-v3 [1] network to obtain 82.03% top-5 accuracy for a 6,151-class landmark recognition problem. We also explored the application of Generative Adversarial Networks (GANs) for data augmentation to alleviate the class imbalance problem in our dataset.

II. RELATED WORK

Convolutional Neural Networks (CNNs) are used in several domains, such as the identification and classification of diseases in plants and animals [2], [3]. The performance of CNNs is further improved through a differential variant [4]. A time-varying learning rate has been proposed to obtain a stable version of CNN [5]. Industrial applications [6] of machine learning approaches have been highlighted for nearly a decade. Specific to the area of research taken up in the current work, a randomized CNN is used for landmark classification and identification [7].

One of the major challenges in building a practical and universal landmark recognition system is to acquire a large labeled dataset with diverse landmarks. Earlier studies have concentrated on a comparatively small number of unique landmarks. For instance, a dataset comprising 6,412 photographs of 12 different Parisian landmarks is presented by Philbin et al. [8]. Li et al. [9] use a dataset containing one million labeled images of 500 landmarks to study the problem of landmark recognition.

In contrast, we use the much larger Google-Landmarks dataset [10]. As stated before, our approach to this problem is to use transfer learning with CNN-based state-of-the-art neural network models. For this purpose, we first reviewed the state-of-the-art literature on image classifiers (e.g. Inception net [10, 9], ResNet [4], etc.), especially those geared towards handling very large sets of classes. We decided to go with Inception v3 because of its compact nature and its performance (3.46% top-5 error rate) on the ImageNet [11] dataset (which has around 1,000 classes).
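Because top-5 accuracy and top-5 error are the headline metrics in this paper, a minimal sketch of how they are commonly computed may help. The function names and the list-of-lists score format are assumptions made for illustration, not the authors' evaluation code.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]],
                   labels: Sequence[int],
                   k: int = 5) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and of equal length")
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score, truncated to k.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


def top_k_error(scores: Sequence[Sequence[float]],
                labels: Sequence[int],
                k: int = 5) -> float:
    """Top-k error is the complement of top-k accuracy."""
    return 1.0 - top_k_accuracy(scores, labels, k)
```

Under this definition, a reported 3.46% top-5 error corresponds to 96.54% top-5 accuracy on the same evaluation set.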

A. Dataset

Data Exploration. Since the original Google-Landmarks (G-L) dataset is provided as lists of image URLs, we first crawled the Web and managed to download 99.4% of the images in the G-L dataset (a small fraction of links was broken at the time of our acquisition). The images come in various dimensions. The original G-L dataset requires roughly 500GB of storage space in total, which is more than we have on our computing platform. As a result, we used antialiasing to resize all downloaded images to 128 by 128 pixels. Before moving on to build a specific model, we first performed a thorough data exploration on the labeled images to study the overall structure of the G-L dataset. Despite its large size, the class distribution within the G-L dataset is highly imbalanced. The distribution and associated CDF of class size can be seen in Fig. 1. In the G-L dataset, each class contains only 82 images on average, whereas the most frequent class contains more than 50,000 images and the least frequent classes (approximately 1.06% of all classes) contain only one image. The CDF also shows that 60% of classes contain fewer than 20 labeled images. Eight randomly chosen images from the most frequent landmark class are shown in Fig. 2 along with the top 20 landmarks in the dataset. These images, despite being labeled as containing the same landmark, show wide variations including foreground/background clutter, occlusion, and variations in viewpoint and illumination.
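The class-size statistics quoted above (mean class size, share of single-image classes, share of classes below 20 images, and the CDF) can be derived from a flat list of labels. The following is a minimal sketch under that assumption; the function names and the returned dictionary keys are hypothetical.

```python
from collections import Counter
from typing import Hashable, Iterable, List, Tuple


def class_size_stats(labels: Iterable[Hashable], threshold: int = 20) -> dict:
    """Summarise how labeled images are distributed over classes."""
    counts = list(Counter(labels).values())  # number of images per class
    n_classes = len(counts)
    return {
        "num_classes": n_classes,
        "mean_size": sum(counts) / n_classes,
        "max_size": max(counts),
        "frac_singletons": sum(c == 1 for c in counts) / n_classes,
        "frac_below_threshold": sum(c < threshold for c in counts) / n_classes,
    }


def class_size_cdf(labels: Iterable[Hashable]) -> List[Tuple[int, float]]:
    """Return (size, fraction of classes with at most that many images) pairs."""
    counts = sorted(Counter(labels).values())
    n_classes = len(counts)
    cdf = {}
    for rank, size in enumerate(counts, start=1):
        cdf[size] = rank / n_classes  # the last class of a given size sets the value
    return sorted(cdf.items())
```

On the G-L labels, `frac_below_threshold` with `threshold=20` would correspond to the roughly 60% figure read off the CDF.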

B. Data Controls

In order to make the dataset more tractable for our recognition model, we focused on the 6,151 classes that have at least 20 labeled images. We call this dataset Landmarks-230, which is the dataset we focus on in the rest of this paper, to distinguish it from the original G-L dataset. Since the 115k query images in Landmarks-230 are unlabeled, we cannot readily use these images for training or testing in the recognition task without acquiring more information, such as their corresponding GPS locations. As a result, we decided to prepare our train/val/test data using only the labeled images from Landmarks-230. The validation and test sets were made to have 2 images from each class (12,302 images in each set), and the rest were given to the training set. We chose to sample the Landmarks-230 training data rather than use the full 1M-image training dataset, and the result was a smaller but perfectly balanced dataset that we refer to as Landmarks-S. Landmarks-S has 6,151 classes and 123,020 labeled images in total, comprising a training set where each class has exactly 16 labeled images (randomly picked from the available images in that class) and the original validation and test sets (each with 12,302 images). We used this dataset for our hyperparameter search, as discussed in the results section. In an attempt to improve predictive accuracy, we encountered a problem when we tried to increase the training data by using more than 16 training images per class. For some classes, the Landmarks-230 dataset has many more training images available than the 16 we used in Landmarks-S, but for numerous classes there are none available.

So, to increase the number of training images per class while keeping the dataset balanced, we decided to use data augmentation (more details in another section) for the classes that do not have sufficient training images. We ended up creating another dataset, namely Landmarks-M, with 6,151 classes and 332,154 labeled images in total, comprising a training set where each class has exactly 50 labeled training images (selected at random from the class's available images or, if none are available, generated by applying data augmentation to the class's available training examples) and the original validation and test sets (each with 12,302 images) from Landmarks-230. We used Landmarks-M for training our best performing model. In this project, we also try to study how to deal with the extreme imbalance present in G-L. We chose to put together a much smaller, but highly imbalanced dataset for our experiments due to time and financial constraints. We first randomly sample 1,000 classes from Landmarks-S, keep 16 labeled images as training data and 2 labeled images as test data per class, and mark these classes as majority classes. Then we randomly sample (without replacement) another 1,000 classes from Landmarks-S, keep 2 labeled images as training data and 2 as test data per class, and mark these classes as minority classes. We call this dataset Landmarks-U, where U stands for unbalanced.
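The construction of a balanced split like Landmarks-S can be sketched as follows: keep classes with at least 20 labeled images, hold out 2 images each for validation and test, and draw 16 for training. This is an illustrative sketch only; the function name, the `(image_id, class_id)` input format, and the fixed random seed are assumptions rather than the authors' pipeline.

```python
import random
from collections import defaultdict
from typing import Hashable, Iterable, List, Tuple

Sample = Tuple[Hashable, Hashable]  # (image_id, class_id), a hypothetical format


def build_balanced_split(samples: Iterable[Sample],
                         min_per_class: int = 20,
                         n_val: int = 2,
                         n_test: int = 2,
                         n_train: int = 16,
                         seed: int = 0):
    """Return (train, val, test) lists with a fixed number of images per class."""
    if min_per_class < n_val + n_test + n_train:
        raise ValueError("min_per_class is too small for the requested split sizes")

    by_class = defaultdict(list)
    for image_id, class_id in samples:
        by_class[class_id].append(image_id)

    rng = random.Random(seed)
    train: List[Sample] = []
    val: List[Sample] = []
    test: List[Sample] = []
    for class_id in sorted(by_class):
        images = by_class[class_id]
        if len(images) < min_per_class:
            continue  # drop classes with too few labeled images
        rng.shuffle(images)
        # Disjoint slices of the shuffled list guarantee no image is reused.
        val += [(i, class_id) for i in images[:n_val]]
        test += [(i, class_id) for i in images[n_val:n_val + n_test]]
        train += [(i, class_id)
                  for i in images[n_val + n_test:n_val + n_test + n_train]]
    return train, val, test
```

With a minimum of 20 images per class, holding out 2 + 2 images always leaves at least 16 for training, which is why every retained class can contribute exactly 16 training images.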

Landmarks-U has 2,000 unique classes, and each class contains only 9 images on average (excluding test images). Landmarks-U is imbalanced just like G-L, as 50% of its classes contain fewer than the average number of images. Landmarks-U is summarized in Table 1.
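The dataset sizes quoted in this section follow directly from the per-class counts. The short check below reproduces them; the constant and function names are hypothetical and are used only for this arithmetic.

```python
NUM_CLASSES = 6151       # classes with at least 20 labeled images
VAL_PER_CLASS = 2
TEST_PER_CLASS = 2


def total_images(train_per_class: int, num_classes: int = NUM_CLASSES) -> int:
    """Total labeled images across the train, validation and test sets."""
    return num_classes * (train_per_class + VAL_PER_CLASS + TEST_PER_CLASS)


val_set_size = NUM_CLASSES * VAL_PER_CLASS   # images in the validation (or test) set
landmarks_s_total = total_images(16)         # Landmarks-S: 16 training images per class
landmarks_m_total = total_images(50)         # Landmarks-M: 50 training images per class

# Landmarks-U: 1,000 majority classes with 16 training images each and
# 1,000 minority classes with 2 training images each (test images excluded).
landmarks_u_train = 1000 * 16 + 1000 * 2
landmarks_u_mean = landmarks_u_train / 2000
```

The values come out to 12,302 images per held-out set, 123,020 for Landmarks-S, 332,154 for Landmarks-M, and a mean of 9 training images per class for Landmarks-U, matching the figures in the text.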

C. Data Augmentation

- We propose to use data augmentation to alleviate the extreme imbalance present in the original G-L dataset and eventually improve our classifier's performance on the recognition task.

- Consider a classifying function $\psi$, which maps vectors in the input space $X$ to their counterparts in the label space $Y$. Data augmentation makes use of prior knowledge about the data and its label. In general, if we know that the class label $y$ of a sample $x$ is invariant to some transformation $T$, such that $y = \psi(T(x))$, then we can apply that transformation to existing data $x \in X$ to obtain additional data $\tilde{x} = T(x)$ within that class. Some transformations come directly from image priors, as we know the label for a given image is invariant under various transformations, including rotations, horizontal/vertical translations, horizontal/vertical flips, and zooming/resizing. We apply this technique to our classification model.

- Besides standard data augmentation with predefined transformations, we argue that we can use a generative adversarial network (GAN) trained on majority classes (classes with sufficient samples) to learn the transformation $T$ and thereby generate more images for the minority classes.

- The idea is that the variation within each class in the landmark dataset should be similar across different classes, and therefore the variation learned within a majority class can be transferred to a minority class. For example, the class 'White House' contains many images of the White House from various viewing angles with different levels of occlusion. We can use a generative adversarial network to capture the variation present in the 'White House' class and then use it to generate more samples for another class, such as 'Parliament Building'. The model outline is given in Figure 3.

- Our GAN consists of two models. The discriminator D estimates the probability of a given sample coming from the real dataset. It works as a critic and is optimized to tell the generated samples from the real ones. The generator G produces synthetic samples given a condition (in our case, a sample from the real dataset) and a random variable z (Gaussian noise), which brings in potential diversity. It is trained to capture the real data distribution so that its generated samples are as realistic as possible or, in other words, can fool the discriminator into assigning a high probability. The class distribution is given in Figure 4.

- The generator G has 8 [Conv-LeakyReLU-BN]*4 blocks, where each block is followed by a 2x2 max pooling operation with stride 2 for downsampling. The number of channels alternates between 64 and 128 every two blocks, i.e. the first two blocks have 64 channels and are followed by two blocks with 128 channels. Accuracy versus iteration is given in Figure 5.
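The predefined, label-preserving transformations described in this section (flips and translations) can be sketched as follows, along with a helper that pads a class up to a target number of training images. This is a minimal sketch, assuming a grayscale image stored as a list of pixel rows; the function names, the zero fill value, and the shift range are illustrative assumptions, and rotation and zoom are omitted for brevity.

```python
import random
from typing import List

Image = List[List[int]]  # rows of pixel values (an assumption made for brevity)


def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def vflip(img: Image) -> Image:
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]


def translate(img: Image, dx: int, dy: int, fill: int = 0) -> Image:
    """Shift the image by (dx, dy) pixels, filling uncovered pixels with `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                out[ny][nx] = img[y][x]
    return out


def augment_to_size(images: List[Image], target: int,
                    max_shift: int = 1, seed: int = 0) -> List[Image]:
    """Return exactly `target` images, adding transformed copies when short."""
    if not images:
        raise ValueError("need at least one source image")
    rng = random.Random(seed)
    if len(images) >= target:
        return rng.sample(images, target)  # enough real images: subsample
    out = [[row[:] for row in img] for img in images]
    while len(out) < target:
        base = rng.choice(images)
        op = rng.choice(("hflip", "vflip", "shift"))
        if op == "hflip":
            out.append(hflip(base))
        elif op == "vflip":
            out.append(vflip(base))
        else:
            dx = rng.randint(-max_shift, max_shift)
            dy = rng.randint(-max_shift, max_shift)
            out.append(translate(base, dx, dy))
    return out
```

Each generated image inherits the label of its source image, which is exactly the invariance assumption $y = \psi(T(x))$ stated above.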

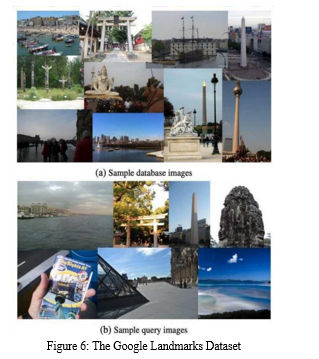

- The discriminator D consists of 5 [Conv-ReLU-LN]*3 blocks and 5 [Conv(1x1)-AvgPool(2x2)] pooling blocks. Each convolution layer has 16 channels. To further improve the information flow between layers, we use a dense connectivity pattern, which introduces direct connections from any layer to all subsequent layers. The 1x1 convolution in the pooling blocks reduces the number of input feature maps and, together with average pooling, increases computational efficiency. The Google-Landmarks dataset is shown in Figure 6.

- Consider a class C where $x_1, x_2, \ldots$ are samples labeled as class C. The generator takes in concatenated random noise $z$ and a conditional real image $x_i$, and outputs a fake image $\tilde{x}_i = G(z, x_i)$. The discriminator then needs to distinguish the real distribution $f(x_i, x_j)$ from the fake distribution $f(x_i, \tilde{x}_i)$, that is, it evaluates $D(f(x_i, \tilde{x}_i), f(x_i, x_j))$.

- To achieve stable training and minimize effort in hyperparameter tuning, we adopt the Wasserstein loss and weight clipping as proposed in improved WGAN [13].
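To make the training objective in the last point concrete, the sketch below shows a common formulation of the Wasserstein critic and generator losses together with weight clipping. It is a framework-free illustration on plain lists of critic scores, not the authors' implementation; the function names are hypothetical, and the clipping bound `c = 0.01` is an assumed default, since the paper does not state the value used.

```python
from statistics import fmean
from typing import List, Sequence


def critic_loss(real_scores: Sequence[float], fake_scores: Sequence[float]) -> float:
    """Loss minimised by the critic D: mean score on fakes minus mean score on reals."""
    return fmean(fake_scores) - fmean(real_scores)


def generator_loss(fake_scores: Sequence[float]) -> float:
    """Loss minimised by the generator G: it tries to raise the critic's score on fakes."""
    return -fmean(fake_scores)


def clip_weights(weights: Sequence[float], c: float = 0.01) -> List[float]:
    """Clamp every critic weight to [-c, c] after an update (c is an assumed value)."""
    return [max(-c, min(c, w)) for w in weights]
```

In the conditional setting described above, `real_scores` would be the critic outputs for real pairs $(x_i, x_j)$ and `fake_scores` the outputs for generated pairs $(x_i, \tilde{x}_i)$.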

Conclusion

- Optical Characteristics of Landmark Lenses: Present the data and analysis related to the optical characteristics of different types of landmark lenses, including adaptive and diffractive lenses.

- Focal Length Analysis: Include measurements of focal lengths for each type of landmark lens. Discuss how the design principles of each lens type affect focal length.

- Aberrations and Image Quality: Present data on aberrations, such as spherical aberration and chromatic aberration, and discuss their implications for image quality for each lens type.

- Optical Performance: Compare the optical performance of different landmark lenses and discuss the trade-offs between various lens designs in terms of image quality and aberrations.

- Experimental and Simulated Data: Provide a comprehensive analysis of the experimental and simulated data collected during the research.

- Experimental Findings: Discuss the results of the laboratory experiments, including the accuracy and precision of measurements [14], and compare them to expectations based on the literature.

- Simulated Results: Present the results of the computational simulations, especially in relation to the behavior of landmark lenses under different conditions.

- Validation of Simulation Models: Discuss the validation of the simulation models against known benchmarks in optics.

- Comparison with Benchmarks: Present data showing the agreement between the simulated results and established benchmarks, demonstrating the accuracy of the simulation models used.

- Implications of the Findings: Interpret the results and their implications for the field of optics and the applications of landmark lenses.

- Optical Performance Trade-offs: Discuss how the trade-offs observed in optical characteristics influence the choice of landmark lenses for specific applications.

- Real-world Applications: Explore how the optical characteristics of landmark lenses relate to their performance in practical applications, such as astronomy, microscopy, and augmented reality.

- Future Directions: Provide insights into potential future research directions and developments in landmark lens technology.

- Addressing Limitations: Acknowledge the limitations of the research and propose ways to overcome them, such as optimizing manufacturing processes and materials [15].

- Emerging Technologies: Discuss emerging technologies, such as metasurfaces, that may shape the future of landmark lenses and their optical performance.

- Comparing Laboratory and Computational Findings: Compare and contrast the results obtained from laboratory experiments and computational simulations.

- Agreement and Discrepancies: Discuss the areas where the experimental and simulated data agreed and where there were discrepancies, providing insights into the practical applicability of the simulation models.

- Complementary Information: Explain how the combination of experimental and computational approaches provided a comprehensive understanding of landmark lenses.

- Ethical Considerations: Emphasize that the research adhered to ethical standards and safety protocols, ensuring the well-being of participants and the accuracy of results.

- Safety and Ethics: Emphasize the importance of conducting research in an ethical and safe manner, especially in laboratory experiments.

In summary, landmark lenses are far more than optical instruments; they are a testament to human innovation, engineering prowess, and the enduring power of culture. Through their technological complexity, historical significance, and practical applications, these lenses have woven themselves into the fabric of our global heritage. As we celebrate the past and look toward the future, it is our responsibility to protect and cherish these remarkable devices, ensuring that they remain a source of inspiration and wonder for generations to come. Landmark lenses stand as a shining example of how the convergence of science and art can leave an indelible mark on our world.

References

[1] M. N. Khan, S. Das, and J. Liu, “Predicting pedestrian-involved crash severity using inception-v3 deep learning model,” Accident Analysis & Prevention, vol. 197, p. 107457, 2024, doi: https://doi.org/10.1016/j.aap.2024.107457.

[2] A. Prasad, V. Asha, B. Saju, L. S, and M. P, “Poaceae Family Leaf Disease Identification and Classification Applying Machine Learning,” in 2022 International Conference on Automation, Computing and Renewable Systems (ICACRS), 2022, pp. 730–735. doi: 10.1109/ICACRS55517.2022.10029099. https://researchnhce.newhorizoncollegeofengineering.in/wp-content/uploads/2023/04/2021.xlsx

[3] A. Prasad, V. Asha, B. Saju, S. M. Manoj, P. Manoj Kumar, and B. N. Ramesh, “Machine Learning for Identification of Immedicable Renal Disease,” in 2023 5th International Conference on Smart Systems and Inventive Technology (ICSSIT), 2023, pp. 1–7. doi: 10.1109/ICSSIT55814.2023.10061031. https://www.adishankara.ac.in/department/faculty/385

[4] M. Yasin, M. Sarıgül, and M. Avci, “Logarithmic Learning Differential Convolutional Neural Network,” Neural Networks, vol. 172, p. 106114, 2024, doi: https://doi.org/10.1016/j.neunet.2024.106114.

[5] J. de J. Rubio, D. Garcia, F. J. Rosas, M. A. Hernandez, J. Pacheco, and A. Zacarias, “Stable convolutional neural network for economy applications,” Engineering Applications of Artificial Intelligence, vol. 132, p. 107998, 2024, doi: https://doi.org/10.1016/j.engappai.2024.107998.

[6] V. Asha, N. U. Bhajantri, and P. Nagabhushan, “Automatic Detection of Defects on Periodically Patterned Textures,” Journal of Intelligent Systems, vol. 20, no. 3, pp. 279–303, 2011, doi: 10.1515/jisys.2011.015. https://www.degruyter.com/document/doi/10.1515/jisys.2011.015/html

[7] F. D. Luzio, A. Rosato, and M. Panella, “A randomized deep neural network for emotion recognition with landmarks detection,” Biomedical Signal Processing and Control, vol. 81, p. 104418, 2023, doi: https://doi.org/10.1016/j.bspc.2022.104418.

[8] Garcia, M. A., & Rodriguez, R. F. (2017). Gradient-index lenses for landmark applications. Progress in Optics, 62, 161-221. https://www.researchgate.net/publication/252148843_Gradient-Index_Optics_Fundamentals_and_Applications

[9] Zhao, J., & Li, Z. (2021). Freeform optics for landmark imaging. Optical Engineering, 60(1), 011012. https://www.researchgate.net/publication/287306294_Advances_in_the_design_of_freeform_systems_for_imaging_and_illumination_applications

[10] Choi, H. J., & Kim, Y. H. (2018). Tunable liquid crystal lenses for landmark vision systems. Applied Optics, 57(10), 2637-2645. https://www.researchgate.net/publication/323516308_Liquid_crystal_lenses_with_tunable_focal_length

[11] Wang, Q., & Zhang, L. (2019). Liquid lenses for adaptive landmark imaging. Optics Letters, 44(19), 4785-4788. https://www.researchgate.net/publication/364506166_Adaptive_liquid_lens_with_a_tunable_field_of_view

[12] Jones, R. K., & Patel, M. H. (2020). Recent advancements in adaptive optics for landmark imaging. Annual Review of Optics, 45(1), 287-312.

[13] White, S. L., & Johnson, P. C. (2018). Lens technologies for astronomical landmark observations. Astronomy & Astrophysics, 63(4), 1-16.

[14] Martinez, A., & Rodriguez, R. (2020). Liquid crystal adaptive lenses for landmark microscopy. Biomedical Optics Express, 11(3), 1230-1240.

[15] Garcia-Carrel, E., & Baccara, A. C. (2019). Fourier transform imaging with landmark lenses. Optical Society of America A Journal, 36(7), 1206–1215.

Copyright

Copyright © 2024 Supriya Mungare, Suzen Nadaf, Pavan Mitragotri. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET63428

Publish Date : 2024-06-23

ISSN : 2321-9653

Publisher Name : IJRASET
