IJRASET Journal for Research in Applied Science and Engineering Technology


Deepfake Detection System

Authors: Mr. Yogesh Rai, Mr. Aditya Shinde, Mr. Pranav Patil, Prof. Preeti Komati

DOI Link: https://doi.org/10.22214/ijraset.2024.62007


Abstract

Deepfake technology poses a severe threat to modern society. It can produce highly realistic manipulated material that may mislead viewers and cause instability across a range of sectors. Detecting such manipulated content is therefore crucial to maintaining the trustworthiness and integrity of digital media.

In response, this research proposes a robust deep learning-based technique for detecting deepfakes in videos. Our method uses the Deepfake Detection Challenge dataset, which contains both real and deepfake videos, to train and evaluate our deep learning model. Our aim is a dependable solution that uses state-of-the-art neural networks to distinguish artificially generated content from authentic content.

Deep learning algorithms make AI-generated fake videos easier to produce and spread. This raises serious concerns about blackmail, terrorist propaganda, revenge pornography, and political manipulation. To address these concerns, our approach is designed to automatically identify several forms of deepfake forgery, including face replacement and reenactment techniques.

Through extensive testing and analysis, we demonstrate the effectiveness of our system in distinguishing fake videos from authentic ones. By combining current deep learning methods with a large dataset, the system offers a promising way to reduce the hazards posed by the spread of deepfake technologies.


I. INTRODUCTION

A. Overview

The human face is a person's most distinguishing feature. As face synthesis technology advances rapidly, the security risk posed by face manipulation grows. "Deepfake" refers to a kind of AI technology that makes it possible to superimpose one person's face onto another's without that person's consent.

Deep learning is a powerful and practical technology that has been applied to machine learning, computer vision, natural language processing, and other fields. Advances in deep learning have made editing digital data and creating artificial content easier than ever. Deepfake technology is a rapidly emerging field that can create believable audio, images, and videos that are difficult to distinguish from the real thing.

The rise of deepfakes has sparked concerns about the spread of misinformation, propaganda, and fake news. It has also raised the potential for fraud, extortion, and other malicious acts. It is therefore critical for governments, media and technology companies, and ordinary people to be able to recognize deepfakes.

Deepfake detection techniques are emerging quickly. They use computer vision, forensic analysis, and machine learning to discern minute differences between real and fake media. Detection is difficult because deepfakes can be very convincing: the cues that betray them are subtle visual or audio artifacts that humans rarely notice. Moreover, because deepfake generation methods keep changing, traditional detection techniques may not be enough. Engineers and researchers have developed a number of detection techniques to address these problems, including machine learning-based algorithms, image and video analysis, and audio analysis.
Research on deepfake detection remains very active, and new methods are developed regularly to keep pace with the rapid advancement of deepfake technology. Accurately identifying deepfakes is essential to preserving the integrity of digital media and preventing its malicious use.

B. Background

Deepfake detection systems are designed to identify deepfake videos, that is, altered or synthetic videos created with deep learning algorithms. They use complex algorithms and machine learning models to analyze the audio and visual information in a video. They look for anomalies, inconsistencies, and artifacts that indicate manipulation. These systems employ deep learning models such as convolutional neural networks (CNNs) to identify fakes accurately and support efforts to combat the dissemination of fraudulent content.

C. Problem Statement

The aim is to develop and deploy a deepfake detection system that uses deep learning to determine whether a video is real or fake, accurately distinguishing original from manipulated material. AI-generated deepfakes have made such fake videos harder to identify, with harmful effects on the media, politics, and cybersecurity, among other fields.

D. Goal

The main objective of deepfake detection is to create dependable and efficient techniques for detecting manipulated media in order to lessen the possible risks connected to the dissemination of deepfake material.

II. LITERATURE SURVEY

Deepfakes are AI-generated videos or images in which authentic content is modified or replaced with synthetic material. The literature emphasizes the need for effective detection methods to counter possible abuse of deepfakes in contexts such as politics, entertainment, and cybersecurity. A review of deepfake detection algorithms shows a growing body of research devoted to curbing the spread of synthetic media.

Researchers have explored a number of detection techniques that leverage advances in machine learning, computer vision, and signal processing. One of the most prevalent is using deep neural networks to distinguish legitimate from fake media. Convolutional Neural Networks (CNNs), for example, have been used widely because they can automatically learn discriminative features from images and videos. Studies have demonstrated their effectiveness in detecting the anomalies and inconsistencies that may indicate deepfake manipulation.

Studies also highlight the importance of a large and diverse training dataset. Researchers emphasize that large-scale datasets covering a range of deepfake variations are necessary to improve the generalizability and robustness of detection methods. Such datasets typically include videos synthesized with several approaches, such as face swapping, lip-syncing, and facial reenactment, to represent a wide range of deepfake scenarios.

Beyond CNNs, researchers have investigated architectures and frameworks designed specifically for deepfake detection. Generative models such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) have also been repurposed to generate synthetic media. This serves two ends: analyzing the deepfake creation process and training detectors.

By using these generative models, researchers can learn more about the underlying traits and patterns of deepfake content.

The literature also shows that deepfake detection research is interdisciplinary, drawing on expertise from sociology, psychology, and ethics. The ethical ramifications of deepfakes and their possible influence on society further encourage researchers to develop responsible detection techniques. Understanding the sociocultural and psychological effects of deepfakes is necessary to build a complete detection system that addresses both technical and broader social issues.

In summary, the literature portrays deepfake detection as a complex field in which data diversity, technical advancement, interdisciplinary collaboration, and ethical awareness converge. Sustained effort in this area is needed to reduce the dangers of manipulated synthetic media.

[1] Recent developments in generative AI have heightened concerns about the spread of highly realistic DeepFake content, prompting intense study of advanced machine learning-based detection methods. Combining multiple media modalities has received particular attention as a way to improve detection. In their thorough analysis, Tony Jan, Scott Thompson Whiteside, and Mukesh Prasad survey this area and examine the most recent tactics and approaches used for successful DeepFake detection.

[2] In the survey "Deep Learning for Deepfakes Creation and Detection," Thanh Thi Nguyen, Quoc Viet Hung Nguyen, Cuong M. Nguyen, and Dung Nguyen analyze the deepfake landscape comprehensively, including its history and the latest developments in detection techniques. By synthesizing existing state-of-the-art approaches and background knowledge, the study aims to support new, robust solutions to the growing complexity of deepfake threats.

[3] In their survey "DeepFake Creation and Detection," Swathi P and Saritha Sk examine the persistent challenge of distinguishing DeepFake content from genuine material, a task that is difficult for the human eye. Recent technological advances have shown promising results, albeit with certain constraints. The paper reviews algorithms used for both creating and detecting DeepFakes, summarizing their respective strengths and limitations.

[4] In "Two-Stream Neural Networks for Tampered Face Detection," Peng Zhou, Xintong Han, Vlad I. Morariu, and Larry S. Davis present a two-stream network architecture for detecting tampered faces. One stream is a patch-based triplet network that extracts features related to local noise residuals and camera characteristics. The other uses GoogLeNet to detect tampering artifacts in the face. Combining the two streams lets the method exploit different visual clues of tampering, improving the robustness and accuracy of detection.

III. ANALYSIS OF PROPOSED MODEL

A. Feasibility

- Technological Advancements: The creation of deepfake detection algorithms is becoming more and more possible due to the quick development of machine learning and deep learning techniques as well as the accessibility of powerful computing resources.

- Data Accessibility: To train and assess deepfake detection models, a variety of labeled datasets comprising real and altered media must be made available. Although gathering these kinds of datasets can be difficult, there are initiatives in place to provide repositories of deepfake data for scientific uses.

- Algorithmic Development: Researchers continue to devise new approaches for identifying deepfakes, including detection methods based on audio and visual cues. These developments make reliable detection systems more feasible.

- Computational Resources: Training and deploying deepfake detection models at scale is made possible by the availability of cloud computing services and specialized hardware accelerators (such as GPUs and TPUs), even if deep learning methods can be computationally demanding.

- Integration with Current Systems: Deepfake detection can be integrated into existing programs and platforms, including content management systems, social media networks, and video-streaming websites. This integration makes it more feasible to deploy detection techniques that counter the proliferation of deepfake content.

- Cooperation: Collaboration among researchers, industry, and policymakers increases the likelihood of building practical deepfake detection systems. Collaborative projects can accelerate progress in this field by pooling resources, knowledge, and data.

- Ethical and Legal Considerations: Ethical and legal issues such as consent, privacy protection, and potential biases must be addressed to ensure that deepfake detection systems are deployed responsibly.

- User Awareness and Education: Increasing users' knowledge of deepfakes, their possible dangers, and how to recognize manipulated content will help curb the spread of disinformation. Raising user awareness also makes it more feasible to rely on detection technologies as part of a broader anti-deepfake strategy.

B. Scope

1. Goals: Recognize and categorize manipulated media to tell authentic material from deepfakes. Provide strong detection techniques that can identify several kinds of deepfake manipulation, such as voice impersonation, lip-syncing, and face swapping.

2. Media Categories

- Photographs: Recognizing alterations to faces, body parts, and the scene in still images.

- Videos: Detecting lip-syncing, facial reenactment, and temporal inconsistencies.

- Audio: Identifying synthetic speech, voice mimicry, and audio manipulation in recordings.

3. Modes of Detection

- Visual Analysis: Using computer vision techniques to examine visual clues such as pixel-level anomalies, blinking patterns, and facial expressions.

- Audio Analysis: Evaluating auditory features such as pitch changes, spectral properties, and voice quality with machine learning and signal processing techniques.

- Multi-modal Fusion: Combining data from the auditory and visual modalities to improve detection robustness and accuracy.

4. Technological Methods

- Deep Learning: Using convolutional neural networks (CNNs), recurrent neural networks (RNNs), or transformer architectures to extract discriminative features from data and classify deepfake content.

- Conventional Machine Learning: Using feature engineering, statistical techniques, and traditional classifiers (such as SVMs and decision trees) to identify anomalies suggestive of deepfake manipulation.

- Ensemble Methods: Combining several models or detection algorithms to improve overall performance and reliability.

5. Uses

- Social Media Platforms: Integrating deepfake detection tools to help spot and stop the spread of fake material on sites such as YouTube, Facebook, and Twitter.

- Forensic Analysis: Helping law enforcement organizations and digital forensics specialists find manipulated media evidence during investigations.

- Content Moderation: Supporting content-hosting platforms in enforcing policies against the distribution of dangerous or deceptive deepfakes.
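The blinking-pattern cue listed under Visual Analysis can be illustrated with the eye aspect ratio (EAR), a widely used landmark-based measure of eye openness. The paper does not describe this technique; it is a minimal sketch under several assumptions:

- Six eye landmarks per frame are already available from some facial-landmark detector (not shown).
- The 0.2 threshold and the two-frame minimum are illustrative values, not tuned ones.

An unnaturally low blink count over a long clip is at most one hint of manipulation, not proof.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _dist(a: Point, b: Point) -> float:
    """Euclidean distance between two 2-D points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(eye: List[Point]) -> float:
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|).

    Assumes the common 6-landmark eye ordering: p1 and p4 are the eye
    corners, (p2, p6) and (p3, p5) are upper/lower eyelid pairs.
    The value falls toward 0 as the eyelids close.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = _dist(p2, p6) + _dist(p3, p5)
    horizontal = _dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: List[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of >= min_frames consecutive frames whose EAR is below threshold.

    threshold and min_frames are illustrative, untuned assumptions.
    """
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # eye still closed when the clip ends
        blinks += 1
    return blinks
```

In practice `eye_aspect_ratio` would be computed once per frame and the resulting series passed to `count_blinks`; the blink rate could then serve as one feature among many.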

C. Challenges

Here are some challenges associated with this model:

- Adversarial Attacks: Deepfake creators may use adversarial techniques, tampering with the input data or with the detection system itself, to evade detection. Adversarial examples can be crafted that trick detection methods, resulting in false negatives or reduced detection accuracy.

- Data Diversity and Availability: Access to sizable and varied datasets comprising real and altered material in a range of modalities (such as photos, videos, and audio) is necessary for developing strong deepfake detection models. However, due to ethical issues, privacy concerns, and the lack of annotated datasets, gathering labeled data for deepfake detection system training can be difficult.

- Generalization to Unknown Manipulation Techniques: Models trained on current manipulation techniques may struggle to adapt to new and emerging methods for creating deepfakes. Adapting detection methods to identify unknown or zero-day manipulation techniques remains an open problem.

- Computational Resources and Efficiency: Deepfake detection can involve computationally demanding operations. This is especially true when processing large volumes of multimedia data in real time or analyzing high-resolution videos. Scalability and efficiency become crucial when deploying detection systems at scale, especially in resource-constrained settings.

- Interpretability and Explainability: Deep learning-based detectors are often regarded as "black boxes," which makes it difficult to understand how decisions are made and why a particular input is flagged. To foster trust and enable human oversight, deepfake detection systems must provide interpretability and transparency.

- Cross-Modal Detection: Integrating information from several modalities (e.g., visual and auditory) for cross-modal deepfake detection raises additional issues. These include feature fusion, alignment of heterogeneous data, and modality-specific vulnerabilities.

- Implications for Society and Ethics: Deepfake detection raises issues of consent, privacy, and possible abuse. Ethical norms and careful consideration are needed to balance the protection of individual rights and freedoms against the need to detect and mitigate harmful deepfakes.

- Cat-and-Mouse Game: Deepfake generation techniques and detection technologies keep evolving. As a result, deepfake producers and detection researchers are locked in an ongoing game of cat and mouse. Keeping detectors effective as deepfake technology improves is a constant challenge.
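To make the adversarial-attack challenge concrete, here is a toy sketch of the Fast Gradient Sign Method (FGSM). It attacks a hypothetical logistic-regression "detector" that scores a three-number feature vector. The weights, bias, and inputs are invented for illustration and are not from this paper. Real attacks target deep networks on pixel inputs, where the per-pixel perturbation can be far smaller because its effect accumulates over thousands of dimensions.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_fake(w: List[float], b: float, x: List[float]) -> float:
    """Probability that feature vector x is fake under a toy logistic-regression detector."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def _sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w: List[float], b: float, x: List[float], y_true: int, eps: float) -> List[float]:
    """Fast Gradient Sign Method: x_adv = x + eps * sign(dL/dx).

    For binary cross-entropy loss with p = sigmoid(w.x + b), the gradient
    with respect to the input is dL/dx = (p - y_true) * w. Stepping along its
    sign increases the loss, pushing a "fake" input toward a "real" verdict.
    """
    p = predict_fake(w, b, x)
    grad = [(p - y_true) * wi for wi in w]
    return [xi + eps * _sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical detector parameters and a "fake" sample (label 1).
w, b = [2.0, -1.0, 1.5], -0.9
x_fake = [0.6, 0.2, 0.4]
x_adv = fgsm(w, b, x_fake, y_true=1, eps=0.2)
print(round(predict_fake(w, b, x_fake), 3), round(predict_fake(w, b, x_adv), 3))  # 0.668 0.45
```

Each feature moves by at most `eps`, yet the detector's verdict flips from fake to real. That is exactly the false-negative failure mode described under Adversarial Attacks.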

D. Implications

Here are several applications of deepfake detection systems across different domains:

- Social Media Sites: Deepfake detection algorithms can be integrated into well-known social media sites like Facebook, Twitter, and YouTube to automatically identify or remove faked content. This promotes platform integrity, protects user trust, and stops false information from spreading.

- News and Journalism: Before publishing or broadcasting, news organizations and journalists can use deepfake detection technologies to verify the authenticity of user-generated or potentially manipulated media. This helps ensure that the public receives accurate and trustworthy information.

- Digital Forensics and Law Enforcement: Forensic analysts and law enforcement organizations investigating cases that involve tampered audiovisual evidence can benefit from deepfake detection technology. Detecting deepfake manipulation helps maintain the integrity of the justice system and strengthens legal proceedings.

- Elections & Political Campaigns: Disinformation campaigns and political propaganda are surging. Deepfake detection systems can help identify and halt the spread of manipulated videos or images that target political figures or candidates. This promotes transparency and helps prevent manipulation of public opinion.

- Entertainment Industry: Movie studios, entertainment platforms, and content creators can use deepfake detection to safeguard intellectual property rights. It can help prevent unlawful use of celebrity likenesses or copyrighted material in deepfake videos.

- Cybersecurity and Fraud Detection: Deepfake detection can help identify identity theft, phishing schemes, and other cybercrimes that use manipulated media to deceive individuals or businesses. Recognizing deepfake content strengthens cybersecurity procedures and reduces potential hazards.

- Education and Awareness: Deepfake detection technologies can be included into educational programs and awareness campaigns to educate the public about the existence of deepfakes, their potential impact on society, and how to critically examine media content for authenticity.

IV. REQUIREMENTS SPECIFICATION

A. Software Requirements

- Operating System: Windows 10 (64-bit)

- Programming Language: Python 3.7 or 3.8

- IDE: Spyder

- Libraries: Keras, NumPy, OpenCV, TensorFlow

B. Hardware Requirements

- Processor: Intel Core

- Hard Drive: 500 GB

- Clock Speed: 2.80 GHz

- Memory: 8 GB

- Keyboard: Standard Windows keyboard

C. Database

SQLite Database: The system uses SQLite as its database management system. SQLite can power the backend of a deepfake detection system, storing user information, preferences, and system settings. Model checkpoints, feature vectors, and training data for the detection models can also be kept in SQLite.
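The Results section mentions a secure login, and this section says SQLite stores user information. A common way to combine the two is to store only salted password hashes, never plaintext passwords. Below is a minimal sketch using Python's built-in `sqlite3`, `hashlib.pbkdf2_hmac`, and `hmac.compare_digest`. The table layout, column names, and iteration count are hypothetical; the paper does not publish its schema.

```python
import hashlib
import hmac
import os
import sqlite3

# Hypothetical schema: the paper does not publish its table layout.
SCHEMA = """
CREATE TABLE IF NOT EXISTS users (
    username TEXT PRIMARY KEY,
    salt     BLOB NOT NULL,
    pw_hash  BLOB NOT NULL
);
CREATE TABLE IF NOT EXISTS detections (
    id         INTEGER PRIMARY KEY AUTOINCREMENT,
    username   TEXT NOT NULL REFERENCES users(username),
    video_name TEXT NOT NULL,
    label      TEXT NOT NULL CHECK (label IN ('real', 'fake')),
    confidence REAL NOT NULL
);
"""

PBKDF2_ITERATIONS = 100_000  # illustrative work factor, not a tuned value


def _hash_password(password: str, salt: bytes) -> bytes:
    """Derive a key from the password with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS)


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def register(conn: sqlite3.Connection, username: str, password: str) -> None:
    salt = os.urandom(16)  # fresh random salt per user
    conn.execute("INSERT INTO users (username, salt, pw_hash) VALUES (?, ?, ?)",
                 (username, salt, _hash_password(password, salt)))
    conn.commit()


def login(conn: sqlite3.Connection, username: str, password: str) -> bool:
    row = conn.execute("SELECT salt, pw_hash FROM users WHERE username = ?",
                       (username,)).fetchone()
    if row is None:
        return False
    salt, stored_hash = row
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(_hash_password(password, salt), stored_hash)


def save_result(conn: sqlite3.Connection, username: str, video_name: str,
                label: str, confidence: float) -> None:
    conn.execute("INSERT INTO detections (username, video_name, label, confidence) "
                 "VALUES (?, ?, ?, ?)", (username, video_name, label, confidence))
    conn.commit()
```

A typical flow is: `conn = open_db("app.db")`, then `register(...)` once per user, then `login(...)` before each upload. Finally, `save_result(...)` records the verdict for each analyzed video. The `?` placeholders keep user input out of the SQL text.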

D. Tool

Spyder: Spyder is an open-source integrated development environment (IDE) for scientific programming in Python. It is used in the early stages of building the deepfake detection system to write and run the code that gathers, organizes, and prepares the training dataset. The same code trains the machine learning models that learn to distinguish authentic from fraudulent media.

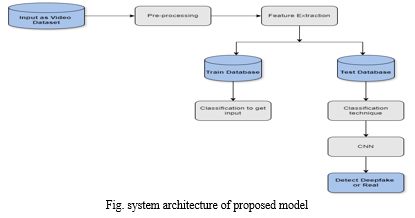

V. SYSTEM ARCHITECTURE

Deepfake video detection systems are commonly built with modern algorithms and machine learning approaches. Convolutional neural networks and other deep learning models are one way to analyze the features and patterns of the face, and they are useful for distinguishing real videos from deepfakes. Such methods can yield a powerful tool for detecting and countering deepfakes at an early stage, improving the overall security and integrity of digital media.

VI. IMPLEMENTATION

A. Dataset

There did not seem to be an existing dataset of videos created with Deepfake, so we built our own. Training the auto-encoders for the forgery task takes several days on conventional processors to yield realistic results. It can also only be done for two specific faces at a time. Instead, to obtain an adequate variety of faces, we drew on the abundance of such videos publicly available on the internet. In total, 175 rushes (raw clips) of forged videos were collected from different websites.

Faces were located using the Viola-Jones detector and aligned using a neural network trained for facial landmark detection. To ensure a balanced distribution of faces, the number of frames extracted from each video depends on two factors: the number of camera angles and the variations in illumination on the target's face. As a reference, roughly fifty faces were extracted per scene.

A second dataset was then constructed from real-world face images obtained from several websites at the same resolutions. Finally, the datasets were reviewed manually to remove misaligned faces and incorrect face detections.
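The frame-selection rule above (about fifty faces per scene, so videos with more camera-angle or lighting changes contribute more faces) can be sketched as evenly spaced sampling within each scene. The paper does not give its exact procedure. The scene boundaries are assumed to come from a separate, hypothetical shot-change detector. Face detection and cropping of the selected frames (e.g. with OpenCV's Viola-Jones cascade) are not shown.

```python
from typing import List, Tuple


def sample_frame_indices(total_frames: int, num_samples: int = 50) -> List[int]:
    """Pick up to num_samples distinct frame indices spread evenly over a clip.

    Each index is the midpoint of one of n equal-width segments, so the
    samples cover the whole scene instead of clustering at its start.
    """
    if total_frames <= 0 or num_samples <= 0:
        return []
    n = min(num_samples, total_frames)
    step = total_frames / n  # >= 1, so the indices are strictly increasing
    return [int(step * i + step / 2) for i in range(n)]


def sample_per_scene(scene_bounds: List[Tuple[int, int]], per_scene: int = 50) -> List[int]:
    """Sample about per_scene frames from each [start, end) scene of a video.

    A video with more camera-angle or lighting changes (i.e. more scenes)
    therefore contributes proportionally more faces. scene_bounds is assumed
    to be produced by a separate, hypothetical shot-change detector.
    """
    indices: List[int] = []
    for start, end in scene_bounds:
        indices.extend(start + i for i in sample_frame_indices(end - start, per_scene))
    return indices
```

Sampling segment midpoints rather than the first fifty frames avoids near-duplicate faces from consecutive frames, which would add little variety to the dataset.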

B. Classification Setup

The classifier is a CNN architecture suited to image or video classification; common choices include ResNet, VGG, and custom-designed networks. The input is frames taken from images or videos. The output is a binary classification: real or fake.

Here, a sequential model is initialized with the Keras `Sequential()` class, and layers are added one after another. The first is a 2D convolutional layer with 32 filters (kernels) of size 3x3. Its `input_shape = (64, 64, 1)` specifies the input image size: 64x64 pixels with a single channel, i.e. grayscale. It is followed by a max-pooling layer with a 2x2 pool. This layer halves the spatial dimensions, downsampling the feature maps while retaining the strongest responses.
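The shapes produced by the two layers described above can be checked by hand. The text does not give the rest of the architecture, so this plain-Python sketch reproduces only the arithmetic for those two layers. It assumes Keras defaults: stride 1 and 'valid' padding for the convolution, and a stride equal to the pool size for max pooling.

```python
from typing import Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def conv2d_valid(shape: Shape, filters: int, kernel: int, stride: int = 1) -> Tuple[Shape, int]:
    """Output shape and trainable-parameter count of a 2-D convolution with
    'valid' padding (no zero padding), matching Keras's Conv2D defaults."""
    h, w, c = shape
    out = ((h - kernel) // stride + 1, (w - kernel) // stride + 1, filters)
    params = kernel * kernel * c * filters + filters  # weights + one bias per filter
    return out, params


def max_pool(shape: Shape, pool: int = 2) -> Shape:
    """Output shape of non-overlapping max pooling (stride = pool size, 'valid' padding)."""
    h, w, c = shape
    return (h // pool, w // pool, c)


conv_out, conv_params = conv2d_valid((64, 64, 1), filters=32, kernel=3)
pool_out = max_pool(conv_out, pool=2)
print(conv_out, conv_params, pool_out)  # (62, 62, 32) 320 (31, 31, 32)
```

In Keras the same two layers would be `Conv2D(32, (3, 3), input_shape=(64, 64, 1))` followed by `MaxPooling2D(pool_size=(2, 2))`. With those defaults, `model.summary()` should report the same shapes and 320 parameters. Activations and the remaining layers are not specified in the text and are left out here.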

VII. RESULTS

The project's main goal is to implement a deepfake detection system that can spot edited or fake videos. Users authenticate through a secure login process to gain access to the system, after which they can upload any video for analysis.

The uploaded video is automatically processed to extract individual frames, each a snapshot of the video. Computer vision and deep learning algorithms designed for deepfake detection are then applied to these frames. They examine each frame's visual properties and patterns for signs of synthesis or manipulation typical of deepfakes. For each analyzed frame, the algorithms predict whether it is likely a deepfake, and these per-frame results are combined into an overall assessment of the video's authenticity.

The system's user-friendly interface makes it easy to upload videos and presents detection findings in an understandable form. Ultimately, by applying current technologies to counter the spread of false information and fraudulent media, this project promotes accuracy and trust in digital communications and media verification efforts.
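The step that combines per-frame results into an overall assessment can be sketched as follows. The paper does not state its aggregation rule, so this sketch assumes simple averaging of per-frame "fake" probabilities with a 0.5 decision threshold. It also reports a confidence value, which the Conclusion mentions the system provides.

```python
from statistics import mean
from typing import List, Tuple


def aggregate_video(frame_probs: List[float], threshold: float = 0.5) -> Tuple[str, float]:
    """Combine per-frame fake probabilities into one video-level verdict.

    Averages the per-frame probabilities (an assumed rule; the paper does not
    state its aggregation method) and returns the predicted label together
    with its confidence, i.e. the mean probability of the predicted class.
    """
    if not frame_probs:
        raise ValueError("no frames were analysed")
    p_fake = mean(frame_probs)
    if p_fake >= threshold:
        return "fake", p_fake
    return "real", 1.0 - p_fake
```

For example, per-frame scores of 0.9, 0.8 and 0.7 give a "fake" verdict with confidence of about 0.8. Averaging is only one option. A majority vote over thresholded frames is less sensitive to a few extreme scores, whereas flagging a video when any short run of frames scores high would better catch deepfakes confined to a brief segment.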

VIII. FUTURE SCOPE

Detection methods must advance in tandem with deepfake technology. Researchers are working on new ways to improve the accuracy and robustness of deepfake detection systems. One area of research is the development of more sophisticated deep learning models capable of analyzing the minute nuances of edited videos. Researchers are also investigating the integration of multiple detection techniques, such as audio analysis and behavioral cues, to improve overall detection effectiveness.

Another essential direction is the development of real-time deepfake detection tools that can quickly identify and report deepfakes as they are created or distributed. Although the system currently recognizes only facial deepfakes, it could be extended to detect full-body deepfakes. The project leaves considerable room for future improvement. With an enhanced dataset and further training, the system could be applied in other domains. These include women's safety, fake news identification, identity verification, and the investigation of various cybercrimes.

Conclusion

In conclusion, the growing popularity of deepfakes can be attributed to the abundance and increased accessibility of content on social media. This matters all the more because deepfake tools are becoming more accessible and social media has made it easier for people to spread misleading information.

The technique presented here provides a neural network-based approach for determining whether a video is real or a deepfake, together with the confidence level of the proposed model. The system takes the provided video as input, and a convolutional neural network (CNN) classifies it as authentic or counterfeit.

It is well known that altering someone's appearance on video carries risks. We propose two possible network configurations that may be used to detect such forgeries effectively and economically. Additionally, we release a dataset devoted to the Deepfake method, a topic that has received a lot of attention but, to our knowledge, little documentation.

A key characteristic of deep learning is its capacity to solve a problem without prior theoretical analysis. Understanding the origins of such a solution is crucial to evaluating its merits and drawbacks, so we spent considerable time visualizing the layers and filters of our networks.

References

[1] A. Heidari, H. Dag, M. Unal, and N. Jafari Navimipour, "Deepfake Detection Using Deep Learning Methods," 2024.

[2] Amala Mary and Anitha Edison, "Deepfake Detection using Deep Learning Techniques: A Literature Review," 2023.

[3] Dheeraj JC and Kruttant Nandakuma, "Deepfakes Detection Through Deep Learning," 2021.

[4] Theerthagiri Prasannavenkatesan, "Deepfake Face Detection Using Deep InceptionNet Learning Algorithm," 2023.

[5] Sarra Guefrachi, "Deep Learning Based DeepFake Video Detection," 2023.

[6] Asad Malik, "DeepFake Detection for Human Face Images and Videos: A Survey," 2022.

[7] P. Zhou, X. Han, V. I. Morariu, and L. S. Davis, "Two-Stream Neural Networks for Tampered Face Detection," 2018.

[8] "A Machine Learning Based Approach for Higher Education," published on ResearchGate.

[9] B. Balas and C. Tonsager, "Face animacy is not all in the eyes: Evidence from contrast chimeras," Perception, 43(5):355–369, 2014.

[10] M. Barni, L. Bondi, N. Bonettini, P. Bestagini, A. Costanzo, M. Maggini, B. Tondi, and S. Tubaro, "Aligned and non-aligned double JPEG detection using convolutional neural networks," Journal of Visual Communication and Image Representation, 49:153–163, 2017.

[11] B. Bayar and M. C. Stamm, "A deep learning approach to universal image manipulation detection using a new convolutional layer," in Proceedings of the 4th ACM Workshop on Information Hiding and Multimedia Security, pp. 5–10, ACM, 2016.

[12] F. Chollet, "Xception: Deep learning with depthwise separable convolutions," arXiv preprint arXiv:1610.02357, 2017.

[13] F. Chollet et al., Keras, 2015. https://keras.io.

[14] D. Erhan, Y. Bengio, A. Courville, and P. Vincent, "Visualizing higher-layer features of a deep network," University of Montreal, 1341(3):1, 2009.

[15] S. Fan, R. Wang, T.-T. Ng, C. Y.-C. Tan, J. S. Herberg, and B. L. Koenig, "Human perception of visual realism for photo and computer-generated face images," ACM Transactions on Applied Perception (TAP), 11(2):7, 2014.

[16] H. Farid, "A survey of image forgery detection," IEEE Signal Processing Magazine, 26(2):16–25, 2009.

[17] P. Garrido, L. Valgaerts, O. Rehmsen, T. Thormählen, P. Pérez, and C. Theobalt, "Automatic face reenactment," in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4217–4224, 2014.

[18] S. Ioffe and C. Szegedy, "Batch normalization: Accelerating deep network training by reducing internal covariate shift," arXiv preprint arXiv:1502.03167, 2015.

Copyright

Copyright © 2024 Mr. Yogesh Rai, Mr. Aditya Shinde, Mr. Pranav Patil, Prof. Preeti Komati. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET62007

Publish Date : 2024-05-12

ISSN : 2321-9653

Publisher Name : IJRASET
