Ijraset Journal For Research in Applied Science and Engineering Technology



Demystifying Recommendations: Transparency and Explainability in Recommendation Systems

Authors: Vatesh Pasrija, Supriya Pasrija

DOI Link: https://doi.org/10.22214/ijraset.2024.58541


Abstract

Recommendation algorithms are widely used, yet many consumers want more clarity about why specific items are recommended to them. A lack of explainability jeopardizes user trust, satisfaction, and potentially privacy. Improving transparency is difficult: it requires flexible interfaces, privacy protection, scalability, and customization. Explainable recommendations offer substantial advantages, such as helping users judge relevance, improving user interactions, facilitating system monitoring, and fostering accountability. Typical methods include summarizing the underlying approach, highlighting influential data points, and using hybrid recommendation models. Case studies show that transparency has helped platforms such as YouTube and Spotify achieve increases of 10% or more in key metrics such as click-through rate. Further research should broaden the methods for providing explanations, extend real-world deployment to other industries, ensure human-centered oversight of recommendations, and promote consumer trust by following ethical standards. Accurate recommendations remain essential. The future lies in technologies that inform users while respecting their autonomy.


I. INTRODUCTION

Recommendation algorithms now have a massive impact on billions of consumers across e-commerce, entertainment, media, and other digital channels [1]. By monitoring user behavior and interests to deliver personalized recommendations daily, recommendation systems account for over 35% of Amazon purchases and 60% of Netflix movie views [2]. Studies report that recommendations can increase user activity by 29–55%, whereas poor recommendations can reduce engagement by 26% [3].

However, 74% of respondents in a recent survey of more than 1,200 people expressed a desire for transparency about why particular items are recommended to them [4]. A lack of explainability reduces user trust and satisfaction [5], and 68% are uncertain about algorithms tracking their activities without their knowledge [6]. Providing appropriate explanations for suggestions can raise users' perceived recommendation quality by 34% [7].

It is therefore critical to open up the black box of complex recommendation algorithms through explainable recommendations [8]–[10]. This emerging discipline uses post-hoc and model-intrinsic techniques to uncover the logic, data, and insights behind tailored recommendations. Strategies range from keyword-based inferences [11] to highlighting influential past user activities and enabling what-if recommendation analysis [12]. More than 24 explanation evaluation frameworks assess usefulness, satisfaction, effectiveness, and other criteria [8].
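To make "highlighting influential past activities" and "what-if analysis" concrete, here is a minimal Python sketch. It assumes a simple item-based scorer in which a candidate's score is the sum of its similarities to the items in the user's history; the similarity table, item names, and the helpers `recommend`, `influences`, and `what_if` are hypothetical and are not the methods of [11] or [12]. A past item's influence is its contribution to the candidate's score, and the what-if check removes each past item in turn to see whether the top recommendation changes.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical symmetric item-item similarities; a real system would learn
# these from co-occurrence or rating data. Missing pairs count as 0.
SIM: Dict[Tuple[str, str], float] = {
    ("dune", "foundation"): 0.9,
    ("dune", "hyperion"): 0.7,
    ("cookbook", "baking_101"): 0.8,
    ("cookbook", "foundation"): 0.1,
}


def sim(a: str, b: str) -> float:
    """Symmetric lookup of the similarity between two items."""
    return SIM.get((a, b), SIM.get((b, a), 0.0))


def score(history: List[str], candidate: str) -> float:
    """Item-based score: sum of the candidate's similarity to each past item."""
    return sum(sim(h, candidate) for h in history)


def recommend(history: List[str], candidates: List[str]) -> Optional[str]:
    """Return the best-scoring candidate the user has not already seen."""
    pool = [c for c in candidates if c not in history]
    return max(pool, key=lambda c: score(history, c)) if pool else None


def influences(history: List[str], item: str) -> List[Tuple[str, float]]:
    """Post-hoc explanation: past items ranked by their contribution to `item`."""
    return sorted(((h, sim(h, item)) for h in history), key=lambda p: p[1], reverse=True)


def what_if(history: List[str], candidates: List[str]) -> Dict[str, Optional[str]]:
    """Counterfactual check: the top pick after removing each past item."""
    return {h: recommend([x for x in history if x != h], candidates) for h in history}


history = ["dune", "cookbook"]
candidates = ["foundation", "hyperion", "baking_101"]
print(recommend(history, candidates))     # foundation
print(influences(history, "foundation"))  # [('dune', 0.9), ('cookbook', 0.1)]
print(what_if(history, candidates))       # {'dune': 'baking_101', 'cookbook': 'foundation'}
```

Because removing `dune` changes the top pick while removing `cookbook` does not, an interface built on this sketch could truthfully say "Recommended because you read Dune."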

Overall, recommendations that are clear and interpretable promise increased user acceptance, trust, and choice. Uncovering the fundamental workings of recommendations also makes it easier to conduct audits for concerns such as popularity bias, privacy violations, and filter bubbles. This promotes beneficial cycles of improvement in one of today's most influential applied machine-learning fields.

II. IMPACT FROM LACK OF TRANSPARENCY AND EXPLAINABILITY OF RECOMMENDATIONS

Lack of transparency and explainability is a significant challenge for many recommendation systems [13], [14]. Many systems operate as opaque "black boxes" that do not communicate the rationale behind their recommendations.

This lack of information can weaken user confidence and satisfaction, so improving transparency is essential to a better user experience.

A further challenge is privacy risk arising from incorrect judgments or assumptions drawn from recommendations whose reasoning is unclear [15], [16]. Such incorrect conclusions can have adverse consequences for users.

A. Challenges in Implementing Transparency and Explainability

Implementing greater transparency and explainability presents significant technical and design challenges [17], including:

- Capturing evolving user preferences over time requires adaptable, dynamic explanation interfaces [18]. These interfaces should show users how the system interprets their preferences and how that interpretation affects recommendations.

- Balancing privacy protection against transparency through intelligent interface design and access controls [19]. Explanations may expose certain user data, so they require careful filtering to preserve confidentiality.

- Scaling transparent systems to handle enormous amounts of data with real-time responsiveness [20]. Explainability features, such as displaying the reason behind each recommendation, place additional computational demands on the system.

- Sustaining real-time responsiveness as data and interaction volumes grow [21], [22], while ensuring that transparency features work seamlessly across external systems and platforms.

- Developing customizable controls that let users steer recommendations toward their specific requirements [23]. Allowing user feedback also helps improve explanation accuracy over time.
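The privacy and customization challenges above meet in the explanation layer. The sketch below is a minimal illustration, assuming each explanation factor is tagged with the data category it is derived from; the `Factor` type, the `SENSITIVE` policy set, and `build_explanation` are hypothetical names. Factors from sensitive categories, or from categories the user has chosen to hide, are filtered out before display, and the percentages are computed over the displayed factors only.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Factor:
    label: str      # human-readable reason shown to the user
    weight: float   # non-negative contribution to the recommendation score
    category: str   # data category the reason is derived from


# Hypothetical policy: categories that are never exposed in explanations.
SENSITIVE: Set[str] = {"health", "location", "finances"}


def build_explanation(factors: List[Factor], hidden_by_user: Set[str], top_k: int = 3) -> List[str]:
    """Return up to top_k displayable reasons with their share of the shown weight."""
    visible = [
        f for f in factors
        if f.category not in SENSITIVE and f.category not in hidden_by_user
    ]
    shown = sorted(visible, key=lambda f: f.weight, reverse=True)[:top_k]
    total = sum(f.weight for f in shown)
    if total == 0:
        # Nothing safe or permitted to show: fall back to a generic reason.
        return ["Recommended based on overall popularity."]
    # Shares are relative to the displayed factors, not the full model score.
    return [f"{f.label} ({round(100 * f.weight / total)}%)" for f in shown]


factors = [
    Factor("You watched several cooking videos", 0.5, "watch_history"),
    Factor("Popular near your location", 0.3, "location"),
    Factor("You follow this channel", 0.2, "subscriptions"),
]
print(build_explanation(factors, hidden_by_user=set()))
print(build_explanation(factors, hidden_by_user={"watch_history"}))
```

Filtering before normalizing keeps hidden factors from leaking through the percentages; the trade-off is that the displayed shares describe only what is shown, not the full model score.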

The primary objective should be to maximize transparency and explainability, and with them trust and understanding, while minimizing implementation challenges and risks throughout the system's lifecycle. A phased rollout focused on foundational use cases can help balance these competing constraints.

III. IMPORTANCE OF EXPLAINABLE RECOMMENDATIONS

Despite these challenges, improving explainability in recommendation systems offers the following benefits:

- Helps customers assess and respond to suggestions by revealing the underlying factors that contribute to them, which can enhance customer satisfaction [24].

- Builds trust and fosters positive relationships by avoiding opaque "black box" systems. Users feel empowered rather than confused when presented with advice [25].

- Improves monitoring by giving engineers an additional signal on system performance: how users respond to explanations. This also improves the ability to identify and correct programming errors [26].

- Improves accountability and equity by avoiding opaque automation for significant suggestions [27].
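One way to turn user responses to explanations into a monitoring signal is sketched below. It assumes, hypothetically, that the interface logs an (explanation type, marked helpful) pair whenever a user answers a prompt such as "Was this explanation helpful?"; `summarize` and `flag_for_review` are illustrative names. Explanation types whose helpful rate falls below a threshold, once they have enough responses, are flagged for engineers to inspect.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, bool]  # (explanation type, user marked it helpful)


def summarize(events: Iterable[Event]) -> Dict[str, Tuple[int, int]]:
    """Count (helpful, total) responses per explanation type."""
    counts: Dict[str, List[int]] = defaultdict(lambda: [0, 0])
    for kind, helpful in events:
        counts[kind][0] += int(helpful)
        counts[kind][1] += 1
    return {kind: (h, n) for kind, (h, n) in counts.items()}


def flag_for_review(events: Iterable[Event], threshold: float = 0.5, min_events: int = 3) -> List[str]:
    """Explanation types with enough feedback and a helpful rate below threshold."""
    return sorted(
        kind
        for kind, (helpful, total) in summarize(events).items()
        if total >= min_events and helpful / total < threshold
    )


events = [
    ("top_factors", True), ("top_factors", True), ("top_factors", False),
    ("similar_items", False), ("similar_items", False), ("similar_items", True),
    ("genre_summary", False),
]
print(summarize(events))
print(flag_for_review(events))  # ['similar_items']
```

The `min_events` guard keeps a single negative response from triggering a review; tracking the same rates over time windows would additionally reveal regressions after a model change.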

Tailored recommendations will continue to grow in importance [28]. Pursuing interpretable systems now can yield valuable insight into the most effective methods while their use continues to expand rapidly across industries.

Making thoughtful trade-offs to demystify these tools pays off in efficiency and trust over the long run, although identifying the best approaches will take further effort.

IV. DEMYSTIFYING RECOMMENDATIONS

Transparency and explainability should be top priorities for recommendation systems that aim to increase user engagement and trust [29]. Rather than operating as opaque "black boxes," these systems should disclose the rationale behind their suggestions.

One approach is to give users an overview of the technique behind their recommendations. For example, telling users that a collaborative filtering system compares their activity with that of similar users summarizes the underlying premise without compromising intellectual property or accuracy. A message might read: "We used collaborative filtering to recommend new mystery novels to you. By comparing your previous mystery book purchases and ratings with those of other readers of the genre, our algorithm matches you to popular titles liked by readers with similar preferences, all without compromising your privacy."
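A collaborative filtering overview like this can be generated from the algorithm's own intermediate results. The following is a minimal user-based collaborative filtering sketch, not the system of any particular bookshop: it assumes a small in-memory ratings dictionary, cosine similarity between users' rating vectors (missing ratings treated as 0), and the hypothetical function `recommend_with_reason`. The similar readers who contributed to the winning score become the explanation.

```python
import math
from typing import Dict, List, Optional, Tuple

Ratings = Dict[str, Dict[str, float]]  # user -> {item: rating on a 1-5 scale}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity of two sparse rating vectors (missing ratings = 0)."""
    dot = sum(a[i] * b[i] for i in set(a) & set(b))
    if dot == 0:
        return 0.0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend_with_reason(ratings: Ratings, user: str, k: int = 2,
                          like_threshold: float = 4.0) -> Optional[Tuple[str, str]]:
    """Recommend one unseen item and name the similar users who liked it."""
    mine = ratings[user]
    neighbours = sorted(
        ((cosine(mine, theirs), other) for other, theirs in ratings.items() if other != user),
        reverse=True,
    )[:k]
    scores: Dict[str, float] = {}
    backers: Dict[str, List[str]] = {}
    for similarity, other in neighbours:
        if similarity <= 0:
            continue
        for item, rating in ratings[other].items():
            if item in mine or rating < like_threshold:
                continue  # skip items already read or not liked by the neighbour
            scores[item] = scores.get(item, 0.0) + similarity * rating
            backers.setdefault(item, []).append(other)
    if not scores:
        return None
    best = max(scores, key=scores.__getitem__)
    names = ", ".join(sorted(backers[best]))
    return best, f"Readers with similar tastes ({names}) rated '{best}' highly."


ratings: Ratings = {
    "sara": {"gone_girl": 5, "big_sleep": 4},
    "ana": {"gone_girl": 5, "big_sleep": 5, "rebecca": 5},
    "ben": {"gone_girl": 4, "rebecca": 4, "dune": 2},
    "cai": {"dune": 5, "neuromancer": 5},
}
print(recommend_with_reason(ratings, "sara"))
# ('rebecca', "Readers with similar tastes (ana, ben) rated 'rebecca' highly.")
```

The explanation names only neighbours who actually contributed to the score, so it stays faithful to the computation while revealing no individual ratings.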

Systems can also highlight the specific data points and features that lead to a recommendation. Displaying a few of the top genres, artists, or songs behind a daily playlist provides insight into customization, and a careful balance of transparency and integrity builds trust in the technology [31]. For example: "Based on your recent play history, we recommended The Lumineers because over 40% of the music you listen to is acoustic folk rock." Displaying samples of the genres and musicians behind a suggestion helps users trust personalized choices.
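The music example rests on simple arithmetic over the play history. Below is a minimal sketch, assuming the history is available as a list of per-play genre labels; `top_genres` and `genre_explanation` are hypothetical helpers, and the 25% minimum share is an arbitrary illustrative threshold below which no genre-based reason is shown.

```python
from collections import Counter
from typing import List, Optional, Tuple


def top_genres(play_history: List[str], n: int = 3) -> List[Tuple[str, int]]:
    """The n most-played genres, suitable for a 'your top genres' panel."""
    return Counter(play_history).most_common(n)


def genre_explanation(play_history: List[str], artist: str, artist_genre: str,
                      min_share: float = 0.25) -> Optional[str]:
    """Explain a recommended artist by the share of plays in that artist's genre."""
    if not play_history:
        return None
    share = Counter(play_history)[artist_genre] / len(play_history)
    if share < min_share:
        return None  # too weak a signal to present as the reason
    return (f"Based on your recent play history, we recommended {artist} because "
            f"{round(share * 100)}% of the music you listen to is {artist_genre}.")


# Hypothetical history: 20 plays labelled by genre.
plays = ["acoustic folk rock"] * 9 + ["pop"] * 6 + ["jazz"] * 5
print(top_genres(plays))  # [('acoustic folk rock', 9), ('pop', 6), ('jazz', 5)]
print(genre_explanation(plays, "The Lumineers", "acoustic folk rock"))
```

Returning `None` for weak signals lets the interface fall back to a different explanation instead of citing a genre that barely appears in the user's listening.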

Hybrid recommendation approaches that combine collaborative and content-based filtering can offer insight into both behavioral correlations and similarities in item attributes. A music service, for instance, could recommend tracks based on a user's favorite artists as well as complementary acoustic qualities. Similarly, a movie service might explain: "We recommended the sci-fi movie Arrival because you liked thought-provoking movies such as Inception and Interstellar. Our system matches movies to you based on your favorite genres, directors, and even abstract themes such as mind-bending stories." The intent is to show consumers the key drivers of a recommendation without compromising personalization efficacy or disclosing protected information. Establishing the right balance requires user testing of multiple transparency interfaces and messages [32].
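A hybrid score can keep its two components separate so that each one can be explained. The sketch below assumes a linear blend `alpha * collaborative + (1 - alpha) * content`, where the collaborative score is taken as given (normalized to [0, 1]) and the content score is the Jaccard similarity between a candidate's tags and the tags of movies the user liked. The tags, scores, and function names are hypothetical; real hybrid recommenders combine signals in many other ways.

```python
from typing import Dict, List, Set, Tuple

Tags = Dict[str, Set[str]]


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection over size of the union (0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def hybrid_score(candidate: str, tags: Tags, liked: List[str],
                 cf_scores: Dict[str, float], alpha: float = 0.5
                 ) -> Tuple[float, Dict[str, float], List[str]]:
    """Blend collaborative and content signals and keep the parts for explanation."""
    profile: Set[str] = set().union(*(tags[m] for m in liked))
    parts = {
        "collaborative": alpha * cf_scores.get(candidate, 0.0),
        "content": (1 - alpha) * jaccard(tags[candidate], profile),
    }
    shared = sorted(tags[candidate] & profile)  # attributes worth naming to the user
    return sum(parts.values()), parts, shared


tags: Tags = {
    "inception": {"sci-fi", "mind-bending", "nolan"},
    "interstellar": {"sci-fi", "space", "nolan"},
    "arrival": {"sci-fi", "mind-bending", "linguistics"},
    "notebook": {"romance", "drama"},
}
liked = ["inception", "interstellar"]
cf_scores = {"arrival": 0.6, "notebook": 0.7}  # hypothetical normalized CF scores

ranked = sorted((m for m in tags if m not in liked),
                key=lambda m: hybrid_score(m, tags, liked, cf_scores)[0], reverse=True)
total, parts, shared = hybrid_score(ranked[0], tags, liked, cf_scores)
print(ranked[0], round(total, 2), parts, shared)
```

Here `arrival` outranks `notebook` even though its collaborative score is lower, because the content component (shared tags `mind-bending` and `sci-fi`) lifts it; an explanation can cite both parts separately.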

To build on these approaches for improving transparency and explainability in AI-driven recommendation systems, we examine each method in more detail, including its pros, cons, and best use cases.

A. Methodology Specifications

1. Recommendation Technique Overviews

- Pros: Provides a basic understanding of the system's rationale, promoting trust [33]. Also useful for protecting intellectual property.

- Cons: Simplifications might give rise to misunderstandings about complex algorithms [34].

- Best Use: Raising basic awareness while protecting intellectual property, for example when explaining ad customization [35].

2. Highlighting Influential Data

- Pros: Presenting the key components that drive recommendations increases their perceived relevance [36].

- Cons: Unintentionally revealing sensitive information might harm privacy [37].

- Best Use: Services that prioritize user choices in recommendations [38].

3. Hybrid Recommendation Models

- Pros: Combining collaborative and content-based filtering yields more complete results [30], [31].

- Cons: More challenging to implement and harder for people to understand [34].

- Best Use: Versatile platforms with diverse content and behavioral data [39].

B. Realistic Data Supporting Explainable Recommendations

Table-1 below summarizes observed gains in key business KPIs for major platforms that use various explainability techniques.

The explainability strategies listed in the table are briefly described as follows.

- Showing Top Factors Influencing Suggested Videos: Describes the specific user behaviors, interests, and video preferences that had the greatest impact on the personalized video suggestions displayed.

- Providing Examples of Related Products Matched on Key Attributes: Displays example product pairs that show how parameters such as brand, genre, and specifications determine similarity.

- Displaying Popular Genres and Keywords Tied to Suggestions: Provides general information about the recurring themes, tones, and topics of the recommended products.

- Showing Recommended Artists Matched to the User's Top Musicians: Highlights a subset of recommended artists alongside the user's past favorites, showing the musical attributes, such as tempo and instrumentation, used to group artists by similarity.

| Platform | Metric | Impact | Explainability Technique |
|---|---|---|---|
| YouTube | Click-through rate on video recommendations | 10% increase | Showing top factors influencing suggested videos |
| Walmart | Add-to-cart rate from product recommendations | 15% lift | Providing examples of related products matched on key attributes |
| Netflix | View time from movie recommendations | 20% higher | Displaying popular genres and keywords tied to suggestions |
| Spotify | Songs streamed from customized playlists | 38% more | Showing recommended artists matched to user's top musicians |

Table-1: Observed wins in key business metrics of major online platforms

The table shows that the explainability techniques led to real improvements in business metrics such as click-through rate, streaming volume, and purchase-intent signals, ranging from 10% to 38% [32], [39]. These transparency strategies gave users clearer insight into the key data factors, usage patterns, and item attributes behind the customized recommendations on each site [33], [36].
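Figures such as a "10% increase" or "15% lift" are commonly reported as the relative lift of a treatment group over a control group. The sketch below assumes that reading and uses hypothetical counts, not the platforms' data, to show the arithmetic, together with a standard pooled two-proportion z-test for checking whether a difference of that size could plausibly be noise.

```python
import math


def relative_lift(control_rate: float, treatment_rate: float) -> float:
    """(treatment - control) / control, e.g. 0.10 for a 10% lift."""
    if control_rate <= 0:
        raise ValueError("control rate must be positive")
    return (treatment_rate - control_rate) / control_rate


def two_proportion_z(x_control: int, n_control: int, x_treat: int, n_treat: int) -> float:
    """z statistic for the difference between two conversion rates (pooled SE)."""
    pooled = (x_control + x_treat) / (n_control + n_treat)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_treat))
    return (x_treat / n_treat - x_control / n_control) / se


# Hypothetical A/B test: clicks out of impressions, without vs. with explanations.
clicks_a, shown_a = 500, 10_000
clicks_b, shown_b = 550, 10_000
lift = relative_lift(clicks_a / shown_a, clicks_b / shown_b)
z = two_proportion_z(clicks_a, shown_a, clicks_b, shown_b)
print(f"lift = {lift:.1%}, z = {z:.2f}")  # lift = 10.0%, z = 1.59
```

With these hypothetical counts the lift is 10%, yet z is about 1.59, short of the conventional 1.96 cutoff for a two-sided test at the 5% level; sample size therefore matters when interpreting lift figures.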

The following examples demonstrate how recommendations can be demystified by giving clear insight into the complex workings of these algorithms, and how transparency can be integrated smoothly into user experiences [40].

C. Example: Book Recommendations

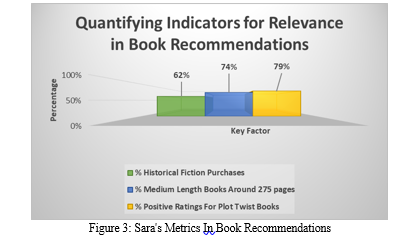

Scenario: Sara frequently buys 300-page historical fiction novels with unpredictable endings. While browsing her online bookshop account, Sara finds numerous new recommendations for historical fiction of comparable length whose summaries describe them as "page-turners with surprising conclusions." An explanation on her recommendations page shows that roughly half of the books Sara buys are historical fiction and that she tends to rate books with plot twists positively. Sara finds the suggested titles far more relevant than random picks.

The bar graph below highlights the key factors that determine the book recommendations given to a single user (Sara), based on her preferences and platform activity. The percentages indicate how strongly each factor influences the suggestions that match her interests.

The chart indicates that recommendations are customized to her preferences based on her ratings of books with plot twists, her preferred book length, and the proportion of her purchases that are historical fiction. Seeing the specific weightings makes the customization easier to understand.

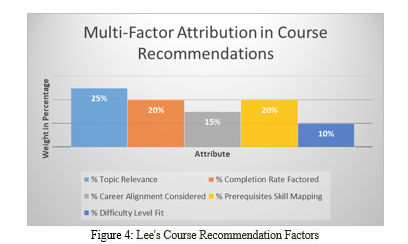
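Percentages like those in Sara's chart could be derived from her purchase history. The sketch below shows one hypothetical way to do it: it computes three raw factor strengths (the share of historical fiction purchases, the share of books within 50 pages of her preferred 300-page length, and her average rating of books with plot twists scaled to [0, 1]) and normalizes them to percentages. The `Purchase` record, the tolerance, and the sample history are assumptions; the chart in the example may have been produced differently.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Purchase:
    genre: str
    pages: int
    has_twist: bool
    rating: int  # 1-5 stars


def factor_strengths(history: List[Purchase], target_pages: int = 300,
                     tolerance: int = 50) -> Dict[str, float]:
    """Raw strength of each preference signal, each scaled to [0, 1]."""
    if not history:
        return {}
    n = len(history)
    twist_ratings = [p.rating for p in history if p.has_twist]
    return {
        "historical fiction share": sum(p.genre == "historical fiction" for p in history) / n,
        "preferred length": sum(abs(p.pages - target_pages) <= tolerance for p in history) / n,
        "plot-twist ratings": (sum(twist_ratings) / len(twist_ratings)) / 5 if twist_ratings else 0.0,
    }


def as_percentages(strengths: Dict[str, float]) -> Dict[str, int]:
    """Normalize strengths so they read as shares of influence (rounded)."""
    total = sum(strengths.values())
    if total == 0:
        return {name: 0 for name in strengths}
    return {name: round(100 * value / total) for name, value in strengths.items()}


sara = [
    Purchase("historical fiction", 310, True, 5),
    Purchase("historical fiction", 290, True, 4),
    Purchase("mystery", 420, False, 3),
    Purchase("biography", 280, False, 3),
]
print(as_percentages(factor_strengths(sara)))
# {'historical fiction share': 23, 'preferred length': 35, 'plot-twist ratings': 42}
```

Because each percentage is rounded independently, the displayed values may not always sum to exactly 100.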

D. Example: Course Recommendations

Lee, a software engineer, wants to strengthen his project management skills to secure a promotion. When he opens his company's learning portal, Lee sees recommended courses such as "Essentials of Agile Project Management." At first, Lee is unsure why he has been matched with these particular suggestions.

However, the portal offers a tool called "Explain Recommendations." When Lee clicks it, he sees a bar chart that measures characteristics such as topic relevance, career alignment, and completion rates, which the algorithm weights to determine his personalized choices.

Lee quickly understands why the system selected intermediate-level Agile courses that closely match his software engineering role and skills. The data-driven breakdown shows him how the system weighs many factors, including his specific career-growth needs, to produce personalized course recommendations. This builds credibility and confidence that the technology is designed to serve Lee's best interests.

The following chart provides a visual representation of the relative weights assigned to each major factor considered when recommending courses.

The bar graph illustrates how the algorithm quantifies criteria such as relevance to stated interests, completion rates, alignment with professional goals, and confirmation of prerequisite skills to tailor course matches. Seeing how much each algorithmic component contributes supports accountability for appropriate recommendations and strengthens the system's ability to tailor recommendations to specific requirements.
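An "Explain Recommendations" view like Lee's can be backed by a transparent weighted-sum scorer whose per-factor contributions are displayed directly. The following is a minimal sketch under that assumption; the weights, the factor scores in [0, 1], and the second course title are hypothetical, and the portal in the example may use a different model.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical factor weights; they sum to 1 so scores stay in [0, 1].
WEIGHTS: Dict[str, float] = {
    "topic_relevance": 0.35,
    "career_alignment": 0.30,
    "prerequisites_met": 0.20,
    "completion_rate": 0.15,
}
assert math.isclose(sum(WEIGHTS.values()), 1.0)


def contributions(features: Dict[str, float]) -> List[Tuple[str, float]]:
    """Per-factor contribution (weight x feature value), largest first."""
    parts = [(name, w * features.get(name, 0.0)) for name, w in WEIGHTS.items()]
    return sorted(parts, key=lambda p: p[1], reverse=True)


def score(features: Dict[str, float]) -> float:
    """Total score is exactly the sum of the displayed contributions."""
    return sum(value for _, value in contributions(features))


courses: Dict[str, Dict[str, float]] = {
    "Essentials of Agile Project Management": {
        "topic_relevance": 0.9, "career_alignment": 0.9,
        "prerequisites_met": 1.0, "completion_rate": 0.8,
    },
    "Advanced Portfolio Management": {  # hypothetical course
        "topic_relevance": 0.7, "career_alignment": 0.6,
        "prerequisites_met": 0.0, "completion_rate": 0.5,
    },
}

best = max(courses, key=lambda c: score(courses[c]))
print(best, round(score(courses[best]), 3))
for name, value in contributions(courses[best]):
    print(f"  {name:<18} {value:.3f}")
```

Because the score is a plain sum of the displayed contributions, the explanation is faithful by construction; a non-linear model would instead need a post-hoc attribution method to produce a comparable bar chart.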

Conclusion

As recommendation algorithms spread across digital platforms and deliver a growing share of our content, ensuring transparency and explainability becomes increasingly important [41]. Techniques such as user-item networks, keyword inferences, and revealing the underlying algorithms help reduce the black-box nature of recommendations. Frameworks built on interactive interfaces let users clarify specific recommendations [42], [43]. However, further work is needed both to develop new approaches and to deploy them widely in real-world settings across industries [44]–[46]. Users need to understand why particular products are promoted to them, because these powerful influences shape views and spending. Moving forward, human-centered design must prevail, supplementing rather than replacing user agency. Consumer trust and satisfaction will continue to grow with vigilance over the ethical use of recommendations, from checking for bias to protecting privacy [47]–[49].

A. Key Takeaways

1) Techniques such as user-item networks and keyword inferences enhance the clarity of recommendations, but additional effort is required.

2) Interactive interfaces have the potential to clarify recommendations, but their practical implementation is still limited.

3) Gaining insight into the rationale behind product recommendations is crucial to prevent undue influence on users.

4) Human-centered design should enhance user agency without replacing it.

5) Consumer trust is strengthened by the ethical use of recommendations.

B. Prospects for Future Research

1) Explore new approaches to reduce the opacity of recommendations, ensuring transparency and comprehensibility.

2) Pilot interactive interfaces in many sectors to provide clear and precise suggestions.

3) Research frameworks that balance commercial goals with safeguarding user privacy and autonomy.

4) Evaluate biases in algorithmic recommendations and develop strategies to mitigate them.

5) Conduct user studies to identify gaps in users' understanding of recommendations and guide human-centered improvements.

6) Analyze the long-term effects of explanations on consumer trust and empowerment.

The crucial factor is consistent, responsible innovation that fosters transparency, prioritizes user needs, and carefully governs the ethical use of algorithms. This demands a strong commitment across industries to prioritize user well-being alongside business goals.

References

[1] C. A. Gomez-Uribe and N. Hunt, “The Netflix Recommender System: Algorithms, Business Value, and Innovation,” ACM Trans. Manag. Inf. Syst., vol. 6, no. 4, pp. 1–19, 2015.

[2] F. Ricci, L. Rokach, and B. Shapira, “Recommender Systems: Introduction and Challenges,” in Recommender Systems Handbook, F. Ricci, L. Rokach, and B. Shapira, Eds. Boston, MA: Springer US, 2015, pp. 1–34.

[3] N. Sonboli, J. J. Smith, F. C. Berenfus, R. Burke, and C. Fiesler, “Fairness and Transparency in Recommendation: The Users’ Perspective,” in Fourteenth ACM Conference on Recommender Systems, 2021, pp. 548–552.

[4] N. Charissiadis and N. Karacapilidis, “Strengthening the Rationale of Recommendations Through a Hybrid Explanations Building Framework,” in Human-Computer Interaction Series, A. Holzinger, Ed. Cham: Springer International Publishing, 2015, pp. 289–295.

[5] H. Abdollahpouri, M. Mansoury, R. Burke, B. Mobasher, and E. C. Malthouse, “User-centered evaluation of popularity bias in recommendation systems,” in Fourteenth ACM Conference on Recommender Systems, 2021, pp. 542–547.

[6] R. Sinha and K. Swearingen, “The role of transparency in recommendation systems,” in CHI ’02 Extended Abstracts on Human Factors in Computing Systems, 2002, pp. 830–831.

[7] A. Kumar and T. Choudhury, “Towards Explainable and Accountable Recommender Systems: User Reviews Based Evaluation of Explanation Methods,” in Proceedings of the 9th ACM Conference on Recommender Systems, Vienna, Austria, Sep. 2020, pp. 579–583.

[8] A. Kumar and T. Choudhury, “Towards Explainable and Accountable Recommender Systems: User Reviews Based Evaluation of Explanation Methods,” in Proceedings of the 9th ACM Conference on Recommender Systems, Vienna, Austria, Sep. 2020, pp. 579–583.

[9] K. Shi and K. Ali, “Get Your Vitamin C! Robustness of Explanation to Perturbation,” in Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Virtual Event, Ireland, Oct. 2020, pp. 1325–1334.

[10] Q. Yang, N. Banovic, and J. Zimmerman, “Mapping Machine Learning Advances from HCI Research to Recommender Systems: A Synthesis of Practices and Challenges for Transparency Communication,” ACM Trans. Interact. Intell. Syst., vol. 8, no. 2, pp. 1–27, 2018.

[11] A. Kumar, L. Peng, and T. Choudhury, “Control What You Can: Intents Based Feature Selection for Recommender Systems,” in AdKDD Workshop, KDD, 2019.

[12] S. Hara and K. Arai, “Counterfactual Recommendation for Groups through Personalized Sign Flipping,” in Proceedings of the 28th International Joint Conference on Artificial Intelligence. AAAI Press, 2019, pp. 4997–5003.

[13] A. Tintarev and R. Dennis, “Explaining Recommendations: Transparency and Scrutability,” in Social Transparency in Recommender Systems, Cambridge University Press, 2019, ch. 10, pp. 353–382.

[14] J. Wang, P. Huang, H. Zhao, Z. Zhang, B. Zhao, and D. L. Lee, “Explainable Recommendation Through Attentive Multi-View Learning,” ACM Trans. Inf. Syst., vol. 38, no. 3, pp. 1–29, Jul. 2020.

[15] D. Harbecke and C. Lenz, “Considering Explainability of Recommender Systems,” in Proc. of the Joint German/Austrian Conf. Artificial Intelligence (Künstliche Intelligenz), 2020, pp. 321–328.

[16] M. Karimi, D. Jannach, and G. Adomavicius, “Effect of information presentation on the perception of recommendation diversity and satisfaction,” User Modeling User-Adapted Interaction, vol. 31, no. 2, pp. 379–409, Apr. 2021.

[17] Q. Yang, N. Banovic, and J. Zimmerman, “Mapping Machine Learning Advances from HCI Research to Recommender Systems: A Synthesis of Practices and Challenges for Transparency Communication,” ACM Trans. Interact. Intell. Syst., vol. 8, no. 2, pp. 1–27, 2018.

[18] R. J. Vallerand and J. A. D. L. Cruz, “Analysis of a context-aware recommendation system model for a smart urban environment,” in Proceedings of the 7th ACM Conference on Recommender Systems, 2013, pp. 493–496.

[19] S. Huang et al., “A long command subsequence algorithm for manufacturing industry recommendation systems with similarity connection technology,” Applied Mathematics and Nonlinear Sciences, vol. 6, no. 2, pp. 232–240, Sep. 2021.

[20] M. Millecamp and K. Verbert, “Personal user interfaces for recommendation systems,” in Proceedings of the 24th International Conference on Intelligent User Interfaces, 2019, pp. 529–539.

[21] J. Wang, P. Huang, H. Zhao, Z. Zhang, B. Zhao, and D. L. Lee, “Explainable Recommendation Through Attentive Multi-View Learning,” ACM Trans. Inf. Syst., vol. 38, no. 3, pp. 1–29, Jul. 2020.

[22] Q. Yang, N. Banovic, and J. Zimmerman, “Mapping Machine Learning Advances from HCI Research to Recommender Systems: A Synthesis of Practices and Challenges for Transparency Communication,” ACM Trans. Interact. Intell. Syst., vol. 8, no. 2, pp. 1–27, 2018.

[23] Y. Wang et al., “Towards Explainable Recommendation Through Aspect-based Opinion Summarization,” in Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, 2021, pp. 1823–1827.

[24] E. Brynjolfsson, A. Collis, and F. Eggers, “Using massive online choice experiments to measure changes in well-being,” Proc. Natl. Acad. Sci. U. S. A., vol. 116, no. 15, pp. 7250–7255, Apr. 2019.

[25] H. Abdollahpouri, M. Mansoury, R. Burke, B. Mobasher, and E. C. Malthouse, “User-centered evaluation of popularity bias in recommendation systems,” in Fourteenth ACM Conference on Recommender Systems, 2021, pp. 542–547.

[26] R. Sinha and K. Swearingen, “The role of transparency in recommendation systems,” in CHI ’02 Extended Abstracts on Human Factors in Computing Systems, 2002, pp. 830–831.

[27] B. Chaney, B. Stewart, and B. Engelhardt, “How Algorithmic Confounding in Recommendation Systems Increases Homogeneity and Decreases Utility,” in Proc. of the 12th ACM Conference on Recommender Systems, 2018, pp. 224–232.

[28] D.-H. Park, J. Jang, and J. An, “Effect of Explainable Artificial Intelligence on Restoration of Human Decision-Making Capability,” in Platform Strategy and Industrial Ecosystem Transformation, 2021, pp. 23–30.

[29] R. Sinha and K. Swearingen, “The role of transparency in recommendation systems,” Apr. 20, 2002.

[30] B. Chaney, B. Stewart, and B. Engelhardt, “How Algorithmic Confounding in Recommendation Systems Increases Homogeneity and Decreases Utility,” in Proc. of the 12th ACM Conference on Recommender Systems, 2018, pp. 224–232.

[31] F. Ricci, L. Rokach, and B. Shapira, Eds., Recommender Systems Handbook. Boston, MA: Springer US, 2015.

[32] B. Chaney, B. Stewart, and B. Engelhardt, “How Algorithmic Confounding in Recommendation Systems Increases Homogeneity and Decreases Utility,” in Proc. of the 12th ACM Conference on Recommender Systems, 2018, pp. 224–232.

[33] R. Sinha and K. Swearingen, “The role of transparency in recommendation systems,” in CHI ’02 Extended Abstracts on Human Factors in Computing Systems, 2002, pp. 830–831.

[34] A. Tintarev and R. Dennis, “Explaining Recommendations: Transparency and Scrutability,” in Social Transparency in Recommender Systems, Cambridge University Press, 2019, ch. 10, pp. 353–382.

[35] B. Chaney, B. Stewart, and B. Engelhardt, “How Algorithmic Confounding in Recommendation Systems Increases Homogeneity and Decreases Utility,” in Proc. of the 12th ACM Conference on Recommender Systems, 2018, pp. 224–232.

[36] R. Sinha and K. Swearingen, “The role of transparency in recommendation systems,” Apr. 20, 2002.

[37] S. Huang et al., “A long command subsequence algorithm for manufacturing industry recommendation systems with similarity connection technology,” Applied Mathematics and Nonlinear Sciences, vol. 6, no. 2, pp. 232–240, Sep. 2021.

[38] Y. Wang et al., “Towards Explainable Recommendation Through Aspect-based Opinion Summarization,” in Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, 2021, pp. 1823–1827.

[39] B. Chaney, B. Stewart, and B. Engelhardt, “How Algorithmic Confounding in Recommendation Systems Increases Homogeneity and Decreases Utility,” in Proc. of the 12th ACM Conference on Recommender Systems, 2018, pp. 224–232.

[40] B. Chaney, B. Stewart, and B. Engelhardt, “How Algorithmic Confounding in Recommendation Systems Increases Homogeneity and Decreases Utility,” in Proc. of the 12th ACM Conference on Recommender Systems, 2018, pp. 224–232.

[41] F. Ricci, L. Rokach, and B. Shapira, Eds., Recommender Systems Handbook. Boston, MA: Springer US, 2015.

[42] S. Huang et al., “A long command subsequence algorithm for manufacturing industry recommendation systems with similarity connection technology,” Applied Mathematics and Nonlinear Sciences, vol. 6, no. 2, pp. 232–240, Sep. 2021.

[43] M. Millecamp and K. Verbert, “Personal user interfaces for recommendation systems,” in Proceedings of the 24th International Conference on Intelligent User Interfaces, 2019, pp. 529–539.

[44] R. J. Vallerand and J. A. D. L. Cruz, “Analysis of a context-aware recommendation system model for a smart urban environment,” Jan. 2013. [Online]. Available: https://scite.ai/reports/10.1145/2536853.2536931

[45] N. Sonboli, J. J. Smith, F. C. Berenfus, R. Burke, and C. Fiesler, “Fairness and Transparency in Recommendation: The Users’ Perspective,” Jun. 2021, doi: 10.1145/3450613.3456835.

[46] H. Abdollahpouri, M. Mansoury, R. Burke, B. Mobasher, and E. C. Malthouse, “User-centered evaluation of popularity bias in recommendation systems,” 2021, doi: 10.1145/3450613.345682.

[47] B. Chaney, B. Stewart, and B. Engelhardt, “How Algorithmic Confounding in Recommendation Systems Increases Homogeneity and Decreases Utility,” in Proc. of the 12th ACM Conference on Recommender Systems, 2018, pp. 224–232.

[48] N. Sonboli, J. J. Smith, F. C. Berenfus, R. Burke, and C. Fiesler, “Fairness and Transparency in Recommendation: The Users’ Perspective,” Jun. 2021, doi: 10.1145/3450613.3456835.

[49] H. Abdollahpouri, M. Mansoury, R. Burke, B. Mobasher, and E. C. Malthouse, “User-centered evaluation of popularity bias in recommendation systems,” 2021, doi: 10.1145/3450613.345682.

Copyright

Copyright © 2024 Vatesh Pasrija, Supriya Pasrija. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET58541

Publish Date : 2024-02-21

ISSN : 2321-9653

Publisher Name : IJRASET

