Ijraset Journal For Research in Applied Science and Engineering Technology


Design and Development of Sensor Based Glove for Speech Impaired People

Authors: Ketan Tajne, Swastik Jha, Swaraj Patil, Swayam Kanoje, Mangesh Tale, Abhjeet Takale, Rahul Waikar

DOI Link: https://doi.org/10.22214/ijraset.2024.63154


Abstract

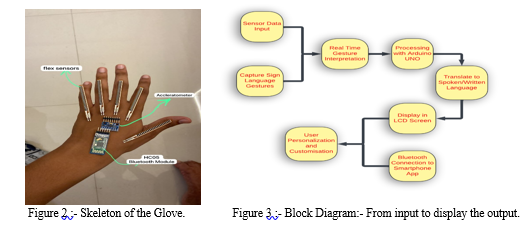

This project addresses the communication challenges faced by deaf and mute individuals through the development of a portable Sign Language Glove. The glove combines flex sensors, an accelerometer, and a microcontroller to capture and interpret sign language gestures, translating them in close to real time into written or spoken language. The output is displayed on an LCD screen and conveyed audibly through an integrated speaker. A Bluetooth module connects the glove to a dedicated smartphone app, allowing users to personalize their communication experience. Beyond translation, the design aims to foster inclusivity and adaptability. Ultimately, the project seeks to help integrate speech-impaired individuals into society, dismantle communication barriers, and promote a more inclusive global community.


I. INTRODUCTION

This project applies technology to a crucial communication need. The primary objective is to create a sign language glove designed around the unique challenges faced by deaf and mute individuals. Using sensors such as flex sensors and an accelerometer, the glove aims to connect people who communicate in sign language with those who communicate through written or spoken language, narrowing the communication gap and enhancing inclusivity between these linguistic communities.

In our research, we reviewed the extensive body of work on assistive technologies for individuals with hearing and speech impairments. Existing solutions include gesture recognition systems and translation devices. Within gesture recognition, multiple Sign Language Glove prototypes have emerged, each with minor variations. Early work produced a glove that detected American Sign Language and displayed the translation as text on an LCD screen. Later designs converted sign gestures into audible speech through an integrated speaker, and some projects extended coverage to other sign languages, such as Indian Sign Language. Despite these advances, we observed that most of these efforts ended at, or remained in, the prototype stage and never reached the public for practical use or commercialization.

A major drawback of past projects was the lack of options for users to customize and personalize their experience. Our project differs by adding a Bluetooth module that connects the glove to a dedicated smartphone app. Together, the glove and app form a complete communication solution that offers a more inclusive and adaptable environment for people with communication difficulties. Through the app, users can assign gestures to the everyday phrases they use most often, tailoring the system to their individual needs.

II. LITERATURE REVIEW

In their research paper, Abhay et al. [1] discuss "Smart Hand Gloves" designed to aid disabled individuals by detecting hand gestures with flex sensors and converting them into text or pre-recorded voice. Unlike bulkier wireless gloves, these gloves are wired, offering a practical solution for patients and partially disabled individuals.

Mayan J et al. [2] introduce a Smart Glove that translates sign language into text, aiding speech-impaired individuals through a web interface. Built with flex sensors, an accelerometer, and an Arduino UNO, the system facilitates communication between hearing-impaired people and others.

Bhore et al. [3] explore a smart glove that translates sign language into spoken English for deaf and mute individuals, using a Raspberry Pi 3 Model B, flex sensors, and accelerometers.

Ghimire et al. [4] present a "Smart Glove" designed to facilitate communication between deaf and mute sign language users and the hearing population. The device translates sign language gestures into text and speech using sensors and a Raspberry Pi. Their literature review covers related projects that aid communication for people with disabilities.

Vismaya et al. [5] discuss the Smart Glove, a portable device that aids communication for deaf and mute people. Using flex sensors and an Arduino, it translates hand gestures into text and speech, bridging the communication gap. The authors describe the system as user-friendly, cost-effective, and adaptable to different sign languages, offering a practical solution for people with communication disabilities.

In 2011, Meenakshi Panwar [6] proposed a shape-based approach to hand gesture recognition comprising several steps, including smudge elimination, orientation detection, thumb detection, and finger counting. Visually impaired people can use such hand gestures to write text in electronic documents such as MS Office or Notepad files. The approach improved the recognition rate from 92% to 94%.

Pallavi Verma et al. [7] described a system that captures user movement with a pair of gloves fitted with flex sensors along each finger, the thumb, and the arm. Each flex sensor is wired in a voltage divider, so the divider's output voltage reflects the bend angle of the finger, thumb, or arm. A PIC microcontroller performs several tasks, including analog-to-digital conversion of the flex sensor signals.

The digital data is then encoded and transmitted. After the receiver decodes the data, the gesture recognition system compares it with previously stored data, and the voice section indicates through the speaker whether the data match.
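The voltage-divider readout described for Verma et al. [7] can be sketched numerically. The Python below is a host-side model rather than firmware (on an Arduino or PIC the same arithmetic would run in C/C++), and every value in it is a hypothetical placeholder: a 5 V supply used as the ADC reference, a 47 kΩ fixed resistor, a 10-bit ADC, and a flex sensor reading 25 kΩ straight and 100 kΩ at a 90° bend with a linear response in between. The flex sensor is assumed to form the lower leg of the divider, so V_out = V_cc · R_flex / (R_flex + R_fixed); solving that equation for R_flex recovers the resistance from an ADC count.

```python
# Hypothetical circuit values: substitute measured ones for a real glove.
VCC = 5.0           # supply voltage, also used as the ADC reference (volts)
R_FIXED = 47_000.0  # fixed divider resistor (ohms)
ADC_MAX = 1023      # full-scale count of a 10-bit ADC
R_FLAT = 25_000.0   # flex sensor resistance when straight (ohms), assumed
R_BENT = 100_000.0  # flex sensor resistance at a 90-degree bend (ohms), assumed


def divider_voltage(r_flex: float) -> float:
    """Voltage across the flex sensor, wired as the lower leg of the divider."""
    return VCC * r_flex / (r_flex + R_FIXED)


def to_adc(voltage: float) -> int:
    """Quantize a voltage into a 10-bit ADC count."""
    return round(voltage / VCC * ADC_MAX)


def flex_resistance(adc_count: int) -> float:
    """Invert the divider equation to recover the sensor resistance."""
    if not 0 <= adc_count < ADC_MAX:
        raise ValueError("count out of range or saturated at full scale")
    v = adc_count / ADC_MAX * VCC
    return R_FIXED * v / (VCC - v)


def bend_angle(r_flex: float) -> float:
    """Map resistance linearly onto 0-90 degrees, clamped at both ends."""
    fraction = (r_flex - R_FLAT) / (R_BENT - R_FLAT)
    return max(0.0, min(90.0, 90.0 * fraction))


count = to_adc(divider_voltage(62_500.0))   # sensor bent about halfway
print(count, round(bend_angle(flex_resistance(count)), 1))
```

Real flex sensors are not perfectly linear, so a per-user calibration table may fit better than the two-point line assumed here.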

Purushottam Kar et al. [8] introduced INGIT, a system for translating Hindi text into Sign Language, designed specifically for railway inquiries. The system used Fluid Construction Grammar (FCG) to model Hindi grammar, and it reduced user input to a streamlined semantic structure by removing unnecessary words through ellipsis resolution.

A sign language (SL) generator module then produced an appropriate SL-tag structure based on the sentence type, followed by a graphical simulation through a HamNoSys converter. The system achieved a success rate of approximately 60% in generating semantic structures. In contrast, Ali et al. [9] developed a domain-specific system that takes English input, transforms it into SL text, and then translates that text into SL symbols.

A recent study conducted by researchers at the University of Toronto in 2019 explored using sign gloves in a classroom setting. The researchers found that sign gloves could be an effective tool for improving communication between deaf and mute students and their hearing peers. Specifically, the sign gloves allowed deaf and mute students to participate more fully in classroom discussions, resulting in improved academic performance and social integration [7].

III. METHODOLOGY

This section presents the methodology used to develop and evaluate the sign language translation system. The system integrates hardware and software components that capture sign language gestures and process them into text output (as shown in Figure 5).

A. System Architecture

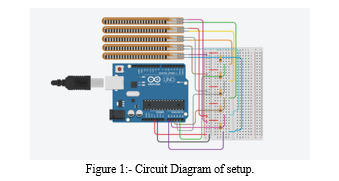

The system comprises a microcontroller, flex sensors, a Bluetooth module, an accelerometer and gyroscope, and an LCD. Each component is integral to the system's functionality.

B. Hardware Configuration

- Microcontroller: Acts as the central processing unit. It is programmed to read inputs from the flex sensors, accelerometer, and gyroscope, process these inputs to recognize gestures, and control the output shown on the LCD.

- Flex Sensors: Attached to a glove, these sensors detect the degree of bending of the fingers. The resistance of each sensor varies with the bending angle, which is read by the microcontroller for gesture analysis.

- Bluetooth Module: Enables wireless communication between the Arduino and a mobile application, facilitating user interaction and system configuration.

- Accelerometer and Gyroscope: Provide data on hand orientation and movement, complementing the flex sensor data for a more complete gesture analysis.

- LCD Display: Used for displaying the recognized text from the gestures.
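To make the hardware list concrete, the sketch below models one combined sensor reading and derives hand orientation from the accelerometer. It is a host-side Python illustration, not the authors' firmware: the `SensorFrame` layout, the axis convention, and the use of accelerometer-only tilt are assumptions. The pitch/roll formulas hold only when the hand is roughly still, so that the accelerometer measures gravity alone; yaw cannot be recovered this way, and fusing the gyroscope (for example with a complementary filter) would be needed during fast motion.

```python
import math
from dataclasses import dataclass


@dataclass
class SensorFrame:
    """One glove reading; the field layout is hypothetical."""
    flex: tuple[float, ...]              # normalized bend per finger, thumb first
    accel: tuple[float, float, float]    # acceleration along x, y, z (in g)
    gyro: tuple[float, float, float]     # angular rate about x, y, z (deg/s), unused here

    def tilt(self) -> tuple[float, float]:
        """Static pitch and roll (degrees) computed from the gravity vector alone."""
        ax, ay, az = self.accel
        roll = math.degrees(math.atan2(ay, az))
        pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
        return pitch, roll


frame = SensorFrame(flex=(0.9, 0.1, 0.1, 0.1, 0.1),
                    accel=(0.0, 0.0, 1.0), gyro=(0.0, 0.0, 0.0))
print(frame.tilt())   # hand lying flat: pitch and roll both zero
```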

C. Software Development

Arduino Programming: The Arduino is programmed using its native IDE. The code integrates algorithms for data acquisition from sensors, gesture recognition using a predefined library of sign language gestures, and control of output devices.
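The acquire, recognize, and output stages described above can be organized as a single loop. The Python sketch below models that loop on a host machine (the glove itself would run the equivalent in an Arduino `loop()`), with a hypothetical `recognize` callable standing in for the gesture library. The `StableOutput` gate, which only forwards a label after it appears in several consecutive frames and will not repeat it until the hand returns to rest, is an added assumption intended to stop the LCD from flickering during transitions between signs.

```python
from collections import deque
from typing import Callable, Iterable, Optional, TypeVar

Frame = TypeVar("Frame")


class StableOutput:
    """Pass a label on only after it is seen in `hold` consecutive frames."""

    def __init__(self, hold: int = 3):
        self.recent = deque(maxlen=hold)
        self.last_shown: Optional[str] = None

    def update(self, label: Optional[str]) -> Optional[str]:
        self.recent.append(label)
        if label is None:
            self.last_shown = None     # hand at rest: allow the same sign again
            return None
        stable = (len(self.recent) == self.recent.maxlen
                  and all(x == label for x in self.recent))
        if stable and label != self.last_shown:
            self.last_shown = label
            return label
        return None


def run(frames: Iterable[Frame],
        recognize: Callable[[Frame], Optional[str]],
        hold: int = 3) -> list[str]:
    """Acquire -> recognize -> output loop; returns what would reach the LCD."""
    gate = StableOutput(hold)
    shown = []
    for frame in frames:                 # acquisition (sensor reads on the glove)
        label = recognize(frame)         # gesture recognition
        out = gate.update(label)
        if out is not None:
            shown.append(out)            # output: LCD / speaker on the glove
    return shown


# Frames are already labels here, so recognition is the identity function.
print(run(["A", "A", "A", "A", None, "A", "A", "A"], recognize=lambda f: f))
```

A larger `hold` trades responsiveness for fewer spurious outputs; the right value depends on the sampling rate, which the text does not specify.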

Mobile Application: Developed to allow users to customize gesture databases and settings via a user-friendly interface. The app communicates with the Arduino through the Bluetooth module.
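The text does not specify the message format exchanged between the app and the Arduino. One simple possibility, sketched below under that assumption, is line-based text commands sent over the Bluetooth serial link: `SET <gesture-id> <phrase>` assigns a phrase to a gesture, `DEL <gesture-id>` removes it, and `LIST` reports the current table. The command names, gesture IDs, and reply strings are all hypothetical, and the Python models only the glove-side parsing logic.

```python
def handle_command(line: str, phrases: dict[str, str]) -> str:
    """Apply one app command to the glove's phrase table and return a reply."""
    parts = line.strip().split(" ", 2)
    cmd = parts[0].upper()
    if cmd == "SET" and len(parts) == 3:      # SET <gesture-id> <phrase>
        phrases[parts[1]] = parts[2]
        return "OK"
    if cmd == "DEL" and len(parts) == 2:      # DEL <gesture-id>
        if parts[1] in phrases:
            del phrases[parts[1]]
            return "OK"
        return "ERR unknown gesture"
    if cmd == "LIST" and len(parts) == 1:     # LIST
        return ";".join(f"{g}={p}" for g, p in sorted(phrases.items()))
    return "ERR bad command"


table: dict[str, str] = {}
for msg in ["SET G1 I need water", "SET G2 Thank you", "DEL G2", "LIST"]:
    print(msg, "->", handle_command(msg, table))
```

Splitting at most twice keeps spaces inside the phrase intact, so multi-word phrases need no escaping.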

D. Data Collection and Processing

Data Collection: Data from the flex sensors, accelerometer, and gyroscope are captured continuously while a user performs sign language gestures.

Signal Processing: The Arduino processes the sensor data to identify specific gesture patterns. This process involves noise filtering, normalization, and a comparison with stored gesture templates.
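The noise-filtering and normalization steps can be sketched as follows. The text names the steps but not the filters, so the choices here are assumptions: a moving average over the last few samples per channel, and a per-user two-point calibration that maps each finger's "straight" and "fully bent" readings onto 0.0 and 1.0. The calibration numbers are hypothetical, and the Python is a host-side model of logic that would run on the Arduino.

```python
from collections import deque


class MovingAverage:
    """Noise filter for one sensor channel: mean of the last `size` samples."""

    def __init__(self, size: int = 5):
        self.window = deque(maxlen=size)

    def update(self, sample: float) -> float:
        self.window.append(sample)
        return sum(self.window) / len(self.window)


def normalize(raw: float, straight: float, bent: float) -> float:
    """Scale a reading to 0.0 (straight) .. 1.0 (fully bent) via per-user calibration."""
    span = bent - straight
    if span == 0:
        raise ValueError("calibration readings must differ")
    return min(1.0, max(0.0, (raw - straight) / span))


# Hypothetical calibration: this user's index finger reads 400 straight, 800 bent.
smoother = MovingAverage(size=3)
for raw in [598, 612, 590, 640]:
    print(round(normalize(smoother.update(raw), straight=400, bent=800), 3))
```

Normalizing per user lets the same gesture templates serve hands of different sizes, since only the calibration readings change.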

E. Gesture Recognition Algorithm

The recognition algorithm employs a combination of threshold-based analysis for flex sensor data and pattern recognition for accelerometer and gyroscope data. The algorithm matches input patterns against a database of known gestures and determines the best match based on predefined criteria.
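A minimal two-stage matcher in the spirit of this description is sketched below; it is an illustration, not the authors' algorithm. Stage 1 thresholds each normalized flex reading into bent or straight; stage 2 picks, among templates with the same finger pattern, the one whose reference hand orientation is closest. The threshold (0.5), the 30° rejection limit, the `Template` fields, and the gesture database are hypothetical. Comparing a single static pitch/roll pair is a simplification: matching motion gestures would require comparing whole accelerometer and gyroscope sequences.

```python
import math
from typing import NamedTuple, Optional, Sequence

BEND_THRESHOLD = 0.5   # normalized flex value at which a finger counts as bent (assumed)
MAX_TILT_ERROR = 30.0  # degrees; templates at least this far away are rejected (assumed)


class Template(NamedTuple):
    label: str
    fingers: tuple[int, ...]   # 1 = bent, 0 = straight, thumb first
    pitch: float               # reference hand pitch (degrees)
    roll: float                # reference hand roll (degrees)


def recognize(flex: Sequence[float], pitch: float, roll: float,
              templates: Sequence[Template]) -> Optional[str]:
    # Stage 1 (threshold): reduce each finger to bent / straight.
    pattern = tuple(1 if f >= BEND_THRESHOLD else 0 for f in flex)
    best_label, best_err = None, MAX_TILT_ERROR
    for t in templates:
        if t.fingers != pattern:
            continue
        # Stage 2 (pattern match): the closest reference orientation wins.
        err = math.hypot(pitch - t.pitch, roll - t.roll)
        if err < best_err:
            best_label, best_err = t.label, err
    return best_label


# Hypothetical gesture database: the same handshape at two orientations.
DATABASE = [
    Template("HELLO", (1, 0, 0, 0, 0), 0.0, 0.0),
    Template("WATER", (1, 0, 0, 0, 0), 0.0, 90.0),
    Template("YES",   (1, 1, 1, 1, 1), 0.0, 0.0),
]
print(recognize([0.9, 0.1, 0.2, 0.1, 0.0], pitch=5.0, roll=85.0, templates=DATABASE))
```

Returning `None` when nothing is close enough lets the output stage ignore transitional hand shapes instead of forcing a wrong match.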

F. Output Generation

Upon recognizing a gesture, the corresponding text is displayed on the LCD.
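The LCD size is not stated; assuming a common 16x2 character display, the recognized phrase has to be word-wrapped and padded before it is written. The host-side Python sketch below shows that formatting step (the 16x2 geometry and the choice to drop overflow rather than scroll are assumptions). On an Arduino, each padded row would then be written with the LiquidCrystal library's `setCursor()` and `print()` calls.

```python
import textwrap

LCD_COLS, LCD_ROWS = 16, 2   # assumed 16x2 character LCD


def lcd_lines(text: str) -> list[str]:
    """Word-wrap text onto the LCD rows; overflow beyond the last row is dropped."""
    rows = textwrap.wrap(text, width=LCD_COLS)[:LCD_ROWS]
    rows += [""] * (LCD_ROWS - len(rows))            # blank any unused rows
    return [row.ljust(LCD_COLS) for row in rows]     # pad so old characters are overwritten


for row in lcd_lines("I need water please"):
    print(f"|{row}|")
```

Padding every row to the full width avoids leftover characters from a longer previous phrase without clearing the whole display.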

G. Testing and Validation

The system is tested under various conditions to assess its accuracy and reliability. This includes tests with multiple users and in different environments. User feedback is incorporated to refine the gesture recognition algorithms.
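Testing across users and environments is easier to act on when accuracy is broken down per group, so weak spots (one user's hand size, one lighting or movement condition) stand out. The sketch below assumes a hypothetical trial log in which each record stores the user, the environment, the expected gesture, and the recognized gesture (`None` when nothing was recognized); the field names and data are illustrative only.

```python
from collections import defaultdict


def accuracy_by(trials: list[dict], key: str) -> dict[str, float]:
    """Share of trials whose predicted label matches the expected one, per group."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for trial in trials:
        group = trial[key]
        totals[group] += 1
        hits[group] += trial["predicted"] == trial["expected"]
    return {group: hits[group] / totals[group] for group in totals}


# Hypothetical trial log (None = no gesture recognized).
LOG = [
    {"user": "u1", "env": "indoor",  "expected": "HELLO", "predicted": "HELLO"},
    {"user": "u1", "env": "outdoor", "expected": "WATER", "predicted": None},
    {"user": "u2", "env": "indoor",  "expected": "YES",   "predicted": "YES"},
    {"user": "u2", "env": "indoor",  "expected": "HELLO", "predicted": "WATER"},
]
print(accuracy_by(LOG, "user"), accuracy_by(LOG, "env"))
```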

IV. RESULTS AND DISCUSSIONS

In our initial experimental phase, we successfully connected the sensors to the microcontroller. This milestone lays the foundation for the Sign Language Glove and sets the stage for subsequent testing and refinement. In the upcoming phases, we will develop the software, databases, and mobile application and integrate them with the hardware (as shown in Figure 4). This work aims to refine the system and improve its gesture recognition and real-time translation capabilities. Beyond its technical implications, the completed project has the potential to help integrate deaf and mute individuals into society, and we remain committed to seeing it through.

Out of the 200+ experiments, 132 were successful: these trials yielded the desired result, confirming the effectiveness of our protocol, for a success rate of approximately 66%. In 30 experiments we observed slight variations, that is, minor deviations from the expected outcome; these were within an acceptable range and did not significantly affect the overall validity of our findings. The remaining experiments failed to achieve the desired result. Failures accounted for less than 34% of the total trials.
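These percentages can be cross-checked with simple arithmetic. The sketch assumes exactly 200 trials; the text reports "200+", and any larger total would lower every rate. Under that assumption, 132/200 gives the stated 66% success rate, 30/200 gives 15% slight variations, and the remaining 38 trials give a 19% failure rate, so the 34% figure corresponds to slight variations and failures combined rather than to outright failures alone.

```python
def outcome_rates(total: int, success: int, variation: int) -> dict[str, float]:
    """Split trial counts into success / slight-variation / failure fractions."""
    failure = total - success - variation      # trials not otherwise classified
    if failure < 0:
        raise ValueError("counts exceed the total")
    return {"success": success / total,
            "variation": variation / total,
            "failure": failure / total}


# TOTAL = 200 is an assumption: the text reports "200+" trials.
for outcome, rate in outcome_rates(200, 132, 30).items():
    print(f"{outcome:>9}: {rate:.0%}")
```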

V. FUTURE SCOPE

This section outlines the potential future developmental trajectories for the Sign Language Glove, aimed at enhancing its applicability and usefulness. The forthcoming research and development will concentrate on expanding the linguistic capabilities, improving technological integration, and fostering broader societal integration.

A. Linguistic Expansion

The initial version of the Sign Language Glove has demonstrated potential in recognizing basic gestures from a single sign language. Future iterations will expand the gesture database to include more comprehensive vocabularies from various sign languages, such as American Sign Language (ASL), British Sign Language (BSL), and others. We also plan to partner with linguists and sign language experts to ensure accuracy and cultural relevance in gesture recognition.

B. Technological Advancements

To boost the glove's performance and enhance the user experience, several technological advancements are proposed. Firstly, integrating AI and machine learning would allow the glove to analyze user interactions and refine its gesture recognition accuracy over time. Additionally, developing and incorporating more sensitive and accurate flex sensors and motion detectors would minimize errors in gesture interpretation. Finally, an emphasis on ergonomic design would make the device more comfortable for extended periods, promoting everyday use and wider adoption.
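The text does not say which machine learning method would be used. One simple possibility, sketched below as an assumption, is an incremental nearest-centroid classifier: each gesture is represented by the mean of its feature vectors (for example, normalized flex readings), and every gesture the user confirms in the app nudges that mean toward how this particular user actually signs. The class name, features, and data are hypothetical; a deployed system might use a stronger model.

```python
from typing import Optional, Sequence


class AdaptiveCentroids:
    """Nearest-centroid classifier that refines each gesture's centroid with confirmed samples."""

    def __init__(self) -> None:
        self.centroids: dict[str, list[float]] = {}
        self.counts: dict[str, int] = {}

    def learn(self, label: str, features: Sequence[float]) -> None:
        """Fold one user-confirmed sample into the running mean for its label."""
        if label not in self.centroids:
            self.centroids[label] = [float(x) for x in features]
            self.counts[label] = 1
            return
        self.counts[label] += 1
        n = self.counts[label]
        centroid = self.centroids[label]
        for i, x in enumerate(features):
            centroid[i] += (x - centroid[i]) / n   # incremental mean update

    def predict(self, features: Sequence[float]) -> Optional[str]:
        """Return the label whose centroid is nearest (squared Euclidean distance)."""
        best, best_dist = None, float("inf")
        for label, centroid in self.centroids.items():
            dist = sum((x - c) ** 2 for x, c in zip(features, centroid))
            if dist < best_dist:
                best, best_dist = label, dist
        return best


model = AdaptiveCentroids()
model.learn("HELLO", [1.0, 0.0, 0.0, 0.0, 0.0])   # hypothetical normalized flex values
model.learn("YES", [1.0, 1.0, 1.0, 1.0, 1.0])
print(model.predict([0.9, 0.2, 0.1, 0.0, 0.1]))
```

The incremental mean needs only one stored vector and one counter per gesture, which keeps memory use small enough for a microcontroller.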

C. Application and Integration

To ensure the glove's usefulness extends beyond individual users and creates broader community benefits, several key initiatives are proposed. Firstly, developing educational programs utilizing the glove could promote inclusivity by teaching sign language. Secondly, there is significant medical potential; collaboration with healthcare providers would enable the glove to become an invaluable communication aid for patients who rely on sign language, enhancing patient-provider interactions. Finally, partnerships with assistive technology firms will help expand the glove's reach through wider distribution and integration with existing solutions.

Conclusion

In conclusion, our work on the Sign Language Glove is a step forward for assistive technologies for individuals with hearing and speech impairments. The project's approach, which combines sensor-based gesture capture with a user-friendly smartphone app, holds promise for improving communication for people with hearing and speech impairments. As we progress, we remain committed to addressing open challenges, optimizing gesture recognition, and building a comprehensive communication tool. The Sign Language Glove reflects our dedication to enhancing the lives of deaf and mute people, fostering inclusivity, and breaking down communication barriers in society.

References

[1] Abhay, L. Dhawal, S. Harshal, A. Praful, P. Parmeshwar, “Smart Glove For Disabled People,” International Research Journal of Engineering and Technology, 201

[2] Albert Mayan J, Dr. B. Bharathi, Challapalli Vishal, Chidipothu Vishu Vardhan Subramanyam, “Smart Gloves For Hand Gesture Recognition using Sign Language To Speech Conversion System,” International Journal of Pure and Applied Mathematics, 201

[3] Pooja S Bhore, Neha D Londhe, Sonal Narsale, Prachi S Patel, Diksha Thanambir, “Smart Gloves for Deaf and Dumb Students,” International Journal of Progressive Research in Science and Engineering, 2020

[4] Adarsh Ghimire, Bhupendra Kadayat, Aakriti Basnet, Anushma Shreshta, “Smart Glove-The Voice of Dumb,” IEEE, 2019

[5] Vismaya A P, Sariga B P, Keerthana Sudheesh P C, Manjusha T S, “Smart Glove Using Arduino With Sign Language Recognition System,” International Journal of Advance Research and Innovative Idea in Education, 202

[6] Meenakshi Panwar and Pawan Singh Mehra, “Hand Gesture Recognition for Human Computer Interaction,” in Proc. IEEE International Conference on Image Information Processing, Waknaghat, India, November 2011

[7] Pallavi Verma, Shimi S. L, Richa Priyadarshani, “Design of Communication Interpreter for Deaf and Dumb Person,” International Journal of Science and Research (IJSR), January 2013

[8] Rao, R. R., Nagesh, A., Prasad, K., and Babu, K. E., “Text-Dependent Speaker Recognition System for Indian Languages,” International Journal of Computer Science and Network Security, Vol. 7, No. 11, 2007

[9] T. Starner, “Visual Recognition of American Sign Language Using Hidden Markov Models,” Master's thesis, MIT Media Laboratory, Feb. 199

[10] Uma Gautam, Md Asgar, Rajeev Ranjan, Narayan Chandan, “A Review on Sign Gloves for Dumb and Deaf Peoples Using ESP32,” International Journal of Engineering Applied Sciences and Technology, Vol. 8, pp. 303-308, 2023, doi:10.33564/IJEAST.2023.v08i02.046

Copyright

Copyright © 2024 Ketan Tajne, Swastik Jha, Swaraj Patil, Swayam Kanoje, Mangesh Tale, Abhjeet Takale, Rahul Waikar. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET63154

Publish Date : 2024-06-06

ISSN : 2321-9653

Publisher Name : IJRASET
