Ijraset Journal For Research in Applied Science and Engineering Technology

Detection and Classification of Brain Tumor using Deep Learning Techniques

Authors: Sanjay Gandhi Gundabatini, Teki Lakshmi Lahari, Tejaswi Kusam, Perecharla Divya Sai, Ponugubati Manasa

DOI Link: https://doi.org/10.22214/ijraset.2024.59343

Abstract

Brain tumors are abnormal growths of cells in the brain that disrupt normal brain function and can be fatal. Locating tumor-affected regions in brain scans can be time-consuming, yet early detection and appropriate treatment are critical for saving lives. Conventional image-processing methods for identifying brain tumors are limited in both accuracy and processing speed. In this study, we propose using EfficientNet, a state-of-the-art convolutional neural network (CNN) architecture, to improve the accuracy and efficiency of brain tumor detection. Our approach includes preprocessing steps to prepare the input data and data augmentation to enlarge the dataset, improving the CNN model's generalization and robustness. EfficientNet improves on earlier CNN architectures by using a compound coefficient to scale network depth, width, and input image resolution uniformly with a fixed ratio. The proposed model achieved 99.22% accuracy during training, 98.05% during validation, and 98.44% during testing.

I. INTRODUCTION

The brain, a vital organ that controls the body's functions, forms the central nervous system together with the spinal cord. When the mechanisms that regulate normal cell growth fail, cells in the brain can grow abnormally and form brain tumors. These tumors occupy space within the skull, disrupting normal brain function and exerting pressure on surrounding tissue. This pressure can displace and compress brain tissue, potentially damaging healthy nerves. The World Health Organization has identified approximately 120 types of brain tumors, categorized by cell of origin and behavior, ranging from less aggressive benign tumors to more aggressive malignant tumors. Benign tumors are non-cancerous growths, whereas malignant tumors are cancerous.

At present, the integration of information technology (IT) and e-healthcare methods in medicine enables healthcare providers to deliver high-quality services to patients. Brain tumors pose a significant threat because abnormal cell proliferation disrupts normal brain activity and can have life-threatening consequences. Brain tumors are a common form of cancer in humans and are closely associated with mortality. Timely detection is therefore imperative and plays a crucial role in reducing mortality rates.

Accurate diagnosis and staging of brain tumors are crucial steps in both prevention and treatment protocols. Magnetic Resonance Imaging (MRI) is a primary diagnostic tool used by medical professionals for brain tumor analysis. In this study, deep learning techniques are used to analyze MRI scans and distinguish normal brain tissue from tumor-affected regions. Although other imaging modalities, such as ultrasound, Single-Photon Emission Computed Tomography (SPECT), Computed Tomography (CT), X-ray, and Positron Emission Tomography (PET), are available for brain tumor detection, MRI stands out for its superior soft-tissue contrast and clarity when imaging malignant tissue. As a result, MRI remains the preferred method for identifying brain tumors, and analyzing MRI scans is a modern approach to detecting brain tumors at an early stage.

Researchers are exploring fully automated CNN models that can classify brain tumors quickly and precisely. Nonetheless, achieving very high accuracy remains an ongoing challenge in brain image classification because of inherent ambiguities in the data. Transfer learning addresses part of this problem: it reuses the learned weights of a model previously trained, typically on a different dataset, for another application. In this research, for instance, we used weights from a model trained on the 1000-class ImageNet dataset. This approach accelerates training convergence and reduces computational and data requirements.

This paper proposes using a pre-trained Convolutional Neural Network (CNN), EfficientNet-B3, to accurately diagnose and classify brain tumors from MRI images.

II. RELATED WORKS

Detecting tumors in MRI brain images is a complex and formidable task within the medical field. Researchers are actively engaged in this area, striving to develop optimal methodologies and approaches for accurately identifying tumors.

Avsar and Salcin [1] (2019) built on MRI, a valuable diagnostic tool for identifying brain tumors in humans, to develop a system that detects tumor regions and classifies them into three categories: meningioma, glioma, and pituitary tumor. To do so, they employed deep learning (DL), a powerful method for image classification; specifically, they used the Faster Region-based Convolutional Neural Network (Faster R-CNN) architecture. The system was implemented with TensorFlow, a popular library for building and training deep learning models. For training and testing, they used a publicly available dataset of 3064 MRI brain images from 233 patients, comprising 708 meningioma, 1426 glioma, and 930 pituitary tumor images. The system achieved an accuracy of 91.66%, outperforming other systems evaluated on the same dataset.

Pashaei et al. [2] built a convolutional neural network to detect meningioma, glioma, and pituitary tumors. The model had four convolutional layers, four pooling layers, four batch normalization layers, and one fully connected layer. It was trained for ten epochs (16 iterations each) with a learning rate of 0.01. Performance was evaluated on Cheng's dataset using tenfold cross-validation, with 70% of the data allocated for training and 30% for testing. Their approach outperformed other methods such as MLP, stacking, XGBoost, SVM, and RBF, achieving 93.68% accuracy in tumor classification.

Rayachoti et al. [3] introduced EU-Net, a novel CNN-based model with a modified skip connection for medical image segmentation (MIS). They used bilateral filtering for preprocessing and a pyramid edge extraction module to capture edge information from medical images. The Ebola Optimization Algorithm (EOA) was used to optimize the network parameters, effectively reducing the loss. The model was trained on five separate datasets and achieved an overall accuracy of 97.33%, an RMSE of 1.36, and a Dice coefficient of 94.97%.

Machine learning approaches analyze medical images through a sequence of steps: feature extraction, feature selection, and classification. Techniques such as thresholding-based, clustering-based, contour-based, and texture-based methods are used to identify tumor regions within the skull in MRI images [4]. These techniques extract characteristics from the MRI data, and the most relevant ones are then chosen through feature selection. Selecting discriminative features plays a crucial role in achieving high detection accuracy [5]. However, important information in the original images can be lost during feature extraction.

In contrast, deep learning (DL) methods avoid this issue by taking the original images directly as input [6], eliminating the need for handcrafted features. Convolutional Neural Networks (CNNs), a type of DL model, automatically extract features from images [7] through a stack of convolutional layers. CNNs perform best when ample training data is available, which can be difficult to obtain in medical imaging. Transfer learning [8] is one way to circumvent this data scarcity: a model pre-trained on a large dataset from a related domain is adapted to a medical image classification task, benefiting from the knowledge gained from the original dataset [9].

Based on the literature survey above, we formulated a model with the following objectives:

- Accurately diagnose brain tumors.

- Classify three tumor types: glioma, meningioma, and pituitary.

- Use pre-trained models to minimize resource usage and mitigate the impact of a small dataset.

III. PROPOSED METHODOLOGY

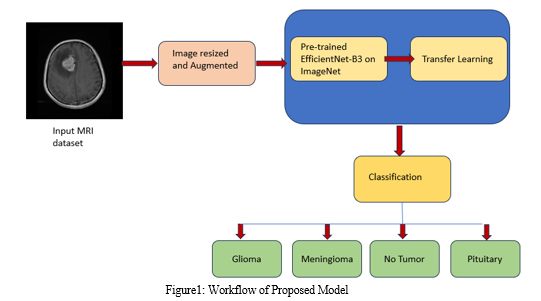

The proposed pipeline begins by augmenting the training dataset to increase its diversity and robustness. The model then uses the pre-trained convolutional layers of the EfficientNetB3 architecture to extract features from the MRI brain images. On top of these features, a custom sequential classifier is built with batch normalization, dense layers with regularization, and dropout to reduce the risk of overfitting. The model is compiled with the Adamax optimizer and the categorical cross-entropy loss function, trained, and finally saved for future use, forming a complete pipeline for brain tumor classification. Figure 1 shows the classification pipeline.

A. Dataset Acquisition

Magnetic resonance imaging (MRI) scanning:

- MRI is the preferred imaging method for studying brain tumors due to its high resolution and ability to visualize pathological and physiological changes in live tissues without using ionizing radiation.

- It generates high-resolution 3D images, which facilitate the localization of complex tumors and provide structural and functional data in the same scan.

- MRI offers detailed views of the brain's internal structure, clearly visualizing soft-tissue contrast and yielding higher-quality brain images than other imaging techniques.

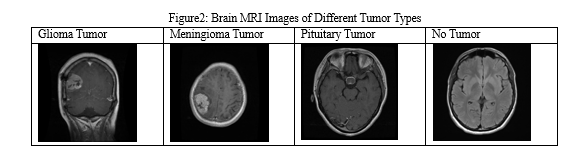

For this study, we obtained brain MRI images from the Brain Tumor MRI Dataset on Kaggle, which consists of two main folders: Training and Testing. Each folder contains four classes: glioma, meningioma, no tumor, and pituitary. Meningioma, usually a benign tumor, originates in the meninges, the protective layers surrounding the brain and spinal cord. It is generally slow-growing and is often treated successfully with surgery. Glioma, a malignant brain tumor, arises from glial cells, which support neurons. Gliomas vary in aggressiveness and are graded accordingly; treatment includes surgery, radiation, and chemotherapy. Pituitary tumors are growths within the pituitary gland that are usually benign (malignant ones are rare); they can affect hormone production and are categorized by cell type. The "no tumor" class contains scans with no abnormal mass. The Training folder contains 5712 original images, which were augmented to 5712 × 5 = 28,560 images for training. The dataset (156 MB) is publicly available on Kaggle at https://www.kaggle.com/datasets/masoudnickparvar/brain-tumor-mri-dataset. Figure 2 shows sample brain MRI images of each class: meningioma, pituitary tumor, glioma, and a normal scan with no tumor.
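The folder layout described above (a Training and a Testing folder, each with one sub-folder per class) can be turned into a list of (image path, label) pairs with a few lines of standard-library Python. This is a minimal sketch, not the authors' loader: the helper name `index_split` and the accepted file extensions are assumptions, and class indices simply follow the alphabetical order of the sub-folder names.

```python
import os

# Assumption: images are stored as JPEG or PNG files.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")

def index_split(split_dir):
    """Return (paths, labels, class_names) for one split folder such as Training/.

    Hypothetical helper: assumes one sub-folder per class and assigns class
    indices in sorted order of the sub-folder names.
    """
    class_names = sorted(
        d for d in os.listdir(split_dir)
        if os.path.isdir(os.path.join(split_dir, d))
    )
    paths, labels = [], []
    for label, name in enumerate(class_names):
        class_dir = os.path.join(split_dir, name)
        for fname in sorted(os.listdir(class_dir)):
            if fname.lower().endswith(IMAGE_EXTENSIONS):  # skip non-image files
                paths.append(os.path.join(class_dir, fname))
                labels.append(label)
    return paths, labels, class_names
```

For example, `paths, labels, classes = index_split("brain-tumor-mri-dataset/Training")` (the path is hypothetical and depends on where the archive is extracted) would list the training images, and `collections.Counter(labels)` would give the per-class image counts.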

B. Data Augmentation

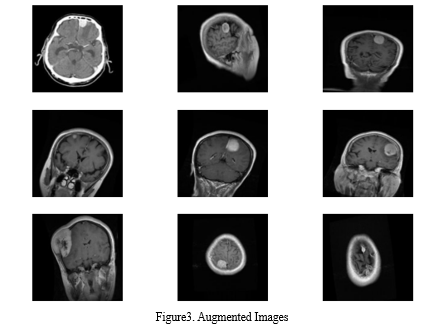

Because the dataset is limited in size, we used data augmentation to expand it and improve the classification model's accuracy. Random transformations were applied to generate diverse copies of the original images that vary in scale, orientation, and other characteristics. Specifically, the augmentation strategies included rotation, horizontal and vertical shifts, scaling, shearing, brightness adjustment, and horizontal and vertical flipping.

The augmentation process involved iterating through each image in the dataset, loading it using OpenCV, resizing it to a specified size, and reshaping it to the required format. Augmented images were then generated using the defined augmentation parameters, producing five additional variations for each original image. This augmentation strategy aids in improving the model's robustness and ability to generalize to unseen data by exposing it to a broader range of image variations during training. Figure 3 displays the brain MRI images post-augmentation.
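The augmentation loop described above can be sketched in pure Python on a 2D grayscale image stored as a list of rows. This is an illustrative sketch only: it covers flips, shifts, and brightness changes but omits rotation, scaling, and shearing, and the transformation ranges (`max_shift` and the 0.8 to 1.2 brightness factor) are assumptions, since the section does not list the exact augmentation parameters.

```python
import random

def hflip(img):
    """Mirror a 2D image (list of rows) left to right."""
    return [row[::-1] for row in img]

def vflip(img):
    """Mirror a 2D image top to bottom."""
    return [list(row) for row in img[::-1]]

def shift(img, dx, dy, fill=0):
    """Translate by dx columns and dy rows; uncovered pixels get `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out

def adjust_brightness(img, factor, max_value=255):
    """Scale intensities by `factor`, clipping to [0, max_value]."""
    return [[min(max_value, max(0, round(p * factor))) for p in row] for row in img]

def augment(img, n_variants=5, max_shift=2, rng=None):
    """Return `n_variants` randomly transformed copies of `img` (assumed ranges)."""
    rng = rng or random.Random()
    variants = []
    for _ in range(n_variants):
        out = img
        if rng.random() < 0.5:
            out = hflip(out)
        if rng.random() < 0.5:
            out = vflip(out)
        out = shift(out, rng.randint(-max_shift, max_shift),
                    rng.randint(-max_shift, max_shift))
        out = adjust_brightness(out, rng.uniform(0.8, 1.2))
        variants.append(out)
    return variants
```

Calling `augment(img)` once per original image yields five variants each, mirroring the "five additional variations" described above; passing a seeded `random.Random` makes the output reproducible.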

C. CNN Architecture

Convolutional Neural Networks (CNNs) are a class of deep learning models designed for image tasks such as classification. During training, a CNN learns the weights and biases of filters that respond to visual patterns in the input images, and these learned responses are used to classify the images. A key strength of CNNs is their ability to condense images into a compact representation that is easier to process while retaining the features needed for accurate predictions.

Several components of a CNN play key roles in feature extraction and classification. Convolutional layers extract both low-level and high-level attributes from the input images: the first convolutional layer captures low-level attributes such as edges, while subsequent layers build on these to capture higher-level features, allowing the network to model increasingly complex patterns in the data.
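To make the convolution step concrete, the following sketch computes a single-channel "valid" convolution with stride 1 and no padding. Like CNN layers, it actually computes cross-correlation (the kernel is not flipped). The 2 × 2 kernel is a hand-picked vertical-edge detector chosen for illustration; in a trained CNN the kernel values are learned.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (lists of rows), stride 1, no padding.

    Computes cross-correlation, as CNN layers do (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A dark region (0) next to a bright region (9): a vertical edge between columns 1 and 2.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Responds where intensity increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)  # [[0, 18, 0], [0, 18, 0]]
```

The strong response appears only in the column where the edge lies, which is the kind of low-level attribute the first layer extracts; deeper layers apply further convolutions to such feature maps.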

Pooling layers reduce the computational burden by downsampling the extracted feature maps. This dimensionality reduction shrinks the spatial size of the features, improving computational efficiency while retaining the strongest responses in each local region.
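A minimal sketch of 2 × 2 max pooling with stride 2, the most common pooling configuration (the section does not state which pooling variant is meant, so this choice is an assumption). Each output value keeps the largest response in its window, so a 4 × 4 map shrinks to 2 × 2.

```python
def max_pool2d(fmap, size=2, stride=2):
    """Max pooling over size x size windows; partial windows at the edges are dropped."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [
        [
            max(fmap[i * stride + a][j * stride + b]
                for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

fmap = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 4, 6],
]
pooled = max_pool2d(fmap)
print(pooled)  # [[4, 5], [3, 7]]
```

Sixteen values become four, a 4× reduction in the data passed to the next layer.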

After the convolutional and pooling layers, the resulting feature maps are flattened and passed to a fully connected (FC) layer. During training, all of these layers are optimized jointly, so the network learns to discern the patterns that distinguish the classes and to classify images accurately.

The fully connected (FC) layers combine the features extracted by the preceding layers and map them to the output classes. Dropout is used to improve generalization, and a softmax activation in the output layer converts the final scores into class probabilities for multi-class classification. By learning how the extracted patterns relate to each class, the FC layers play a central role in the network's classification accuracy.
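The flatten, fully connected, and softmax path described above, with dropout in between, can be sketched in plain Python for the four classes of the dataset. This is a forward-pass sketch under stated assumptions: the weights are a hypothetical identity matrix rather than learned values, and dropout is shown in its common "inverted" form, which is active only during training.

```python
import math
import random

def flatten(fmap):
    """Turn a 2D feature map into a 1D feature vector."""
    return [v for row in fmap for v in row]

def dense(x, weights, bias):
    """Fully connected layer: output k = dot(weights[k], x) + bias[k]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def dropout(x, rate, rng, training=True):
    """Inverted dropout: drop each unit with probability `rate` and rescale
    survivors by 1 / (1 - rate); identity at inference time."""
    if not training or rate == 0.0:
        return list(x)
    keep = 1.0 - rate
    return [xi / keep if rng.random() < keep else 0.0 for xi in x]

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

CLASSES = ["glioma", "meningioma", "no tumor", "pituitary"]
features = flatten([[0.5, 1.0], [0.0, 2.0]])
# Hypothetical weights: an identity mapping, so logit k equals feature k.
weights = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
bias = [0.0] * 4
hidden = dropout(features, rate=0.3, rng=random.Random(0), training=False)
probs = softmax(dense(hidden, weights, bias))
prediction = CLASSES[max(range(len(probs)), key=probs.__getitem__)]
print(prediction)  # pituitary
```

Because dropout rescales surviving units during training, no correction is needed at inference time, which is why the call above with `training=False` simply passes the features through.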

In essence, CNNs use a sophisticated architecture that includes convolutional and pooling layers, as well as FC layers, to extract, analyze, and classify features from input images. This hierarchical approach to feature extraction and classification demonstrates CNNs' efficiency in a variety of domains, including computer vision and medical imaging.

D. Transfer Learning

Transfer learning is a key machine learning technique for scenarios in which training a model on a large dataset is impractical. Convolutional neural networks (CNNs) typically perform much better when trained on extensive datasets, but in real-world settings such datasets are not always available. Transfer learning is useful in exactly these cases.

The concept of transfer learning involves leveraging a pre-trained model, initially trained on a large, diverse dataset such as ImageNet, which contains a vast array of labelled images across numerous categories. These pre-trained models have already learned to recognize a wide range of patterns and features within images. Instead of starting from scratch, transfer learning enables us to utilize the knowledge gained by these pre-trained models as a foundation for solving a different task or working with a smaller, more specialized dataset.

By utilizing transfer learning, we can repurpose the pre-trained model as a feature extractor for our specific task or dataset. This approach allows us to leverage the learned representations from the pre-trained model, which captures general features and patterns, and adapt them to our target domain. In the context of medical imaging, such as MR images dataset, transfer learning proves to be invaluable. It enables us to efficiently utilize the knowledge learned from vast datasets like ImageNet to improve the performance of our model on smaller medical imaging datasets.
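The feature-extractor idea described above can be illustrated with a deliberately tiny, pure-Python sketch: a frozen "backbone" produces features once, and only a small classifier head on top of it is trained. Everything here is an assumption for illustration: the backbone is a hypothetical stand-in (it just returns mean and maximum intensity) rather than an ImageNet-trained network, the head is a binary logistic regression instead of the paper's multi-class dense layers, and the toy images and labels are invented.

```python
import math

def pretrained_backbone(image):
    """Hypothetical stand-in for a frozen pre-trained feature extractor.

    It is never updated; it summarizes an image (a flat list of intensities
    in [0, 1]) as [mean intensity, max intensity].
    """
    return [sum(image) / len(image), max(image)]

def train_head(features, labels, lr=0.5, epochs=500):
    """Train only a logistic-regression head on top of fixed features (SGD)."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            err = p - y  # gradient of the log loss with respect to z
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(w, b, x):
    """Return 1 if the head's score is positive, else 0."""
    return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)

images = [[0.1, 0.2, 0.1, 0.0], [0.0, 0.1, 0.2, 0.1],
          [0.8, 0.9, 0.7, 1.0], [0.9, 0.8, 1.0, 0.9]]
labels = [0, 0, 1, 1]
# Features are computed once; the backbone itself is never trained.
features = [pretrained_backbone(img) for img in images]
w, b = train_head(features, labels)
print([predict(w, b, f) for f in features])  # [0, 0, 1, 1]
```

The design point is the split of responsibilities: the reused model supplies general-purpose features, and only the small task-specific head needs training data, which is why the approach suits small medical datasets.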

Transfer learning has found widespread application across various fields, including X-ray baggage security screening, lung pattern analysis, and medical image classification. By leveraging transfer learning, we can enhance the efficiency and effectiveness of our models while providing a more generalized approach to tackling new challenges. This approach not only saves computational resources and training time but also facilitates the development of robust and accurate models for diverse tasks and datasets.

E. EfficientNet Architecture

EfficientNet, introduced in 2019, brought a principled approach to scaling CNN architectures. Whereas conventional models such as VGG-16, ResNet50, and Inception V3 are typically scaled up along a single dimension, EfficientNet uses a compound coefficient to scale network width, depth, and input resolution together in a fixed ratio.

This scaling technique improves accuracy and efficiency together. The baseline network, EfficientNet-B0, was obtained through neural architecture search using the AutoML MNAS framework, and the compound scaling method is applied on top of it. This method significantly improves both the accuracy and the efficiency (measured in FLOPs) of the architecture. EfficientNet is built from mobile inverted bottleneck convolution (MBConv) blocks, and applying compound scaling to the baseline yields a family of models from EfficientNet-B1 to EfficientNet-B7.
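The compound scaling rule can be written out explicitly. In the original EfficientNet formulation, a single coefficient φ scales depth by α^φ, width by β^φ, and input resolution by γ^φ, subject to α · β² · γ² ≈ 2, so that FLOPs grow roughly as 2^φ; the constants α = 1.2, β = 1.1, γ = 1.15 were found by a small grid search on the B0 baseline. The sketch below simply evaluates these multipliers. Treat the outputs as approximate: the released B1 to B7 configurations use rounded, hand-adjusted coefficients (for instance, EfficientNet-B3's default input size is 300 × 300 rather than the value this formula gives).

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # constants reported for the B0 baseline
BASE_RESOLUTION = 224                # EfficientNet-B0 input size

def compound_scaling(phi, alpha=ALPHA, beta=BETA, gamma=GAMMA):
    """Return (depth, width, resolution, flops) multipliers for coefficient phi.

    FLOPs of a conv net grow roughly linearly with depth and quadratically
    with width and resolution, hence (alpha * beta**2 * gamma**2) ** phi.
    """
    depth = alpha ** phi
    width = beta ** phi
    resolution = gamma ** phi
    flops = (alpha * beta ** 2 * gamma ** 2) ** phi
    return depth, width, resolution, flops

for phi in range(4):
    d, w, r, f = compound_scaling(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution ~{round(BASE_RESOLUTION * r)} px, FLOPs x{f:.2f}")
```

For φ = 1 the FLOPs multiplier is 1.2 × 1.1² × 1.15² ≈ 1.92, which is how the constraint keeps each unit of φ close to a doubling of compute.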

In our study, EfficientNet-B3 was selected for brain tumor detection on the MR image dataset. After preprocessing and image augmentation, EfficientNet-B3 was initialized with weights pre-trained on ImageNet to improve training. Preprocessing was needed because the two-dimensional MRI brain images vary in size: all images were resized to a uniform 224 × 224 × 3 so that every input has the fixed shape the network expects. This standardization keeps image dimensions consistent across the dataset, allowing the model to learn relevant features from the MRI data effectively and contributing to robust tumor detection.
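A minimal sketch of this size standardization, assuming each MRI slice arrives as a 2D grayscale array of arbitrary size. The section specifies only the 224 × 224 × 3 target shape; two details here are assumptions: nearest-neighbour interpolation is used for brevity (OpenCV's `cv2.resize` defaults to bilinear interpolation), and the single grayscale channel is repeated three times to match the three-channel input that ImageNet-pre-trained weights expect.

```python
def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of a 2D image (list of rows)."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]

def gray_to_rgb(img):
    """Repeat one grayscale channel three times: shape (H, W) -> (H, W, 3)."""
    return [[[p, p, p] for p in row] for row in img]

def preprocess(img, size=224):
    """Resize a 2D grayscale slice of any size to size x size x 3."""
    return gray_to_rgb(resize_nearest(img, size, size))

small = [[0, 255], [128, 64]]            # a 2 x 2 "scan"
wide = [[10] * 300 for _ in range(180)]  # a 180 x 300 "scan"
for scan in (small, wide):
    out = preprocess(scan)
    print(len(out), len(out[0]), len(out[0][0]))  # 224 224 3
```

Whatever the original size, every image ends up with the same shape, so images can be stacked into batches for the network.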

The model architecture consists of an EfficientNet-B3 base followed by batch normalization, dense layers with regularization, dropout, and an output layer with softmax activation for multi-class classification. A loss function (also called an error function), here categorical cross-entropy, measures the network's prediction error from the softmax output, and the Adamax optimizer adapts the learning rate of each parameter to improve training and convergence.
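The two training components named here can be sketched in plain Python: categorical cross-entropy, which scores the softmax output against one-hot labels, and the Adamax update, which gives every parameter its own effective step size. This is an illustrative re-implementation of the published Adamax rule, not the Keras code the authors used; the hyperparameter values are assumptions, since the section does not report them, and the quadratic toy objective only shows the optimizer moving downhill.

```python
import math

def categorical_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean of -sum_k y_k * log(p_k) over a batch of one-hot labels and softmax outputs."""
    total = 0.0
    for t_row, p_row in zip(y_true, y_pred):
        # Clip probabilities so log() never sees 0.
        total -= sum(t * math.log(min(max(p, eps), 1.0 - eps))
                     for t, p in zip(t_row, p_row))
    return total / len(y_true)

class Adamax:
    """Adamax update rule (Kingma and Ba) for a flat list of parameters."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # exponential moving average of gradients
        self.u = None  # exponentially weighted infinity norm of gradients
        self.t = 0

    def step(self, params, grads):
        if self.m is None:
            self.m = [0.0] * len(params)
            self.u = [0.0] * len(params)
        self.t += 1
        lr_t = self.lr / (1.0 - self.beta1 ** self.t)  # bias correction for m
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1.0 - self.beta1) * g
            self.u[i] = max(self.beta2 * self.u[i], abs(g))
            # Dividing by this parameter's own u[i] gives a per-parameter step size.
            new_params.append(p - lr_t * self.m[i] / (self.u[i] + self.eps))
        return new_params

loss = categorical_cross_entropy([[0, 1, 0, 0]], [[0.1, 0.7, 0.1, 0.1]])
print(round(loss, 4))  # 0.3567

# Minimize f(x) = x**2 (gradient 2x) starting from x = 1.0.
opt = Adamax(lr=0.01)
x = [1.0]
for _ in range(100):
    x = opt.step(x, [2.0 * x[0]])
print(x[0] < 1.0)  # True
```

Only the probability assigned to the true class contributes to the loss, so a confident correct prediction (p close to 1) drives the loss toward zero.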

IV. RESULTS AND DISCUSSIONS

Accuracy is a key metric for evaluating a machine learning model during training, validation, and testing. During training, the model learns from the training data and adjusts its parameters to minimize the difference between the predicted and actual outputs; training accuracy measures how well the model performs on this data. The model is also evaluated on a separate validation set, and validation accuracy measures how well it generalizes to new data. Finally, the model's performance is measured on a completely independent testing set, and the testing accuracy reflects its expected real-world performance.
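All three accuracies are the same metric computed on different data splits: the fraction of images whose predicted class (the arg-max of the softmax output) matches the true label. A minimal sketch, using invented probabilities purely for illustration rather than results from the paper:

```python
def argmax(values):
    """Index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the true class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Made-up softmax outputs for four samples (classes 0-3), not results from the paper.
probs = [
    [0.90, 0.05, 0.03, 0.02],
    [0.10, 0.80, 0.05, 0.05],
    [0.20, 0.50, 0.20, 0.10],
    [0.01, 0.01, 0.01, 0.97],
]
y_true = [0, 1, 2, 3]
y_pred = [argmax(p) for p in probs]
print(accuracy(y_true, y_pred))  # 0.75
```

The same function would be applied separately to the training, validation, and testing predictions to obtain the three reported figures.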

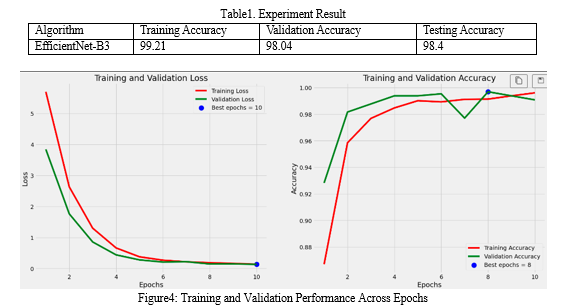

Fig. 4. Training and validation progress of the proposed model across epochs. The left subplot shows the loss over epochs, with the training loss in red and the validation loss in green; the blue dot marks the epoch with the lowest validation loss, where the model performs best in terms of loss. The right subplot shows the training accuracy in red and the validation accuracy in green; the blue dot marks the epoch with the highest validation accuracy, where the model reaches its peak accuracy on the validation set. Together, these curves show how performance evolves during training and allow both loss minimization and accuracy to be monitored.
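The blue markers in Figure 4 are simple arg-min and arg-max lookups over the per-epoch validation history. A minimal sketch with an invented five-epoch history (not the values plotted in the figure):

```python
def best_epochs(val_loss, val_acc):
    """Return the 1-based epochs with the lowest validation loss and the
    highest validation accuracy (the first such epoch on ties)."""
    best_loss_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1
    best_acc_epoch = max(range(len(val_acc)), key=val_acc.__getitem__) + 1
    return best_loss_epoch, best_acc_epoch

# Illustrative history, not the values plotted in Figure 4.
val_loss = [0.92, 0.55, 0.41, 0.44, 0.47]
val_acc = [0.70, 0.86, 0.91, 0.93, 0.92]
print(best_epochs(val_loss, val_acc))  # (3, 4)
```

With Keras, `model.fit` returns a `History` object whose `history` dictionary holds per-epoch lists such as `"val_loss"` and, when the model is compiled with `metrics=["accuracy"]`, `"val_accuracy"`; those lists could be passed in directly. As the example shows, the two criteria need not pick the same epoch.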

Conclusion

In conclusion, this study presents an approach to brain tumor detection that applies convolutional neural networks (CNNs) to MR images. Transfer learning (TL) with the pre-trained EfficientNet model allowed us to address the challenge of a limited sample size while avoiding manual model scaling during training. Our experiments show that the EfficientNet architecture outperforms existing CNN architectures in this domain, highlighting the value of scaling width, depth, and resolution together. The computational efficiency of EfficientNet also makes it feasible to deploy CNN-based models on resource-constrained platforms such as mobile phones, broadening access to brain tumor detection technology. Our findings further suggest that the larger EfficientNet-B7 model could improve performance by capturing richer and more complex features. In future work, the proposed framework could be extended to classify the stages of brain tumors, advancing diagnostic capabilities and ultimately improving patient outcomes.

References

[1] Avsar E, Salcin K. Detection and classification of brain tumors from MRI images using faster R-CNN. Tehnički Glasnik. 2019;13(4):337–342.

[2] Pashaei A, Sajedi H, Jazayeri N. Brain tumor classification via convolutional neural network and extreme learning machines. In: 2018 8th International Conference on Computer and Knowledge Engineering (ICCKE). IEEE; 2018 Oct 25. p. 314–319.

[3] Rayachoti E, Vedantham R, Gundabatini SG. EU-net: An automated CNN based ebola U-net model for efficient medical image segmentation. 2024 Feb.

[4] Shah FM, Hossain T, Ashraf M, Shishir FS, Al Nasim MA, Kabir MH. Brain tumor segmentation techniques on medical images: a review. 2019.

[5] Komura D, Ishikawa S. Machine learning methods for histopathological image analysis. Comput Struct Biotechnol J. 2018.

[6] Arif M, Ajesh F, Shamsudheen S, Geman O, Izdrui D, Vicoveanu D. Brain tumor detection and classification by MRI using biologically inspired orthogonal wavelet transform and deep learning techniques. J Healthcare Eng. 2022.

[7] Almadhoun HR, Abu-Naser SS. Detection of brain tumor using deep learning. Int J Acad Eng Res. 2022.

[8] Anjum S, Hussain L, Ali M, Alkinani MH, Aziz W, Gheller S, Abbasi AA, Marchal AR, Suresh H, Duong TQ. Detecting brain tumors using deep learning convolutional neural network with transfer learning approach. Int J Imag Syst Technol. 2022.

[9] Alanazi MF, Ali MU, Hussain SJ, Zafar A, Mohatram M, Irfan M, AlRuwaili R, Alruwaili M, Ali NH, Albarrak AM. Brain tumor/mass classification framework using magnetic-resonance-imaging-based isolated and developed transfer deep-learning model. Sensors. 2022.

Copyright

Copyright © 2024 Sanjay Gandhi Gundabatini, Teki Lakshmi Lahari, Tejaswi Kusam, Perecharla Divya Sai, Ponugubati Manasa. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59343

Publish Date : 2024-03-23

ISSN : 2321-9653

Publisher Name : IJRASET
