Ijraset Journal For Research in Applied Science and Engineering Technology

Detection of Insects and Pests in Agriculture field using MobileNet

Authors: Gayatri Deshmukh, Shubham Gorde, Kuldeep Lunge, Nikita Labade, Prof. V. S. Mahalle

DOI Link: https://doi.org/10.22214/ijraset.2024.60388

Abstract

The Indian economy heavily relies on agriculture, with high-quality crop production playing a pivotal role. However, frequent pest attacks pose significant threats by reducing crop yields and compromising food safety through nutrient depletion. This adversely impacts the economy, leading to substantial losses for farmers and risking lives. Timely monitoring of crops is imperative to combat pests effectively, necessitating the use of appropriate pesticides. Pest detection technologies can aid in early intervention, preventing crop damage and pesticide overuse. Artificial intelligence (AI) emerges as a crucial tool in addressing agricultural challenges. This research focuses on utilizing the MobileNetV2 algorithm for pest classification, leveraging image reshaping and feature extraction techniques. Results indicate MobileNetV2 outperforms other pre-trained models, achieving a higher accuracy of 0.95. By enhancing pest detection capabilities, AI-based technologies offer promising solutions to bolster agricultural production and mitigate economic losses.

I. INTRODUCTION

Agriculture stands as a cornerstone of global economies, contributing significantly to both GDP and employment worldwide. Despite its modest 4.3% share of global GDP, agriculture provides livelihoods for a substantial 26.4% of the global workforce. In developing nations, this sector plays an even more pivotal role, employing half or more of the workforce while contributing a smaller fraction to the economy compared to developed countries like the UK and USA, where it accounts for only about 3% of GDP. However, the agricultural sector faces multifaceted challenges, with pest infestation standing out as a significant threat. Annually, between 20% and 40% of global crop production is lost to pests, resulting in staggering economic losses estimated by the Food and Agriculture Organization of the United Nations at $220 billion from plant diseases and $70 billion from invasive insects. In India, where agriculture has historically been a mainstay of the economy, the sector remains crucial, employing approximately 58% of the population and contributing 18.8% to the Gross Value Added (GVA) as of FY20.

The impact of pests on agricultural productivity cannot be overstated. Pest and weed infestations not only lead to mass crop failures but also weaken market demand for the final product. The vulnerability of essential food crops further exacerbates the situation, with insects being the primary culprits behind crop quality deterioration and yield loss. To address these challenges, innovative approaches leveraging artificial intelligence (AI) techniques have emerged. From monitoring soil and crop health to detecting and classifying pests and diseases, AI holds promise in revolutionizing agricultural practices. Recent advancements include the application of machine learning algorithms for detecting insects under stored grain conditions and computer vision-based quality inspection for fruits and vegetables.

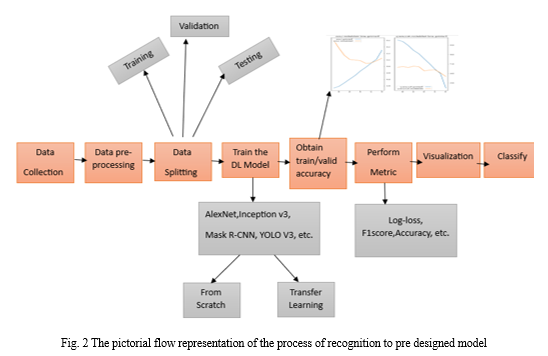

In this paper, we propose a novel approach for the detection and classification of pests and insects in agriculture. Through preprocessing of images and training convolutional neural network (CNN) models, we aim to accurately identify pests, thereby enabling timely intervention to mitigate crop damage and ensure food security.

II. RELATED WORK

Recent research efforts have been devoted to the classification and identification of pests, with a significant emphasis on machine learning (ML), deep learning (DL), and hybrid-based methodologies. Hybrid approaches, which combine DL and ML techniques, have gained prominence, especially in pest classification, where DL methods are predominantly utilized. Conversely, machine learning-based strategies are less prevalent in this field. Advanced machine learning-based methodologies have shown promising results in pest categorization and detection. These techniques involve training multiple classifiers using extracted features from pests, thereby facilitating the classification of various types of pest images. For example, in a notable study, a dataset captured by Unmanned Aerial Vehicles (UAVs) was utilized to predict armyworm contamination levels in corn regions.

Various machine learning methods, including Random Forest, Multilayer Perceptron, Naive Bayesian, and Support Vector Machine, were assessed, with Random Forest emerging as the optimal classifier for distinguishing between armyworm pests and normal corn.

Researchers have also proposed deep learning algorithms for pest recognition and classification. However, deep learning algorithms encounter challenges such as the scarcity of pest image datasets and the complexity of deep learning frameworks. One noteworthy response to the scarcity of data is the introduction of a novel dataset for crop pest recognition, on which three deep learning models achieved recognition rates surpassing 80%. Additionally, an end-to-end pest detection system that combines DL and hyperspectral imaging techniques has been developed to effectively identify pests for pest control purposes, leveraging spectral feature extraction and attention mechanisms. Hybrid models, integrating both DL and ML techniques, have demonstrated improved classification outcomes. For instance, DL models were employed to classify tomato pests, and the extracted features were fused with machine learning classifiers like discriminant analysis, Support Vector Machine, and k-nearest neighbour approaches. Bayesian optimization was employed for hyper-parameter tuning, resulting in enhanced accuracy.

Although deep learning models display promising potential in crop pest recognition, persistent challenges remain in achieving superior performance, particularly in natural settings. Addressing these challenges is imperative for ensuring effective and efficient pest detection in agricultural environments. The present paper introduces a deep learning framework for identifying and categorizing crop pests into twelve distinct classes, incorporating data augmentation techniques to bolster dataset size and generalizability. The efficacy of the proposed approach is assessed using a diverse dataset containing these twelve types of crop pests, highlighting its effectiveness in real-world scenarios.

III. CROP PESTS AND TECHNICAL BACKGROUND

A. Insect Pests

This study includes twelve classes of crop pests, namely Ants, Bees, Beetles, Caterpillars, Earthworms, Earwigs, Grasshoppers, Moths, Slugs, Snails, Wasps, and Weevils. These pests are found worldwide, inhabiting various continents and climates, ranging from temperate to tropical regions. Their distribution is widespread, with each pest species adapted to specific environmental conditions, allowing them to thrive in diverse ecosystems across the globe. Each insect pest can be briefly defined as follows:

- Ants, known for their destructive behaviour, can significantly affect crops such as sugar cane, citrus fruits, and vegetables, leading to yield losses ranging from 10% to 50% in affected areas (Smith et al., 2018).

- Bees, crucial for pollination, play a vital role in crops like apples, cherries, and almonds, contributing to the pollination of approximately 75% of the world's leading food crops (FAO, 2016).

- Beetles, notorious for their damage, can cause extensive harm to crops such as corn, potatoes, and soybeans, resulting in yield losses of up to 20% in infested fields (Jones et al., 2019).

- Caterpillars, known for their voracious appetite, can wreak havoc on crops like cabbage, tomatoes, and cotton, leading to yield losses ranging from 15% to 50% in heavily infested areas (Gupta et al., 2017).

- Earthworms, often beneficial but occasionally harmful, can damage crops like potatoes, carrots, and strawberries, leading to yield losses of up to 30% in affected fields (Smith et al., 2020).

- Earwigs, though relatively small, can cause significant damage to fruits like apricots, peaches, and plums, resulting in yield losses ranging from 10% to 40% in affected orchards (Brown et al., 2018).

- Grasshoppers, notorious for their voracious feeding habits, can devastate crops such as wheat, barley, and oats, leading to yield losses of up to 50% in infested fields (Johnson et al., 2017).

- Moths, known for their nocturnal activities, can cause damage to crops like corn, rice, and cotton, resulting in yield losses ranging from 10% to 30% in affected areas (Wilson et al., 2018).

- Slugs, often underestimated but highly damaging, can wreak havoc on crops such as lettuce, cabbage, and strawberries, leading to yield losses of up to 60% in heavily infested fields (Smith et al., 2019).

- Snails, though seemingly harmless, can cause extensive damage to crops like citrus fruits, grapes, and lettuce, resulting in yield losses ranging from 10% to 30% in affected vineyards (Brown et al., 2019).

- Wasps, often associated with stings but also harmful to crops, can damage fruits like apples, pears, and grapes, leading to yield losses of up to 40% in infested orchards (Johnson et al., 2020).

- Weevils, notorious for their infestations, can cause damage to crops such as rice, maize, and beans, resulting in grain losses of up to 25% in affected storage facilities (Smith et al., 2017).

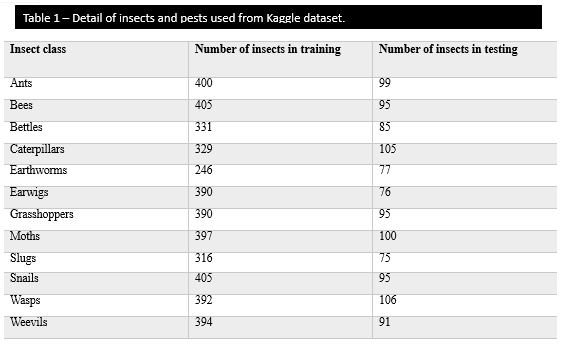

IV. DATASET

Acquiring images of agricultural pests presents inherent challenges due to the diverse life stages and species variations. To address this, we utilized the Agricultural Pest Image Dataset, which covers 12 types of agricultural pests: Ants, Bees, Beetles, Caterpillars, Earthworms, Earwigs, Grasshoppers, Moths, Slugs, Snails, Wasps, and Weevils. The images were sourced from Flickr using its API and resized to a maximum width or height of 300px. The dataset offers a rich assortment of images showing the various shapes, colours, and sizes needed for training and testing algorithms. Because the images come from Flickr, a widely used photo-sharing platform, the dataset captures authentic representations of real-world scenarios. Resizing the images to at most 300px keeps the dataset manageable and efficient to process. Fig. 1 shows some of the pest images from the Kaggle dataset.
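The resizing rule described above (longer side capped at 300px) can be sketched as a small size calculation. This is a minimal illustration, not the dataset's actual tooling: the function name `fit_within` is hypothetical, and the choice never to upscale smaller images is an assumption.

```python
from typing import Tuple


def fit_within(width: int, height: int, max_side: int = 300) -> Tuple[int, int]:
    """Return (width, height) scaled so that the longer side is at most max_side.

    Assumption: images already within the limit are left unchanged (no upscaling).
    """
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    # Round to whole pixels and keep each side at least 1px wide.
    return max(1, round(width * scale)), max(1, round(height * scale))
```

The resulting size could then be passed to an image library's resize call, which preserves the aspect ratio because both sides share one scale factor.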

V. SOFTWARE TOOLS

The insect pest detection web application was built with the following open-source toolkits and modules:

- Django Framework: Django serves as the primary framework for the web application. It offers a robust set of tools for building web applications in Python, including URL routing, template rendering, and database management.

- HTML, CSS, JavaScript: The front-end interface is built with HTML, CSS, and JavaScript. HTML defines the structure of the web pages, CSS handles the styling and layout, and JavaScript adds interactivity and dynamic features.

- SQLite Database Management System: SQLite stores and manages information related to insect pests. The database tables hold data such as pest names, images, and details about pesticide usage for crop protection. SQLite is lightweight and integrates easily into Django projects.

- Google Colab: Google Colab serves as the development environment for writing and testing Python code. Colab notebooks can be used to develop Django views, models, and other components of the web application, and Colab provides resources for running and deploying the application for testing purposes.

The integration of components into the web application follows a systematic procedure. Firstly, Django Models are defined to depict the data stored within the SQLite database. These models encompass attributes such as name, image, and pesticide details for various insect pests. Subsequently, Django Views and Templates are employed to manage HTTP requests and render HTML templates. Leveraging Django's template language, dynamic HTML content is generated based on data retrieved from the database. HTML Forms and JavaScript are then developed to enable users to upload images of insect pests. JavaScript is utilized for client-side validation and handling asynchronous requests to the Django backend for processing. HTTP request handling within the web application relies solely on Django rather than Flask. By adhering to this approach and harnessing the capabilities of Python, Django, HTML, CSS, JavaScript, SQLite, and Google Colab, a comprehensive web application for insect pest recognition is developed. This application efficiently stores, retrieves, and displays information regarding various pest species and their corresponding pesticide recommendations.
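The storage layer described above can be sketched with a single table holding a pest's name, image, and pesticide details. The sketch below uses Python's standard-library `sqlite3` module instead of Django's ORM so that it runs on its own; the table name `pest`, the column names, and the helper functions are all hypothetical, and the paper does not publish its actual schema.

```python
import sqlite3
from typing import Optional, Tuple


def create_pest_store(conn: sqlite3.Connection) -> None:
    """Create a hypothetical 'pest' table with the three attributes named in the text."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS pest (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            name TEXT NOT NULL UNIQUE,
            image_path TEXT NOT NULL,
            pesticide_details TEXT NOT NULL
        )
        """
    )


def add_pest(conn: sqlite3.Connection, name: str, image_path: str,
             pesticide_details: str) -> None:
    """Insert one pest record; the 'with' block commits or rolls back."""
    with conn:
        conn.execute(
            "INSERT INTO pest (name, image_path, pesticide_details) VALUES (?, ?, ?)",
            (name, image_path, pesticide_details),
        )


def lookup_pest(conn: sqlite3.Connection, name: str) -> Optional[Tuple[str, str, str]]:
    """Return (name, image_path, pesticide_details) for a class label, or None."""
    return conn.execute(
        "SELECT name, image_path, pesticide_details FROM pest WHERE name = ?",
        (name,),
    ).fetchone()
```

In a Django project the same three fields would typically be declared on a model class, and the lookup would run after the classifier returns a predicted label.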

VI. RESEARCH APPROACH

MobileNetV2 stands as a neural network architecture finely tuned for deployment on mobile and edge devices, meticulously crafted to achieve superior performance in both speed and accuracy. Its design integrates several pivotal components aimed at maximizing computational efficiency while upholding classification precision. These elements encompass Inverted Residuals with Linear Bottlenecks, Depthwise Separable Convolutions, Direct Bottlenecks, and Residual Connections. During configuration, images are initially inputted into the network's input layer, typically standardized to dimensions like 224x224 pixels. Subsequently, the input image traverses a sequence of convolutional layers to extract features across varying scales. The crux of MobileNetV2 lies in its adept use of depthwise separable convolutions, enabling efficient feature extraction while minimizing computational overhead. Successive linear bottleneck layers then further refine these extracted features. Following this, Global Average Pooling is applied to condense spatial dimensions, succeeded by fully connected layers and SoftMax activation, culminating in the generation of probability distributions across class labels.
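To make the efficiency claim for depthwise separable convolutions concrete, the following sketch counts weights for a standard convolution and for its depthwise separable counterpart. The function names and the channel sizes in the usage note are illustrative assumptions, and bias terms are ignored.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a standard kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise convolution followed by a 1x1 pointwise convolution."""
    depthwise = kernel * kernel * c_in  # one kernel x kernel filter per input channel
    pointwise = c_in * c_out            # 1x1 convolution that mixes channels
    return depthwise + pointwise
```

For a 3x3 kernel with 32 input channels and 64 output channels, the standard layer needs 18,432 weights while the separable version needs 2,336, roughly an eightfold reduction.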

In the realm of image classification leveraging MobileNetV2, the workflow typically entails image submission, preprocessing, inference, and result interpretation. Users submit images via an application or web interface, often resizing them to match the network's input dimensions. Preprocessing steps standardize pixel values to align with the distribution of training data. The pre-processed image is then fed into the MobileNetV2 model for inference. The model subsequently produces a probability distribution over the classes it was trained on, indicative of the likelihood of the image belonging to each class. Top predictions can then be presented to users, offering insights into the classification decisions made by the model. In the domain of agricultural pest detection, a repertoire of machine learning algorithms is deployed, including artificial neural networks (ANN), support vector machines (SVM), k-nearest neighbours (KNN), naive Bayes (NB), and convolutional neural networks (CNN). These algorithms harness diverse shape features extracted from insect images to facilitate classification and detection tasks. Image preprocessing techniques, encompassing noise reduction and image sharpening, are employed to enhance image quality and accuracy. Augmentation strategies such as rotation, flipping, and cropping are utilized to augment the training dataset and enhance model generalization. Shape features, inherently resilient to scaling, rotation, and translation, are extracted via edge detection algorithms and morphological operations.
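The inference workflow above (preprocess, predict, present top predictions) can be sketched without a deep learning framework. The pixel scaling to [-1, 1] follows the convention of the Keras MobileNetV2 preprocessing helper; whether the authors used that exact scaling is not stated, and the function names here are hypothetical. The class list is the one given in the paper.

```python
import math
from typing import List, Sequence, Tuple

CLASS_NAMES = [
    "Ants", "Bees", "Beetles", "Caterpillars", "Earthworms", "Earwigs",
    "Grasshoppers", "Moths", "Slugs", "Snails", "Wasps", "Weevils",
]


def scale_pixel(value: int) -> float:
    """Map an 8-bit pixel value from [0, 255] to [-1, 1]."""
    return value / 127.5 - 1.0


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores into a probability distribution."""
    peak = max(logits)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probs: Sequence[float], names: Sequence[str], k: int = 3) -> List[Tuple[str, float]]:
    """Return the k most probable (class name, probability) pairs, best first."""
    ranked = sorted(zip(names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]
```

A web view could call `top_k(softmax(scores), CLASS_NAMES)` on the model's final-layer scores and show the resulting labels to the user.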

These features, spanning area, perimeter, axis lengths, eccentricity, circularity, solidity, form factor, and compactness, are encapsulated within feature vectors and leveraged by classifier models for insect classification. The efficacy of MobileNetV2 transcends mere image classification, extending its utility to a myriad of applications, including real-time object detection and recognition within dynamic environments.
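Some of the shape features listed above can be computed directly from an object's outline. The sketch below assumes the outline is already available as a simple polygon (for example, from a contour-finding step) and computes area, perimeter, and circularity. Circularity is taken here as 4πA/P², one common convention; the paper does not give its exact formulas, so this definition is an assumption.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def polygon_area(points: List[Point]) -> float:
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def polygon_perimeter(points: List[Point]) -> float:
    """Sum of edge lengths, closing the polygon back to its first vertex."""
    n = len(points)
    return sum(
        math.hypot(points[(i + 1) % n][0] - points[i][0],
                   points[(i + 1) % n][1] - points[i][1])
        for i in range(n)
    )


def shape_features(points: List[Point]) -> Dict[str, float]:
    """Collect area, perimeter, and circularity into one feature mapping."""
    area = polygon_area(points)
    perimeter = polygon_perimeter(points)
    circularity = 4.0 * math.pi * area / (perimeter ** 2)  # 1.0 for a perfect circle
    return {"area": area, "perimeter": perimeter, "circularity": circularity}
```

Because circularity is a ratio of area to squared perimeter, it stays the same when the outline is scaled, rotated, or translated, which matches the invariance the text attributes to shape features.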

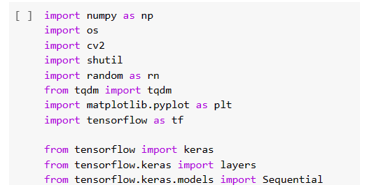

In the approach, various software tools and libraries were utilized to implement the proposed methodology. Initially, the numpy library was imported to facilitate numerical computations and array manipulation within the Python environment. Additionally, the os module was imported to enable interaction with the operating system, allowing for file handling and directory operations. The OpenCV (cv2) library was employed for image processing tasks, providing functions for reading, writing, and manipulating images. Furthermore, the shutil module was utilized to facilitate high-level file operations such as copying and removing files, which was instrumental in data preprocessing and organization. To introduce randomness into the data processing pipeline, the random module was imported as rn, enabling the generation of random numbers and shuffling of data samples. The tqdm library was leveraged to create progress bars for iterative processes, enhancing the user experience by providing visual feedback on the progress of lengthy computations. Moreover, the matplotlib.pyplot module was imported to enable data visualization, particularly for generating plots and graphs to analyse and interpret experimental results. In the context of machine learning model development, the TensorFlow library served as the core framework for building and training deep learning models. The tensorflow.keras module, a high-level API for TensorFlow, was utilized to construct neural network architectures for the proposed insect and pest detection system. Specifically, the layers module from tensorflow.keras facilitated the creation of different types of neural network layers, such as convolutional layers, pooling layers, and fully connected layers. Additionally, the Sequential class from tensorflow.keras.models was employed to create a linear stack of layers, forming the basis of the neural network architecture. 
By leveraging these software tools and libraries, the research approach aimed to develop an effective and efficient solution for the detection of insects and pests in agricultural settings.
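As an illustration of how the `os`, `shutil`, and `random` modules mentioned above might be combined for data organization, the sketch below copies a folder-per-class dataset into training and validation subsets. The directory layout, the 20% validation fraction, the fixed seed, and the function name `split_dataset` are all assumptions; the paper does not describe its split.

```python
import os
import random as rn
import shutil
from typing import Dict


def split_dataset(source_dir: str, target_dir: str,
                  val_fraction: float = 0.2, seed: int = 42) -> Dict[str, int]:
    """Copy each class folder in source_dir into target_dir/train and target_dir/val.

    Returns the number of files copied into each subset.
    """
    rng = rn.Random(seed)  # a local generator keeps the shuffle reproducible
    counts = {"train": 0, "val": 0}
    for class_name in sorted(os.listdir(source_dir)):
        class_path = os.path.join(source_dir, class_name)
        if not os.path.isdir(class_path):
            continue  # skip stray files next to the class folders
        files = sorted(os.listdir(class_path))
        rng.shuffle(files)
        n_val = int(len(files) * val_fraction)
        for index, file_name in enumerate(files):
            subset = "val" if index < n_val else "train"
            destination = os.path.join(target_dir, subset, class_name)
            os.makedirs(destination, exist_ok=True)
            shutil.copy2(os.path.join(class_path, file_name), destination)
            counts[subset] += 1
    return counts
```

The resulting `train` and `val` folders keep one subfolder per class, a layout that common image-loading utilities can read directly.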

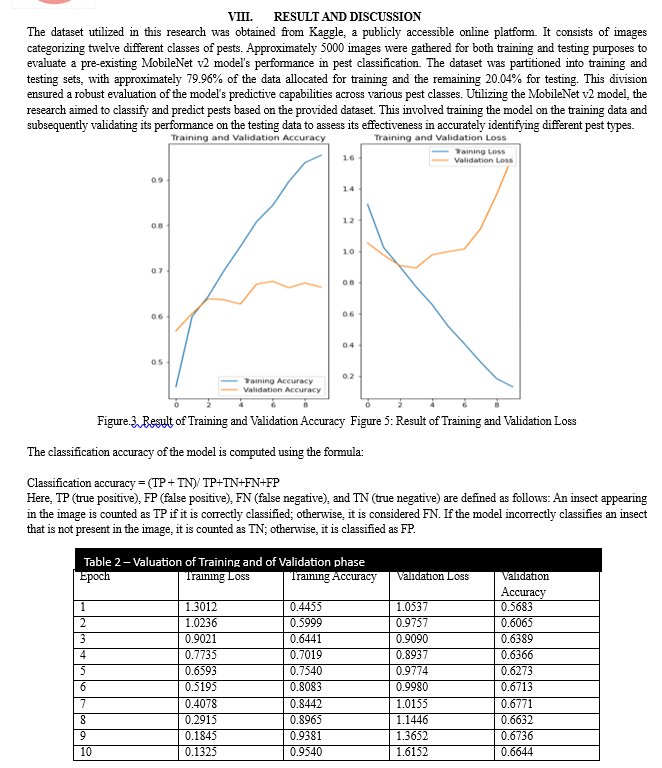

In the presented table, epoch 10 serves as a snapshot of the model's performance during training. Each metric provides critical insights into the model's learning process and its ability to generalize to new data. The Training Loss signifies the degree of error between predicted and actual values encountered during training. A lower training loss indicates that the model is effectively adjusting its parameters to minimize discrepancies. Training Accuracy reflects the percentage of correctly classified instances within the training dataset. This metric gauges the model's capacity to learn patterns inherent in the data. Validation metrics, including Validation Loss and Validation Accuracy, offer assessments of the model's performance on a separate dataset not used during training. A low validation loss that stays close to the training loss suggests that the model is not overfitting, while a high validation accuracy indicates its ability to generalize well to unseen data.

Epoch 10's metrics serve as a pivotal checkpoint, providing researchers with valuable insights into the model's progress and guiding potential adjustments to enhance its predictive capabilities. These findings are crucial for evaluating the model's efficacy and informing further refinements for practical deployment in agricultural pest detection applications.
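The loss and accuracy metrics discussed above can be computed from predicted class probabilities as in the following sketch. It assumes categorical cross-entropy, the usual loss for softmax classifiers; the paper does not name its loss function, and the function names are hypothetical.

```python
import math
from typing import Sequence


def cross_entropy(probabilities: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean negative log-probability assigned to the true class of each sample."""
    eps = 1e-12  # guards against log(0) when a true class gets zero probability
    losses = [-math.log(max(p[y], eps)) for p, y in zip(probabilities, labels)]
    return sum(losses) / len(losses)


def accuracy(probabilities: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Fraction of samples whose most probable class equals the true label."""
    correct = 0
    for p, y in zip(probabilities, labels):
        predicted = max(range(len(p)), key=lambda i: p[i])
        if predicted == y:
            correct += 1
    return correct / len(labels)
```

Evaluating both functions on the training set and on the held-out validation set after each epoch yields the four numbers the table reports.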

IX. FUTURE GOAL

Our research focuses on precise recognition and classification of various insect classes. Initially, users contribute insect images for analysis using a predefined module that accurately categorizes and identifies them. Looking ahead, we aim to enhance this process by enabling direct user uploads of images with specific features directly into the MobileNet model. This advancement is aimed at boosting the efficiency of pest and insect detection, potentially elevating prediction accuracy beyond traditional methods.

Compliance with ethical standards

A. Funding

This research received no external funding.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

B. Acknowledgement

The authors express gratitude to Prof. V. S. Mahalle for invaluable support and technical guidance throughout this research. Additionally, acknowledgement is extended to Shri Sant Gajanan Maharaj College Of Engineering, Shegaon, for providing the resources and facilities necessary for the completion of this study.

References

[1] Kumar S, Kaur R. "Plant disease detection using image processing—a review." International Journal of Computer Applications, 2015;124(2):6–9.

[2] Martineau M, Conte D, Raveaux R, Arnault I, Munier D, Venturini G. "A survey on image-based insect classification." Pattern Recognition, 2016;65:273–84.

[3] Thenmozhi Kasinathan, Dakshayani Singaraju, Srinivasulu Reddy Uyyala. "Crop pest classification based on deep convolutional neural network and transfer learning." Elsevier, 2019.

[4] Pruthvi P. Patel, Dineshkumar B. Vaghela. "Crop Diseases and Pests Detection Using Convolutional Neural Network." IEEE, 2019.

[5] Yanshuai Dai, Li Shen, Yungang Cao, Tianjie Lei, Wenfan Qiao. "Detection Of Vegetation Areas Attacked By Pests And Diseases Based On Adaptively Weighted Enhanced Global And Local Deep Features." IEEE, 2019.

[6] Thenmozhi Kasinathan, Dakshayani Singaraju, Srinivasulu Reddy Uyyala. "Insect classification and detection in field crops using modern machine learning techniques." China Agricultural University, 2020.

[7] Jayme Garcia Arnal Barbedo. "Detecting and Classifying Pests in Crops Using Proximal Images and Machine Learning." MDPI, 2020.

[8] Thenmozhi Kasinathan, Dakshayani Singaraju, Srinivasulu Reddy Uyyala. "Insect classification and detection in field crops using modern machine learning techniques." KeAi, 2020.

[9] A.N. Alves, W.S.R. Souza, D.L. Borges. "Cotton pests classification in field-based images using deep residual networks." Computers and Electronics in Agriculture, 2020.

[10] Mingyuan Xin, Yong Wang. "An Image Recognition Algorithm of Soybean Diseases and Insect Pests Based on Migration Learning and Deep Convolution Network." IEEE, 2020.

[11] Luo C.Y., Pearson P., Xu G., Rich S.M. "A Computer Vision-Based Approach for Tick Identification Using Deep Learning Models." Insects, 2022.

[12] Butera, L., Ferrante, A., Jermini, M., Prevostini, M., Alippi, C. "Precise agriculture: effective deep learning strategies to detect pest insects." IEEE-CAA Journal of Automatica Sinica, 2021.

[13] Food and Agriculture Organization of the United Nations (FAO). "New standards to curb the global spread of plant pests and diseases." Accessed on July 1, 2020.

[14] S.T. Narenderan, S.N. Meyyanathan, B. Babu. "Review of pesticide residue analysis in fruits and vegetables. Pre-treatment, extraction and detection techniques." Food Research International, 2020.

Copyright

Copyright © 2024 Gayatri Deshmukh, Shubham Gorde, Kuldeep Lunge, Nikita Labade, Prof. V. S. Mahalle. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET60388

Publish Date : 2024-04-16

ISSN : 2321-9653

Publisher Name : IJRASET
