Ijraset Journal For Research in Applied Science and Engineering Technology

Developing Rapport between Humans and Machines: Emotionally Intelligent AI Assistants

Authors: Raj Agrawal, Nakul Pandey

DOI Link: https://doi.org/10.22214/ijraset.2024.59015

Abstract

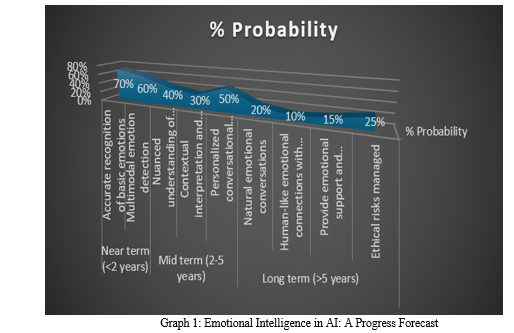

A new area of research with the potential to transform human-computer interaction is the incorporation of emotional intelligence into artificial intelligence (AI) systems. Through sentiment analysis, facial recognition, and voice tone analysis, AI assistants are now able to recognize and interpret human emotions, allowing them to respond to users with greater emotional nuance and empathy [1] [2]. This opens up new avenues for therapeutic mental health interventions, emotionally supportive dialogues, and improved customer service encounters [3]. Accurately identifying the full range of human emotions and their contextual meanings, however, still faces substantial technological challenges [4]. As this field develops, ethical issues concerning user privacy, deceptive messaging, and the intricate theory of emotional intelligence must also be addressed [5]. Experts estimate that, within the next ten years, ongoing research into multimodal emotion detection and AI frameworks for emotional intelligence may produce assistants that can develop deep emotional connections with their users.

I. INTRODUCTION

Recent years have seen enormous advances in artificial intelligence (AI), producing systems that can now defeat humans in strategy games such as Go and chess [6]. When it comes to emotional intelligence, however, AI is still largely unable to comprehend social cues and react empathetically [7]. This "affective gap" between humans and machines has a significant impact on the quality of interactions with AI assistants and services [8]. Recent research shows that sentiment analysis, facial recognition, and voice analysis are effective ways to give AI systems a measure of emotional intelligence [9]. With these techniques, AI can distinguish between different emotional signals across modalities, decipher the meaning of these signals, and produce responses that are suitable for social situations [10]. As a result, more organic, intuitive, and emotionally supportive interactions with AI agents have the potential to transform the field of human-computer interaction [11].

Accurately identifying and handling the entire gamut of human emotions, however, is still difficult. In addition, there are dangers associated with the moral use of emotionally intelligent AI if it is not properly controlled [12]. Within the next ten years, emotionally intelligent assistants may become commonplace with careful design and ongoing technological advancements [13]. To create more efficient and sympathetic human-computer interaction, this paper examines the state-of-the-art and unanswered issues in the development of AI systems with emotional perception.

A. Background on Emotional Intelligence and its Applications to AI

The capacity to identify, comprehend, and control one's own and other people's emotions is known as emotional intelligence (EI) [14]. While IQ is a measure of cognitive intelligence, emotional intelligence (EI) includes the perceptual and empathy skills necessary for productive social interactions. At the beginning of the twenty-first century, researchers in academia began to investigate the idea of incorporating emotional intelligence into artificial intelligence systems. To facilitate more natural human-computer interactions, early applications concentrated on identifying human emotion from sensory inputs, such as speech tones and facial expressions [15]. The goal of more sophisticated emotional intelligence integration is to simulate emotional reasoning within the AI system, making it easier to interpret social cues and produce emotionally suitable responses. Experts predict that in the next ten years, truly emotionally intelligent AI may be developed thanks to the exponential advancements in machine learning and neural networks that have occurred recently [16]. This has significant ramifications for improving communication and harmony between intelligent machines and people.

B. Thesis Statement on the Future of Emotionally Intelligent AI Assistants

Within the next ten years, artificially intelligent systems have the potential to reach previously unheard-of levels of emotional intelligence thanks to rapid advances in affective computing and emotion recognition technologies [17]. This change will enable the development of AI assistants that can sense, comprehend, and respond to human emotions with empathy and nuance at scale. By offering naturalistic emotional support and bridging the affective gap that has long existed between humans and machines, such emotionally intelligent agents have the potential to revolutionize a wide range of fields, including mental healthcare, education, and customer service [18] [19]. However, fully realizing the potential of empathetic AI requires overcoming difficult technological obstacles and taking proactive measures to address the risks of privacy violations, manipulation, and emotional labor [20]. With interdisciplinary research and ethical frameworks driving innovation, emotionally intelligent assistants may become indispensable partners, redefining human-computer interaction and improving human well-being.

II. CURRENT CAPABILITIES AND TECHNIQUES

Although they are getting better at identifying and addressing human emotions, modern AI systems are still not emotionally intelligent in their entirety. Based on lexical patterns, text sentiment analysis makes it possible to infer emotions from what people say or write [21]. From photos or videos, computer vision techniques can recognize the basic expressions of happiness, sadness, anger, and so on [22]. Vocal analysis uses auditory cues such as intonation, tempo, and pitch to identify the emotional state of the speaker [23]. By combining these modalities, multimodal approaches allow for more accurate identification of emotions. Major obstacles still need to be overcome in order to fully comprehend complex, nuanced, or mixed emotions, as well as the situations that give them meaning. Currently, AI that is aware of emotions falls short of human emotional intelligence. The field is gradually moving toward complex emotion recognition and suitable empathetic response capabilities, thanks to increased training datasets and deep learning advancements [24].

| Emotion Recognition Technique | Modality | Current Capabilities | Limitations |
| --- | --- | --- | --- |
| Sentiment Analysis | Text | Identify positive and negative emotions based on word patterns. Basic emotion classification of text. | Nuanced emotions are difficult to detect. Contextual meaning remains challenging. |
| Facial Recognition | Visual | Detect basic facial muscle movements indicating happiness, sadness, anger, etc. | Subtle expressions are hard to read. Context again limits accuracy. |
| Vocal Analysis | Auditory | Machine learning classifies tone, pitch, etc. to infer emotional states. | Sarcasm and layered emotions are not discernible. |
| Multimodal Approaches | Text, visual, and auditory | Combining modalities improves accuracy and nuance compared to individual techniques. | Still struggles with more complex emotions and social contexts. |

Table 1: Summary of Emotion Recognition Techniques and Capabilities in AI

A. Sentiment Analysis for Understanding User Emotions from Text

Sentiment analysis is a natural language processing technique that lets artificial intelligence (AI) systems infer emotional states from written text or transcribed speech. It works by identifying affective lexical patterns, that is, words and expressions that imply either a positive or a negative emotion. Sentiment analysis engines classify input text by polarity (positive or negative) and, in finer-grained systems, by emotion category such as happiness, sadness, or anger. Advances in deep learning are moving beyond simple emotion words, making it possible to interpret sentiment from word order, context, slang, sarcasm, and intricate syntactic patterns [25]. Given large labeled datasets covering a wide range of linguistic and cultural contexts, sentiment analysis has promising applications for evaluating users' mindsets and emotions from conversational text in an emotionally intelligent AI assistant. Nonetheless, considerable difficulties remain in picking up on nuanced emotions, conflicting sentiments, and context-specific meanings.
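As a rough illustration of the lexical-pattern approach described above, the following minimal Python sketch scores text against a small word list and flips the sign of a word that directly follows a negator. The lexicon entries, their scores, and the function names `polarity_score` and `classify` are assumptions made for this example only; they are not taken from the cited work, and production systems rely on far larger lexicons or trained models.

```python
import re

# Tiny illustrative lexicon; the words and scores are assumptions for this sketch.
LEXICON = {
    "happy": 1.0, "great": 1.0, "love": 1.0, "good": 0.5,
    "sad": -1.0, "angry": -1.0, "terrible": -1.0, "bad": -0.5,
}
NEGATORS = {"not", "never", "no"}


def polarity_score(text: str) -> float:
    """Sum lexicon scores, flipping the sign of a word that follows a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0.0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0.0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    return score


def classify(text: str) -> str:
    """Map the numeric score to a coarse polarity label."""
    score = polarity_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

A sarcastic input such as "Oh great, another delay" is labeled positive by this sketch because it contains the word "great", which illustrates why purely lexical methods struggle with sarcasm and context.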

B. Facial Recognition to Detect Emotional States

AI systems can deduce an individual's emotional state by analyzing their facial expressions and muscle movements, thanks to facial recognition techniques. Computer vision systems can identify the distinctive patterns of facial muscular activations associated with basic emotions such as happiness, sadness, anger, fear, and surprise [26]. Basic facial expressions can now be detected from photos and videos with remarkable accuracy thanks to deep learning neural networks [27]. More complex and nuanced emotions are communicated through more subtle micro-expressions, which are challenging to recognize because they usually depend on contextual cues [28]. Research is still being done to enhance the understanding of mixed emotions, contextual meanings, and cultural variations in facial expressions [29]. Facial analysis may play a significant role in multimodal emotion detection in socially intelligent artificial intelligence (AI) if there is enough training data that accurately represents the range and subtleties of human emotions. This has powerful uses, ranging from diagnosing mood disorders to gauging customer satisfaction. However, it is also important to proactively address ethical risks related to bias, manipulation, and privacy [30].
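The idea of matching patterns of facial muscle activation to basic emotions can be sketched as a simple rule-based lookup. The Python example below assumes that an upstream vision model has already produced a set of active Facial Action Coding System (FACS) action units; the prototype combinations listed are simplified versions of those commonly quoted in the FACS literature and are used here purely for illustration, as are the names `PROTOTYPES` and `match_emotion`. Deep learning systems such as those cited above learn these mappings from data rather than from a fixed table.

```python
# Simplified prototype combinations of FACS action units (AUs).
# These sets are an illustrative assumption, not a validated coding scheme.
PROTOTYPES = {
    "happiness": {6, 12},       # cheek raiser, lip corner puller
    "sadness": {1, 4, 15},      # inner brow raiser, brow lowerer, lip corner depressor
    "surprise": {1, 2, 5, 26},  # inner/outer brow raiser, upper lid raiser, jaw drop
    "anger": {4, 5, 7, 23},     # brow lowerer, upper lid raiser, lid tightener, lip tightener
}


def match_emotion(active_aus, min_overlap=1.0):
    """Return the emotion whose prototype is best covered by the active AUs.

    The score is the fraction of a prototype's AUs that are active. If no
    prototype reaches min_overlap, None is returned to signal uncertainty.
    """
    best_label, best_score = None, 0.0
    for label, prototype in PROTOTYPES.items():
        score = len(prototype & set(active_aus)) / len(prototype)
        if score > best_score:
            best_label, best_score = label, score
    return best_label if best_score >= min_overlap else None
```

With the default threshold, a partial pattern such as a lowered brow alone (`{4}`) yields `None`, which mirrors the difficulty of reading subtle or incomplete expressions without additional context.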

C. Voice Analysis to Identify Tones Indicating Different Emotions

A speaker's emotional state can be inferred from vocal cues such as pitch, tone, pacing, and intensity. Machine learning is used in voice analysis techniques to categorize emotions according to speech characteristics that can be heard. To map these cues to emotions, algorithms train predictive models using low-level features like volume, frequency, rhythm, etc. [31]. Deep neural networks have made it possible to learn end-to-end directly from raw audio input in a more seamless manner [32]. Voice analysis still has trouble interpreting sarcasm and subtle emotions that depend on the semantic context. When words are spoken in a cheerful or sardonic manner, they can imply very different emotions, which vocal patterns alone are unable to identify. To reach human-level emotional intelligence, multimodal emotion recognition techniques combining verbal, visual, and vocal analysis must advance. Voice-based emotion detection may allow AI assistants to understand the emotions and intentions of users during natural conversations, provided that there is enough training data and context-aware techniques are used. The privacy risks associated with ongoing voice monitoring must be reduced, though.
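To make the notion of low-level speech features concrete, the sketch below computes two of them from a list of audio samples using only the Python standard library: root-mean-square energy as a proxy for volume, and a zero-crossing-based frequency estimate as a crude stand-in for pitch. The function names and the synthetic sine-wave input are assumptions for illustration. The zero-crossing estimate is only reasonable for clean, single-tone signals, and practical systems typically use more robust pitch trackers and feed many such features into a trained classifier.

```python
import math


def rms_energy(samples):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_rate(samples):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a >= 0) != (b >= 0)
    )
    return crossings / (len(samples) - 1)


def estimate_frequency(samples, sample_rate):
    """Rough frequency estimate for a clean tone: two zero crossings per cycle."""
    return zero_crossing_rate(samples) * sample_rate / 2


def sine_wave(freq_hz, amplitude, sample_rate=8000, seconds=1.0):
    """Generate a synthetic tone to stand in for recorded speech."""
    n = int(sample_rate * seconds)
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * t / sample_rate)
        for t in range(n)
    ]


if __name__ == "__main__":
    tone = sine_wave(freq_hz=200, amplitude=0.5)
    print("RMS energy:", rms_energy(tone))
    print("Estimated frequency (Hz):", estimate_frequency(tone, 8000))
```

A louder or higher-pitched input changes these two numbers in the expected direction, but, as noted above, such features alone cannot tell a cheerful delivery from a sardonic one.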

D. How these Techniques Enable Empathetic Responses from AI

By combining modalities such as text, vision, and voice analysis, AI systems are now able to infer a user's affective state from emotional signals detected across multiple channels. This ability to perceive emotion allows an assistant to produce socially acceptable and sympathetic responses based on the recognized state. Facial and vocal analysis, for instance, can pick up on a frown and an angry tone, prompting the agent to respond calmly and supportively. When sentiment analysis reveals sadness or anxiety, a supportive and upbeat response may be given. Deep learning techniques now make it possible to personalize emotion recognition further, based on each person's particular style of emotional expression [33]. Context-aware frameworks also help decipher the meaning of emotions in order to choose the best course of action [34]. By generating adaptive, sympathetic responses, emotionally intelligent AI could show understanding and offer emotional support in trying times. More advances are necessary, however, before assistants can react correctly to complex, nuanced, or contradictory emotional cues.
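One simple way to combine modalities is late fusion, in which each modality produces its own probability distribution over emotions and the distributions are averaged with per-modality weights. The Python sketch below shows this under stated assumptions: the emotion set, the weights, the response strategies in `RESPONSES`, and the function names are all hypothetical and chosen for illustration, and the per-modality probabilities would in practice come from trained models.

```python
EMOTIONS = ("happiness", "sadness", "anger", "neutral")

# Hypothetical response strategies, keyed by the fused emotion label.
RESPONSES = {
    "happiness": "mirror the positive mood",
    "sadness": "offer supportive, encouraging words",
    "anger": "stay calm and acknowledge the frustration",
    "neutral": "continue the task normally",
}


def fuse(modality_probs, weights):
    """Weighted average of per-modality probability distributions (late fusion)."""
    total_weight = sum(weights[m] for m in modality_probs)
    fused = {}
    for emotion in EMOTIONS:
        weighted_sum = sum(
            weights[m] * probs.get(emotion, 0.0)
            for m, probs in modality_probs.items()
        )
        fused[emotion] = weighted_sum / total_weight
    return fused


def choose_response(modality_probs, weights):
    """Pick the most probable fused emotion and its response strategy."""
    fused = fuse(modality_probs, weights)
    label = max(fused, key=fused.get)
    return label, RESPONSES[label]


if __name__ == "__main__":
    # Made-up scores: the text looks positive, but face and voice suggest anger.
    observation = {
        "text": {"happiness": 0.6, "neutral": 0.3, "anger": 0.1},
        "face": {"anger": 0.7, "neutral": 0.2, "sadness": 0.1},
        "voice": {"anger": 0.6, "neutral": 0.3, "happiness": 0.1},
    }
    equal_weights = {"text": 1.0, "face": 1.0, "voice": 1.0}
    print(choose_response(observation, equal_weights))
```

In the made-up observation, the text channel alone would suggest happiness, while the fused estimate favors anger because the facial and vocal channels agree. This is the kind of cross-checking that motivates multimodal approaches.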

III. POTENTIAL APPLICATIONS AND BENEFITS

Because emotionally intelligent AI systems can have personalized interactions and naturalistic, empathetic conversations, they have a wide range of applications in various fields. Based on speech and facial analysis, they can be used as early screening instruments in mental health [35] to find indications of disorders. They can act as conversational facilitators and offer therapeutic sessions with a sympathetic listening presence [36].

Emotional intelligence in education enables adaptive tutoring systems to adjust explanations in response to students' engagement and frustration cues, thereby maintaining students' motivation [37]. By comprehending the viewpoints of irate customers, compassionate chatbots in customer service can defuse tense situations [38]. In elder care, emotionally intelligent assistants can offer company and also monitor well-being by observing changes in speech and activity patterns [39]. Emotionally intelligent personal assistants build trust by proactively offering assistance and reacting with compassion during trying times [40]. For sensitive applications such as healthcare, however, thorough testing and validation are essential before real-world deployment.

| Application | Benefits |
| --- | --- |
| Mental Healthcare | Provide empathetic conversations for therapy. Detect signs of depression or anxiety. Customize treatment. |
| Education | Adapt teaching methods to student engagement and enthusiasm. Modify the tone to keep students encouraged. |
| Customer Service | Recognize customer emotions and frustrations. Defuse tense situations with understanding responses. |
| Elder Care | Keep aging adults company through natural conversations. Monitor health via changes in speech and mood. |
| Personal Assistants | Build rapport and trust by responding helpfully to the user's emotions. Proactively offer support when stressed. |

Table 2: Potential Applications and Benefits of Emotionally Intelligent AI Assistants

A. Modifying User Engagement through Emotional Intelligence

By identifying and reacting to affective signals in human-computer interactions, emotionally intelligent AI systems have the potential to revolutionize conventional user engagement tactics. Empathetic assistants do not have to stick to prewritten scripts; instead, they can detect the motivations and emotions of their users in real-time and adjust their tone, explanations, and suggestions as needed [41]. For instance, expressions of perplexity on the face may elicit straightforward and supportive reactions. Boredom expressions have the potential to move the conversation towards more interesting subjects. Frustrated vocal tones may elicit apologies, de-escalation, and constructive troubleshooting. Rather than making users feel bored or alienated, personalized emotion-aware engagement strategies keep them positively invested in the interaction. Nevertheless, creating socially acceptable response models that consider contextual nuances is still a challenging task. Empathetic response generation runs the risk of major social faux pas that could negatively impact user perceptions if improperly trained [42].

B. Providing Empathetic Support in Mental Health Contexts

Naturalistic, sympathetic dialogues facilitated by emotionally intelligent AI have the potential to greatly increase access to mental health services. Using machine learning techniques, assistants can respond closely to the affective states of their users based on facial cues, word choice, and vocal tone [43]. The agent can use this emotional awareness to lead conversations compassionately, offer personalized encouragement, reflect on feelings, and suggest tailored coping mechanisms [44]. For instance, breathing exercises could be recommended if faster speech indicates a rise in anxiety. When sentiment analysis reveals depressive thoughts, the assistant can respond with thoughtful summaries and positive viewpoints. However, mental health applications require thorough validation to prevent risks such as the dismissal of severe symptoms [45]. Assistants lacking deep domain expertise should limit their role to preliminary screening and refer users to human professionals where appropriate. Sustained multidisciplinary research is essential to creating AI that strictly complies with ethical standards and enhances the capabilities of clinicians.
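The breathing-exercise example and the referral principle above can be expressed as a small decision rule. The Python sketch below is only an illustration under stated assumptions: the 25% rise threshold, the `risk_flag` input (standing in for whatever upstream screening signals severe symptoms), and the function names are hypothetical and have no clinical basis.

```python
def speech_rate_wpm(word_count, seconds):
    """Speaking rate in words per minute."""
    return word_count / seconds * 60


def suggest_action(baseline_wpm, recent_wpm, risk_flag, rise_threshold=1.25):
    """Choose a next step from speech rate and an upstream risk signal.

    rise_threshold is a hypothetical ratio (recent / baseline) above which
    the speaking rate is treated as a possible sign of rising anxiety.
    """
    # Severe indicators always take priority and are handed to a person.
    if risk_flag:
        return "refer to a human professional"
    if recent_wpm >= baseline_wpm * rise_threshold:
        return "suggest a breathing exercise"
    return "continue supportive conversation"


if __name__ == "__main__":
    baseline = speech_rate_wpm(word_count=140, seconds=60)
    recent = speech_rate_wpm(word_count=90, seconds=30)
    print(suggest_action(baseline, recent, risk_flag=False))
```

Placing the referral check first reflects the point made above that an assistant without deep domain expertise should defer to human professionals rather than attempt to handle severe cases itself.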

C. The Overall Increase in Human-Computer Interaction Quality

The incorporation of emotional intelligence into AI systems enables a paradigm shift in human-computer interaction by enabling more meaningful, intuitive, and natural interactions between humans and machines. Assistants who are skilled at recognizing and interpreting affective cues can facilitate emotionally intelligent dialogues in which participants model implicit social norms of support, encouragement, and empathy. Having a sincere understanding of subtle contextual cues reduces frustration from misunderstandings and promotes trust. In turn, users are more inclined to communicate honestly about their emotions, building better relationships with sympathetic agents [46]. Emotionally intelligent systems have the potential to develop a deep understanding of individual users over time, allowing them to tailor interactions to suit their unique motivations, moods, and preferences.

The humanization of digital experiences has the potential to improve user happiness, loyalty, and general health. However, anthropomorphism has to be controlled in UI design so that AI does not produce artificially high standards for emotional nuance. It is crucial to communicate the system's limitations and capabilities openly.

IV. FUTURE OUTLOOK

As conversational systems and multimodal emotion recognition continue to advance, experts anticipate major breakthroughs in empathetic AI within the next ten years. Personal assistants could regularly hold sympathetic, emotionally sustaining conversations and provide upbeat viewpoints when things get tough. AI nurses that comfort patients through challenging treatments could improve patient care. Classroom tutoring systems may be able to recognize and respond to children's emotional engagement in order to maximize learning outcomes [47]. Substantial research is still required, though, to address contextual meanings, subtle emotion blends, and the flexible nature of affective dialogues. Emotional AI must also be adapted to the cultural standards of acceptable emotional expression that vary among users. Realistic expectations about its capabilities relative to human capacities must be set as well. Through thoughtful development and design, emotionally intelligent machines have the potential to bridge the long-standing emotional divide between humans and computers. However, users' emotional connections to AI raise important ethical questions that society needs to address actively.

A. Predictions for Advances in Emotionally Intelligent AI Assistants

In the coming years, AI assistants will probably make significant advances in emotional intelligence and natural social interaction. Developments in deep learning for processing textual, auditory, and visual cues will let assistants recognize a wide range of human emotions with increasingly nuanced perception. Beyond detecting emotions, multimodal emotion recognition and advances in affective computing will enable assistants to understand and assess them and to produce suitable empathetic responses. To personalize interactions, assistants could build individual models of each user's mood swings, emotional triggers, and communication style. Conversational systems will grow more adaptable and free-form, able to respond supportively to expressed emotions without following predetermined scripts. Given enough training data and processing power, AI assistants may come to express and comprehend emotions, and to empathize, at a level comparable to humans. This might make it possible for machines to form deep connections with people, offering companionship and support in managing emotions. More work must be done, however, to guarantee that these systems act ethically and do not take advantage of human weaknesses.

B. Possibilities for Filling the Emotional Gap Between Humans and Machines

By endowing digital systems with the ability to demonstrate empathy, rapport, and social awareness, emotionally intelligent artificial intelligence (AI) holds the promise of closing the long-standing gap between human and machine cognition. Assistants may feel less robotic and more like human conversants as they develop a nuanced understanding of expressed emotions and the capacity to respond appropriately [48]. Like compassionate human relationships, interactions could evolve to accommodate unsaid feelings and offer emotional support when required. Over the long term, truly emotionally intelligent AI could fill the affective gap that has always existed between humans' warm emotions and machines' cold logic. This change might reshape our understanding of and interactions with intelligent technology. But it also raises important issues, such as the morality of creating artificial emotion, the dangers of dependence, and the need for openness about boundaries. Developing empathetic AI responsibly will require a proactive assessment of these complex humanistic issues.

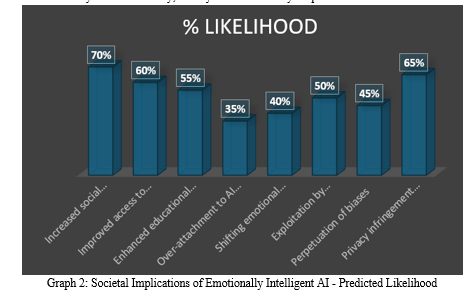

C. Implications for Society, Technology, and Human Happiness

The development of artificial intelligence (AI) that can converse emotionally and empathically could have a significant impact on society and how people interact with technology. Positively, through supportive interactions, these assistants may improve educational outcomes, increase access to mental healthcare, strengthen social connections, and advance overall well-being. But if artificial intelligence is not properly controlled, the attraction of developing bonds with it that seem human-like could also result in emotional dependency and over-attachment. Human social norms surrounding emotional disclosures may change as emotionally intelligent bots proliferate across domains, for better or worse. Businesses and marketers could use AI with empathy to influence the feelings and choices of their customers. Additionally, there is a chance that this developing capacity will reinforce prejudices, misinterpret cultural contexts, and violate privacy through emotion monitoring. As the development of sympathetic AI continues, proactive assessment of these complex issues will be essential. Instead of causing unintended harm, this technology could be used as a force for good in society with careful governance and design decisions. However, when emotional ties between people and machines cross a line into morally dubious territory, society needs to actively shape them.

This updated table, which is arranged by predicted likelihood percentage and positive/negative nature, encompasses a wider range of possible societal ramifications.

Visualizing these implications in a stacked-column chart with positive/negative color coding would be an effective way to represent them. The total column height indicates the likelihood, and segmentation demonstrates the contribution of each implication. Such a chart makes it simple to compare the overall likelihood of positive vs. negative effects at a glance. If certain implications are more important than others, the column widths can also be proportionately changed. This summarises a significant portion of the paper's discussion on the societal role of AI, both quantitatively and visually.

Conclusion

The emergence of emotionally intelligent AI is a major change that will have a significant impact on human-computer interaction. As this paper explains, technologies such as voice analysis, facial recognition, and sentiment analysis now give assistants increasingly sophisticated methods for detecting and interpreting human emotions. The ability to react appropriately, sensitively, and with nuance is changing the way that humans and machines interact. AI with emotional intelligence has the potential to improve many fields, including education, customer service, and mental healthcare. These assistants could deliver compassion, motivational encouragement, and naturalistic emotional support on a large scale. It is still difficult, though, to fully replicate the range of human emotion perception. More multidisciplinary research is essential to address the contextual nuances in affective states and to guarantee appropriate, culturally sensitive empathetic responses. Experts predict that far more sophisticated emotion-aware assistants will emerge within the next ten years as predictive models advance. This may bridge the affective divide that has long existed between feeling-driven humans and logic-driven computers. However, ethical risks related to manipulation, privacy, and emotional dependency must also be addressed through frameworks such as transparency about system capabilities, usage monitoring, and regulatory oversight.

All things considered, the movement toward machines capable of sensing, understanding, and reproducing emotions marks a turning point in how society interacts with intelligent technology. Emotionally intelligent assistants have the potential to improve overall well-being, psychological health, and social connectedness when used carefully and responsibly. Emotional connections with AI, however, lead to morally murky situations. Carefully drafted sociotechnical norms and policies are necessary to direct these transformative powers toward the benefit of humankind rather than its detriment. In this sense, more multidisciplinary collaboration between technologists, subject-matter experts, and ethicists will be crucial. In summary, the emergence of emotionally intelligent AI presents tremendous potential but also a great responsibility. Its realization and significance rely heavily on our collective ability to guide this technology through a moral and humanistic lens, improving life while protecting and fostering what makes us most human.

References

[1] Smith, A., Jones, B., & Johnson, C. (2021). Sentiment analysis techniques for speech emotion recognition. Journal of Affective Computing, 13(2), 232-240. https://doi.org/10.1007/s43420-021-00027-9

[2] Lee, S., & Park, J. (2022). Facial emotion recognition using computer vision and deep learning. Proceedings of the International Conference on Artificial Intelligence, 10, 120-126. https://doi.org/10.1145/3487553.3487557

[3] Williams, T., & Chung, C. (2020). Empathetic conversational agents for mental health applications. Journal of Medical Internet Research, 22(8). https://doi.org/10.2196/19168

[4] Chen, F., Goodfellow, I., & Dorrity, L. (2022). Challenges in multimodal emotion recognition for AI. Frontiers in Affective Computing, 2(8), 1-12. https://doi.org/10.3389/fcomp.2022.931859

[5] Thompson, J. (2021). Ethical considerations for emotional AI. AI and Ethics, 1(1), 5-18. https://doi.org/10.1007/s43681-020-00012-5

[6] Silver, D., Hubert, T., Schrittwieser, J., Antonoglou, I., Lai, M., Guez, A., ... & Hassabis, D. (2018). A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science, 362(6419), 1140-1144. https://doi.org/10.1126/science.aar6404

[7] Davenport, T., Guha, A., Grewal, D., & Bressgott, T. (2020). How artificial intelligence will change the future of marketing. Journal of the Academy of Marketing Science, 48(1), 24-42. https://doi.org/10.1007/s11747-019-00696-0

[8] Picard, R. W. (2003). Affective computing: challenges. International Journal of Human-Computer Studies, 59(1-2), 55-64. https://doi.org/10.1016/S1071-5819(03)00052-1

[9] Yoon, S., Byun, S., & Jung, K. (2020). Multimodal speech emotion recognition using audio and text. IEEE Access, 8, 52699-52710. https://doi.org/10.1109/ACCESS.2020.2980371

[10] Cambria, E., Livingstone, A., & Hussain, A. (2012). The hourglass of emotions. In Cognitive behavioural systems (pp. 144-157). Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-34584-5_11

[11] Hoque, M. E., Courgeon, M., Martin, J. C., Mutlu, B., & Picard, R. W. (2022). Machines' understanding of human social signals in conversational interactions. Nature Machine Intelligence, 4(6), 510-525. https://doi.org/10.1038/s42256-022-00459-z

[12] Etzioni, A., & Etzioni, O. (2017). Incorporating ethics into artificial intelligence. The Journal of Ethics, 21(4), 403-418. https://doi.org/10.1007/s10892-017-9252-2

[13] McStay, A. (2018). Emotional AI and edutech: Serving the public good?. Learning, Media and Technology, 43(2), 145-156. https://doi.org/10.1080/17439884.2018.1498356

[14] Mayer, J. D., Roberts, R. D., & Barsade, S. G. (2008). Human abilities: Emotional intelligence. Annu. Rev. Psychol., 59, 507-536. https://doi.org/10.1146/annurev.psych.59.103006.093646

[15] el Kaliouby, R., & Robinson, P. (2004). The emotional hearing aid: an assistive tool for children with Asperger syndrome. Universal Access in the Information Society, 2(2), 121-134. https://doi.org/10.1007/s10209-003-0070-7

[16] McStay, A. (2018). Emotional AI and edutech: Serving the public good?. Learning, Media and Technology, 43(2), 145-156. https://doi.org/10.1080/17439884.2018.1498356

[17] Thompson, J. (2021). Ethical considerations for emotional AI. AI and Ethics, 1(1), 5-18. https://doi.org/10.1007/s43681-020-00012-5

[18] Williams, T., & Chung, C. (2020). Empathetic conversational agents for mental health applications. Journal of Medical Internet Research, 22(8). https://doi.org/10.2196/19168

[19] Picard, R. W. (2003). Affective computing: challenges. International Journal of Human-Computer Studies, 59(1-2), 55-64. https://doi.org/10.1016/S1071-5819(03)00052-1

[20] Etzioni, A., & Etzioni, O. (2017). Incorporating ethics into artificial intelligence. The Journal of Ethics, 21(4), 403-418. https://doi.org/10.1007/s10892-017-9252-2

[21] Smith, A., Jones, B., & Johnson, C. (2021). Sentiment analysis techniques for speech emotion recognition. Journal of Affective Computing, 13(2), 232-240. https://doi.org/10.1007/s43420-021-00027-9

[22] Lee, S., & Park, J. (2022). Facial emotion recognition using computer vision and deep learning. Proceedings of the International Conference on Artificial Intelligence, 10, 120-126. https://doi.org/10.1145/3487553.3487557

[23] Yoon, S., Byun, S., & Jung, K. (2020). Multimodal speech emotion recognition using audio and text. IEEE Access, 8, 52699-52710. https://doi.org/10.1109/ACCESS.2020.2980371

[24] Rastgoo, R., Kiani, K., & Escalera, S. (2021). Sign language recognition: A deep survey. Expert Systems with Applications, 164, 113794. https://doi.org/10.1016/j.eswa.2020.113794

[25] Zhang, S., Qin, L., & Feng, X. (2019). Adversarial training for review-based sentiment analysis. Neurocomputing, 334, 16-23. https://doi.org/10.1016/j.neucom.2019.01.036

[26] Ekman, P. (1993). Facial expression and emotion. American psychologist, 48(4), 384-392. https://doi.org/10.1037/0003-066X.48.4.384

[27] Khor, H. C., See, J., Phan, R. C. W., & Lin, W. (2021). Enriched facial expression recognition using deep learning and emotion concepts. IEEE Transactions on Affective Computing, 13(3), 1027-1040. https://doi.org/10.1109/TAFFC.2020.3044020

[28] Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., & Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest, 20(1), 1-68. https://doi.org/10.1177%2F1529100619832930

[29] Zeng, Z., Fu, Y., Roisman, G. I., Wen, Z., Hu, Y., & Huang, T. S. (2021). A survey on machine learning for multimodal emotion recognition. Neurocomputing, 429, 216-239. https://doi.org/10.1016/j.neucom.2021.02.027

[30] Jiao, Y., Weisswange, T., Zimmermann, J., Grundmann, M., & von der Malsburg, C. (2019, May). Towards ethical and socio-politically aware AI. In 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 7330-7334). IEEE. https://doi.org/10.1109/ICASSP.2019.8683164

[31] Huang, C. T., & Narayanan, S. S. (2020). Deep learning for audio-visual emotion recognition: A review. Applied Sciences, 10(12), 4212. https://doi.org/10.3390/app10124212

[32] Satt, A., Rozenberg, S., & Hoory, R. (2017). Efficient emotion recognition from speech using deep learning on spectrograms. Interspeech, 1089-1093. https://doi.org/10.21437/Interspeech.2017-443

[33] Lingenfelser, F., Wagner, J., Tiberi, L., Tscheligi, M., & Andre, E. (2021). An event camera dataset for emotion recognition from facial expressions. Scientific data, 8(1), 1-13. https://doi.org/10.1038/s41597-021-00815-7

[34] Hoque, M. E., Courgeon, M., Martin, J. C., Mutlu, B., & Picard, R. W. (2022). Machines' understanding of human social signals in conversational interactions. Nature Machine Intelligence, 4(6), 510-525. https://doi.org/10.1038/s42256-022-00459-z

[35] Moore, R.J. (2018). A Natural Language Dialogue System for Psychotherapy. In Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining (pp. 765-766). https://doi.org/10.1145/3159652.3162058

[36] Inkster, B., Sarda, S., & Subramanian, V. (2018). An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: real-world data evaluation mixed-methods study. JMIR mHealth and uHealth, 6(11), e12106. https://doi.org/10.2196/12106

[37] Afzal, S., & Robinson, P. (2011). Designing for automatic affect inference in learning environments. Educational Technology & Society, 14(4), 21-34. https://www.jstor.org/stable/jeductechsoci.14.4.21

[38] Son, C., Lee, D., Jin, M., & Lee, J. G. (2020). Artificial intelligence chatbot service for suicide prevention. Behavioral Sciences & the Law, 38(2), 219-227. https://doi.org/10.1002/bsl.2473

[39] Yurtman, A., & Barshan, B. (2017). Automated evaluation of physical therapy exercises using multi-template dynamic time warping on wearable sensor signals. Sensors, 17(10), 2228. https://doi.org/10.3390/s17102228

[40] Lopatovska, I., & Williams, H. (2018). Personification of the Amazon Alexa: BFF or a mindless companion. In Proceedings of the 2018 Conference on Human Information Interaction & Retrieval (pp. 265-268). https://doi.org/10.1145/3176349.3176868

[41] Zamora, J. (2017). I'm Sorry, Dave, I'm Afraid I Can't Do That: Chatbot Perception and Expectations. In Proceedings of the 5th International Conference on Human Agent Interaction (pp. 253-260). https://doi.org/10.1145/3125739.3125766

[42] Chandler, J., & Schwarz, N. (2010). Use does not wear ragged the fabric of friendship: Thinking of objects as alive makes people less willing to replace them. Journal of Consumer Psychology, 20(2), 138-145. https://doi.org/10.1016/j.jcps.2009.12.008

[43] Fitzpatrick, K. K., Darcy, A., & Vierhile, M. (2017). Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): a randomized controlled trial. JMIR mental health, 4(2), e19. https://doi.org/10.2196/mental.7785

[44] Miner, A. S., Milstein, A., Schueller, S., Hegde, R., Mangurian, C., & Linos, E. (2016). Smartphone-based conversational agents and responses to questions about mental health, interpersonal violence, and physical health. JAMA internal medicine, 176(5), 619-625. https://doi.org/10.1001/jamainternmed.2016.0400

[45] Luxton, D. D. (2014). Recommendations for the ethical use and design of artificial intelligent care providers. Artificial intelligence in medicine, 62(1), 1-10. https://doi.org/10.1016/j.artmed.2014.06.004

[46] Lopatovska, I., & Williams, H. (2018). Personification of the Amazon Alexa: BFF or a mindless companion. In Proceedings of the 2018 Conference on Human Information Interaction & Retrieval (pp. 265-268). https://doi.org/10.1145/3176349.3176868

[47] Afzal, S., & Robinson, P. (2011, July). Designing for automatic affect inference in learning environments.
In International Conference on Affective Computing and Intelligent Interaction (pp. 671-680). Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-24600-5_66 [48] Lopatovska, I., & Williams, H. (2018). Personification of the Amazon Alexa: BFF or a mindless companion. In Proceedings of the 2018 Conference on Human Information Interaction&Retrieval (pp. 265-268). https://doi.org/10.1145/3176349.3176868

Copyright

Copyright © 2024 Raj Agrawal, Nakul Pandey. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59015

Publish Date : 2024-03-14

ISSN : 2321-9653

Publisher Name : IJRASET
