Ijraset Journal For Research in Applied Science and Engineering Technology

Driver Drowsiness Detection Using CNN

Authors: P. Jagadishwar Reddy, B. Karthik Goud, M Harsha Vardhan, CH. Manaswini, CH. Manikanta, Prof. R. Karthik

DOI Link: https://doi.org/10.22214/ijraset.2024.61620

Abstract

Drowsiness detection is a safety technology that can help prevent accidents caused by drivers who fall asleep at the wheel. The objective of this intermediate-level Python project is to build a drowsiness detection system that detects when a person's eyes have been closed for more than a few seconds and alerts the driver when drowsiness is detected.

I. INTRODUCTION

The 21st century has seen rapid growth in vehicle sales and road traffic, and with heavier traffic comes a greater risk of accidents. The main cause of an accident is generally the carelessness of one of the drivers involved. Sleep-deprived drivers are also among the most frequent causes of accidents: as many as 30% of road accidents are reported to be caused by drowsy drivers. Driver drowsiness is therefore a serious problem that needs to be addressed, and driver drowsiness detection systems play a significant role in addressing it. Even one life saved makes a huge difference.

II. PROPOSED SYSTEM

Our proposed system is a Driver Drowsiness Detection system. It is designed specifically to prevent accidents, many of which are caused by drowsy drivers.

The system works on a simple counter principle: a limit (say 15) is set, and if the driver is found to be sleepy (that is, his or her eyes are closed) for longer than this limit, an audio alarm is played. The counter increases with the time for which the driver's eyes remain shut. The alarm alerts the driver, who can then regain control of the vehicle.
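The counter principle can be illustrated with a minimal Python sketch. The class name `DrowsinessCounter` is hypothetical, and resetting the counter to zero as soon as the eyes reopen is an assumption made here for illustration; the paper does not state how the counter falls.

```python
from dataclasses import dataclass


@dataclass
class DrowsinessCounter:
    """Counts consecutive closed-eye frames (hypothetical helper)."""

    limit: int = 15  # threshold suggested in the text ("say 15")
    score: int = 0   # current count of consecutive closed-eye frames

    def update(self, eyes_closed: bool) -> bool:
        """Process one frame and return True when the alarm should sound."""
        if eyes_closed:
            self.score += 1
        else:
            # Assumption: the count resets once the eyes are open again.
            self.score = 0
        return self.score > self.limit


counter = DrowsinessCounter(limit=3)
alarm_states = [counter.update(True) for _ in range(5)]
```

With a limit of 3, the alarm state becomes true on the fourth consecutive closed-eye frame and stays true until an open-eye frame arrives.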

III. LITERATURE REVIEW

One study uses a data fusion strategy to detect sleepiness from a variety of biological markers, ocular features, vehicle temperature, and vehicle speed. The system has an efficiency of 96 percent in detecting that the driver is awake and 97 percent in detecting that the driver is asleep, which helps identify the signs of a sleepy driver. It was developed as an application for a real-life scenario in which measuring security-related data requires no additional cost or equipment.

Existing driver monitoring technology also includes systems for assessing eye closure. These systems commonly use PERCLOS (PERcent of the time eyelids are CLOSed). Such a system illuminates the driver's face with infrared (IR) light at two wavelengths (850 nm and 950 nm) and uses an IR camera to locate the driver's face and measure the reflection from the eye. Because the eye is relatively transparent to 850 nm radiation until it reaches the retinal surface at the rear of the eye, the radiation is reflected at the retina, causing the bright-pupil effect. The 950 nm light, on the other hand, is absorbed almost entirely by the water molecules in the eye, resulting in very little reflection. The image obtained using 950 nm radiation is subtracted from the image obtained using 850 nm radiation, yielding a picture that contains only the retinal reflections. By measuring how the eyelids hide this reflection, the system can detect and measure eye blinks.

Another work aimed to implement a driver surveillance system based on artificial vision techniques that could be deployed on a smartphone to detect drowsiness and alert the driver. To this end, earlier work on detecting drowsiness in drivers was analysed to determine the technical parameters and algorithms that allow the state of drowsiness to be signalled. That work presents a drowsiness detection algorithm, the interface in which the drowsiness state is displayed, and the adjustments needed for the implemented system to function correctly.
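The two-wavelength subtraction and the PERCLOS measure mentioned in this section can be sketched in a few lines of plain Python. This is a simplified illustration, not the reviewed system's implementation: images are assumed to be nested lists of grey levels, negative differences are clamped to zero, and PERCLOS is computed over a list of per-frame closed/open flags. Both function names are hypothetical.

```python
def bright_pupil_difference(img_850, img_950):
    """Subtract the 950 nm frame from the 850 nm frame, pixel by pixel.

    Pixels that are equally bright in both frames cancel out, so mainly
    the retinal reflection (bright only at 850 nm) remains.
    """
    return [
        [max(a - b, 0) for a, b in zip(row_850, row_950)]
        for row_850, row_950 in zip(img_850, img_950)
    ]


def perclos(closed_flags):
    """Percentage of frames in which the eyes were judged closed."""
    if not closed_flags:
        return 0.0
    return 100.0 * sum(closed_flags) / len(closed_flags)


# Toy 2x2 frames: only the top-right pixel holds a retinal reflection.
frame_850 = [[10, 200], [12, 11]]
frame_950 = [[9, 20], [12, 15]]
difference = bright_pupil_difference(frame_850, frame_950)
closed_share = perclos([True, False, False, True])
```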

IV. OBJECTIVE

The number of vehicles in our country is increasing rapidly. The most important drawback of this growth in vehicle use is the rising number of road accidents, which are undoubtedly a serious peril in our country. The frequency of road accidents in India is among the highest in the world. According to a report by the Transport Research Wing of the Ministry of Road Transport and Highways, 151,113 people were killed in 480,652 road accidents across India in 2019, an average of 414 a day or 17 an hour. For the same year, the National Crime Records Bureau (NCRB) recorded 437,396 road accidents, resulting in 154,732 deaths and 439,262 injuries. Worldwide, approximately 1.35 million people die each year as a result of road traffic crashes, according to reports.

The fatalities, associated expenses and related dangers have been recognized as critical threats to the country. Intelligent Transportation Systems (ITS) were developed in response to these accidents. ITS aim to improve traffic efficiency by minimizing traffic-related problems, and they include driver assistance systems such as Adaptive Cruise Control, Park Assistance Systems, Intelligent Headlights, Pedestrian Detection Systems and Blind Spot Detection Systems. Given these factors, monitoring the driver's state is the foremost challenge in designing advanced driver assistance systems.

V. METHODOLOGY AND ANALYSIS

A. The Dataset

The dataset used to train the CNN model is yawn_eye_dataset, a free and open dataset available on Kaggle. The dataset is divided into two parts that are used separately for training (75% of the dataset) and testing (25% of the dataset). Each part has 4 different feature values that are taken into consideration during evaluation. For the transfer learning method we used mrl_eyes_dataset, a large dataset consisting only of eye images, which is likewise divided into training and testing parts. This dataset is used to retrain the pre-trained InceptionV3 model.

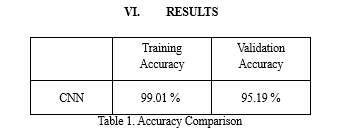
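A 75%/25% split like the one described for the training data can be sketched with the Python standard library. The function name, the fixed seed and the file names below are hypothetical and serve only to illustrate the idea; the paper does not say how the split was produced.

```python
import random


def split_dataset(items, train_fraction=0.75, seed=0):
    """Shuffle the items reproducibly and cut them into train and test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names standing in for the dataset images.
image_files = [f"img_{i}.jpg" for i in range(100)]
train_files, test_files = split_dataset(image_files)
```

Shuffling before cutting avoids putting all images of one class into the same part when the file list is ordered by class.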

B. The Model Architecture

For this project we adopted a deep learning approach and built a Convolutional Neural Network (CNN) with Keras. A CNN is a special type of deep neural network that performs extremely well on image classification tasks. It consists of an input layer, an output layer and hidden layers in between, which include convolutional layers and fully connected layers. In a convolutional layer, a filter slides over the layer's input, and at each position the filter values are multiplied element-wise with the underlying 2D patch and summed. Each hidden layer applies an activation function, and the network as a whole is trained with an optimizer.
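The convolution step of a CNN layer can be illustrated without any deep learning library. The sketch below is a plain-Python illustration rather than the Keras model used in the project: it slides a small filter over a 2D input with no padding and a stride of 1 (both assumptions) and then applies a ReLU activation. The function names are hypothetical.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image; multiply element-wise and sum."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_h)
                for dj in range(k_w)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Activation function: negative responses are set to zero."""
    return [[max(value, 0) for value in row] for row in feature_map]


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[-1, 0], [0, 1]]  # responds to brightness rising along the diagonal
feature_map = relu(conv2d_valid(image, kernel))
```

In a trained network the filter values are not chosen by hand as here; they are learned during training.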

C. Feature Extraction

Feature extraction is the process of locating the significant features or attributes of the data. It is particularly useful when the dataset has many dimensions, because it extracts the relevant data and yields a more precise and concise description.

The dataset we use contains a lot of complex information. Feature extraction breaks this information down into a much simpler form, making it far easier to extract what is relevant. The extracted features reduce the computational complexity that would otherwise be incurred and can also improve the accuracy of the model.

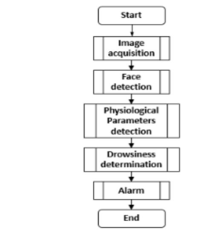

D. Schema Diagram

E. Outcomes

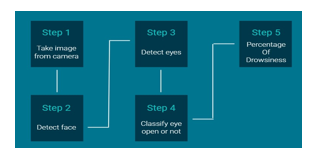

1. Face Detection

The proposed system's face detection is based on the Viola-Jones object detection framework. Because all human faces share comparable properties, Haar features can be used to detect them. Despite its age, this framework is still widely used for face detection alongside its CNN-based competitors. To detect objects quickly and accurately, it combines Haar-like features, integral images, the AdaBoost algorithm and a cascade classifier. Haar features can capture patterns such as the eye region being darker than the upper cheeks and the nose bridge being brighter than the eyes. These features are computed not from the original image but from the integral image. At any location (x, y), the integral image holds the sum of the pixels above and to the left of (x, y).
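The integral image and a simple two-rectangle Haar-like feature can be sketched in plain Python as follows. This is an illustrative sketch rather than OpenCV's internal implementation. The integral image is padded with an extra row and column of zeros (an implementation choice made here) so that any rectangle sum needs only four lookups, and all function names are hypothetical.

```python
def integral_image(img):
    """ii[y + 1][x + 1] holds the sum of img[0..y][0..x], inclusive."""
    height, width = len(img), len(img[0])
    ii = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Lower half minus upper half; large when the upper band is darker."""
    half = h // 2
    upper = rect_sum(ii, x, y, w, half)
    lower = rect_sum(ii, x, y + half, w, half)
    return lower - upper


# Toy patch: a dark band (eyes) above a bright band (cheeks).
patch = [[10, 10], [10, 10], [200, 200], [200, 200]]
feature_value = two_rect_feature(integral_image(patch), 0, 0, 2, 4)
```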

2. Eyes Detection

OpenCV's cascade classifiers for eye detection work in a similar way. The OpenCV library provides classifiers for different eye conditions, such as open eyes, closed eyes, and eyes behind eyeglasses (but not sunglasses). haarcascade_eye.xml, which identifies open eyes, is the fastest of these. If the user wears glasses, the classifier haarcascade_eye_tree_eyeglasses.xml can be selected instead. To avoid false positives from the background, eyes are searched for only within the detected face region. The minimum eye size is 20×20 pixels. Detected open eyes are displayed within the detected face area. A timer starts as soon as no eyes are detected: when the driver's eyes are closed, the open-eye classifier finds nothing, and if the eyes stay closed for longer than the counter value, the system concludes that the driver is drowsy and sounds an alarm. All of this is done through a Python application interface.
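Restricting the eye search to the detected face region can be sketched as below. This is a hedged illustration, not the authors' code. It assumes the `opencv-python` package, which ships the cascade files under `cv2.data.haarcascades`; OpenCV is imported only inside `load_eye_cascade`, so the other helpers run without it. The helper names and the `scaleFactor` and `minNeighbors` values are assumptions; only the 20×20 minimum size comes from the text.

```python
def eye_cascade_filename(wears_glasses: bool) -> str:
    """Choose between the two bundled eye cascades named in the text."""
    if wears_glasses:
        return "haarcascade_eye_tree_eyeglasses.xml"
    return "haarcascade_eye.xml"


def load_eye_cascade(wears_glasses: bool = False):
    """Load the chosen cascade (assumes the opencv-python package)."""
    import cv2  # imported lazily; only this helper needs OpenCV

    path = cv2.data.haarcascades + eye_cascade_filename(wears_glasses)
    return cv2.CascadeClassifier(path)


def eyes_in_face(gray_frame, face_box, eye_cascade):
    """Search for eyes only inside the face box to limit false positives."""
    x, y, w, h = face_box
    face_roi = gray_frame[y:y + h, x:x + w]
    eyes = eye_cascade.detectMultiScale(
        face_roi,
        scaleFactor=1.1,   # assumed value, not given in the text
        minNeighbors=5,    # assumed value, not given in the text
        minSize=(20, 20),  # minimum eye size stated in the text
    )
    # Shift eye boxes from face-relative to full-frame coordinates.
    return [(x + ex, y + ey, ew, eh) for (ex, ey, ew, eh) in eyes]
```

An empty result from `eyes_in_face` on a frame where a face was found is the signal that would advance the closed-eye counter.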

3. OpenCV

OpenCV (Open Source Computer Vision Library) is a free software library for computer vision and machine learning. It was created to provide a common infrastructure for computer vision applications and to accelerate the use of machine perception in commercial products. The library contains more than 2500 optimised algorithms, a comprehensive set of both classic and state-of-the-art computer vision and machine learning algorithms. These algorithms can be used to detect and recognize faces, identify objects, classify human actions in videos, track moving objects, track camera movements, produce 3D point clouds from stereo cameras, extract 3D models of objects, stitch images together to produce a high-resolution image of an entire scene, find similar images in an image database, remove red eyes from images taken using flash, follow eye movements, recognize scenery and establish markers to overlay it with augmented reality, and so on. Over 500 algorithms and about 10 times as many functions that support those algorithms are included here. Because it is used as a library of image processing functions, it is easy to deploy.

Conclusion

The proposed system was implemented first in web browsers and later in Android applications so that it is easily accessible, since smartphones are nowadays a basic necessity owned by almost everyone. To monitor the driver's alertness, a smartphone is the minimum requirement, and facial and eye gestures together with their movements are extracted as input. This gave high performance, which could be improved further by fusing in more data. Examples include weather forecasts, mechanical data from the vehicle, and signing in and monitoring the driver daily across different drives. Real-time processing is achieved using OpenCV and the other required packages, the system produces good results, and there is clear scope for this technology to expand.

Copyright

Copyright © 2024 P. Jagadishwar Reddy, B. Karthik Goud, M Harsha Vardhan, CH. Manaswini, CH. Manikanta, Prof. R. Karthik. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61620

Publish Date : 2024-05-05

ISSN : 2321-9653

Publisher Name : IJRASET
