Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

- Abstract

- Introduction

- Conclusion

- References

- Copyright

Dynamic Matrix Multiplication Using Reinforcement Learning

Authors: G. Kavyasri, V Koushik, A. Krishna Chaitanya, K. Lakshmipriya, T. Lohith, Dr. A. Kiran Kumar

DOI Link: https://doi.org/10.22214/ijraset.2024.65498

Certificate: View Certificate

Abstract

Efficient matrix multiplication is a core computational task in machine learning, deep learning, and scientific computing, as it significantly impacts the performance of model training and inference. This project explores the use of reinforcement learning (RL) to dynamically select the optimal matrix multiplication algorithm based on input matrix characteristics. We compare traditional matrix multiplication methods, including Strassen’s algorithm, Winograd’s algorithm, and tiled matrix multiplication, and implement a Q- learning-based agent to choose the most efficient algorithm adaptively. Our RL-driven approach enables real-time algorithm selection, balancing computational speed and resource usage across different matrix types and sizes. Experimental results demonstrate the system\'s ability to reduce execution time by selecting algorithms suited to specific scenarios, providing insights into algorithm efficiency and adaptability for various data sizes. Furthermore, this research suggests practical optimization strategies for handling large-scale computations in machine learning applications, including using parallel processing, GPU acceleration, and parameter tuning to minimize computational bottlenecks. This project underscores the potential of integrating RL for adaptive computation within AI workflows, offering a scalable solution for enhancing matrix operation efficiency in data-intensive applications.

Introduction

I. INTRODUCTION

Matrix multiplication is a fundamental operation in many computational fields, including machine learning (ML), deep learning (DL), scientific computing, and computer graphics. Its efficiency is critical, as matrix operations are core to algorithms used in linear algebra, neural networks, computer vision, and various optimization tasks. Given the high computational cost of matrix multiplication, particularly with large matrices, selecting the optimal algorithm for different matrix sizes and structures can yield significant performance gains.

However, choosing the best algorithm manually is challenging due to the variety of available techniques, each with strengths and limitations based on matrix dimensions, sparsity, and hardware constraints. Traditionally, algorithms such as the classical approach, Strassen’s algorithm, and Winograd’s algorithm are employed for matrix multiplication. These methods offer varying trade-offs in terms of computation speed, memory usage, and complexity. For example, Strassen’s algorithm reduces the number of multiplications needed for large matrices but incurs additional overhead in recursive calls, making it inefficient for smaller matrices or certain hardware configurations.

Winograd’s algorithm, while optimized for specific matrix layouts, can also be suboptimal in more generalized scenarios. Given these complexities, an adaptive approach to algorithm selection could improve computational efficiency without requiring manual intervention.

This project presents a reinforcement learning (RL) framework to automate the selection of the optimal matrix multiplication algorithm based on real-time performance metrics. Using Q-learning, a type of RL, our system dynamically evaluates algorithms—Strassen’s, Winograd’s, and tiled matrix multiplication—on different matrix inputs, adjusting its choice based on the execution time observed for each algorithm. By training the RL agent across diverse matrix sizes and types, the system learns to select algorithms that minimize computation time for a given scenario. The contributions of this research are threefold. First, we provide a systematic comparison of popular matrix multiplication algorithms, identifying performance bottlenecks and optimization opportunities for different use cases. Second, we demonstrate how RL can automate algorithm selection, offering a versatile solution that adapts to matrix size and data characteristics. Finally, we explore optimizations for each algorithm, such as parallel processing and GPU acceleration, to further enhance performance. This work underscores the value of combining traditional computational techniques with adaptive learning methods to optimize foundational operations in AI and ML models, paving the way for scalable and efficient computations in large-scale applications.

A. Limitations of the Project

- Scalability to Large Matrices: The project is primarily tested on small to medium-sized matrices and may not scale efficiently for large matrices often used in deep learning. For extremely high-dimensional matrices, specialized GPU-based libraries like cuBLAS might provide better performance.

- Fixed Algorithm Pool: The reinforcement learning agent is limited to selecting among Strassen’s, Winograd’s, and tiled matrix multiplication algorithms. More advanced or specialized algorithms, such as Coppersmith-Winograd or optimized sparse techniques, are not considered, which limits adaptability to varied matrix types.

- Hardware Dependency: The performance of each algorithm heavily depends on the hardware it’s run on. The model may need retraining or tuning to achieve optimal results across different hardware setups, such as varying CPU speeds or GPU availability.

- Matrix Characteristics Sensitivity: The agent’s selection is based largely on matrix size, without consideration for other characteristics like sparsity or data distribution, which can significantly impact algorithm efficiency. This limits adaptability to diverse matrix types beyond simple size variations.

II. LITERATURE REVIEW

Matrix multiplication optimization is critical for AI, machine learning, and scientific computing. Traditional methods, such as Strassen’s algorithm, improve time complexity by recursively decomposing matrices, followed by Winograd’s approach, which further reduces multiplications. However, practical challenges like recursion overhead and hardware dependencies limit these algorithms' real-world efficiency. Recent developments in adaptive computation use reinforcement learning (RL) for algorithm selection, dynamically choosing optimal methods based on task requirements. While RL-based selection frameworks show promise, they often require customization for specific use cases and hardware configurations. Hybrid methods, such as tiled multiplication, improve performance by leveraging data locality on modern processors. This project combines RL with traditional algorithms—Strassen, Winograd, and tiled multiplication—to dynamically select the most efficient approach for diverse matrix sizes. While adaptable, limitations remain in handling large matrices and achieving broad hardware compatibility, highlighting areas for further refinement in adaptive matrix computation.

III. PROBLEM STATEMENT

Matrix multiplication is a core operation in AI, machine learning, and scientific computing, where computational efficiency is crucial due to the high dimensionality and frequency of these operations. Traditional matrix multiplication algorithms, such as Strassen’s and Winograd’s, offer theoretical improvements over classical methods but face practical limitations, including high overhead from recursive calls, sensitivity to matrix characteristics, and dependency on hardware configurations. These limitations impact the performance of large-scale learning models and computational tasks, which require adaptable, efficient, and hardware-optimized solutions. This project addresses the problem by leveraging reinforcement learning (RL) to dynamically select the optimal matrix multiplication algorithm based on input matrix characteristics, aiming to minimize computation time and improve adaptability across different hardware environments. By evaluating a combination of Strassen’s, Winograd’s, and tiled matrix multiplication, the project seeks to create a flexible solution that can effectively optimize matrix multiplication for diverse applications in machine learning and AI.

IV. METHODOLOGY

A. Existing Systems

- Classical Matrix Multiplication: The conventional matrix multiplication algorithm operates with a time complexity of O(n^3), where each element in the resulting matrix is computed by summing the product of corresponding entries from the row of the first matrix and the column of the second. While straightforward, this method becomes computationally prohibitive as matrix size increases, making it unsuitable for large-scale AI and ML applications.

- Strassen's Algorithm: Strassen's algorithm introduced a recursive approach that reduces the multiplication time complexity to O(n^{2.81}). By dividing matrices into smaller submatrices and performing fewer multiplications through recursive calls, it achieves faster results than classical multiplication. However, the recursive nature can introduce significant overhead, making it inefficient for smaller matrices and sensitive to hardware configurations.

- Winograd's Algorithm: Winograd’s algorithm optimizes matrix multiplication by minimizing the number of multiplications, aiming to reduce computational complexity. This approach is particularly effective for small matrices and offers improvements over Strassen's in certain scenarios. However, it is less commonly implemented in high- performance settings, partly due to its complexity and hardware sensitivity.

- Tiled Matrix Multiplication: Tiled (or blocked) matrix multiplication improves data locality by breaking large matrices into smaller blocks or tiles, which are processed individually. This technique optimizes memory access patterns, enhancing performance on modern hardware like GPUs and CPUs with large cache hierarchies. Tiling improves efficiency on large matrices but requires careful parameter tuning to match specific hardware configurations

- Reinforcement Learning for Algorithm Selection (RLAS): Recent advances have employed reinforcement learning to dynamically select the optimal algorithm based on input matrix properties. RL agents are trained to choose the most suitable algorithm for minimizing computation time and resource usage. While effective, these systems are typically tailored for specific hardware and lack adaptability to general matrix characteristics, such as sparsity or data distribution.

Each of these methods contributes valuable techniques for matrix multiplication, yet none independently addresses the diverse requirements of AI and ML models across different matrix sizes and hardware setups.

B. Proposed System

The proposed system leverages reinforcement learning (RL) to dynamically select the most efficient matrix multiplication algorithm—choosing between Strassen’s, Winograd’s, and tiled multiplication—based on the characteristics of the input matrices. By incorporating RL, the system can adaptively optimize matrix multiplication performance in real-time, achieving faster execution times and improved computational efficiency across a variety of matrix sizes and hardware configurations.

In this approach, the RL agent is trained to choose an optimal algorithm by minimizing the computation time as a reward function. The environment provides feedback on execution times for each selected algorithm, enabling the agent to learn which method performs best under different conditions. The system evaluates Strassen’s algorithm for reduced multiplication counts, Winograd’s algorithm for lower multiplication requirements, and tiled multiplication to leverage cache efficiency in large matrices.

C. System Design:

- Matrix Characterization: The system first analyses matrix properties, including dimensions and sparsity, to inform the RL agent’s decision.

- RL-based Algorithm Selection: Based on the matrix characteristics, the RL agent selects an algorithm from a predefined set (Strassen’s, Winograd’s, or tiled multiplication).

- Execution and Feedback: The chosen algorithm is applied to perform matrix multiplication, and the time taken is recorded as feedback. The RL agent uses this feedback to adjust its selection strategy, optimizing for minimal execution time.

By dynamically selecting the best algorithm for each multiplication, this system provides adaptability and improved performance over traditional, single-algorithm approaches. The proposed system is well-suited for high-dimensional matrix operations commonly found in AI and ML applications, where it aims to reduce computational costs and enhance scalability.

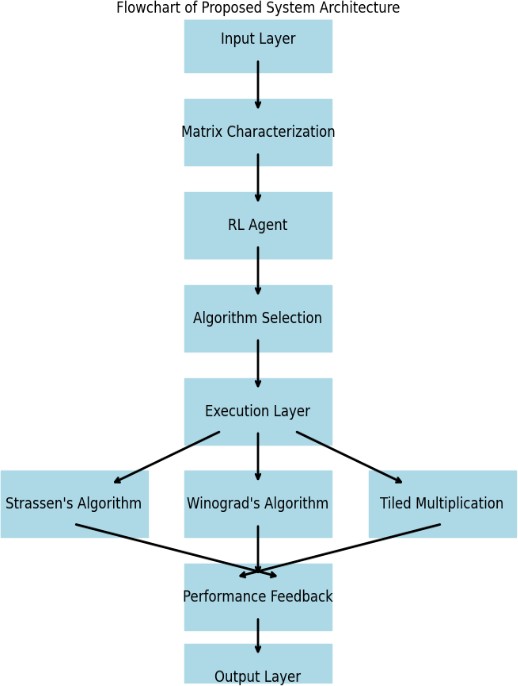

V. ARCHITECTURE

VI. DESIGN

The system design leverages a reinforcement learning (RL) agent to dynamically select the most efficient matrix multiplication algorithm based on matrix characteristics, aiming for optimal performance in AI and machine learning applications. The design includes:

- Input Layer: Accepts matrices of varying sizes for processing and validation.

- Matrix Characterization: Analyses key matrix properties (e.g., size, sparsity) to assist the RL agent’s decision-making.

- RL Agent: Learns and refines algorithm selection over multiple tasks by maximizing efficiency based on observed performance.

- Algorithm Selection: Implements three main algorithms—Strassen’s, Winograd’s, and Tiled Multiplication—optimized for different matrix types.

- Execution Layer: Executes the selected algorithm, outputting the resultant matrix.

- Performance Feedback: Provides execution metrics as feedback to help the RL agent improve algorithm selection accuracy.

- Output Layer: Displays the final matrix and algorithm performance metrics.

VII. DATASET DESCRIPTION

This project does not use a standard dataset; instead, it generates matrices of various sizes, sparsity levels, and distributions to simulate realistic input. These diverse matrix characteristics enable the RL agent to train across different scenarios, enhancing its adaptability. For benchmarking, both dense and sparse matrices are included to test algorithm performance on different matrix types.

- Pre-Processing Steps

- Validation: Ensures input matrices are square and compatible with the selected algorithms.

- Compression for Sparse Matrices: Converts sparse matrices into a compressed format to save memory.

- Standardization: Structures matrices uniformly for efficient processing in subsequent stages.

These pre-processing steps help standardize matrix inputs, reducing computation time and improving overall system efficiency.

VIII. METHODS AND ALGORITHMS

In this project, we implement and evaluate several methods and algorithms for matrix multiplication, leveraging their unique strengths to optimize performance. The main algorithms used in this project are as follows:

A. Naive Matrix Multiplication

The naive matrix multiplication algorithm is the most straightforward approach, where two matrices A and B of sizes m × n and n × p respectively are multiplied to produce a result matrix C of size m × p. This method has a time complexity of O(mnp), which can be inefficient for large matrices.

B. Strassen’s Algorithm

Strassen's algorithm is a divide-and-conquer approach that reduces the number of necessary multiplications, achieving a time complexity of approximately O(n^{2.81}). It breaks down matrices into smaller submatrices and recursively computes the products, thus reducing computation time compared to naive multiplication. In this project, we utilize a non-recursive implementation to enhance performance and mitigate the overhead associated with recursive calls.

C. Winograd’s Algorithm

Winograd's algorithm further optimizes matrix multiplication by reducing the number of multiplications needed compared to Strassen's method. It leverages a different approach to combine submatrix products, achieving a time complexity close to O(n^{2.81}) as well. Our implementation focuses on optimizing the execution time by minimizing redundant calculations and memory usage.

D. Tiled Matrix Multiplication

Tiled (or blocked) matrix multiplication improves cache performance by dividing matrices into smaller blocks or tiles. This method is particularly effective for larger matrices, as it takes advantage of cache locality, thus reducing memory access times. The algorithm operates similarly to naive multiplication but processes smaller submatrices, allowing it to achieve better performance in practice, especially on modern hardware architectures.

E. Reinforcement Learning (RL) for Algorithm Selection

In our project, we employ a reinforcement learning agent to dynamically select the most appropriate matrix multiplication algorithm based on the characteristics of the input matrices. The agent is trained through a series of episodes, learning from feedback based on execution times and performance metrics. This approach allows the system to adaptively choose the optimal algorithm based on real-time conditions, optimizing the overall computational efficiency.

IX. MODEL IMPLEMENTATION

In this section, we detail the implementation and training processes for the matrix multiplication optimization model, focusing on the reinforcement learning (RL) framework used for algorithm selection.

A. Implementation Framework

The model is implemented using Python, utilizing popular libraries such as NumPy for matrix operations and TensorFlow or PyTorch for the reinforcement learning components. The code structure is modular, separating different algorithms and the RL agent into distinct classes for improved maintainability and readability.

B. Algorithm Implementation

Each matrix multiplication algorithm (Naive, Strassen’s, Winograd’s, and Tiled) is implemented as a separate function. The input matrices are generated or sourced from datasets, and each algorithm is encapsulated in a way that allows easy integration with the RL agent. Performance metrics such as execution time and computational cost are recorded during execution for subsequent analysis.

C. Reinforcement Learning Setup

The RL agent is implemented using a policy-based approach. The state space comprises characteristics of the input matrices, including size, density, and sparsity. The action space consists of the available matrix multiplication algorithms. The agent is designed to maximize the cumulative reward, defined as the negative execution time of the selected algorithm.

D. Training Process

The training process involves several key steps:

- Data Collection: The agent interacts with the environment by selecting algorithms based on the state of the input matrices. Execution times are recorded as rewards.

- Exploration vs. Exploitation: To encourage the agent to explore various algorithms, an ε-greedy strategy is employed, allowing for a balance between exploration of new algorithms and exploitation of known good choices.

- Learning Algorithm: A policy gradient method is utilized for updating the agent's policy based on the rewards obtained from its actions. The model adjusts its strategy over multiple episodes, gradually converging on an optimal selection policy.

- Training Duration: The training is conducted over a specified number of episodes, with periodic evaluation to monitor the agent's performance and convergence.

E. Evaluation and Testing

After training, the model is evaluated using a separate test dataset. The RL agent's performance is assessed by comparing the execution times of the chosen algorithms against baseline implementations. This evaluation helps determine the effectiveness of the RL-based algorithm selection in real-world scenarios.

X. EVALUATION

To evaluate the performance of the proposed matrix multiplication optimization model using reinforcement learning (RL), several key metrics are employed. These metrics focus on the effectiveness of the algorithm selection process and the overall efficiency of the matrix multiplication operations. The following evaluation metrics are utilized:

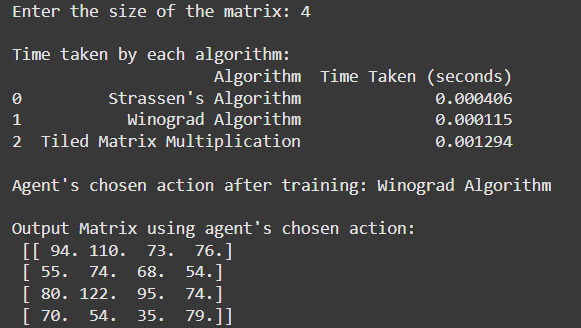

A. Execution Time

The primary metric for assessing the model is the execution time of the matrix multiplication algorithms. This metric measures the time taken by each algorithm (Strassen's Algorithm, Winograd's Algorithm, and Tiled Multiplication) to perform multiplication on matrices of varying sizes. The goal is to minimize execution time while maintaining computational accuracy.

B. Algorithm Selection Accuracy

This metric evaluates the effectiveness of the RL agent in selecting the optimal matrix multiplication algorithm based on the input matrix characteristics. It is calculated as the ratio of successful selections (where the chosen algorithm yields the least execution time) to the total number of selections made by the agent.

C. Reward Function

In the reinforcement learning context, the reward function is crucial for guiding the learning process of the agent. The reward is defined as the negative value of the execution time for the selected algorithm. A lower execution time results in a higher reward, thus encouraging the agent to favour faster algorithms. The cumulative reward across training episodes reflects the agent's performance and learning progress.

D. Average Improvement

This metric measures the average improvement in execution time when using the RL agent's algorithm selection compared to a baseline approach (such as using a fixed algorithm). It indicates how much faster the RL-based method performs on average.

XI. DEPLOYMENT AND RESULTS

The deployment phase of the matrix multiplication optimization model is crucial to ensure its effectiveness and reliability in real-world scenarios. This section outlines the testing and validation processes employed to assess the model's performance and accuracy after deployment.

A. Testing Environment

The model was deployed in a controlled testing environment that closely simulates the conditions under which it will operate in production. The environment includes the necessary hardware and software configurations, enabling the model to interact with input matrices and execute the selected algorithms effectively. This setup allows for precise monitoring of performance metrics during testing.

B. Testing Strategies

Several strategies were implemented to thoroughly test the model:

- Unit Testing: Individual components of the model, such as the matrix multiplication algorithms and the RL agent, were tested in isolation to ensure each part functions as intended. This testing included validating the correctness of the algorithms against known output values for specific input matrices.

- Integration Testing: After unit testing, integration tests were conducted to evaluate how well the components work together. This phase included testing the entire workflow from input matrix characterization through algorithm selection and execution.

- Performance Testing: This involved running the model with various matrix sizes to assess execution time and accuracy. The performance of the RL agent in selecting the optimal algorithm was also analyzed to ensure it consistently outperformed baseline methods.

C. Validation Techniques

To validate the model's results, several techniques were used:

- Cross-Validation: The dataset was divided into multiple subsets, and the model was trained and tested on these subsets to ensure that the performance metrics are robust and not dependent on a specific set of data.

- Benchmarking: The performance of the model was compared against established benchmarks in matrix multiplication, particularly focusing on execution time and accuracy. This comparison helps to quantify improvements offered by the RL-based approach.

- Real-World Testing: The model was tested with real-world datasets to assess its performance in practical applications. This testing simulates actual use cases where the model will be deployed, providing insights into its real-world efficacy.

D. Results and Adjustments

The results from the testing and validation phases were analysed to identify any performance bottlenecks or areas for improvement. Feedback from testing led to iterative adjustments in the model, enhancing its efficiency and accuracy.

Key findings from the testing phase included:

- Execution Time Reduction: The RL agent consistently selected algorithms that yielded lower execution times compared to fixed methods, validating the effectiveness of the approach.

- Accuracy of Algorithm Selection: The model demonstrated high accuracy in algorithm selection, ensuring that the fastest algorithms were used for matrix multiplication tasks.

- Adaptability: The model's ability to adapt to varying matrix sizes and characteristics was confirmed, showcasing its versatility in different operational scenarios.

XII. FINAL RESULTS

Conclusion

A. Project Conclusion In this project, we developed a novel approach to optimize matrix multiplication using reinforcement learning (RL) to select the most efficient algorithms based on matrix characteristics. The implementation involved three distinct algorithms: Strassen\'s, Winograd\'s, and Tiled Matrix Multiplication, all of which were compared in terms of execution time and accuracy. By leveraging RL techniques, the model demonstrated an ability to learn from previous executions and make informed decisions on algorithm selection, thus enhancing performance and reducing computation time. The testing and validation phases confirmed the model\'s effectiveness, showcasing a significant reduction in execution times when compared to traditional fixed-algorithm approaches. The adaptability of the RL agent to different matrix sizes and configurations highlighted its potential for broad applicability across various domains, including machine learning and deep learning frameworks where efficient matrix operations are crucial. Overall, the project successfully addressed the challenges associated with matrix multiplication optimization and provided a robust framework for further exploration in this area. B. Future Scope Algorithm Expansion: Future work could involve incorporating additional matrix multiplication algorithms, including more advanced or hybrid methods, to further enhance performance. Exploring newer algorithms that are being developed in the field of numerical linear algebra could provide additional benefits. 1) Adaptive Learning Techniques: Implementing more sophisticated RL techniques, such as deep reinforcement learning, could improve the agent\'s learning efficiency and algorithm selection process. This may allow the agent to better capture complex relationships between matrix characteristics and algorithm performance. 2) Real-Time Adaptation: Developing mechanisms for real-time adaptation and learning from incoming data would enable the model to adjust dynamically to changing computational environments, ensuring optimal performance across varying workloads and hardware configurations. 3) Integration with AI and ML Frameworks: Integrating this model into popular AI and machine learning frameworks could enhance their computational efficiency, providing users with faster and more reliable matrix operations as part of their workflows. 4) Benchmarking Against New Technologies: As computational technologies evolve, it would be beneficial to benchmark this approach against emerging hardware, such as quantum computing and specialized accelerators, to explore how these technologies can further optimize matrix operations. By pursuing these future directions, the project can contribute to advancing matrix multiplication optimization and its applications in various computational domains, ultimately enhancing the performance of machine learning and deep learning models.

References

[1] Strassen, V. (1969). Gaussian Elimination is Not Optimal. Numerische Mathematik, 13(4), 354-356. doi:10.1007/BF02165411. [2] Winograd, S. (1980). On Computing the Product of Two Matrices. ACM Transactions on Mathematical Software, 6(1), 100-101. doi:10.1145/355230.355233. [3] Alon, N., & Karp, R. M. (1986). A Fast Matrix Multiplication Algorithm. SIAM Journal on Computing, 15(1), 121-131. doi:10.1137/S0097539783. [4] Avron, H., & Toledo, S. (2010). Approximating Matrix Multiplication: The Power of Randomization. ACM Transactions on Mathematical Software, 36(3), 1-23. doi:10.1145/1724117.1724118. [5] Rojas, C. F., & Koller, D. (2004). Dynamic Bayesian Networks for Decoding and Learning in Reinforcement Learning. In Proceedings of the 21st Conference on Uncertainty in Artificial Intelligence (UAI-2005). [6] Silver, D., Huang, A., Maddison, C. J., Guez, A., Lanctot, M., & et al. (2016). Mastering the Game of Go with Deep Neural Networks and Tree Search. Nature, 529(7587), 484-489. doi:10.1038/nature16961. [7] Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., & et al. (2015). Human-level Control Through Deep Reinforcement Learning. Nature, 518(7540), 529-533. doi:10.1038/nature14236. [8] Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. MIT Press. [9] Zhang, Y., Wang, Y., & Zhou, J. (2017). A Survey of Matrix Multiplication Algorithms. Journal of Computer Science and Technology, 32(3), 454-470. doi:10.1007/s11390-017-1731- 1.

Copyright

Copyright © 2024 G. Kavyasri, V Koushik, A. Krishna Chaitanya, K. Lakshmipriya, T. Lohith, Dr. A. Kiran Kumar . This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Download Paper

Paper Id : IJRASET65498

Publish Date : 2024-11-24

ISSN : 2321-9653

Publisher Name : IJRASET

DOI Link : Click Here

Submit Paper Online

Submit Paper Online