Ijraset Journal For Research in Applied Science and Engineering Technology



ECHO ATTENTION: The AI Assistance for Desktop

Authors: Daizy Deb

DOI Link: https://doi.org/10.22214/ijraset.2024.63540


Abstract

In today's connected world, digital, voice, and virtual assistants have become vital tools that are transforming how people interact with technology and access information. This abstract offers an introduction to these intelligent assistants, exploring their growth, underlying technology, wide range of applications across different domains, and potential future directions. Virtual assistants, sometimes referred to as intelligent personal assistants, are computer programs or applications that use text-based or voice-activated interactions to help users with a variety of activities. They use artificial intelligence (AI) algorithms to understand natural-language questions, retrieve relevant data from the internet, schedule tasks, create reminders, and control smart home appliances. Siri, Google Assistant, and Amazon Alexa are a few examples. Through continual learning from user interactions, these assistants adapt to individual preferences and progressively gain new skills. Virtual assistants first emerged from early chatbots and virtual agents, which mostly handled text-based communication. However, because of advances in AI, machine learning (ML), and natural language processing (NLP), virtual assistants have become sophisticated systems that can understand spoken language and respond accordingly. Voice assistants, including Google Assistant, Apple's Siri, Amazon's Alexa, and Microsoft's Cortana, have become more popular as a result of this development. These assistants use speech recognition technology to let users interact with devices hands-free. Voice assistants are a subclass of virtual assistants that communicate with users mainly through voice instructions. They convert spoken words into text using speech recognition technology and then process the text to produce output or take action.

They have influenced many facets of daily life, including productivity tools, smart homes, automobile systems, healthcare, and customer service. Smart speakers, mobile phones, wearable technology, and other voice-enabled gadgets are already commonplace, enabling smooth voice interactions and access to a wide range of services. Digital assistants form the broader category that includes virtual and voice assistants along with other features and capabilities. To provide proactive support, predictive insights, and tailored suggestions, they combine AI-driven capabilities with data analytics, machine learning, and natural language understanding. Many industries, such as customer service, healthcare, banking, education, and corporate operations, use digital assistants. They anticipate demands and provide pertinent information and services in real time, which increases productivity, streamlines procedures, and improves user experiences.


I. INTRODUCTION

AI voice assistants have become an integral part of our daily lives, transforming the way we interact with technology. These intelligent systems, powered by advanced speech recognition, natural language processing, and machine learning technologies, enable us to communicate with machines using natural language, making our lives more convenient and efficient. The development of AI voice assistants dates back to the 1950s and 1960s. In the late 1990s and early 2000s, substantial developments in machine learning and natural language processing made it possible to build more useful and economically viable voice assistant systems. Early AI-powered voice assistants, including Siri, Google Assistant, Alexa, and Cortana, have evolved into multifaceted, sophisticated tools capable of far more than answering queries. AI voice assistants rely on a network of components, including text-to-speech (TTS) engines, natural language processing (NLP) models, speech recognition models, and decision engines. Combined, these components recognize and understand human speech, interpret user intent, choose the best course of action, and convert the response into synthesized speech. Advances in machine learning, particularly deep learning, have significantly improved the functionality and performance of AI voice assistants. Challenges remain in handling various accents and the complexities and ambiguities of human language. As these systems develop further, we anticipate even more cutting-edge use cases and applications, such as conversational AI, multimodal voice assistants, and integration with augmented and virtual reality (AR/VR) technology.

The creation and use of AI voice assistants also raise significant ethical and societal issues, including privacy, bias, transparency, and possible effects on interpersonal relationships and human communication. Addressing these issues will be essential to ensure that AI voice assistants are designed and deployed responsibly and ethically.

II. LITERATURE REVIEW

AI voice assistants have changed how we engage with technology and have become an essential part of everyday life. Driven by machine learning, natural language processing, and speech recognition technology, these intelligent systems make our lives more convenient and efficient. We can now converse with machines using natural language.

The development of AI voice assistants dates back to the 1950s and 1960s. In the late 1990s and early 2000s, substantial developments in machine learning and natural language processing made it possible to build more useful and economically viable voice assistant systems. The first widely used AI voice assistants, such as Siri, Google Assistant, Alexa, and Cortana, have since developed into complex, multifunctional tools that can do much more than merely respond to questions. AI voice assistants depend on several networks and components, including natural language processing (NLP) models, text-to-speech (TTS) engines, decision engines, and speech recognition models. These components work together to recognize and understand spoken language, determine user intent, select suitable actions or responses, and convert responses into synthetic voice output.

III. METHODOLOGY

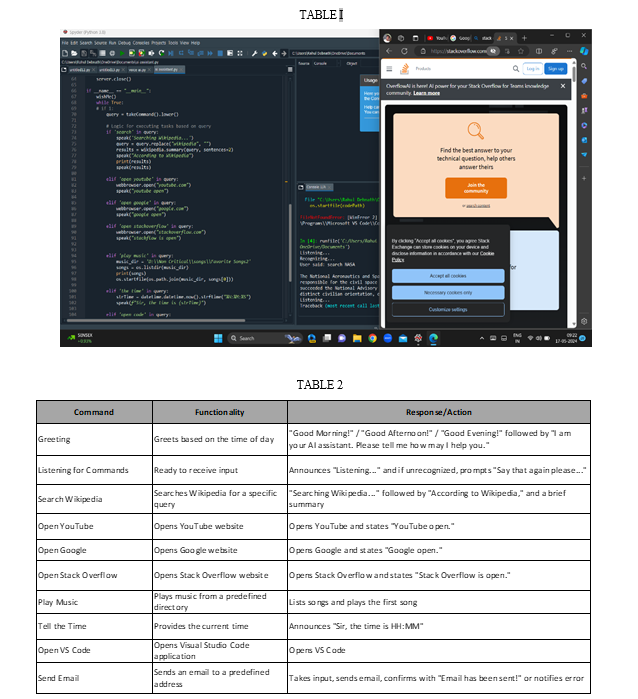

Echo Attention is an AI desktop voice assistant designed to streamline digital interactions and improve productivity. It integrates into the user's workflow and puts a wide range of functions under voice command: composing email, looking up information on Wikipedia, or quickly opening online platforms such as Google and YouTube. It can also launch the user's preferred code editor or IDE, allowing quick transitions between tasks.

Spyder, the Python IDE distributed with Anaconda 3, serves as the main development environment for this project. Using Python, we wrote the initial code that drives the assistant's functionality; Python's versatility and robustness let us build a dynamic and efficient system that meets the diverse needs of the experiment.

The main function orchestrates the entire program's execution and serves as its entry point, where the flow of operations begins. Within it, we invoke our speak function, which starts the chain of actions that carries out the project's functionality. Building on this, we implement an interactive, personalized greeting function in Python.
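The entry-point pattern in this paragraph, together with the time-of-day greeting detailed in the next paragraph, can be sketched as below. This is a minimal sketch assuming the pyttsx3 library for speech output; the function names (`speak`, `greeting_for_hour`, `main`), the hour cut-offs, the prompt text, and the "exit"/"quit" keywords are illustrative assumptions, not the author's code. Commands are passed in as strings so the loop can be tried without a microphone.

```python
import datetime
from typing import Callable, Iterable, List, Optional


def speak(text: str) -> None:
    """Say `text` aloud with pyttsx3; print it instead if no TTS backend is available."""
    try:
        import pyttsx3  # offline text-to-speech library

        engine = pyttsx3.init()
        engine.say(text)
        engine.runAndWait()
    except Exception:  # ImportError, or no speech driver on a headless machine
        print(text)


def greeting_for_hour(hour: int) -> str:
    """Map an hour (0-23) to a greeting; the cut-off hours are illustrative assumptions."""
    if not 0 <= hour <= 23:
        raise ValueError(f"hour must be in 0..23, got {hour}")
    if 5 <= hour < 12:
        return "Good morning!"
    if 12 <= hour < 17:
        return "Good afternoon!"
    if 17 <= hour < 21:
        return "Good evening!"
    return "Good night!"  # 21:00-04:59


def main(
    commands: Iterable[str],
    say: Callable[[str], None] = speak,
    now: Optional[datetime.datetime] = None,
) -> List[str]:
    """Entry point: greet by time of day, then handle commands until 'exit' or 'quit'."""
    hour = (now or datetime.datetime.now()).hour  # system clock unless a time is supplied
    say(f"{greeting_for_hour(hour)} How may I help you?")
    handled: List[str] = []
    for raw in commands:
        command = raw.strip().lower()
        if command in ("exit", "quit"):
            say("Goodbye.")
            break
        handled.append(command)
        say(f"You said: {command}")  # placeholder for dispatching to a real action
    return handled
```

In the full assistant, `commands` would be supplied by the speech-recognition step; for a quick keyboard test, `main(iter(input, "exit"))` reads typed lines until "exit" is entered.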

The greeting function greets users according to the time read from the system clock. To achieve this, we use the `datetime` module, Python's standard tool for date- and time-related operations. We import `datetime`, retrieve the current hour from the system clock, and store it in a variable named `hour`. Conditional statements then select a suitable greeting depending on whether it is morning, afternoon, evening, or night, which makes the interaction more engaging and responsive to the user's context. This part of the project also serves as a practical introduction to date-time manipulation and conditional logic in Python.

To let users give commands by voice, we incorporated the SpeechRecognition module, imported as `sr`, into the system. This module lets the AI assistant capture input from the system's microphone, so users can issue commands in spoken language. Recognition is wrapped in error handling so that failed or unclear recognition does not interrupt the program. This proactive approach not only minimizes disruptions to user interaction but also contributes to the overall stability and usability of the AI assistant.
By combining speech recognition with these error-handling mechanisms, the project aims to provide a seamless and intuitive interaction platform in which users communicate with the AI assistant through natural speech, making human-computer interaction more efficient and convenient.
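A minimal sketch of microphone capture with error handling, assuming the third-party SpeechRecognition package (imported as `sr`), PyAudio for microphone access, and Google's web speech endpoint via `recognize_google`. The function names, the `en-IN` language code, the pause threshold, and the timeout are assumptions for illustration; the paper does not specify them.

```python
from typing import Optional

try:
    import speech_recognition as sr  # pip install SpeechRecognition (PyAudio needed for the mic)
except ImportError:
    sr = None  # library absent: only normalize_query() below remains usable


def normalize_query(text: Optional[str]) -> str:
    """Trim and lower-case recognized text; an empty string means 'nothing understood'."""
    return (text or "").strip().lower()


def take_command(timeout: float = 5.0) -> str:
    """Listen once on the default microphone and return the recognized command."""
    if sr is None:
        raise RuntimeError("The SpeechRecognition package is not installed")
    recognizer = sr.Recognizer()
    recognizer.pause_threshold = 1  # seconds of silence that mark the end of a phrase
    try:
        with sr.Microphone() as source:
            recognizer.adjust_for_ambient_noise(source, duration=0.5)
            audio = recognizer.listen(source, timeout=timeout)
        text = recognizer.recognize_google(audio, language="en-IN")
    except (sr.WaitTimeoutError, sr.UnknownValueError):
        # No speech started in time, or the speech was unintelligible
        return ""
    except sr.RequestError as err:
        # Network or service failure: report it instead of crashing the assistant
        print(f"Speech service unavailable: {err}")
        return ""
    return normalize_query(text)
```

Returning an empty string instead of raising lets the caller simply ask the user to repeat and listen again.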

We then developed the logic that handles a range of commands, including conducting Wikipedia searches, opening designated websites, and executing various other functions. The recognized command is checked for keywords (for example, "search" triggers a Wikipedia lookup), and the matching action is executed. The framework is designed to be extended, so the repertoire of commands can be refined and expanded over time, giving users easier access to digital platforms, whether through Wikipedia searches, opening designated websites, or other functions.
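One simple way to realize keyword-based command handling is an ordered list of (keyword, action) rules checked against the recognized text. The rule set and action names below are hypothetical; they mirror the commands mentioned in this paper (Wikipedia search, opening websites, music, time, email) rather than reproducing the author's code.

```python
from typing import List, Tuple

# Ordered rules: the first keyword found in the command wins (hypothetical action names).
RULES: List[Tuple[str, str]] = [
    ("wikipedia", "wikipedia"),
    ("search", "wikipedia"),
    ("open", "open_site"),
    ("play music", "play_music"),
    ("time", "tell_time"),
    ("email", "send_email"),
    ("exit", "exit"),
]


def route(command: str) -> Tuple[str, str]:
    """Return (action, argument); the argument is the text after the matched keyword."""
    text = command.strip().lower()
    for keyword, action in RULES:
        index = text.find(keyword)
        if index != -1:
            argument = text[index + len(keyword):].strip()
            return action, argument
    return "unknown", text
```

Plain substring matching keeps the sketch short, but it is coarse: "time" would also match "sometimes", so a fuller implementation might match whole words or use an intent classifier.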

IV. RESULT

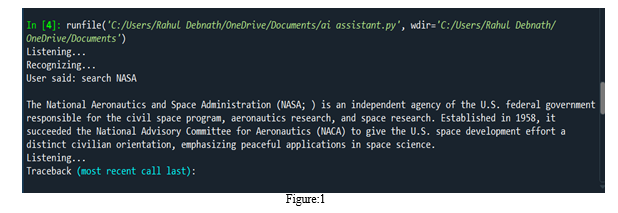

This Python script is a versatile voice-controlled assistant designed to streamline user interactions through natural language commands. By harnessing the power of speech recognition and text-to-speech conversion, it empowers users to effortlessly navigate a range of tasks, from retrieving information on Wikipedia to accessing popular websites like YouTube, Google, and Stack Overflow.

Beyond web browsing, it integrates with local media, enabling users to play their favourite music. It also provides utilities such as time reporting and email, and offers a personal touch through greetings tailored to the time of day. These features aim to improve productivity and accessibility both online and offline. In the initialization phase, the script configures the components needed for user interaction. Specifically, it initializes the pyttsx3 engine, an offline text-to-speech library, which gives the assistant the ability to speak its responses in a natural and intelligible manner. It also sets up the speech recognition engine, the component that allows the assistant to understand user commands.
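A sketch of the initialization phase, assuming pyttsx3's property interface (`getProperty`/`setProperty` with the `voices`, `rate`, and `volume` keys). The voice index, speaking rate, and volume below are illustrative defaults, not values taken from the paper.

```python
def init_tts(voice_index: int = 0, rate: int = 170, volume: float = 1.0):
    """Create and configure a pyttsx3 engine; return None if no TTS backend is available."""
    try:
        import pyttsx3

        engine = pyttsx3.init()
    except Exception:  # ImportError or a missing platform driver (SAPI5, NSSpeechSynthesizer, eSpeak)
        return None
    voices = engine.getProperty("voices")
    if voices and 0 <= voice_index < len(voices):
        engine.setProperty("voice", voices[voice_index].id)  # pick an installed voice
    engine.setProperty("rate", rate)  # speaking rate in words per minute
    engine.setProperty("volume", max(0.0, min(volume, 1.0)))  # pyttsx3 expects 0.0-1.0
    return engine
```

Creating the engine once at start-up and reusing it for every response avoids re-initializing the speech driver on each call.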

After initialization, the assistant greets the user according to the time of day. This feature adds a layer of personalization and shows that the assistant adapts its responses to real-time conditions, creating a more engaging, user-friendly experience and a natural interaction flow from the start.

The system then listens for the user's voice commands and interprets them to execute a variety of specified tasks. The assistant captures audio input, converts it to text with speech recognition, and maps the text to actionable instructions. This interaction model lets users engage with the system naturally. Its ability to understand and respond to spoken commands relies on machine-learning-based speech recognition and natural language processing techniques, supporting reliable task execution across a range of functions.

The assistant can search Wikipedia for any given query. When it receives a voice command containing the keyword "search," it starts a Wikipedia search and retrieves relevant information based on the user's request.
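A minimal sketch of the Wikipedia step, assuming the third-party `wikipedia` package (a wrapper around the MediaWiki API) and its `summary()` function. The trigger and filler words, the two-sentence summary length, and the fallback messages are assumptions for illustration.

```python
try:
    import wikipedia  # pip install wikipedia
except ImportError:
    wikipedia = None

TRIGGERS = {"search", "wikipedia"}  # hypothetical trigger words removed from the query


def extract_topic(command: str) -> str:
    """Strip trigger words so that 'search for alan turing on wikipedia' -> 'alan turing'."""
    words = [w for w in command.lower().split() if w not in TRIGGERS]
    # Drop filler words left at the edges of the phrase
    while words and words[0] in {"for", "about"}:
        words.pop(0)
    while words and words[-1] in {"on", "in"}:
        words.pop()
    return " ".join(words)


def wiki_summary(command: str, sentences: int = 2) -> str:
    """Return a short Wikipedia summary for the topic named in the command."""
    topic = extract_topic(command)
    if not topic:
        return "What should I search for?"
    if wikipedia is None:
        return "Wikipedia search is unavailable."
    try:
        return wikipedia.summary(topic, sentences=sentences)
    except wikipedia.exceptions.DisambiguationError as err:
        return f"'{topic}' is ambiguous; options include {', '.join(err.options[:3])}."
    except wikipedia.exceptions.PageError:
        return f"No Wikipedia page found for '{topic}'."
```

The returned text would then be spoken back to the user; network errors are not caught here and would need their own handling in a complete assistant.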

This feature improves access to information and demonstrates the integration of natural language processing with real-time data retrieval, giving users immediate, informative answers to their questions. It is a practical example of applying AI to everyday tasks, providing quick and efficient access to a vast source of knowledge.

The assistant also integrates with web browsers to improve user convenience and productivity. By recognizing and executing voice commands, it can automatically open websites such as YouTube, Google, and Stack Overflow.
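Opening a site by voice can be sketched with Python's standard `webbrowser` module, which opens a URL in the user's default browser. The site table and the `open_site` name are assumptions; the opener is a parameter so the function can be tested without launching a browser.

```python
import webbrowser
from typing import Callable, Optional

# Hypothetical mapping from spoken site names to URLs
SITES = {
    "youtube": "https://www.youtube.com",
    "google": "https://www.google.com",
    "stack overflow": "https://stackoverflow.com",
}


def open_site(command: str, opener: Callable[[str], object] = webbrowser.open) -> Optional[str]:
    """Open the first known site named in the command; return its URL, or None if none matched."""
    text = command.lower()
    for name, url in SITES.items():
        if name in text:
            opener(url)  # by default, opens the URL in the system's default browser
            return url
    return None
```

Keeping the URLs in a table means adding a new site is a one-line change rather than a new branch in the command logic.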

This functionality allows users to quickly access these platforms without the need for manual input, streamlining their workflow and enabling a hands-free experience. Whether it's for watching videos on YouTube, performing web searches on Google, or seeking coding assistance on Stack Overflow, this feature significantly improves the efficiency and accessibility of web navigation. The assistant is equipped with the capability to play music from a specified directory on the user's local machine.
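Local music playback can be sketched as listing audio files in a directory and handing one to the operating system's default player. The extension list, the choice of the first track in name order, and the per-platform launch commands (`os.startfile` on Windows, `open` on macOS, `xdg-open` on Linux) are assumptions; the paper does not say how playback is started.

```python
import os
import subprocess
import sys
from typing import List

AUDIO_EXTENSIONS = {".mp3", ".wav", ".ogg", ".flac", ".m4a"}  # assumed set of playable types


def list_tracks(directory: str) -> List[str]:
    """Return full paths of audio files in `directory`, sorted by name."""
    return sorted(
        os.path.join(directory, name)
        for name in os.listdir(directory)
        if os.path.splitext(name)[1].lower() in AUDIO_EXTENSIONS
        and os.path.isfile(os.path.join(directory, name))
    )


def play_first_track(directory: str) -> str:
    """Open the first track with the operating system's default media player."""
    tracks = list_tracks(directory)
    if not tracks:
        raise FileNotFoundError(f"No audio files found in {directory}")
    track = tracks[0]
    if sys.platform.startswith("win"):
        os.startfile(track)  # Windows only
    elif sys.platform == "darwin":
        subprocess.run(["open", track], check=False)
    else:
        subprocess.run(["xdg-open", track], check=False)
    return track
```

Delegating to the default player avoids bundling an audio library; the trade-off is that the assistant cannot pause or skip tracks itself.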

Conclusion

In conclusion, the AI assistant project presented here is a step toward voice-activated intelligent systems that improve user productivity and convenience. By integrating several libraries, the assistant can perform a range of tasks, including information retrieval, web browsing, music playback, time reporting, and email sending. The project uses the `pyttsx3` library for text-to-speech conversion, so the assistant can interact with users through spoken responses. The `SpeechRecognition` library (imported as `speech_recognition`) converts the user's speech to text, allowing users to issue commands verbally, which the assistant then processes and executes. One notable feature is the ability to retrieve information from Wikipedia, giving users concise summaries on a wide array of topics and making the assistant a useful quick-reference tool. The assistant also integrates with popular web services such as YouTube, Google, and Stack Overflow, which users can open through voice commands, showing its versatility in web-related tasks. Furthermore, the project interacts with the operating system, allowing users to open applications such as VS Code and play music files stored locally. These features make the assistant a multitasking tool for both work and entertainment. Finally, the assistant can compose and send emails through voice commands, providing a hands-free way to manage electronic communication.
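The email feature can be sketched with the standard library's `email.message.EmailMessage` and `smtplib.SMTP_SSL`. The Gmail host and port, the use of an app password, and the function names are assumptions; the paper does not say which mail server it uses, and credentials should be read from the environment rather than written into source code.

```python
import smtplib
from email.message import EmailMessage


def build_email(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Assemble a plain-text message from dictated fields."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = subject
    message.set_content(body)
    return message


def send_email(message: EmailMessage, password: str,
               host: str = "smtp.gmail.com", port: int = 465) -> None:
    """Send over implicit TLS; host and port assume Gmail with an app password."""
    with smtplib.SMTP_SSL(host, port) as server:
        server.login(message["From"], password)
        server.send_message(message)
```

Separating message construction from sending lets the assistant read the dictated email back to the user for confirmation before anything leaves the machine.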


Copyright

Copyright © 2024 Daizy Deb. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET63540

Publish Date : 2024-07-03

ISSN : 2321-9653

Publisher Name : IJRASET
