Ijraset Journal For Research in Applied Science and Engineering Technology


Effective Deep Learning Technique for Enhanced Data Privacy and Security

Authors: Prof. D. M. Kanade, Sharanya Datrange, Rutuja Aher, Nayan Deshmukh, Divya Tambat

DOI Link: https://doi.org/10.22214/ijraset.2024.62868


Abstract

In the past, keeping data private and secure during analysis was challenging: sensitive information often remained vulnerable, risking privacy breaches. This research introduces a comprehensive solution to these challenges built on three main stages: PII detection, differential privacy with Gaussian noise, and homomorphic encryption. The system starts with data collection from various sources. What sets the system apart is its ability to safeguard personal data. PII detection techniques identify and anonymize sensitive information, preserving privacy without compromising data utility. Next, the data are preprocessed to improve their quality for analysis. Differential privacy is then applied by introducing controlled Gaussian noise and aggregating the data, protecting individual privacy while still enabling meaningful insights. Moreover, this research uses homomorphic encryption, which allows confidential calculations to be performed without revealing sensitive information; this is especially beneficial for securing Indian household data. In the data analysis stage, the system applies machine learning and analytical methods to extract insights from the protected data. Finally, the results are visualized and presented in reports, so that the protected data is used effectively while respecting privacy and security concerns. In summary, the system provides a comprehensive solution for handling sensitive data, ensuring privacy and enabling valuable insights to be drawn without compromising individuals' privacy or data security, a significant improvement over past approaches in which these concerns were inadequately addressed.
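The abstract does not say how PII is detected. The sketch below illustrates a minimal rule-based version of the detect-and-mask step. It assumes free-text fields and uses regular expressions for e-mail addresses, Indian mobile numbers, and 12-digit Aadhaar-style numbers. These patterns, the `mask_pii` helper, and the sample sentence are all hypothetical; they are not the authors' detector.

```python
import re

# Hypothetical, heuristic patterns; the paper does not describe its PII detector.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "AADHAAR": re.compile(r"\b\d{4}\s?\d{4}\s?\d{4}\b"),    # 12 digits, optional spaces
    "PHONE": re.compile(r"(?:\+91[\s-]?)?\b[6-9]\d{9}\b"),  # Indian mobile number
}


def mask_pii(text):
    """Replace each detected PII span with a [LABEL] placeholder.

    Returns the masked text and a list of (label, original_value) findings.
    Patterns run in dictionary order, so 12-digit IDs are masked before
    10-digit phone numbers are searched for.
    """
    findings = []
    for label, pattern in PII_PATTERNS.items():
        findings.extend((label, m.group()) for m in pattern.finditer(text))
        text = pattern.sub(f"[{label}]", text)
    return text, findings


masked, findings = mask_pii(
    "Contact Ravi at ravi.k@example.com or +91 9876543210; Aadhaar 1234 5678 9012."
)
print(masked)
# Contact Ravi at [EMAIL] or [PHONE]; Aadhaar [AADHAAR].
```

The name "Ravi" is not masked. Names and quasi-identifiers such as location or household size need other techniques, such as named-entity recognition or generalization. This is one reason why removing PII alone does not anonymize a dataset.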


I. INTRODUCTION

In today's world of vast datasets, it is crucial to balance the need for insights against the need to protect privacy. This study focuses on safeguarding privacy while analyzing household population data for India: as we examine population dynamics, sensitive information must be kept safe. In addition to detecting personally identifiable information (PII), this research uses two key privacy techniques: adding noise with Differential Privacy, and computing on encrypted data with Homomorphic Encryption. By combining these methods, we aim to protect identities and sensitive data while keeping the dataset useful for analysis. Our goal is to create a system that balances data utility and privacy, contributing to secure data analysis methods. The approach is like putting three locks on a treasure chest. One lock checks for personal information, another adds random noise to the data, and the last one scrambles the data so that no one can peek inside. Used together, the three locks keep personal information from leaking while the data remains useful for study. In short, this research builds a shield around people's privacy while still allowing useful lessons to be drawn from the data.

DATASET: Household population, India. The dataset contains demographic information for various geographic entities within India. It includes parameters such as State Code, District Code, and Sub District Code, together with the corresponding names of India's States, Union Territories, Districts, and Sub-districts. It also gives the total, rural, and urban classification, along with the counts of inhabited and uninhabited villages. In addition, the dataset records the number of towns and households. It also includes population statistics: the total, male, and female population. Geospatial details, such as the area covered in square kilometers, are also provided, offering insights into the distribution of population density across different regions.
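Because the table contains both population counts and area, density and related indicators can be derived directly. The following is a minimal sketch. It assumes hypothetical field names, since the dataset's exact column headers are not given here. The numbers are illustrative and are not taken from the dataset.

```python
from dataclasses import dataclass


@dataclass
class AreaRecord:
    """One row of the household-population table (field names are hypothetical)."""
    name: str
    households: int
    total_population: int
    male_population: int
    female_population: int
    area_sq_km: float

    def density(self) -> float:
        """Persons per square kilometre."""
        return self.total_population / self.area_sq_km

    def sex_ratio(self) -> float:
        """Females per 1,000 males, the convention used by the Census of India."""
        return 1000 * self.female_population / self.male_population

    def household_size(self) -> float:
        """Average number of persons per household."""
        return self.total_population / self.households


def aggregate_density(records):
    """Density of a group of areas: total population over total area."""
    return sum(r.total_population for r in records) / sum(r.area_sq_km for r in records)


# Illustrative values only; they are not taken from the dataset.
district_x = AreaRecord("District X", 200_000, 1_000_000, 510_000, 490_000, 2_500.0)
district_y = AreaRecord("District Y", 50_000, 250_000, 126_000, 124_000, 5_000.0)

print(district_x.density())  # 400.0
print(aggregate_density([district_x, district_y]))
```

When rolling districts up to a state, the sketch divides total population by total area rather than averaging district densities. Averaging densities would overweight small, dense districts: 225 persons/km² here instead of about 166.7.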

II. METHODOLOGY

A. Differential Privacy

1. Consider adjacent datasets D and D′ that differ in at most one data item. An attacker who observes the answers of a query function f on both datasets can infer the presence or absence of that item. Differential privacy prevents this kind of attack by answering queries through a randomized algorithm M instead of the exact f. Differential privacy techniques can also quantify the degree of protection that M gives to sensitive information.

If the privacy budget of the randomized algorithm M is high, the probability that an attacker infers sensitive data is high. The lower the privacy budget, the more rigorous the protection of data privacy. We give the definition and implementation of differential privacy below, starting with the formal definition:

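2. Definition 1 (Differential privacy). Let M be a randomized algorithm with domain D and range S. M satisfies (ε, δ)-differential privacy if, for any two adjacent inputs x and y and every set of outputs O ⊆ S, the following holds:

$$\Pr[M(x) \in O] \le e^{\varepsilon} \, \Pr[M(y) \in O] + \delta,$$

where ε is the privacy budget and δ is a small failure probability. Setting δ = 0 gives pure ε-differential privacy.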
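The sketch below makes the adjacent-dataset attack and the role of the privacy budget concrete. It uses the classical Gaussian mechanism, which is commonly calibrated as σ = √(2 ln(1.25/δ)) · Δ / ε to give (ε, δ)-differential privacy for 0 < ε < 1. Here ε is the privacy budget, δ is a small failure probability, and Δ is the query's L2 sensitivity. The household sizes, the `is_large` predicate, and the chosen ε and δ are hypothetical. The paper does not state its noise calibration, so this is an illustration, not the authors' implementation.

```python
import math
import random


def gaussian_sigma(sensitivity, epsilon, delta):
    """Classical Gaussian-mechanism noise scale for (epsilon, delta)-DP.

    The standard analysis assumes 0 < epsilon < 1.
    """
    if not 0 < epsilon < 1:
        raise ValueError("the classical calibration assumes 0 < epsilon < 1")
    if not 0 < delta < 1:
        raise ValueError("delta must lie in (0, 1)")
    return math.sqrt(2 * math.log(1.25 / delta)) * sensitivity / epsilon


def count_query(dataset, predicate):
    """Exact query f; adding or removing one record changes it by at most 1."""
    return sum(1 for record in dataset if predicate(record))


def gaussian_mechanism(dataset, predicate, epsilon, delta, rng):
    """Randomized algorithm M: the exact count plus calibrated Gaussian noise."""
    sigma = gaussian_sigma(1.0, epsilon, delta)  # sensitivity of a count is 1
    return count_query(dataset, predicate) + rng.gauss(0.0, sigma)


def is_large(household_size):
    return household_size >= 5


# Hypothetical household sizes; D_prime is adjacent to D (last record removed).
D = [4, 5, 3, 6, 2, 7, 5]
D_prime = D[:-1]

# Without noise, the difference of two exact answers exposes the removed record.
exact_gap = count_query(D, is_large) - count_query(D_prime, is_large)

rng = random.Random(7)
noisy_D = gaussian_mechanism(D, is_large, epsilon=0.5, delta=1e-5, rng=rng)
noisy_D_prime = gaussian_mechanism(D_prime, is_large, epsilon=0.5, delta=1e-5, rng=rng)

# A smaller privacy budget epsilon means more noise and stronger protection.
for eps in (0.1, 0.5, 0.9):
    print(f"epsilon={eps}: sigma={gaussian_sigma(1.0, eps, 1e-5):.2f}")
```

The exact gap of 1 between f(D) and f(D′) is what allows an attacker to detect the removed record. At ε = 0.5, the noise (σ ≈ 9.7) swamps that gap. The printed σ values grow as ε shrinks, matching the statement that a lower privacy budget gives stronger protection.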

B. Homomorphic Encryption

1. Homomorphic encryption is a cryptographic technique that enables computations to be performed on encrypted data without decrypting it first. This property is crucial for preserving the privacy of sensitive information while still allowing meaningful operations to be carried out. Homomorphic Encryption (HE) is an encryption primitive that allows one party to encrypt data and send it to another party, which can then perform certain operations on the encrypted version of the data [10]. An encryption system that allows arbitrary calculations to be carried out on encrypted data without decryption or access to any decryption key is known as HE [21]. When the computation ends, the encrypted result is sent back to the first party, which can decrypt it and obtain the result in plain text. A homomorphic encryption scheme Enc satisfies the following equation:

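$$\mathrm{Enc}(a) \odot \mathrm{Enc}(b) = \mathrm{Enc}(a \oplus b)$$

Here $\mathrm{Enc}\colon X \to Y$ is a homomorphic encryption scheme, $X$ is the set of messages (plaintexts), and $Y$ is the set of ciphertexts. In addition, $a$ and $b$ are messages in $X$, and $\odot$ and $\oplus$ are the corresponding operations on ciphertexts and on messages, respectively [6].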

2. Homomorphic encryption schemes can be broadly divided into partially homomorphic and fully homomorphic encryption. In a partially homomorphic system, only one type of operation (either addition or multiplication) can be performed on the encrypted data. Fully homomorphic encryption supports both addition and multiplication, and therefore arbitrary computations. The fundamental idea behind homomorphic encryption is to transform plaintext operations into equivalent operations on encrypted data. As a result, computations on the ciphertexts produce results that, when decrypted, correspond to the output of the original operations.

3. The equation above captures the core principle of homomorphic encryption: computations can be performed while the underlying data remains confidential. Using this technique in research provides a robust framework for secure data processing. The computations are performed directly on the encrypted data, which yields an encrypted result.

4. If applicable, the encrypted result can be converted into a format suitable for further analysis or interpretation. The private key is then used to decrypt the final result, so that only authorized entities can access the original outcome. Finally, we confirm that the decrypted result matches the intended output of the computations performed on the encrypted data.
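The paper does not name the homomorphic scheme it uses. To illustrate the encrypt, compute, decrypt workflow in items 3 and 4, the sketch below swaps in the Paillier cryptosystem. Paillier is a partially (additively) homomorphic scheme: multiplying two ciphertexts modulo n² yields an encryption of the sum of the plaintexts modulo n, i.e. Enc(a)·Enc(b) = Enc(a + b). The primes, the household counts, and the helper names are hypothetical. Keys this small offer no security, and a real deployment would use a vetted library. The code needs Python 3.8+ for `pow(x, -1, n)`.

```python
import math
import random


def keygen(p, q):
    """Toy Paillier key pair from two primes (far too small to be secure)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because the generator is fixed to g = n + 1
    return n, (lam, mu)


def encrypt(n, m, rng):
    """Enc(m) = g^m * r^n mod n^2 with g = n + 1 and random r coprime to n."""
    n2 = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, private_key, c):
    """m = L(c^lam mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    lam, mu = private_key
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n


def add_encrypted(n, c1, c2):
    """Ciphertext operation: multiplying ciphertexts adds the plaintexts."""
    return (c1 * c2) % (n * n)


rng = random.Random(2024)
n, private_key = keygen(1009, 1013)

# Hypothetical household counts from different parties, encrypted before sharing.
counts = [1200, 3400, 560]
ciphertexts = [encrypt(n, m, rng) for m in counts]

# The analyst aggregates ciphertexts without seeing any individual count.
encrypted_total = ciphertexts[0]
for c in ciphertexts[1:]:
    encrypted_total = add_encrypted(n, encrypted_total, c)

total = decrypt(n, private_key, encrypted_total)  # only the key holder can do this
print(total)  # 5160
```

Because Paillier supports only addition on ciphertexts, it fits aggregate statistics such as sums and counts. Workloads that also need ciphertext multiplication would require a fully homomorphic scheme such as BFV [11].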

C. Autoencoder

The autoencoder is a fundamental component of our methodology: it contributes to the preservation of data privacy and facilitates meaningful feature learning. In our project, we used an autoencoder architecture consisting of multiple layers with Rectified Linear Unit (ReLU) activation functions.

The ReLU activation function, ReLU(x) = max(0, x), introduces non-linearity into the model and supports efficient gradient propagation, which improves the learning process.
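The forward pass below is a minimal sketch of a multi-layer ReLU autoencoder. It assumes six min-max-normalized input features and hypothetical layer sizes 6 → 4 → 2 → 4 → 6, because the paper does not report its architecture. ReLU is applied on the hidden layers, the output layer is linear, and the reconstruction error is measured as mean squared error. Plain Python is used so that the sketch runs without a deep learning framework, although a real implementation would normally use one.

```python
import random


def relu(vector):
    """Element-wise ReLU(x) = max(0, x)."""
    return [max(0.0, v) for v in vector]


def dense(weights, bias, x):
    """Fully connected layer: one output per weight row, plus bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def init_layer(n_in, n_out, rng):
    """He-style uniform initialisation, a common choice for ReLU layers."""
    limit = (6.0 / n_in) ** 0.5
    weights = [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


# Hypothetical architecture: 6 features -> 4 -> 2 (bottleneck code) -> 4 -> 6
SIZES = [6, 4, 2, 4, 6]
BOTTLENECK_LAYER = 1  # index of the layer whose output is the code


def build_autoencoder(rng):
    return [init_layer(n_in, n_out, rng) for n_in, n_out in zip(SIZES, SIZES[1:])]


def forward(layers, x):
    """Return (code, reconstruction): ReLU on hidden layers, linear output layer."""
    code, h = None, x
    for idx, (weights, bias) in enumerate(layers):
        h = dense(weights, bias, h)
        if idx < len(layers) - 1:
            h = relu(h)
        if idx == BOTTLENECK_LAYER:
            code = h
    return code, h


def reconstruction_error(x, x_hat):
    """Mean squared error between an input record and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


layers = build_autoencoder(random.Random(0))
record = [0.2, 0.5, 0.1, 0.9, 0.4, 0.3]  # hypothetical normalised record
code, reconstruction = forward(layers, record)
print(len(code), len(reconstruction), reconstruction_error(record, reconstruction))
```

The output layer is kept linear so that reconstructions are not clipped at zero. Only the hidden layers, including the bottleneck, use ReLU.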

- Training Process: The autoencoder was trained on the Household dataset, using an optimizer and a custom loss function tailored to our project's objectives. Privacy concerns were also addressed by integrating differential privacy techniques into the training procedure. By distorting the data according to the privacy budget, the autoencoder provides protection against potential privacy breaches.

- Evaluation Metrics: The autoencoder's performance was evaluated using reconstruction error analysis, which quantifies the discrepancy between the input data and the reconstructed output generated by the autoencoder. This analysis showed how effectively the autoencoder learned meaningful representations of the dataset.

- Results and Insights: Through extensive experimentation, we observed promising results from the autoencoder model. Despite challenges such as the noise and complexity inherent in real-world datasets, the autoencoder demonstrated robust capabilities in feature learning and data denoising. These results underscore the significance of the autoencoder in preserving data privacy and facilitating secure data processing.
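The training and evaluation steps above can be sketched as follows, under several assumptions:

- The data are "distorted according to the privacy budget" by input perturbation, meaning Gaussian noise is added to each record before training.
- The model is a one-hidden-layer ReLU autoencoder trained by full-batch gradient descent on mean squared error.
- Synthetic data stand in for the Household dataset.

The class `TinyAutoencoder`, the noise level `sigma`, and all sizes are hypothetical. Because `sigma` is not calibrated to a privacy budget, the sketch carries no formal differential-privacy guarantee. DP-SGD [12], which clips and noises gradients, is the more common way to make training itself differentially private.

```python
import math
import random


def relu(vector):
    return [max(0.0, v) for v in vector]


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


class TinyAutoencoder:
    """Hypothetical model: ReLU hidden layer (encoder) + linear decoder."""

    def __init__(self, n_in, n_hidden, rng):
        lim1, lim2 = math.sqrt(6.0 / n_in), math.sqrt(6.0 / n_hidden)
        self.W1 = [[rng.uniform(-lim1, lim1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.W2 = [[rng.uniform(-lim2, lim2) for _ in range(n_hidden)] for _ in range(n_in)]
        self.b2 = [0.0] * n_in

    def forward(self, x):
        pre = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(self.W1, self.b1)]
        h = relu(pre)
        y = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(self.W2, self.b2)]
        return pre, h, y

    def loss(self, data):
        """Average reconstruction MSE over all records."""
        return sum(mse(self.forward(x)[2], x) for x in data) / len(data)

    def train_step(self, data, lr):
        """One full-batch gradient-descent step on the average reconstruction MSE."""
        n_in, n_h = len(self.W2), len(self.W1)
        gW1 = [[0.0] * n_in for _ in range(n_h)]
        gb1 = [0.0] * n_h
        gW2 = [[0.0] * n_h for _ in range(n_in)]
        gb2 = [0.0] * n_in
        scale = 2.0 / (n_in * len(data))  # derivative of the averaged squared error
        for x in data:
            pre, h, y = self.forward(x)
            dy = [scale * (yj - xj) for yj, xj in zip(y, x)]
            for j in range(n_in):
                gb2[j] += dy[j]
                for k in range(n_h):
                    gW2[j][k] += dy[j] * h[k]
            for k in range(n_h):
                if pre[k] > 0:  # ReLU passes gradient only where it was active
                    dh = sum(self.W2[j][k] * dy[j] for j in range(n_in))
                    gb1[k] += dh
                    for i in range(n_in):
                        gW1[k][i] += dh * x[i]
        for j in range(n_in):
            self.b2[j] -= lr * gb2[j]
            for k in range(n_h):
                self.W2[j][k] -= lr * gW2[j][k]
        for k in range(n_h):
            self.b1[k] -= lr * gb1[k]
            for i in range(n_in):
                self.W1[k][i] -= lr * gW1[k][i]


rng = random.Random(42)
# Synthetic stand-in for normalised household features (NOT the paper's data):
# four features driven by two hidden factors.
clean = []
for _ in range(30):
    a, b = rng.random(), rng.random()
    clean.append([a, b, 0.5 * (a + b), a - 0.5 * b])

# Input perturbation with a hypothetical, uncalibrated noise level.
sigma = 0.05
noisy = [[v + rng.gauss(0.0, sigma) for v in row] for row in clean]

model = TinyAutoencoder(n_in=4, n_hidden=3, rng=rng)
initial_error = model.loss(noisy)
for _ in range(300):
    model.train_step(noisy, lr=0.1)
final_error = model.loss(noisy)
clean_error = model.loss(clean)  # error against the records underlying the noisy inputs
print(f"noisy MSE {initial_error:.4f} -> {final_error:.4f}; clean MSE {clean_error:.4f}")
```

Reconstruction error is reported twice: on the noisy training inputs and on the clean records underlying them. This mirrors the input-versus-reconstruction comparison described in the evaluation step.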


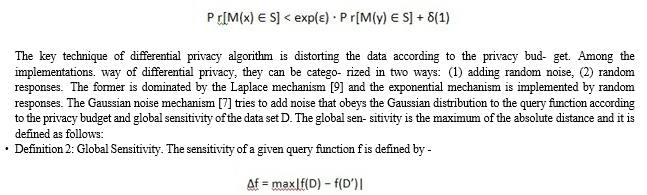

D. Figures and tables

The figure presents the program architecture as a subsystem block diagram.


Conclusion

In conclusion, removing personally identifiable information (PII) from datasets is not enough to anonymize data. This research addresses the privacy and security of sensitive data by combining Differential Privacy with deep learning techniques. Differential privacy is achieved by adding Gaussian noise to the data. The research also investigates performing computations on encrypted data using homomorphic encryption, which eliminates the need for decryption during processing. This approach aims to maintain the utility of the data while achieving high performance. An autoencoder is also integrated to strengthen the research's privacy measures. It learns a compressed representation of the data that allows efficient reconstruction without compromising privacy. By leveraging the autoencoder, our research keeps sensitive information protected throughout the data processing pipeline. This contributes to the overall goal of effective deep learning for data privacy and security.

References

[1] Jiapeng Zhang, Luoyi Fu, Huan Long, Gui'e Meng, Feilong Tang, Xinbing Wang, and Guihai Chen, “Collective De-Anonymization of Social Networks With Optional Seeds,” IEEE, 2021.

[2] Jun Li, Fengshi Zhang, Yonghe Guo, Siyuan Li, Guanjun Wu, Dahui Li, and Hongsong Zhu, “A Privacy-Preserving Online Deep Learning Algorithm Based on Differential Privacy,” 2023.

[3] Matheus M. Silveira, Ariel L. Portela, Rafael A. Menezes, Michael S. Souza, Danielle S. Silva, Maria C. Mesquita, and Rafael L. Games, “Data Protection based on Searchable Encryption and anonymization Techniques,” IEEE, 2023.

[4] Salaheddine Kabou, Sidi Mohamed Benslimane, and Abdelbaset Kabou, “Towards new way of minimizing the loss of information quality in the dynamic anonymization,” IEEE, 2020, 978-1-7281-2580-0/20.

[5] Peng Chong, “Deep Learning based Sensitive Data Detection,” IEEE, 2022.

[6] Muhammad Imran Tariq, Nisar Ahmed Memon, Shakeel Ahmed, Shahzadi Tayyaba, Muhammad Tahir Mushtaq, Natash Ali Mian, Muhammad Imran, and Muhammad W. Ashraf, “A Review of Deep Learning Security and Privacy Defensive Techniques,” Hindawi Mobile Information Systems, vol. 2020, 2020.

[7] J. Dong, A. Roth, and W. J. Su, “Gaussian differential privacy,” arXiv preprint arXiv:1905.02383, 2019.

[8] Maryam Archie, Sophie Gershon, Abigail Katcoff, and Aaron Zeng, “Who's Watching? De-anonymization of Netflix Reviews using Amazon Reviews.”

[9] C. Dwork, F. McSherry, K. Nissim, and A. Smith, “Calibrating noise to sensitivity in private data analysis,” in Theory of Cryptography Conference, Springer, 2006, pp. 265-284.

[10] A. Acar, H. Aksu, A. S. Uluagac, and M. Conti, “A survey on homomorphic encryption schemes,” ACM Computing Surveys, vol. 51, no. 4, pp. 1-35, 2018.

[11] S. Halevi, Y. Polyakov, and V. Shoup, “An improved RNS variant of the BFV homomorphic encryption scheme,” in Topics in Cryptology - CT-RSA 2019, pp. 83-105, Springer, Berlin, Germany, 2019.

[12] M. Abadi, A. Chu, I. Goodfellow et al., “Deep learning with differential privacy,” in Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security (CCS '16), pp. 308-318, Vienna, Austria, 2016.

[13] N. C. Abay, Y. Zhou, M. Kantarcioglu, B. Thuraisingham, and L. Sweeney, “Privacy preserving synthetic data release using deep learning,” in Machine Learning and Knowledge Discovery in Databases, pp. 510-526, Springer, Berlin, Germany, 2019.

[14] S. D and K. Karibasappa, “Enhancing data protection in cloud computing using key derivation based on cryptographic technique,” in 2021 5th International Conference on Computing Methodologies and Communication (ICCMC), 2021, pp. 291-299.

[15] C. F. Chiasserini, M. Garetto, and E. Leonardi, “Social network deanonymization under scale-free user relations,” IEEE/ACM Trans. Netw., vol. 24, no. 6, pp. 3756-3769, Dec. 2016.

[16] K. Alrawashdeh and C. Purdy, “Toward an online anomaly intrusion detection system based on deep learning,” in Proceedings of the 2016 15th IEEE International Conference on Machine Learning and Applications (ICMLA), pp. 195-200, Anaheim, CA, USA, December 2016.

[17] A. De Salve, P. Mori, and L. Ricci, “A survey on privacy in decentralized online social networks,” Computer Science Review, vol. 27, pp. 154-176, 2018.

[18] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” Nature, vol. 521, no. 7553, pp. 436-444, 2015.

[19] V. Kotu and B. Deshpande, “Deep learning,” in Data Science, pp. 307-342, Elsevier, Amsterdam, Netherlands, 2019.

[20] X. Qiu, L. Zhang, Y. Ren, P. N. Suganthan, and G. Amaratunga, “Ensemble deep learning for regression and time series forecasting,” in Proceedings of the 2014 IEEE Symposium on Computational Intelligence in Ensemble Learning (CIEL), pp. 1-6, Orlando, FL, USA, December 2014.

[21] D. Boneh, “Threshold cryptosystems from threshold fully homomorphic encryption,” in Advances in Cryptology - CRYPTO 2018, pp. 565-596, Springer, Berlin, Germany, 2018.

Copyright

Copyright © 2024 Prof. D. M. Kanade, Sharanya Datrange, Rutuja Aher, Nayan Deshmukh, Divya Tambat. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET62868

Publish Date : 2024-05-28

ISSN : 2321-9653

Publisher Name : IJRASET
