Ijraset Journal For Research in Applied Science and Engineering Technology

Emotion-Driven Soundtrack Selector using AI

Authors: Mohith Namburu, Manjunadha Nagasai T

DOI Link: https://doi.org/10.22214/ijraset.2024.58506

Abstract

This work presents an emotion-based music player, a web application intended for a wide range of users, particularly music lovers. With music players and streaming apps, people can listen to music anytime and anywhere while traveling, playing sports, or going about their daily routines. Advances in mobile networks and digital multimedia technologies have made digital music the most sought-after consumer content among young people. Moreover, listening to appropriate music at the right time has the potential to enhance mental well-being. Human emotion has come to play an important role in recent applications. Emotion reflects a person's feelings, which may or may not be expressed outwardly, and it shapes individual behaviour, which can take various forms. The objective of this project is to extract features from the human face, recognize the emotion, and play music according to the emotion identified. Many existing methods, however, rely on past listening data to recommend music and do not use facial expressions. Here, facial expressions are captured by a nearby capture device or a built-in webcam, and an algorithm recognizes the emotion from the captured features. Many music recommendation systems use content-based or collaborative recommendation. However, a user's choice of music depends not only on historical preference but also on the user's current mood. This project proposes an emotion-based music recommendation system that detects the user's emotion from the webcam and plays songs that match the user's mood. Specifically, the system classifies the user's emotion and automatically redirects to the music page. The emotional information is stored in well-defined arrays, so the model can predict the emotion, and its accuracy can be improved using these data.

Introduction

I. INTRODUCTION

Emotion detection is now widely used and is considered an important technique in many real-life applications, such as criminal investigation, video indexing, smart-card applications, image database investigation, surveillance, civilian applications, and security. With the rapid growth of smart technologies and effective feature-extraction algorithms, emotion identification in media such as music or films is developing quickly, and such a system can play a significant role in many potential applications, including human-computer interaction systems and music entertainment. We use facial expressions to propose a recommender system for emotion recognition that can identify the user's emotion and recommend a list of appropriate songs. This project describes the development of an emotion-based music player. It is intended to reduce the effort users spend managing large playlists. First, the emotion is captured through the face mesh from one or more movements of the lips and face. The proposed model extracts the user's expressions and features to determine the user's current mood. Once the emotion is identified, a playlist of songs from the system data is presented to the user, taking into account the user's chosen language and singer. Its main goal is to give music lovers a more enjoyable listening experience. The model includes the following moods: Happy, Sad, Neutral, and Angry. The technique involves image processing and face detection. The input to the model is a set of images of the user, which are further processed to determine the user's mood.

A. Streamlit

Streamlit is an app framework for the Python language. It makes building web applications for data science and machine learning easy and fast. It is compatible with many Python libraries, such as scikit-learn, Keras, NumPy, pandas, and Matplotlib. In our project, we used Streamlit to host our application. With Streamlit, no callbacks are needed, since widgets are treated as variables. Compared with other platforms, Streamlit is easy to use, reduces workload, and leaves more time for the core work. Data caching speeds up computation pipelines.

Python offers many options for deploying a model. Popular frameworks include Flask and Django, but using them requires some knowledge of HTML, CSS, and JavaScript. With these prerequisites in mind, Adrien Treuille, Thiago Teixeira, and Amanda Kelly created Streamlit. With Streamlit, a machine learning model or other Python project can be deployed without writing front-end code.

II. RELATED WORKS

Renuka R. Londhe et al. [1] presented an analysis of facial expression and recognition based on a statistical approach, focusing on changes in the brightness of the corresponding pixels and in the curvatures of the face. The authors used Artificial Neural Networks (ANN) to classify the emotions and also recommended several playlist approaches.

Anukriti Dureha et al. [2] proposed an accurate algorithm for generating a music playlist based on facial expressions. They observe that manually segregating a playlist and annotating songs according to the user's current emotional state is time-consuming. A number of methods have been proposed to automate this process, but existing algorithms are slow, add to the overall cost of the system by requiring additional hardware (such as EEG equipment and sensors), and are less accurate. Their study proposes an algorithm that automates the creation of an audio playlist based on the user's facial expressions, saving the time and effort previously spent doing so manually. The algorithm aims to lower the overall processing time and cost of the designed system. Its facial expression recognition module is validated against user-dependent and user-independent datasets.

Parul Tambe et al. [3] proposed automating the interaction between the user and the music player by learning the user's preferences, moods, and activities and recommending songs accordingly. The device recorded the user's varied facial expressions in order to assess the emotion and predict the music genre.

A. Habibzad et al. [4] developed an algorithm for recognizing facial emotions in three stages: pre-processing, feature extraction, and classification. The first part describes the image-processing stages, including the pre-processing and filtering used to extract various facial features. The eye and lip ellipse characteristics are optimised in the second part, and the optimal eye and lip parameters are used to identify the emotions in the third part. The reported results showed that facial recognition was faster than with other approaches.

A. Proposed Scheme

Dataset

We collected datasets from different sources to train the models. The data was taken from Kaggle and the UCI repository and consists of images of different facial emotions, such as happy, sad, and neutral, which enable the model to learn to identify emotions.

III. METHODOLOGY

A. Face Capturing

The goal of face capturing is to acquire images of the user, so we use the device's webcam. We use the OpenCV library to capture the images. OpenCV's Python bindings represent images as NumPy arrays, which makes it simple to interoperate with other libraries that also use NumPy, and OpenCV is widely used for real-time computer vision. When execution begins, the application requests the user's permission to access the camera and then turns on the webcam.
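The capture step can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' code: the helper name `capture_frames` and its `count` parameter are hypothetical. It accepts any object whose `read()` method returns a `(success, frame)` pair, which is the interface exposed by OpenCV's `cv2.VideoCapture`.

```python
from typing import Any, List


def capture_frames(camera: Any, count: int) -> List[Any]:
    """Read up to `count` frames from a camera-like object.

    `camera` is assumed to expose read() -> (ok, frame), as
    cv2.VideoCapture does. Reading stops early if a read fails.
    """
    frames: List[Any] = []
    while len(frames) < count:
        ok, frame = camera.read()
        if not ok:  # camera unavailable, permission denied, or stream ended
            break
        frames.append(frame)
    return frames
```

With OpenCV installed, `cv2.VideoCapture(0)` opens the default webcam and can be passed in as `camera`; calling `release()` on it afterwards frees the device.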

D. MediaPipe Face Mesh

MediaPipe Face Mesh estimates 468 3D face landmarks in real time, even on mobile devices. It uses machine learning to infer the 3D facial surface from a single camera input, without the need for a dedicated depth sensor. By using lightweight model architectures together with GPU acceleration throughout the pipeline, the solution delivers the real-time performance that live experiences require. The solution also includes the Face Transform module, which bridges the gap between face landmark estimation and useful real-time augmented reality applications. It establishes a metric 3D space and then estimates a face transform within that space using the screen positions of the face landmarks. The face transform data consists of common 3D primitives, such as a face pose transformation matrix. Under the hood, Procrustes Analysis, a lightweight statistical analysis method, is used to drive a robust, performant, and portable logic. The analysis runs on the CPU and adds only a small speed and memory footprint on top of the ML model.
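To illustrate the idea behind Procrustes Analysis, the sketch below aligns one set of 2D points to another using translation, rotation, and uniform scale. It is a simplified 2D illustration, not MediaPipe's implementation, which works in a metric 3D space; the function name `procrustes_align_2d` is hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def procrustes_align_2d(source: List[Point], target: List[Point]) -> List[Point]:
    """Align `source` to `target` with translation, rotation and uniform scale.

    Points are treated as complex numbers, so a single complex factor `a`
    encodes both rotation and scale. The least-squares optimum is
    a = sum(conj(s) * t) / sum(|s|^2) over the centred points.
    """
    if len(source) != len(target) or not source:
        raise ValueError("point sets must be non-empty and of equal length")
    src = [complex(x, y) for x, y in source]
    tgt = [complex(x, y) for x, y in target]
    src_mean = sum(src) / len(src)
    tgt_mean = sum(tgt) / len(tgt)
    # Remove translation by centring both point sets on their means.
    src_c = [p - src_mean for p in src]
    tgt_c = [p - tgt_mean for p in tgt]
    denom = sum(abs(p) ** 2 for p in src_c)
    if denom == 0:
        raise ValueError("source points must not all coincide")
    a = sum(s.conjugate() * t for s, t in zip(src_c, tgt_c)) / denom
    # Apply rotation and scale, then move onto the target's centroid.
    aligned = [a * s + tgt_mean for s in src_c]
    return [(p.real, p.imag) for p in aligned]
```

If `target` is an exact rotated, scaled, and shifted copy of `source`, the aligned points coincide with `target` up to floating-point error.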

IV. EMOTION CLASSIFICATION

When the camera successfully detects the user's face, an interface appears that overlays the image to extract the face for further processing. In the next step, the system uses the face mesh to capture 100 images for data collection based on the landmarks, measuring the x and y values of each landmark. In the initial phase of this project, for image capture and face detection, we used an algorithm that captures the user's face to organize the images. This requires many positive images, that is, images that actually contain faces; here we supplied our own emotion images to train the model. The code extracts the spatial positions of facial features from the face image based on the landmarks in the face mesh. It then compares the input with the stored data to predict the emotion class, which is one of the four emotions: Happy, Sad, Neutral, or Angry. Identification of the emotion depends on the landmarks detected on the user's face.
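The landmark-based comparison described above might look like the following sketch. It rests on assumptions that are not in the paper: landmark 0 is used as the reference point, and a nearest-neighbour lookup stands in for the trained classifier. The names `landmarks_to_features` and `nearest_emotion` are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Landmark = Tuple[float, float]


def landmarks_to_features(landmarks: Sequence[Landmark]) -> List[float]:
    """Flatten (x, y) landmarks into one vector, relative to the first landmark.

    Subtracting a reference point makes the features independent of where
    the face sits in the frame. Using landmark 0 as the reference is an
    assumption made for this sketch.
    """
    if not landmarks:
        raise ValueError("at least one landmark is required")
    ref_x, ref_y = landmarks[0]
    features: List[float] = []
    for x, y in landmarks:
        features.extend((x - ref_x, y - ref_y))
    return features


def nearest_emotion(features: Sequence[float],
                    stored: Dict[str, List[Sequence[float]]]) -> str:
    """Return the label whose stored sample is closest to `features`.

    A nearest-neighbour stand-in for the trained classifier.
    """
    best_label, best_dist = None, math.inf
    for label, samples in stored.items():
        for sample in samples:
            dist = math.dist(features, sample)  # Euclidean distance
            if dist < best_dist:
                best_label, best_dist = label, dist
    if best_label is None:
        raise ValueError("no stored samples to compare against")
    return best_label
```

In practice, `stored` would hold the feature vectors collected for each emotion during data collection, and every vector must have the same length as the input.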

V. MUSIC RECOMMENDATION

We created a web page that takes the user's favourite language and favourite singer as input and then turns on the webcam to detect the user's emotion at that moment. Based on the detected emotion, it redirects to songs present in the system dataset. The face mesh values are obtained from real-time images captured by the webcam. We use four primary emotions because it is extremely difficult to characterise every emotion that could be captured; restricting the options reduces processing time and gives a more refined result. The code compares the detected values against thresholds, and the result is passed on to drive the web page. Songs are then selected from the system dataset according to the identified emotion, since each emotion is mapped to entries in the system dataset. Many kinds of models could be used for recommendation, depending on their accuracy. Because we use MediaPipe to detect the face, it gives better accuracy than other algorithms. When a song is played based on the user's emotion, the system also represents the emotion numerically: each of the four emotions is assigned a number, and that number determines the music that is suggested.
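The lookup from detected emotion to songs can be sketched as below. This is an illustration under assumptions, not the authors' implementation: the numeric codes, the `Song` record, the `recommend` function, and the placeholder library entries are all hypothetical.

```python
from typing import Dict, List, NamedTuple

# Numeric codes for the four emotions. The specific numbers are assumptions.
EMOTION_CODES: Dict[str, int] = {"Happy": 0, "Sad": 1, "Neutral": 2, "Angry": 3}


class Song(NamedTuple):
    title: str
    language: str
    singer: str
    emotion_code: int


def recommend(songs: List[Song], emotion: str,
              language: str, singer: str) -> List[Song]:
    """Return songs matching the detected emotion and the user's choices."""
    if emotion not in EMOTION_CODES:
        raise ValueError(f"unknown emotion: {emotion!r}")
    code = EMOTION_CODES[emotion]
    return [
        song for song in songs
        if song.emotion_code == code
        and song.language.lower() == language.lower()
        and song.singer.lower() == singer.lower()
    ]


# Placeholder entries standing in for the system dataset.
LIBRARY = [
    Song("Track A", "English", "Singer X", EMOTION_CODES["Happy"]),
    Song("Track B", "English", "Singer X", EMOTION_CODES["Sad"]),
    Song("Track C", "Telugu", "Singer Y", EMOTION_CODES["Happy"]),
]
```

Calling `recommend(LIBRARY, "Happy", "English", "Singer X")` returns only the entries tagged with the Happy code for that language and singer; an empty list means the dataset has no match.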

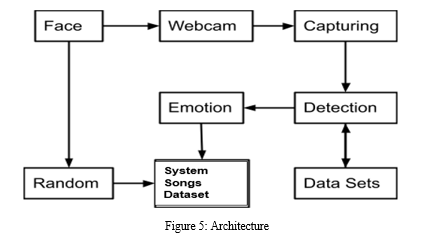

VI. ARCHITECTURE

In this project, when the web page runs, users are first prompted to enter their preferred language and favourite singer. Second, OpenCV is used not only for processing but also for capturing images from the webcam. The emotion prediction is displayed on the player's main web page, and the system recommends songs from the system data based on the detected emotion. Face landmarks are obtained with the MediaPipe library in Python, and the emotion-based music recommender uses a Keras model to detect the emotion and play the music.

The system characterises facial expressions through detection and aggregation of spatial features. After feature extraction, the emotions are classified into four categories (Happy, Sad, Angry, and Neutral). The emotions passed to the final step are in numerical form, and a song is played according to the detected emotion. The main goal of the face detection method is to locate the face. The other part of the project is the random mode, for which we use MediaPipe to obtain the landmark values for the face. The detected emotion, together with the chosen language and singer, is searched in the system dataset, and songs related to the emotion are played.

Conclusion

In this project, our music recommendation model is based on the emotions captured from the user. The project is designed to improve interaction between the music system and its users, because music can change a person's state of mind and, for some people, relieves stress. Recent research shows wide scope for developing emotion-based music recommendation systems. The present system therefore provides expression-based recognition so that it can detect emotions and redirect to the music page accordingly.

A. Future Work

An emotion-based music player offers a common platform to all music listeners by automating song selection and regularly updating playlists based on the user's detected emotion. The music player we use runs locally. Since devices have become portable and easy to carry, it is also feasible to capture a person's emotion using a variety of easy-to-use wearable sensors rather than the entire manual process. That would provide enough information to estimate the user's mood precisely. The system, with more sophisticated features, will need to be upgraded on a regular basis. The detection methodology is used to improve the automatic playing of music. The facial expressions are identified using a programming interface installed on the local PC.

B. References Based on Related Work

1) Renuka R. Londhe, Dr. Vrushshen P. Pawar, 2012, Analysis of facial expression and recognition based on statistical approach, International Journal of Soft Computing and Engineering.

2) Anukriti Dureha, 2014, An Accurate Algorithm for Generating a Music Playlist based on Facial Expressions, International Journal of Computer Applications.

3) Parul Tambe, Taher Khalil, Noor UlAin Shaikh and Yash Bagadia, 2015, Advanced Music Player with Integrated Face Recognition Mechanism, International Journal of Advanced Research in Computer Science and Software Engineering.

4) A. Habibizad Navin, Mir Kamal Mirnia, Tabriz Branch, Islamic Azad University, Tabriz, Iran, 2012, International Conference on Computer Modelling and Simulation.

References

[1] Parmar Darsna, "Music Recommendation Based on Content and Collaborative Approach & Reducing Cold Start Problem", IEEE 2nd International Conference on Intensive Systems and Control, 2018.

[2] Hemanth P, Adarsh, Aswani C.B, Ajith P, Veena A Kumar, EMO PLAYER: Emotion Based Music Player, International Research Journal of Engineering and Technology (IRJET), vol. 5, no. 4, April 2018, pp. 4822-87.

[3] A. Abdul, J. Chen, H.-Y. Liao, and S.-H. Chang, An Emotion-Aware Personalized Music Recommendation System Using a Convolutional Neural Networks Approach, Applied Sciences, vol. 8, no. 7, p. 1103, Jul. 2018.

[4] Shlok Gilda, Husain Zafar, Chintan Soni, Kshitija Waghurdekar, Smart music player integrating facial emotion recognition and music mood recommendation, International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), 2017.

[5] Lee, J., Yoon, K., Jang, D., Jang, S., Shin, S., & Kim, J. (2018). Music Recommendation System Based on Genre Distance and User Preference Classification.

Copyright

Copyright © 2024 Mohith Namburu, Manjunadha Nagasai T. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET58506

Publish Date : 2024-02-19

ISSN : 2321-9653

Publisher Name : IJRASET
