Ijraset Journal For Research in Applied Science and Engineering Technology


Emotion Recognition from Brain EEG Signals

Authors: Lohitha Lakshmi Kanchi, Chandana Sri Narra, Guna Mantri, Madhupriya Palepogu, Chandra Sekhar Reddy Mettu

DOI Link: https://doi.org/10.22214/ijraset.2024.59255


Abstract

The recognition of emotions plays a vital role in fields such as neuroscience, cognitive science, and biomedical engineering. This project focuses on developing a system that recognizes emotions from EEG signals. The goal is to accurately classify emotional states along the valence and arousal dimensions by analyzing EEG brain-wave patterns. The study is based on the DEAP dataset, which contains EEG and peripheral physiological signals recorded while participants watched music video clips. The main objective is to explore and compare the efficacy of two classification techniques: Long Short-Term Memory (LSTM) networks and Convolutional Neural Networks (CNN). A total of 20 electrodes are used to identify and differentiate among 12 emotional states. The principal focus is on arousal- and valence-related trends, using Russell's Circumplex Model, which places emotions on a two-dimensional plane defined by these two factors and thus allows emotions to be visualized according to their levels of arousal and valence. Through extensive training and testing on the DEAP dataset, the accuracy of each classifier in predicting valence and arousal is evaluated. This comparative assessment helps clarify the strengths and weaknesses of each method for EEG-based emotion recognition.


I. INTRODUCTION

Emotions are intricate psychological and physiological states that encompass diverse subjective sensations, actions, and bodily reactions. They play a pivotal role in human interactions, shaping how we perceive and engage with the world around us. Emotions are commonly characterized by their valence (positive, negative, or neutral), their intensity, and their specific quality, such as happiness, sorrow, anger, fear, or disgust.

Due to the increasing availability of electronic devices in recent years, people spend more time on social media, online video games, online shopping, and other electronic services. However, most human-computer interaction (HCI) systems today lack emotional intelligence: they cannot analyze or understand emotional data, and therefore cannot identify human emotions and use that information to inform their choices and behaviors. Human emotions can be identified from behavior, speech, facial expressions, and physiological cues.

The first three approaches are subjective and can be unreliable, because subjects may deliberately hide their genuine feelings. Identifying emotions from physiological signals is more reliable and objective. Electroencephalography (EEG) records the electrical activity of neurons in the brain through electrodes placed on the scalp. By leveraging EEG signals, we can gain deeper insight into individuals' emotional states, paving the way for more personalized and responsive systems.

This introduction sets the stage for exploring the real-world requirements and applications of emotion recognition technology, highlighting its transformative impact across diverse fields.

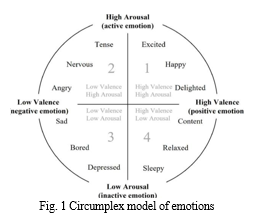

Russell's Circumplex Model, proposed by James A. Russell, is a psychological model used to understand and measure emotions. It organizes emotions along two main dimensions: valence and arousal. The model arranges emotions in a circular structure, hence the term "circumplex." The circle is divided into quadrants, each representing a different combination of valence and arousal.

For example, high arousal and positive valence emotions such as excitement or joy would be in one quadrant, while low arousal and negative valence emotions such as sadness or lethargy would be in another quadrant.
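To make the quadrant structure concrete, the following Python sketch maps a point on the valence-arousal plane to its quadrant. It assumes both coordinates are centered so that 0 is neutral (the model itself does not fix a numeric scale), and the example emotions given for the two quadrants not named above are illustrative assumptions.

```python
def circumplex_quadrant(valence: float, arousal: float) -> str:
    """Return the circumplex quadrant of a (valence, arousal) point.

    Both coordinates are assumed to be centered so that 0 is neutral;
    points lying exactly on an axis are assigned to the non-negative side.
    """
    arousal_side = "high arousal" if arousal >= 0 else "low arousal"
    valence_side = "positive valence" if valence >= 0 else "negative valence"
    return f"{arousal_side} / {valence_side}"


# Example emotions per quadrant (illustrative; only the first and last rows come from the text).
EXAMPLE_EMOTIONS = {
    "high arousal / positive valence": ["excitement", "joy"],
    "high arousal / negative valence": ["anger", "fear"],
    "low arousal / positive valence": ["calm", "relaxation"],
    "low arousal / negative valence": ["sadness", "lethargy"],
}

if __name__ == "__main__":
    quadrant = circumplex_quadrant(valence=0.7, arousal=0.8)
    print(quadrant, EXAMPLE_EMOTIONS[quadrant])
    # high arousal / positive valence ['excitement', 'joy']
```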

II. LITERATURE REVIEW

- Koelstra et al. introduced DEAP, a database for emotion analysis using physiological signals. The database's primary goal was to support a system that recommends music videos to users based on their emotional states; music video clips were used to elicit different emotions. Physiological signals from thirty-two participants were recorded, including breathing rate, skin temperature, plethysmograph, electromyogram, and EEG signals.

- Yang et al. applied a CNN model directly to extract information from the participants' EEG signals, achieving recognition accuracies of 90.65% and 90.01% for valence and arousal, respectively.

- Kim and Choi analyzed EEG signals from a temporal perspective, mining their temporal information with an LSTM and classifying the resulting features with a fully connected layer, ultimately achieving 90.2% and 88.1% recognition accuracy. Most researchers use all channel signals in their experiments to ensure that the extracted features carry sufficiently rich information. However, these studies overlook the fact that not all channels are strongly associated with emotion.

- Atkinson and Campos proposed an EEG feature-based emotion recognition approach evaluated on the DEAP dataset. They extracted statistical features (the median, standard deviation, and kurtosis coefficient) and, for each channel, the band power in the theta (4–8 Hz), slow alpha (8–10 Hz), alpha (8–12 Hz), beta (12–30 Hz), and gamma (30+ Hz) bands, together with Hjorth parameters (HP) and fractal dimension (FD). Minimum Redundancy Maximum Relevance (MRMR) was used to select a relevant subset of the extracted features, and a support vector machine (SVM) then classified them into low/high arousal and low/high valence classes with accuracies of 60.72% and 62.4%, respectively.

- Liu et al. used MRMR for channel selection and then applied Random Forest and KNN models for emotion classification, achieving accuracies of 69.97% for valence and 71.23% for arousal.

- Salama et al. used 3D Convolutional Neural Networks (CNNs) to explore the integration of EEG data with advanced neural network architectures. This approach emphasizes the spatiotemporal features inherent in EEG signals, enabling more accurate emotion classification. The achieved accuracies are 87.44% and 88.49% for valence and arousal, respectively.

- Zhu merged the temporal, frequency, and spatial features extracted from the EEG signal and used SVM to classify the merged features, achieving 71% and 70% recognition accuracy in the valence and arousal dimensions.

- Chanel et al. proposed an approach for adapting game difficulty to the player's current emotions. EEG signals were recorded from fourteen players while they played Tetris at three difficulty levels (easy, medium, and hard), intended to elicit boredom, engagement, and anxiety, respectively. The Fourier transform was used to compute the energy of the theta (4–8 Hz), alpha (8–12 Hz), and beta (12–30 Hz) bands for each electrode. Several feature selection methods were tested with different classifiers; the reported accuracy of 56% was obtained using analysis of variance (ANOVA) for feature selection and linear discriminant analysis (LDA) as the classifier.

- Jadhav et al. used EEG spectrogram images for emotion recognition, extracting Gray-Level Co-occurrence Matrix (GLCM) features from them. Using the DEAP dataset, they classified four emotions (happy, sad, relaxed, and angry) with a K-nearest neighbor (KNN) classifier.

- Al-Nafjan et al. acquired and preprocessed EEG data to remove noise, then extracted relevant features from the preprocessed signals to capture patterns associated with different emotional states.

They designed a DNN architecture and trained it on labeled data to learn complex relationships between features and emotions, achieving accuracies of 89.3% and 74.33% for valence and arousal, respectively.

- Choi et al. trained an LSTM model on EEG signals from the DEAP dataset to classify the arousal and valence levels associated with mental states. The LSTM architecture captures temporal dependencies in the EEG signals; the reported valence and arousal accuracies are 78% and 74.65%, respectively.

- Xing et al. combined a Sparse Autoencoder (SAE) with Long Short-Term Memory (LSTM) networks to improve emotion recognition from multi-channel EEG signals, using the SAE for feature learning and the LSTM to capture temporal dependencies. They achieved accuracies of 81.10% and 74.38% for valence and arousal, respectively.

- Alhagry et al. presented an EEG-based emotion recognition system that uses LSTM recurrent neural networks to capture temporal dependencies in EEG signals. Their results indicate the potential of LSTM models in this domain, with accuracies of 85.45% for valence and 85.65% for arousal.

- Chao et al. investigated whether adding synchronization measurements improves the representation of EEG signals. Their study shows that synchronization measurements improve the discriminative power of features for emotion detection; using an SVM classifier, they obtained accuracies of 70.21% and 71.85% for valence and arousal, respectively.

- Tripathi et al. used both DNN and CNN models. For the CNN, they converted the DEAP data into 2D images. The DNN achieved valence and arousal accuracies of 75.78% and 73.125%, respectively, while the CNN achieved 81.406% and 73.36%.

- Lohitha et al. analyzed two speech datasets, RAVDESS and TESS, covering seven distinct emotions: neutral, happy, sad, angry, fearful, disgusted, and startled. Noise injection, time stretching, shifting, and pitch shifting were applied to the raw audio waveforms for preprocessing and data augmentation. The research focuses on using voice signals to reliably discern emotions.

- Bhargavi et al. examined the use of genetic algorithms (GA) to distinguish breast cancer tumour gene sequences from normal breast gene sequences. GA is a population-based evolutionary algorithm that uses mutation and crossover to search for the best solutions. The research aims to create a progressive framework for distinguishing between cancer and non-cancer data sequences.

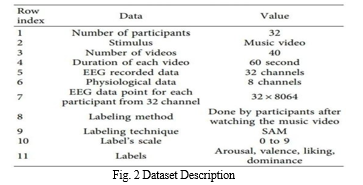

III. DATASET

DEAP is a database for emotion analysis comprising EEG, peripheral physiological, and facial video recordings captured while participants watched 40 music videos selected to evoke a wide range of emotions. The study involved 32 healthy participants aged between 19 and 37, with an average age of 26.9 years.

Data were recorded on 40 channels: 32 EEG channels and eight peripheral physiological channels. Each trial lasted 63 seconds, consisting of a 3-second pre-trial baseline followed by one minute of video viewing. After each video, participants rated it on the valence, arousal, dominance, and liking scales, which serve as the per-participant ground-truth labels.
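The trial structure described above can be sketched in Python. The sketch assumes the preprocessed Python release of DEAP, in which each participant file (for example `s01.dat`) is a pickled dictionary holding a `data` array of shape (40 trials, 40 channels, 8064 samples) and a `labels` array of shape (40, 4) with valence, arousal, dominance, and liking ratings; at 128 Hz, 8064 samples correspond to the 63-second trial. The helper names `load_deap_subject` and `split_baseline` are hypothetical.

```python
import pickle

import numpy as np

FS = 128         # sampling rate of the preprocessed data (Hz)
BASELINE_S = 3   # pre-trial baseline length (s)
N_EEG = 32       # the first 32 of the 40 channels are EEG


def load_deap_subject(path):
    """Load one preprocessed DEAP participant file (hypothetical helper)."""
    with open(path, "rb") as f:
        subject = pickle.load(f, encoding="latin1")  # files were pickled under Python 2
    return subject["data"], subject["labels"]


def split_baseline(trials, fs=FS, baseline_s=BASELINE_S, n_eeg=N_EEG):
    """Split (trials, channels, samples) into baseline and stimulus segments.

    Only the EEG channels are kept. Returns arrays of shape
    (trials, n_eeg, fs * baseline_s) and (trials, n_eeg, remaining samples).
    """
    eeg = trials[:, :n_eeg, :]
    cut = fs * baseline_s
    return eeg[:, :, :cut], eeg[:, :, cut:]


if __name__ == "__main__":
    # Synthetic stand-in with DEAP's per-trial shape; a real run would use load_deap_subject(...).
    fake = np.zeros((1, 40, 8064))
    baseline, stimulus = split_baseline(fake)
    print(baseline.shape, stimulus.shape)  # (1, 32, 384) (1, 32, 7680)
```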

Arousal signifies the intensity of one's emotions, with higher values indicating stronger feelings and lower values suggesting weaker emotions, ranging from placid to excited. Valence measures the level of pleasure in one's emotions, with higher values reflecting happier and more positive feelings and lower values indicating a more negative emotional state, ranging from unpleasant to pleasant.

The EEG signals were originally recorded at a 512 Hz sampling rate. The data were then downsampled to 128 Hz and re-referenced to the common average, after which eye artifacts were removed and a 4.0–45.0 Hz bandpass filter was applied.

IV. PROPOSED METHOD

A. EEG Signal Processing:

The EEG data were downsampled to 128 Hz. Electrooculography (EOG) measures the corneo-retinal standing potential between the front and back of the human eye. To remove the noise introduced by eye movements, an EOG artifact removal method, Automatic Removal of Ocular Artifacts, was applied to the EEG. A 4.0–45.0 Hz bandpass filter was then applied. Finally, the data were segmented into 60-second trials, and the 3-second pre-trial baseline was removed.
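As a rough illustration of band-limiting, the sketch below keeps only the 4.0–45.0 Hz content of a signal by zeroing FFT bins outside that range. This brick-wall FFT filter is a simplification chosen so the example needs only NumPy; it is an assumption for illustration, not necessarily the filter used to prepare DEAP, and a practical pipeline would more likely use a Butterworth design.

```python
import numpy as np


def fft_bandpass(x, fs=128.0, low=4.0, high=45.0):
    """Zero out spectral components outside [low, high] Hz along the last axis.

    A simplified zero-phase brick-wall filter, for illustration only.
    """
    n = x.shape[-1]
    spectrum = np.fft.rfft(x, axis=-1)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    keep = (freqs >= low) & (freqs <= high)
    spectrum[..., ~keep] = 0.0              # discard out-of-band bins
    return np.fft.irfft(spectrum, n=n, axis=-1)


if __name__ == "__main__":
    fs = 128
    t = np.arange(4 * fs) / fs                 # 4 s of samples
    drift = np.sin(2 * np.pi * 1.0 * t)        # 1 Hz component, below the band
    alpha = np.sin(2 * np.pi * 10.0 * t)       # 10 Hz component, inside the band
    filtered = fft_bandpass(drift + alpha, fs=fs)
    print(np.allclose(filtered, alpha, atol=1e-6))  # True: the 1 Hz drift is removed
```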

B. Feature Extraction:

EEG signals are high-dimensional and may contain many features. The main goal of feature extraction in EEG-based emotion recognition is to obtain information that effectively reflects an individual's emotional state, which can then be used by emotion classification algorithms. Because the accuracy of emotion identification depends heavily on the extracted features, identifying the key EEG properties of emotional states is vital.

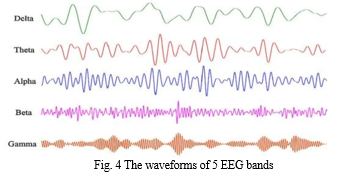

Typical methods include statistical metrics of the signal and its first difference (e.g., median, standard deviation, kurtosis, and skewness), spectral density (the power in specific frequency bands), logarithmic band power (Log BP, the logarithm of the power in a band), Hjorth parameters (activity, mobility, and complexity), the wavelet transform (a time-frequency decomposition of the EEG signal), and fractal dimension (the complexity of the fundamental patterns hidden in a signal). EEG activity is commonly divided into five frequency bands: delta (0.5–4 Hz), theta (4–8 Hz), alpha (8–13 Hz), beta (13–30 Hz), and gamma (30–45 Hz).
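Two of the features listed above, band power and Hjorth parameters, can be computed with a few lines of NumPy. This is a minimal sketch under stated assumptions: band power is taken as the mean periodogram value within each band (one of several common conventions), bands are treated as half-open intervals, and the function names are hypothetical. Log BP would simply be the logarithm of these values.

```python
import numpy as np

# Frequency bands from the text (Hz), as half-open [low, high) intervals.
# Note: after the 4.0-45.0 Hz bandpass, the delta band carries little energy.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def band_powers(x, fs=128.0, bands=BANDS):
    """Return the mean periodogram power of a 1-D signal in each band."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    psd = np.abs(np.fft.rfft(x)) ** 2 / len(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    powers = {}
    for name, (lo, hi) in bands.items():
        mask = (freqs >= lo) & (freqs < hi)
        powers[name] = float(psd[mask].mean()) if mask.any() else 0.0
    return powers


def hjorth(x):
    """Return the Hjorth activity, mobility, and complexity of a 1-D signal."""
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)       # first difference approximates the derivative
    ddx = np.diff(dx)     # second difference
    activity = np.var(x)
    mobility = np.sqrt(np.var(dx) / activity)
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility
    return activity, mobility, complexity


if __name__ == "__main__":
    fs = 128
    t = np.arange(2 * fs) / fs             # one 2 s window
    x = np.sin(2 * np.pi * 10 * t)         # a pure 10 Hz (alpha-band) tone
    bp = band_powers(x, fs=fs)
    print(max(bp, key=bp.get))             # alpha
    print(hjorth(x))
```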

The FFT is computed over a 256-sample window (2 seconds at 128 Hz) to obtain the average band power, and the window slides every 0.125 seconds (16 samples).
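One plausible reading of this windowing scheme is sketched below: 256-sample windows (2 s at 128 Hz) advanced by 16 samples (0.125 s), with band power computed from each window's FFT. The Hann taper, the band edges, and the function name `sliding_band_power` are assumptions for illustration.

```python
import numpy as np

FS = 128     # sampling rate after downsampling (Hz)
WIN = 256    # 2 s window at 128 Hz
STEP = 16    # 0.125 s hop at 128 Hz
# Assumed band edges (Hz); delta is omitted because the 4.0-45.0 Hz bandpass removes most of it.
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}


def sliding_band_power(x, fs=FS, win=WIN, step=STEP, bands=BANDS):
    """Return an array of shape (n_windows, n_bands) with mean FFT power per band."""
    x = np.asarray(x, dtype=float)
    if len(x) < win:
        raise ValueError("signal is shorter than one window")
    n_windows = 1 + (len(x) - win) // step
    freqs = np.fft.rfftfreq(win, d=1.0 / fs)
    masks = [(freqs >= lo) & (freqs < hi) for lo, hi in bands.values()]
    taper = np.hanning(win)  # Hann taper to reduce spectral leakage (an assumption)
    out = np.empty((n_windows, len(masks)))
    for i in range(n_windows):
        segment = x[i * step : i * step + win] * taper
        psd = np.abs(np.fft.rfft(segment)) ** 2
        out[i] = [psd[m].mean() for m in masks]
    return out


if __name__ == "__main__":
    t = np.arange(60 * FS) / FS                        # one 60 s trial
    features = sliding_band_power(np.sin(2 * np.pi * 10 * t))
    print(features.shape)                              # (465, 4)
```

For a 60-second trial (7680 samples), this yields 1 + (7680 - 256) / 16 = 465 windows per channel.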

C. Feature Selection:

In this study, we employed an effective feature selection technique called Minimum Redundancy Maximum Relevance (MRMR).

- Relevance: How strongly does a feature relate to the target? For categorical variables, relevance is based on mutual information; for continuous variables, it is the F-statistic between the variable and the target.

- Redundancy: How strongly does the feature correlate with the features selected in previous iterations? If a candidate feature correlates strongly with an already selected feature, it adds little new information to the feature set. For categorical variables, redundancy is based on mutual information; for continuous variables, it can be either the Pearson correlation coefficient or the distance between feature vectors.

MRMR operates iteratively, selecting one feature at a time. At each step, it picks the remaining unselected feature with the highest score, computed either as a difference (relevance minus redundancy) or as a quotient (relevance divided by redundancy).
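The greedy procedure above can be sketched in NumPy for the continuous case, using the ANOVA F-statistic of each feature against the class labels as relevance and the mean absolute Pearson correlation with already selected features as redundancy. This is an illustrative implementation, not the exact code used in this study; the function names are hypothetical, and the quotient scheme is the default here only because the F-statistic and |r| live on very different scales (a design choice of this sketch).

```python
import numpy as np


def anova_f(X, y):
    """One-way ANOVA F-statistic of each column of X against class labels y."""
    classes = np.unique(y)
    n, k = len(y), len(classes)
    grand = X.mean(axis=0)
    between = sum((y == c).sum() * (X[y == c].mean(axis=0) - grand) ** 2 for c in classes)
    within = sum(((X[y == c] - X[y == c].mean(axis=0)) ** 2).sum(axis=0) for c in classes)
    return (between / (k - 1)) / (within / (n - k) + 1e-12)


def mrmr(X, y, n_select, scheme="quotient"):
    """Greedy MRMR selection; returns column indices in selection order.

    Relevance: ANOVA F. Redundancy: mean |Pearson r| with already selected columns.
    scheme="difference" uses relevance - redundancy; otherwise relevance / redundancy.
    """
    relevance = anova_f(X, y)
    corr = np.abs(np.corrcoef(X, rowvar=False))     # feature-by-feature |r|
    selected = [int(np.argmax(relevance))]           # first pick: most relevant feature
    remaining = [j for j in range(X.shape[1]) if j != selected[0]]
    while len(selected) < n_select and remaining:
        redundancy = corr[np.ix_(remaining, selected)].mean(axis=1)
        rel = relevance[remaining]
        if scheme == "difference":
            score = rel - redundancy
        else:
            score = rel / (redundancy + 1e-12)
        best = remaining[int(np.argmax(score))]
        selected.append(best)
        remaining.remove(best)
    return selected


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    y = rng.integers(0, 2, size=40)         # hypothetical binary labels for 40 trials
    X = rng.standard_normal((40, 32))       # hypothetical: one feature per EEG channel
    X[:, 5] += 1.5 * y                      # make channel 5 informative
    print(mrmr(X, y, n_select=10))          # indices of the 10 selected channels
```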

We treated the readings from each EEG electrode (channel) as a feature and used MRMR to select the top 10 and top 20 of the 32 channels for our experiments.

V. PROPOSED MODELS

Two classification problems are posed: low versus high arousal and low versus high valence. Because each problem has only two levels, the continuous rating scale is thresholded at its midpoint: if the rating is greater than or equal to five, the trial belongs to the high class; otherwise, it belongs to the low class.
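The labeling rule can be written in a few lines. The sketch assumes DEAP-style labels in which the first two columns hold the valence and arousal ratings; `binarize_ratings` is a hypothetical helper name.

```python
import numpy as np

THRESHOLD = 5.0  # ratings >= 5 map to the high class (1); lower ratings map to the low class (0)


def binarize_ratings(labels, threshold=THRESHOLD):
    """Convert valence/arousal ratings into binary high/low targets.

    `labels` is assumed to have shape (n_trials, 4) with columns
    valence, arousal, dominance, liking.
    """
    labels = np.asarray(labels, dtype=float)
    valence = (labels[:, 0] >= threshold).astype(int)
    arousal = (labels[:, 1] >= threshold).astype(int)
    return valence, arousal


if __name__ == "__main__":
    ratings = [[7.1, 3.2, 5.0, 6.0],
               [5.0, 5.0, 4.0, 4.0],
               [2.5, 8.0, 3.0, 1.0]]
    v, a = binarize_ratings(ratings)
    print(v.tolist(), a.tolist())  # [1, 1, 0] [0, 1, 1]
```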

A. Long Short-Term Memory

LSTM stands for Long Short-Term Memory, which is a type of recurrent neural network (RNN) architecture designed to handle sequence prediction problems and learn long-term dependencies in data.

Unlike traditional RNNs, LSTM networks have a more complex structure, with a series of gates that control the flow of information. These gates include an input gate, forget gate, and output gate, each responsible for regulating the information that enters and exits the memory cell. This mechanism helps LSTM networks selectively remember or forget information over long sequences, making them particularly effective for tasks such as speech recognition, language modeling, and time series prediction.
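The gate mechanism can be illustrated with a single LSTM time step written in NumPy. This is a minimal forward-pass sketch with an assumed gate ordering and randomly initialized weights, not the trained model used in this study; in practice the network would be built and trained with a deep learning framework.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x: (input_dim,); h_prev, c_prev: (hidden,)
    W: (4*hidden, input_dim); U: (4*hidden, hidden); b: (4*hidden,)
    Pre-activations are stacked in the (assumed) order input, forget, output, candidate.
    """
    hidden = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:hidden])                 # input gate: how much new content to write
    f = sigmoid(z[hidden:2 * hidden])        # forget gate: how much old memory to keep
    o = sigmoid(z[2 * hidden:3 * hidden])    # output gate: how much memory to expose
    g = np.tanh(z[3 * hidden:])              # candidate memory content
    c = f * c_prev + i * g                   # updated memory cell
    h = o * np.tanh(c)                       # new hidden state
    return h, c


def lstm_forward(xs, W, U, b):
    """Run the cell over a sequence xs of shape (T, input_dim); return the final h."""
    hidden = U.shape[1]
    h, c = np.zeros(hidden), np.zeros(hidden)
    for x in xs:
        h, c = lstm_step(x, h, c, W, U, b)
    return h


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    T, input_dim, hidden = 50, 20, 8   # illustrative: 50 time steps x 20 selected channels
    W = 0.1 * rng.standard_normal((4 * hidden, input_dim))
    U = 0.1 * rng.standard_normal((4 * hidden, hidden))
    b = np.zeros(4 * hidden)
    h = lstm_forward(rng.standard_normal((T, input_dim)), W, U, b)
    print(h.shape)  # (8,)
```

For the low/high classification described above, the final hidden state would typically feed a dense layer with a sigmoid output.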

VII. LIMITATIONS

- Feature extraction and selection from EEG data are complex, and the choices made strongly affect the performance of emotion recognition models.

- Most EEG-based emotion recognition studies focus on a limited number of basic emotions, potentially overlooking the full complexity of human emotions.

- EEG signals are prone to noise from various sources, necessitating thorough pre-processing to mitigate interference.

- Emotion recognition models trained on specific datasets may be difficult to generalize for diverse populations or real-world scenarios.

- The complex machine learning models used in EEG-based emotion recognition may lack interpretability, hindering understanding of underlying mechanisms and limiting practical applications.

VIII. FUTURE SCOPE

- Exploring multimodal integration for enhanced emotion recognition by incorporating data from facial expressions, speech analysis, and physiological signals.

- Advancing real-time applications by developing algorithms and hardware solutions for the instantaneous processing of EEG signals in human-computer interaction, virtual reality, and affective computing systems.

- Personalizing emotion recognition models through adaptive learning algorithms and personalized feedback mechanisms to tailor predictions to individual users' characteristics and emotional expressions.

- Conducting cross-cultural studies to validate the effectiveness and generalizability of EEG-based emotion recognition across diverse populations and cultural contexts.

- Addressing ethical considerations in EEG-based emotion recognition; emphasizing privacy, consent, and data security; and developing responsible guidelines for data collection and usage.

IX. ACKNOWLEDGMENT

We would like to express our appreciation for all the guidance and support we received in completing this paper. We are grateful to Dr. N. Sri Hari, our project coordinator, and Dr. K. Lohitha Lakshmi, our project guide, for their invaluable assistance and direction throughout the project.

Conclusion

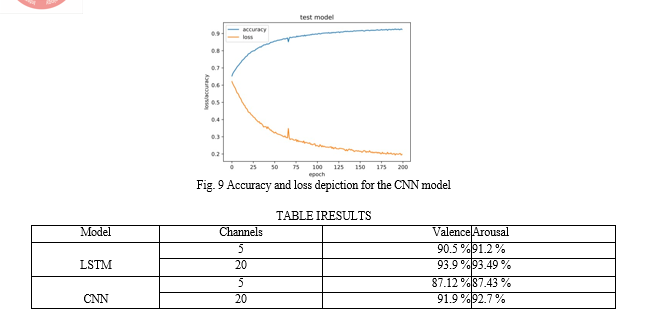

In this research, we propose an EEG-based technique for recognizing emotions. Unlike other approaches, our strategy improves the prediction accuracy of LSTM and CNN emotion classifiers based on a two-dimensional emotion model (valence and arousal) by using the MRMR feature selection method as a signal preprocessing step. Compared with state-of-the-art emotion recognition techniques, this formulation of the problem is more realistic, and the training task is correspondingly more challenging. Based on the findings, we conclude that increasing the number of channels improves the performance of both the LSTM and CNN models in predicting valence and arousal. Furthermore, the LSTM model outperforms the CNN model, especially when more channels are used.

References

[1] Sander Koelstra, Christian Muhl, Mohammad Soleymani, Jong-Seok Lee, Ashkan Yazdani, Touradj Ebrahimi, Thierry Pun, Anton Nijholt, and Ioannis Patras, DEAP: A database for emotion analysis using physiological signals, IEEE Transactions on Affective Computing, 3(1):18–31, 2012.

[2] H. Yang, J. Han, and K. Min, A multi-column CNN model for emotion recognition from EEG signals, Sensors, vol. 19, no. 21, p. 4736, 2019.

[3] Y. Kim and A. Choi, Emotion classification based on EEG signals with LSTM deep learning method, Journal of the Korea Industrial Information Systems Research, vol. 26, no. 1, pp. 1–10, 2021.

[4] John Atkinson and Daniel Campos, Improving BCI-based emotion recognition by combining EEG feature selection and kernel classifiers, Expert Systems with Applications, 47:35–41, 2016.

[5] Jingxin Liu, Hongying Meng, Asoke Nandi, and Maozhen Li, Emotion detection from EEG recordings, 12th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), pp. 2173–2178, 2016.

[6] Elham S. Salama, Reda A. El-Khoribi, Mahmoud E. Shoman, and Mohamed A. Wahby Shalaby, EEG-based emotion recognition using 3D convolutional neural networks, International Journal of Advanced Computer Science and Applications (IJACSA), vol. 9, no. 8, 2018.

[7] H. Y. Zhu, Research of emotion recognition based on multidomain EEG features and integration of feature selection, Jilin University, Changchun, China, 2021.

[8] Guillaume Chanel, Cyril Rebetez, Mireille Betrancourt, and Thierry Pun, Emotion assessment from physiological signals for adaptation of game difficulty, IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans, 41(6):1052–1063, 2011.

[9] Narendra Jadhav, Ramchandra Manthalkar, and Yashwant Joshi, Electroencephalography-based emotion recognition using gray-level co-occurrence matrix features, in Proceedings of the International Conference on Computer Vision and Image Processing, pp. 335–343, Springer, 2017.

[10] Abeer Al-Nafjan, Areej Al-Wabil, Manar Hosny, and Yousef Al-Ohali, Classification of human emotions from electroencephalogram (EEG) signal using deep neural network, International Journal of Advanced Computer Science and Applications (IJACSA), vol. 8, no. 9, 2017.

[11] Eun Jeong Choi and Dong Keun Kim, Arousal and valence classification model based on long short-term memory and DEAP data for mental healthcare management, Healthc Inform Res., October 2018.

[12] Xiaofen Xing, Zhenqi Li, Tianyuan Xu, Lin Shu, Bin Hu, and Xiangmin Xu, SAE+LSTM: A new framework for emotion recognition from multi-channel EEG, Frontiers in Neurorobotics, vol. 13, 12 June 2019.

[13] Salma Alhagry, Aly Aly Fahmy, and Reda A. El-Khoribi, Emotion recognition based on EEG using LSTM recurrent neural network, International Journal of Advanced Computer Science and Applications (IJACSA), vol. 8, no. 10, 2017.

[14] Hao Chao, Liang Dong, Yongli Liu, and Baoyun Lu, Improved deep feature learning by synchronization measurements for multi-channel EEG emotion recognition, Complexity (Hindawi), vol. 2020, Article ID 6816502, 15 pages, 2020.

[15] Samarth Tripathi, Shrinivas Acharya, Ranti Dev Sharma, Sudhanshi Mittal, and Samit Bhattacharya, Using deep and convolutional neural networks for accurate emotion classification on DEAP dataset, Proceedings of the Twenty-Ninth AAAI Conference on Innovative Applications of Artificial Intelligence (IAAI-17).

[16] Kanchi Lohitha Lakshmi, P. Muthulakshmi, A. Alice Nithya, R. Beaulah Jeyavathana, R. Usharani, Nishi S. Das, and G. Naga Rama Devi, Recognition of emotions in speech using deep CNN and RESNET, Soft Computing, 2023, p. 1.

[17] Peyakunta Bhargavi, Kanchi Lohitha Lakshmi, and Singaraju Jyothi, Gene sequence analysis of breast cancer using genetic algorithm, in P. Venkata Krishna and M. Obaidat (eds.), Emerging Research in Data Engineering Systems and Computer Communications, Advances in Intelligent Systems and Computing, vol. 1054, Springer, Singapore, 2020.

Copyright

Copyright © 2024 Lohitha Lakshmi Kanchi, Chandana Sri Narra, Guna Mantri, Madhupriya Palepogu, Chandra Sekhar Reddy Mettu. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET59255

Publish Date : 2024-03-21

ISSN : 2321-9653

Publisher Name : IJRASET

