Ijraset Journal For Research in Applied Science and Engineering Technology

Empowering the Blind: An AI Driven Indoor Assistance for Visually Impaired

Authors: Mrs. Shraddha. P. Mankar, Riya Gawande, Dipanshu Sankhala, Siddhi Vispute, Nikhita Watpal

DOI Link: https://doi.org/10.22214/ijraset.2024.61273

Abstract

This review paper presents the design and development of a comprehensive mobile application aimed at enhancing the daily lives of visually impaired individuals. The proposed mobile app offers real-time assistance for various visual recognition tasks, including object detection, distance estimation, currency recognition, barcode detection, color recognition, and emotion analysis. The primary objective of this application is to provide visually impaired users with a powerful tool to navigate their surroundings, identify objects and currency, scan barcodes, discern colors, and even gauge the emotions of those they interact with. The app integrates pre-trained deep learning models for object detection and facial emotion analysis, computer vision techniques for distance estimation, and optical character recognition for currency recognition. User feedback and engagement are central to the ongoing improvement of the application, ensuring that it remains a valuable resource for the community it serves. Ethical considerations and privacy concerns are also addressed, with a focus on data security and user privacy. By presenting the development and functionalities of this app, this review paper not only contributes to the field of assistive technology but also underscores the importance of harnessing cutting-edge technology to improve the quality of life for visually impaired individuals.

I. INTRODUCTION

In a world where millions contend with visual impairments, comprehending and navigating indoor environments presents a unique set of challenges. The visually impaired often encounter difficulties moving autonomously within enclosed spaces, where obstacles and objects can vary widely in position and type. Traditional aids, such as white canes, though helpful in some instances, do not provide real-time information about indoor objects and surroundings.

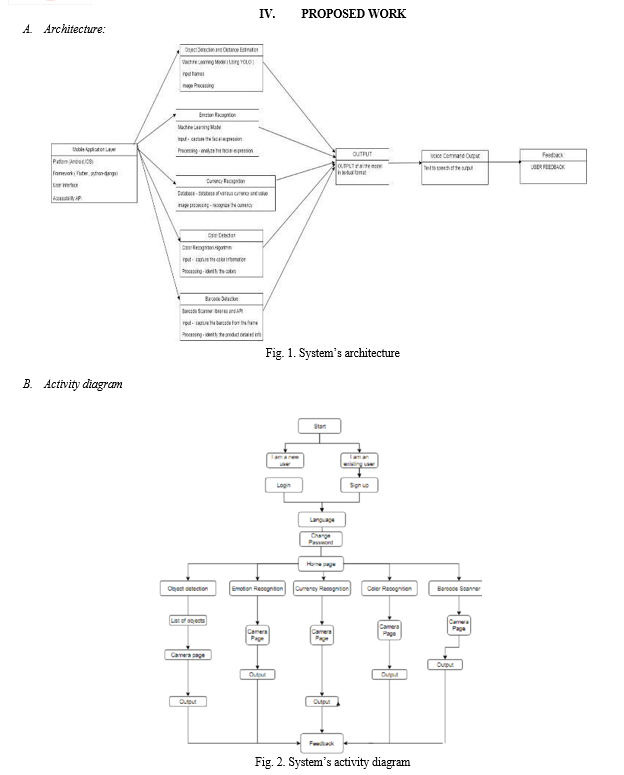

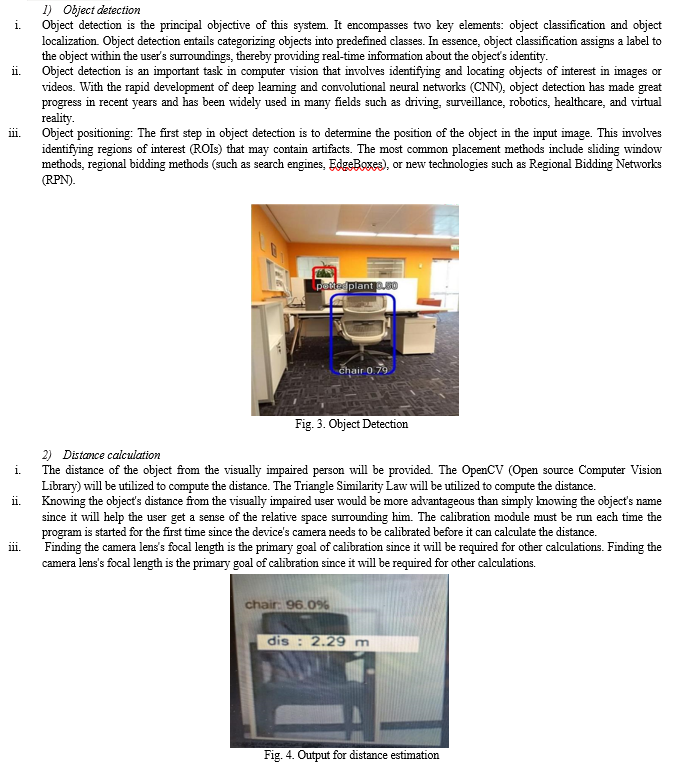

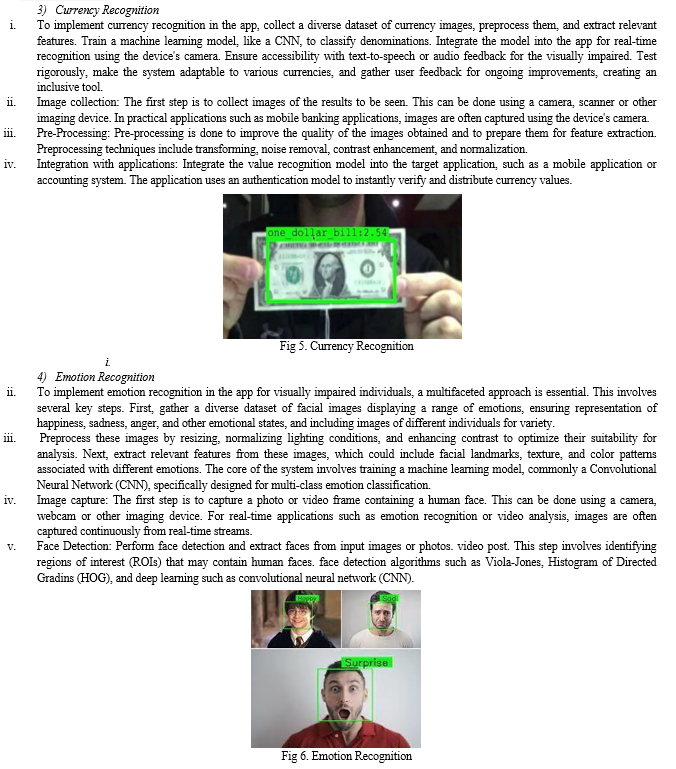

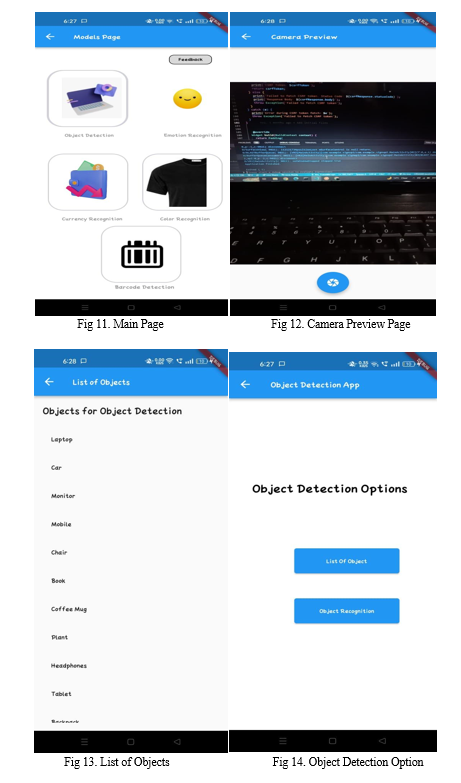

Recognizing the specific challenges faced by visually impaired individuals in indoor settings, this project introduces an Android application tailored to enhance their independence and confidence. The application is designed to assist with object detection, distance estimation, currency recognition, barcode scanning, color identification, and emotion analysis. Leveraging the device's built-in camera, the application combines several techniques: object detection using You Only Look Once (YOLO) v3 and Single Shot Detector (SSD) models, distance estimation with the Mono-depth algorithm, OCR-based currency recognition, barcode detection, color identification, and emotion analysis. It detects, describes, and recognizes indoor objects, provides real-time information about object distances, and delivers object-related details through audio output, which can be played through headphones or the device's speaker.
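Detectors such as YOLO and SSD typically emit several overlapping boxes for the same object, so a post-processing step is usually needed before anything is announced to the user. The following is a minimal sketch of one common choice, greedy non-maximum suppression based on intersection over union (IoU). It is an illustration only: the paper does not describe its post-processing, and the tuple layout, the function names `iou` and `non_max_suppression`, and the 0.5 threshold are assumptions made here.

```python
from typing import List, Tuple

# Assumed layout for one detection (not taken from the paper):
# (x_min, y_min, x_max, y_max, confidence, label)
Detection = Tuple[float, float, float, float, float, str]


def iou(a: Detection, b: Detection) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    detections: List[Detection], iou_threshold: float = 0.5
) -> List[Detection]:
    """Keep the most confident box among same-label boxes that overlap heavily."""
    kept: List[Detection] = []
    # Visit boxes from most to least confident.
    for det in sorted(detections, key=lambda d: d[4], reverse=True):
        # Keep a box only if it does not overlap a kept box of the same label.
        if all(det[5] != k[5] or iou(det, k) < iou_threshold for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    raw = [
        (10, 10, 110, 110, 0.9, "chair"),
        (12, 12, 112, 112, 0.8, "chair"),  # near-duplicate of the first box
        (200, 50, 260, 120, 0.7, "table"),
    ]
    for det in non_max_suppression(raw):
        print(det)
```

With the sample input, the two nearly identical "chair" boxes collapse into the more confident one, so each object would be announced once rather than twice.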

An essential characteristic of this system is its suitability for indoor environments, eliminating the need for external cameras or sensors. By emphasizing indoor functionality, this project addresses the unique challenges faced by the visually impaired within enclosed spaces, enhancing their ability to navigate and interact with their surroundings. The central aim of this project is to showcase how advanced computer vision techniques can significantly improve the quality of life for visually impaired individuals during indoor activities. We will present a comprehensive overview of our application, detailing its various modules, functionalities, and its potential to empower and support the visually impaired in indoor settings.

The project implements an Android-based application designed to address the problems visually impaired people face while navigating indoor environments. Traditional tools such as the white cane provide only limited real-time information about indoor objects and obstacles. The app uses the Android device's built-in camera and computer vision to fill this gap, providing real-time information about indoor objects, their distances, and other relevant details through voice output. Importantly, it is designed for indoor environments and does not require external cameras or sensors. By focusing on indoor use, the project aims to increase the independence and confidence of blind people in enclosed spaces.
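As an illustration of how a detection could be turned into voice output, the sketch below builds a short sentence from an object's label, its estimated distance, and its horizontal position in the camera frame. This is a hypothetical example, not the app's actual code: the function name `describe_detection`, the three-way left/ahead/right split, and the message wording are all assumptions. On Android the resulting string would then be passed to a text-to-speech engine.

```python
def describe_detection(
    label: str, distance_m: float, box_center_x: float, frame_width: float
) -> str:
    """Build a short spoken message for one detected object.

    The frame is split into three equal vertical bands (an assumed
    convention) to give the user a coarse direction.
    """
    if frame_width <= 0:
        raise ValueError("frame_width must be positive")

    ratio = box_center_x / frame_width
    if ratio < 1 / 3:
        direction = "to your left"
    elif ratio > 2 / 3:
        direction = "to your right"
    else:
        direction = "ahead"

    # One decimal place keeps the spoken distance short.
    return f"{label}, {distance_m:.1f} meters, {direction}"


if __name__ == "__main__":
    print(describe_detection("chair", 1.54, 100, 640))
    print(describe_detection("door", 3.0, 320, 640))
```

Keeping the message short and putting the object name first lets the user act on the most important information before the sentence finishes.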

The main goal of the project is to show that computer vision technology can improve the quality of life of visually impaired people as they navigate indoors. Through an overview of the app's structure and features, the paper highlights the app's ability to help visually impaired people manage indoor environments.

II. MOTIVATION

The motivation behind this project, aimed at assisting visually impaired individuals with their indoor activities, is multifaceted and deeply rooted in a commitment to making a positive impact on the lives of those with visual impairments. Several key factors drive the project's motivation. First and foremost, the project is motivated by the goal of empowering independence among visually impaired individuals. Vision loss often poses significant challenges to performing everyday tasks, which can lead to a greater reliance on assistance from others. This project seeks to change that by providing technological solutions that enhance self-sufficiency.

By offering tools and features tailored to the unique needs of the visually impaired, it aims to foster greater autonomy in daily life. A Raspberry Pi-based system has to be powered constantly by a power bank, which requires the user to carry a power bank at all times. Moreover, such a system tells the user only the names of the detected objects.

The motivation behind the project stems from a strong commitment to promoting the participation, independence, and empowerment of visually impaired people. Despite advances in technology, navigating indoor environments is still a challenge for visually impaired people. Traditional assistive tools, while useful in some cases, often do not provide real-time information about indoor objects and obstacles.

By creating an Android application suited to indoor use, we aim to close this gap and offer practical solutions that improve the lives of visually impaired people. We are motivated by our belief that all people, regardless of their abilities, should be treated equally and be able to navigate their environment safely and freely.

In addition, our work is directed at solving real problems in people's lives using today's technologies, especially computer vision and deep learning. Using the capabilities of smartphones and image processing techniques, we strive to create efficient and effective tools that increase the independence and quality of life of visually impaired people. Finally, our passion is rooted in our belief in the power of technology to break down barriers and create greater inclusion.

Through this project, we hope to help visually impaired people move around in a more comfortable, safe and dignified environment, allowing them to participate and contribute to their communities.

III. RELATED WORK

Work related to the Android application described above, for indoor navigation and object detection for the visually impaired, spans research and technology aimed at similar problems. Some of the main areas of work are:

- Assistive technologies for the visually impaired: Various technologies have been developed to assist the visually impaired with mobility and navigation. These include devices such as wearable cameras, smart canes, and navigation apps. Research in this area focuses on improving the accuracy, usability, and effectiveness of the technology to meet user needs.

- Computer Vision for Object Detection: Extensive research has been done on computer vision for object detection, including deep learning-based methods such as YOLO (You Only Look Once) and SSD (Single Shot Detector). These methods, which are used in many application areas, can detect objects in complex environments in real time.

- Distance and Depth Estimation: Research on distance estimation and depth perception uses computer vision techniques to provide real-time information about the distances of objects in indoor environments. Monocular depth algorithms and stereo vision are often used to estimate depth from images captured by cameras.

- Accessibility and Inclusive Design: Accessibility and inclusive design studies focus on the use of technology to provide easy access to users with disabilities, including the visually impaired. This includes research on designing user interfaces, developing assistive technologies, and ensuring digital content is accessible to all users.
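To make the distance-estimation item above more concrete, the sketch below uses the pinhole camera model, in which an object of known real height $H$ that appears $h$ pixels tall through a lens of focal length $f$ (in pixels) lies at distance $d = fH / h$. This is a simpler stand-in chosen for illustration, not the learned Mono-depth approach named in the introduction. The function name `estimate_distance_m` and the sample values are assumptions.

```python
def estimate_distance_m(
    real_height_m: float, focal_length_px: float, pixel_height: float
) -> float:
    """Estimate distance to an object with the pinhole model d = f * H / h.

    real_height_m   -- assumed known physical height of the object (meters)
    focal_length_px -- camera focal length expressed in pixels
    pixel_height    -- height of the object's bounding box in the image (pixels)
    """
    if real_height_m <= 0 or focal_length_px <= 0 or pixel_height <= 0:
        raise ValueError("all inputs must be positive")
    return focal_length_px * real_height_m / pixel_height


if __name__ == "__main__":
    # Hypothetical values: a 2.0 m door imaged 400 px tall with f = 800 px.
    print(estimate_distance_m(2.0, 800.0, 400.0))
```

The approach depends on knowing the typical real-world size of each object class and on a calibrated focal length, which is one reason learned monocular depth models are attractive for unconstrained indoor scenes.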

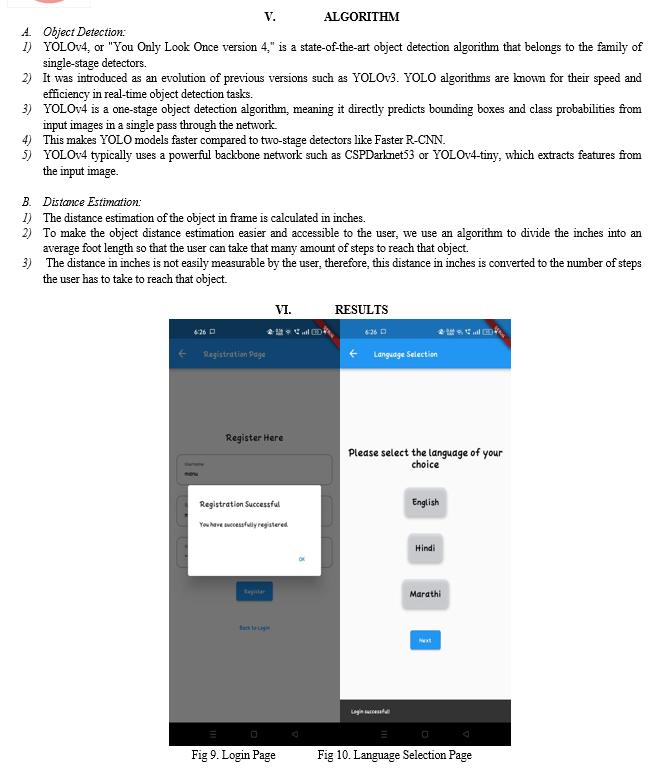

VII. FUTURE SCOPE

The future scope for an app designed for visually impaired people, featuring modules such as object detection, emotion recognition, color recognition, currency detection, and barcode detection, holds significant promise. With ongoing advancements in machine learning and AI, these modules can become more accurate and efficient, greatly enhancing their utility for the visually impaired. Furthermore, integration with wearable devices like smart glasses can provide a hands-free experience, and expanding the app's capabilities to include navigation and wayfinding would be invaluable. Language and OCR integration, a supportive user community, and educational modules can further enhance the app's functionality. Maintaining a user-friendly interface, ensuring data privacy, and adhering to global accessibility standards are key considerations, as is collaborating with relevant organizations for wider adoption and support. It is also important to continuously improve the accuracy and efficiency of the object detection algorithms. This includes improving the underlying machine learning models, optimizing real-time processing, and reducing false detections and missed objects.

Integrating advanced sensors such as LiDAR (light detection and ranging) or radar with cameras can provide richer depth information, improving the ability to accurately identify and separate objects, especially in challenging environments or weather. Beyond simple object detection, future iterations of the system may include semantic understanding and context recognition. This means not only identifying objects, but also understanding their relationships, functions, and effects in different situations.

Integrating object detection with navigation tools would provide greater assistance to the visually impaired. This may include using GPS and map data to generate audio or other feedback that guides users around obstacles or to specific locations. Features that provide immediate feedback or descriptions of the environment can also improve the user experience, covering not only the identification of objects but also elements such as lighting, textures, and spatial layout. Providing an interface that can be customized to the user's preferences and needs is crucial to ensuring effective interaction.

Future work will include creating a better information management system, support for multiple languages, and better compatibility with assistive devices.

Integrating object detection with wearable devices such as smart glasses or haptic vests can provide users with a more interactive and intuitive experience. This would enable hands-free operation and promote awareness of the surroundings. Optimizing object detection algorithms to run at the edge can increase responsiveness and reduce dependence on cloud services. This includes building lightweight model architectures and efficient pipelines suitable for low-power devices with low-cost components.

It is crucial to collect continuous feedback from visually impaired users and incorporate their input into the design process. This ensures that the system remains user-friendly and addresses the needs and challenges faced by its users. Partnering with organizations and communities dedicated to accessibility and disability rights can help ensure that assistive products are designed and used in ways that respect and promote the freedom and independence of the visually impaired.

Conclusion

In conclusion, the app designed for visually impaired individuals, featuring modules such as object detection, emotion recognition, color recognition, currency detection, and barcode detection, represents a beacon of hope for a more inclusive and accessible future. With advancements in technology and AI, the app's potential for improving the quality of life for the visually impaired is substantial. Its evolution may lead to greater independence, better social interaction, and enhanced everyday experiences. As the app continues to grow and adapt, it has the power to break down barriers and provide newfound opportunities, ensuring that the visually impaired community can navigate the world with confidence and autonomy. This app embodies the principles of innovation, compassion, and inclusivity, offering a brighter future for those who rely on its support.

Copyright

Copyright © 2024 Mrs. Shraddha. P. Mankar, Riya Gawande, Dipanshu Sankhala, Siddhi Vispute, Nikhita Watpal. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61273

Publish Date : 2024-04-29

ISSN : 2321-9653

Publisher Name : IJRASET