Ijraset Journal For Research in Applied Science and Engineering Technology

Enhancing Vehicle Image Recognition via Cloud Computing and Deep Learning Techniques: A Comprehensive Study

Authors: Umakant Yadav, Shyamol Banerjee

DOI Link: https://doi.org/10.22214/ijraset.2024.63453

Abstract

This research explores the impact of integrating cutting-edge technology into intelligent transportation systems, focusing specifically on the use of cloud computing for vehicle image recognition. The study deploys deep learning algorithms on cloud platforms to support real-time, scalable, and precise vehicle detection. System effectiveness is evaluated through analyses of platform performance and image recognition metrics. The purpose of this research is to compare the ability of three deep learning models, Inception, Xception, and MobileNet, to identify vehicles in images. We assess these models on a large dataset using training loss, training accuracy, validation loss, and validation accuracy. The models are trained and fine-tuned on a cloud computing platform, leveraging transfer learning from pre-trained ImageNet weights. Our findings reveal that while Inception achieves high training accuracy, Xception and MobileNet demonstrate superior generalization with higher validation accuracies and lower validation losses. Insights gained from this comparative analysis inform recommendations for optimizing model selection and deployment in real-world applications, emphasizing the importance of robust performance metrics in advancing vehicle image recognition technologies. Future research directions include further refining model architectures and exploring ensemble approaches to enhance accuracy and reliability in diverse operational environments.

I. INTRODUCTION

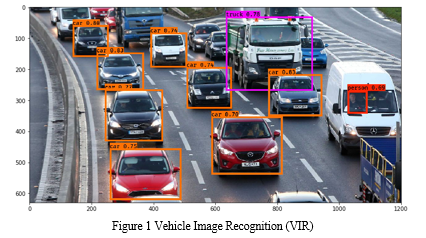

Vehicle Image Recognition (VIR) is a technology in the field of computer vision that employs advanced algorithms and machine learning to analyze and interpret images or videos containing vehicles. This discipline, with applications spanning several industries, has grown substantially in recent years. VIR systems use artificial intelligence to accurately detect, categorize, and understand different elements of vehicles, thereby improving safety, security, and efficiency in transportation and related areas. The VIR pipeline begins by acquiring images from sources such as cameras, sensors, and other imaging equipment. These images are the raw input for the subsequent phases of analysis. Preprocessing is the next step, during which the acquired images undergo a sequence of operations that improve their quality and enable efficient analysis. Resizing, normalization, and noise reduction are carried out so that the images are suitable for the computations that follow [1], [2]. Feature extraction is a crucial step in VIR. In this stage, relevant attributes of the preprocessed images are identified and isolated. These features include spatial configurations, texture details, shape characteristics, and color distribution, and extracting them is essential for training machine learning models to distinguish between vehicle types. Classification, another core component of VIR, relies on machine learning models that have been trained on extensive datasets. These models use the extracted attributes to assign vehicles to specific groups, such as cars, trucks, motorcycles, and bicycles. The ability to classify is essential for a wide range of applications, including traffic monitoring and security surveillance.
In addition to basic classification, VIR systems frequently integrate object detection, which precisely locates and identifies specific elements within an image or video. In the context of VIR, object detection provides crucial information about the position and size of each vehicle in a scene. License plate recognition (LPR) is another feature commonly found in VIR systems. LPR extracts and interprets license plate information from vehicle images [3]–[6]. Applications such as automated toll collection, parking management, and law enforcement benefit from these capabilities.

The ability to rapidly and accurately read license plates greatly enhances the efficiency of many systems that rely on vehicle identification. Vehicle image recognition has diverse applications across industries. In transportation and traffic management, VIR systems are vital for overseeing traffic, regulating congestion, and optimizing signal timings. The technology enhances security and surveillance by facilitating the detection of vehicles at airports, in public spaces, and around critical infrastructure. In smart cities, VIR supports the development of advanced transportation networks, improving overall urban mobility. The retail and marketing industries use VIR to analyze customer behavior and conduct market research by examining vehicle types and patterns. However, deploying VIR raises specific challenges. Environmental factors such as fluctuating lighting conditions, weather changes, and occlusions are a notable difficulty for VIR systems. Substantial computing power is required to meet real-time processing requirements, especially in domains like autonomous vehicles. Furthermore, collecting and analyzing images that may include confidential details about vehicle occupants gives rise to privacy concerns. In the future, VIR is expected to progress further through developments in deep learning, edge computing, and the incorporation of other sensor modalities. As the technology advances, VIR has the potential to contribute significantly to transportation systems by making them more intelligent, secure, and effective.
The ongoing incorporation of this technology into other industries highlights its capacity to influence the way we see, understand, and interact with vehicles in the digital era [7]–[10].

Vehicle recognition and classification, which uses algorithms to categorize and identify vehicles from visual input, is an essential component of computer vision and artificial intelligence. This technology is now indispensable in areas such as retail, smart cities, security, and transportation. Through a series of image processing, feature extraction, and machine learning techniques, computers can recognize and interpret many categories of vehicles. Vehicle classification starts with image acquisition, in which cameras, sensors, or other imaging equipment gather visual data about vehicles. These images are the initial input for further analysis. The next step is preprocessing, which improves and standardizes the acquired images. Resizing, normalization, and noise reduction are carried out so that the images are suitable for analysis in the classification pipeline. Feature extraction follows: pertinent information such as color profiles, shape attributes, texture details, and spatial layouts is extracted from the preprocessed images. These characteristics act as distinguishing indicators that allow machine learning models to separate and categorize vehicles. Classification depends heavily on machine learning models, which are typically trained on large datasets covering a wide range of vehicle images. These models use the extracted features to assign vehicles to distinct categories, typically cars, trucks, motorbikes, bicycles, and other modes of transportation. The accuracy of the classification process depends on the strength of the machine learning models and the quality of the extracted features [11]–[13].
The main benefit of using cloud computing in vehicle recognition is its inherent capacity to scale up or down as needed. Cloud platforms offer immediate access to a large pool of computing resources, enabling recognition systems to adjust their processing capacity in real time according to the volume and complexity of incoming data.

The capacity to scale is especially important in urban settings, where traffic patterns change constantly, because it allows identification systems to adjust efficiently to different levels of demand. Cloud-based storage is another significant determinant of the effectiveness of vehicle identification systems. The large storage capacity of cloud providers enables efficient handling and storage of large databases of vehicle images, which are needed to improve recognition algorithms, train machine learning models, and categorize vehicles with higher precision. In addition, cloud storage facilitates collaboration and data sharing among the different components of a recognition system, improving overall performance and generating valuable insights. Real-time processing is a necessity for many vehicle recognition applications, including traffic management and law enforcement. Cloud computing meets this requirement by offering computational capacity that can process real-time video streams efficiently. Real-time processing enables recognition systems to quickly classify vehicles, detect objects, and recognize license plates, which leads to improved situational awareness and shorter response times [14], [15].

II. LITERATURE REVIEW

Shen et al. (2021) describe a sensorimotor interaction-based VR system for smart tourist attractions that combines immersion, interactivity, gaming, education, and distribution. The system can improve on the one-way, passive reception of conventional smart tourist attractions, remove time and space constraints on their development and use, and help them overcome a cold, impersonal visitor experience. It also provides new methods of instruction and design to those who develop such attractions, supporting the progress of education at smart tourist destinations. It draws attention to the importance of snow and ice culture and alters the traditional business paradigm of the ice and snow sector [16].

Wang et al. (2021) add a PID controller and simulate the leveling of the control system using MATLAB and AMESim to confirm the accuracy of the leveling method. The outcomes demonstrate that the method's leveling velocity and accuracy fully satisfy the leveling criteria of the transport vehicle. The leveling control system of a 100-ton transport vehicle is built on this basis. The tilt angle of the loading platform is continuously monitored by a dual-axis inclination sensor. To keep the loading platform level, the controller drives the suspension hydraulic cylinders according to the four height differences. Finally, leveling and lifting experiments are carried out on the transport vehicle [17].

Aguilar-Ibanez et al. (2020) propose a landing strategy that combines a repulsive force with an output-feedback robust controller. The robust controller governs the nominal model and achieves the required tracking trajectory while reducing the impact of unknown uncertainties. As a precaution, a repulsive force that is only effective near the platform ensures that the aircraft is never below it. The relative aircraft movement or platform acceleration can be estimated with a super-twisting-based observer, and these signals are guaranteed to converge in finite time. As a result, the state-feedback stabilizer can be designed separately from the observer, in accordance with the separation principle. Numerical simulations validated the efficacy of the control strategy [18].

Zhou and Zhu (2019) research and develop a new vehicle network that addresses several problems. First, the study introduces the architecture, technology, and problems of traditional vehicle networking. Second, it analyzes cloud- and fog-based software-defined vehicle networking in detail. Third, it studies the problem of optimizing the performance of the vehicle network. It then establishes a fog server to coordinate the work of the central fog servers so that they can complete and hand over loads to one another, and it offloads and loads tasks based on the results. Controller and virtualization technologies are used to optimize system and equipment energy consumption and service quality. This provides the foundation for a two-way constrained particle swarm optimization technique that can help alleviate these challenges. Finally, experimentation and simulation analysis demonstrate the performance benefits of the suggested algorithm, including improvements in communication latency and energy usage. Consequently, the development of future vehicle networking technology relies heavily on study and discussion of this topic [19].

Ziqian et al. (2018) observe that the Internet of Vehicles (IoV) is becoming increasingly significant for smart city and smart transportation efforts. IoV technology comprises three main ideas: fog computing, dew computing, and cloud computing. Fog computing and dew computing refer to edge network units located on the roadside or in the vehicle itself, while cloud computing concerns the data center that provides services. Addressing security problems is an important factor in developing an IoV architecture. To construct a secure architecture, specific security measures must be included for the cloud and fog computing that is linked to the vehicle network. The article summarizes previous research on security procedures for cloud, fog, dew, and vehicle networks [20].

TABLE 1. LITERATURE SUMMARY

| Author/year | Title | Method/model | Parameters | Reference |
|---|---|---|---|---|
| Kanagamalliga/2024 | Modern Traffic Control by Means of State-of-the-Art Vehicle Detection, Identification, and Tracking Technologies | Vehicle detection, recognition, and tracking methods analyzed with vision-based methodologies. | Detection methods, CNN integration, recognition techniques, tracking algorithms, evaluation metrics | [21] |
| Saputra/2024 | Enhancing License Plate Images in Low Light Conditions using URetinex-Net & TRBA | Enhanced license plate recognition in low-light using URetinex-Net and TRBA. | Accuracy = 80.11% | [22] |
| Bolaños/2024 | A clustering process that analyzes smart village vehicle activity | Integration of multi-source data for analyzing mobility patterns in smart cities. | Normalization algorithms, data integration, vehicle mobility behavior, provenance analysis | [23] |
| Kim/2024 | The impact of information and modality on the design of user interfaces for partially automated vehicles: fostering trust and acceptance | UI designs with automation info enhance trust in partially automated vehicles. | Automation info levels, modality, event criticality, trust metrics, simulator conditions | [24] |
| Li/2024 | A qualitative study of users' views and needs of 5G-enabled Level 4 autonomous vehicles with remotely driving as the failsafe | End-users' perceptions and requirements for Level 4 Automated Vehicles. | User perception, remote driver qualifications, operational transparency, liability concerns, feedback | [25] |

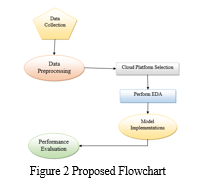

III. RESEARCH METHODOLOGY

This study explores the efficacy of cloud computing platforms in vehicle image recognition, focusing on the deep learning models Inception, Xception, and MobileNet. Cloud computing provides scalable and cost-effective solutions for processing large volumes of image data, essential for applications like autonomous driving and traffic management. By leveraging cloud infrastructure, the purpose of this study is to assess and contrast the models' capabilities in order to identify the optimal design for the detection and classification of vehicles in real-time. This comparison is critical for enhancing road safety, optimizing traffic flow, and supporting smart city initiatives through advanced vehicle image recognition technologies.

A. Data collection

For this study, we utilize the Vehicle Detection Image Set, a comprehensive dataset designed for machine learning and computer vision applications. This dataset comprises 17,760 images, categorized into two labels: "Vehicles" and "Non-Vehicles." Each image is meticulously labeled to facilitate accurate training and evaluation of deep learning models for vehicle image recognition. The dataset includes various vehicle types and non-vehicle objects under different lighting and environmental conditions, ensuring robustness and generalizability of the trained models. This diverse and well-annotated dataset is crucial for developing and fine-tuning the Inception, Xception, and MobileNet models, enabling them to effectively distinguish between vehicles and non-vehicles in real-world scenarios.
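As an illustration of how a two-label image set like this might be indexed before training, the following minimal sketch walks a directory tree and pairs each image path with an integer label. The folder names `vehicles` and `non-vehicles`, the accepted file suffixes, and the helper names are assumptions made for this example; the paper does not describe the on-disk layout.

```python
from pathlib import Path
import tempfile

# Assumed layout: <root>/vehicles/... and <root>/non-vehicles/... (hypothetical).
CLASS_DIRS = {"non-vehicles": 0, "vehicles": 1}
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def index_dataset(root):
    """Return (image_path, label) pairs, ordered by class folder and then by path."""
    samples = []
    for dir_name, label in CLASS_DIRS.items():
        class_dir = Path(root) / dir_name
        if not class_dir.is_dir():
            continue  # tolerate a missing class folder instead of failing
        for path in sorted(class_dir.rglob("*")):
            if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
                samples.append((path, label))
    return samples


def count_per_label(samples):
    """Count how many samples carry each integer label."""
    counts = {label: 0 for label in CLASS_DIRS.values()}
    for _, label in samples:
        counts[label] += 1
    return counts


# Self-check on a throwaway tree of empty placeholder files (not real images).
with tempfile.TemporaryDirectory() as tmp:
    for dir_name, n in (("vehicles", 3), ("non-vehicles", 2)):
        folder = Path(tmp) / dir_name
        folder.mkdir()
        for i in range(n):
            (folder / f"img_{i}.png").touch()
    (Path(tmp) / "vehicles" / "notes.txt").touch()  # skipped: not an image suffix
    demo_counts = count_per_label(index_dataset(tmp))

print(demo_counts)
```

On the real dataset the two counts would be expected to sum to the 17,760 images stated above; the per-class split is not given in the text, so it is not assumed here.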

B. Data Preprocessing

Data preprocessing for vehicle image recognition involves several key steps to ensure optimal model performance. Initially, the dataset is curated to include diverse images of vehicles and non-vehicles under various environmental conditions. Images are then rescaled or cropped to a uniform input size so that dimensions are consistent across the dataset. Color normalization or standardization is applied to keep pixel values in a consistent range, which improves model convergence during training. Data augmentation techniques, including rotation, flipping, and random cropping, artificially expand the dataset and improve the model's resilience to variations in image orientation and background. To ensure model compatibility, label encoding converts category labels (such as "vehicles" or "non-vehicles") into numerical representations. Finally, the dataset is split into training and validation sets to evaluate model performance effectively. Overall, effective data preprocessing plays a crucial role in preparing the dataset for deep learning models like Inception, Xception, and MobileNet, facilitating accurate and reliable vehicle image recognition.
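The steps above can be sketched in a few lines of plain Python. This is a toy illustration, not the authors' pipeline: images are represented as nested lists of grayscale pixel values, resizing is omitted, and the 20% validation fraction and all function names are assumptions, since the paper does not state its split ratio.

```python
import random


def normalize(image, max_value=255.0):
    """Scale integer pixel values into the range [0, 1]."""
    return [[px / max_value for px in row] for row in image]


def flip_horizontal(image):
    """Mirror an image left to right (one simple augmentation)."""
    return [list(reversed(row)) for row in image]


def encode_labels(labels, classes=("non-vehicles", "vehicles")):
    """Map class names to integer ids in the order given by `classes`."""
    index = {name: i for i, name in enumerate(classes)}
    return [index[name] for name in labels]


def train_val_split(samples, val_fraction=0.2, seed=0):
    """Shuffle deterministically, then hold out a fraction for validation."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


image = [[0, 128, 255], [255, 0, 64]]  # a 2x3 grayscale toy image
scaled = normalize(image)
flipped = flip_horizontal(image)
label_ids = encode_labels(["vehicles", "non-vehicles", "vehicles"])
train, val = train_val_split(range(10), val_fraction=0.2)
```

Fixing the shuffle seed keeps the training and validation sets identical across runs, which matters when several architectures are compared on the same split.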

C. Cloud Platform Selection

Cloud computing platforms are assessed and chosen by considering critical factors such as scalability, performance, and compatibility with machine learning frameworks. AWS, Azure, and Google Cloud are noteworthy options, each excelling in different aspects. The selection should align with specific project requirements, ensuring seamless integration and leveraging the distinctive strengths of the chosen platform.

D. Perform EDA

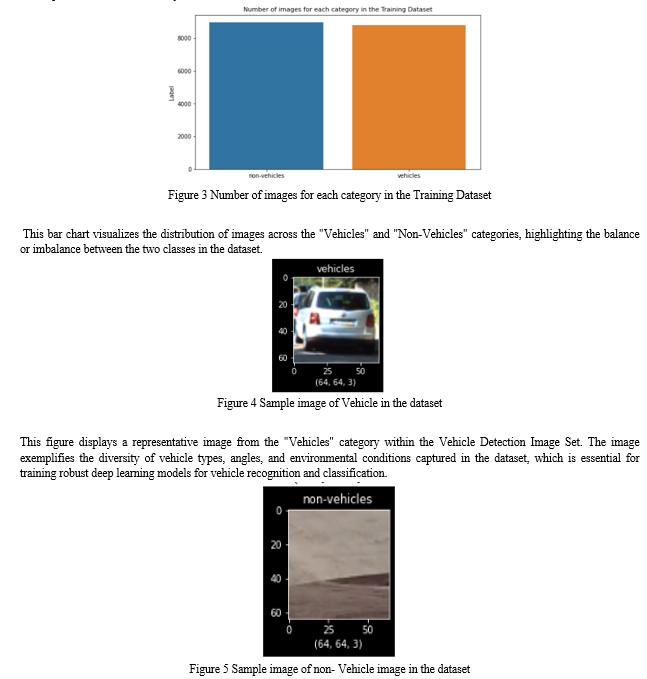

Performing Exploratory Data Analysis (EDA) on the Vehicle Detection Image Set is essential for preparing the data for model training. This process involves analyzing the distribution of images across the two labels, "Vehicles" and "Non-Vehicles," to identify and address any class imbalances.

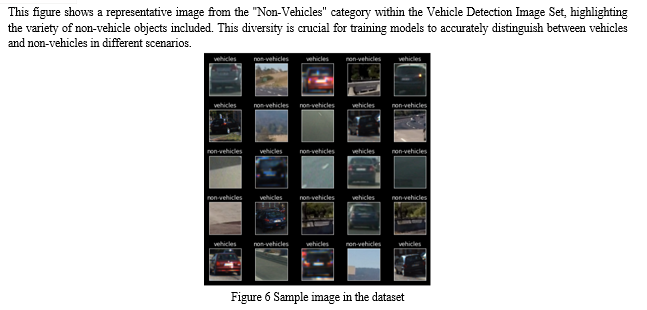

EDA also includes examining image properties such as dimensions, aspect ratios, and color channels to standardize the input formats required by deep learning models like Inception, Xception, and MobileNet. Visualizations such as histograms and box plots are used to understand the data distribution and detect any anomalies or outliers. Sample images are reviewed to ensure labeling accuracy and assess the variability in lighting, angles, and backgrounds. This thorough analysis helps in cleaning and preprocessing the data, ensuring that the subsequent machine learning steps are based on high-quality, well-understood data, ultimately improving model performance and reliability.
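A minimal sketch of the two checks described in this subsection, class balance and image-size consistency, is given here using only the Python standard library. The label and size lists are toy values chosen for illustration, not statistics measured on the Vehicle Detection Image Set, and the function names are hypothetical.

```python
from collections import Counter
from statistics import mean


def class_distribution(labels):
    """Return {label: (count, share_of_total)} for each label."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {name: (n, n / total) for name, n in counts.items()}


def imbalance_ratio(labels):
    """Ratio of the largest class to the smallest; 1.0 means perfectly balanced."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def size_summary(sizes):
    """Summarize a collection of (width, height) pairs."""
    sizes = list(sizes)
    return {
        "distinct_sizes": len(set(sizes)),
        "mean_width": mean(w for w, _ in sizes),
        "mean_height": mean(h for _, h in sizes),
        "aspect_ratios": sorted({round(w / h, 2) for w, h in sizes}),
    }


labels = ["Vehicles"] * 6 + ["Non-Vehicles"] * 4  # toy labels, not the real counts
sizes = [(64, 64)] * 9 + [(128, 64)]              # toy sizes, not measured values
dist = class_distribution(labels)
ratio = imbalance_ratio(labels)
summary = size_summary(sizes)
```

More than one distinct size or aspect ratio in the summary signals that resizing or cropping is needed before the images can be batched for the models.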

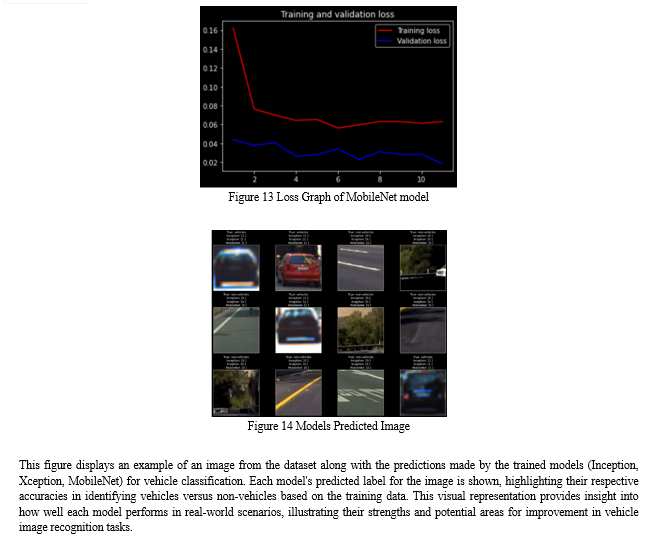

This figure showcases a typical image from the Vehicle Detection Image Set, illustrating the diverse conditions and subjects, including both vehicles and non-vehicles. Such variability is essential for training robust and generalizable deep learning models for accurate vehicle image recognition.

E. Model Implementations

The model implementation for vehicle image recognition employs deep learning architectures—Inception, Xception, and MobileNet. Initially, images are resized and normalized for uniformity. Each model is initialized with pre-trained weights from ImageNet, leveraging transfer learning to boost performance. Models undergo fine-tuning on the vehicle detection dataset with adjusted hyperparameters like learning rate and batch size. Data augmentation techniques enhance model robustness. Loss functions such as categorical cross-entropy and metrics like accuracy and loss assess model performance. Training occurs on a cloud platform with GPU acceleration for efficiency. Post-training, models are validated on a separate dataset. Comparative analysis identifies the optimal architecture for accurate vehicle recognition. This methodology ensures robust model development suitable for real-world vehicle image recognition applications, addressing varied environmental and situational challenges effectively.
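The transfer-learning setup described above could be expressed with the Keras applications API roughly as follows. This is a sketch under stated assumptions rather than the authors' code: the paper does not say which Inception variant, learning rate, or classification head was used, so `InceptionV3`, a learning rate of `1e-4`, a frozen backbone, and a global-average-pooling head are illustrative choices, and `build_transfer_model` is a hypothetical helper name.

```python
# Default ImageNet input resolutions of the corresponding Keras application models.
INPUT_SIZES = {
    "InceptionV3": (299, 299),  # assumed variant; the paper only says "Inception"
    "Xception": (299, 299),
    "MobileNet": (224, 224),
}


def build_transfer_model(arch, num_classes=2, learning_rate=1e-4, weights="imagenet"):
    """Build a frozen pre-trained backbone with a new softmax classification head."""
    import tensorflow as tf  # imported lazily so this module loads without TensorFlow

    height, width = INPUT_SIZES[arch]
    backbone_cls = getattr(tf.keras.applications, arch)
    backbone = backbone_cls(
        include_top=False,               # drop the 1000-class ImageNet classifier
        weights=weights,
        input_shape=(height, width, 3),
    )
    backbone.trainable = False           # keep ImageNet features fixed at first

    inputs = tf.keras.Input(shape=(height, width, 3))
    features = backbone(inputs, training=False)
    pooled = tf.keras.layers.GlobalAveragePooling2D()(features)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(pooled)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",  # expects one-hot encoded labels
        metrics=["accuracy"],
    )
    return model
```

Calling `build_transfer_model("Xception")` downloads the ImageNet weights on first use. Input images should be passed through the matching `preprocess_input` function from the corresponding `tf.keras.applications` submodule, and fine-tuning would typically unfreeze some backbone layers and recompile with a lower learning rate.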

F. Performance Evaluation

Important metrics for evaluating deep learning models include training loss, training accuracy, validation loss, and validation accuracy. Accuracy is the proportion of predictions that are correct relative to the total number of predictions. The loss function, such as categorical cross-entropy, measures the disparity between the actual labels and the predicted probabilities during training. The model's generalizability can be gauged by its validation accuracy, which measures performance on validation data that was not used for training. Validation loss, similarly, measures the error on the validation set, reflecting how well the model performs on new data. Together, these metrics indicate the model's reliability and guide adjustments to training parameters and architecture choices. High accuracy and low loss on both the training and validation sets indicate that the model has captured the relevant patterns and predicts accurately, which is essential for deploying models in real-world applications such as vehicle image recognition.
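To make the two definitions concrete, the following sketch computes accuracy and mean categorical cross-entropy for one-hot labels and predicted class probabilities. The four sample predictions are invented for illustration and are not results from the paper.

```python
import math


def accuracy(y_true, y_prob):
    """Fraction of samples whose highest-probability class matches the one-hot label."""
    correct = 0
    for truth, probs in zip(y_true, y_prob):
        if probs.index(max(probs)) == truth.index(1):
            correct += 1
    return correct / len(y_true)


def categorical_cross_entropy(y_true, y_prob, eps=1e-12):
    """Mean negative log-probability assigned to the true class."""
    total = 0.0
    for truth, probs in zip(y_true, y_prob):
        # eps guards against log(0) when a probability is exactly zero.
        total -= sum(t * math.log(max(p, eps)) for t, p in zip(truth, probs))
    return total / len(y_true)


# Toy data: columns are [non-vehicle, vehicle].
y_true = [[0, 1], [1, 0], [0, 1], [1, 0]]
y_prob = [[0.1, 0.9], [0.8, 0.2], [0.6, 0.4], [0.7, 0.3]]

acc = accuracy(y_true, y_prob)
loss = categorical_cross_entropy(y_true, y_prob)
```

Here three of the four samples are classified correctly, so the accuracy is 0.75, while the loss also penalizes how confident each prediction was: the misclassified third sample contributes the largest term.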

IV. RESULTS AND DISCUSSION

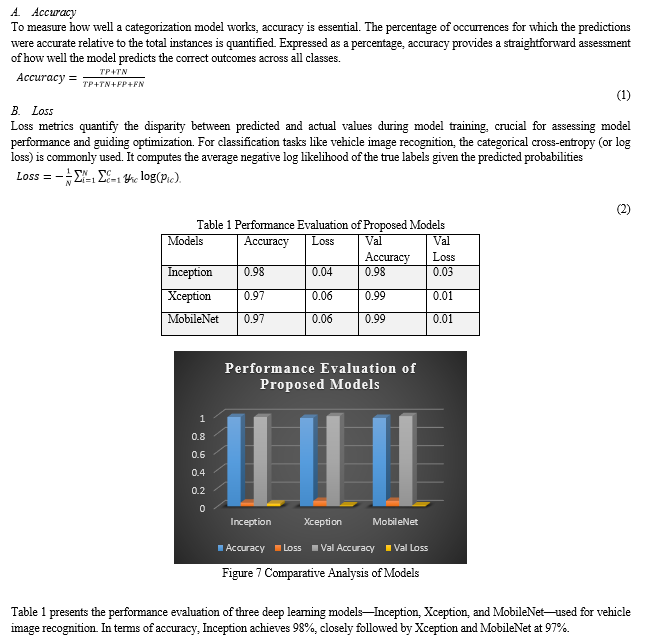

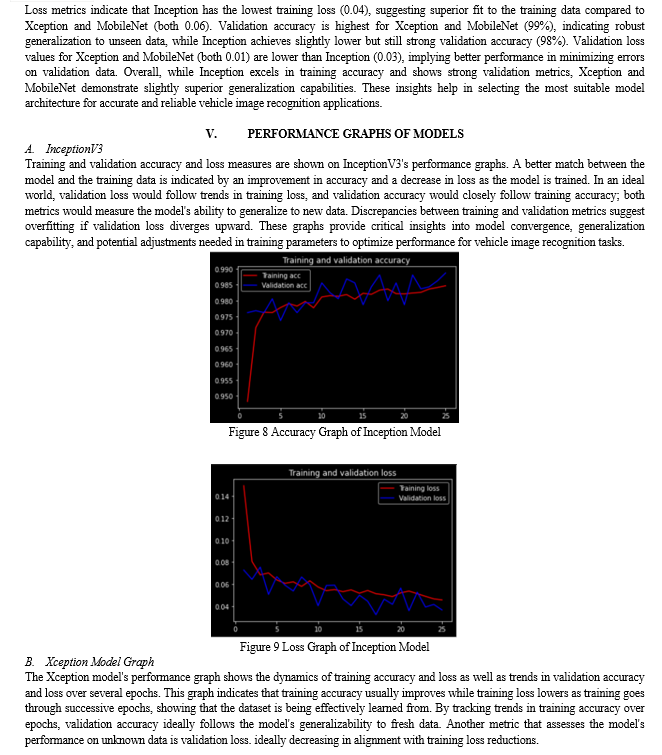

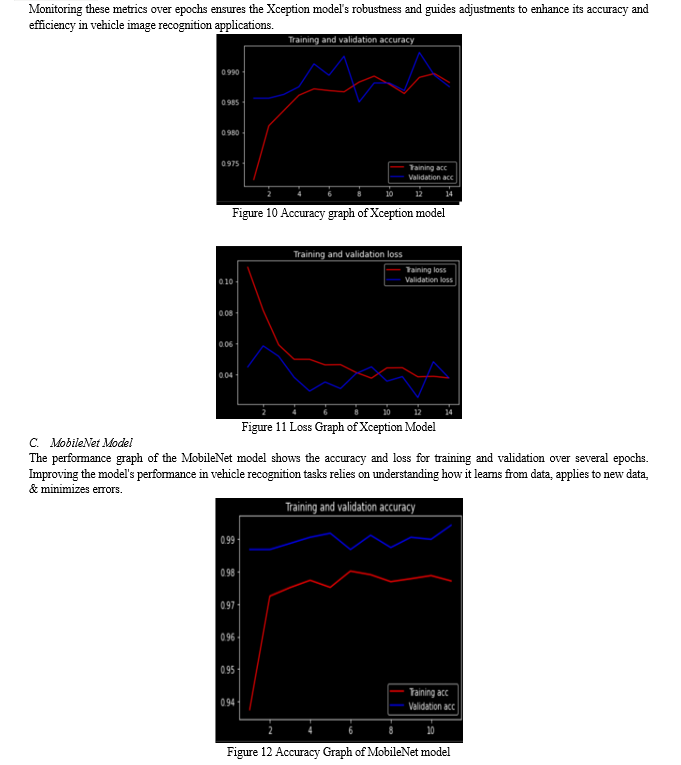

The results and discussion section presents a comprehensive analysis of the performance and findings from the implementation of deep learning models—Inception, Xception, and MobileNet—for vehicle image recognition.

This section evaluates the models' accuracy, loss, validation accuracy, and validation loss to assess their effectiveness in distinguishing between vehicles and non-vehicles in diverse environmental conditions. The discussion focuses on interpreting these metrics in the context of real-world applications, addressing challenges such as model generalization, computational efficiency, and scalability on cloud computing platforms. Insights gained from comparing and contrasting the models highlight strengths and limitations, informing recommendations for optimizing model performance and enhancing deployment readiness. This section explores potential avenues for future research and advancements in vehicle image recognition technologies, aiming to contribute to safer and more efficient transportation systems and smart city initiatives.

Conclusion

In conclusion, this research underscores the potential of integrating cutting-edge technology into intelligent transportation systems, with a particular focus on leveraging cloud computing for vehicle image recognition. By deploying deep learning algorithms on cloud platforms, the study has demonstrated promising capabilities in achieving real-time, scalable, and precise vehicle detection. Examining system effectiveness with platform performance and image recognition measures provided insight into the efficacy and dependability of cloud-based solutions. Three well-known deep learning models, Inception, Xception, and MobileNet, were assessed in this research for vehicle image recognition using a comprehensive dataset. Through extensive experimentation and analysis, we found that Inception achieved the highest training accuracy of 98% but slightly lower validation metrics compared to Xception and MobileNet, which both attained 99% validation accuracy. Despite Inception's strong performance in training, its validation loss of 0.03 indicated a slight tendency towards overfitting, whereas Xception and MobileNet maintained lower validation losses of 0.01, suggesting better generalization capabilities. The comparative analysis of accuracy, loss, and validation metrics highlighted Xception and MobileNet as robust choices for real-world deployment due to their superior generalization and lower validation losses. These models exhibited effective learning from the dataset, accurately distinguishing between vehicles and non-vehicles across diverse conditions.
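The selection rule implied by this comparison, preferring the model or models with the lowest validation loss, can be written as a short sketch. The dictionary holds only the validation figures reported above; Inception's validation accuracy is not stated numerically, so it is left out rather than guessed, and the function name is hypothetical.

```python
# Validation figures as reported in the conclusion; unreported values are omitted.
reported = {
    "Inception": {"val_loss": 0.03},
    "Xception": {"val_loss": 0.01, "val_accuracy": 0.99},
    "MobileNet": {"val_loss": 0.01, "val_accuracy": 0.99},
}


def best_by_val_loss(results):
    """Return the names of all models that share the lowest validation loss."""
    lowest = min(metrics["val_loss"] for metrics in results.values())
    return sorted(name for name, metrics in results.items()
                  if metrics["val_loss"] == lowest)


best = best_by_val_loss(reported)
print(best)  # ['MobileNet', 'Xception']
```

Because the two leading models tie on the reported metrics, a deployment decision would need an additional criterion, such as model size or inference latency, which this comparison does not measure.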

References

[1] K. M. Hosny, A. I. Awad, W. Said, M. Elmezain, E. R. Mohamed, and M. M. Khashaba, “Enhanced whale optimization algorithm for dependent tasks offloading problem in multi-edge cloud computing,” Alexandria Eng. J., vol. 97, no. March, pp. 302–318, 2024, doi: 10.1016/j.aej.2024.04.038.

[2] M. I. Khaleel, M. Safran, S. Alfarhood, and D. Gupta, “Combinatorial metaheuristic methods to optimize the scheduling of scientific workflows in green DVFS-enabled edge-cloud computing,” Alexandria Eng. J., vol. 86, no. December 2023, pp. 458–470, 2024, doi: 10.1016/j.aej.2023.11.074.

[3] A. Rehaimi, Y. Sadqi, Y. Maleh, G. S. Gaba, and A. Gurtov, “Towards a federated and hybrid cloud computing environment for sustainable and effective provisioning of cyber security virtual laboratories,” Expert Syst. Appl., vol. 252, no. PB, p. 124267, 2024, doi: 10.1016/j.eswa.2024.124267.

[4] Á. Ramajo-Ballester, J. M. Armingol Moreno, and A. de la Escalera Hueso, “Dual license plate recognition and visual features encoding for vehicle identification,” Rob. Auton. Syst., vol. 172, no. December 2023, 2024, doi: 10.1016/j.robot.2023.104608.

[5] A. R. Rani, Y. Anusha, S. K. Cherishama, and S. V. Laxmi, “Traffic sign detection and recognition using deep learning-based approach with haze removal for autonomous vehicle navigation,” e-Prime - Adv. Electr. Eng. Electron. Energy, vol. 7, no. January, p. 100442, 2024, doi: 10.1016/j.prime.2024.100442.

[6] B. Chintalapati, A. Precht, S. Hanra, R. Laufer, M. Liwicki, and J. Eickhoff, “Opportunities and challenges of on-board AI-based image recognition for small satellite Earth observation missions,” Adv. Sp. Res., no. xxxx, 2024, doi: 10.1016/j.asr.2024.03.053.

[7] A. Dunphy, “Is the regulation of connected and automated vehicles (CAVs) a wicked problem and why does it matter?,” Comput. Law Secur. Rev., vol. 52, no. August 2023, pp. 1–16, 2024, doi: 10.1016/j.clsr.2024.105944.

[8] T. Debbarma, “Prediction of Dangerous Driving Behaviour Based on Vehicle Motion,” Procedia Comput. Sci., vol. 235, pp. 1125–1134, 2024, doi: 10.1016/j.procs.2024.04.107.

[9] F. Engineering, “An Insight into Real Time Vehicle Detection and Classification Methods using ML / DL based Approach,” Procedia Comput. Sci., vol. 235, pp. 598–605, 2024, doi: 10.1016/j.procs.2024.04.059.

[10] R. Greer, A. Gopalkrishnan, M. Keskar, and M. M. Trivedi, “Patterns of vehicle lights: Addressing complexities of camera-based vehicle light datasets and metrics,” Pattern Recognit. Lett., vol. 178, no. August 2023, pp. 209–215, 2024, doi: 10.1016/j.patrec.2024.01.003.

[11] S. Nikolaus et al., “5-HT1A and 5-HT2A receptor effects on recognition memory, motor/exploratory behaviors, emotionality and regional dopamine transporter binding in the rat,” Behav. Brain Res., vol. 469, no. March, p. 115051, 2024, doi: 10.1016/j.bbr.2024.115051.

[12] L. Zhang et al., “Optimal azimuth angle selection for limited SAR vehicle target recognition,” Int. J. Appl. Earth Obs. Geoinf., vol. 128, no. November 2023, p. 103707, 2024, doi: 10.1016/j.jag.2024.103707.

[13] E. Fraga, A. Cortés, T. Margalef, P. Hernández, and C. Carrillo, “Cloud-based urgent computing for forest fire spread prediction,” Environ. Model. Softw., vol. 177, no. March, p. 106057, 2024, doi: 10.1016/j.envsoft.2024.106057.

[14] C. Terpoorten, J. F. Klein, and K. Merfeld, “Understanding B2B customer journeys for complex digital services: The case of cloud computing,” Ind. Mark. Manag., vol. 119, no. March, pp. 178–192, 2024, doi: 10.1016/j.indmarman.2024.04.011.

[15] X. Xu, S. Zang, M. Bilal, X. Xu, and W. Dou, “Intelligent architecture and platforms for private edge cloud systems: A review,” Futur. Gener. Comput. Syst., vol. 160, no. October 2023, pp. 457–471, 2024, doi: 10.1016/j.future.2024.06.024.

[16] C. Shen, Z. Wang, C. Liu, Q. Li, J. Li, and S. Liu, “Analysis of Vehicle Platform Vibration Based on Empirical Mode Decomposition,” Shock Vib., vol. 2021, 2021, doi: 10.1155/2021/8894959.

[17] J. Wang, J. Zhao, W. Cai, W. Li, X. Jia, and P. Wei, “Leveling Control of Vehicle Load-Bearing Platform Based on Multisensor Fusion,” J. Sensors, vol. 2021, 2021, doi: 10.1155/2021/8895459.

[18] C. Aguilar-Ibanez, M. S. Suarez-Castanon, O. Gutierrez-Frias, J. D. J. Rubio, and J. A. Meda-Campana, “A Robust Control Strategy for Landing an Unmanned Aerial Vehicle on a Vertically Moving Platform,” Complexity, vol. 2020, 2020, doi: 10.1155/2020/2917684.

[19] Y. Zhou and X. Zhu, “Analysis of vehicle network architecture and performance optimization based on soft definition of integration of cloud and fog,” IEEE Access, vol. 7, pp. 101171–101177, 2019, doi: 10.1109/ACCESS.2019.2930405.

[20] M. Ziqian, Z. Guan, Z. Wu, A. Li, and Z. Chen, “Security Enhanced Internet of Vehicles with Cloud-Fog-Dew Computing,” ZTE Commun., vol. 15, no. S2, pp. 47–51, 2018, doi: 10.3969/j.issn.1673.

[21] S. Kanagamalliga, P. Kovalan, K. Kiran, and S. Rajalingam, “Traffic Management through Cutting-Edge Vehicle Detection, Recognition, and Tracking Innovations,” Procedia Comput. Sci., vol. 233, pp. 793–800, 2024, doi: 10.1016/j.procs.2024.03.268.

[22] V. W. Saputra, N. Suciati, and C. Fatichah, “Low Light Image Enhancement in License Plate Recognition using URetinex-Net and TRBA,” Procedia Comput. Sci., vol. 234, pp. 404–411, 2024, doi: 10.1016/j.procs.2024.03.021.

[23] D. Bolaños-Martinez, M. Bermudez-Edo, and J. L. Garrido, “Clustering pipeline for vehicle behavior in smart villages,” Inf. Fusion, vol. 104, no. November 2023, p. 102164, 2024, doi: 10.1016/j.inffus.2023.102164.

[24] S. Kim, X. He, R. van Egmond, and R. Happee, “Designing user interfaces for partially automated Vehicles: Effects of information and modality on trust and acceptance,” Transp. Res. Part F Traffic Psychol. Behav., vol. 103, no. May, pp. 404–419, 2024, doi: 10.1016/j.trf.2024.02.009.

[25] S. Li, Y. Zhang, P. Blythe, S. Edwards, and Y. Ji, “Remote driving as the Failsafe: Qualitative investigation of Users’ perceptions and requirements towards the 5G-enabled Level 4 automated vehicles,” Transp. Res. Part F Traffic Psychol. Behav., vol. 100, no. December 2023, pp. 211–230, 2024, doi: 10.1016/j.trf.2023.11.018.

Copyright

Copyright © 2024 Umakant Yadav, Shyamol Banerjee. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET63453

Publish Date : 2024-06-25

ISSN : 2321-9653

Publisher Name : IJRASET
