Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

- Abstract

- Introduction

- Conclusion

- References

- Copyright

Exploring the Use of Deep Reinforcement Learning for Autonomous Navigation in Unstructured Environments

Authors: Shifa Siraj Khan, Sanika Satish Lad, Anant Manish Singh

DOI Link: https://doi.org/10.22214/ijraset.2024.59534

Certificate: View Certificate

Abstract

Autonomous navigation in unstructured environments poses a significant challenge in robotics and artificial intelligence. The capability to navigate through dynamic and unpredictable terrains such as disaster zones, outdoor landscapes, or congested urban settings demands sophisticated solutions. This research paper delves into the imperative role of Deep Reinforcement Learning (DRL) in addressing these challenges and advancing the field of autonomous navigation. The core necessity of this research paper lies in the application and exploration of DRL within the realm of autonomous navigation. By leveraging neural networks and reinforcement learning algorithms, autonomous agents can dynamically navigate through unstructured environments without explicit programming or human intervention. Instead, they learn to navigate by receiving feedback through rewards or penalties, thereby continuously improving their decision-making processes. Through a comprehensive review of existing literature and experiments this paper aims to elucidate the pivotal role of DRL in shaping the future of autonomous navigation. It highlights the necessity of robust and adaptive systems capable of navigating unstructured environments, emphasizing the transformative potential of DRL in revolutionizing autonomous systems\' capabilities.

Introduction

I. INTRODUCTION

In recent years, the pursuit of autonomous navigation in unstructured environments has emerged as a critical frontier in robotics and artificial intelligence. The ability to traverse and navigate through complex, unpredictable terrains – ranging from disaster-stricken areas to bustling urban landscapes – stands as a testament to the evolving capabilities of autonomous systems. Amidst these challenges [1], Deep Reinforcement Learning (DRL) has emerged as a promising paradigm, offering a pathway towards addressing the intricacies of navigation in such environments. This adaptability not only allows for real-time adjustments but also fosters a more robust decision-making process, elevating the autonomy and versatility of these systems in navigating. [3]

Traditional navigation methodologies, reliant on predefined algorithms, regularly stumble whilst confronted with unstructured terrains. The dynamic and unpredictable nature of these surroundings gives an impressive hurdle for traditional structures, impeding their adaptability and proscribing their efficacy. It is inside this context that the application of DRL becomes vital. [19]

Deep reinforcement learning represents a hybrid of deep rootedness and reinforcement learning processes, which allows [10] independent professionals to learn and adapt through experiences gained from interactions with their environment is valid. This trial-and-error learning style enables these professionals to make truly informed decisions when It also increases the likeability of evolving condition.

The urgency of this research lies in its exploration of DRL's transformative potential in the domain of autonomous navigation.[5] Beyond a mere theoretical pursuit, the practical implications of employing DRL in navigating unstructured environments are vast and far-reaching. Envision unmanned vehicles swiftly traversing disaster zones to provide aid or drones seamlessly navigating complex urban landscapes for efficient deliveries. These scenarios underscore the significance and immediacy of deploying robust and adaptable autonomous systems.[12]

II. BACKGROUND AND CHALLENGES

In the context of robotics and artificial intelligence, autonomous navigation tracking in unstructured environments remains a cornerstone of technological progress. To understand the challenges and challenges faced by modern navigation modes, it is necessary to examine history development and existing terrain through the autonomous navigation system.

Today, as autonomous systems face a wide variety of dynamic environments, understanding the limitations and challenges of navigating unstructured environments is essential to the capabilities of modern navigation systems development.

A. Historical Trajectory

Autonomous navigation has taken a dramatic turn since its inception, characterized by reliance on pre-defined algorithms and sensor-based approaches [11]. However, these traditional approaches have faced major obstacles in the face of the unpredictability and dynamism of unstructured environments.

The genesis of autonomous navigation dates back to the early stages of robotics, where foundational frameworks centered around explicit algorithms and sensory inputs dictated the path of navigation. [14] These deterministic algorithms, while revolutionary at the time, were ill-equipped to adapt to the myriad complexities inherent in unstructured environments.[19] The rigidity of these approaches, fixed in their response mechanisms, often faltered when facing unforeseen obstacles or dynamically changing landscapes. As technology progressed, the limitations of these systems became increasingly evident, underscoring the necessity for more adaptable and dynamic methodologies capable of navigating the uncertainties of real-world terrains. [1]

B. Challenges in Traditional Navigation

Traditional navigation strategies, albeit foundational, grappled with limitations concerning their adaptability to changing environments. [3] The rigidity of static algorithms inhibited their ability to effectively maneuver through diverse and evolving landscapes, impeding the progress towards robust autonomous systems. [2]

Conventional navigation strategies, reliant on preprogrammed algorithms and predetermined decision trees, encountered notable constraints in adapting to the dynamic nature of unstructured environments. The inherent rigidity of these algorithms, although reliable within controlled settings, proved inadequate when met with the unpredictability of real-world scenarios. Variations in terrain, weather, or the sudden emergence of obstacles posed formidable challenges, often causing navigation systems to struggle or even fail in critical situations. [10] The limitations of these static methodologies prompted a quest for more adaptive and responsive systems capable of seamlessly operating in diverse and evolving landscapes without compromising efficiency or safety.

C. Adaptation and Innovation

The quest for autonomy in navigation prompted a shift towards adaptive and self-learning systems, necessitating the exploration of machine learning and artificial intelligence-driven approaches. This transition aimed to bridge the gap between the static nature of traditional algorithms and the dynamic demands of unstructured terrains.

The quest for freedom of navigation led to a paradigm shift to more agile and scalable systems. These changes include a shift from deterministic algorithms to machine learning insights and artificial intelligence-driven approaches Combining machine learning models with AI algorithms could enable navigation systems to begin to mimic human mind-like adaptive decision-making processes This departure from pre-programmed responses in learning-based approaches enabled these systems to analyze environmental data and learn from environmental data, evolving their navigation strategies in time in itself This turning point marked a pivotal moment, where the search for independent travel shifted from functional to functional in ever-changing environments A foundation in has been laid for systems capable of learning and changing environment of autonomous navigation system. [7]

III. FUNDAMENTALS OF DEEP REINFORCEMENT LEARNING

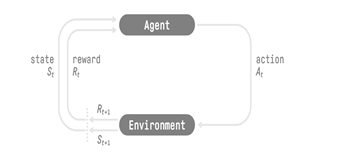

Deep Reinforcement Learning (DRL) stands at the intersection of deep learning and reinforcement learning, representing a cutting-edge approach in artificial intelligence. At its core, reinforcement learning involves an agent learning to make sequences of decisions in an environment to maximize cumulative rewards. Unlike supervised learning, where explicit input-output pairs guide the model's learning, and unsupervised learning, which focuses on finding patterns in unlabeled data, reinforcement learning learns through interaction with an environment. [1]

The fundamental components of DRL involve an agent, environment, actions, states, rewards and a learning algorithm. The agent interacts with the environment by taking actions based on its policy, which is guided by the learned knowledge.[5] These actions influence the state of the environment, leading to subsequent states and rewards. The agent's objective is to learn an optimal policy that maximizes cumulative rewards over time. The agent continuously engages with the environment, receiving states, taking actions, observing transitions, and receiving rewards as part of the learning process to optimize its decision-making and maximize cumulative rewards over time.

- Step 1: Initial State S0?: The agent receives the initial state S0? from the environment, representing the game's starting state at time step t=0. This state encapsulates the game's current situation, such as the initial frame or the configuration of the game environment.

- Step 2: Agent Action A0?: Based on the received state S0?, the agent takes an action A0?. In this scenario, the action chosen is to move to the right within the game.

- Step 3: Transition to New State S1?: The environment responds to the agent's action by transitioning to a new state S1?. This new state represents the updated game scenario, possibly showing a subsequent frame or the altered game environment after the agent's action of moving to the right.

- Step 4: Reward R1?: Along with the new state S1?, the environment provides a reward R1? to the agent. In this specific instance, the reward given is a positive value of +1, indicating that the agent's action resulted in a desirable outcome.

The learning process in DRL typically involves algorithms such as Deep Q-Networks (DQN), Policy Gradient Methods, or Actor-Critic methods, which modify an agent's policy or objective function based on experiences gathered from the environment. The combination of these elements underpins DRL algorithms’ ability to recognize and adapt them to complex tasks, making them essential technologies in a variety of industries, from robotics to sports games and beyond. Combined with sophisticated approaches such as interview-based research and meta-learning, reinforcement learning (DRL) continues to push the boundaries of autonomous decision-making, paving the way for transformative advances in AI research and implementation.

DRL extends traditional reinforcement learning by leveraging deep neural networks to handle high-dimensional sensory input, enabling it to handle complex problems.

Neural networks provide the architecture for approximating value functions or policy functions, allowing agents to learn complex mappings between states, actions, and rewards. This deep architecture enables DRL to tackle tasks that were previously infeasible due to their high-dimensional nature, such as image-based inputs in vision-based applications or raw sensor data in robotics. [12]

IV. MODELING UNSTRUCTURED ENVIRONMENTS

Modelling and representing the unstructured environment, especially in dynamic and unpredictable terrain, is crucial for successful navigation using deep reinforcement learning (DRL) techniques on:

A. Methods For Representing Unstructured Environments

- Grid-based representation: Divide the environment into a grid and represent each cell’s characteristics (e.g., obstacles, terrain, etc.).

- Graph-Based Representation: Represent the environment as a graph where nodes represent locations and edges represent connections or proximities.

- Object-based objects: Use objects extracted from the environment (e.g., visual objects from photographs or depth maps) to represent its objects.

B. Considerations For Dynamic And Unpredictable Terrain

- Adaptation: The method represented must be able to adapt dynamically to changes in the environment.

- Robustness: The indicator must be robust to soil uncertainties and variations.

- Real-time updates: Ensure that the position can be updated in real time as the environment changes.

C. The Importance Of Accurate Environmental Representation For Drl-Based Navigation

- Policy learning: Accurate representation enables travel policy to be learned more efficiently by providing the agent with meaningful information about the country.

- Generalization: The well-represented setting allows scholars to incorporate all systems across landscapes and settings.

- Safety: Successful references help ensure safe passage by providing operators with reliable information about obstacles and hazards.

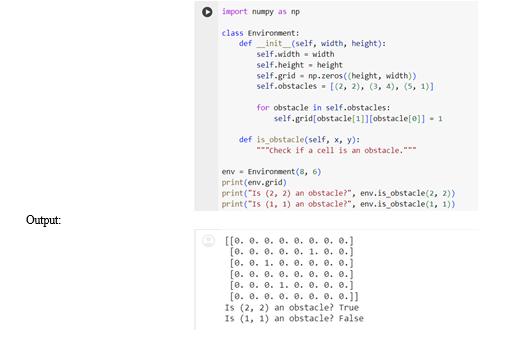

The development of algorithms that enable robots to move autonomously through such environments is important for various applications such as search and rescue operations, surveys and logistics. The objective of this project is to apply deep reinforcement learning (DRL) techniques to model basic environmental models for navigation projects. In particular, we aim to create a web-based platform where agents (robots) can navigate while avoiding obstacles.

Program Details:

- The program defines a Python class called Environment, which stands for the environment to be simulated.

- The environment is represented as a grid, where each cell can be empty or occupied by an obstacle.

- The obstacles in the environment are defined first, and their locations are shown as (x, y) coordinates.

- The is_obstacle method can ask if there is an obstacle in a particular cell in the environment.

- Initially, the function creates an instance of the Environment class with the specified width and height.

- The program then tests the environmental stability by asking if there are constraints in a cell.

- self.grid[obstacle[1]][obstacle[0]] = 1: This line sets the value of the grid cell corresponding to the obstacle's location to 1, indicating the presence of an obstacle at that location. Note that obstacle[1] corresponds to the y coordinate (row index) and obstacle[0] corresponds to the x coordinate (column index).

- self.obstacles = [(2, 2), (3, 4), (5, 1)]: This line defines a list of obstacle locations within the environment. Each obstacle is represented as a tuple (x, y) where x is the column index and y is the row index.

By accurately modeling and representing the environment, researchers and engineers can develop and test navigation systems under controlled conditions before using them in real-world situations Thus this system as a key step towards building a more sophisticated navigation system capable of operating in a dynamic and unpredictable landscape It works.

V. AUTONOMOUS NAVIGATION SYSTEMS

Autonomous navigation systems have made incredible strides in recent years, promising flexibility for use in a variety of industries from search and rescue missions to deliveries but also navigation to unstructured destinations presenting unique challenges that traditional transport systems attempt to address. In this section, we will explore the limitations of traditional navigation systems, the critical need for robust and adaptable navigation solutions, and the specific challenges faced in navigation in dynamic and unpredictable terrains in the 19th century.

A. Traditional Navigation Systems: Limitations and Constraints

Traditional navigation systems that rely on predefined maps or explicit plans are severely limited in the face of unstructured environments These systems often lack the ability to adapt to dynamic changes in terrain or obstacles, resulting in navigation errors and inefficiencies. For example, while GPS-based navigation is effective in well-mapped urban areas where satellite signals are obstructed or imprecise, such as dense forests or urban canyons, rule-based navigation struggle to cope with unexpected obstacles or changing environments, limiting their use in dynamic situations. [11]

B. Need for flexible and Complex Transport Systems

In unstructured environments where conditions can change rapidly and are unpredictable, the importance of flexible and robust navigation systems cannot be overstated. [10] These systems must have the ability to recognize their surroundings around, make appropriate decisions in real time, and safely navigate uncertain paths. In contrast to traditional systems of associated guidance systems, intuition, education, artificial intelligence, etc. is the advantage of the advanced technology, which continues to be their understanding of environmental and supports their intimate mortality strategy. Learning from experience and adapting to changing circumstances, these systems offer unprecedented flexibility and flexibility in navigating unstructured environments

C. Real-life scenarios: A Non-Invasive mechanism for Risk Management

Consider where a natural disaster like an earthquake or a hurricane hit a populated area, leaving in its wake a great deal of destruction and chaos in such crises, time is of the essence, traditionally search and rescue operations can prevent logistical challenges and dangerous situations This is where autonomous drone technology comes in, providing a quick and efficient way to search disaster areas, identify survivors feeding and providing assistance in remote areas. [2]

The use of autonomous drones in disaster management is a formidable challenge due to the dynamic and unpredictable nature of the environment. The disaster area is littered with debris, interfering with traditional routes, posing a hazard to rescuers and drones Also, environmental factors such as strong winds, smoke or poor visibility can make travel more difficult and pose a danger to aircraft.

D. Challenges of navigation in the midst of disaster

- Obstacle Avoidance: Navigating rough terrain filled with debris, debris and obstacles is a daunting challenge for autonomous drones. To ensure safe access, drones must use sophisticated intelligence systems including lidar, cameras and radar to accurately detect and classify obstacles in their vicinity and then search sensor data in real-time to derive optimal navigation options, including obstacle size, In addition to estimating factors such as size and speed, drones must role drones constantly monitor their surroundings to optimize their routes, avoid collisions, and maintain safe distances from buildings and terrain.

- Active terrain: Disaster areas have rapidly changing landscapes, where debris shifts, buildings collapse, and new obstacles suddenly emerge Given the mandate for such dynamic terrain, drones must the autonomous function is capable of capturing and responding to environmental changes in real time. This requires the integration of adaptive systems capable of dynamically updating routing options based on changing topography. Using sensor data and predictive models, drones can anticipate potential hazards to avoid obstacles Moreover, machine learning can enable drones to learn from experience past and predict future changes in land development, their navigation in dynamic environments provides flexibility and adaptability.

- Available Contacts: Communication disruption is common in disaster situations, where infrastructure disruption or network congestion can compromise real-time data availability and remote control capabilities so autonomous drones must operate autonomously, using them rely on onboard sensors and algorithms to make informed navigational decisions without external guidance ? Drones for situational insight In addition to being able to be controlled and navigated efficiently, drones can provide internationally deployed communication channels be used, to enable collaboration with other independent contractors and to share information locally to achieve common goals

An autonomous mechanism that assesses risk through external communication less reliable methods -Maintain independence of operation and environmental effectiveness. [13]

4. Human-robot interaction: Effective communication between autonomous drones and human responders during joint search and rescue operations is critical to maximizing mission effectiveness and ensuring that human the safety of all participants shall be They should be equipped with flexible control devices and communication systems that allow people to stay on the ground. Seamless communication with employees is enabled. This includes the ability to take orders, provide status updates, transmit critical information such as known hazards or identified survivors clearly and in a timely manner Also, drones can exhibit cooperative behaviors, such as following the instructions of human responders, the context of the situation, responding based on human interactions and adjusting their actions and enhancing the effectiveness of human and robot collaboration, the use of drones can increase the overall efficiency and success of search and rescue missions, ultimately saving lives and reducing the risks associated with them

Consider a scenario in which an autonomous robot is tasked with navigating a large warehouse to search for and retrieve specific products from a predetermined location The warehouse is organized as a path with obstacles such as storage so, machinery, and other tools are scattered everywhere. The robot must maneuver efficiently in this challenging environment to achieve its goals.

F. Objectives

- Retrieval efficiency: A self-directed navigation system primarily aims to traverse a warehouse environment and search for specific items based on predefined labels or identifiers

- Obstacle Avoidance and Safety: Another important objective is to ensure the safety of the robot and the warehouse environment. The navigation plan should include complex obstacle avoidance techniques for identifying and circumventing obstacles encountered along the way. By prioritizing safety, the system minimizes the risk of collisions with the robot, reducing potential damage to the robot or its surroundings.

- Optimized route planning: Besides avoiding obstacles, navigation systems aim to optimize robot routes to reduce transit time and energy consumption By intelligently design warehouse routes, taking into account factors such as congestion, distribution, navigation efficiency Emphasize the importance of balancing navigation speed.

F. Program Based Solution

H. Working of the Program

- 1. The program initializes an instance of the AutonomousNavigationSystem class with a specified large environment, representing the dimensions of the navigation area (e.g., a 10x10 grid).

- 2. The robot starts moving from the center of the environment (position [0, 0]) and starts taking a random step (take_random_step path) in four possible directions: up, down, left or right.

- 3. At each step, the robot updates its position based on a randomly chosen direction that increases the number of steps. Then the robot position is repositioned accordingly, and the number of steps taken is increased.

- 4. The trajectory continues for the specified number of steps (num_steps) or until the robot reaches the environment boundary, which exceeds the size of the environment and is determined by the value of its x or y coordinates which is accurate and indicative

- 5. When the robot reaches the limit, the program terminates the journey and prints a message indicating the completion of the journey and the number of steps taken and the final position of the robot. When the robot reaches the limit, the program terminates the journey and prints a message indicating the completion of the journey and the number of steps taken and the final position of the robot.

In a random walk algorithm, an agent (in this case an autonomous navigation system) randomly decides (in this case the direction of movement) at each step without considering any specific goal or target Turn off and on While simple, random walk algorithms can provide valuable insights into the behaviour of systems and are fundamental to more complex guidance strategies.

I. Time Complexity:

- The time complexity of each random step is a constant, O(1), because it involves a simple arithmetic operation and a choice of directions

- The navigation loop runs for the specified number of steps (num_steps), resulting in a linear time complexity of O(num_steps).

- Therefore, the total time complexity of the program is O(num_steps).

J. Space Complexity:

- The spatial complexity of the project depends mainly on the size of the environment, which is represented by a 2D grid with the dimensions environment_size x environment_size

- Since the size of the environment is the only input parameter that affects the spatial complexity, the spatial complexity is O(environment_size^2).

- In addition, the program controls the robot’s position and the variable number of steps, each of which requires a constant position.

- Therefore, the total space complexity of the program is O(environment_size^2).

VI. STATE-OF-THE-ART APPROACHES IN AUTONOMOUS NAVIGATION

Advances in automated mobility have transcended traditional models, inspiring sophisticated approaches that allow machines to navigate and operate autonomously in ever-changing lateral environments multi-dimensional and participatory navigation that enables informed decision making. The core of cutting-edge approaches lies in their ability to overcome the limitations of traditional navigation systems. In terms of growth in automated mobility, the data reveals a remarkable increase in investment and adoption, with global spending on autonomous vehicle technology reaching $556 billion by 2026, on an annualized basis compounded growth rate (CAGR) of 39.47% .

A. Simultaneous Localization and Mapping (SLAM)

Simultaneous localization and mapping (SLAM) is an important technique in autonomous guidance that allows robots or vehicles to simultaneously map unknown locations, and calculate their position on this map Modern SLAM techniques use sensor fusion use, using data from sensors such as LiDAR, cameras and IMUs. Google's MapCar, for example, uses a combination of 2D and 3D mapping techniques, allowing robots to navigate tight terrain with accuracy and efficiency Used in automated vehicles so ensures local and map accuracy in real time, facilitating safe and reliable travel.

B. Deep Learning-Based Perception

Deep learning has changed the perception that vehicles can detect and interpret complex environmental cues. Convolutional Neural Networks (CNNs) process sensor data (e.g., images, point clouds) for object recognition, semantic classification, and visual understanding. The Tesla Autopilot system uses deep neural networks for real-time object detection and route planning, enhancing its ability to recognize pedestrians, vehicles and road signs, which is critical for safe navigation contained in various circumstances. Additionally, consumer surveys indicate growing confidence in autonomous vehicles, with 75% indicating a willingness to consider purchasing vehicles equipped with advanced technology based on deep learning in the next five years.

C. Reinforcement Learning in Control Policies

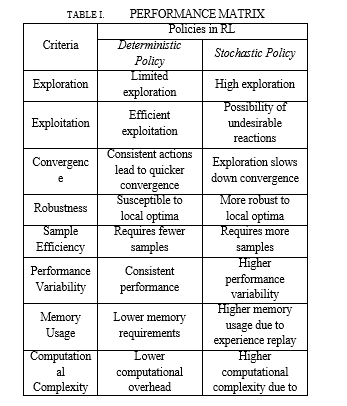

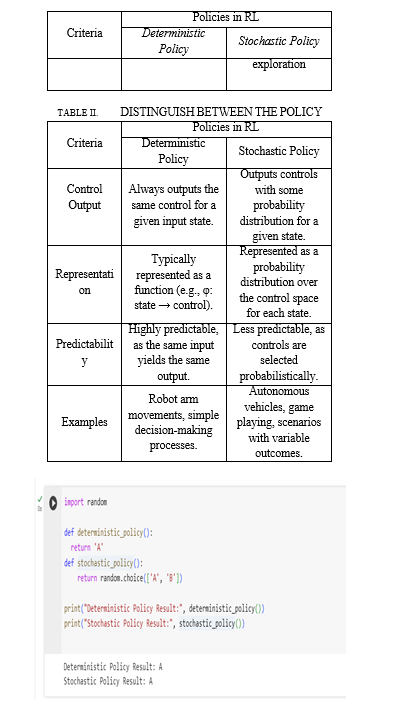

In the realm of reinforcement learning problems involving a finite set of states and controls, two primary types of policies can be established.

The program exhibits two different decision-making strategies: a deterministic policy, which consistently chooses a fixed action ('A') and a contingency policy, which introduces randomness through two possible actions ('A' or ‘B’) selectively. It shows the difference. This demonstration not only introduces the concepts of deterministic and contingency policies but also provides insights into how different strategies can affect decision outcomes in different situations.

VII. ADVANTAGES & DISADVANTAGES

A. Advantages

- Adaptability: An autonomous guidance system based on deep reinforcement learning (DRL) can adapt to changing environments and unpredictable obstacles, making it highly versatile under different circumstances in a dynamic environment. In an agricultural environment, an autonomous tractor guided by a DRL-based navigation system can adjust its course to avoid unexpected obstacles, such as rocks or fallen branches, while moving through fields These modifications ensure that the tractor can continue its operation without interruption, resulting in better harvesting or planting techniques.

- Efficiency: DRL-based navigation systems can optimize routing and decision-making processes over time, resulting in more efficient navigation routes and resource management. DRL-based guidance systems at distribution networks can dynamically adjust delivery routes based on real-time traffic conditions, weather forecasts and package priorities so This is adaptive to changing conditions and ensures that delivery vehicles use the most efficient routes to reach their destination.

- Real-time decision making: These systems can make real-time decisions based on sensory input and learned experiences, allowing them to react quickly to environmental changes and conditions which cannot be seen. During search and rescue operations in a disaster area, non-autonomous aircraft equipped with DRL-based navigation system can instantly adapt to changes in terrain, weather conditions, or they got they survived their presence. Using its learned experiences and reinforcement learning algorithms, the drone can quickly decide to change its altitude or heading to safely and effectively maneuver in a dynamic environment.

- Reduced human intervention: By relying on onboard sensors and algorithms, DRL-based guidance systems reduce the need for human intervention, reduces the risk to human operators and enables them to work in remote or dangerous areas

- Learning and Improvement: Independent management systems using DRL continually learn from their experience, enabling them to improve their performance and flexibility over time without explicit planning.

- Versatility: DRL-based navigation systems can be used in a variety of areas, including search and rescue operations, navigation, logistics and surveys, demonstrating usability application in many areas and is broadly applicable.

- Innovation possibilities: Continued improvements in DRL algorithms and technologies hold the promise of new innovations in autonomous navigation, opening the way for more sophisticated and capable systems in the future.

B. Disadvantages

- Complexity: Implementing DRL-based navigation systems requires expertise in machine learning, deep learning, and robotics, making them complex and challenging to develop and implement.

- Technical features: DRL algorithms typically require significant computational resources and processing power, limiting their potential in environments with embedded features or systems.

- Training data requirements: DRL-based navigation systems for training require a lot of data, including positive and negative experiences, which can be time-consuming and expensive to collect and interpret.

- Overfitting: There is a risk of overfitting in DRL algorithms, where the system learns to perform well on the training data but fails to generalize well to unseen areas or situations. In a self-driving car scenario, a DRL-based guidance system can be trained largely on data collected from specific locations or driving conditions, such as urban areas with road signs and internal signage clear but when the system has been deployed in a new location with different infrastructure or weather It may struggle to adapt and exhibit overly consistent behavior For example, if the system learned primarily to recognize some road signs often occurs in urban areas but is rare or absent in rural areas, can misinterpret roads or fail to navigate safely in unfamiliar areas, leading to potential accidents or navigation errors come by the way

- Safety Issues: Even with improvements, verifying the safety and reliability of DRL-based navigation systems remains a challenge, especially for high-priority applications such as autonomous vehicles in medical robotics.

- Ethical Considerations: An independent guidance system that raises ethical questions about responsibility, accountability and decision-making in situations where human life or property may be at risk. Ethical considerations in autonomous systems pose challenges in imposing responsibility in the event of an accident and in determining decision-making processes in critical situations, which require clear guidance for in terms of responsibility and risk priority

- Regulatory barriers: The implementation of autonomous guidance systems may face regulatory barriers and legal challenges related to compliance with liability, confidentiality, and security standards, hindering adoption and it has been widely used.

Conclusion

In conclusion, this paper explores the important role of deep reinforcement learning (DRL) in the development of the autonomous navigation field, especially in unstructured environments. Through a comprehensive review of DRL techniques and applications, we have highlighted the transformative potential of this technology in solving the complex challenges faced by autonomous systems. By using neural networks and reinforcement learning algorithms, practitioners can move energy into unpredictable environments, improving their decision-making processes over time. Results obtained through this research include a deeper understanding of the limitations of traditional navigation systems, exploration of DRL-based alternatives, and development of performance measures to provide various programs with wearable learning the characteristics of the intensity have been clarified Furthermore, the DRLs in an autonomous navigation system. Real-life cases and system-based solutions are presented to provide practical insights into the experiment. Looking to the future, there is a great opportunity for further developments in autonomous navigation systems. Future research will focus on refining the DRL algorithm for more efficient, robust, and adaptive navigation in different environments. Furthermore, the integration of emerging technologies such as simultaneous localization and mapping (SLAM) and mind-based deep learning can further enhance the capabilities of autonomous systems. Collaboration across sectors and continued experimentation with real-world applications will be key to unlocking the full potential of DRL in the future of shaping autonomous navigation system.

References

[1] Iqbal, J.; Islam, R.U.; Abbas, S.Z.; Khan, A.A.; Ajwad, S.A. Automating industrial tasks through mechatronic systems—A review of robotics in industrial perspective. Tech. Gaz. 2016, 23, 917–924. [2] Du, Y.; Hetherington, N.J.; Oon, C.L.; Chan, W.P.; Quintero, C.P.; Croft, E.; Van der Loos, H.M. Group Surfing: A Pedestrian-Based Approach to Sidewalk Robot Navigation. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6518–6524 [3] Hurtado, J.V.; Londoño, L.; Valada, A. From Learning to Relearning: A Framework for Diminishing Bias in Social Robot Navigation. Front. Robot. AI 2021, 8, 650325. [4] Zheng, J.; Mao, S.; Wu, Z.; Kong, P.; Qiang, H. Improved Path Planning for Indoor Patrol Robot Based on Deep Reinforcement Learning. Symmetry 2022, 14, 132. [5] Ko, B.; Choi, H.; Hong, C.; Kim, J.; Kwon, O.; Yoo, C. Neural network-based autonomous navigation for a homecare mobile robot. In Proceedings of the 2017 IEEE International Conference on Big Data and Smart Computing (BigComp), Jeju, Republic of Korea, 13–16 February 2017; pp. 403–406. [6] Zhang, K.; Niroui, F.; Ficocelli, M.; Nejat, G. Robot Navigation of Environments with Unknown Rough Terrain Using deep Reinforcement Learning. In Proceedings of the 2018 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Philadelphia, PA, USA, 6–8 August 2018; pp. 1–7 [7] Marin, P.; Hussein, A.; Gomez, D.; Escalera, A. Global and Local Path Planning Study in a ROS-Based Research Platform for Autonomous Vehicles. J. Adv. Transp. 2018, 2018, 6392697. [8] T. N. Larsen, H. Ø. Teigen, T. Laache, D. Varagnolo, and A. Rasheed, “Comparing deep reinforcement learning algorithms’ ability to safely navigate challenging waters,” Frontiers in Robotics and AI, vol. 8, 2021. [9] J. Schulman, S. Levine, P. Abbeel, M. Jordan, and P. Moritz, “Trust region policy optimization,” in International conference on machine learning. PMLR, 2015, pp. 1889–1897. [10] J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov, “Proximal policy optimization algorithms,” arXiv preprint arXiv:1707.06347, 2017. [11] T. P. Lillicrap, J. J. Hunt, A. Pritzel, N. Heess, T. Erez, Y. Tassa, D. Silver, and D. Wierstra, “Continuous control with deep reinforcement learning,” arXiv preprint arXiv:1509.02971, 2015. [12] S. Fujimoto, H. Hoof, and D. Meger, “Addressing function approximation error in actor-critic methods,” in International conference on machine learning. PMLR, 2018, pp. 1587–1596. [13] T. Haarnoja, A. Zhou, K. Hartikainen, G. Tucker, S. Ha, J. Tan, V. Kumar, H. Zhu, A. Gupta, P. Abbeel et al., “Soft actor-critic algorithms and applications,” arXiv preprint arXiv:1812.05905, 2018. [14] R. S. Sutton and A. G. Barto, Reinforcement learning: An introduction. MIT press, 2018. [15] D. Silver, G. Lever, N. Heess, T. Degris, D. Wierstra, and M. Riedmiller, “Deterministic policy gradient algorithms,” in International conference on machine learning. PMLR, 2014, pp. 387–395. [16] The Mathworks, Inc. Robotics System Toolbox. MATLAB. Natick, Massachusetts, United States. [Online]. Available: https://www.mathworks.com/products/robotics.html [17] The Mathworks, Inc. Reinforcement Learning Toolbox. MATLAB. Natick, Massachusetts, United States. [Online]. Available: https://www.mathworks.com/products/reinforcement-learning.html [18] S. Sivashangaran and A. Eskandarian, “XTENTH-CAR: A proportionally scaled experimental vehicle platform for connected autonomy and all-terrain research,” arXiv preprint arXiv:2212.01691, 2022. [19] G. Mehr, P. Ghorai, C. Zhang, A. Nayak, D. Patel, S. Sivashangaran, and A. Eskandarian, “X-CAR: An experimental vehicle platform for connected autonomy research,” IEEE Intelligent Transportation Systems Magazine, pp. 2–19, 2022.

Copyright

Copyright © 2024 Shifa Siraj Khan, Sanika Satish Lad, Anant Manish Singh. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Download Paper

Paper Id : IJRASET59534

Publish Date : 2024-03-28

ISSN : 2321-9653

Publisher Name : IJRASET

DOI Link : Click Here

Submit Paper Online

Submit Paper Online