Ijraset Journal For Research in Applied Science and Engineering Technology


Face Emotion Recognition System for Depression Detection Using AI Techniques

Authors: Sonali Singh, Prof. Navita Srivastava

DOI Link: https://doi.org/10.22214/ijraset.2024.57890


Abstract

The human face is an essential part of a person's body. It plays a crucial role in detecting and identifying emotions, since the face is where a person displays all of their fundamental emotions. Human emotions can help solve problems in many domains, such as healthcare, security, business, and education. This paper addresses depression detection in the mental health sector. Much of the world's population faces depression or stress, for many reasons and at different stages of life; because modern life is busy, people become depressed or stressed in their daily lives. Depression can arise from educational activities, competitive or challenging tasks, employment pressure, family circumstances, managing human relationships, health issues, old age, and other situations. This study proposes artificial intelligence and deep learning approaches to assess depression. The research can help employers and psychologists analyze mental health, for example when counselling patients. We propose a deep convolutional neural network (DCNN) model that classifies human facial emotions into two types, positive and negative. The model is trained and tested on the FER-2013 (Facial Expression Recognition) dataset, available in the Kaggle repository. The implementation uses the Keras, TensorFlow, and OpenCV Python packages. The results report emotion-detection accuracy for the training and test phases; the average accuracy achieved was 77%.


I. INTRODUCTION

Depression is now a major problem that people face at different stages of life, and it can have serious consequences. Much of the world's population experiences depression for many reasons, including today's busy pace of life. Stress that persists can lead to depression in the long run, and the number of suicides is increasing sharply because of uncontrolled stress levels. Depression or stress arises in educational activities, competitive or challenging tasks, work pressure, family circumstances, coping with human relationships, health disorders, old age, etc. [1].

Facial expression and emotion recognition has become especially important because it can capture people's behaviours, feelings, and intentions [2]. Emerging technologies such as machine learning (ML) and artificial intelligence (AI) have the potential to lead the automation revolution in today's technology. AI systems can identify emotions using motion and object detection: the computer monitors and records the position and motion of facial features such as the lips, eyes, and eyebrows, and then compares these movements with learned emotion patterns [3]. Neural networks (NNs), deep neural networks (DNNs), and convolutional neural networks (CNNs) provide new and improved techniques for addressing such complex problems [1]. Face identification using image processing has many applications in the developing world, and real-time cameras are promising for collecting data in real time. Image and video datasets are used in many fields, such as medical imaging, and large volumes of scanned image data can be analyzed with AI techniques.

Emotions reflect a person's state of mind and behaviour and can be studied over short time spans. Once faces are identified with computational methods, many other problems can be solved and new applications developed [4]. This study proposes an AI-based approach to depression diagnosis in which facial emotion analysis is used to gauge an individual's stress or depressive state. This strategy adapts well to detecting emotions and sadness, so it can be used with patients of any age or type [5]. Because the depression detection dataset consists of images or videos, the system uses a 2D CNN, a deep learning model built on convolution operations, for image or video recognition. The proposed deep convolutional neural network is trained to recognize a variety of human facial emotions with high accuracy. The network is evaluated on several criteria: training loss, validation (test) loss, training accuracy, and test accuracy. Deep neural networks are thus used to recognize facial expressions.

The objective is to determine the best way to apply facial expression recognition to depression detection in real-time learning environments. Improving the precision and efficiency of the emotion-detection algorithm is essential to achieving this aim [32].

A. Related Work

Babu and Kanaga [6] reviewed sentiment analysis of social media data for detecting depression or stress with a variety of artificial intelligence methods. Their analysis showed that many AI approaches have been used to determine emotions from social media content containing words, emoticons, and expressed emotions. Sentiment analysis research also shows that multi-class classification with deep learning algorithms achieves high accuracy [32].

Kalliopi Kyriakou of the Department of Geoinformatics at the University of Salzburg, Austria, and colleagues designed a stress detection system based on measurements from wearable physiological sensors. Using a rule-based system that combined galvanic skin response with skin temperature measured by a physiological sensor, they were able to identify stress [7].

Purnendu Shekhar Panday of BML Munjal University in Haryana, India, created a prototype that determines a person's stress level from changes in their heart rate over time. It recognizes the pattern of heart-rate changes and distinguishes whether a person is stressed or working out in the gym. When the server receives a heart-rate reading, it creates a scatter plot as a visualization, and a detector maps the readings to these stress markers [8].

Wang et al. (2008) extracted geometric features from 28 regions defined by 58 2D facial landmarks to characterize changes in facial expression. They used probabilistic classifiers to propagate probabilities frame by frame and build a probabilistic facial-expression profile; the results showed that depressed patients exhibit different facial-expression trends from healthy controls [9].

Ghayoumi [10] recently gave an overview of deep learning in FER; however, the review focused only on how deep learning differs from traditional approaches. Today, most FER models are built with convolutional neural networks (CNNs), which have outperformed other algorithms in accuracy even on a limited dataset (EmotiW). CNNs are not restricted to recognizing the expression of the whole face: with further refinement, they can identify specific facial regions of interest such as the lips, eyes, mouth, and eyebrows. On several datasets, including FER-2013, CK+, FERG, and JAFFE, CNNs perform even better than state-of-the-art models [12, 13]. Liu et al. [15] found CNNs more effective because they extract features automatically and need less input. They proposed a CNN-based model composed of three subnetworks with different architectures, which represents the Facial Emotion Recognition (FER) dataset more accurately [11].

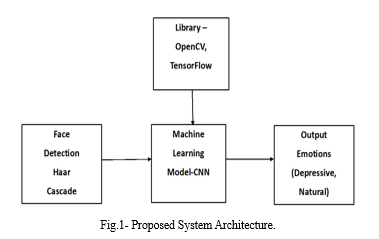

Other methods, such as Haar cascades, combine neural networks with eye and mouth cues for recognition, which gives a much better scheme for the proposed system. This study uses a CNN to recognize emotions or facial expressions automatically. The recognized facial expressions are then analyzed further to determine the patient's degree of depression. This study of the proposed AI methods points toward a new class of depression remedies. Fig. 1 shows the system architecture of the design.

II. DATASET

The dataset used for analysis in this article is the open-source FER-2013 dataset. It was first created for a project by Pierre-Luc Carrier and Aaron Courville and then shared publicly for a Kaggle competition. It consists of 35,887 grayscale 48 × 48 face images showing different emotions. Its labels fall into seven classes: 0 = Angry, 1 = Disgust, 2 = Fear, 3 = Happy, 4 = Sad, 5 = Surprise, 6 = Neutral. In this article, however, we regroup these classes into two: 0 = depressed and 1 = natural.
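As a minimal sketch of how the raw data can be read, the Python code below assumes the CSV layout of the common Kaggle release of FER-2013: columns `emotion`, `pixels`, and `Usage`, with the 2,304 pixel values of each 48 × 48 image stored as one space-separated string. The function names `parse_fer_row` and `load_fer_csv` are hypothetical helpers, not part of the authors' implementation.

```python
import csv
import io

# The seven FER-2013 classes listed above.
FER_LABELS = {0: "Angry", 1: "Disgust", 2: "Fear", 3: "Happy",
              4: "Sad", 5: "Surprise", 6: "Neutral"}

def parse_fer_row(row, size=48):
    """Convert one CSV row into (label, size x size pixel grid, usage split)."""
    label = int(row["emotion"])
    values = [int(v) for v in row["pixels"].split()]
    if len(values) != size * size:
        raise ValueError(f"expected {size * size} pixels, got {len(values)}")
    # Reshape the flat list row by row into a 2D grid of 0..255 grey levels.
    image = [values[r * size:(r + 1) * size] for r in range(size)]
    return label, image, row["Usage"]

def load_fer_csv(text):
    """Parse CSV text with the assumed header: emotion,pixels,Usage."""
    reader = csv.DictReader(io.StringIO(text))
    return [parse_fer_row(row) for row in reader]

# Usage with one synthetic all-black "Sad" image instead of the real file.
sample = "emotion,pixels,Usage\n4," + " ".join(["0"] * 48 * 48) + ",Training\n"
label, image, usage = load_fer_csv(sample)[0]
print(FER_LABELS[label], len(image), len(image[0]), usage)  # Sad 48 48 Training
```

For the real file, the same function would receive the file's text content; the per-row length check catches truncated or malformed pixel strings early.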

III. METHODOLOGY

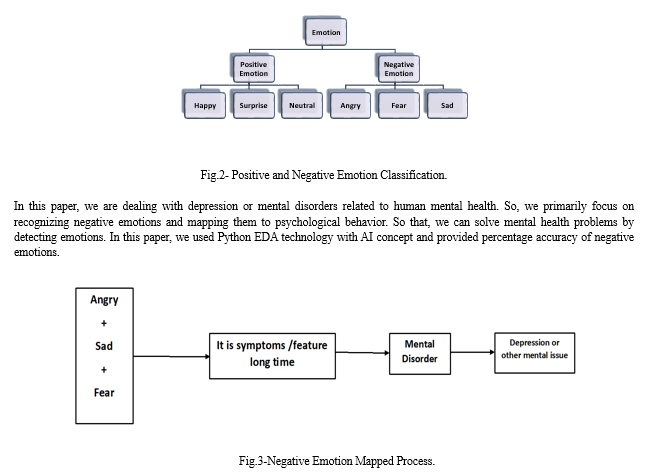

This section covers the process for identifying depressive feelings in image data. Ekman proposed six basic emotions: happiness, sadness, surprise, anger, fear, and disgust. Seung Jun and Dong-Keun Kim separated these emotions into positive and negative categories: contentment, astonishment, and neutral are examples of positive emotions, while melancholy, fury, terror, and others are negative emotions [17], [18]. Positive emotions are linked to better job performance and human health, whereas negative emotions, when influenced by a variety of conditions, lead to stress and impaired focus [19], [20]. For these reasons, it is reasonable to argue that positive emotions are very beneficial for human health, whereas negative ones are harmful and can point to a potentially hazardous physical or psychological state [21]. Effects on emotional health include depression, stress, anxiety, loneliness, mental breakdowns, and other related issues. To tackle this issue, we first identify the emotions on a human face and then map them to the psychology domain, where they correspond to similar symptoms of emotional stress or depression.
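The paper does not spell out exactly which FER-2013 classes are merged into each of its two labels. The sketch below uses one plausible reading of the positive/negative grouping above, purely as an assumption: Angry, Disgust, Fear, and Sad map to 0 = depressed, and Happy, Surprise, and Neutral map to 1 = natural. The helper names are hypothetical.

```python
from collections import Counter

# ASSUMED grouping; the paper does not list the exact mapping.
NEGATIVE = {0, 1, 2, 4}  # Angry, Disgust, Fear, Sad -> depressed
POSITIVE = {3, 5, 6}     # Happy, Surprise, Neutral -> natural
DEPRESSED, NATURAL = 0, 1

def to_binary_label(fer_label):
    """Map a 7-class FER-2013 label to the 2-class depressed/natural label."""
    if fer_label in NEGATIVE:
        return DEPRESSED
    if fer_label in POSITIVE:
        return NATURAL
    raise ValueError(f"unknown FER-2013 label: {fer_label}")

def class_balance(fer_labels):
    """Count how many samples fall into each binary class after remapping."""
    return Counter(to_binary_label(l) for l in fer_labels)

print([to_binary_label(l) for l in range(7)])  # [0, 0, 0, 1, 0, 1, 1]
print(class_balance([0, 3, 4, 6, 6]))          # Counter({1: 3, 0: 2})
```

Because the seven FER-2013 classes are unevenly represented, checking the resulting binary class balance before training is a sensible precaution under any grouping.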

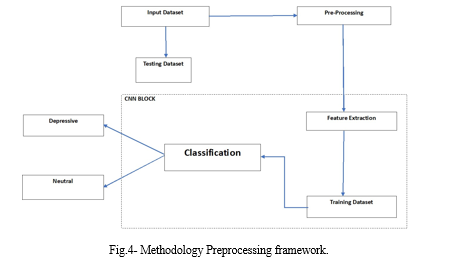

A. Methodology Preprocessing Framework

In this paper, we use Python and AI techniques to detect negative emotions. Classification then yields better solutions for classifying image and video data. We compare the detected negative emotions with the patient's possible condition, such as depression or stress, which also illustrates how human emotions can be analyzed for healthcare purposes. For depression detection, we use a methodological framework in which the data are preprocessed and the emotions are then classified. The entire methodological procedure is shown in the corresponding figure.
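The preprocessing stage is described only at a high level. The minimal sketch below assumes each sample is already a (binary label, 48 × 48 grey image, Usage) triple. It scales pixels to [0, 1], one-hot encodes the two classes for the categorical cross-entropy loss used in training, and splits by the `Usage` field; treating `Training` as training data and every other split as test data is an assumption. For live camera frames, grayscale conversion and resizing to 48 × 48 (for example with OpenCV's `cv2.cvtColor` and `cv2.resize`) would come first.

```python
def normalize(image):
    """Scale 0..255 grey levels to floats in [0, 1]."""
    return [[px / 255.0 for px in row] for row in image]

def one_hot(label, num_classes=2):
    """Encode an integer class as a one-hot vector for categorical cross-entropy."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} outside 0..{num_classes - 1}")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def preprocess(samples, num_classes=2):
    """Split (binary_label, image, usage) samples into train/test lists of
    (normalized_image, one_hot_label) pairs, using the Usage field."""
    train, test = [], []
    for label, image, usage in samples:
        pair = (normalize(image), one_hot(label, num_classes))
        (train if usage == "Training" else test).append(pair)
    return train, test

# Usage with two synthetic 48 x 48 images.
white = [[255] * 48 for _ in range(48)]
black = [[0] * 48 for _ in range(48)]
train, test = preprocess([(0, white, "Training"), (1, black, "PublicTest")])
print(len(train), len(test), train[0][0][0][0], test[0][1])  # 1 1 1.0 [0.0, 1.0]
```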

B. Tools and Technologies

In this research, we employed a variety of methods and technologies to classify and recognize emotions.

- OpenCV: OpenCV is a free and open-source computer vision and machine learning software library. To speed the introduction of artificial intelligence into products, OpenCV has been built as a common infrastructure for computer vision applications. The library contains about 2500 optimized algorithms, encompassing a broad variety of classic and cutting-edge computer vision and machine learning approaches [17].

- TensorFlow: TensorFlow is a free and open-source software library for building and running machine learning models. This comprehensive open-source machine learning platform focuses mainly on deep neural networks, and deep learning is used to analyze huge volumes of poorly structured data. TensorFlow provides Python APIs for building high-performance applications [18].

- Keras: Keras is the high-level API of the TensorFlow platform. It offers a user-friendly, high-productivity interface for solving machine learning (ML) problems, with an emphasis on modern deep learning. Keras covers every step of the machine learning workflow, from data processing to hyperparameter tuning to deployment. It was designed to enable fast experimentation [19].

- Convolutional Neural Network: Convolutional neural networks (CNNs) are deep learning networks designed to interpret structured data such as images. They are the state of the art in many visual applications, including image classification, and are used widely, if not exclusively, in computer vision. A CNN's power comes from a special kind of layer called the convolutional layer; a CNN is a feedforward network that may stack up to 20 or 30 layers [20].
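To make the convolutional-layer description concrete, here is a minimal pure-Python sketch of the operation a single filter performs. Like the convolution layers in Keras and TensorFlow, it computes a cross-correlation (the kernel is not flipped), here with no padding and stride 1. The function name and the toy edge-detecting kernel are illustrative only.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the
    feature map of weighted sums (cross-correlation, as in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out = []
    for i in range(ih - kh + 1):
        row = []
        for j in range(iw - kw + 1):
            acc = 0.0
            for m in range(kh):
                for n in range(kw):
                    acc += image[i + m][j + n] * kernel[m][n]
            row.append(acc)
        out.append(row)
    return out

# A dark-to-bright vertical edge and a vertical-edge kernel.
image = [[0, 0, 1, 1, 1] for _ in range(3)]
kernel = [[-1, 0, 1] for _ in range(3)]
print(conv2d_valid(image, kernel))  # [[3.0, 3.0, 0.0]]
```

The filter responds strongly where the edge lies and gives zero on the uniform region. On a 48 × 48 FER-2013 image, a 3 × 3 filter applied this way yields a 46 × 46 feature map, and a layer with 64 filters stacks 64 such maps.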

C. Proposed Model

A neural network is a set of algorithms designed to imitate the structure and function of the human brain. It finds patterns in data and uses those patterns to solve problems. In convolutional neural networks, convolution is the mathematical operation that slides a small filter over the input and computes weighted sums, so that each output value measures how strongly a local patch matches the filter's pattern [2], [21], [22]. Traditional neural networks struggle with complex problems such as pattern recognition and image and video classification, whereas convolutional neural networks perform very well in these applications and achieve excellent accuracy [33].
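In symbols, using the cross-correlation form that deep learning libraries implement under the name "convolution", one filter $K$ of size $k \times k$ with bias $b$ applied to an input $I$ produces the feature map $S$. The spatial output size then follows from the input size $H$, padding $p$, and stride $s$. The notation is standard rather than taken from the paper, and the worked numbers assume the 48 × 48 input and 3 × 3 filters described below with no padding.

```latex
S(i, j) = \sum_{m=0}^{k-1} \sum_{n=0}^{k-1} I(i+m,\, j+n)\, K(m, n) + b

H_{\text{out}} = \left\lfloor \frac{H - k + 2p}{s} \right\rfloor + 1,
\qquad H = 48,\ k = 3,\ p = 0,\ s = 1 \;\Rightarrow\; H_{\text{out}} = 46
```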

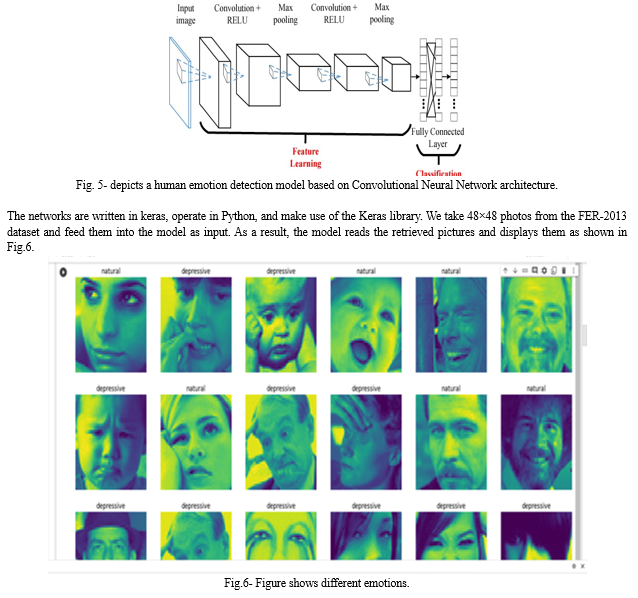

A convolutional neural network is made up of four types of layers: convolutional layers, rectified linear units (ReLUs), pooling layers, and fully connected layers. Together, these layers extract features from the input images. Each convolutional filter learns to represent one feature that the algorithm extracts from the input images [23].
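The ReLU and pooling stages can be sketched the same way. This minimal example, an illustration rather than the authors' code, applies ReLU element-wise (negative values become zero) and then 2 × 2 max pooling with stride 2, which keeps the strongest response in each window and halves each spatial dimension of a feature map.

```python
def relu(feature_map):
    """Element-wise rectified linear unit: max(0, x)."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window and stride = size."""
    h, w = len(feature_map), len(feature_map[0])
    return [
        [max(feature_map[i + m][j + n] for m in range(size) for n in range(size))
         for j in range(0, w - size + 1, size)]
        for i in range(0, h - size + 1, size)
    ]

fm = [[-1, 2, 0, -3],
      [4, -5, 1, 1],
      [0, 0, -2, 7],
      [3, -1, 6, 0]]
print(max_pool2d(relu(fm)))  # [[4, 1], [3, 7]]
```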

The figure depicts the two mood classes. The network takes a 48 × 48 input, and training aims to increase accuracy and reduce loss in the final model. The first convolutional layer has 64 filters of size 3 × 3 [2]. It is followed by two additional convolutional layers, a max pooling layer, a flattening layer, a local contrast normalization layer, and another max pooling layer. ReLU (rectified linear unit) is the activation function, and dropout with a rate of 0.4 is used to reduce overfitting. A softmax function follows after the first and second layers have reduced the model's dimensionality. To obtain a more accurate model, we also train with the Adam optimizer.
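Because the text only partly specifies the layer stack, the following sketch traces output shapes and parameter counts for a simplified, assumed stack: three 3 × 3 convolutions with 64 filters each and no padding (only the first layer's 64 filters are stated; the rest are assumptions), one 2 × 2 max pooling layer, flattening, and a two-unit softmax output for the two classes. Dropout and local contrast normalization do not change tensor shapes, so they are left out of the trace. The helper names are hypothetical.

```python
def conv2d_shape(h, w, c_in, filters, k, stride=1):
    """Output shape and parameter count of an unpadded ('valid') conv layer."""
    out_h = (h - k) // stride + 1
    out_w = (w - k) // stride + 1
    params = (k * k * c_in + 1) * filters  # weights plus one bias per filter
    return (out_h, out_w, filters), params

def maxpool_shape(h, w, c, pool=2):
    """Non-overlapping pooling divides each spatial dimension by `pool`."""
    return (h // pool, w // pool, c), 0

def trace(input_shape=(48, 48, 1), num_classes=2):
    """Return (layer name, output shape, trainable parameters) for the ASSUMED stack."""
    h, w, c = input_shape
    rows = []
    for name in ("conv1", "conv2", "conv3"):
        (h, w, c), p = conv2d_shape(h, w, c, filters=64, k=3)
        rows.append((name, (h, w, c), p))
    (h, w, c), p = maxpool_shape(h, w, c)
    rows.append(("maxpool", (h, w, c), p))
    flat = h * w * c
    rows.append(("flatten", (flat,), 0))
    rows.append(("dense_softmax", (num_classes,), (flat + 1) * num_classes))
    return rows

for name, shape, params in trace():
    print(f"{name:14s} {str(shape):16s} {params}")
```

The trace shows how quickly the parameter count of the final dense layer grows with the flattened size, which is one reason pooling and dropout matter for a 48 × 48 input.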

IV. RESULT AND DISCUSSION

The model is implemented in Python and run in Google Colab or Jupyter Notebook. Model construction, compilation, and fitting use convolutional neural network layers from Keras, which runs on TensorFlow as the deep learning library. Scikit-learn is used to compute the confusion matrix, from which the model's accuracy, precision, specificity, recall, and other metrics are derived. The confusion matrix and other plots, such as loss and precision plots, are drawn with Matplotlib and Seaborn. The CNN is trained on the FER image dataset with the Adam optimizer and categorical cross-entropy as the loss function. ReLU and softmax are the activation functions. Adam is used with a learning rate of 0.01 for 40 epochs. Figures 1 and 5 show the CNN model. After running the OpenCV model, facial expressions and emotions are detected as shown in Figure 6, which indicates the two main emotions recognized: depressed and normal. Figure 7 presents the facial expression analysis model built with Keras, and Figure 8 shows the accuracy of the Keras model. During training, we save the model so that it can be used in the web application we created to evaluate it; after training, the application loads the stored trained model and uses it to recognize human emotions in a collection of pictures.
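The evaluation quantities named above follow directly from the confusion matrix and the loss definition. The sketch below assumes class 0 (depressed) is treated as the positive class; it computes accuracy, precision, recall, and specificity from true and predicted labels, plus the softmax output and categorical cross-entropy loss for one sample. In practice scikit-learn's `confusion_matrix` and Keras's built-in categorical cross-entropy loss would be used; this pure-Python version, with hypothetical helper names, only shows the arithmetic.

```python
import math

def confusion_counts(y_true, y_pred, positive=0):
    """Return (TP, FP, TN, FN), treating `positive` (0 = depressed) as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, fp, tn, fn

def metrics(tp, fp, tn, fn):
    def ratio(a, b):
        return a / b if b else 0.0
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),        # also called sensitivity
        "specificity": ratio(tn, tn + fp),
    }

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """-sum_c y_c * log(p_c); eps guards against log(0)."""
    return -sum(y * math.log(max(p, eps)) for y, p in zip(one_hot, probs))

y_true = [0, 0, 1, 1, 0, 1]
y_pred = [0, 1, 1, 1, 0, 0]
counts = confusion_counts(y_true, y_pred)
print(counts)  # (2, 1, 2, 1)
print(metrics(*counts))
probs = softmax([2.0, 0.0])
print(round(categorical_cross_entropy([1.0, 0.0], probs), 4))  # 0.1269
```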

V. FUTURE WORK

Because the implementation is modular, the algorithms can easily be updated and the work can be continued in the future. The emotion detection approach could also be extended to detect depression or stress from videos showing negative emotions and from negative vocal cues, using audio-processing techniques.

Conclusion

In this paper, we proposed a multi-layer convolutional neural network for human facial expression extraction and emotion recognition aimed at depression detection. The model classifies human facial expressions from the 35,887-image FER dataset into two classes, depressed and natural. Its training and test accuracies are similar, indicating that it fits the data well and generalizes to unseen data. Detecting depression or stress from facial expressions can help people fight mental illness and improve their overall well-being, and deep learning provides effective accuracy for stress prediction. The prediction network was built with open-source tools, namely TensorFlow, Google Colab, and several Python libraries, and a convolutional network was trained on the labelled image data. The resulting system achieves a facial emotion recognition accuracy of 77% for detecting depression or stress.

References

[1] Daniel Nixon et al., A novel AI therapy for depression counseling using face emotion techniques, Global Transitions Proceedings, 3 April 2022, DOI: 10.1016/j.gltp.2022.03.008.

[2] Sivakumar Depuru et al., Human Emotion Recognition System Using Deep Learning Technique, Journal of Pharmaceutical Negative Results, Volume 13, Issue 4, 2022, DOI: 10.47750/pnr.2022.13.04.141.

[3] Edwin Lisowski, AI Emotion Recognition: Can AI Guess Emotions?, https://addepto.com/blog/ai-emotion-recognition-can-ai-guess-emotions/

[4] Taejae Jeon et al., Stress Recognition using Face Images and Facial Landmarks, IEEE Xplore.

[5] José Almeida and Fátima Rodrigues, Facial Expression Recognition System for Stress Detection with Deep Learning, International Conference on Enterprise Information Systems (ICEIS 2021), DOI: 10.5220/0010474202560263.

[6] N.V. Babu and E.G.M. Kanaga, Sentiment Analysis in Social Media Data for Depression Detection Using Artificial Intelligence: A Review, 2021, DOI: 10.1007/s42979-021-00958-1.

[7] A Phani Sridhar et al., Human Stress Detection using Deep Learning, International Journal of Progressive Research in Engineering Management and Science (IJPREMS), Vol. 03, Issue 04, April 2023, pp. 428-435.

[8] Purnendu Shekhar Panday, Machine Learning and IoT for Prediction and Detection of Stress, IEEE, 2017.

[9] Weitong Guo et al., Deep Neural Network for Depression Recognition Based on 2D and 3D Facial Expressions Under Emotional Stimulus Tasks, Front. Neurosci., Volume 15, 23 April 2021, DOI: 10.3389/fnins.2021.609760.

[10] M. Ghayoumi, A Quick Review of Deep Learning in Facial Expression, J. Communication and Computer, 2017.

[11] Divya M.N. et al., Smart Teaching Using Human Facial Emotion Recognition (FER) Model, Turkish Journal of Computer and Mathematics Education, Vol. 12, 10 May 2021.

[12] S. Minaee and Abdolrashidi, Deep-Emotion: Facial Expression Recognition Using Attentional Convolutional Network, Feb. 2019, https://doi.org/10.48550/arXiv.1902.01019

[13] M.M. Taghi Zadeh et al., Fast Facial Emotion Recognition Using Convolutional Neural Network and Gabor Filters, March 2019, DOI: 10.1109/KBEI.2019.8734943.

[14] P.R. Dachapally, Facial Emotion Detection Using Convolutional Neural Networks and Representational Autoencoder Units, 2017.

[15] T.D. Sanger, Optimal Unsupervised Learning in Feed Forward Neural Networks, Massachusetts Institute of Technology, Vol. 2, pp. 459-473, 1989.

[16] D. Yang et al., An Emotion Recognition Model Based on Facial Recognition in Virtual Learning Environment, 6th International Conference on Smart Computing and Communications, Procedia Computer Science 125 (2018) 2-10.

[17] Great Learning Team, OpenCV Tutorial: A Guide to Learn OpenCV in Python, August 14, 2023.

[18] Ahmed Fawzy Gad, TensorFlow: A Guide to Build Artificial Neural Networks using Python, University of Ottawa, December 2020, http://www.researchgate.net/publication/3218260203.

[19] Simplilearn, What is Keras: The Best Introductory Guide to Keras, July 20, 2023, http://www.simplilearn.com/tutorials/deep-learning-tutorial/what-is-keras.

[20] Anirudha Ghosh et al., Fundamental Concept of Convolutional Neural Network, ResearchGate, January 2020, http://www.researchgate.net/publication/33740116/.

[21] Zixuan Shangguan et al., Dual-Stream Multiple Instance Learning for Depression Detection with Facial Expression Videos, IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 31, 2023.

[22] C. J. L. Flores et al., "Application of convolutional neural networks for static hand gestures recognition under different invariant features," 2017 IEEE XXIV International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Cusco, 2017, pp. 1-4.

[23] Siva Kumar Depuru and K. Madhavi, Autoencoder Integrated Deep Neural Network for Effective Analysis of Malware in Distributed Internet of Things (IoT) Devices, The International Journal of Analytical and Experimental Modal Analysis, Volume XI, Issue XII, December 2019, pp. 226-232.

[24] John Aravindhar, Mental Health Monitoring System Using Facial Recognition, PEN Test and IQ Test, April 26, 2021, DOI: https://doi.org/10.21203/rs.3.rs-430144/v1.

[25] Xiaofeng Lu, Deep Learning Based Emotion Recognition and Visualization of Figural Representation, Volume 12, 6 January 2022, DOI: 10.3389/fpsyg.2021.818833.

[26] Amey Chede et al., Emotion Recognition and Depression Detection Using Deep Learning, IJARIIE, ISSN(O) 2395-4396, Vol. 9, Issue 3, 2023.

[27] Jeffrey F. Cohn et al., Detecting Depression from Facial Actions and Vocal Prosody, 2009, PA, USA.

[28] Suha Khalil Assayed et al., Psychological Emotion Recognition of Students Using Machine Learning Based Chatbot, International Journal of Artificial Intelligence and Applications (IJAIA), Vol. 14, No. 2, March 2023, DOI: 10.5121/ijaia.2023.14203.

[29] Shrey Modi et al., Facial Emotion Recognition using Convolution Neural Network, Proceedings of the Fifth International Conference on Intelligent Computing and Control Systems (ICICCS 2021), IEEE Xplore Part Number: CFP21K74-ART; ISBN: 978-0-7381-1327.

[30] Sara Aristizabal et al., "The feasibility of wearable and self-report stress detection measures in a semi-controlled lab environment," IEEE Access 9 (2021): 102053-102068.

[31] Kuttala et al., Multimodal Hierarchical CNN Feature Fusion for Stress Detection, IEEE Access (2023).

[32] Aditya Saxena et al., A Deep Learning Approach for the Detection of COVID-19 from Chest X-Ray Images using Convolutional Neural Networks, Birla Institute of Technology and Science Pilani, Dubai Campus.

[33] Danfeng Xie et al., Deep Learning in Visual Computing and Signal Processing, Applied Computational Intelligence and Soft Computing, 2017, Article ID 1320780, https://doi.org/10.1155/2017/1320780.

Copyright

Copyright © 2024 Sonali Singh, Prof. Navita Srivastava. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET57890

Publish Date : 2024-01-05

ISSN : 2321-9653

Publisher Name : IJRASET
