Ijraset Journal For Research in Applied Science and Engineering Technology

Facial Emotion Recognition using Convolutional Neural Networks

Authors: Arvind R, Dhanush Gowda S P, Bhaskar Prajapati, Ikram Nawaz Khan, Prof. Swaathie V

DOI Link: https://doi.org/10.22214/ijraset.2022.41536

Abstract

Humans use facial expressions to communicate their emotions, which makes them a powerful tool in communication. Because facial expressions are crucial to nonverbal communication, recognizing them is one of the most difficult and important challenges in social communication. Facial Expression Recognition (FER) is an important research topic in Artificial Intelligence, with many recent studies employing Convolutional Neural Networks (CNNs). The emotions shown in a face image have a significant impact on judgments and discussions on a variety of topics. According to psychological theory, a person's emotional states can be classified into six basic categories: surprise, fear, disgust, anger, happiness, and sadness. Automated identification of these emotions from facial images is useful in human-computer interaction and a variety of other applications. Deep neural networks, in particular, are capable of learning complex features and classifying the derived patterns. This paper presents a deep learning-based framework for human emotion recognition. The proposed framework extracts features with Gabor filters before classifying them with a CNN. According to the experimental results, the proposed technique improves both the speed of CNN training and the recognition accuracy.

Introduction

I. INTRODUCTION

Emotion can also be observed through the electroencephalogram (EEG), electrocardiogram (ECG), and other physiological signals. The input data is a picture, often called a test image. Consider a picture with many people in it. The individual faces are extracted by surrounding each person's face with a bounding box. The extracted faces show a variety of orientations, such as turned 45 degrees to the left or right, or tilted, so face alignment is required. The resulting faces are called aligned faces. A variety of features can then be extracted from them, for example the eyes, nose, and lips. When retrieved through feature extraction, these features are referred to as feature vectors. The test image is now represented by a feature vector, which can be matched against the database. The whole model is trained using the database shown in Fig. 1, for which the faces and features have already been extracted. The features of the test image are then compared with those in the database and examined.
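The matching step described above can be illustrated with a minimal sketch. It assumes that feature vectors are plain lists of numbers and that matching means finding the nearest database entry by Euclidean distance; the paper does not specify the distance measure, and the function names and the toy database below are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_feature_vector(query, database):
    """Return (label, distance) of the database entry closest to `query`.

    `database` is a list of (label, feature_vector) pairs.
    Nearest-neighbour matching is an assumption, not the paper's stated method.
    """
    if not database:
        raise ValueError("database is empty")
    best_label, best_vec = min(database, key=lambda item: euclidean(query, item[1]))
    return best_label, euclidean(query, best_vec)


# Toy database with hypothetical 3-dimensional feature vectors (illustration only).
toy_database = [
    ("happy", [0.9, 0.1, 0.3]),
    ("sad", [0.1, 0.8, 0.2]),
    ("surprise", [0.4, 0.2, 0.9]),
]

label, dist = match_feature_vector([0.85, 0.15, 0.25], toy_database)
print(label, round(dist, 4))
```

In a real system the vectors would come from the feature extraction stage and would have many more dimensions; the comparison logic stays the same.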

Emotions play a significant role in our daily lives. Emotional and facial expressions are used to establish communication between individuals. There is currently a lot of interest in enhancing human-computer interaction.

According to psychological theory, human emotions fall into six types: surprise, fear, disgust, anger, pleasure, and sadness. A person expresses these emotions by moving his or her facial muscles. Deep neural networks have recently demonstrated their ability to model complex patterns. This paper describes a deep learning-based method for human emotion identification. The proposed method uses Gabor filters for feature extraction, followed by a convolutional neural network for classification, with the aim of achieving the highest accuracy.

When confronted with an external stimulus, the human nervous system develops a corresponding subjective attitude and communicates emotions through a variety of channels, including the face, voice, speech, gait, and body language.

II. LITERATURE SURVEY

Charles Darwin recognized the value of emotion recognition in "The Expression of the Emotions in Man and Animals" [8]. This work heavily influenced the study of emotional states.

Emotion recognition has grown in prominence as a result of its varied uses, such as detecting a sleepy driver by means of emotion recognition algorithms [9]. Corneanu et al., Matsugu et al., and Viola et al. [8,10,11] employed multimodal approaches to provide the main classification for emotion recognition. They mostly discussed how to recognize emotions and which techniques to use. The options covered included facial localization using detection and segmentation algorithms such as Convolutional Neural Networks (CNN) and Support Vector Machines (SVM). Alongside these strategies, Corneanu et al. focused on the classification of emotion recognition by taking into account two main components: parametrization and facial expression recognition. In their study, parametrization was used to relate the identified emotions, while facial expression recognition was performed using algorithms such as Viola-Jones. The study also evaluates additional techniques such as CNN [12] and SVM [13], and it concludes by demonstrating that CNN outperforms the Viola-Jones technique in terms of accuracy.

The first facial emotion recognition model was created by Matsugu et al. [10]. The developed system was described as "sturdy" and "subject-independent." To find local changes between neutral and expressive faces, they used a CNN model. Rather than two CNN models as in Fasel's model [14], a single CNN structure was used. Fasel's model featured two separate CNNs, one for facial expression recognition and the other for determining the identity of a person's face, with a Multi-Layer Perceptron (MLP) used to combine them. The research was carried out using a variety of photos and yielded a 97.6% accuracy rate for 5600 still photographs of ten people.

Tanaya et al. used a curvelet-based feature selection method. They exploited the curvelet's representation of discontinuities in 2D functions. As part of their work, they converted the pictures to grayscale. These images were then reduced to 256 resolutions, then 16 resolutions, and finally 8 and 4 resolutions, and the curvelet was used to train the procedure. The reason for taking this route was that if a person's face was not recognized in the image at first, the bigger curves available at lower bit resolutions would be used to identify it. On diverse datasets, the One-Against-All (OAA) Support Vector Machine methodology was used to compare the results of wavelet-based and curvelet-based approaches, with the curvelet method proving superior to the wavelet method.
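The grayscale conversion and reduction to fewer gray levels described above can be sketched as follows. This is a minimal illustration under stated assumptions: the luma weights (ITU-R BT.601) and the even binning scheme are common choices that the survey does not confirm, the function names are hypothetical, and the curvelet transform itself is not shown.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples (0-255) to rows of gray values (0-255).

    Uses ITU-R BT.601 luma weights; this choice is an assumption.
    """
    return [
        [int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
        for row in rgb_image
    ]


def quantize(gray_image, levels):
    """Map each 8-bit gray value to a bin index in 0 .. levels - 1."""
    if not 1 <= levels <= 256:
        raise ValueError("levels must be between 1 and 256")
    return [[(pixel * levels) // 256 for pixel in row] for row in gray_image]


# Toy 2x2 RGB image (illustration only).
toy_rgb = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 0, 255)],
]

gray = to_grayscale(toy_rgb)
# Progressively coarser versions of the same image, as in the text: 256, 16, 8, 4.
pyramid = {levels: quantize(gray, levels) for levels in (256, 16, 8, 4)}
for levels, image in pyramid.items():
    print(levels, image)
```

With fewer levels, fine intensity differences collapse into the same bin, so only the coarser structures of the face remain distinguishable.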

III. PROPOSED SYSTEM

From a person's facial expression, we can predict whether they are surprised, scared, disgusted, furious, happy, hurt, or sad. Facial emotion recognition is useful for companies that want to recognize the moods of their employees.

This can help in assessing employees' stress levels and how they handle pressure, since employees under stress sometimes end up in a critical situation. To address these issues, a deep learning-based approach for human emotion identification was developed. With it, we can predict whether an employee is in pain, so that the concerned authorities can be informed quickly and a critical situation avoided.

IV. METHODOLOGY

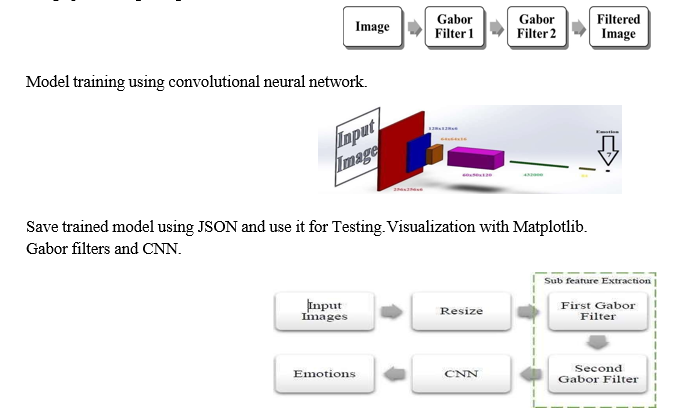

Image processing using Gabor filter.
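As a rough illustration of Gabor-based feature extraction, the sketch below builds the real part of a Gabor kernel from its standard definition, a Gaussian envelope multiplied by a cosine carrier, and applies a small bank of orientations to a toy image. The parameter values, function names, and toy image are assumptions chosen for illustration; the paper does not state its filter settings, and the CNN stage is not shown.

```python
import math


def gabor_kernel(size, sigma, theta, lambd, gamma=0.5, psi=0.0):
    """Real part of a Gabor kernel as a size x size list of lists (size must be odd).

    sigma: Gaussian envelope width, theta: orientation in radians,
    lambd: carrier wavelength, gamma: aspect ratio, psi: phase offset.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates by theta.
            x_r = x * math.cos(theta) + y * math.sin(theta)
            y_r = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * x_r / lambd + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


def filter2d_valid(image, kernel):
    """Cross-correlate `image` with a square `kernel`, without padding."""
    k = len(kernel)
    height, width = len(image), len(image[0])
    out = []
    for i in range(height - k + 1):
        out_row = []
        for j in range(width - k + 1):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    acc += image[i + u][j + v] * kernel[u][v]
            out_row.append(acc)
        out.append(out_row)
    return out


# Toy 7x7 image with a vertical edge (left half dark, right half bright).
toy_image = [[0.0] * 3 + [1.0] * 4 for _ in range(7)]

# Hypothetical bank of four orientations: 0, 45, 90 and 135 degrees.
bank = [gabor_kernel(5, 2.0, i * math.pi / 4, 4.0) for i in range(4)]
responses = [filter2d_valid(toy_image, kernel) for kernel in bank]
print(len(responses), len(responses[0]), len(responses[0][0]))
```

Each response map highlights structures at one orientation; stacking the maps gives a multi-channel input that a CNN could then classify.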

V. ACKNOWLEDGMENT

I would like to thank the many people, as well as HKBK College of Engineering, who helped and supported me during my graduate education. In particular, I want to express my gratitude to Prof. Swaathie V for her enthusiasm, patience, thoughtful observations, practical assistance, and ideas, all of which considerably assisted me during my studies. Her wide knowledge, deep competence, and professional abilities in Quality Control enabled me to complete my research successfully. This endeavor might not have been feasible without her assistance and instruction, and I could not have asked for a finer guide. I would also like to express my gratitude to Visvesvaraya Technological University for admitting me to the degree program. Finally, I extend my appreciation to the whole staff at my college.

Conclusion

The accuracy of each technique can be determined from the results. Face detection accuracy is highest with LDA (96.25%) and lowest with CNN (93%). The accuracy of the image processing technique may therefore be improved by combining several techniques. This is a foundational study for future research into improving the performance of face-unlock devices, applying machine learning to higher-security systems, and increasing the algorithms' tolerance. The same findings should be obtainable on a variety of non-standard datasets. This study reviews and analyses the definition of emotion as well as the state of the art in unimodal emotion recognition from dynamic data, including facial expression recognition, speech emotion recognition, and textual emotion recognition. In addition, this paper summarizes the corresponding benchmark datasets, metrics, and performance figures to clarify the development trend of research on emotion recognition. Finally, we present the open research challenges and future directions to enrich research in this field.

References

[1] Shukla, S. Petridis, and M. Pantic, “Does visual self-supervision improve learning of speech representations for emotion recognition,” IEEE Transactions on Affective Computing, pp. 1–1, 2021.

[2] J. Han, Z. Zhang, Z. Ren, and B. W. Schuller, “Emobed: Strengthening monomodal emotion recognition via training with crossmodal emotion embeddings,” IEEE Transactions on Affective Computing, 2019.

[3] S. Latif, R. Rana, S. Khalifa, R. Jurdak, J. Epps, and B. W. Schuller, “Multi-task semi-supervised adversarial autoencoding for speech emotion recognition,” IEEE Transactions on Affective Computing, 2020.

[4] W. Shen, J. Chen, X. Quan, and Z. Xie, “Dialogxl: All-in-one xlnet for multi-party conversation emotion recognition,” in Thirty-Fifth AAAI Conference on Artificial Intelligence, AAAI 2021, Virtual Event, February 2-9, 2021, 2021, pp. 13789–13797.

L. Zheng, A. Bl, and C. Jtab, “Decn: Dialogical emotion correction network for conversational emotion recognition,” Neurocomputing.

[5] R. Plutchik, “The nature of emotions: Human emotions have deep evolutionary roots,” 2001.

[6] J. A. Russell, “A circumplex model of affect,” Journal of Personality and Social Psychology, vol. 39, no. 6, pp. 1161–1178, 1980.

[7] A. Mehrabian, “Pleasure-arousal-dominance: A general framework for describing and measuring the individual differences in temperament,” Current Psychology, vol. 14, no. 4, pp. 261–292, 1996.

[8] P. Viola and M. Jones, “Rapid object detection using a boosted cascade of simple features,” in Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, vol. 1, 2001, pp. I–I.

[9] X. Zhu and D. Ramanan, “Face detection, pose estimation, and landmark localization in the wild,” in Computer Vision and Pattern Recognition (CVPR), 2012 IEEE Conference on, 2012.

[10] D. Deng, Y. Zhou, J. Pi, and B. E. Shi, “Multimodal utterance-level affect analysis using visual, audio and text features,” 2018.

[11] Z. Zheng, C. Cao, X. Chen, and G. Xu, “Multimodal emotion recognition for one-minute-gradual emotion challenge,” 2018.

[12] A. Triantafyllopoulos, H. Sagha, F. Eyben, and B. Schuller, “audeering’s approach to the one-minute-gradual emotion challenge,” 2018.

[13] O. M. Parkhi, A. Vedaldi, and A. Zisserman, “Deep face recognition,” in British Machine Vision Conference, 2015.

[14] J. Chung, C. Gulcehre, K. H. Cho, and Y. Bengio, “Empirical evaluation of gated recurrent neural networks on sequence modeling,” Eprint Arxiv, 2014.

[15] S. Zhang, X. Zhao, and Q. Tian, “Spontaneous speech emotion recognition using multiscale deep convolutional lstm,” IEEE Transactions on Affective Computing, pp. 1–1, 2019.

[16] Mustaqeem and S. Kwon, “Att-net: Enhanced emotion recognition system using lightweight self-attention module,” Applied Soft Computing, vol. 102, no. 4, 2021.

[17] R. Chatterjee, S. Mazumdar, R. S. Sherratt, R. Halder, T. Maitra, and D. Giri, “Real-time speech emotion analysis for smart home assistants,” IEEE Transactions on Consumer Electronics, vol. 67, no. 1, pp. 68–76, 2021.

[18] A. Sl, B. Xx, B. Wf, C. Bc, and B. Pf, “Spatiotemporal and frequential cascaded attention networks for speech emotion recognition,” Neurocomputing, 2021.

[19] S. Khorram, M. McInnis, and E. Mower Provost, “Jointly aligning and predicting continuous emotion annotations,” IEEE Transactions on Affective Computing, 201

[20] N. R. Deepak and S. Balaji, “Performance analysis of MIMO-based transmission techniques for image quality in 4G wireless network,” 2015 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), 2015, pp. 1-5, doi: 10.1109/ICCIC.2015.7435774.

[21] Deepak NR, Thanuja N, Smart City for Future: Design of Data Acquisition Method using Threshold Concept Technique, International Journal of Engineering and Advanced Technology (IJEAT) ISSN: 2249-8958 (Online), Volume-11 Issue-1, October 202.

Copyright

Copyright © 2022 Arvind R, Dhanush Gowda S P, Bhaskar Prajapati, Ikram Nawaz Khan, Prof. Swaathie V. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET41536

Publish Date : 2022-04-17

ISSN : 2321-9653

Publisher Name : IJRASET
