Ijraset Journal For Research in Applied Science and Engineering Technology


Using Facial Features to Detect Driver’s Drowsiness State

Authors: Kaushiki Krity, Kunal Goyal, Mohit Khatri, Naman Pandey, Suguna M. K

DOI Link: https://doi.org/10.22214/ijraset.2023.51947


Abstract

Drowsiness and fatigue are among the main causes of road accidents. They can be prevented by getting enough sleep before driving, drinking coffee or an energy drink, or resting when signs of drowsiness occur. Popular drowsiness detection methods rely on complex measurements such as EEG and ECG [19]. These methods are highly accurate, but they require contact measurement and have many limitations for monitoring driver fatigue and drowsiness, so they are not comfortable to use in real-time driving. This paper proposes a way to detect signs of drowsiness in drivers by measuring the eye closing rate and yawning. To address this growing problem, we provide a robust and intelligent strategy for detecting driver tiredness. The method involves installing a camera inside the car to record the driver's facial appearance. The first phase uses computer vision algorithms to identify and track the face region in the recorded video sequence. The head region is then extracted and its lateral and frontal tilt are examined for indications of driver weariness. The driver's state is finally assessed during the fusion phase, and if drowsiness is found, a warning message and an alert are given to the driver. Our tests provide strong support for the proposed approach. The video is converted into image frames, and the eye and mouth detection parameters are defined within the face image, from which the eyes and mouth can be located. Once the eyes are located, measuring the intensity changes in the eye area determines whether the eyes are open or closed. The major goal of this research is to create a non-intrusive system that can recognise driver weariness and deliver a prompt warning.


I. INTRODUCTION

Driver drowsiness detection is an automotive safety feature that helps prevent accidents by detecting when the driver is about to doze off. According to Sarbjit [1], most people close their eyes and sleep for 5–6 seconds; this refers to complete sleep. Microsleep, on the other hand, occurs when a motorist sleeps for only a short period (2–3 seconds). In the study "Eye Tracking Based Driver Fatigue Monitoring and Warning System" [10], the PERCLOS measure (percentage of time the eyelids are closed) is employed to quantify sleepiness. Driver sleepiness, in contrast to driver distraction, has no triggering event and is instead characterised by a gradual loss of focus on the road and traffic demands. However, both driver drowsiness and distraction may impair attention, response time, psychomotor coordination, and information processing.
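As a rough illustration of the PERCLOS measure mentioned above, the sketch below computes the fraction of frames in a window in which the eyes were judged closed. The function name, the sample window, and the 0.3 alarm threshold are assumptions made for illustration and are not taken from the cited study.

```python
def perclos(closed_flags):
    """Return the fraction of frames in which the eyes are flagged as closed."""
    if not closed_flags:
        raise ValueError("need at least one frame")
    return sum(1 for closed in closed_flags if closed) / len(closed_flags)


# Hypothetical 10-frame window: True means the eyelids were judged closed.
window = [False, False, True, True, True, False, False, True, False, False]
score = perclos(window)        # 4 closed frames out of 10
is_drowsy = score > 0.3        # 0.3 is an illustrative threshold only
```

In practice the window would slide over the video stream, so the score reflects the most recent seconds of driving rather than the whole trip.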

According to several studies, tiredness may be a factor in up to 48% of accidents on certain types of roads and in up to 22% of all vehicle-related accidents. According to data from the Society of Automotive Engineers in the USA, for every eight people killed in traffic accidents, one death is related to driver fatigue.

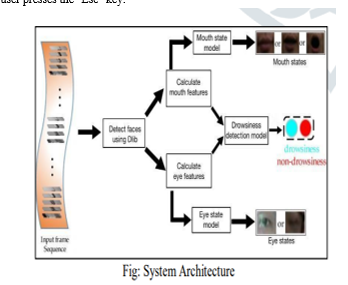

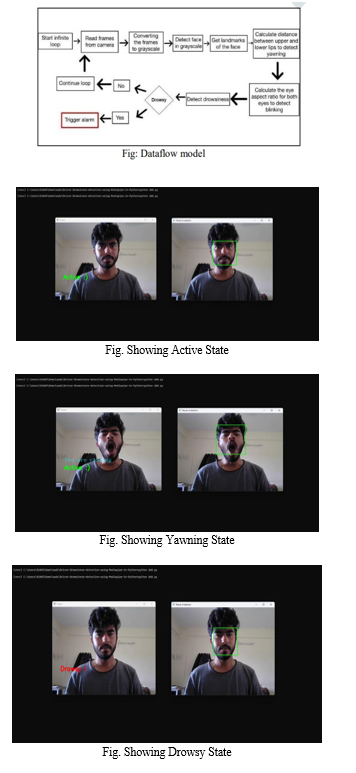

The block diagram of the entire system is displayed in the above figure. Based on the video captured by the front-facing camera, real-time processing of an incoming video stream is done to determine the driver's degree of exhaustion. If the level of fatigue is determined to be high, the output is transmitted to the alarm, and the alarm system is triggered.

Many products that measure driver fatigue are available and are implemented in many vehicles. The driver drowsiness detection system provides similar functionality but with better results and additional benefits. It also alerts the user when the drowsiness measure reaches a certain threshold.

II. LITERATURE SURVEY

In [5], an embedded system for detecting driver drowsiness is presented. The authors suggest an effective and low-cost approach for measuring a driver's state of tiredness using accelerometers, infrared sensors, and other hardware connected to a microcontroller. The technology is designed to be installed in cars as a preventative measure against collisions caused by tired or intoxicated drivers.

The work in [11] developed an approach for detecting driver tiredness based on yawn recognition using depth information and an active contour model. The technique was evaluated on a real-world dataset, and the findings showed that it can detect yawns with 97.5% accuracy.

In [6], the study introduces a new technique for detecting lane discipline and driver drowsiness. The authors offer an overview of the available solutions to this problem and a thorough analysis of related work. Then, based on the idea of lane discipline detection and analysis, they propose a novel computer vision strategy. The method is tested in experiments under actual driving conditions, with encouraging results.

The study in [7] presents a real-time drowsiness detection algorithm for driver state monitoring systems.

In [8], the possibility of detecting driver tiredness by combining yawning and eye closure detection is explored. The authors present a technique that uses frequency-based features extracted from video frames captured by a webcam installed on a car's dashboard. They then use fuzzy logic to classify each frame as either awake or asleep by fusing these features with additional indicators such as mouth movement and head position.

To assess a driver's level of sleepiness, the research in [9] introduces a driver drowsiness detection method that combines facial characteristics with eye closure time. The authors outline their approach, the drawbacks of earlier approaches, and how these were addressed. They then evaluate their strategy on multiple datasets and compare it with other methods. They conclude by summarising their research and making recommendations for further investigation.

The study in [10] describes an eye tracking-based driver fatigue monitoring and warning system. The authors discuss the system's design and its components, such as an image capture device, an infrared illuminator, an imaging lens, and a display unit. They review various methods for capturing images of the driver's eyes in order to look for indicators of weariness. Finally, they present experimental findings showing that their system can detect signs of driver sleepiness with high accuracy.

III. METHODOLOGY

A. Face Detection

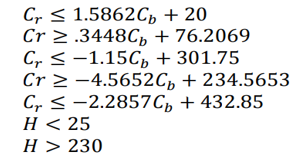

A face detection technique is employed to locate the face region regardless of the image's size, orientation, colour, illumination, etc. To extract the face area from the acquired image, the skin region is first selected using boundary criteria in the YCbCr and HSV colour spaces: five conditions on the Cr and Cb components, combined by logical "AND", and two conditions on the H component, combined by logical "OR". Next, the detected skin region is set to white and the background to black. After various morphological operations are applied, the largest and topmost connected component of the detected skin area is taken to be the facial region.
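The structure of this skin test (five Cr/Cb conditions joined by AND, two H conditions joined by OR) can be sketched as follows. The numeric bounds are placeholder values chosen for illustration; the paper's own boundary criteria are not reproduced in the text, so every coefficient here is an assumption, as are the function names.

```python
import colorsys


def rgb_to_cbcr(r, g, b):
    """Full-range BT.601 conversion from 8-bit RGB to (Cb, Cr)."""
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return cb, cr


def is_skin(r, g, b):
    """Classify one pixel; all bounds below are illustrative placeholders."""
    cb, cr = rgb_to_cbcr(r, g, b)
    hue = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[0] * 360  # degrees
    # Five Cr/Cb conditions combined with logical AND.
    cbcr_ok = (cr <= 1.5862 * cb + 20 and
               cr >= 0.3448 * cb + 76.2069 and
               cr >= -4.5652 * cb + 234.5652 and
               cr <= -1.15 * cb + 301.75 and
               cr <= -2.2857 * cb + 432.85)
    # Two H conditions combined with logical OR.
    hue_ok = hue < 25 or hue > 230
    return cbcr_ok and hue_ok


def skin_mask(image):
    """Return a mask with skin pixels white (255) and background black (0)."""
    return [[255 if is_skin(*px) else 0 for px in row] for row in image]


# One-row test image: a skin-like tone, a blue pixel, and a green pixel.
mask = skin_mask([[(210, 150, 130), (30, 60, 200), (40, 180, 60)]])
```

The morphological clean-up and connected-component selection described in the text would then operate on this binary mask.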

B. Head Tilt Method

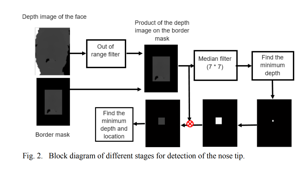

The pixel with the lowest depth value in the depth map is located at the tip of the nose. This holds when head rotation is limited and the rest of the face lies deeper than the nose tip. If the head rotation angle falls outside the range of +20 to -20 degrees, for instance, the glasses or cheeks will have the least depth, and when the tilt angle falls outside the range of +15 to -15 degrees, the chin or forehead is the shallowest. A basic nose tip detection method consists of two steps. It first applies an out-of-range filter to remove inaccurate depth information, and then locates the pixel with the shallowest depth. This straightforward technique can reliably identify the nose tip, but noise can cause slight variations in the depth information, so that the depth minimum is mistakenly assigned to spectacles or hair. To remove this undesirable variance, the image is multiplied by an edge mask, with dimensions selected according to the depth image's size, which removes the hair regions surrounding the face. The depth image is then passed through a 7×7 median filter.
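A minimal sketch of the two-step nose tip search and the median smoothing is given below, using plain nested lists as the depth image. The valid depth range (400 to 1500), the function names, and the tiny sample image are assumptions for illustration; the edge mask step is omitted.

```python
from statistics import median


def median_filter(depth, k=7):
    """Apply a k x k median filter; windows are clipped at the image border."""
    rows, cols, r = len(depth), len(depth[0]), k // 2
    out = []
    for y in range(rows):
        row = []
        for x in range(cols):
            window = [depth[j][i]
                      for j in range(max(0, y - r), min(rows, y + r + 1))
                      for i in range(max(0, x - r), min(cols, x + r + 1))]
            row.append(median(window))
        out.append(row)
    return out


def nose_tip(depth, near=400, far=1500):
    """Return (row, col) of the shallowest in-range pixel, or None."""
    best = None
    for y, row in enumerate(depth):
        for x, d in enumerate(row):
            # Out-of-range filter: ignore readings outside [near, far].
            if near <= d <= far and (best is None or d < best[0]):
                best = (d, y, x)
    return None if best is None else (best[1], best[2])


# Hypothetical 5 x 5 depth image; 0 marks an invalid sensor reading.
depth = [
    [900, 900, 900, 900, 900],
    [900, 850, 840, 850, 900],
    [900, 840, 800, 840, 900],
    [900, 850, 840, 850, 0],
    [900, 900, 900, 900, 900],
]
tip = nose_tip(depth)                    # the invalid 0 reading is skipped
smoothed = median_filter(depth, k=3)     # k=3 here only to suit the tiny image
```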

C. Mouth Detection

The procedure suggested in [4] is used to separate the mouth region from the face area. This approach is straightforward and operates in the YCbCr colour space. Once the mouth map has been calculated, it is binarized and morphological operators are applied. The largest connected component is taken as the mouth region.

- Mouth Openness detection

If the following two criteria are met, the mouth is identified as open:

1. The difference between the maximum depth and the average depth of the pixels in the mask region (ROI) exceeds a threshold T:

$$\text{MaxDepth} - \text{AvgDepth} > T \qquad (1)$$

If (1) holds, condition 1 is satisfied.

2. Let S be the number of pixels in the mask area whose depth exceeds the average depth of the area by more than T, and let N be the total number of pixels in the area:

$$S = \left|\{(i, j) : \text{Depth}(i, j) > \text{AvgDepth} + T\}\right| \qquad (2)$$

If $S > \tfrac{1}{5} N$, condition 2 is satisfied.

- Yawn Detection

The yawn is detected from the aspect ratio of the extracted mouth, which exceeds a threshold when yawning occurs. Experimentally, a threshold of 0.65 is chosen. Additionally, to strengthen this stage, the mouth area is examined to determine whether there is a hole in its map; if there is, the state of yawning is confirmed.
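The two mouth-openness conditions and the aspect ratio test can be sketched as below. This assumes that the second condition compares S with one fifth of N (S counts a subset of the N mask pixels, so it cannot exceed N) and that the aspect ratio is height divided by width; both readings, the function names, and the sample depth values are assumptions.

```python
def mouth_is_open(depths, t):
    """depths: depth values of the pixels inside the mouth mask (ROI)."""
    n = len(depths)
    avg = sum(depths) / n
    cond1 = (max(depths) - avg) > t               # condition (1)
    s = sum(1 for d in depths if d > avg + t)     # S in condition (2)
    cond2 = s > n / 5                             # assumed fraction: one fifth
    return cond1 and cond2


def is_yawning(width, height, threshold=0.65):
    """Aspect ratio test on the mouth region (height / width is assumed)."""
    return (height / width) > threshold


# Hypothetical ROI depths: a flat (closed) mouth and one with a deep cavity.
closed_roi = [600] * 10
open_roi = [600] * 6 + [640] * 4
```

With a threshold T of 10, the flat region fails condition (1), while the second region has four pixels deeper than the average plus T, which is more than one fifth of its ten pixels.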

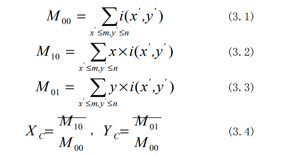

D. Eye Tracking

The approach used in this research [9] is robust and nonparametric. It uses the CAMSHIFT method, which tracks an object of known hue in colour image sequences using a one-dimensional histogram. Given a colour image and a colour histogram, a back-projection image is created from the original colour image by using the histogram as a look-up table. If the histogram is a model density distribution, the back-projection image is a probability distribution of the model in the colour image. CAMSHIFT locates the mode in the probability distribution image by applying mean shift while dynamically adjusting the parameters of the target distribution. Within a single image, the procedure is repeated until convergence. To track a single target, the detection method is applied to successive frames of a video sequence. The target's last known position can be used to limit the search region, potentially saving a significant amount of processing time. The scheme therefore forms a feedback loop in which each detection result is used as input to the next detection step.

The detection algorithm proceeds as follows:

1. Set the search window's size and position.

2. Determine the mass centre as shown in (3.4).

3. Align the window's centre with the mass centre.

4. Repeat steps 2 and 3 until the distance between the two centres (the window centre and the mass centre) is less than a certain threshold.

Based on the CAMSHIFT algorithm described above, the tracking procedure is as follows:

a. Initialise the search window on the eyes.

b. Convert the colour space to YCrCb, compute the histogram of Y, and compute the histogram back projection.

c. Run CAMSHIFT to obtain the new search window.

d. In the next video frame, use the updated window as the initial search window size and position, and return to step b.
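The mean-shift iteration at the core of the detection steps above can be sketched in plain Python on a small back-projection map. This is a simplified illustration: the window size stays fixed, whereas CAMSHIFT also adapts it, and the mass centre is computed from image moments, which is assumed to match the paper's equation (3.4). The function name, stopping threshold, and sample map are hypothetical.

```python
def mean_shift(prob, window, eps=1.0, max_iter=20):
    """prob: 2D list of back-projection weights; window: (x, y, w, h)."""
    rows, cols = len(prob), len(prob[0])
    x, y, w, h = window
    for _ in range(max_iter):
        m00 = m10 = m01 = 0.0
        for j in range(max(0, y), min(rows, y + h)):
            for i in range(max(0, x), min(cols, x + w)):
                p = prob[j][i]
                m00 += p          # zeroth moment (total mass)
                m10 += i * p      # first moment in x
                m01 += j * p      # first moment in y
        if m00 == 0:
            break                 # no target evidence inside the window
        cx, cy = m10 / m00, m01 / m00             # mass centre
        new_x = int(round(cx - (w - 1) / 2))      # re-centre the window
        new_y = int(round(cy - (h - 1) / 2))
        shift = abs(new_x - x) + abs(new_y - y)
        x, y = new_x, new_y
        if shift < eps:
            break                 # the two centres now coincide
    return (x, y, w, h)


# Hypothetical 8 x 8 probability map with a 2 x 2 target at rows/cols 5-6.
prob = [[0.0] * 8 for _ in range(8)]
for j in (5, 6):
    for i in (5, 6):
        prob[j][i] = 1.0

tracked = mean_shift(prob, (2, 2, 4, 4))
```

For tracking, the returned window would be passed in as the starting window for the next frame, which is the feedback loop described in the text.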

2-Image Processing

This approach analyses camera images using MATLAB software to find physical changes in drivers such as eyelid blinking, pupil movement, yawning, and head movement. The PERCLOS system measures the percentage of eyelid closure over the pupil over time using a camera and image processing technology. Northrop Grumman Co. employed similar approaches to produce a single vehicle sensor with all three capabilities [3]. This video-based technology is non-intrusive and does not disturb drivers. However, it requires the camera to focus on a small region, so the camera focus must be adjusted precisely for each driver.

3-Image Processing

A detection algorithm can be applied to successive frames of a video clip to track a single target. The search region can be limited to the target's last known position, potentially saving considerable computation time. The method involves a feedback loop in which each detection result is used as input to the next detection step. The detection steps are those listed under Eye Tracking above.

IV. IMPLEMENTATION

We use a combined model that consists of image acquisition, image pre-processing, classification, and a Convolutional Neural Network (CNN). The implementation proceeds step by step as follows:

- Import the necessary libraries: cv2, NumPy, dlib, and os.

- Initialize the video capture instance and the face detector and landmark detector.

- Create a function named compute that takes two points as input and returns the Euclidean distance between them.

- Create another function named blinked that takes six points as input and returns 2 if the eyes are closed, 1 if the eyes are partially closed, and 0 if the eyes are open.

- Inside a while loop, read the video frames and convert them to grayscale.

- Detect faces in the grayscale image and for each face, draw a rectangle around it.

- Detect the facial landmarks for the current face.

- Compute the distance between the upper and lower lips and check if the person is yawning.

- Determine the state of the person's eyes by calling the blinked function.

- Update the status and color variables based on the state of the person's eyes and the distance between their lips.

- Display the status and the facial landmarks on the video frames.

- Exit the loop when the user presses the "Esc" key.

To evaluate and analyze the performance of the proposed method, we performed many experiments on the NTHU dataset. The implementation steps are explained in detail below:

A. Importing Libraries

The implementation starts by importing the necessary libraries: cv2 for basic image processing functions, numpy for array-related functions, dlib for deep learning-based modules and facial landmark detection, and face_utils for basic conversion operations.

B. Initializing Camera and Detectors

The VideoCapture() function from the cv2 library is used to initialize the camera instance. Then, dlib's get_frontal_face_detector() function and shape_predictor() function are used to initialize the face detector and landmark detector, respectively. The shape_predictor() function requires the path to the shape_predictor_68_face_landmarks.dat file, which is a pre-trained file for detecting 68 facial landmarks.

C. Defining Helper Functions

Two helper functions are defined to compute the distance between two points and to detect a blink. The compute() function calculates the Euclidean distance between two points in a 2D space. The blinked() function takes six facial landmarks as input and computes the ratio of the sum of the distances between the upper eyelid and the eyebrow and the lower eyelid and the cheek, to the distance between the horizontal midpoint of the eyes and the vertical midpoint of the face. Based on this ratio, the function returns 0 if not blinked, 1 if partially blinked, and 2 if fully blinked.
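The two helpers can be sketched as below. The ratio used here is a common eye-aspect-ratio formulation (two vertical eyelid distances over twice the horizontal eye width), which may differ from the authors' exact ratio; the landmark ordering and the thresholds 0.21 and 0.25 are assumptions. The return values follow the convention stated in the text (0 open, 1 partially closed, 2 closed).

```python
import math


def compute(p, q):
    """Euclidean distance between two 2D points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def blinked(a, b, c, d, e, f, closed_below=0.21, open_above=0.25):
    """a, f: eye corners; b, c: upper-lid points; d, e: lower-lid points
    (d below b, e below c). Thresholds are illustrative assumptions."""
    up = compute(b, d) + compute(c, e)     # two vertical lid distances
    down = compute(a, f)                   # horizontal eye width
    ratio = up / (2.0 * down)
    if ratio > open_above:
        return 0                           # eye open
    if ratio > closed_below:
        return 1                           # partially closed
    return 2                               # closed


# Hypothetical landmark coordinates for three eye states.
open_eye = blinked((0, 0), (10, -5), (20, -5), (10, 5), (20, 5), (30, 0))
partial_eye = blinked((0, 3), (10, 0), (20, 0), (10, 7), (20, 7), (30, 3))
closed_eye = blinked((0, 0), (10, -1), (20, -1), (10, 1), (20, 1), (30, 0))
```

Normalising by the eye width makes the ratio largely independent of how far the driver sits from the camera.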

D. Processing Video Feed

The implementation then enters a while loop that continuously captures frames from the camera and processes them. The cap.read() function reads a single frame from the camera. The cv2.cvtColor() function converts the frame to grayscale, which is required by the face detector.

E. Detecting Faces and Facial Landmarks

The detector() function from dlib detects faces in the grayscale frame. A for loop iterates over all detected faces. The top-left and bottom-right coordinates of each detected face are used to draw a rectangle around the face with the cv2.rectangle() function. The predictor() function from dlib then predicts the facial landmarks for each face. The facial landmark detection algorithm returns a set of (x, y) coordinates for 68 specific points on the face.

F. Detecting Mouth and Eyes

The shape_to_np() function from face_utils is used to convert the detected facial landmark points to a NumPy array. The coordinates of the upper and lower lips are extracted using the coordinates of the 62nd and 66th landmarks, respectively. The blinked() function is called twice to detect blinks in both eyes using the landmarks around the eyes.

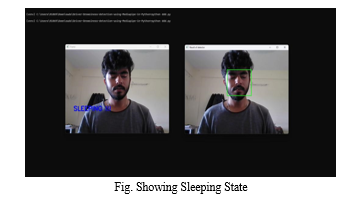

G. Determining Status of Driver

Based on the number of blinks and the distance between the upper and lower lips, the program determines whether the driver is active, drowsy, or sleeping. If the distance between the lips is greater than 25, it is considered a yawn. The program keeps a count of the number of consecutive blinks and yawns and sets the status variable accordingly. A color is also assigned to the status, which is used to display the status text on the video feed.
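One way to realise this status logic is a small counter-based state tracker, sketched below. The lip threshold of 25 comes from the text; everything else is an assumption made for illustration: the requirement of more than 6 consecutive frames, treating a yawn as a sign of drowsiness, the BGR colours, the class and function names, and the use of array indices 62 and 66 for the lip landmarks.

```python
import math

YAWN_GAP = 25          # lip distance threshold stated in the text
FRAMES_NEEDED = 6      # hypothetical number of consecutive frames
COLORS = {"Sleeping": (0, 0, 255), "Drowsy": (0, 255, 255), "Active": (0, 255, 0)}


def lip_gap(landmarks):
    """Distance between the upper and lower lip landmarks (indices assumed)."""
    (x1, y1), (x2, y2) = landmarks[62], landmarks[66]
    return math.hypot(x1 - x2, y1 - y2)


class DriverStatus:
    """Tracks consecutive-frame counts and the currently displayed status."""

    def __init__(self):
        self.counts = {"Sleeping": 0, "Drowsy": 0, "Active": 0}
        self.status = "Active"

    def update(self, left_eye, right_eye, gap):
        # Eye codes follow the text: 0 open, 1 partially closed, 2 closed.
        if left_eye == 2 or right_eye == 2:
            state = "Sleeping"
        elif left_eye == 1 or right_eye == 1 or gap > YAWN_GAP:
            state = "Drowsy"
        else:
            state = "Active"
        # Only the current state keeps counting; the others reset to zero.
        for name in self.counts:
            self.counts[name] = self.counts[name] + 1 if name == state else 0
        if self.counts[state] > FRAMES_NEEDED:
            self.status = state
        return self.status, COLORS[self.status]


tracker = DriverStatus()
history = [tracker.update(2, 2, 0)[0] for _ in range(7)]     # eyes closed
yawning = [tracker.update(0, 0, 30)[0] for _ in range(7)]    # eyes open, yawning
```

Requiring several consecutive frames before switching status keeps ordinary blinks from being reported as sleep.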

H. Displaying Results

The putText() function from cv2 is used to display the status text on the video feed. The circle() function from cv2 is used to draw a circle around each facial landmark point. Finally, the original video feed and the processed video feed with the detected facial landmarks are displayed using imshow() function from cv2.

I. Exiting the Program

The program continues to loop until the user presses the Escape key (Esc, ASCII code 27), at which point it halts.
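The capture, detection, drawing, and exit steps described in this section can be tied together roughly as follows. This is a sketch rather than the authors' program: the function names, window title, colours, and default camera index are assumptions, face_utils is assumed to come from the imutils package, and the status logic is left out for brevity. The heavy imports are placed inside run() so the key-handling helper can be used on its own.

```python
ESC_KEY = 27  # ASCII code of the Escape key


def is_escape(key):
    """cv2.waitKey returns -1 when no key is pressed; compare the low byte."""
    return (key & 0xFF) == ESC_KEY


def run(model_path="shape_predictor_68_face_landmarks.dat", camera_index=0):
    # Imported lazily: these packages and a camera are needed only here.
    import cv2
    import dlib
    from imutils import face_utils

    cap = cv2.VideoCapture(camera_index)
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(model_path)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break                      # camera unavailable or stream ended
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            for face in detector(gray):
                # Rectangle from the top-left and bottom-right face corners.
                cv2.rectangle(frame, (face.left(), face.top()),
                              (face.right(), face.bottom()), (0, 255, 0), 2)
                landmarks = face_utils.shape_to_np(predictor(gray, face))
                for (x, y) in landmarks:
                    cv2.circle(frame, (int(x), int(y)), 1, (255, 255, 255), -1)
            cv2.imshow("Frame", frame)
            if is_escape(cv2.waitKey(1)):
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Calling run() on a machine with a webcam and the landmark model file opens the preview window; pressing Esc ends the loop and releases the camera.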

V. EXPERIMENTAL RESULT

To evaluate and analyze the performance of the proposed method, we performed many experiments on the dataset [14]. This dataset contains 20 subjects of different ethnicities, with and without accessories, and includes camera shake. The video sequences were recorded under day and night conditions. The test yielded an accuracy of 98%.

VI. FUTURE SCOPE

The model used in the system has the potential for incremental improvements by incorporating other parameters such as blink rate, yawning, and the state of the car, among others. By leveraging these additional parameters, the accuracy of the model can be significantly enhanced, leading to more reliable and precise drowsiness detection.

In our future plans for the project, we intend to incorporate a sensor to track the driver's heart rate. This additional feature would help prevent accidents that may occur due to sudden heart attacks while driving. By monitoring the heart rate, the system can proactively detect any irregularities and issue timely alerts to the driver, enabling them to take appropriate action.

Furthermore, the same model and techniques used in the system can have various other applications. For instance, in streaming services like Netflix, the model can be utilized to detect when the user falls asleep and automatically pause the video accordingly. Similarly, the model can be employed in applications that prevent users from falling asleep, such as in educational or productivity settings.

The model's potential for improvement and diverse applications, showcasing versatility and scalability, makes it a promising solution for addressing drowsy driving concerns and other related areas where real-time detection of alertness or drowsiness is crucial.

Conclusion

Potential tiredness concerns can be averted by monitoring the driver's behavioural features and assessing their level of consciousness. To make the system more robust, both yawning and closed-eye recognition approaches are implemented, while the algorithms are basic and suited to commercial applications. The system has successfully met all the objectives and requirements set forth for its development. Through diligent efforts, all the bugs and issues that were identified during the development process have been thoroughly addressed, resulting in a stable and reliable framework. The users of the system, who are knowledgeable about its functionalities and benefits, find it intuitive and user-friendly. They understand that the system effectively addresses the problem of fatigue-related issues, particularly in the context of informing individuals about their drowsiness levels while driving. This feature is especially crucial in mitigating the risks associated with drowsy driving, which can lead to accidents and other safety hazards. The framework's ability to provide timely alerts and warnings about drowsiness levels empowers users to take appropriate measures to prevent potential accidents due to driver fatigue. The system's stability, usability, and effectiveness in addressing the safety concerns related to drowsy driving make it a valuable solution for enhancing road safety and minimizing the risks associated with fatigue-related issues.

References

[1] Sarbjit, S., Nikolaos, P.: Monitoring Driver Fatigue Using Facial Analysis Techniques. Intelligent Transportation Systems. ITS, Japan (1999)

[2] Comaniciu, D.; Meer, P.; A robust approach toward feature space analysis; Pattern Analysis and Machine Intelligence, IEEE Transactions on, Volume 24, Issue 5, May 2002, Page(s): 603–619

[3] R. P. Hamlin, "Three-in-one vehicle operator sensor", Transportation Research Board, National Research Council, IDEA program project final report ITS-18, 1995.

[4] M. H. Yang, D. Kriegman and N. Ahuja, "Detecting Faces in Images: A Survey", IEEE Trans. on PAMI, Vol. 24, No. 1, pp. 34-58, Jan. 2002

[5] Tianyi Hong and Huabiao Qin, "Driver's drowsiness detection in embedded system," 2007 IEEE International Conference on Vehicular Electronics and Safety, 2007, pp. 1-5, doi: 10.1109/ICVES.2007.4456381.

[6] Y. Katyal, S. Alur and S. Dwivedi, "Safe driving by detecting lane discipline and driver drowsiness," 2014 IEEE International Conference on Advanced Communications, Control and Computing Technologies, 2014, pp. 1008-1012, doi: 10.1109/ICACCCT.2014.7019248.

[7] J. W. Baek, B.-G. Han, K.-J. Kim, Y.-S. Chung and S.-I. Lee, "Real-Time Drowsiness Detection Algorithm for Driver State Monitoring Systems," 2018 Tenth International Conference on Ubiquitous and Future Networks (ICUFN), 2018, pp. 73-75, doi: 10.1109/ICUFN.2018.8436988.

[8] M. Omidyeganeh, A. Javadtalab and S. Shirmohammadi, "Intelligent driver drowsiness detection through fusion of yawning and eye closure," 2011 IEEE International Conference on Virtual Environments, Human-Computer Interfaces and Measurement Systems Proceedings, 2011, pp. 1-6, doi: 10.1109/VECIMS.2011.6053857.

[9] B. Alshaqaqi, A. S. Baquhaizel, M. E. Amine Ouis, M. Boumehed, A. Ouamri and M. Keche, "Driver drowsiness detection system," 2013 8th International Workshop on Systems, Signal Processing and their Applications (WoSSPA), 2013, pp. 151-155, doi: 10.1109/WoSSPA.2013.6602353.

[10] H. Singh, J. S. Bhatia and J. Kaur, "Eye tracking based driver fatigue monitoring and warning system," India International Conference on Power Electronics 2010 (IICPE2010), 2011, pp. 1-6, doi: 10.1109/IICPE.2011.5728062.

[11] M. Z. Jafari Yazdi and M. Soryani, "Driver Drowsiness Detection by Yawn Identification Based on Depth Information and Active Contour Model," 2019 2nd International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), 2019, pp. 1522-1526, doi: 10.1109/ICICICT46008.2019.8993385.

[12] C. Yu, X. Qin, Y. Chen, J. Wang and C. Fan, "DrowsyDet: A Mobile Application for Real-time Driver Drowsiness Detection," 2019 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), 2019, pp. 425-432, doi: 10.1109/SmartWorld-UIC-ATC-SCALCOM-IOP-SCI.2019.00116.

[13] C. V. Anilkumar, M. Ahmed, R. Sahana, R. Thejashwini and P. S. Anisha, "Design of drowsiness, heart beat detection system and alertness indicator for driver safety," 2016 IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT), 2016, pp. 937-941, doi: 10.1109/RTEICT.2016.7807966.

[14] Fouzia, R. Roopalakshmi, J. A. Rathod, A. S. Shetty and K. Supriya, "Driver Drowsiness Detection System Based on Visual Features," 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), 2018, pp. 1344-1347, doi: 10.1109/ICICCT.2018.8473203.

[15] Galarza, E.E., Egas, F.D., Silva, F.M., Velasco, P.M., Galarza, E.D. (2018). Real Time Driver Drowsiness Detection Based on Driver's Face Image Behavior Using a System of Human Computer Interaction Implemented in a Smartphone. In: Rocha, A., Guarda, T. (eds) Proceedings of the International Conference on Information Technology & Systems (ICITS 2018). ICITS 2018. Advances in Intelligent Systems and Computing, vol 721. Springer, Cham. https://doi.org/10.1007/978-3-319-73450-7_53

[16] Akrout, B., Mahdi, W. (2013). A Blinking Measurement Method for Driver Drowsiness Detection. In: Burduk, R., Jackowski, K., Kurzynski, M., Wozniak, M., Zolnierek, A. (eds) Proceedings of the 8th International Conference on Computer Recognition Systems CORES 2013. Advances in Intelligent Systems and Computing, vol 226. Springer, Heidelberg. https://doi.org/10.1007/978-3-319-00969-8_64

[17] K. S. Sankaran, N. Vasudevan and V. Nagarajan, "Driver Drowsiness Detection using Percentage Eye Closure Method," 2020 International Conference on Communication and Signal Processing (ICCSP), 2020, pp. 1422-1425, doi: 10.1109/ICCSP48568.2020.9182059.

[18] G. Zhenhai, L. DinhDat, H. Hongyu, Y. Ziwen and W. Xinyu, "Driver Drowsiness Detection Based on Time Series Analysis of Steering Wheel Angular Velocity," 2017 9th International Conference on Measuring Technology and Mechatronics Automation (ICMTMA), 2017, pp. 99-101, doi: 10.1109/ICMTMA.2017.0031.

[19] Qing, W., BingXi, S., Bin, X., & Junjie, Z. (2010, October). A perclos-based driver fatigue recognition application for smart vehicle space. In Information Processing (ISIP), 2010 Third International Symposium on (pp. 437-441). IEEE

[20] Machine Learning and Deep Learning based Makeup Considered Eye Status Recognition for Driver Drowsiness, Procedia Computer Science, Volume 147, 2019, Pages 264-270, ISSN 1877-0509

Copyright

Copyright © 2023 Kaushiki Krity, Kunal Goyal, Mohit Khatri, Naman Pandey, Suguna M. K. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET51947

Publish Date : 2023-05-10

ISSN : 2321-9653

Publisher Name : IJRASET
