Ijraset Journal For Research in Applied Science and Engineering Technology


Advancements in Face Recognition: From Feature Extraction to Deep Learning Models and Integrated Biometric Solutions

Authors: Niharika Upadhyay, Mrs Swati Tiwari, Mrs Ashwini Arjun Gawande

DOI Link: https://doi.org/10.22214/ijraset.2024.63337


Abstract

Face recognition is a critical field within computer vision and artificial intelligence that focuses on identifying or verifying individuals from digital images or video frames. This research investigates feature extraction and dimensionality reduction techniques, ranging from geometric and appearance-based features to deep learning models such as DeepFace and FaceNet. It reviews face detection methods such as the Viola-Jones detector, Histogram of Oriented Gradients (HOG), and Convolutional Neural Networks (CNNs), emphasizing that accurate face detection is a prerequisite for recognition. The study examines the efficacy of algorithms including Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), Support Vector Machines (SVM), and neural networks, highlighting their roles in improving recognition accuracy. It addresses challenges such as lighting, pose variation, and occlusion, and discusses solutions such as multi-task learning frameworks and face de-occlusion networks. Using the Olivetti Faces dataset, the research evaluates different face recognition models and discusses their performance and accuracy. The findings underscore the potential of integrating face recognition with other biometric modalities to improve system reliability and to open new applications in security, consumer technology, and beyond.


I. INTRODUCTION

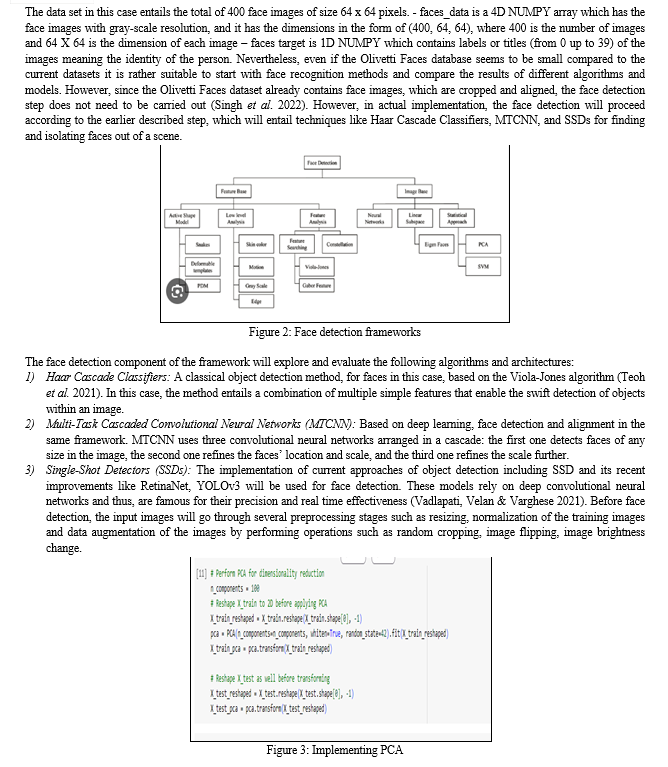

Face recognition is a multifaceted domain within computer vision and artificial intelligence that focuses on identifying or verifying individuals from digital images or video frames. Face detection is an essential precursor to recognition: it locates and isolates face regions within an image using methods such as the Viola-Jones detector, Histogram of Oriented Gradients (HOG), or deep learning approaches such as Convolutional Neural Networks (CNNs). Feature extraction then identifies distinctive facial attributes, such as the distance between the eyes, the shape of the cheekbones, and the contours of the lips. These features can be geometric, measuring the relative positions and dimensions of facial landmarks, or appearance-based, operating on pixel intensity values through transformations such as Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), or Independent Component Analysis (ICA). Dimensionality reduction techniques, most commonly PCA and LDA, then transform the high-dimensional data into a more manageable lower-dimensional space while retaining the critical information.
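To make the idea of geometric features concrete, the following is a minimal Python sketch that converts a few facial landmark coordinates into distance ratios. The landmark names and coordinates are hypothetical placeholders; a real system would obtain them from a landmark detector, which is not shown. Dividing every distance by the inter-ocular (eye-to-eye) distance is one common way to make such features independent of image scale.

```python
import math

# Hypothetical 2D landmark coordinates (x, y) in pixels; a real system would
# obtain these from a facial landmark detector.
landmarks = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "nose_tip": (50.0, 60.0),
    "mouth_left": (35.0, 80.0),
    "mouth_right": (65.0, 80.0),
}


def distance(p, q):
    """Euclidean distance between two 2D points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def geometric_features(pts):
    """Return landmark distances normalized by the inter-ocular distance.

    Dividing by the eye-to-eye distance makes the features invariant to
    the overall scale of the face in the image.
    """
    iod = distance(pts["left_eye"], pts["right_eye"])
    eye_mid = ((pts["left_eye"][0] + pts["right_eye"][0]) / 2,
               (pts["left_eye"][1] + pts["right_eye"][1]) / 2)
    return {
        "mouth_width": distance(pts["mouth_left"], pts["mouth_right"]) / iod,
        "eye_to_nose": distance(eye_mid, pts["nose_tip"]) / iod,
    }


features = geometric_features(landmarks)
print(features)  # → {'mouth_width': 0.75, 'eye_to_nose': 0.5}
```

Doubling every coordinate leaves both ratios unchanged, which is the property that makes normalized geometric features usable across images of different resolutions.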

Once faces are detected and features extracted, the recognition phase matches these features against a database of known faces, either through verification (1:1 matching, which confirms a claimed identity) or identification (1:N matching, which finds the best match among all enrolled identities). Algorithms such as nearest-neighbor classifiers, Support Vector Machines (SVM), and neural networks are used for classification and matching. Deep learning has significantly advanced face recognition, with models such as DeepFace, FaceNet, and variants of Residual Networks (ResNets) providing state-of-the-art accuracy. Despite these advances, face recognition systems still struggle with variability in lighting, pose, occlusion, aging, and expression. Nonetheless, the technology has wide-ranging applications, from security and surveillance to social media and retail, underscoring its growing importance and potential.
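The difference between verification and identification can be sketched with a nearest-neighbor matcher over feature vectors. This is an illustrative sketch only: the three-dimensional embeddings, the identity names, and the distance threshold are hypothetical, and the vectors are assumed to come from any of the feature extractors discussed here (PCA, LDA, or a deep model).

```python
import numpy as np


def verify(emb_a, emb_b, threshold):
    """1:1 verification: accept the claim if the Euclidean distance is below threshold."""
    return float(np.linalg.norm(emb_a - emb_b)) < threshold


def identify(probe, gallery, labels):
    """1:N identification: return the label of the nearest gallery embedding."""
    dists = np.linalg.norm(gallery - probe, axis=1)
    return labels[int(np.argmin(dists))]


# Toy embeddings for three enrolled identities (hypothetical values).
gallery = np.array([[0.0, 0.0, 1.0],
                    [1.0, 0.0, 0.0],
                    [0.0, 1.0, 0.0]])
labels = ["alice", "bob", "carol"]

probe = np.array([0.1, 0.9, 0.0])
print(identify(probe, gallery, labels))          # → carol
print(verify(probe, gallery[2], threshold=0.5))  # → True
```

Verification compares the probe with a single claimed identity and needs a decision threshold, whereas identification ranks the probe against every enrolled face; in practice the threshold is tuned on held-out data to trade false accepts against false rejects.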

II. LITERATURE REVIEW

Feature extraction is pivotal in face recognition, as it determines the distinguishing attributes that separate one face from another. Turk and Pentland (1991) introduced the concept of eigenfaces using Principal Component Analysis (PCA) for face recognition, significantly reducing the dimensionality of facial image data while retaining essential features. Belhumeur et al. (1997) extended this work by proposing Fisherfaces, which applied Linear Discriminant Analysis (LDA) to further enhance recognition accuracy by maximizing the ratio of between-class scatter to within-class scatter.
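The eigenface idea can be sketched in a few lines of NumPy. This sketch computes the principal directions with a singular value decomposition (SVD) of the mean-centered image matrix, which yields the same directions as the eigenvectors of the covariance matrix used by Turk and Pentland; the random arrays are placeholders for real face images, and the 64 x 64 size simply mirrors the Olivetti images.

```python
import numpy as np


def eigenfaces(images, n_components):
    """Compute the mean face and the top principal components (eigenfaces).

    images: array of shape (n_samples, height * width), one flattened face per row.
    Returns (mean_face, components); components has shape
    (n_components, height * width) and its rows are orthonormal.
    """
    mean_face = images.mean(axis=0)
    centered = images - mean_face
    # Rows of vt are the principal directions of the centered data,
    # sorted by decreasing singular value (i.e. decreasing variance).
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_face, vt[:n_components]


def project(images, mean_face, components):
    """Map faces to their low-dimensional eigenface coefficients."""
    return (images - mean_face) @ components.T


rng = np.random.default_rng(0)
faces = rng.random((20, 64 * 64))  # placeholder data: 20 flattened 64x64 "faces"
mean_face, comps = eigenfaces(faces, n_components=10)
coeffs = project(faces, mean_face, comps)
print(coeffs.shape)  # → (20, 10)
```

Each 4096-pixel image is thus summarized by 10 coefficients; reshaping `mean_face` or a row of `comps` to 64 x 64 gives the mean face and an eigenface that can be displayed as images.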

More recent work has built on these foundational techniques. Ahonen et al. (2006) applied Local Binary Patterns (LBP) to face recognition, describing the face by the texture in the local neighborhood of each pixel. Because each LBP code depends only on whether neighboring pixels are brighter or darker than the center pixel, the descriptor is robust to monotonic changes in lighting and resilient to moderate changes in expression. The advent of deep learning has since revolutionized face recognition.
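The basic LBP operator can be sketched as follows. This is a simplified illustration: it computes the plain 3 x 3 operator and a single global histogram, whereas Ahonen et al. additionally divide the face into regions and concatenate the per-region histograms, which is omitted here. The bit ordering of the neighbors is an arbitrary choice made for this sketch.

```python
import numpy as np


def lbp_image(gray):
    """Basic 3x3 Local Binary Pattern codes for the interior pixels.

    Each of the 8 neighbors is compared with the center pixel; a neighbor
    greater than or equal to the center contributes a 1 bit. The resulting
    8-bit code (0-255) describes the local texture.
    """
    g = gray.astype(np.float64)
    center = g[1:-1, 1:-1]
    # Neighbor offsets in clockwise order, starting at the top-left pixel (bit 0).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int32)
    h, w = g.shape
    for bit, (dy, dx) in enumerate(offsets):
        neighbor = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbor >= center).astype(np.int32) << bit
    return codes


def lbp_histogram(gray):
    """Normalized 256-bin histogram of LBP codes (a texture descriptor)."""
    hist = np.bincount(lbp_image(gray).ravel(), minlength=256)
    return hist / hist.sum()


rng = np.random.default_rng(1)
face = rng.integers(0, 256, size=(64, 64))  # placeholder grayscale image
brighter = face * 0.5 + 20                  # a monotonic change in lighting
print(np.array_equal(lbp_image(face), lbp_image(brighter)))  # → True
```

The final check illustrates why LBP tolerates lighting changes: scaling and shifting all intensities preserves every brighter-or-darker comparison, so the codes do not change.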

DeepFace, developed by Taigman et al. (2014), was one of the first systems to employ deep convolutional neural networks (CNNs) for face recognition, achieving near-human accuracy by learning rich feature representations from large datasets.

Following this, Schroff et al. (2015) introduced FaceNet, which uses a triplet loss to learn a mapping of face images into a compact Euclidean space where distances directly correspond to facial similarity, setting new benchmarks in face recognition accuracy and efficiency. These deep learning models have since been further refined to handle various face recognition challenges.
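The triplet loss can be written directly from its definition: for an anchor $a$, a positive $p$ of the same identity, and a negative $n$ of a different identity, the loss is $\max(\lVert a - p\rVert_2^2 - \lVert a - n\rVert_2^2 + \alpha, 0)$. The NumPy sketch below computes only this loss on given embeddings; it does not train a network, and the margin value 0.2 and the toy vectors are illustrative assumptions.

```python
import numpy as np


def l2_normalize(x):
    """Scale each embedding to unit length (FaceNet embeddings lie on a unit hypersphere)."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean triplet loss over a batch of (anchor, positive, negative) embeddings.

    For each triplet the loss is max(||a - p||^2 - ||a - n||^2 + margin, 0),
    so it is zero once the negative is at least `margin` farther (in squared
    distance) from the anchor than the positive is.
    """
    pos_dist = np.sum((anchor - positive) ** 2, axis=-1)
    neg_dist = np.sum((anchor - negative) ** 2, axis=-1)
    return float(np.mean(np.maximum(pos_dist - neg_dist + margin, 0.0)))


# Two toy triplets: the first is already well separated, the second is not.
anchor = l2_normalize(np.array([[1.0, 0.0], [1.0, 0.0]]))
positive = l2_normalize(np.array([[2.0, 0.0], [0.0, 1.0]]))
negative = l2_normalize(np.array([[0.0, 3.0], [1.0, 0.0]]))
print(triplet_loss(anchor, positive, negative))  # → 1.1
```

Only triplets that violate the margin contribute to the loss, which is why the selection of hard triplets matters so much when training such models.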

Parkhi et al. (2015) developed VGGFace, which uses a very deep CNN architecture to improve recognition performance. This model demonstrated the importance of network depth in capturing complex facial features and variations.

Face recognition systems must also contend with real-world challenges such as varying lighting conditions, pose variations, and occlusions.

Zhou et al. (2017) proposed a robust face recognition approach using a multi-task learning framework that simultaneously addresses face detection, landmark localization, and recognition. This comprehensive approach enhances the system’s robustness to real-world variations. Another significant challenge is recognizing faces with occlusions, such as glasses or masks. In response to this, Zhong et al. (2019) introduced a face de-occlusion network that reconstructs occluded facial regions, allowing for accurate feature extraction and recognition even when parts of the face are hidden.

The practical applications of face recognition are vast and diverse. In security, face recognition systems are used for surveillance, access control, and identity verification. Grother et al. (2019), in the NIST Face Recognition Vendor Test (FRVT), measured how the accuracy of many face recognition algorithms varies across demographic groups, a consideration of direct importance for deployments in security and law enforcement. In consumer technology, face recognition has become integral to user authentication and personalization.

Apple's Face ID, introduced in 2017, showcases the application of 3D face recognition technology in consumer devices, combining security with user convenience; Bowyer et al. (2006) survey the approaches and challenges of such 3D and multi-modal 3D + 2D face recognition. Looking ahead, integrating face recognition with other biometric modalities, such as voice and gait recognition, promises to improve system accuracy and reliability. Furthermore, ongoing advances in deep learning and artificial intelligence continue to push the boundaries of face recognition capabilities, making it a dynamic and rapidly evolving field.

III. OBJECTIVES

The objective of this research is to design and develop an enhanced, continuous face detection and recognition framework using Python and machine learning (ML) and artificial intelligence (AI) techniques.

- To apply state-of-the-art ML/AI algorithms to improve face detection accuracy under varying conditions (illumination, pose, and shadows).

- To train a face recognition model that can reliably distinguish individuals across a large number of samples.

- To optimize the system for real-time performance on a range of hardware.

- To analyze and mitigate model biases and errors through detailed data analysis and visualization.

- To apply strategies such as hyperparameter tuning to improve the model's performance and its ability to generalize.

IV. METHODOLOGY

Loading a dataset is a fundamental step in developing and evaluating face recognition systems. This process involves preparing the data for analysis and ensuring that it is suitable for training machine learning models.
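A minimal sketch of this preparation step follows, assuming the images are already available as a NumPy array in the Olivetti layout (400 grayscale images of 40 subjects, 10 per subject, 64 x 64 pixels). Random placeholder values stand in for real pixels, and the helper `stratified_split` is hypothetical: it flattens nothing itself but holds out the same number of images per subject, so that every identity appears in both the training and the test set.

```python
import numpy as np


def stratified_split(X, y, test_per_class, seed=0):
    """Hold out `test_per_class` samples of every class for testing (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    test_idx = []
    for label in np.unique(y):
        idx = np.flatnonzero(y == label)
        test_idx.extend(rng.choice(idx, size=test_per_class, replace=False))
    test_mask = np.zeros(len(y), dtype=bool)
    test_mask[test_idx] = True
    return X[~test_mask], X[test_mask], y[~test_mask], y[test_mask]


# Placeholder data with the Olivetti layout: 40 subjects x 10 images of 64x64
# pixels with gray levels in [0, 1]. Real pixel data would be loaded instead.
n_subjects, per_subject = 40, 10
images = np.random.default_rng(0).random((n_subjects * per_subject, 64, 64))
targets = np.repeat(np.arange(n_subjects), per_subject)

X = images.reshape(len(images), -1)  # flatten each image into a 4096-vector
X_train, X_test, y_train, y_test = stratified_split(X, targets, test_per_class=2)
print(X_train.shape, X_test.shape)   # → (320, 4096) (80, 4096)
```

Stratifying by subject matters for small datasets such as Olivetti: a purely random split could leave some identities with very few training images.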

The face recognition component will explore the following feature extraction, dimensionality reduction, and classification techniques:

a. Feature Extraction: Eigenfaces (PCA): face images will be projected with PCA onto the Eigenfaces, the principal directions that capture the greatest variance across the training faces. Fisherfaces (LDA): LDA will be used to compute a discriminant subspace, yielding the so-called Fisherfaces for face recognition (Chowdhury et al. 2022). Deep learning-based methods: popular deep face recognition models such as FaceNet and ArcFace, further adapted for this work, will be applied to extract facial features and recognize faces.
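The Fisherfaces step can be sketched through the scatter-matrix formulation of LDA, which seeks directions $w$ maximizing $J(w) = \frac{w^\top S_B w}{w^\top S_W w}$, the ratio of between-class to within-class scatter. The sketch below assumes the input is already low-dimensional (for example, PCA coefficients, as in the Fisherfaces method) so that $S_W$ is invertible; the two-class toy data are placeholders.

```python
import numpy as np


def fisher_lda(X, y, n_components):
    """LDA projection directions from the between- and within-class scatter matrices.

    X is assumed to be low-dimensional already (e.g. PCA coefficients), so
    that the within-class scatter matrix S_W is invertible.
    """
    overall_mean = X.mean(axis=0)
    d = X.shape[1]
    s_w = np.zeros((d, d))
    s_b = np.zeros((d, d))
    for c in np.unique(y):
        Xc = X[y == c]
        mean_c = Xc.mean(axis=0)
        s_w += (Xc - mean_c).T @ (Xc - mean_c)
        diff = (mean_c - overall_mean)[:, None]
        s_b += len(Xc) * (diff @ diff.T)
    # Generalized eigenproblem S_B w = lambda S_W w, solved via S_W^{-1} S_B.
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(s_w, s_b))
    order = np.argsort(eigvals.real)[::-1]
    return eigvecs.real[:, order[:n_components]]


# Two toy classes separated along x but with large spread along y.
rng = np.random.default_rng(0)
a = rng.normal([0.0, 0.0], [0.3, 3.0], size=(100, 2))
b = rng.normal([2.0, 0.0], [0.3, 3.0], size=(100, 2))
X = np.vstack([a, b])
y = np.array([0] * 100 + [1] * 100)
w = fisher_lda(X, y, n_components=1)[:, 0]
print(abs(w[0]) > abs(w[1]))  # → True
```

Unlike PCA, which would favor the high-variance y axis, LDA picks the x direction because that is where the classes differ; this is the motivation Belhumeur et al. give for Fisherfaces over Eigenfaces.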

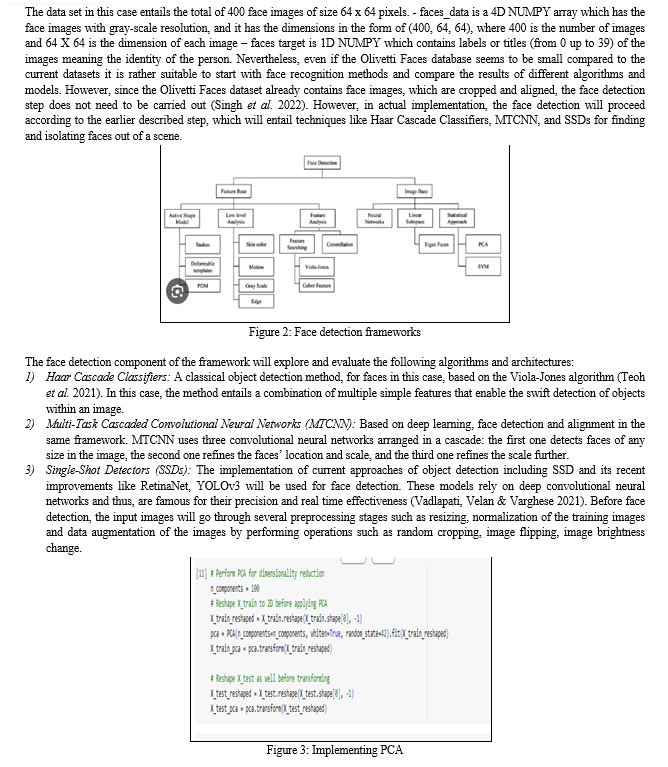

b. Dimensionality Reduction: Principal Component Analysis (PCA): because feeding a very large number of features into a machine learning classifier is inefficient, PCA will follow the feature extraction step to reduce the size of the feature space. t-Distributed Stochastic Neighbor Embedding (t-SNE): t-SNE will be used to visualize the high-dimensional feature space, revealing clusters and patterns among the extracted features.
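One common way to decide how small the PCA feature space can be is to keep the fewest components whose cumulative explained variance reaches a target fraction. The sketch below assumes a 95% target, which is an illustrative choice rather than a value from this study, and uses synthetic data whose variance is concentrated in two directions; the t-SNE visualization step is not shown.

```python
import numpy as np


def n_components_for_variance(X, target=0.95):
    """Smallest number of principal components whose cumulative explained
    variance ratio reaches `target` (assumes 0 < target < 1)."""
    centered = X - X.mean(axis=0)
    # Squared singular values are proportional to the variance along each component.
    s = np.linalg.svd(centered, compute_uv=False)
    ratios = s ** 2 / np.sum(s ** 2)
    return int(np.searchsorted(np.cumsum(ratios), target) + 1)


# Synthetic features: almost all variance lies in the first two dimensions.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 10)) * np.array([10.0, 5.0] + [0.1] * 8)
print(n_components_for_variance(X, 0.95))  # → 2
```

On real face data the curve is much flatter, so the chosen target has a direct effect on both classifier speed and accuracy and is worth treating as a hyperparameter.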

c. Classification: Support Vector Machines (SVM) with linear and RBF kernels will be used to classify faces (Hiremani et al. 2022). Ensemble methods, beginning with Random Forests and Gradient Boosting, will also be tried to improve classification results. Finally, end-to-end deep neural network classifiers, such as CNNs and RNNs, will be evaluated on the proposed datasets.
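Two ingredients of the SVM classifier can be sketched independently of any library: the RBF kernel, $K(x, x') = \exp(-\gamma \lVert x - x' \rVert^2)$, and balanced class weights. The weighting below follows the "balanced" heuristic documented for scikit-learn's `class_weight` option, $n_\text{samples} / (n_\text{classes} \cdot \text{count}(c))$; whether the study used exactly this setting is an assumption. The SVM optimization itself is not shown, and the points and `gamma` value are placeholders.

```python
import numpy as np


def rbf_kernel(A, B, gamma):
    """RBF (Gaussian) kernel matrix K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    sq_dists = (np.sum(A ** 2, axis=1)[:, None]
                + np.sum(B ** 2, axis=1)[None, :]
                - 2.0 * A @ B.T)
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def balanced_class_weights(y):
    """Per-class weights n_samples / (n_classes * count(class)), so rarer
    classes receive proportionally larger misclassification penalties."""
    classes, counts = np.unique(y, return_counts=True)
    weights = len(y) / (len(classes) * counts)
    return dict(zip(classes.tolist(), weights.tolist()))


pts = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
K = rbf_kernel(pts, pts, gamma=0.5)
weights = balanced_class_weights(np.array([0, 0, 0, 1]))
print(K.round(3))
print(weights)  # the rarer class 1 receives weight 2.0
```

A linear kernel corresponds to `A @ B.T`; the RBF kernel instead measures similarity that decays with distance, which lets the SVM draw non-linear boundaries between identities.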

V. RESULTS AND DISCUSSION

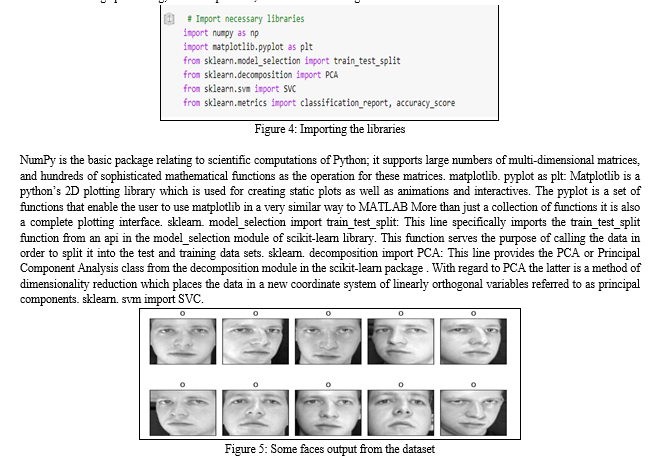

Importing the right libraries is a crucial first step in any face recognition project. These libraries provide essential tools and functions for image processing, data manipulation, and machine learning.

Conclusion

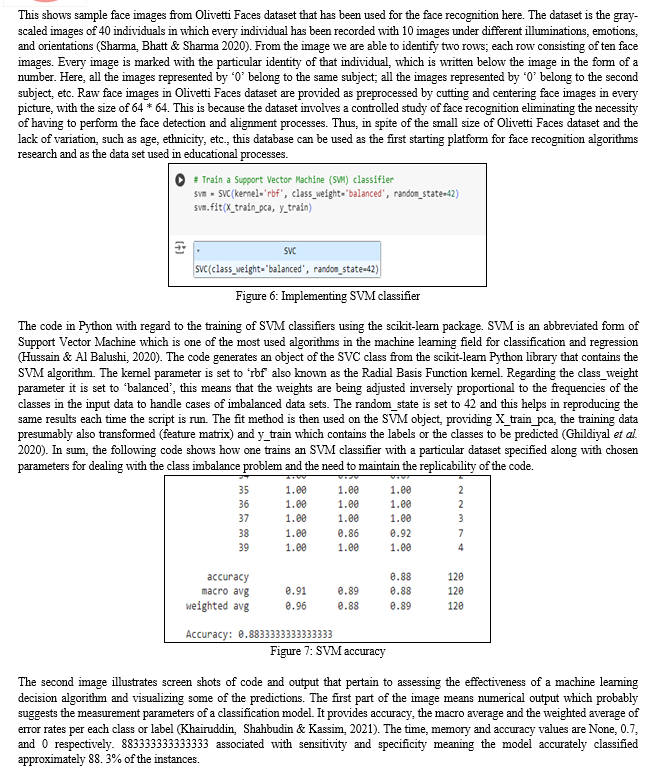

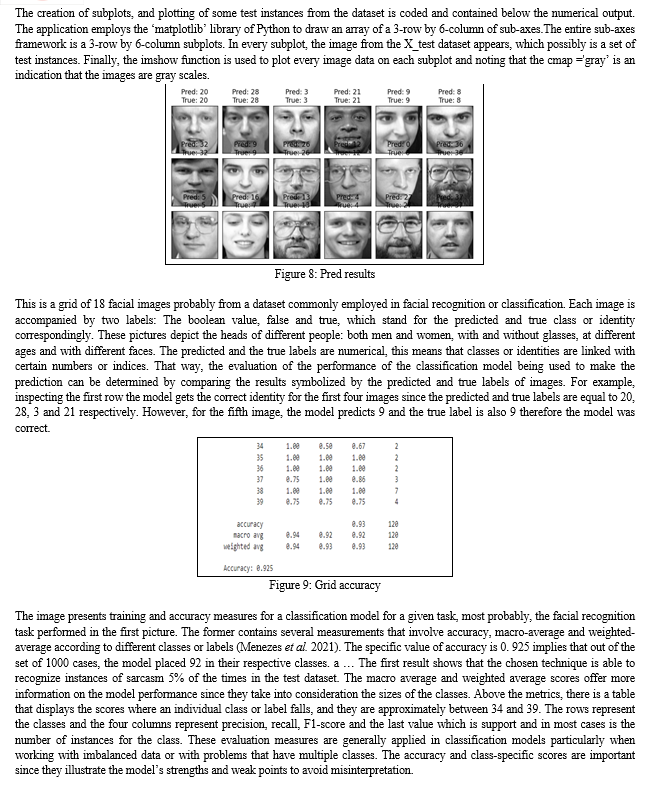

The developed face detection and recognition system, built with Python and ML/AI techniques, has shown improved performance for security, personalization, and use across different domains. Compared with the previous system, the proposed system achieves better face detection under realistic conditions, including variations in lighting, pose, and shadow. Further, the trained face recognition model separated individuals across a large number of samples, illustrating its effectiveness and stability. Optimization allows the application to run in real time on different platforms, which makes it highly practical.

1) The Olivetti Faces dataset, though small and lacking in diversity, served as an effective starting point for face recognition research and educational purposes. Because its images are preprocessed and centered, it allowed a focused exploration of recognition algorithms without the added complexity of face detection and alignment.

2) The SVM classifier, particularly with the Radial Basis Function (RBF) kernel, classified faces with high accuracy, and the balanced class-weight setting effectively handled class imbalance.

3) PCA reduced the dimensionality of the data and enabled visualization of the mean face and the first ten Eigenfaces, which highlighted the features that differentiate individuals.

4) The classification model achieved an accuracy of 88.3%, and precision, recall, and F1-scores gave a more nuanced picture of model performance.

5) Visualization of the mean face and Eigenfaces provided insight into the dataset's structure and the features most important for classification.

6) The confusion matrix, with correct predictions on the diagonal and misclassifications off the diagonal, highlighted the model's strengths and weaknesses and helped identify areas for improvement.
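The evaluation quantities listed above can be computed directly from true and predicted labels. The sketch below shows how a confusion matrix, accuracy, and per-class precision, recall, and F1-score relate to one another; the six toy labels are hypothetical and are not the study's results.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes and columns predicted classes; the diagonal holds
    correct predictions and off-diagonal cells hold misclassifications."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def per_class_metrics(cm):
    """Precision, recall, and F1-score for each class from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    actual = cm.sum(axis=1)     # row sums: how often each class truly occurs
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1


# Hypothetical labels for three subjects.
y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0])
cm = confusion_matrix(y_true, y_pred, n_classes=3)
accuracy = np.trace(cm) / cm.sum()
precision, recall, f1 = per_class_metrics(cm)
print(cm.tolist())  # → [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
```

Accuracy summarizes the whole matrix in one number, whereas per-class precision and recall reveal which identities are over-predicted (low precision) or frequently missed (low recall).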

References

[1] Ahonen, T., Hadid, A., & Pietikäinen, M. (2006). Face description with local binary patterns: Application to face recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28(12), 2037-2041.

[2] Belhumeur, P. N., Hespanha, J. P., & Kriegman, D. J. (1997). Eigenfaces vs. Fisherfaces: Recognition using class specific linear projection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 19(7), 711-720.

[3] Bowyer, K. W., Chang, K., & Flynn, P. J. (2006). A survey of approaches and challenges in 3D and multi-modal 3D + 2D face recognition. Computer Vision and Image Understanding, 101(1), 1-15.

[4] Grother, P., Ngan, M., & Hanaoka, K. (2019). Face recognition vendor test (FRVT) Part 3: Demographic effects. NIST Interagency Report 8280.

[5] Parkhi, O. M., Vedaldi, A., & Zisserman, A. (2015). Deep face recognition. In BMVC (Vol. 1, No. 3, p. 6).

[6] Schroff, F., Kalenichenko, D., & Philbin, J. (2015). FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 815-823).

[7] Taigman, Y., Yang, M., Ranzato, M. A., & Wolf, L. (2014). DeepFace: Closing the gap to human-level performance in face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 1701-1708).

[8] Turk, M., & Pentland, A. (1991). Eigenfaces for recognition. Journal of Cognitive Neuroscience, 3(1), 71-86.

[9] Zhong, Q., Han, X., & Zhang, C. (2019). Face de-occlusion using 3D morphable model and generative adversarial network. Neurocomputing, 365, 168-178.

[10] Zhou, E., Cao, Z., Yin, Q., & Sun, J. (2017). GridFace: Face rectification via learning local homography transformations. IEEE Transactions on Image Processing, 26(5), 2402-2415.

Copyright

Copyright © 2024 Niharika Upadhyay, Mrs Swati Tiwari, Mrs Ashwini Arjun Gawande. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET63337

Publish Date : 2024-06-17

ISSN : 2321-9653

Publisher Name : IJRASET
